Assessing System-Wide Safety Readiness for Successful Human–Robot Collaboration Adoption

Abstract

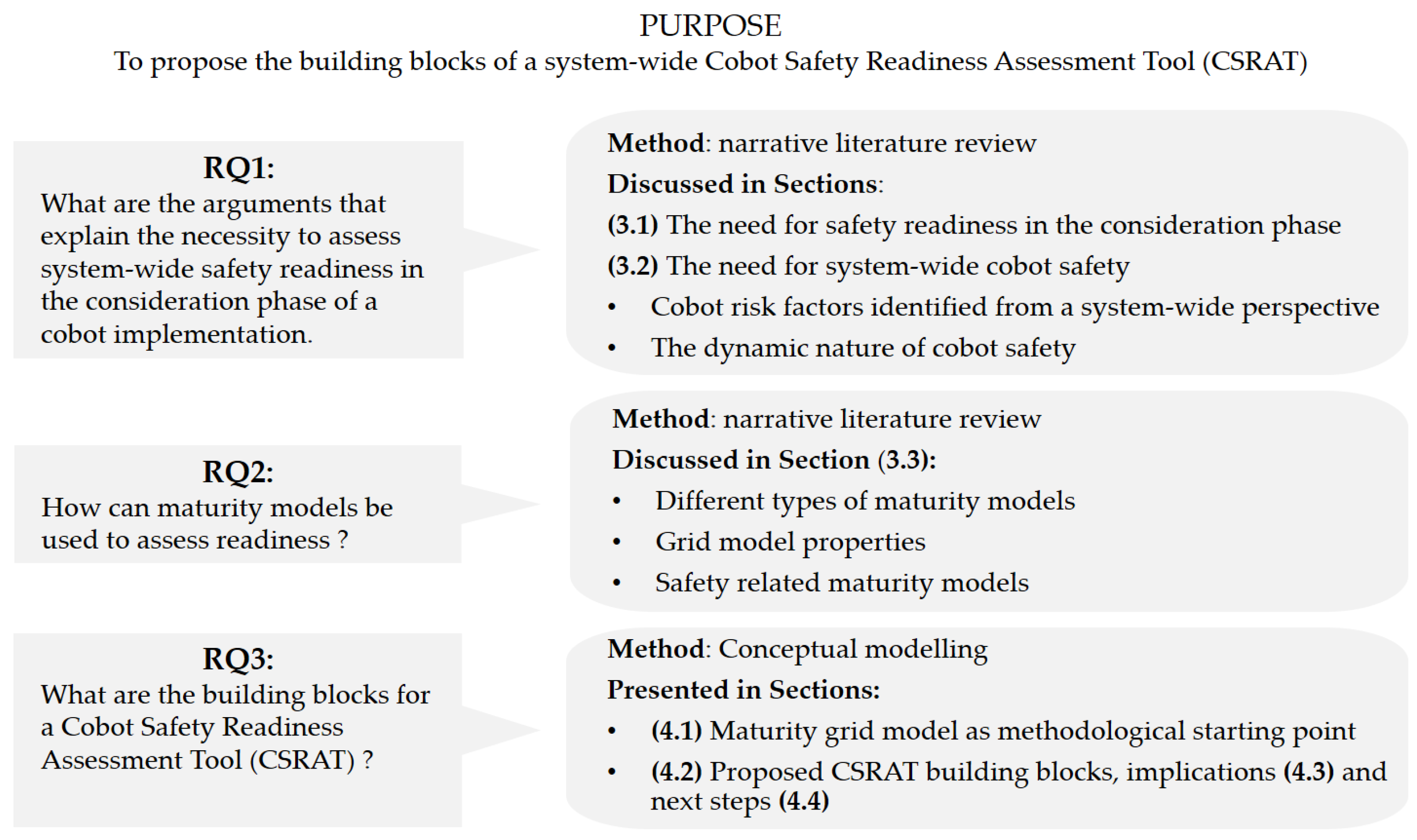

1. Introduction

- RQ1: What are the arguments that explain the necessity of assessing system-wide safety readiness in the consideration phase of cobot implementation?

- RQ2: How can maturity models be used to assess readiness?

- RQ3: What are the building blocks for a Cobot Safety Readiness Assessment Tool?

2. Materials and Methods

3. Context, Scoping, and Critical Review

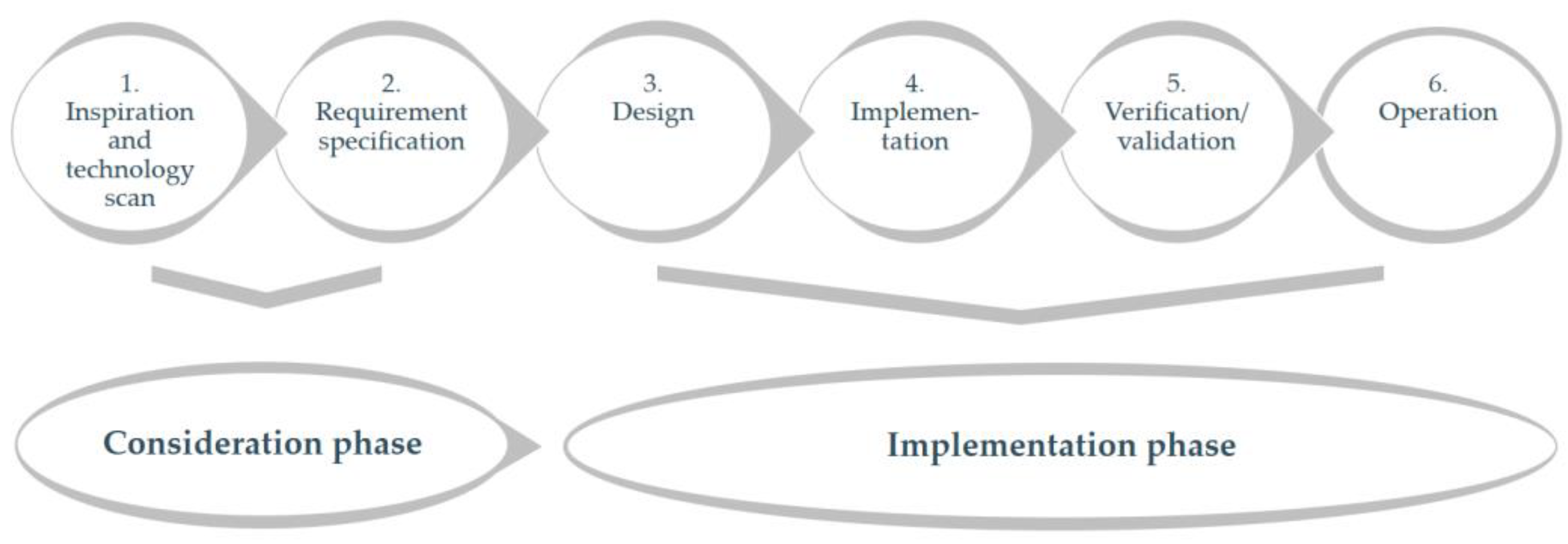

3.1. The Need for Safety Awareness Readiness in the Consideration Phase

3.2. The Need for System-Wide Cobot Safety

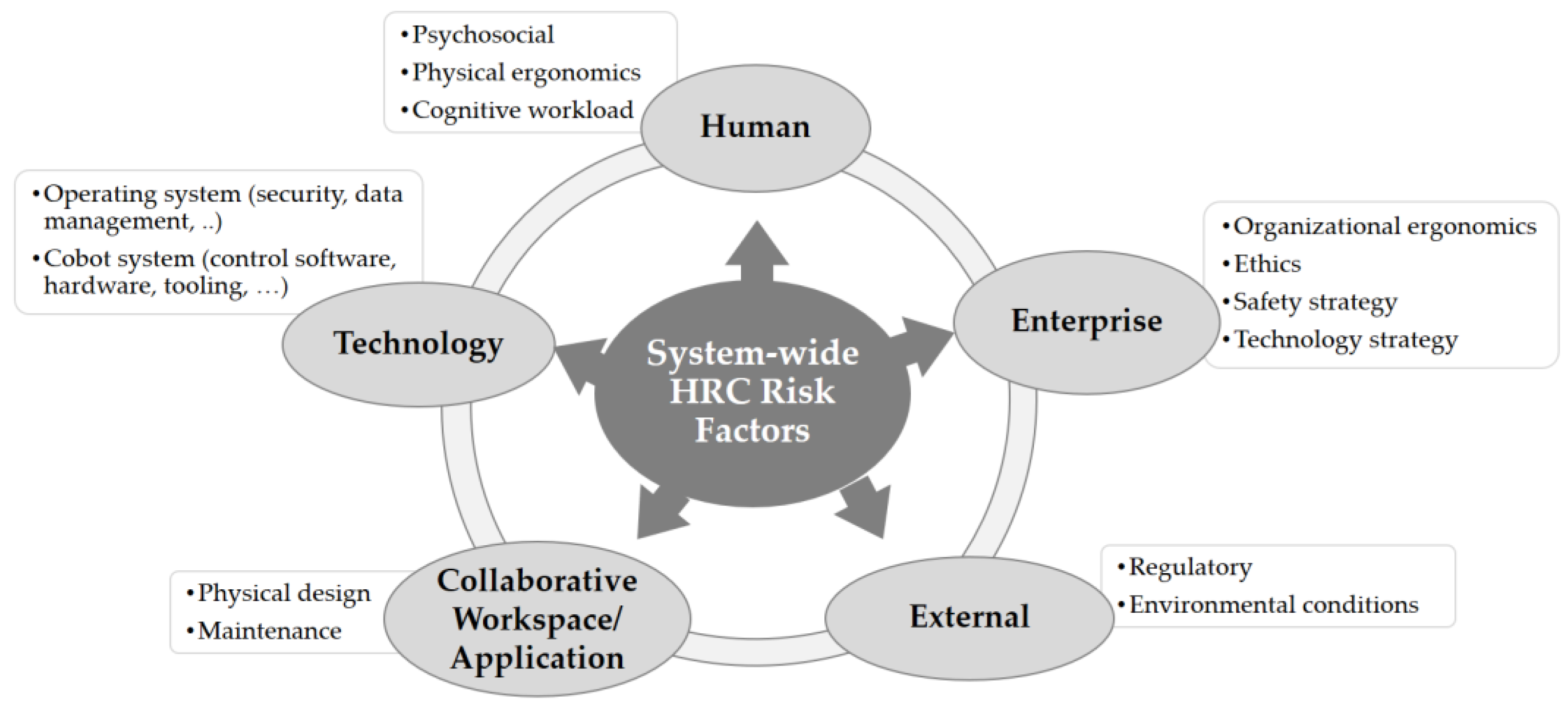

3.2.1. Cobot Risk Factors Identified from a System-Wide Perspective

3.2.2. Cobot Safety Is Dynamic and Not Limited to the Consideration Phase

3.3. Maturity Models

3.3.1. Different Types of Maturity Models

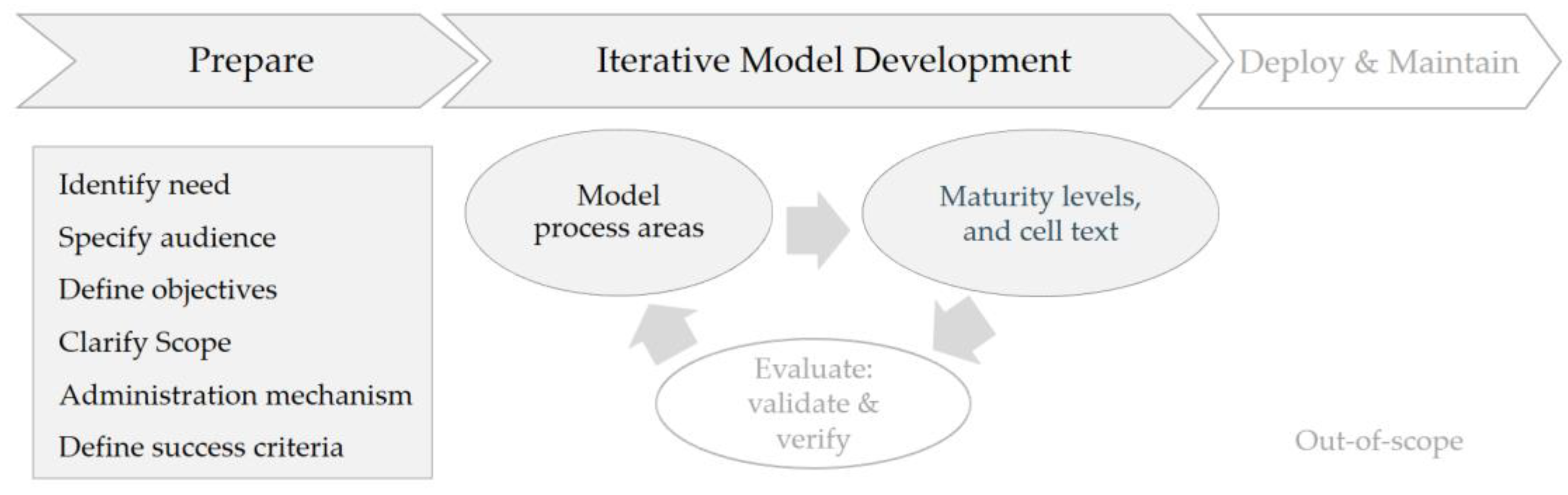

3.3.2. Maturity Grid Model Properties, Design Process, and Guidelines

3.3.3. Safety-Related Maturity Models

4. Discussion

4.1. Appropriateness of a Maturity Grid Model as a Methodological Starting Point

4.2. Proposal of the Building Blocks of the Cobot Safety Readiness Assessment Tool (CSRAT)

4.2.1. Prepare

4.2.2. Model Development

- Level 1: No idea about cobot risk factors;

- Level 2: Aware of the risk factors for cobots, but not sure how to cope with them and which safety management frameworks and tools to use;

- Level 3: Existing safety management frameworks and tools are in place and can be used, but they need to be adapted for working with cobots;

- Level 4: Tools are either newly installed or adapted to working with cobots.

- We have the right knowledge in-house (Yes/No);

- If no: We know where to find this knowledge externally (Yes/No).
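The four levels and the two knowledge questions above can be captured in a small data structure. The following is a minimal sketch, not the authors' implementation: the names `MaturityLevel`, `SubDimensionAnswer`, `knowledge_gap`, and `lowest_level_per_dimension` are hypothetical, and summarising a dimension by its lowest-scoring sub-dimension is an illustrative assumption rather than a rule stated in the text.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, Iterable, Optional


class MaturityLevel(IntEnum):
    """The four maturity levels listed above (member names are hypothetical)."""
    NO_IDEA = 1          # Level 1: no idea about cobot risk factors
    AWARE_NO_TOOLS = 2   # Level 2: aware, but unsure how to cope or which tools to use
    TOOLS_IN_PLACE = 3   # Level 3: tools in place, adaptation for cobots still needed
    TOOLS_ADAPTED = 4    # Level 4: tools newly installed or adapted for cobots


@dataclass
class SubDimensionAnswer:
    """One assessed grid row: the chosen level plus the two knowledge questions."""
    dimension: str
    sub_dimension: str
    level: MaturityLevel
    knowledge_in_house: bool
    # The follow-up question is only asked when knowledge is not in-house.
    knows_external_source: Optional[bool] = None

    def __post_init__(self) -> None:
        if self.knowledge_in_house and self.knows_external_source is not None:
            raise ValueError("follow-up question applies only when knowledge is not in-house")
        if not self.knowledge_in_house and self.knows_external_source is None:
            raise ValueError("follow-up question must be answered when knowledge is not in-house")

    def knowledge_gap(self) -> bool:
        """True when the knowledge is neither in-house nor locatable externally."""
        return not self.knowledge_in_house and not self.knows_external_source


def lowest_level_per_dimension(
    answers: Iterable[SubDimensionAnswer],
) -> Dict[str, MaturityLevel]:
    """Assumed summary rule: a dimension is only as mature as its weakest sub-dimension."""
    lowest: Dict[str, MaturityLevel] = {}
    for answer in answers:
        current = lowest.get(answer.dimension)
        if current is None or answer.level < current:
            lowest[answer.dimension] = answer.level
    return lowest
```

For example, an organisation that rates the psychosocial sub-dimension at Level 2, lacks the knowledge in-house, but knows where to find it externally would be recorded as `SubDimensionAnswer("HUMAN", "Psychosocial", MaturityLevel.AWARE_NO_TOOLS, False, True)`, and `knowledge_gap()` would return `False` for that row.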

4.3. Theoretical and Managerial Implications

4.4. Next Steps

5. Limitations

6. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Maturity Grid Dimension | Sub-dimension | Description | Level 1. No idea | Level 2. Aware, no tools | Level 3. Aware, tools in place | Level 4. Tools adapted for cobots | Chosen Level Score | We have the right knowledge in-house (Y/N) | If not in-house: we know where to find it (Y/N) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | | | No idea about cobot risk factors | Aware of the risk factors for cobots, but not sure how to cope with them and which tools to use | Tools are known and in place, but not adapted for cobots | Tools are adapted for cobots | | | |
| HUMAN | Psychosocial | Psychosocial risk factors are a combination of psychological and social factors. Psychosocial risks can arise from poor work design and organisation or from a poor social context of work. They may result in negative psychological, physical and social outcomes such as work-related stress, burnout or depression. In human-robot collaboration, trust between human and cobot is known to influence the safety of the operator. | We are not aware of how psychosocial factors can act as a hazard when humans collaborate with a cobot. | We know that psychosocial factors can influence the safety of the operator, but we do not know exactly what the specific drivers are or how to identify them. | We know that psychosocial factors can influence the safety of the operator, but we have not yet added them to our safety management tools (e.g. occupational health & safety surveys, satisfaction or worker commitment surveys, trust measures, or job demand & resources investigation). | We know that psychosocial factors can influence the safety of the operator, and we have already included this aspect in our safety management tools (e.g. occupational health & safety surveys, satisfaction or worker commitment surveys, trust measures, or job demand & resources investigation). | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Berx, N.; Adriaensen, A.; Decré, W.; Pintelon, L. Assessing System-Wide Safety Readiness for Successful Human–Robot Collaboration Adoption. Safety 2022, 8, 48. https://doi.org/10.3390/safety8030048
