Can Complexity-Thinking Methods Contribute to Improving Occupational Safety in Industry 4.0? A Review of Safety Analysis Methods and Their Concepts

Abstract

1. Introduction

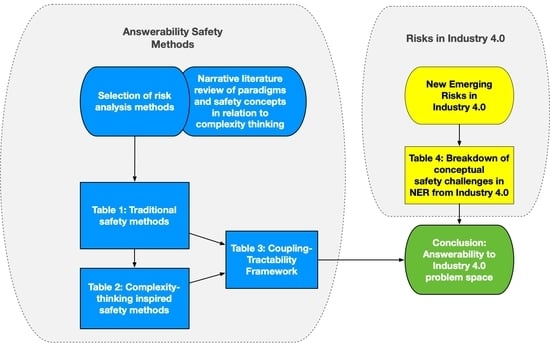

2. Materials and Methods

3. Results

3.1. Safety Analysis Methods

3.1.1. Critical Analysis of Traditional Safety Methods

3.1.2. An Historical Introduction to Traditional Causation Models

3.1.3. Reflections on Quantitative Methods and Techniques

3.2. Complexity-Thinking-Related Safety Paradigms and Concepts

3.2.1. From Linear Decomposition to Non-Linear Systems Causality

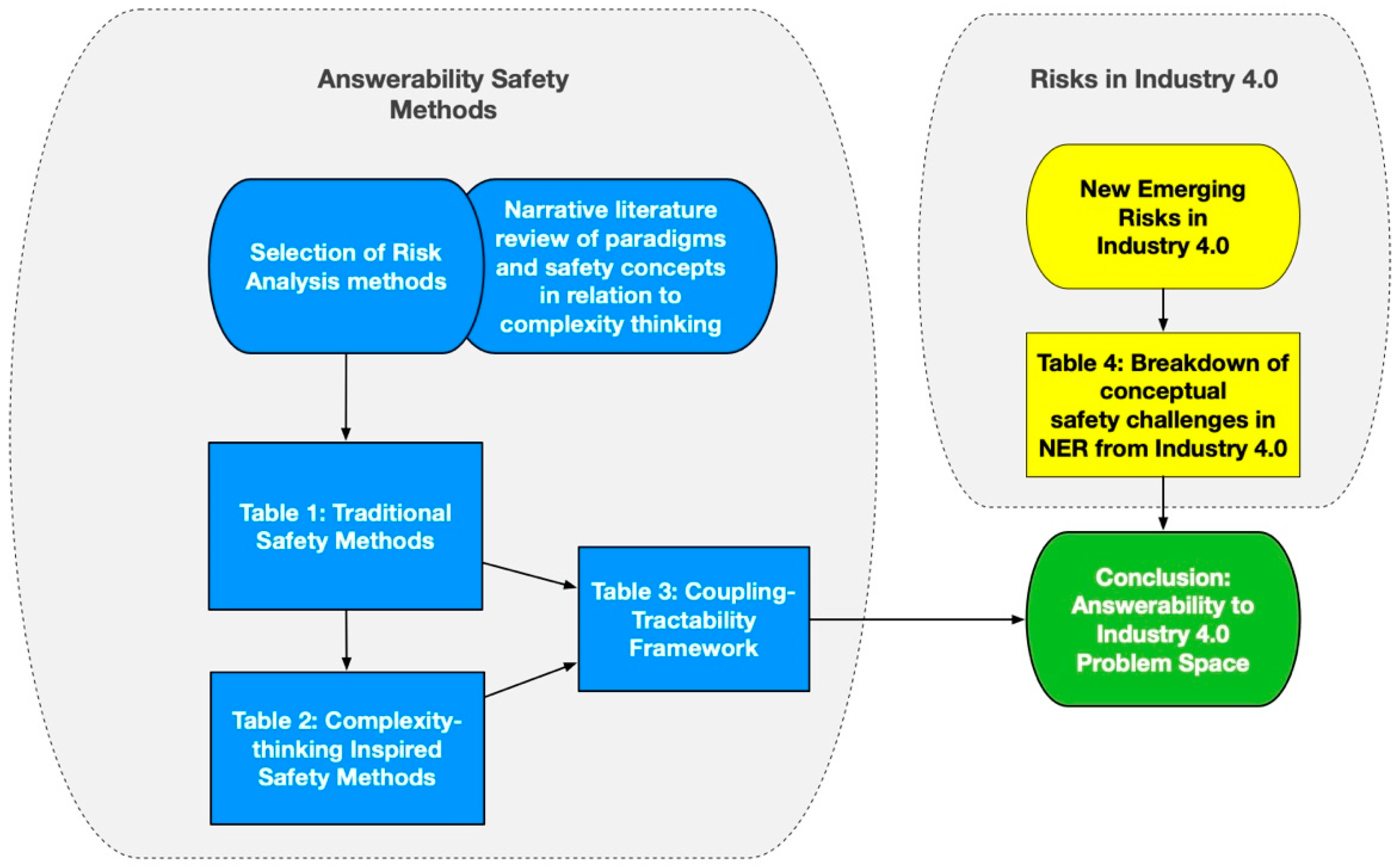

3.2.2. Complexity Expressed as a Combination of Tight Couplings and Non-Linear Tractability

3.2.3. Shift from Safety-I to Safety-II

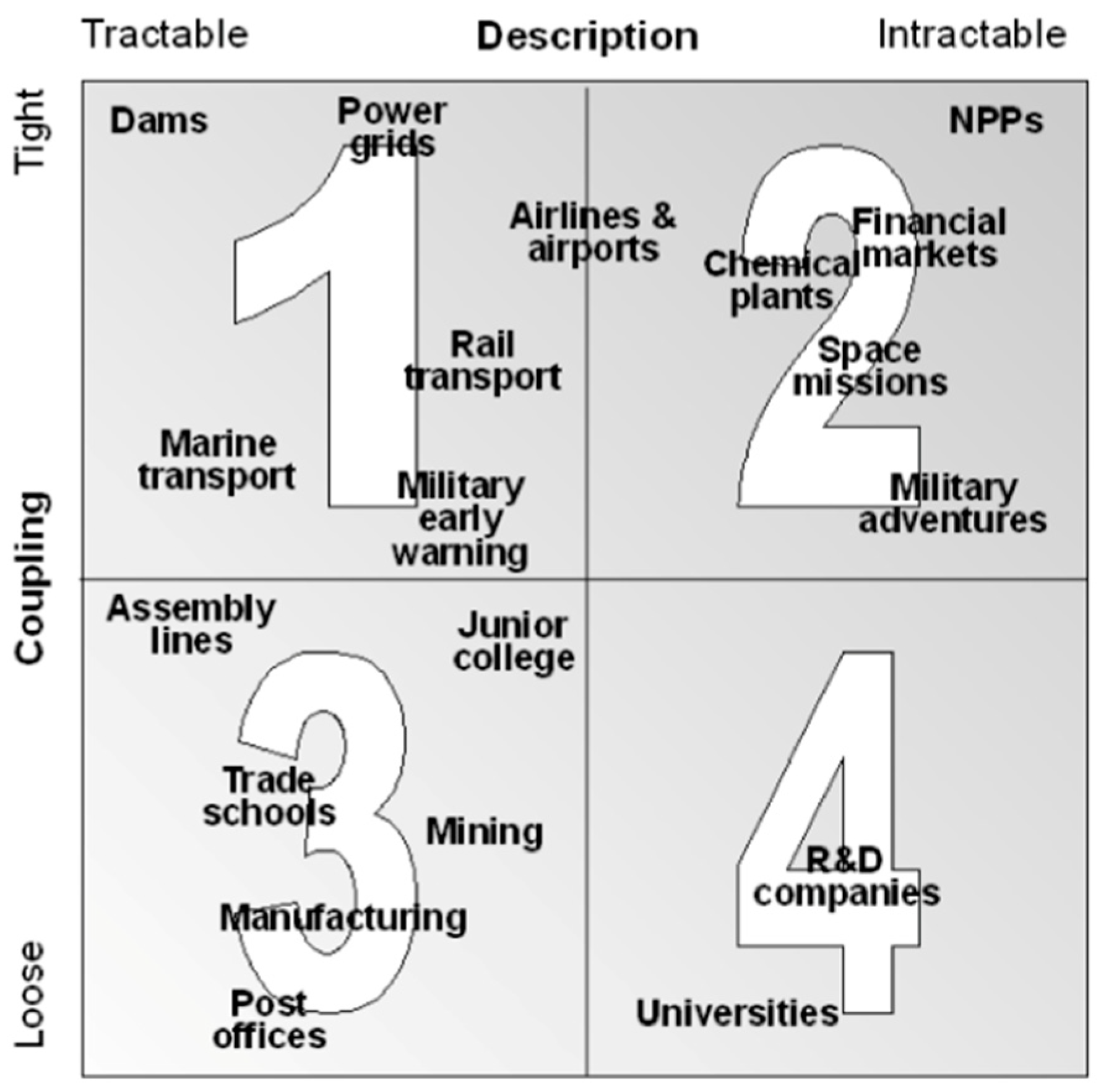

3.2.4. Causation Model Concepts and Their Relation to Coupling and Tractability

3.2.5. Causation Models and the Human Contribution

3.2.6. The Identification of Complexity-Thinking Methods

Event Analysis of Systemic Teamwork (EAST)

System-Theoretic Accident Model and Processes (STAMP)

Functional Resonance Analysis Method (FRAM)

3.2.7. Resilience Engineering

3.2.8. Work-as-Imagined versus Work-as-Done

3.2.9. Complexity-Thinking Critique from the Narrative Literature Review

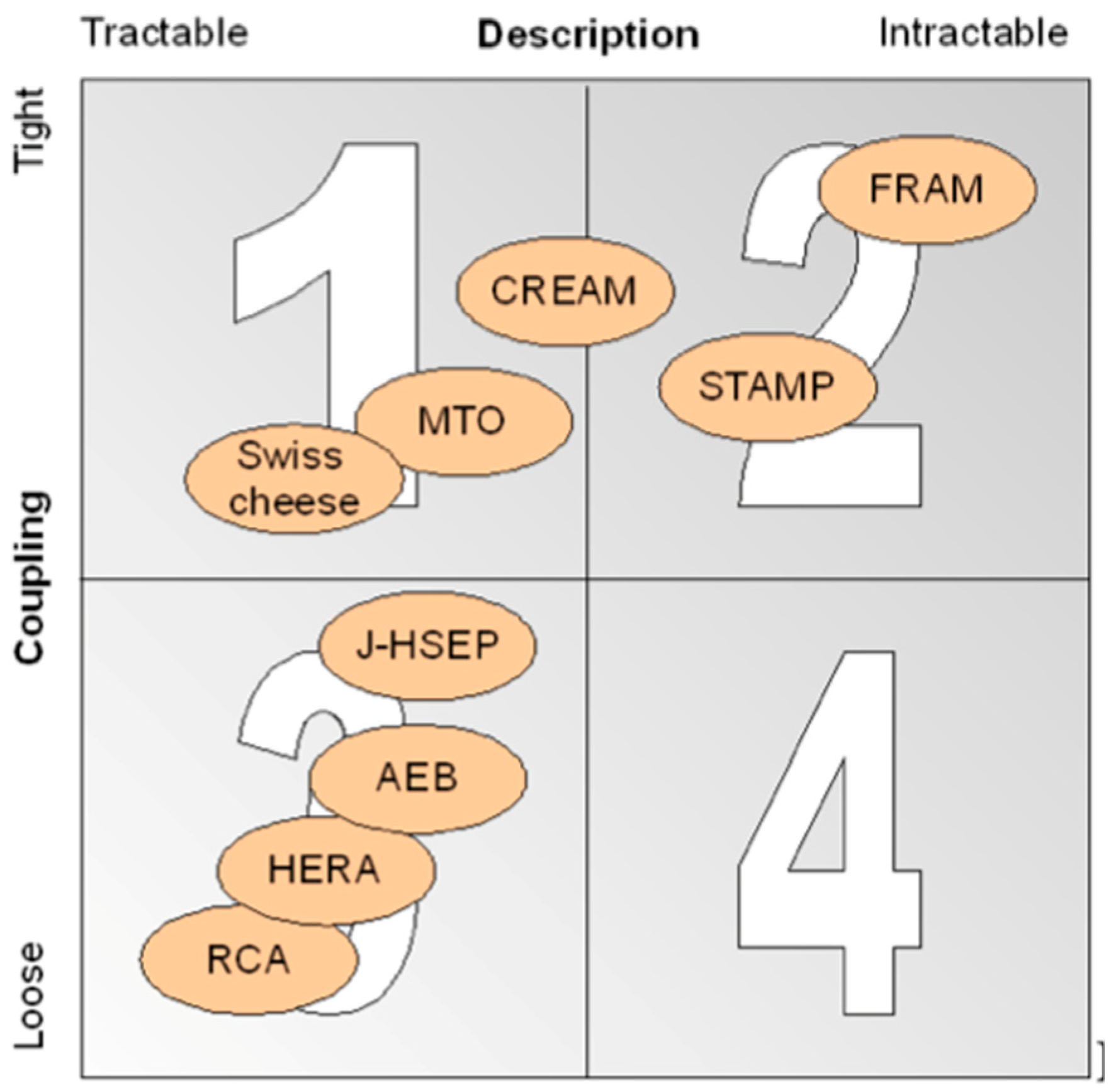

3.3. Breakdown of Challenges

3.3.1. Interconnectivity

3.3.2. Autonomy and Automation in Joint Human–Agent Activity

3.3.3. Supervisory Control

4. Discussion

4.1. Current Use of Complexity-Thinking-Inspired Methods for Industry 4.0

4.2. Practicability of Complexity-Thinking-Inspired Methods for Industry 4.0

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lasi, H.; Fettke, P.; Kemper, H.-G.; Feld, T.; Hoffmann, M. Industry 4.0. Bus. Inf. Syst. Eng. 2014, 6, 239–242. [Google Scholar] [CrossRef]

- Kagermann, H.; Wahlster, W.; Helbig, J. Recommendations for Implementing the Strategic Initiative INDUSTRIE 4.0: Final Report of the Industrie4.0 Working Group; Acatech: Munich, Germany, 2013. [Google Scholar]

- Lee, J.; Davari, H.; Singh, J.; Pandhare, V. Industrial Artificial Intelligence for Industry 4.0-Based Manufacturing Systems. Manuf. Lett. 2018, 18, 20–23. [Google Scholar] [CrossRef]

- Kamble, S.S.; Gunasekaran, A.; Gawankar, S.A. Sustainable Industry 4.0 framework: A systematic literature review identifying the current trends and future perspectives. Process Saf. Environ. Prot. 2018, 117, 408–425. [Google Scholar] [CrossRef]

- Hermann, M.; Pentek, T.; Otto, B. Design Principles for Industrie 4.0 Scenarios. In Proceedings of the 2016 49th Hawaii International Conference on System Sciences (HICSS), Koloa, HI, USA, 5–8 January 2016; pp. 3928–3937. [Google Scholar]

- Lu, Y. Industry 4.0: A survey on technologies, applications and open research issues. J. Ind. Inf. Integr. 2017, 6, 1–10. [Google Scholar] [CrossRef]

- Miranda, J.; Ponce, P.; Molina, A.; Wright, P. Sensing, smart and sustainable technologies for Agri-Food 4.0. Comput. Ind. 2019, 108, 21–36. [Google Scholar] [CrossRef]

- Winkelhaus, S.; Grosse, E.H. Logistics 4.0: A systematic review towards a new logistics system. Int. J. Prod. Res. 2019, 1–26. [Google Scholar] [CrossRef]

- Harms-Ringdahl, L. Safety Analysis, Principles and Practice in Occupational Safety, 2nd ed.; Taylor and Francis: London, UK; New York, NY, USA, 2005. [Google Scholar]

- Waterson, P.; Robertson, M.M.; Cooke, N.J.; Militello, L.; Roth, E.; Stanton, N.A. Defining the methodological challenges and opportunities for an effective science of sociotechnical systems and safety. Ergonomics 2015, 58, 565–599. [Google Scholar] [CrossRef]

- Hollnagel, E. The Changing Nature Of Risks. Ergon. Aust. J. 2008, 22, 33–46. [Google Scholar]

- Fernández, F.B.; Pérez, M.Á.S. Analysis and Modeling of New and Emerging Occupational Risks in the Context of Advanced Manufacturing Processes. Procedia Eng. 2015, 100, 1150–1159. [Google Scholar] [CrossRef]

- Badri, A.; Boudreau-Trudel, B.; Souissi, A.S. Occupational health and safety in the industry 4.0 era: A cause for major concern? Saf. Sci. 2018, 109, 403–411. [Google Scholar] [CrossRef]

- Hollnagel, E.; Speziali, J. Study on Developments in Accident Investigation Methods: A Survey of the State-of-the-Art; SKI Report 2008:50 (Swedish Nuclear Power Inspectorate); CNRS: Paris, France, 2008; ISSN 1104-1374.

- Pasman, H.J.; Rogers, W.J.; Mannan, M.S. How can we improve process hazard identification? What can accident investigation methods contribute and what other recent developments? A brief historical survey and a sketch of how to advance. J. Loss Prev. Process Ind. 2018, 55, 80–106. [Google Scholar] [CrossRef]

- Leveson, N.G. Engineering a Safer World: Systems Thinking Applied to Safety; MIT Press: Cambridge, MA, USA; London, UK, 2011. [Google Scholar]

- Fabiano, B.; Reverberi, A.P.; Varbanov, P.S. Safety opportunities for the synthesis of metal nanoparticles and short-cut approach to workplace risk evaluation. J. Clean. Prod. 2019, 209, 297–308. [Google Scholar] [CrossRef]

- Flaspöler, E.; Reinert, D.; Brun, E. Expert Forecast on Emerging Physical Risks Related to Occupational Safety and Health; European Agency for Safety and Health at Work: Bilbao, Spain, 2009; pp. 176–198. [Google Scholar]

- Brocal, F.; Sebastián, M.A. Identification and Analysis of Advanced Manufacturing Processes Susceptible of Generating New and Emerging Occupational Risks. Procedia Eng. 2015, 132, 887–894. [Google Scholar] [CrossRef]

- Onnasch, L.; Wickens, C.D.; Li, H.; Manzey, D. Human performance consequences of stages and levels of automation: An integrated meta-analysis. Hum. Factors 2014, 56, 476–488. [Google Scholar] [CrossRef]

- Hovden, J.; Albrechtsen, E.; Herrera, I.A. Is there a need for new theories, models and approaches to occupational accident prevention? Saf. Sci. 2010, 48, 950–956. [Google Scholar] [CrossRef]

- Hulme, A.; Stanton, N.A.; Walker, G.H.; Waterson, P.; Salmon, P.M. What do applications of systems thinking accident analysis methods tell us about accident causation? A systematic review of applications between 1990 and 2018. Saf. Sci. 2019, 117, 164–183. [Google Scholar] [CrossRef]

- Dekker, S.; Cilliers, P.; Hofmeyr, J.-H. The complexity of failure: Implications of complexity theory for safety investigations. Saf. Sci. 2011, 49, 939–945. [Google Scholar] [CrossRef]

- Salmon, P.M.; Walker, G.H.; Read, G.J.M.; Goode, N.; Stanton, N.A. Fitting methods to paradigms: Are ergonomics methods fit for systems thinking? Ergonomics 2017, 60, 194–205. [Google Scholar] [CrossRef]

- Yousefi, A.; Rodriguez Hernandez, M.; Lopez Peña, V. Systemic accident analysis models: A comparison study between AcciMap, FRAM, and STAMP. Process Saf. Prog. 2018, 38. [Google Scholar] [CrossRef]

- ISO 31010. Risk Management-Risk Assessment Techniques; International Organization for Standardization: Geneva, Switzerland, 2009. [Google Scholar]

- Lees, F.P. Loss Prevention in the Process Industries: Hazard Identification, Assessment and Control; Butterworths: Oxford, UK, 1980. [Google Scholar]

- Johnson, W.G.; National Safety Council. MORT Safety Assurance Systems; Marcel Dekker, Incorporated: New York, NY, USA, 1980. [Google Scholar]

- International Labour Organisation. Major Hazard Control: A Practical Manual: An ILO Contribution to the International Programme on Chemical Safety of UNEP, ILO, WHO (IPCS); International Labour Office: Geneva, Switzerland, 1988. [Google Scholar]

- Bahr, N.J. System Safety Engineering and Risk Assessment: A Practical Approach; Taylor and Francis: Washington, DC, USA, 1997. [Google Scholar]

- Khanzode, V.V.; Maiti, J.; Ray, P.K. Occupational injury and accident research: A comprehensive review. Saf. Sci. 2012, 50, 1355–1367. [Google Scholar] [CrossRef]

- ISO 45001. Occupational Health and Safety Management Systems Requirements with Guidance for Use; International Organization for Standardization: Geneva, Switzerland, 2018. [Google Scholar]

- Reis, M.; Gins, G. Industrial Process Monitoring in the Big Data/Industry 4.0 Era: From Detection, to Diagnosis, to Prognosis. Processes 2017, 5, 35. [Google Scholar] [CrossRef]

- De Felice, F.; Petrillo, A.; Di Salvo, B.; Zomparelli, F. Prioritising the Safety Management Elements Through AAHP Model and Key Performance Indicators. In Proceedings of the Conference on Modeling and Applied Simulation, Larnaca, Cyprus, 26–28 September 2016. [Google Scholar]

- Chan, A.H.S.; Kwok, W.Y.; Duffy, V.G. Using AHP for determining priority in a safety management system. Ind. Manag. Data Syst. 2004, 104, 430–445. [Google Scholar] [CrossRef]

- Antonsen, S. Safety Culture Assessment: A Mission Impossible? J. Contingencies Crisis Manag. 2009, 17, 242–254. [Google Scholar] [CrossRef]

- Le Coze, J.C. How safety culture can make us think. Saf. Sci. 2019, 118, 221–229. [Google Scholar] [CrossRef]

- Dekker, S.; Nyce, J.M. There is safety in power, or power in safety. Saf. Sci. 2014, 67, 44–49. [Google Scholar] [CrossRef]

- Pasman, H.J.; Rogers, W.J.; Mannan, M.S. Risk assessment: What is it worth? Shall we just do away with it, or can it do a better job? Saf. Sci. 2017, 99, 140–155. [Google Scholar] [CrossRef]

- Lundberg, J.; Rollenhagen, C.; Hollnagel, E. What-You-Look-For-Is-What-You-Find—The consequences of underlying accident models in eight accident investigation manuals. Saf. Sci. 2009, 47, 1297–1311. [Google Scholar] [CrossRef]

- Heinrich, H.W. Industrial Accident Prevention: A Scientific Approach; McGraw-Hill: New York, NY, USA, 1931. [Google Scholar]

- Amalberti, R. The Paradoxes of almost totally safe transportation systems. Saf. Sci. 2001, 37, 109–126. [Google Scholar] [CrossRef]

- Underwood, P.; Waterson, P. Accident Analysis Models and Methods: Guidance for Safety Professionals; Loughborough University: Loughborough, Leicestershire, UK, 2013. [Google Scholar]

- Ibl, M.; Čapek, J. A Behavioural Analysis of Complexity in Socio-Technical Systems under Tension Modelled by Petri Nets. Entropy 2017, 19, 572. [Google Scholar] [CrossRef]

- Carayon, P.; Hancock, P.; Leveson, N.; Noy, I.; Sznelwar, L.; van Hootegem, G. Advancing a sociotechnical systems approach to workplace safety – developing the conceptual framework. Ergonomics 2015, 58, 548–564. [Google Scholar] [CrossRef]

- Smith, D.; Veitch, B.; Khan, F.; Taylor, R. Understanding industrial safety: Comparing Fault tree, Bayesian network, and FRAM approaches. J. Loss Prev. Process Ind. 2017, 45, 88–101. [Google Scholar] [CrossRef]

- Patriarca, R.; Di Gravio, G.; Costantino, F. A Monte Carlo evolution of the Functional Resonance Analysis Method (FRAM) to assess performance variability in complex systems. Saf. Sci. 2017, 91, 49–60. [Google Scholar] [CrossRef]

- Patriarca, R.; Falegnami, A.; Costantino, F.; Bilotta, F. Resilience engineering for socio-technical risk analysis: Application in neuro-surgery. Reliab. Eng. Syst. Saf. 2018, 180, 321–335. [Google Scholar] [CrossRef]

- Mosleh, A. PRA: A Perspective on Strengths, Current Limitations, and Possible Improvements. Nucl. Eng. Technol. 2014, 46, 1–10. [Google Scholar] [CrossRef]

- Paté-Cornell, E.; Dillon, R. Probabilistic risk analysis for the NASA space shuttle: A brief history and current work. Reliab. Eng. Syst. Saf. 2001, 74, 345–352. [Google Scholar] [CrossRef]

- Wellock, T.R. A Figure of Merit: Quantifying the Probability of a Nuclear Reactor Accident. Technol. Cult. 2017, 58, 678–721. [Google Scholar] [CrossRef]

- Kritzinger, D. Aircraft System Safety: Assessments for Initial Airworthiness Certification; Elsevier, Woodhead Publishing: Cambridge, UK, 2017. [Google Scholar] [CrossRef]

- Zadeh, L.A. Is there a need for fuzzy logic? Inf. Sci. 2008, 178, 2751–2779. [Google Scholar] [CrossRef]

- Leveson, N. The Use of Safety Cases in Certification and Regulation. J. Syst. Saf. 2011, 47, 1–9. [Google Scholar]

- Alvarenga, M.A.B.; Frutuoso e Melo, P.F.; Fonseca, R.A. A critical review of methods and models for evaluating organizational factors in Human Reliability Analysis. Prog. Nucl. Energy 2014, 75, 25–41. [Google Scholar] [CrossRef]

- Roy, N.; Eljack, F.; Jiménez-Gutiérrez, A.; Zhang, B.; Thiruvenkataswamy, P.; El-Halwagi, M.; Mannan, M.S. A review of safety indices for process design. Curr. Opin. Chem. Eng. 2016, 14, 42–48. [Google Scholar] [CrossRef]

- Khan, F.I.; Husain, T.; Abbasi, S.A. Safety Weighted Hazard Index (SWeHI). Process Saf. Environ. Prot. 2001, 79, 65–80. [Google Scholar] [CrossRef]

- Hollnagel, E. Safety-I and Safety-II, The Past and Future of Safety Management; Ashgate: Farnham, UK; Surrey, UK; Burlington, VT, USA, 2014; p. 200. [Google Scholar]

- Leveson, N.G. Applying systems thinking to analyze and learn from events. Saf. Sci. 2011, 49, 55–64. [Google Scholar] [CrossRef]

- Turner, B.A.; Pidgeon, N.F. Man-Made Disasters; Butterworth-Heinemann: Oxford, UK, 1978. [Google Scholar]

- Perrow, C. Normal Accidents: Living with High Risk Technologies, 2nd ed.; Princeton University Press: Princeton, NJ, USA, 1984. [Google Scholar]

- Le Coze, J.C. Reflecting on Jens Rasmussen’s legacy. A strong program for a hard problem. Saf. Sci. 2015, 71, 123–141. [Google Scholar] [CrossRef]

- Woods, D.D.; Cook, R.I. Nine Steps to Move Forward from Error. Cogn. Technol. Work 2002, 4, 137–144. [Google Scholar] [CrossRef]

- Dekker, S.; Hollnagel, E.; Woods, D.D.; Cook, R. Resilience Engineering: New Directions for Measuring and Maintaining Safety in Complex Systems; Lund University School of Aviation: Lund, Sweden, 2008. [Google Scholar]

- Borys, D.; Leggett, S. The fifth age of safety: The adaptive age? J. Health Saf. Res. Pract. 2009, 1, 19–27. [Google Scholar]

- Xu, L.D.; He, W.; Li, S. Internet of Things in Industries: A Survey. IEEE Trans. Ind. Inform. 2014, 10, 2233–2243. [Google Scholar] [CrossRef]

- Colgate, J.E.; Wannasuphoprasit, W.; Peshkin, M.A. Cobots: Robots for Collaboration with Human Operators. In Proceedings of the International Mechanical Engineering Congress and Exhibition, Atlanta, GA, USA, 17–22 November 1996; pp. 433–439. [Google Scholar]

- Hollnagel, E. FRAM: The Functional Resonance Analysis Method: Modelling Complex Socio-Technical Systems; Ashgate Publishing, Limited: Farnham, UK, 2012. [Google Scholar]

- Hollnagel, E.; Leonhardt, J.; Licu, T.; Shorrock, S. From Safety-I to Safety-II: A White Paper; Eurocontrol: Brussels, Belgium, 2013. [Google Scholar]

- Hollnagel, E. Human Reliability Assessment in Context. Nucl. Eng. Technol. 2005, 37, 159–166. [Google Scholar]

- Shorrock, S.T.; Kirwan, B. Development and application of a human error identification tool for air traffic control. Appl. Ergon. 2002, 33, 319–336. [Google Scholar] [CrossRef]

- Boring, R.L.; Hendrickson, S.M.L.; Forester, J.A.; Tran, T.Q.; Lois, E. Issues in benchmarking human reliability analysis methods: A literature review. Reliab. Eng. Syst. Saf. 2010, 95, 591–605. [Google Scholar] [CrossRef]

- Reason, J.; Hollnagel, E.; Paries, J. Revisiting The Swiss Cheese Model of Accidents; Eurocontrol Experimental Centre: Brétigny-sur-Orge, France, 2006. [Google Scholar]

- Hollnagel, E.; Woods, D.D.; Leveson, N. Resilience Engineering: Concepts and Precepts; Ashgate: Farnham, UK, 2006. [Google Scholar]

- Hale, A.; Borys, D. Working to rule, or working safely? Part 1: A state of the art review. Saf. Sci. 2013, 55, 207–221. [Google Scholar] [CrossRef]

- Braithwaite, J.; Wears, R.L.; Hollnagel, E. Resilient Health Care: Reconciling Work-as-Imagined and Work-as-Done; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- Hollnagel, E. The Nitty-Gritty of Human Factors. In Human Factors and Ergonomics in Practice: Improving Performance and Well-Being in the Real World; Shorrock, S., Williams, C., Eds.; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA, 2017. [Google Scholar]

- Hollnagel, E. CREAM—Cognitive Reliability and Error Analysis Method. Available online: http://erikhollnagel.com/ideas/cream.html (accessed on 10 August 2018).

- Bjerga, T.; Aven, T.; Zio, E. Uncertainty treatment in risk analysis of complex systems: The cases of STAMP and FRAM. Reliab. Eng. Syst. Saf. 2016, 156, 203–209. [Google Scholar] [CrossRef]

- Underwood, P.; Waterson, P. Systemic accident analysis: examining the gap between research and practice. Accid. Anal. Prev. 2013, 55, 154–164. [Google Scholar] [CrossRef] [PubMed]

- Patriarca, R.; Bergström, J.; Di Gravio, G. Defining the functional resonance analysis space: Combining Abstraction Hierarchy and FRAM. Reliab. Eng. Syst. Saf. 2017, 165, 34–46. [Google Scholar] [CrossRef]

- Stanton, N.A. Representing distributed cognition in complex systems: How a submarine returns to periscope depth. Ergonomics 2014, 57, 403–418. [Google Scholar] [CrossRef] [PubMed]

- de Vries, L.; Bligård, L.-O. Visualising safety: The potential for using sociotechnical systems models in prospective safety assessment and design. Saf. Sci. 2019, 111, 80–93. [Google Scholar] [CrossRef]

- Leveson, N. A new accident model for engineering safer systems. Saf. Sci. 2004, 42, 237–270. [Google Scholar] [CrossRef]

- Walker, G.H.; Gibson, H.; Stanton, N.A.; Baber, C.; Salmon, P.; Green, D. Event Analysis of Systemic Teamwork (EAST): A novel integration of ergonomics methods to analyse C4i activity. Ergonomics 2006, 49, 1345–1369. [Google Scholar] [CrossRef]

- Salmon, P.M.; Stanton, N.A.; Walker, G.H.; Jenkins, D.P. Distributed Situation Awareness: Theory, Measurement and Application to Teamwork; Ashgate Publishing Limited: Farnham, UK, 2009. [Google Scholar]

- Patriarca, R.; Bergström, J.; Di Gravio, G.; Costantino, F. Resilience engineering: Current status of the research and future challenges. Saf. Sci. 2018, 102, 79–100. [Google Scholar] [CrossRef]

- Woltjer, R. Resilience Assessment Based on Models of Functional Resonance. In Proceedings of the 3rd Symposium on Resilience Engineering, Antibes-Juan-les-Pins, France, 28–30 October 2008. [Google Scholar]

- Woods, D.D. Four concepts for resilience and the implications for the future of resilience engineering. Reliab. Eng. Syst. Saf. 2015, 141, 5–9. [Google Scholar] [CrossRef]

- Moppett, I.K.; Shorrock, S.T. Working out wrong-side blocks. Anaesthesia 2018, 73, 407–420. [Google Scholar] [CrossRef]

- Adriaensen, A.; Patriarca, R.; Smoker, A.; Bergstrom, J. A socio-technical analysis of functional properties in a joint cognitive system: A case study in an aircraft cockpit. Ergonomics 2019. [Google Scholar] [CrossRef] [PubMed]

- Patriarca, R.; Adriaensen, A.; Peters, M.; Putnam, J.; Costantino, F.; Di Gravio, G. Receipt and Dispatch of an Aircraft: A Functional Risk Analysis. In Proceedings of the 8th REA Symposium Embracing Resilience: Scaling Up and Speeding Up, Kalmar, Sweden, 24–27 June 2019. [Google Scholar]

- Clay-Williams, R.; Hounsgaard, J.; Hollnagel, E. Where the rubber meets the road: Using FRAM to align work-as-imagined with work-as-done when implementing clinical guidelines. Implement. Sci. 2015, 10, 125. [Google Scholar] [CrossRef] [PubMed]

- Green, B.N.; Johnson, C.D.; Adams, A. Writing narrative literature reviews for peer-reviewed journals: Secrets of the trade. J. Chiropr. Med. 2006, 5, 101–117. [Google Scholar] [CrossRef]

- Smith, K.M.; Valenta, A.L. Safety I to Safety II: A Paradigm Shift or More Work as Imagined? Comment on “False Dawns and New Horizons in Patient Safety Research and Practice”. Int. J. Health Policy Manag. 2018, 7, 671–673. [Google Scholar] [CrossRef]

- Nemeth, C.P.; Cook, R.I.; O’Connor, M.; Klock, P.A. Using Cognitive Artifacts to Understand Distributed Cognition. IEEE Trans. Syst. Man Cybern.-Part A 2004, 34, 726–735. [Google Scholar] [CrossRef]

- Hollnagel, E.; Woods, D.D. Joint Cognitive Systems: Patterns in Cognitive Systems Engineering; Taylor & Francis: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2006. [Google Scholar]

- Woods, D.D.; Roth, E.M. Cognitive Engineering: Human Problem Solving with Tools. Hum. Factors 1988, 30, 415–430. [Google Scholar] [CrossRef]

- Bradshaw, J.M.; Hoffman, R.R.; Johnson, M.; Woods, D.D. The Seven Deadly Myths of “Autonomous Systems”. IEEE Intell. Syst. 2013, 28, 54–61. [Google Scholar] [CrossRef]

- de Winter, J.C.F.; Dodou, D. Why the Fitts list has persisted throughout the history of function allocation. Cogn. Technol. Work 2011, 16, 1–11. [Google Scholar] [CrossRef]

- Dekker, S.W.A.; Woods, D.D. MABA-MABA or Abracadabra? Progress on Human-Automation Co-ordination. Cogn. Technol. Work 2002, 4, 240–244. [Google Scholar] [CrossRef]

- Klein, G.; Woods, D.D.; Bradshaw, J.M.; Hoffman, R.R.; Feltovich, P.J. Ten Challenges for Making Automation a “Team Player” in Joint Human-Agent Activity. IEEE Intell. Syst. 2004, 19, 91–95. [Google Scholar] [CrossRef]

- Hoffman, R.R.; Johnson, M.; Bradshaw, J.M.; Underbrink, A. Trust in Automation. IEEE Intell. Syst. 2013, 28, 84–88. [Google Scholar] [CrossRef]

- Lyons, J.B.; Clark, M.A.; Wagner, A.R.; Schuelke, M.J. Certifiable Trust in Autonomous Systems: Making the Intractable Tangible. AI Mag. 2017, 38, 37–49. [Google Scholar] [CrossRef]

- Nayyar, M.; Wagner, A.R. When Should a Robot Apologize? Understanding How Timing Affects Human-Robot Trust Repair; Springer: Cham, Switzerland, 2018; pp. 265–274. [Google Scholar]

- D’Addona, D.M.; Bracco, F.; Bettoni, A.; Nishino, N.; Carpanzano, E.; Bruzzone, A.A. Adaptive automation and human factors in manufacturing: An experimental assessment for a cognitive approach. CIRP Ann. 2018, 67, 455–458. [Google Scholar] [CrossRef]

- BEA. Final Accident Report on Flight AF 447; Accident Report; Bureau d’Enquêtes et d’Analyses pour la sécurité de l’aviation civile: Paris, France, 2012.

- Nederlandse Onderzoeksraad voor Veiligheid. Neergestort Tijdens Nadering, Boeing 737–800, Nabij Amsterdam Schiphol Airport, 25 Februari 2009; Nederlandse Onderzoeksraad voor Veiligheid: The Hague, The Netherlands, 2010. [Google Scholar]

- Sarter, N. Investigating mode errors on automated flight decks: Illustrating the problem-driven, cumulative, and interdisciplinary nature of human factors research. Hum. Factors 2008, 50, 506–510. [Google Scholar] [CrossRef] [PubMed]

- Sarter, N.B.; Woods, D.D. How in the World Did We Ever Get into That Mode? Mode Error and Awareness in Supervisory Control. Hum. Factors 1995, 37, 5–19. [Google Scholar] [CrossRef]

- Dekker, S. Patient Safety: A Human Factors Approach; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2011. [Google Scholar]

- Woods, D.D. The Risks of Autonomy. J. Cogn. Eng. Decis. Mak. 2016, 10, 131–133. [Google Scholar] [CrossRef]

- Wiener, E.L. Human Factors of Advanced Technology (“Glass Cockpit”) Transport Aircraft; Ames Research Center, NASA: Moffett Field, CA, USA, 1989. [Google Scholar]

- Cook, R.I.; Woods, D.D.; Howie, M.B.; McColligan, E. Cognitive Consequences of ‘Clumsy’ Automation on High Workload, High Consequence Human Performance. Paper presented at the Fourth Annual Workshop on Space Operations Applications and Research (SOAR 90), Albuquerque, NM, USA, 26–28 June 1990; pp. 543–546. [Google Scholar]

- Lee, J.D.; Seppelt, B.D. Human Factors and Ergonomics in Automation Design. In Handbook of Human Factors and Ergonomics; Salvendy, G., Ed.; Wiley: Hoboken, NJ, USA, 2012. [Google Scholar] [CrossRef]

- Woods, D.D.; McColligan, E.; Howie, M.B.; Cook, R.I. Cognitive Consequences of Clumsy Automation on High Workload, High Consequence Human Performance; NASA Publication: Washington, DC, USA, 1991. [Google Scholar]

- Fitts, P.M. Human Engineering for an Effective Air-Navigation and Traffic-Control System; National Research Council: Washington, DC, USA, 1951. [Google Scholar]

- Bainbridge, L. Ironies of automation. Automatica 1983, 19, 775–779. [Google Scholar] [CrossRef]

- Woods, D.D.; Sarter, N.B. Capturing the dynamics of attention control from individual to distributed systems: The shape of models to come. Theor. Issues Ergon. Sci. 2010, 11, 7–28. [Google Scholar] [CrossRef]

- Department of Defense. Autonomy Community of Interest (COI), Test and Evaluation, Verification and Validation (TEVV) Working Group, Technology Investment Strategy 2015–2018; Office of the Assistant Secretary of Defense for Research & Engineering: Washington, DC, USA, 2015. [Google Scholar]

- Dekker, S. Report of the Flight Crew Human Factors Investigation Conducted for the Dutch Safety Board Into the Accident of TK1951, Boeing 737–800 Near Amsterdam Schiphol Airport, February 25 2009; Lunds Universitet, School of Aviation: Lund, Sweden, 2009. [Google Scholar]

- Banks, V.A.; Stanton, N.A. Analysis of driver roles: Modelling the changing role of the driver in automated driving systems using EAST. Theor. Issues Ergon. Sci. 2019, 20, 284–300. [Google Scholar] [CrossRef]

- Dekker, S. The Field Guide to Understanding Human Error; Ashgate: Farnham, UK, 2006. [Google Scholar]

- Abdulkhaleq, A.; Wagner, S.; Leveson, N. A Comprehensive Safety Engineering Approach for Software-Intensive Systems Based on STPA. Procedia Eng. 2015, 128, 2–11. [Google Scholar] [CrossRef]

- Friedberg, I.; McLaughlin, K.; Smith, P.; Laverty, D.; Sezer, S. STPA-SafeSec: Safety and security analysis for cyber-physical systems. J. Inf. Secur. Appl. 2017, 34, 183–196. [Google Scholar] [CrossRef]

- Patriarca, R.; Di Gravio, G.; Costantino, F. myFRAM: An open tool support for the functional resonance analysis method. In Proceedings of the 2017 2nd International Conference on System Reliability and Safety (ICSRS), Milan, Italy, 20–22 December 2017; pp. 439–443. [Google Scholar]

- Albery, S.; Borys, D.; Tepe, S. Advantages for risk assessment: Evaluating learnings from question sets inspired by the FRAM and the risk matrix in a manufacturing environment. Saf. Sci. 2016, 89, 180–189. [Google Scholar] [CrossRef]

- Gattola, V.; Patriarca, R.; Tomasi, G.; Tronci, M. Functional resonance in industrial operations: A case study in a manufacturing plant. IFAC-PapersOnLine 2018, 51, 927–932. [Google Scholar] [CrossRef]

- Melanson, A.; Nadeau, S. Managing OHS in Complex and Unpredictable Manufacturing Systems: Can FRAM Bring Agility? Springer: Cham, Switzerland, 2016; Volume 490, pp. 341–348. [Google Scholar]

- Zheng, Z.; Tian, J.; Zhao, T. Refining operation guidelines with model-checking-aided FRAM to improve manufacturing processes: A case study for aeroengine blade forging. Cogn. Technol. Work 2016, 18, 777–791. [Google Scholar] [CrossRef]

- Salmon, P.M.; Read, G.J.M.; Walker, G.H.; Goode, N.; Grant, E.; Dallat, C.; Carden, T.; Naweed, A.; Stanton, N.A. STAMP goes EAST: Integrating systems ergonomics methods for the analysis of railway level crossing safety management. Saf. Sci. 2018, 110, 31–46. [Google Scholar] [CrossRef]

- Patriarca, R.; Falegnami, A.; Bilotta, F. Embracing simplexity: The role of artificial intelligence in peri-procedural medical safety. Expert Rev. Med. Devices 2019, 16, 77–79. [Google Scholar] [CrossRef]

- Abdulkhaleq, A.; Wagner, S. XSTAMPP: An eXtensible STAMP Platform as Tool Support for Safety Engineering; University of Stuttgart: Stuttgart, Germany, 2015. [Google Scholar]

- Patriarca, R.; Costantino, F.; Di Gravio, G. myFRAM: An IT Open Tool to Support the Application of the FRAM. Available online: https://functionalresonance.com/onewebmedia/23%20FRAMily%202018_myFRAM_Patriarca%20Riccardo%20et%20al.pdf (accessed on 10 August 2019).

- The Functional Resonance Analysis Method, FRAM Model Visualiser (FMV). Available online: https://functionalresonance.com/FMV/index.html (accessed on 15 August 2019).

- Patriarca, R.; Del Pinto, G.; Di Gravio, G.; Costantino, F. FRAM for Systemic Accident Analysis: A Matrix Representation of Functional Resonance. Int. J. Reliab. Qual. Saf. Eng. 2017. [Google Scholar] [CrossRef]

- Or, L.B.; Arogeti, S.; Hartmann, D. Challenges in Future Mathematical Modelling of Hierarchical Functional Safety Control Structures within STAMP Safety Model. In Proceedings of the MATEC Web of Conferences, Amsterdam, The Netherlands, 2 November 2018. [Google Scholar] [CrossRef]

- Rosa, L.V.; Haddad, A.N.; de Carvalho, P.V.R. Assessing risk in sustainable construction using the Functional Resonance Analysis Method (FRAM). Cogn. Technol. Work 2015, 17, 559–573. [Google Scholar] [CrossRef]

- Vincent, C.; Amalberti, R. Safer Healthcare, Strategies for the Real World; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

| Method | Concept [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Paradigm | Basis for Structuring | Coupling/Tractability |

|---|---|---|---|---|

| Energy Analysis [9] | Identifies energies that can harm human beings. | Energy barrier thinking | Volumes that jointly cover the entire object | Loose coupling—tractable |

| Hazard and Operability Studies (HAZOP) [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Identifies deviations from intended design of equipment, based on the use of predetermined guide words. It is generally carried out by a multi-disciplinary team during a set of meetings. | Linear causality | Deviation of operational parameters | Tight coupling—tractable |

| Failure Mode and Effect Analysis (FMEA) [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Identifies failures of components and their effects on the system. | Linear causality and decompositional analysis | Reliability from components or modules | Tight coupling—tractable |

| Fault Tree Analysis [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Causal factors are deductively identified, organized in a logical manner and represented pictorially in a tree diagram that depicts causal factors and their logical relationships to the top event. | Energy barrier thinking, linear causality and decompositional analysis | Fault propagation resulting from initial event | Loose coupling—tractable |

| Event (Effect) Tree Analysis [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Analyzes alternative consequences of a specified hazardous event. | Energy barrier thinking, single cause philosophy and decompositional analysis | Fault propagation back to initial event | Loose coupling—tractable |

| Action Error Method [9] | Identifies departures from specified job procedures that can lead to hazards. | Taylorism | Phases of work of operator | Loose coupling—tractable |

| Job Safety Analysis [9] | Identifies hazards in job procedures. | Rationalist, prescriptive and top-down belief in procedures and Taylorism | Elements in an individual job task | Loose coupling—tractable |

| Deviation Analysis [9] | Identifies deviations from the planned and normal production processes. | Rationalist, prescriptive and top-down belief in procedures and Taylorism | Activities (e.g., activity flow or job procedure) | Loose coupling—tractable |

| Safety Function Analysis [9] | A structured description of a system’s safety functions, including an evaluation of their adequacies and weaknesses. | Energy barrier thinking | Defenses or safety functions of the system | Loose coupling—tractable |

| Change Analysis [9] | Establishes the causes of problems through comparisons with problem-free situations. | Failure without acknowledging context sensitivity or emergent behavior | Discrepancy between as-is and as-should-be situation | Loose coupling—tractable |

| Root Cause Analysis (RCA) [26] | Attempts to identify the roots or original causes instead of dealing only with immediately obvious symptoms. | Single cause philosophy, linear causality and decompositional analysis | Initiating failure causes and effects | Loose coupling—tractable |

| Human Reliability Assessment (HRA) [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] | Identification and prediction of human errors in relation to strictly predefined tasks. | Human reliability assessment and Taylorism | Human error | Loose coupling—tractable |

| Deterministic Probabilistic Risk Assessment (e.g., Risk Indices -FN Curves (the cumulative frequency ‘F’ of people affected ‘N’) [26] | Deterministic probabilistic risk assessment (PRA) produces a semi-quantitative measurement of risks based on frequency and severity scales. | (Semi-)quantitative causality credo | Ordinal or cumulative frequency and/or severity of harmful events | Loose coupling—tractable |

| Databases (e.g., Reaction Matrix—Consequence Analysis) [26] | Analysis of consequences of chemical risks like fire, explosions, the release of toxic gases or the determination of toxic effects or combinations of chemicals. | Database | Chemical and physical reactions | Loose coupling—tractable |

| Cognitive Task Analysis [26] | An analysis method that addresses the underlying mental processes that give rise to errors. | Task analysis as the key to understanding system mismatches | Tasks | Loose coupling—tractable |

| Bayesian Networks [26] | A method that uses a graphical model to represent a set of variables and their probabilistic relationships. The network consists of nodes, each representing a random variable, and arrows that link parent nodes to child nodes. | Epidemiological causation model | Events (and their related degrees of belief) | Tight coupling—tractable |

| Layer Protection Analysis (LOPA) [26] | LOPA is a semi-quantitative method for estimating the risks associated with undesired events or scenarios and the presence of sufficient measures to control them. A cause–consequence pair is selected, and the preventive layers of protection are identified. | Epidemiological causation model and energy barrier thinking | Multiple defenses | Tight coupling—tractable |

| Bowtie Analysis [26] | A simple, diagrammatic way of describing and analyzing the pathways of a risk from causes to consequences. The focus of the bowtie is on the barriers between the causes and the risk, and the risks and consequences. | Epidemiological causation model and energy barrier thinking | Multiple causes and defenses | Tight coupling—tractable |
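
The Bayesian Networks row above describes nodes as random variables and arrows as parent-to-child probabilistic links. A minimal sketch of that idea follows, assuming a hypothetical two-node network (Hazard → Alarm); the node names and all probabilities are illustrative assumptions and do not come from the reviewed literature.

```python
# Hypothetical two-node Bayesian network: Hazard -> Alarm.
# Every number below is an illustrative assumption, not data from the review.

P_HAZARD = 0.01  # prior probability of the parent node, P(Hazard = True)

# Conditional probability table of the child node: P(Alarm = True | Hazard)
P_ALARM_GIVEN = {True: 0.95, False: 0.02}


def prob_alarm() -> float:
    """Marginal P(Alarm = True), summing over the states of the parent node."""
    return (P_ALARM_GIVEN[True] * P_HAZARD
            + P_ALARM_GIVEN[False] * (1.0 - P_HAZARD))


def prob_hazard_given_alarm() -> float:
    """Posterior P(Hazard = True | Alarm = True), obtained with Bayes' rule."""
    return P_ALARM_GIVEN[True] * P_HAZARD / prob_alarm()


if __name__ == "__main__":
    print(f"P(alarm) = {prob_alarm():.4f}")
    print(f"P(hazard | alarm) = {prob_hazard_given_alarm():.4f}")
```

The posterior is the "degree of belief" in the hazard once the alarm has been observed, which is the quantity the table lists as the basis for structuring. Real networks have many nodes and are normally handled with dedicated inference software rather than by hand.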

| Method | Concept | Paradigm | Basis for Structuring | Coupling/Tractability |

|---|---|---|---|---|

| STAMP [16,21,24,79,80,83,84] | Creation of a model of the functional control structure for the system in question by identifying the system-level hazards, safety constraints and functional requirements. | Feedback control system | Most basic element in the model is a constraint, whereas basic structuring is the feedback control system | Tight coupling—intractable |

| FRAM [21,24,68,79,80,83] | Systemic analysis of complex process dependencies, based on the idea of resonance arising from the inherent variability of everyday performance. | Functional resonance | Dependencies among functions or tasks | Tight coupling—intractable |

| Event Analysis of Systemic Teamwork (EAST) [24,82,85] | A means of modeling distributed cognition in systems via three network models (i.e., task, social and information) and their combination. | Propositional network | Task, social and information network connections | Tight coupling—intractable |
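
The FRAM row above structures a system by dependencies among functions. As a rough sketch of how such dependencies could be represented, the snippet below assumes the six function aspects used in the general FRAM literature (input, output, precondition, resource, control, time) and derives couplings wherever one function's output matches an aspect that another function needs. The function names and labels are hypothetical, and this is not a substitute for a full FRAM analysis, which also assesses performance variability.

```python
from dataclasses import dataclass, field

# Incoming FRAM aspects; "output" is handled separately as the outgoing aspect.
INCOMING_ASPECTS = ("input", "precondition", "resource", "control", "time")


@dataclass
class Function:
    """One FRAM function (hypothetical, simplified representation)."""
    name: str
    outputs: set[str] = field(default_factory=set)
    # Maps an incoming aspect to the labels this function depends on.
    needs: dict[str, set[str]] = field(default_factory=dict)


def couplings(functions: list[Function]) -> list[tuple[str, str, str, str]]:
    """List (upstream, downstream, aspect, label) for every matching output."""
    found = []
    for up in functions:
        for down in functions:
            if up is down:
                continue
            for aspect in INCOMING_ASPECTS:
                shared = down.needs.get(aspect, set()) & up.outputs
                for label in sorted(shared):
                    found.append((up.name, down.name, aspect, label))
    return found


# Hypothetical example of a collaborative work cell.
plan = Function("Plan task", outputs={"work order"})
clear = Function("Clear cell", outputs={"cell cleared"})
operate = Function(
    "Operate cobot",
    needs={"input": {"work order"}, "precondition": {"cell cleared"}},
)

if __name__ == "__main__":
    for coupling in couplings([plan, clear, operate]):
        print(coupling)
```

Representing couplings as data makes it easy to ask which downstream functions are exposed when the output of an upstream function varies, which is the question the resonance concept is concerned with.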

| | Tractable | Intractable |

|---|---|---|

| Tightly coupled | Bayesian Networks; Layer Protection Analysis (LOPA); Bowtie Analysis; Hazard and Operability Studies (HAZOP); Failure Mode and Effects Analysis (FMEA) | EAST; FRAM; STAMP; Sneak Circuit Analysis; Monte Carlo Simulation 1 |

| Loosely coupled | Energy Analysis; Fault Tree Analysis; Event (Effect) Tree Analysis; Action Error Method; Job Safety Analysis; Deviation Analysis; Safety Function Analysis; Change Analysis; Root Cause Analysis; Human Reliability Assessment; Deterministic Probabilistic Risk Assessment (e.g., FN curves—Risk Indices); Databases (e.g., Reaction Matrix—Consequence Analysis); Cognitive Task Analysis; Audits | |

| Concept | Breakdown of Challenges | Label |

|---|---|---|

| Interconnectivity 1 | Oversimplifications in the face of the complexities of joint systems | Joint cognitive system as a system of distributed cognition with emergent behavior |

| | Oversimplification of functional allocation problems | Human–machine opposition fallacy |

| | Disintegrative units of analysis that separate humans, machines and interfaces | Separation fallacy |

| | Oversimplification of different degrees of substitution between people and automation given different levels of autonomy and authority of machines | Substitution myth |

| Autonomy 2 | Transform practice and coordination across human and machine roles | Envisioned world problem |

| | Create new kinds of cognitive work for humans, often at the wrong times; every automation advance will be exploited to require operational efficiency | The law of stretched systems |

| | Create more threads to track; makes it harder for people to remain aware of and integrate all of the activities and changes around them | Coordination costs |

| | New knowledge and skill demands are imposed on humans and humans might no longer have a sufficient context to make decisions, because they have been left out of the loop | Transformation of knowledge and expertise |

| | Coordinate/synchronize joint activity; make machine a team player. Team play with people and other agents is critical to success | Principles of interdependence |

| | Resulting explosion of features, options and modes creates new demands, types of errors and paths toward failure | Transparency of complex systems |

| | Machines, humans and macrocognitive work systems are fallible; errors are therefore systemic; new problems are associated with human–machine coordination breakdowns; machines now obscure information necessary for human decision making | Principles of complexity |

| Automation in Joint Human–Agent Activity 3 | To be a team player, an intelligent agent must fulfill an agreement (often tacit) to facilitate coordination, work toward shared goals and prevent breakdowns in team coordination | Shared knowledge, goals and intentions that are committed to goal alignment |

| | To be an effective team player, intelligent agents must be able to adequately model the other participants’ intentions and actions vis-à-vis the joint activity’s state and evolution | Adequate (shared) models |

| | Human–agent team members must be mutually predictable | Predictability |

| | Agents must be directable | Directability |

| | Agents must be able to make pertinent aspects of their status and intentions obvious to their teammates | Revealing status and intentions |

| | Agents must be able to observe and interpret pertinent signals of status and intentions | Interpretation of signals |

| | Agents must be able to engage in goal negotiation | Goal negotiation |

| | Support technologies for planning and autonomy must enable a collaborative approach | Collaboration |

| | All team members must help control the costs of coordinated activity (note: the authors mean cost as in effort, not financial cost) | Coordination cost control |

| Shift in Supervisory Control 4 | Supervisor must have real as well as titular authority | Elimination of responsibility—authority double binds |

| | Supervisor must be able to redirect a lower-order machine cognitive system when the machine’s problem solving breaks down | Operator has the authority to abort the operation; strategies for management of system boundaries |

| | Need for a common or shared representation of the state of the world and of the state of the problem-solving process | Adequate (shared) models |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Adriaensen, A.; Decré, W.; Pintelon, L. Can Complexity-Thinking Methods Contribute to Improving Occupational Safety in Industry 4.0? A Review of Safety Analysis Methods and Their Concepts. Safety 2019, 5, 65. https://doi.org/10.3390/safety5040065
