Abstract

Improving the reliability of automotive perceptive sensors in degraded weather conditions, including fog, is an important issue for road safety and the development of automated driving. Cerema has designed the PAVIN platform reproducing fog and rain conditions to evaluate optical automotive sensor performance under these conditions. In order to increase the variety of scenarios and technologies under test, the use of digital simulation becomes a major asset. The purpose of this paper is to revive the debate around the realism of the various models underlying the numerical methods. The simulation of the radiative transfer equation by Monte Carlo methods and by simplified noise models is examined. The results of this paper show some gaps in foggy scenes between the ray-tracing method, which is considered to be the most realistic, and simple models for contrast evaluation, which can have a particularly strong impact on obstacle detection algorithms.

1. Introduction

The development of automated mobility is at the heart of many roadmaps of professional associations in the mobility sector or of the institutions responsible for public economic and societal development policies [1,2]. These roadmaps highlight in particular the driving scenario approach to demonstrating the safety of automated road transport systems, even including scenarios based on digital simulation, taking into account degraded weather conditions among the various layers (infrastructure, objects on the road, manoeuvres, etc.) used to describe a scenario (French ADScene-MOSAR, German PEGASUS, Dutch StreetWise, Israeli CDV projects, etc.).

The development of automated vehicles requires the integration of several driving assistance technologies based on optical devices such as radar, LiDAR, camera, etc. These are used for obstacle detection, pedestrian detection, traffic sign recognition, trajectory correction, etc. The safety related to the performance of these devices is a major issue. It is well-known that these devices are perturbed by adverse weather conditions such as rain, snow, or fog [3,4,5,6,7,8]. Consequently, automotive suppliers and car manufacturers are overall interested in making the various technologies more reliable by testing them under extreme conditions and in the most exhaustive possible situations [4]. To achieve this, perception and driving assistance sensors, operating at different wavelengths, require the various meteorological conditions to be considered in addition to a large variety of targets to detect or recognize in the road scene. Then, testing these advanced and complex technologies is generally not feasible based only on experimental work. For this reason, digital simulation solutions are developed to massively test new and varied technologies under the most exhaustive conditions, which is not possible exclusively experimentally.

The Horizon Europe ROADVIEW project (Robust Automated Driving in Extreme Weather), supporting this work, aims to address the impact of harsh weather conditions on automotive perception sensors. Work Package 3, devoted to digital simulation, will develop sensor noise modelling to represent the variations in harsh weather characteristics and thus generate realistic synthetic sensor data. The goal is to build automated vehicle testing by simulation-assisted methods.

Cerema, equipped with its fog and rain PAVIN platform [9,10], has carried out work for years with several national and international partners aimed at better understanding the behaviour of the perception systems of intelligent vehicles under harsh weather conditions [11,12]. Additionally, in support of its experimental work, Cerema is also interested in the theoretical aspects of electromagnetic wave propagation under adverse meteorological conditions, including fog, in order to meet the challenges of numerical simulation of autonomous vehicles in all weather conditions [13].

Nowadays, there exist optical sensor simulators (for camera, radar and LiDAR) that consider atmospheric conditions such as fog or rain. We can cite some software: Ansys AVxcelerate Sensors [14], Carla Simulator [15,16], Pro-SiVIC [17,18,19], Electro-Optics sensor simulation [20,21], AVSimulation [22], 4DVirtualiz [23], Mitsuba [24], etc. Different electromagnetic wave propagation models are used in these simulators, ranging from simple Beer–Lambert type attenuation laws to complex offline ray-tracing simulations.

Numerical simulation plays an important role in the field of automated vehicles. Indeed, it reduces the costs of massive sensor testing, while ensuring optimal safety. This also makes certain dangerous scenarios feasible, such as testing a pedestrian detection system requiring the presence of a pedestrian in real-world simulation, thus risking being run over during the experiment. In several applications and in particular in those related to automated vehicles, the use of real-time simulation is an important issue because it provides access to all the simulation variables in real time. This instantaneous access is necessary in some cases such as X-in-the-loop applications to test sensors [25]. Most real-time simulators in the automotive and computer science community use Koschmieder’s model (from the Beer–Lambert method) to foggify sensor data [26,27,28,29], but only a few studies have addressed the question of the realism of the Koschmieder model among other fog models [28,29].

The main goal of this work is to revive the debate about the realism of fog models by comparing a simple commonly used 1D simulator based on Beer–Lambert type attenuation laws and a full Monte Carlo simulator developed by Cerema, called SWEET (Simulating WEather for intElligent Transportation systems). More specifically, we are interested in comparing the realism of simulated camera images in the visible wavelength range for two scenes (intra-urban scene and extra-urban scene) based on the SWEET simulator and on the simplified 1D model. In order to also compare our work to the literature, we also chose to compare our SWEET simulator to the Mitsuba simulator. Finally, there are studies in the literature that have addressed the impact of degraded meteorological conditions on other types of cameras operating in other wavelength ranges (NIR, SWIR, LWIR) [30]. These wavelengths are not the subject of this paper, but we will certainly address them in future work.

Section 2 details the mathematical background of the simulation tools which are compared: ray-tracing methods for the 3D radiative transfer simulation and Koschmieder law-based harsh weather noises. Section 3 describes the applications of the simulation methods to foggy scenes by camera imaging. Section 4 and Section 5 show the results of the investigation, respectively, by a visual comparison between the different simulation methods and by a more quantitative comparison based on relevant metrics for contrast assessment. Finally, Section 6 offers a conclusion to the study.

2. Mathematical Background for Modelling and Numerical Simulation

In order to model perception sensors, it is necessary to be able to model the path of a light ray in space, including under harsh weather conditions, such as fog. The propagation of electromagnetic waves in participating media, such as fog, is governed by the radiative transfer equation (RTE) in which the optical parameters (related to scattering, absorption and extinction) of the medium are considered. Assuming unpolarized light, by using the Mie scattering theory [31], these wavelength-dependent parameters can be computed thanks to the droplet size distribution (DSD) of the fog.

The RTE serves to simulate the radiance corresponding, for a wavelength , to the intensity of the electromagnetic energy flux (in W) of the radiation propagating in the direction u, per unit of area (in m) perpendicular to the direction of propagation, per unit of solid angle (in sr) and per unit of wavelength (in microns), and expressed in .

We recall here the RTE [31]:

where c is the speed of light, t, r, u, , , and denote, respectively, the time, the position in space, the wave propagation direction, the scattering coefficient, the absorption coefficient, the phase function for the wavelength and a space continuous source. The three-dimensional unit sphere is denoted by . By using the vocabulary dedicated to the rendering volumetric path tracing method, the terms in blue and green represent the out-scattering and absorption terms, respectively. These two terms together form the extinction term. The term in red corresponds to the in-scattering term, which is the most expensive part to calculate as it requires integration over all paths in the scene. Finally, the term in brown is for emission.

Optical passive objects and local light sources are taken into account thanks to the boundary conditions of Equation (1). For each light source occupying the space region S and emitting light from its surface , we have:

where denotes the outward normal vector of S at point , and is given. For each passive object occupying the space region O with a surface denoted by , we have:

where denotes the outward normal vector of O at point r, and is the BRDF of object O at point .

In the following, we will focus on the stationary case of Equation (1) with a homogeneous fog (, and do not depend on r) and a phase function depending only on (scalar product), hence:

where we note the extinction coefficient at the wavelength . Boundary conditions related to this stationary case are given by Equations (2) and (3) in which the time t is removed. The physical significance of the phase function is important. Indeed, for a photon moving at the speed of light in a medium, the phase function gives the probability of the resulting direction of this particle when it interacts with a water droplet. From an energy point of view, coefficients and define the amount of energy scattered and absorbed by the water droplets. These optical characteristics of the medium (, and ) are determined according to Mie theory and DSD measurements, under the assumption of spherical water droplets.

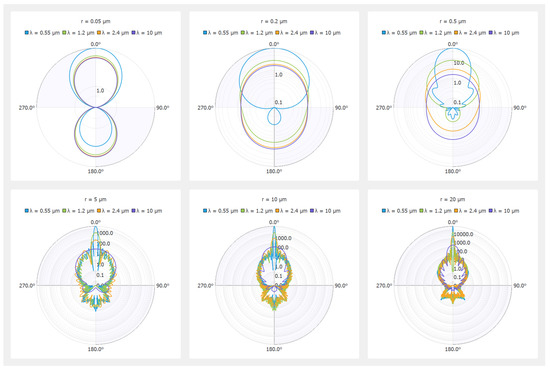

The phase function for six radii of spherical water droplets and different wavelengths from the visible to the thermal infrared range is shown in polar coordinates in Figure 1. One angle is sufficient to represent this phase function due to the spherical symmetry. We can notice a very weak influence of the wavelength on the phase function for small spheres (r = 0.05 m and r = 0.2 m), which is in accordance with Rayleigh’s theory under unpolarized incident light [31,32]. On the other hand, for sphere radii beyond 0.5 m, the influence of the wavelength is noticeable, and backscattering gradually disappears. Finally, it should be noted that all of the curves presented in Figure 1 consider the variation in the complex refractive index of water according to the wavelength. This shows the importance of using accurate microscopic fog data (actually the measured DSD), not just approximate models simply recalibrated on macroscopic fog density, as already explained [13].

Figure 1.

Polar representation of the phase function for six radii (r) of spherical particles and different wavelengths ().

The SWEET simulator developed by Cerema is a research-oriented and physically based simulator for internal use complementary to the Fog and Rain PAVIN platform. It is written in C++, runs on Linux and Microsoft Windows and uses OpenCL for GPU computing. Optimisation techniques such as a SAH kd-Tree acceleration technique of order O(N log N) (N being the number of triangular faces of a scene) are used. More details on these kd-Trees can be found in [33]. For the moment, the whole simulator is fully developed in-house at Cerema. Future work will allow the use of heavily optimized resources, such as the Embree library [34]. We wanted to develop this homemade tool, designed for applications related to the perceptual sensors of autonomous vehicles under adverse weather conditions, in order to be able to add building blocks and to be less dependent on other simulators.

SWEET parallelisation is performed on the rays and not on the volumes of 3D space. Indeed, the rays are distributed on the GPU calculation units. The simulator is designed to be as conservative as possible regarding memory consumption. The rate of convergence of the Monte Carlo algorithm is of order O, N being the number of traced rays. The computation time varies according to the complexity of the scene and the density of the participating medium: highly reflective materials and dense fog conditions slow down the convergence since in these cases the photons are likely to undergo multi-collision and multi-reflection. As an example, on a computer with an NVIDIA GeForce GTX 1080 graphic board, 12 CPU cores and 64 GB of memory, it takes 2 s to path-trace 10 million rays in a relatively complex scene (1.27 Million triangles) with a sparse isotropic participating medium. For a dense isotropic medium in the same scene, the same calculation takes 3 s.

The current version of SWEET only supports Lambertian surfaces; textures are not taken into account for the moment. Ongoing work will enable BSDFs for different types of scattering surfaces. The scattering of the participating medium is supported through measured/computed phase functions (tabbed functions). More scattering models will be implemented in the future (isotropic, Henyey–Greenstein, micro-flakes, etc.). SWEET supports the COLLADA file format, and additional integration is planned in the future. As the characteristics of the participating medium and those of the materials can depend on the wavelength, SWEET is spectral, and its calculations can be made in the range of visible wavelengths (VIS) as well as all wavelengths ranging from VIS up to thermal infrared (LWIR).

SWEET solves the RTE by using a (backward) Monte Carlo method based on full volumetric path tracing in a participating medium: a probabilistic representation of Equation (4) is provided by introducing a stochastic process mimicking photons moving in the medium and being able to interact with droplets of fog [31,35]. More precisely, a ray is sampled from the observation location before being traced along a random direction at the velocity of light c during a random duration following an exponential probabilistic law (the probability that is greater than a given deterministic value t is equal to ). If the photon reaches a light source, the random walk is finished. If it reaches an object, the reflective surface properties of this object are taken into account to modify the direction of the photon. If no source or object is reached, a new direction is sampled thanks to the phase function (collision with a water droplet) and a new duration . The algorithm will continue until a source or an absorbing material is reached.

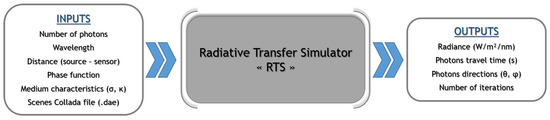

The main inputs/outputs of SWEET are illustrated in Figure 2.

Figure 2.

Illustration of the main inputs–outputs of the SWEET simulator.

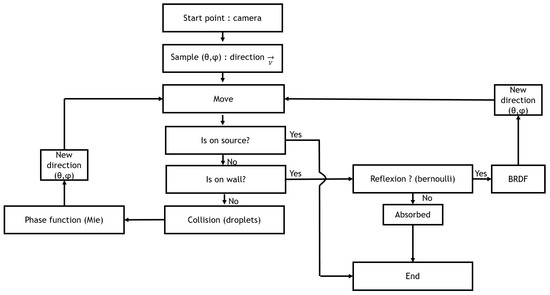

The implementation of the SWEET Monte Carlo computing engine generates N independent realisations according to the flowchart in Figure 3. In this flowchart, we see that the photon advances step-by-step in 3D space. At each step, we check whether it encounters a droplet of water or an obstacle in its path. If this is the case, we then evaluate whether the photon is totally absorbed or if it is deviated, then move on to the next iteration. For each realisation (simulation), we can calculate a weighted radiance. The average of all weighted radiance values gives an approximation of the radiance . Millions of paths need to be traced to obtain a good quality result without much noise.

Figure 3.

Diagram of the radiative transfer equation simulation method.

A particular case of Equation (4) is often used in a way to achieve an analytical solution. It consists of eliminating the collision term and assuming a constant source q. In this case, assuming there is no object and no local source between points and for x a real and , we have:

leading to the Beer–Lambert solution if :

The simple case presented above corresponds to the framework of the Koschmieder theory [36,37] allowing the contrast between a black object and a sky background to be evaluated based on visibility attenuation due to the extinction of the medium between the object and the observer. This theory is used in image processing to artificially add fog to an image: the intensity of a pixel is linked to the intensity without fog and an air–light intensity :

where is the real-world distance between the observer (camera) and the real point associated with the pixel , and is the extinction coefficient of the fog for the visible range ( 550 nm). In the sequel, we will call the hazing image method Equation (7) the 1D model or indifferently the Koschmieder model.

The visibility, or meteorological optical range (MOR), is the distance for which the luminous flux of a collimated light beam is reduced to 5% of its original value [38,39]. According to this definition, the visibility MOR is related to the extinction coefficient as follows:

It is important to mention that in Equation (8) is considered in the visible band and is assumed constant by the WMO [38]. This is not relevant for infrared wavelengths [40].

We can note that the 1D model Equation (7) can simulate a foggy scene if a previous image without fog is available, which is not the case for the Monte-Carlo-based 3D method.

The SWEET simulator and the 1D model are therefore implemented and used to model different use cases that we define in the following section.

3. Use Cases

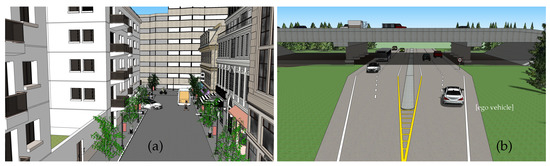

The SWEET simulator can be used in different ways depending on what the user wants to compute, whether it concerns physical quantities such as radiance and irradiance, in any wavelength domain, or the simulation of an entire sensor (i.e., a camera, a LiDAR, etc.). Here we present two use cases for the simulation of a 3D road scene with a camera (operating in the visible light range), as shown in Figure 4. The camera (pinhole model) is placed at the front of the ego vehicle for the extra-urban scene (Figure 4b) which is moving as in real conditions, and at the street entrance for the intra-urban scene (Figure 4a). Both day/night and different fog conditions are experimented.

Figure 4.

Collada-type models for the road scenes (intra-urban (a) and extra-urban (b)).

We first apply the SWEET simulator without fog in order to build a reference image, which is needed by the 1D model (Koschmieder) to obtain a foggy image. On the other hand, the scene is simulated again by using SWEET with foggy conditions. The results are compared in two steps: (i) first we compare the results of SWEET to those given by the Mitsuba renderer [24], this was performed only for the intra-urban scene in day conditions, (ii) then, the foggy images of SWEET are compared to those obtained by the Koschmieder model.

For a more objective comparison, we will use some basic metrics defined in Section 5. We could have made a more objective visual comparison through metrics such as the Visual Differences Predictor (VDP) [41] if we had a reference image (taken by a real camera for the same scene and under the same conditions). This will be added in subsequent work.

4. Visual Results

The intra-urban scene (Figure 4a) was simulated in day conditions. The daylight is taken into account in SWEET by setting an airlight radiance value ( W/m/sr, which is the radiance of the sun). The camera is a simple model of a perspective pinhole type, with consideration of three wavelengths for RGB channels (700 nm, 550 nm, 450 nm). More complex camera models can be implemented in the future (distortion, spectral responses of RGB filters, Bayer matrix, electronic, etc.).

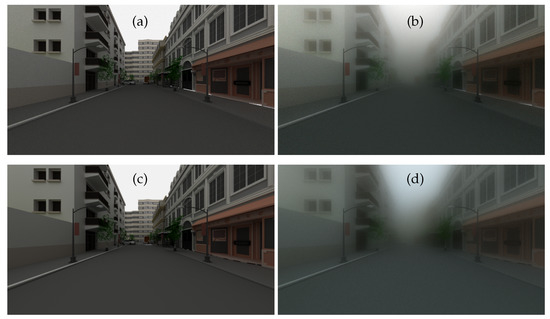

Figure 5 shows the rendering results obtained by SWEET (a and b) and by Mitsuba (c and d). The same tabbed phase functions as well as the same optical characteristics of the medium (albedo, extinction) are used in the two simulators, corresponding to a visibility of 20 m. These characteristics differ for the colour channels which are calculated separately, then merged to obtain the RGB images of Figure 5.

Figure 5.

Simulated images for the intra-urban scene in day conditions by the SWEET simulator without fog (a) and with fog (MOR = 20 m, (b)) and by the Mitsuba renderer without fog (c) and with fog (MOR = 20 m, (d)).

We note that the renderings of the two simulators are very similar by retaining the same assumptions (without textures, same linear interpolation of colours). Indeed, we did not use the textures in Mitsuba first so that we could compare the results with those of SWEET, which does not integrate the textures, but also because we are more interested in the effects of the fog on the rendering than in the side effects of scene textures. However, we notice the small difference in the colour of the sky in images with fog (Figure 5b,d). This is probably due to slight deviations in the airlight radiance values in Mitsuba for one or more channels. Future investigations will elucidate this point. These results required scene rework and colour re-calibration as Mitsuba is a bit different from SWEET in some aspects. Metrics will be used in the following section to continue the comparison of these images.

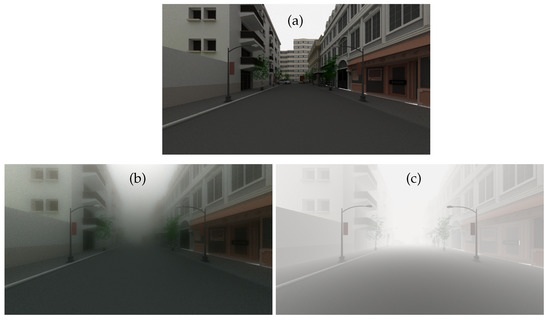

The images of SWEET without fog are used in the Koschmieder model by considering the same airlight radiance. The images in Figure 6 are obtained with the SWEET simulator without/with fog (a and b) and with the Koschmieder model for the same visibility and the same airlight radiance (c).

Figure 6.

Simulated images for the intra-urban scene with the SWEET simulator without fog (a) and with fog (MOR = 20 m, (b)) and with the Koschmieder model (c) in day conditions.

At first glance, one can appreciate the realism of the fog produced by the SWEET simulator compared to the Koschmieder model. Indeed, a blur effect can be noticed in the SWEET image, which is not the case for the Koschmieder image.

As we can notice in Figure 6c, Koschmieder’s model brightens the foggy image much more than SWEET (Figure 6b). This can be critical because some objects in the scene are not even visible with the SWEET simulation and are partially visible with the Koschmieder model (e.g., the two pedestrians on the right).

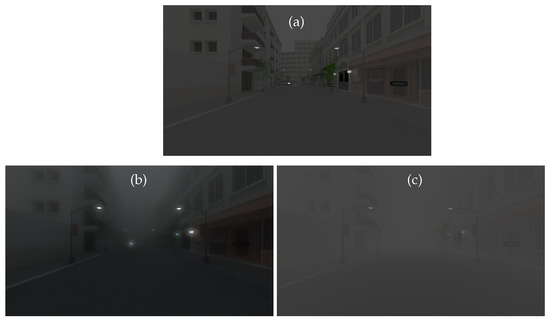

Now, we compare the models in night conditions, i.e., by considering a very low airlight radiance (5 W/m²/sr) in both models (the full 3D solution of the RTE given by SWEET and the Koschmieder model). The resulting images are given in Figure 7.

Figure 7.

Simulated images for the intra-urban scene with the SWEET simulator without fog (a) and with fog (MOR = 20 m, (b)) and with the Koschmieder model (c) in night conditions.

Once again, we can notice a nice rendering of the SWEET image (Figure 7b) compared to the one given by Koschmieder (Figure 7c). Indeed, we can observe the halo effects in the former that we do not see in the latter (Koschmieder’s case). This finding was somewhat expected, as it has been identified in the literature that the Koschmieder model is not relevant under night conditions [38]. Contrary to daytime behaviour, the Koschmieder model darkens the rendering at night.

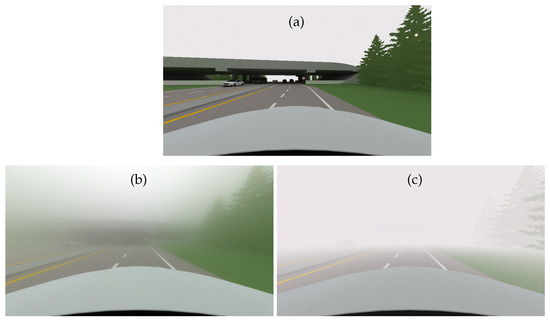

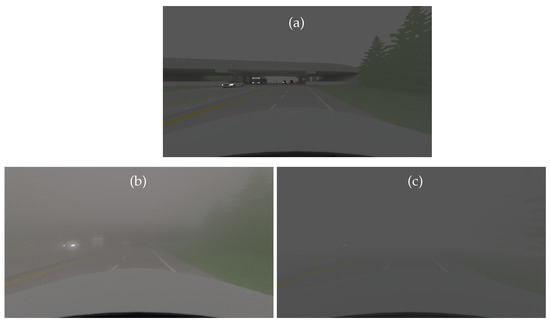

Figure 8 gives the simulated images by SWEET and the Koschmieder model for the extra-urban scene (Figure 4b). We obtain the same observation as for the intra-urban scene. We can notice that the Koschmieder model brightens too much under the viaduct (Figure 8c), while this is not the case for the image simulated by SWEET (Figure 8b). Moreover, the latter allows more objects to be seen (i.e., the car in front, trees, the viaduct) which are totally invisible with the Koschmieder model.

Figure 8.

Simulated images for the extra-urban scene with the SWEET simulator without fog (a) and with fog (MOR = 20 m, (b)) and with the Koschmieder model (c) in day conditions.

Figure 9 gives the simulations of the extra-urban scene in night conditions.

Figure 9.

Simulated images for the extra-urban scene with the SWEET simulator without fog (a) and with fog (MOR = 20 m, (b)) and with the Koschmieder model (c) in night conditions.

The halo effects are visible in the SWEET image (Figure 9b) and not in the Koschmieder one (Figure 9c). In fact, as mentioned above, the Koschmieder model darkens too much at night.

We have visually seen that the images obtained differ markedly between the Koschmieder and SWEET models. From a subjective point of view, we can say that the SWEET model brings a lot of improvement, thanks to its integration of 3D volumetry. We now propose to verify the impact of the blurring on a realistic image. Then, we propose to set up metrics to confirm what we perceive visually thanks to a discussion of quantitative metrics on real and simulated images in the next section.

The images in Figure 10 show a section of the French A75 highway, at the Col de la Fageole point, without fog (a) and with fog (b) (see [42] for a detailed description of the database). The image with fog (Figure 10b) can be compared to the simulated images (Figure 6b and Figure 8b). Although the latter are not made in the same scene, it still allows us to observe a similarity in the visual aspect of the fog in all these images (i.e., blurry effects). This confirms the realism of the rendering performed by SWEET. In perspective, we will then propose simulations on this specific use case by reproducing the same scene as that of the Col de la Fageole. Meanwhile, we propose to compare the general behaviour obtained on real and metrically simulated images in the next section.

Figure 10.

Real camera images taken from a section of the French A75 highway (at the Col de la Fageole point) without fog (a) and with fog (MOR = 156 m, (b)), both images are in grayscale.

5. Quantitative Results with Specific Metrics

In this section, we push the interpretation of the images even further, and we then propose analyses by two metrics:

- intensity analysis on lines of pixels;

- contrast analysis.

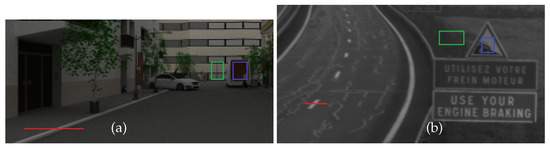

First, we present in the images of Figure 11, the specific regions where we will calculate the metrics for both real and simulated images. The pixel lines in red are used in order to verify the blurring effect produced by the fog on areas of sudden change of intensity (contours, ground markings, edge of building, etc.). The polygons shown in blue/green are used to compute the contrast according to the WMO definition [38]:

where C is the contrast, is the radiance of the object (blue polygons) and the radiance of the surrounding object area (green polygons).

Figure 11.

Pixel lines (in red) and contrast polygons (in blue/green) for the urban simulated scene (a) and the real one from a section of the French A75 highway (at the Col de la Fageole point) (b).

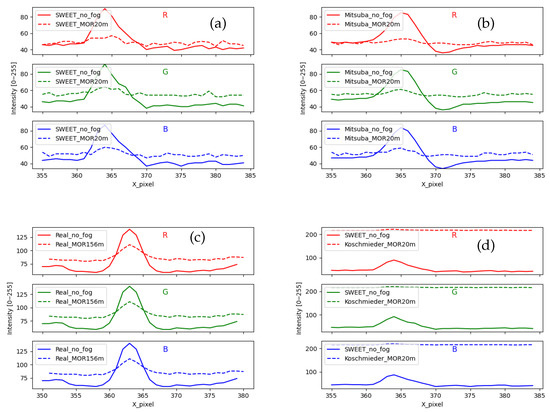

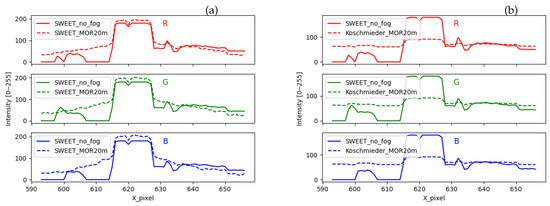

We start by analysing the evolutions of the pixel lines. The curves in Figure 12 are obtained for SWEET images (a), Mitsuba images (b), real images (c) and Koschmieder images (d). The peak in all the curves without fog (solid lines) represents the studied object, i.e., marking on the ground in the case of real camera images (Figure 11b) and the edge of the sidewalk in the case of the intra-urban road simulated scene (Figure 11a).

Figure 12.

Comparing the intensity of a pixel line for images coming from SWEET (a), Mitsuba (b), real camera images (c) and Koschmieder (d).

We can notice the similar behaviour of SWEET and Mitsuba through Figure 12a,b. However, Sweet images contain a little more noise, which can be eliminated in the future using denoising and sampling techniques. Moreover, we can notice through the solid curves in Figure 12b that the peak is smoother than in (a), which looks more like the curves of the real images (c).

The presence of fog in the scene simulated by SWEET, Mitsuba and in the real road scene attenuates the peak representing the object (ground markings, edge of sidewalk) and slightly lightens the scene around as can be seen through the curves in the broken line of Figure 12a–c. However, in the case of the Koschmieder model, the behaviour is completely different, and this again confirms that this model excessively brightens the whole image as can be seen in Figure 12d.

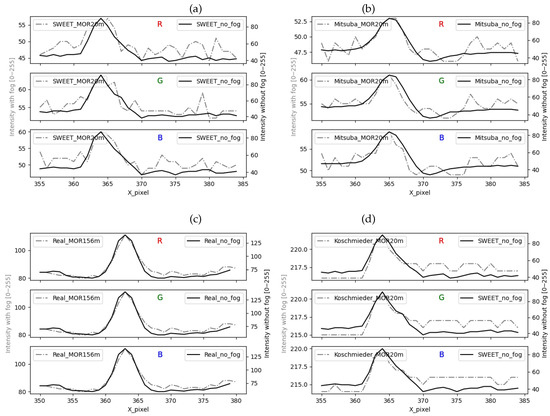

The curves with/without fog in Figure 12 are rescaled (separate y-axis for curves in solid/broken line), giving rise to Figure 13. We can immediately see that the rise towards the peak of the discontinuous curves is not identical. Indeed, the image with fog simulated by SWEET and Mitsuba (discontinuous curve in Figure 13a,b) presents a gradual rise like the real case (discontinuous curve in Figure 13c). On the other hand, a very sharp slope is observed in the case of Koschmieder (discontinuous curve in Figure 13d). This confirms the presence of the blur effect in the images simulated by SWEET, which is a realistic effect, which is not the case for Koschmieder.

Figure 13.

Comparing the intensity of a pixel line for images coming from SWEET (a), Mitsuba (b), real camera images (c) and Koschmieder (d), rescaled figures.

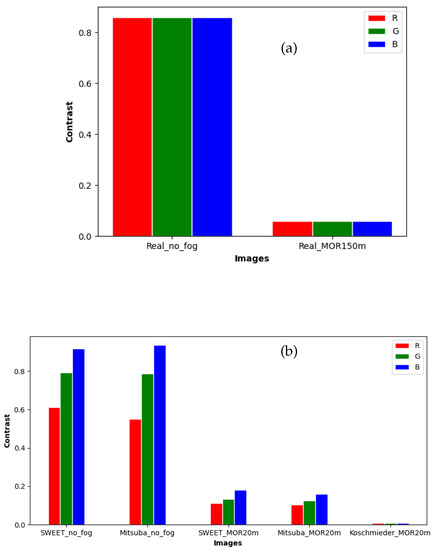

Contrast levels of the image simulated by SWEET and Mitsuba are reduced similarly for the three colour channels (red, green and blue) when the fog is applied, whereas in the case of Koschmieder, the contrast levels are almost zero as shown in Figure 14b. By comparison with the results of the real images (Figure 14a), this shows once again that the SWEET simulation of foggy images is more relevant.

Figure 14.

Comparing the contrast of objects for images from real images (a) to that of simulated images (SWEET, Mitsuba and Koschmieder) (b).

The contrast levels for the real scene (Figure 14a) are identical for the three channels (R, G and B). This is because the images of the real scene (Figure 10) are in grayscale.

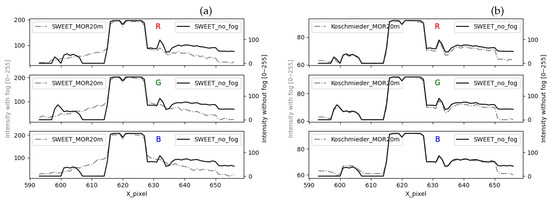

We now propose to verify the halo phenomenon by using the line pixels comparison on the night images (only SWEET is used). Figure 15 shows the simulated urban scene by SWEET. In the following, we propose to analyse the halo effects more closely through the pixel line in red.

Figure 15.

Pixel line (in red) for the urban simulated scene at night.

The peak of the curves in Figure 16 located in the pixel interval [617–628] corresponds to the white light of the lamp. The intensity of that white light is mostly preserved in the foggy image simulated by SWEET (broken line curves in Figure 16a). However, that peak is almost entirely attenuated by the Koschmieder model (broken line curves in Figure 16b). Around the lamp, the curve with fog simulated by SWEET (broken line curves in Figure 16a) has a gradient slope representing the effect of the halo. This is not the case with the Koschmieder model for which the curve gives almost a constant (broken line curves in Figure 16b).

Figure 16.

Comparing the intensity of a pixel line for the urban scene simulated by the SWEET simulator (a) and Koschmieder (b).

The shape of the discontinuous curve in Figure 17b is the same as the one without fog. This confirms that the Koschmieder model darkens the whole image. On the other hand, we can notice the gradient slope given by the SWEET simulator (discontinuous curve in Figure 17a).

Figure 17.

Comparing the intensity of a pixel line for the urban scene simulated by the SWEET simulator (a) and Koschmieder (b), rescaled figures.

6. Conclusions and Perspectives

For the evaluation and design of optical sensors for automated vehicles, digital simulation is a tool that can be used to approach reality in numerous configurations and with different degrees of realism. In this context, the SWEET simulation tool developed by Cerema meets the needs of research into the simulation of road scenes under degraded weather conditions, including fog. The choice of Monte Carlo methods (ray-tracing) in this simulator is justified by the accuracy of the results and the expected realism of the simulated scenes. Other methods based on simplified models of electromagnetic propagation through fog can be used as they can reach real-time execution.

In this paper, SWEET was first compared to the Mitsuba simulator. This comparison with a visual approach, then with some basic metrics, showed that the two simulators gave very close renderings. Improvements can be made to SWEET to reduce noise and smoothen curves as noted through Figure 12a–c. After that, SWEET and Mitsuba were both compared to the simplified Koschmieder model, which is widely used in the simulation of fog effects in images. The visual comparison of the images obtained by the two models (i.e., SWEET vs. Koschmieder) highlighted the realism of SWEET, which is able to reproduce real effects observable in road scene images (i.e., blurring effect, halo effect, etc.) as was seen through Figure 6b,c, Figure 7b,c, Figure 8b,c and Figure 9b,c. These visual observations were then quantified using metrics on pixel lines and comparison of contrast levels of several objects in the scenes. The results obtained in this work show a good match of the metrics between real fog images and images from the SWEET simulator as can be noted in Figure 12a compared to Figure 12c. The Koschmieder model shows deviations from the real images as can be seen in Figure 12d.

Future work will consist of continuing the comparison between SWEET, Mitsuba and the Koschmieder model on a wider variety of images, for other levels of fog and for other metrics of interest that could be built on higher level indicators, such as VDP or obstacle detection algorithms, for example. The Koschmieder model could be extended by adding the consideration of halo effects, blurring, etc. as has been performed in [26] in order to be able to use it in support of other simulators while guaranteeing satisfactory realism. Moreover, it would be interesting to perform more applications using common datasets. It will also be useful to compare the physical radiance outputs of the SWEET simulator with experimental measurements acquired with a spectroradiometer or a hyperspectral camera using the PAVIN platform or real-world sites instrumented by Cerema and its partners. Furthermore, ongoing work would complete future versions of SWEET with textures and BSDFs in order to get as close as possible to physical reality. Another perspective will be to build a database of images generated by SWEET in order to train machine learning algorithms able to deliver harsh weather noise models that can be run in real time. The work may also be extended to other wavelengths for cameras operating in SWIR and LWIR ranges or to active technologies such as LiDAR and RADAR.

Author Contributions

Conceptualization, A.B.-D., P.D. and F.B.; data curation, A.B.-D.; formal analysis, A.B.-D., P.D. and F.B.; funding acquisition, F.B., P.D.; investigation, A.B.-D., P.D. and F.B.; methodology, A.B.-D., F.B. and P.D.; project administration, F.B. and P.D.; software, A.B.-D. and P.D.; supervision, F.B.; validation, F.B. and P.D.; visualization, A.B.-D.; writing—original draft, A.B.-D.; writing—review and editing, A.B.-D., P.D. and F.B. All authors have read and agreed to the published version of the manuscript.

Funding

Co-funded by the European Union (grant no. 101069576) and supported by Innovate UK (contract no. 10045139) and the Swiss State Secretariat for Education, Research and Innovation (contract no. 22.00123). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Climate, Infrastructure and Environment Executive Agency (CINEA). Neither the European Union nor the granting authority can be held responsible for them.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; nor in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| SWEET | Simulating WEather for intElligent Transportation systems |

| PAVIN | Plateforme d’Auvergne Véhicules Intelligents |

| LiDAR | Light Detection And Ranging |

| RADAR | RAdio Detection And Ranging |

| RTE | Radiative Transfer Equation |

| DSD | Droplet Size Distribution |

| MOR | Meteorological Optical Range |

| SWIR | Short-Wave InfraRed |

| LWIR | Long-Wave InfraRed |

References

- United Nations. Economic and Social Council, Economic Commission for Europe, New Assessment/Test Method for Automated Driving (NATM); United Nations: New York, NY, USA, 2021. [Google Scholar]

- European CCAM Partnership. Strategic Research and Innovation Agenda 2021–2027; European CCAM Partnership: Brussels, Belgium, 2022. [Google Scholar]

- Mai, N.A.M.; Duthon, P.; Khoudour, L.; Crouzil, A.; Velastin, S.A. 3D Object Detection with SLS-Fusion Network in Foggy Weather Conditions. Sensors 2021, 21, 6711. [Google Scholar] [CrossRef] [PubMed]

- Bijelic, M.; Mannan, F.; Gruber, T.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing Through Fog Without Seeing Fog: Deep Sensor Fusion in the Absence of Labeled Training Data. arXiv 2019, arXiv:1902.08913. [Google Scholar]

- Pfeuffer, A.; Dietmayer, K. Robust semantic segmentation in adverse weather conditions by means of sensor data fusion. In Proceedings of the 2019 22th International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2–5 July 2019; IEEE: New York, NY, USA, 2019; pp. 1–8. [Google Scholar]

- Li, Y.; Duthon, P.; Colomb, M.; Ibanez-Guzman, J. What happens for a ToF LiDAR in fog? Trans. Intell. Transp. Syst. 2020, 22, 6670–6681. [Google Scholar] [CrossRef]

- Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. Cnn-based lidar point cloud de-noising in adverse weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521. [Google Scholar] [CrossRef]

- Kutila, M.; Pyykönen, P.; Ritter, W.; Sawade, O.; Schäufele, B. Automotive LIDAR sensor development scenarios for harsh weather conditions. In Proceedings of the 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Rio de Janeiro, Brazil, 1–4 November 2016; pp. 265–270. [Google Scholar] [CrossRef]

- Duthon, P.; Colomb, M.; Bernardin, F. Fog classification by their droplet size distributions. Application to the characterization of Cerema’s PAVIN BP plateform. Atmosphere 2020, 11, 596. [Google Scholar] [CrossRef]

- Colomb, M.; Hirech, K.; André, P.; Boreux, J.; Lacôte, P.; Dufour, J. An innovative artificial fog production device improved in the European project “FOG”. Atmos. Res. 2008, 87, 242–251. [Google Scholar] [CrossRef]

- DENSE Project. 2016. Available online: https://www.dense247.eu/ (accessed on 18 February 2023).

- AWARD Project. 2021. Available online: https://award-h2020.eu (accessed on 18 February 2023).

- Duthon, P.; Colomb, M.; Bernardin, F. Light Transmission in Fog: The Influence of Wavelength on the Extinction Coefficient. Appl. Sci. 2019, 9, 2843. [Google Scholar] [CrossRef]

- ANSYS. Ansys Avxcelerate Sensors Datasheet. 2022. Available online: https://www.ansys.com/content/dam/resource-center/datasheet/ansys-avxcelerate-sensors-datasheet.pdf (accessed on 18 February 2023).

- CARLA. 2022. Available online: https://carla.org/ (accessed on 18 February 2023).

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning, Santa Clara, CA, USA, 13–15 November 2017; pp. 1–16. [Google Scholar]

- Gruyer, D.; Pechberti, S.; Glaser, S. Development of Full Speed Range ACC with SiVIC, a virtual platform for ADAS Prototyping, Test and Evaluation. In Proceedings of the IEEE Intelligent Vehicles Symposium, Gold Coast City, Australia, 23–26 June 2013; pp. 100–105. [Google Scholar]

- Hadj-Bachir, M.; de Souza, P. LIDAR Sensor Simulation in Adverse Weather Condition for Driving Assistance Development. Working Paper or Preprint. Available online: https://hal.science/hal-01998668/ (accessed on 18 February 2023).

- ProSivic. 2022. Available online: https://www.esi-group.com/products/virtual-systems-controls (accessed on 18 February 2023).

- Electro-Optics Sensor Simulation, OKTAL. 2022. Available online: https://www.oktal-se.fr/how-to-buy/packaged-solution/ (accessed on 18 February 2023).

- Latger, J.; Cathala, T.; Douchin, N.; Goff, A.L. Simulation of active and passive infrared images using the SE-WORKBENCH. In Proceedings of the Infrared Imaging Systems: Design, Analysis, Modeling, and Testing XVIII; Holst, G.C., Ed.; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2007; Volume 6543, p. 654302. [Google Scholar] [CrossRef]

- AVSimulation. 2022. Available online: https://www.avsimulation.com/ (accessed on 18 February 2023).

- 4DVirtualiz. 2022. Available online: https://www.4d-virtualiz.com/en (accessed on 18 February 2023).

- Jakob, W.; Speierer, S.; Roussel, N.; Nimier-David, M.; Vicini, D.; Zeltner, T.; Nicolet, B.; Crespo, M.; Leroy, V.; Zhang, Z. Mitsuba 3 Renderer. 2022. Available online: https://mitsuba-renderer.org (accessed on 18 February 2023).

- Bélanger, J.; Venne, P.; Paquin, J.N. The what, where, and why of real-time simulation. Planet RT 2010, 1, 37–49. [Google Scholar]

- Dumont, E. Caractérisation, modélisation et simulation des effets visuels du brouillard pour l’usager de la route. Ph.D. Thesis, University of Paris 5 UFR— Mathematics & Computer Science, Paris, France, 2002. [Google Scholar]

- Zdrojewska, D. Real time rendering of heterogenous fog based on the graphics hardware acceleration. In Proceedings of the Central European Seminar on Computer Graphics, Budmerice, Slovakia, 11–14 May 2004. [Google Scholar]

- Zaher, G. Simulating Weather Conditions on Digital Images. Master’s Thesis, DE–Faculty of Informatics, Debrecen, Hungary, 2020. [Google Scholar]

- Hasirlioglu, S. A Novel Method for Simulation-Based Testing and Validation of Automotive Surround Sensors under Adverse Weather Conditions. Ph.D. Thesis, University of Linz, Linz, Austria, 2020. [Google Scholar]

- Pinchon, N.; Cassignol, O.; Bernardin, F.; Nicolas, A.; Leduc, P.; Tarel, J.P.; Bremond, R.; Bercier, E.; Brunet, J. All-weather vision for automotive safety: Which spectral band? In Proceedings of the AMAA 2018, Advanced Microsystems for Automotive Applications, Berlin, Germany, 11–12 September 2018; pp. 3–15. [Google Scholar] [CrossRef]

- Modest, M. Radiative Heat Transfer; Elsevier Science: Amsterdam, The Netherlands, 2003. [Google Scholar]

- Van de Hulst, H. Light Scattering by Small Particles; Courier Corporation: Chelmsford, MA, USA, 1957. [Google Scholar]

- Wald, I.; Havran, V. On building fast kd-Trees for Ray Tracing, and on doing that in O(N log N). In Proceedings of the 2006 IEEE Symposium on Interactive Ray Tracing, Salt Lake City, UT, USA, 18–20 September 2006; IEEE: New York, NY, USA, 2006. [Google Scholar] [CrossRef]

- Afra, A.T.; Wald, I.; Benthin, C.; Woop, S. Embree Ray Tracing Kernels: Overview and New Features. In Proceedings of the ACM SIGGRAPH 2016 Talks No. 52, p. 2; Association for Computing Machinery: New York, NY, USA, 2016. [Google Scholar] [CrossRef]

- Lapeyre, B.; Pardoux, É.; Pardoux, E.; Sentis, R.; Craig, A.; Craig, F. Introduction to Monte Carlo Methods for Transport and Diffusion Equations; Oxford University Press: Oxford, UK, 2003. [Google Scholar]

- Koschmieder, H. Theorie der horizontalen Sichtweite. Beiträge Phys. Freien Atmosphäre 1924, 12, 33–55. [Google Scholar]

- Lee, Z.; Shang, S. Visibility: How Applicable is the Century-Old Koschmieder Model? J. Atmos. Sci. 2016, 73, 4573–4581. [Google Scholar] [CrossRef]

- World Meteorological Organization. Guide to Meteorological Instruments and Methods of Observation, 2014 ed; Updated in 2017—WMO-No. 8; World Meteorological Organization: Geneva, Switzerland, 2014; p. 1163. [Google Scholar]

- Gordon, J.I. Daytime Visibility, a Conceptual Review; SIO Ref. 80-1; Defense Technical Information Center: Fort Belvoir, VA, USA, 1979; p. 22. [Google Scholar]

- Arnulf, A.; Bricard, J.; Curé, E.; Véret, C. Transmission by Haze and Fog in the Spectral Region 035 to 10 Microns. J. Opt. Soc. Am. 1957, 47, 491. [Google Scholar] [CrossRef]

- Daly, S.J. Visible differences predictor: An algorithm for the assessment of image fidelity. In Human Vision, Visual Processing, and Digital Display III, Proceedings of the SPIE/IS&T 1992 Symposium on Electronic Imaging: Science and Technology, San Jose, CA, USA, 9–14 February 1992; Rogowitz, B.E., Ed.; SPIE: Bellingham, WA, USA, 1992. [Google Scholar] [CrossRef]

- Dahmane, K.; Duthon, P.; Bernardin, F.; Colomb, M.; Chausse, F.; Blanc, C. WeatherEye-Proposal of an Algorithm Able to Classify Weather Conditions from Traffic Camera Images. Atmosphere 2021, 12, 717. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).