Abstract

Early and accurate tomato disease detection using easily available leaf photos is essential for farmers and stakeholders, as it helps reduce yield loss due to possible disease epidemics. This paper aims to visually identify nine different infectious diseases (bacterial spot, early blight, Septoria leaf spot, late blight, leaf mold, two-spotted spider mite, mosaic virus, target spot, and yellow leaf curl virus) in tomato leaves in addition to healthy leaves. We implemented EfficientNetB5 with a tomato leaf disease (TLD) dataset without any segmentation, and the model achieved an average training accuracy of 99.84% ± 0.10%, average validation accuracy of 98.28% ± 0.20%, and average test accuracy of 99.07% ± 0.38% over 10 cross-validation folds. Gradient-weighted class activation mapping (GradCAM) and local interpretable model-agnostic explanations (LIME) are proposed to provide model interpretability, which is essential to predictive performance, helpful in building trust, and required for integration into agricultural practice.

1. Introduction

Early detection and control of crop disease is essential for farmers, stakeholders, and precision agriculture researchers to reduce the production losses. Current farm practices rely on visual identification of plant diseases by farm staff with the backup of specialists using additional resources and tools, such as microscopes [1]. However, agricultural professionals cannot constantly be present in the field to perform thorough monitoring, and farmers lack the expertise required to conduct the detection procedure [2].

Multispectral, RGB, and hyperspectral sensors have been used for crop-disease detection [3]. Recently, crop-disease detection utilizing a variety of image sensors has shown encouraging results when combining data-driven approaches, such as machine learning (ML) and deep learning (DL) [4]. Tomatoes are a commercially significant vegetable crop on a global scale, and various pathogens (viral, bacterial, and fungal illnesses [5,6,7,8]) that affect tomatoes have been identified [9].

A number of researchers have focused on the use of classification models in disease diagnosis. The majority of the suggested classifiers are developed and validated with an emphasis on extracting deep features from images in order to categorize the foliage disorders [10,11]. For instance, Trivedi et al. [12] classified nine different kinds of tomato leaf diseases using a convolutional neural network and a dataset of 3000 tomato leaves. They attained an accuracy of 98.49% using pre-processed and segmented tomato leaf images.

Although the existing deep-learning models for tomato-leaf-disease recognition achieved high accuracy on selected leaf image datasets, their interpretability and explainability are not sufficiently investigated to engender trust in using such models in practice. The eXplainable Artificial Intelligence (XAI) and DL algorithms that produce human-readable explanations for AI judgments lay the groundwork for imaging-based artificial-intelligence applications [13] in various domains, such as health informatics [14], computer vision [15], and many more.

Given that DL models extract features from an image autonomously, without the need for human feature engineering, it is vital to explain the model’s output, and XAI can provide such explanations. Only a few studies have combined XAI with DL models to include explanatory results when predicting different types of tomato leaf diseases (TLD) [16]. Considering the high accuracy of DL models on TLD detection and the lack of explainability of the model’s output in existing works, this study aims to build a DL-XAI framework for TLD detection.

In this work, a TLD detection approach using a pre-trained DL model and an explainable solution highlighting the most significant portions of the foliage that contribute to disease categorization is proposed. The goal of the DL-XAI guided solution is to aid agricultural-based decision making. Additionally, the major contributions in this study are summarized as follows:

1. An explanation-driven deep-learning framework for TLD recognition is developed using transfer learning with EfficientNetB5. The framework is named “BotanicX-AI”.

2. GradCAM and LIME are utilized to explain the outcomes provided by the BotanicX-AI framework.

3. The proposed study compares current pre-trained DL models [17,18,19,20,21] with a common fine-tuned architecture for TLD detection and conducts ablative research to determine which DL model performs the best.

A brief summary of earlier work addressing the issue of disease detection in tomatoes is given in Section 2. The EfficientNetB5 architecture used for TLD classification and the XAI approach are described in depth in Section 3. Section 4 provides the evaluation metrics, and Section 5 describes the experimental findings. Independent validation on images from an external source, which tests the model’s confidence, is covered in Section 6, and Section 7 concludes by offering recommendations for further investigation.

2. Related Works

DL models have made significant advances in a variety of fields including, but not limited to, deep fakes [22,23], satellite image analysis [24], image classification [25,26], the optimization of artificial neural networks [27,28], the processing of natural language [29,30], fin-tech [31], intrusion detection [32], steganography [33], and biomedical image analysis [14,34]. CNNs have recently surfaced as one of the most commonly used techniques for plant disease identification [35,36].

By removing the constraints brought on by poor illumination and homogeneity in complicated environment scenarios, several works have concentrated their efforts on recognizing characteristics, while some authors have introduced real-time prediction [37,38]. For instance, research has been performed using DL models with the advancement of XAI techniques to develop a disease detection system with the major objective of pinpointing the disease and identifying the major areas of the plant and their parts that contribute to the classification [16,39].

The PlantVillage (PV) [40] dataset is a publicly available resource that contains images of various plant leaves with a range of disorders, including a tomato leaf disorder (TLD) [38]. This dataset has been used in multiple research works, including the following: Zhao et al. [41] achieved classification of TLDs using a multi-class feature-extraction approach. The residual block and the attention strategies were both integrated into the model, which was built on a deep CNN model. The model outperformed various deep-learning models with an overall accuracy of 99.24%.

Using the same image set, Bhujel et al. [42] examined the effectiveness of identifying various tomato diseases using a lightweight DL model. To enhance the performance of the model, a lightweight CNN method was combined with a number of attention strategies. The study explored the network architecture, performance, and computational complexity for the TLD dataset. The results showed improving classification accuracy upon building the compact and computationally efficient model with an accuracy of 99.69%.

TLD categorization was suggested by Ozbılge et al. [43] as an alternative to the well-known pre-trained knowledge-transferred ImageNet deep-network model and the compact deep-neural-network design with only six layers. The model’s performance on the PV dataset was tested using a number of statistical methods, and an accuracy of 99.70% was achieved. Antonio et al. [44] suggested the use of a custom CNN-based architecture, which achieved a training accuracy of 99.99%, validation accuracy of 99.64%, precision of 99.00%, and an F1-score of 99.00% with the PV tomato dataset. With regard to the classification of the nine tomato illnesses, the recall metric had a value of 0.99.

The PlantVillage dataset was also utilized by Suryawati et al. [45] to train a model using AlexNet, GoogleNet, and VGGNet, which achieved test accuracies of 91.52%, 89.68%, and 95.25%, respectively. Transfer learning was used by Hong et al. [46] to reduce the quantity of training data needed, the amount of time typically required, and the cost of computation. Five deep-network topologies (Xception, ResNet50, MobileNet, ShuffleNet, and DenseNet121) were employed to extract features from the 10 tomato leaf disorders in the PV dataset. During the experiment, network architectures with various learning rates were contrasted. ShuffleNet had a recognition accuracy of 83.68%, whereas DenseNet and Xception had accuracies of 97.10% when the parameters were at their highest.

Vijay et al. [47] used CNN and K-nearest neighbor (KNN) models in their classification of tomato leaf disorders using the PlantVillage dataset, while LIME was used to provide explainability for the predictions made by each model. The CNN model performed better than the KNN model when used to detect leaf disease. The accuracy, precision, recall, and F1-score of the CNN model were 98.5%, 93%, 93%, and 93%, respectively, all greater than those of the KNN model, which only reached 83.6%, 90%, 84%, and 86%. Noyan et al. [48] claimed that the PlantVillage dataset is biased through the association of the background color with specific TLDs, reporting an accuracy of around 40% for classification based on background pixels only. Likewise, Mzoughi et al. [49] demonstrated bias in the PV dataset, with the image background colour associated with the disease class. They also showed improved identification outcomes, particularly in the setting of pictures with complicated backgrounds.

Kaur et al. [50] used an EfficientNetB7 model to examine leaf diseases of grape plants from the PlantVillage dataset. For the purpose of extracting the most important characteristics, the fully connected layer was created. The variance approach was then used to exclude extraneous features from the feature extractor vector. The logistic regression approach was then used to minimize the characteristics that had achieved a classification precision of 98.7%.

3. Materials and Methods

3.1. Dataset and Pre-Processing

TLD images of 10 distinct categories (healthy, bacterial spot, early blight, Septoria leaf spot, late blight, leaf mold, two-spotted spider mite, mosaic virus, target spot, and yellow leaf curl virus) were collected from the publicly available Kaggle dataset [51]—a total of 11,000 images distributed evenly among 10 classes in groups of 1100 each. The dataset was split into the ratio of 90:10 for the training and test sets. Furthermore, 10% of the training set was used as a validation set.
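The split sizes implied by these ratios can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `split_counts` is hypothetical, and it assumes the 10% validation share is taken from the 90% training portion, as the text states.

```python
def split_counts(total, test_frac=0.10, val_frac=0.10):
    """Return (train, validation, test) image counts for a nested hold-out split."""
    test = round(total * test_frac)      # 10% of all images held out for testing
    train_val = total - test             # remaining 90% of the images
    val = round(train_val * val_frac)    # 10% of the training portion
    train = train_val - val
    return train, val, test


train, val, test = split_counts(11_000)
print(train, val, test)  # 8910 990 1100
```

Under these assumptions, 8910 images remain for training, 990 for validation, and 1100 for testing.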

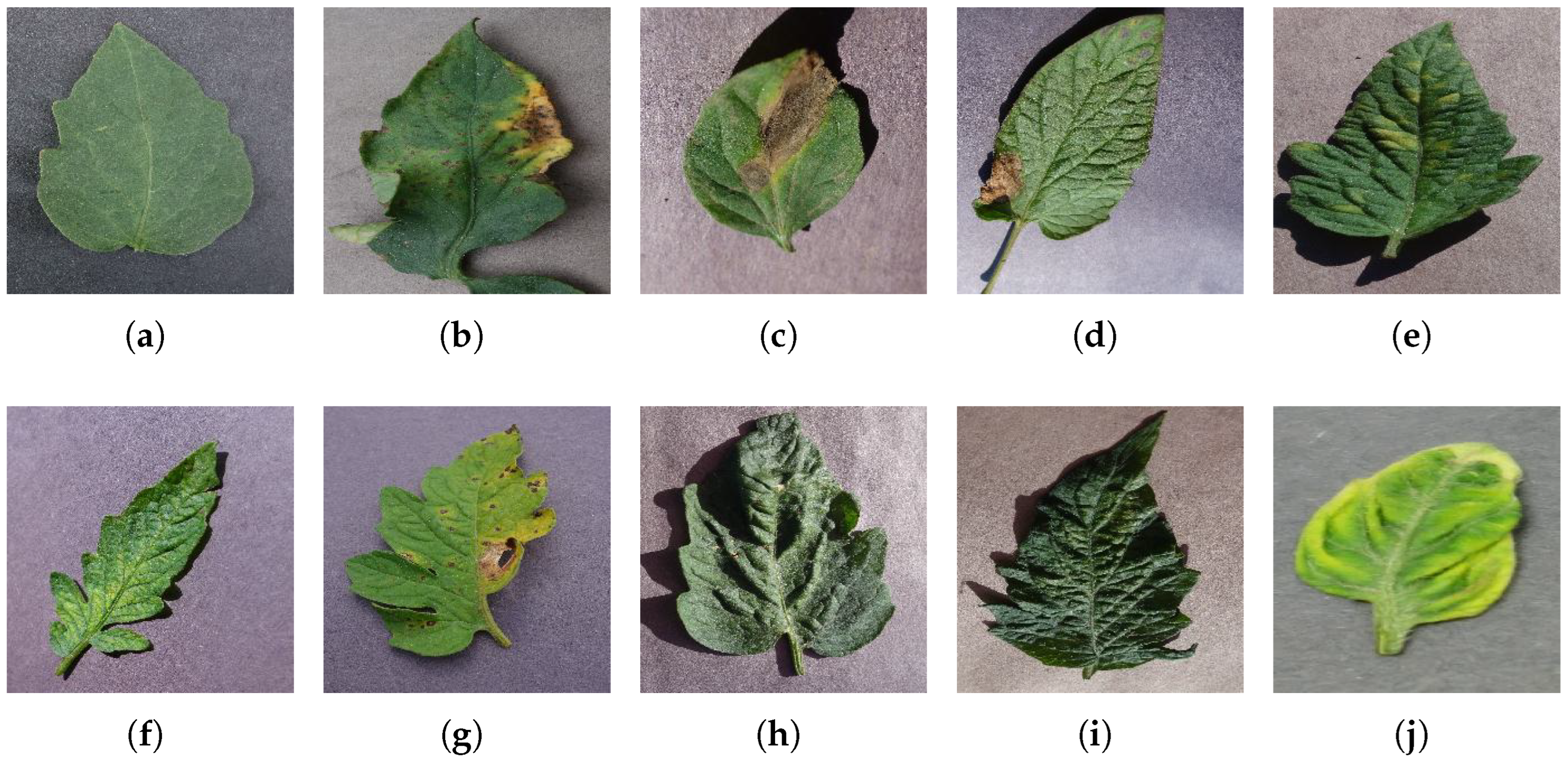

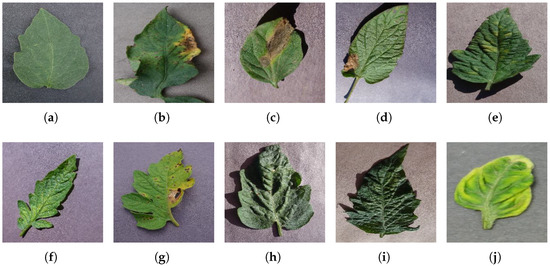

For better computational efficiency, each image was resized to 200 × 250. For faster convergence and to prevent the model from over- and under-fitting, the order of the images was shuffled. The training samples were augmented by horizontal flipping, rotation (within a range of 20 degrees), zooming (within a 0.2 range), and shifting (of the width and height by 0.2). Figure 1 displays sample TLD images from the dataset for individual categories.

Figure 1.

Distinct sample images from the dataset for individual diseases. (a–j) denote “healthy”, “bacterial spot”, “early blight”, “late blight”, “leaf mold”, “mosaic virus”, “Septoria leaf spot”, “target spot”, “two-spotted spider mite”, and “yellow leaf curl virus” classes, respectively.
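The augmentation settings listed in Section 3.1 map naturally onto the arguments of Keras' `ImageDataGenerator`. The following is a minimal sketch rather than the authors' code: the names `AUGMENTATION`, `TARGET_SIZE`, and `build_train_generator` are hypothetical, and reading "200 × 250" as height × width is an assumption.

```python
# Augmentation settings stated in the text; the key names follow the
# arguments of Keras' ImageDataGenerator.
AUGMENTATION = {
    "horizontal_flip": True,
    "rotation_range": 20,       # degrees
    "zoom_range": 0.2,
    "width_shift_range": 0.2,   # fraction of the image width
    "height_shift_range": 0.2,  # fraction of the image height
}

# Assumption: 200 is the height and 250 the width; the text only says "200 x 250".
TARGET_SIZE = (200, 250)


def build_train_generator():
    """Create the augmenting generator (TensorFlow is imported only when called)."""
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    return ImageDataGenerator(**AUGMENTATION)
```

Augmentation of this kind would be applied to the training samples only, so a separate generator without these arguments would be needed for validation and test images.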

3.2. Proposed Method

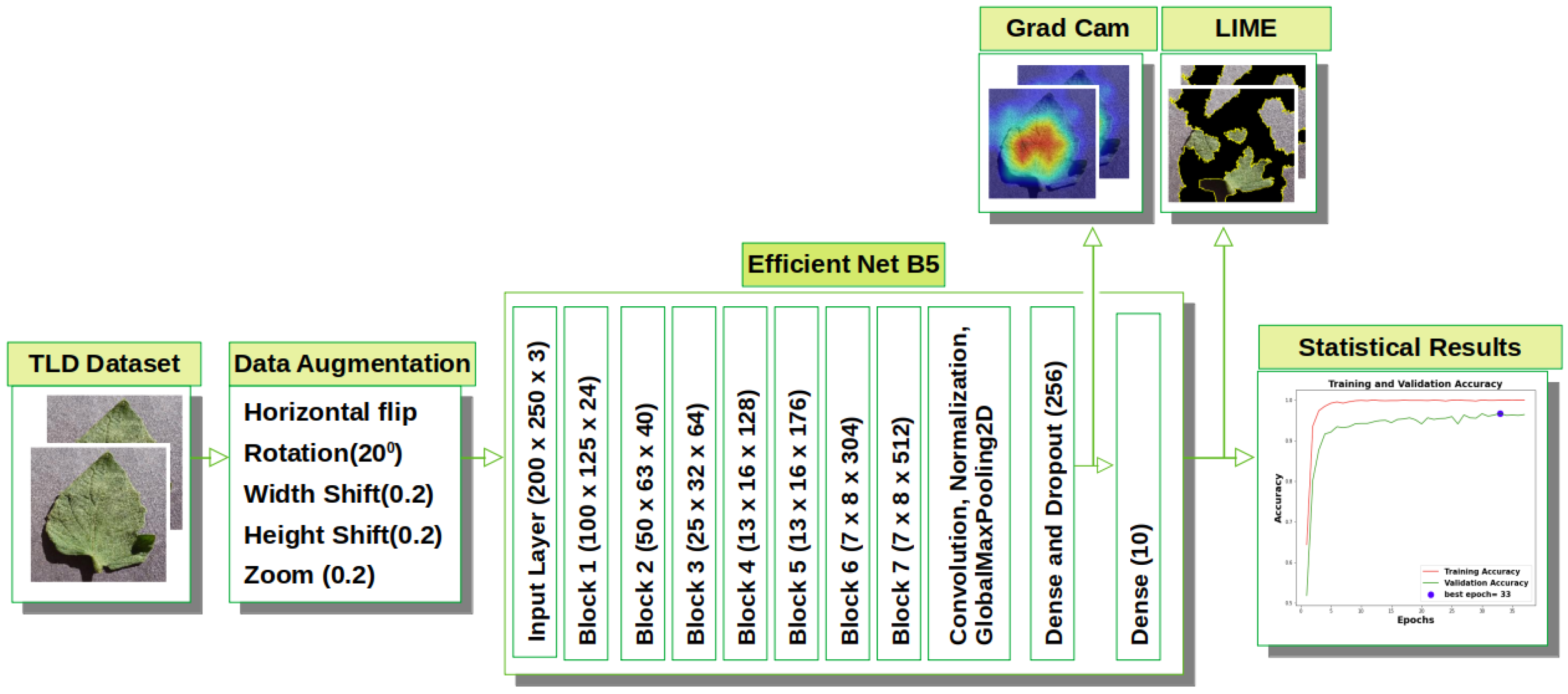

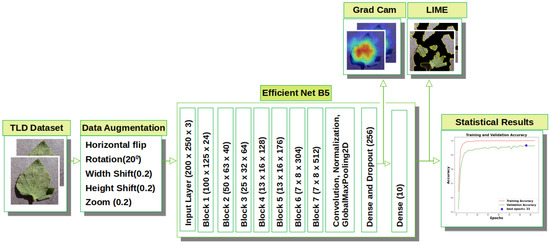

The two main components of the proposed DL-XAI framework for TLD identification are TLD classification and explanation based on XAI algorithms. The conceptual structure of the proposed model is shown in Figure 2.

Figure 2.

Proposed high-level conceptual architecture of the explanation-driven DL model (BotanicX-AI) for TLD detection.

3.2.1. EfficientNetB5

In order to increase the efficiency and accuracy of the model, the authors in [52] introduced EfficientNet with a novel scaling strategy that uniformly scales the network’s depth, width, and resolution. The basic EfficientNet consists of three types of blocks: stem, body, and final blocks. Based on the composition of the body (keeping the stem and final blocks the same in each variant), EfficientNet has different variants, such as EfficientNetB0 and EfficientNetB1. The body of each version of EfficientNet consists of five modules, where each module has depth-wise convolution, batch normalization, and activation layers [53].

Considering the comparative analysis from Keras [54] on the basis of size, top-one and top-five accuracy, and parameters and depth, the EfficientNetB5 model was selected as the base model in this study. To augment the base model [52], batch normalization, dense, and dropout layers were added on top. Finally, a softmax layer with 10 units was added for TLD classification. Regularization penalties, such as a kernel regularizer (L2 regularization = 0.016), activity regularizer (L1 regularization = 0.06), and bias regularizer (L1 regularization = 0.06), were applied on a per-layer basis. A dropout rate of 0.4 was used, and softmax activation was applied on the output layer. A summary of the proposed model is shown in Table 1.

Table 1.

Summary for the proposed model. Note that “Param. #” denotes the number of parameters in the respective layer.
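A classification head of the kind described in Section 3.2.1 could be assembled in Keras roughly as follows. This is a sketch under stated assumptions, not the published implementation: the width of the dense layer (256), the global max pooling, the ReLU activation, the input orientation (height 200, width 250), and the choice to attach all three regularizers to the hidden dense layer are assumptions, and `HEAD` and `build_model` are hypothetical names.

```python
HEAD = {
    "num_classes": 10,
    "dropout_rate": 0.4,
    "kernel_l2": 0.016,
    "activity_l1": 0.06,
    "bias_l1": 0.06,
    "dense_units": 256,            # assumption: not stated in the text
    "input_shape": (200, 250, 3),  # assumption: height 200, width 250, RGB
}


def build_model(cfg=HEAD, weights="imagenet"):
    """Assemble EfficientNetB5 plus a small classification head."""
    import tensorflow as tf
    from tensorflow.keras import layers, regularizers

    base = tf.keras.applications.EfficientNetB5(
        include_top=False,
        weights=weights,
        input_shape=cfg["input_shape"],
        pooling="max",  # assumption: pooling type is not given in the text
    )
    x = layers.BatchNormalization()(base.output)
    x = layers.Dense(
        cfg["dense_units"],
        activation="relu",  # assumption
        kernel_regularizer=regularizers.l2(cfg["kernel_l2"]),
        activity_regularizer=regularizers.l1(cfg["activity_l1"]),
        bias_regularizer=regularizers.l1(cfg["bias_l1"]),
    )(x)
    x = layers.Dropout(cfg["dropout_rate"])(x)
    outputs = layers.Dense(cfg["num_classes"], activation="softmax")(x)
    return tf.keras.Model(inputs=base.input, outputs=outputs)
```

Calling `build_model()` downloads the ImageNet weights on first use; passing `weights=None` builds the same graph with random initialization.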

3.2.2. Explainable AI

Traditional evaluation metrics do not adequately reflect the steps taken by the AI system to arrive at an output and do not allow for interpretation of the outcome. Therefore, an AI algorithm must properly describe how it arrived at its result. Particularly in domains involving high-stake decision-making, there has been an upsurge in demand for explainability of deep-learning-based systems [55]. With such explanations, professionals may more easily understand the output of the DL model and use this understanding to make a quick and accurate diagnosis of a specific type of TLD. For this, LIME and GradCAM, two commonly used XAI algorithms, are used in this study.

LIME explains an instance $x \in \mathbb{R}^{d}$ through an interpretable representation: a binary vector $x' \in \{0, 1\}^{d'}$ denoting the “presence” or “absence” of a contiguous patch of super-pixels. For our model, an explanation $g \in G$ with domain $\{0, 1\}^{d'}$ was used to present the information visually; $g$ acts on the absence/presence of the interpretable components. Because not every $g \in G$ is simple enough to be interpretable, $\Omega(g)$ was used to measure the complexity of the explanation.

For the model $f: \mathbb{R}^{d} \rightarrow \mathbb{R}$, $f(x)$ was the probability that $x$ belongs to a given one of the 10 classes, and $\pi_x(z)$ was used as a proximity measure between an instance $z$ and $x$ to define the locality around $x$. The fidelity function, $\Im(f, g, \pi_x)$, was used as a measure of how unfaithful $g$ was in approximating $f$ in the locality defined by $\pi_x$. To obtain an explanation that is both interpretable and locally faithful, the fidelity function was minimized while $\Omega(g)$ was kept as low as possible. The explanation generated by LIME can be summarized as Equation (1).

$$\xi(x) = \underset{g \in G}{\operatorname{argmin}} \; \Im(f, g, \pi_x) + \Omega(g) \qquad (1)$$
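The trade-off between local fidelity and complexity described above can be illustrated numerically. The sketch below is a toy example, not the paper's implementation: the squared loss, the exponential kernel, the linear surrogate, and the complexity penalty (number of non-zero weights) are assumptions borrowed from the usual LIME formulation, and all function names and sample values are hypothetical.

```python
import math


def proximity(z_mask, width=0.25):
    """pi_x(z): exponential kernel on the fraction of superpixels switched off."""
    distance = 1.0 - sum(z_mask) / len(z_mask)
    return math.exp(-(distance ** 2) / width ** 2)


def surrogate(weights, bias, z_mask):
    """g(z'): a linear model over the binary superpixel mask."""
    return bias + sum(w * m for w, m in zip(weights, z_mask))


def lime_objective(samples, weights, bias, penalty=0.01):
    """Locality-weighted squared loss plus Omega(g) = penalty * (# non-zero weights)."""
    fidelity = sum(
        proximity(mask) * (f_z - surrogate(weights, bias, mask)) ** 2
        for mask, f_z in samples
    )
    complexity = penalty * sum(1 for w in weights if w != 0)
    return fidelity + complexity


# Hypothetical perturbed samples: (superpixel mask, class probability from f).
samples = [([1, 1, 1], 0.9), ([1, 0, 1], 0.3), ([0, 1, 1], 0.8), ([1, 1, 0], 0.9)]
good = lime_objective(samples, weights=[0.1, 0.6, 0.0], bias=0.2)
bad = lime_objective(samples, weights=[0.0, 0.0, 0.0], bias=0.5)
print(good < bad)  # True
```

The surrogate that tracks the classifier's outputs pays only the small complexity penalty, whereas the constant surrogate is simpler but unfaithful near the instance and therefore scores worse.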

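GradCAM is a technique that calculates the gradient of a differentiable output, such as a class score, with respect to the convolutional features of a chosen layer. It is mostly used for image classification tasks but can also be applied to semantic segmentation. In the proposed model, the softmax layer generates a score for each class, and the gradient of the score for the class of interest is used to build the map. Equation (2) gives the GradCAM map for a specific class $C$, with $N$ pixels and $A^{K}$ as a feature map.

$$M_{C} = \mathrm{ReLU}\left(\sum_{K} \alpha_{K}^{C} A^{K}\right) \qquad (2)$$

where,

$$\alpha_{K}^{C} = \frac{1}{N} \sum_{i} \sum_{j} \frac{\partial y^{C}}{\partial A_{i,j}^{K}} \qquad (3)$$

Here, $y^{C}$ is the score for class $C$, and $(i, j)$ index the $N$ spatial positions of the feature map.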
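The GradCAM computation described in this subsection can be sketched in plain Python: each channel weight is the gradient of the class score averaged over the spatial positions of a feature map, and the map is the ReLU of the weighted sum of feature maps. The function name `grad_cam` and the toy feature maps and gradients are hypothetical; in practice both would come from a chosen convolutional layer of the trained network.

```python
def grad_cam(feature_maps, gradients):
    """Compute a Grad-CAM map from K feature maps and their matching gradients.

    feature_maps[k][i][j] is the activation of channel k at position (i, j);
    gradients[k][i][j] is the derivative of the class score w.r.t. that activation.
    """
    rows, cols = len(feature_maps[0]), len(feature_maps[0][0])
    n_pixels = rows * cols
    # Channel weights: gradients averaged over all N spatial positions.
    alphas = [sum(sum(row) for row in grad) / n_pixels for grad in gradients]
    # Weighted sum of the feature maps, followed by ReLU.
    return [
        [
            max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, feature_maps)))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


A = [[[1.0, 0.0], [0.0, 2.0]],
     [[0.0, 3.0], [1.0, 0.0]]]
dY = [[[0.5, 0.5], [0.5, 0.5]],      # channel weight 0.5
      [[-1.0, -1.0], [-1.0, -1.0]]]  # channel weight -1.0
heatmap = grad_cam(A, dY)
print(heatmap)  # [[0.5, 0.0], [0.0, 1.0]]
```

Positions dominated by the negatively weighted channel are clipped to zero by the ReLU, which is why only regions that support the class appear in the heatmap.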

3.3. Implementation Details

Using Keras [54] in Python [56], the suggested DL model and XAI algorithms were implemented in Google Colab [57] with an NVIDIA K80 graphics processing unit and 12 GB of RAM. Python (version 3.7) and Keras (version 2.5.0), which works with the TensorFlow (version 2.5.0) framework, were provided by Google Colab as the runtime platform. During the training and validation of the proposed model, two callbacks were implemented. The first callback monitored the validation loss and reduced the learning rate by a factor of 0.5. The second callback performed early stopping with a patience of four epochs, recovering the best point reached. Furthermore, to prevent the model from over-fitting, both callbacks were applied within a limit of 50 epochs.
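The two callbacks described above correspond to Keras' `ReduceLROnPlateau` and `EarlyStopping`. The following sketch shows one plausible configuration; it is not taken from the authors' code. Monitoring the validation loss for early stopping and restoring the best weights are assumptions, the patience of the learning-rate callback is left at the Keras default because the text does not give it, and the names `REDUCE_LR`, `EARLY_STOP`, `MAX_EPOCHS`, and `build_callbacks` are hypothetical.

```python
REDUCE_LR = {"monitor": "val_loss", "factor": 0.5}
EARLY_STOP = {
    "monitor": "val_loss",         # assumption: the monitored quantity is not stated
    "patience": 4,
    "restore_best_weights": True,  # assumption: one reading of "recovering the best points"
}
MAX_EPOCHS = 50


def build_callbacks():
    """Create both callbacks (TensorFlow is imported only when called)."""
    from tensorflow.keras.callbacks import EarlyStopping, ReduceLROnPlateau

    return [ReduceLROnPlateau(**REDUCE_LR), EarlyStopping(**EARLY_STOP)]
```

The returned list would be passed as `callbacks=build_callbacks()` to `model.fit(..., epochs=MAX_EPOCHS)`.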

4. Evaluation Metrics

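To evaluate the model’s ability to recognize TLD images, standard performance metrics, such as the precision (4), recall (5), F-score (6), and accuracy (7), were applied [58].

$$\mathrm{Precision} = \frac{TP}{TP + FP} \qquad (4)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \qquad (5)$$

$$\mathrm{F\text{-}score} = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (6)$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (7)$$

where, respectively, “TP”, “TN”, “FP”, and “FN” stand for “true positive”, “true negative”, “false positive”, and “false negative”. Along with measuring the model’s performance, assessments for individual classes were carried out using the confusion matrix.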
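These metrics can be computed directly from a confusion matrix. The sketch below is illustrative: the function names and the 3 × 3 toy matrix are hypothetical, rows are taken to be true labels and columns predicted labels, and accuracy is computed as the overall share of correct predictions, which is the usual multi-class reading.

```python
def per_class_metrics(confusion, k):
    """Precision, recall, and F-score for class k (rows: true, columns: predicted)."""
    tp = confusion[k][k]
    fp = sum(confusion[r][k] for r in range(len(confusion))) - tp
    fn = sum(confusion[k]) - tp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = (
        2 * precision * recall / (precision + recall) if precision + recall else 0.0
    )
    return precision, recall, f_score


def accuracy(confusion):
    """Share of all samples that lie on the diagonal of the confusion matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Hypothetical three-class confusion matrix with 10 samples per class.
toy = [[9, 1, 0],
       [0, 10, 0],
       [1, 0, 9]]
precision, recall, f_score = per_class_metrics(toy, 0)
overall = accuracy(toy)
```

For class 0 in this toy matrix there is one false positive and one false negative, so precision, recall, and F-score all equal 0.9, while the overall accuracy is 28/30.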

5. Results and Discussion

5.1. Comparison with Existing Pre-Trained DL Models

In order to compare the performance of the fine-tuned EfficientNetB5 model with other existing transfer-learning approaches using pre-trained DL models, we chose MobileNet [17], Xception [19], VGG16 [18], ResNet50 [20], and DenseNet121 [21] and implemented transfer learning on the TLD dataset under the same implementation details as described in Section 3.3. Since the existing DL models range from heavy-weight (high numbers of trainable parameters) to light-weight (lower numbers of trainable parameters), we opted to cover both kinds of models: VGG16, ResNet50, etc. represent the heavy-weight models, while MobileNet represents the light-weight models. The proposed DL model with EfficientNetB5 outperformed the other models in terms of accuracy and loss (Table 2).

Table 2.

Analysis of the suggested model in comparison to the MobileNet, Xception, VGG16, ResNet50, and DenseNet121 models. Training accuracy, training loss, validation accuracy, validation loss, test accuracy, and test loss are abbreviated as ‘TA’, ‘TL’, ‘VA’, ‘VL’, ‘TsA’, and ‘TsL’, respectively.

More specifically, the proposed DL model with EfficientNetB5 achieved the highest test accuracy of 99.07% in comparison to the test accuracy of the other models, such as MobileNet (94.00%), Xception (95.32%), VGG16 (93.35%), ResNet50 (96.03%), and DenseNet121 (96.30%). EfficientNetB5 thus outperformed the second-best model (DenseNet121) by 2.77 percentage points. Similarly, among the comparison cohort, VGG16 was the least performing model (with a test accuracy of 93.35%), which is considerably lower (by 5.72 percentage points) than the performance of the proposed DL model with EfficientNetB5.

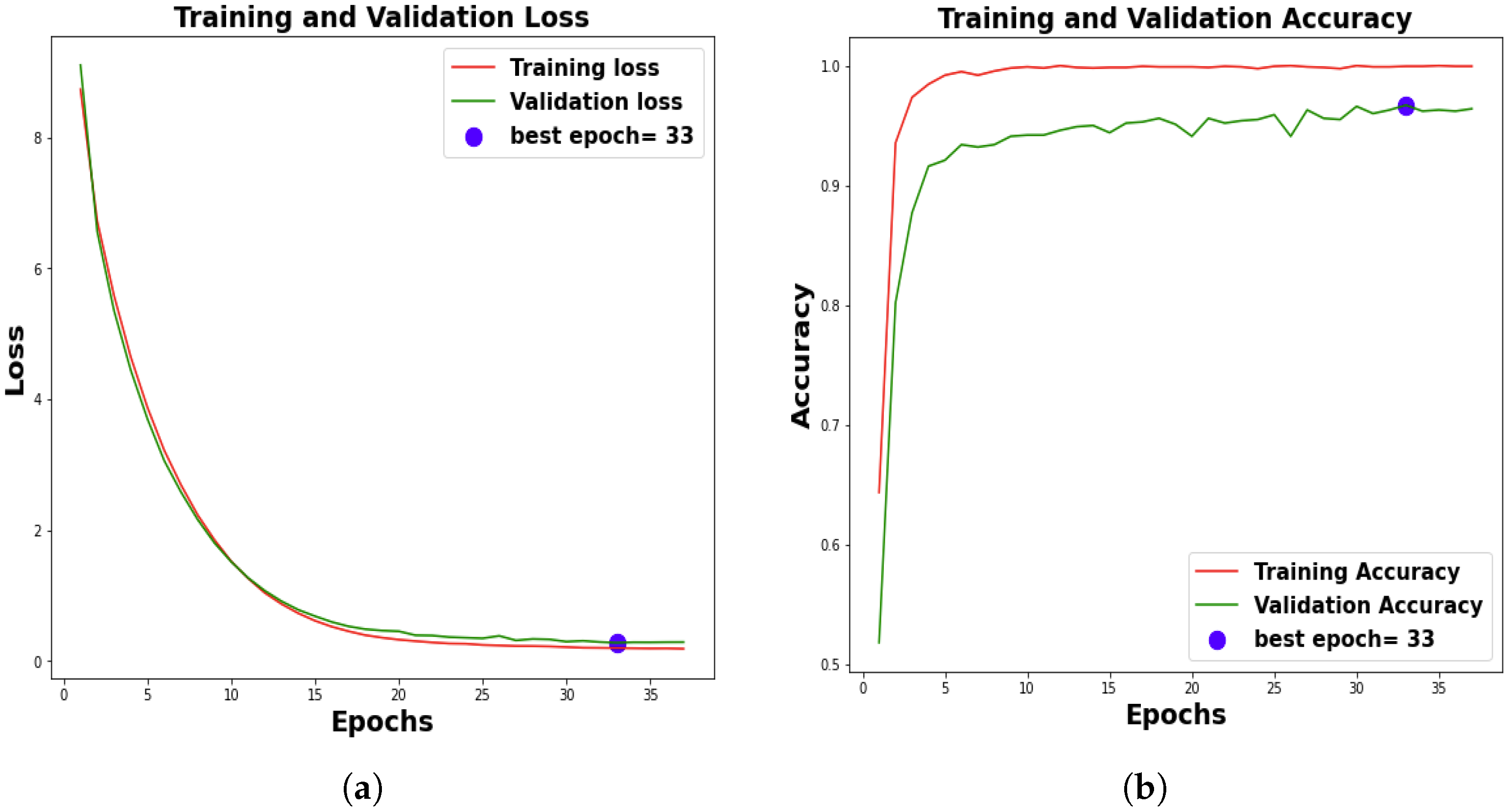

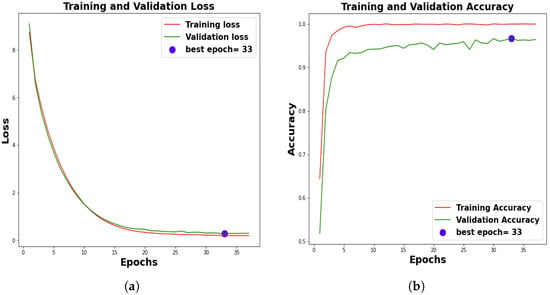

5.2. Model Explanation with EfficientNetB5

Conventional statistical validation protocols were considered, encompassing the metrics of model loss and accuracy across the training, validation, and test sets as well as the precision, F1-score, and recall. In terms of model training, a predetermined termination criterion of 50 epochs was imposed. Figure 3 shows the 10th-fold results, and the model achieved an average training accuracy of 99.84% ± 0.10% and an average validation accuracy of 98.28% ± 0.20% (refer to Table 3).

Figure 3.

Training and validation results. (a) 99.84% ± 0.10% average training accuracy and 98.28% ± 0.20% average validation accuracy over 10 folds. (b) 0.18 ± 0.01 training loss and 0.24 ± 0.02 validation loss.

Table 3.

Ten-fold training, testing, and validation performance in percentages; training accuracy (TA), validation accuracy (VA), training loss (TL), validation loss (VL), test accuracy (TsA), and test loss (TsL).

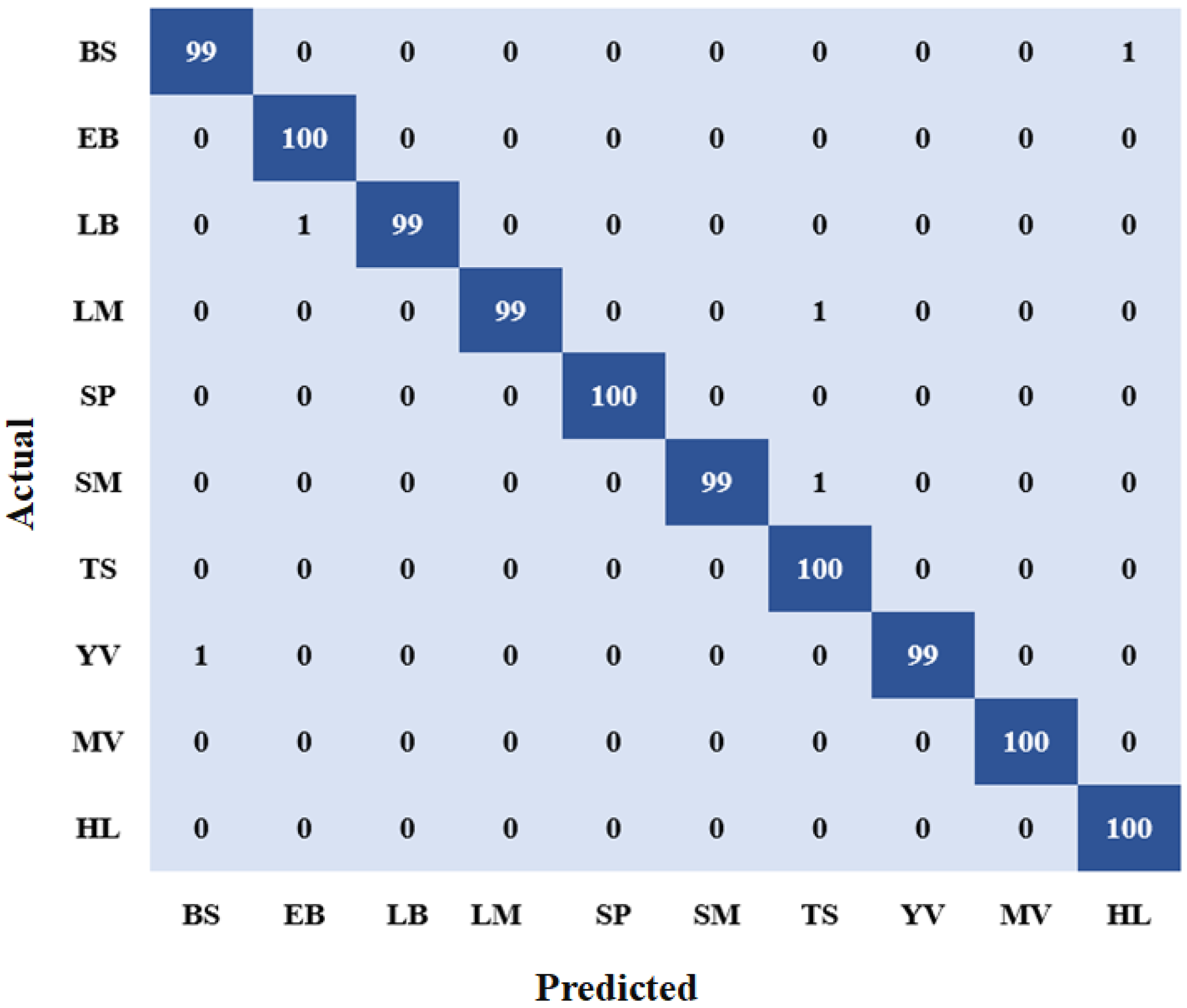

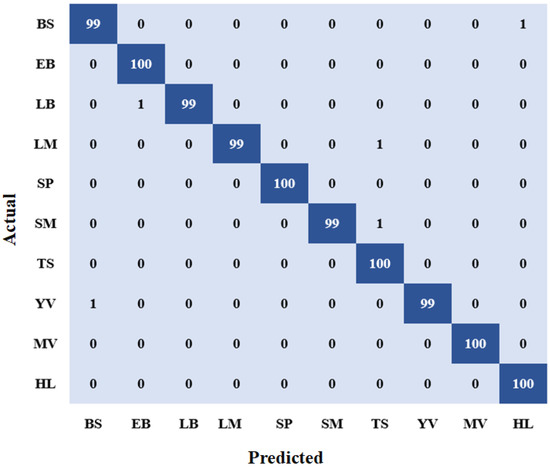

On a test set that was not shown to the model during training, the model was further assessed and achieved a test accuracy of 99.07% ± 0.38% and a test loss of 0.20 ± 0.03. The results from the confusion matrix (Figure 4) show that the bacterial spot, late blight, leaf mold, spider mite, and yellow leaf curl virus classes each had a single instance predicted wrongly, whereas the early blight, Septoria leaf spot, target spot, mosaic virus, and healthy categories were all predicted correctly. The precision, F1-scores, and recall of the individual categories are shown in Table 4.

Figure 4.

Confusion Matrix. ‘BS’, ‘EB’, ‘LB’, ‘LM’, ‘SP’, ‘SM’, ‘TS’, ‘YV’, ‘MV’, and ‘HL’ stand for bacterial spot, early blight, late blight, leaf mold, Septoria leaf spot, spider mite, target spot, yellow curl virus, mosaic virus, and healthy leaves, respectively.

Table 4.

Class-wise precision, recall, F1-score, and support (number of samples) for each TLD class on the test dataset.

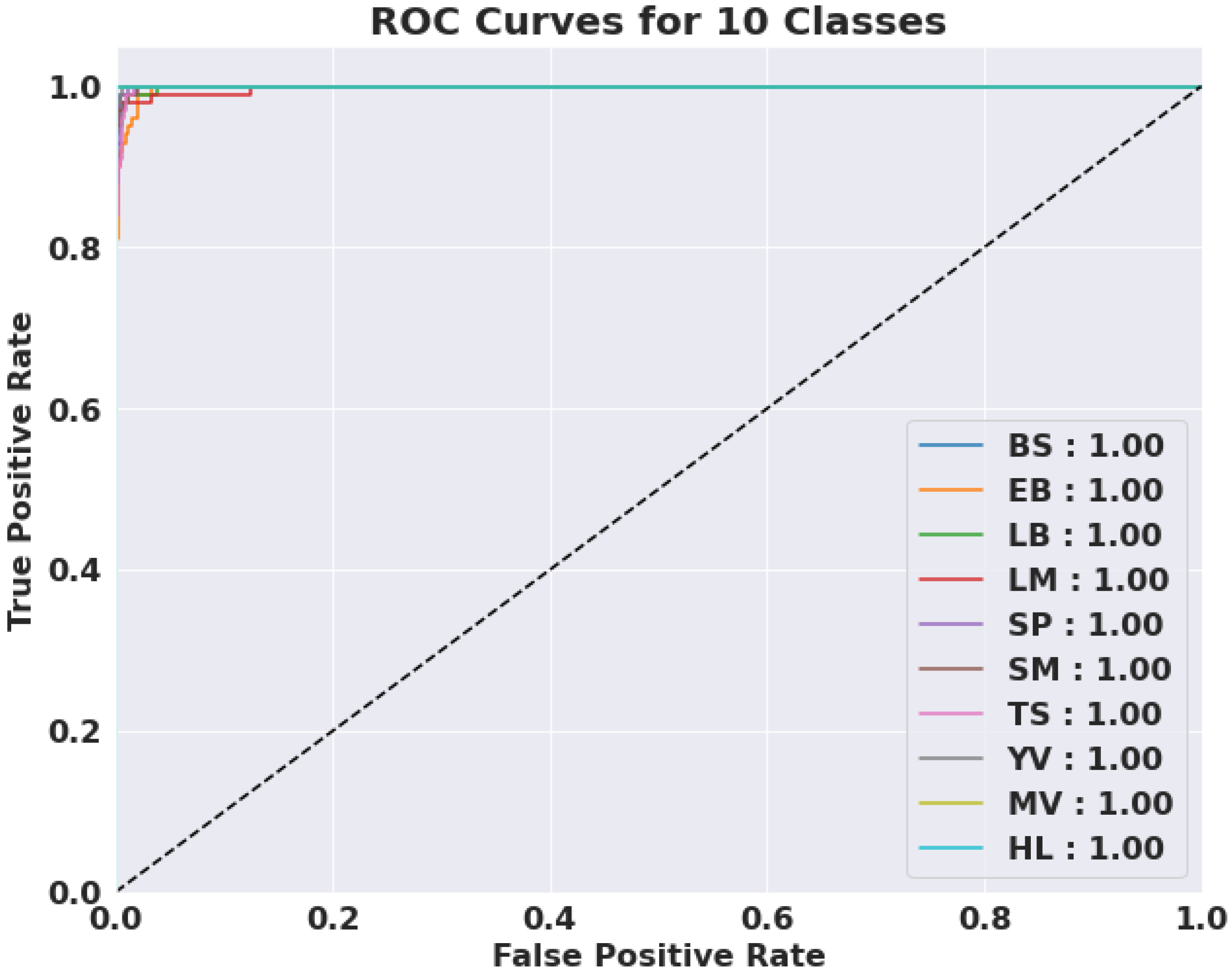

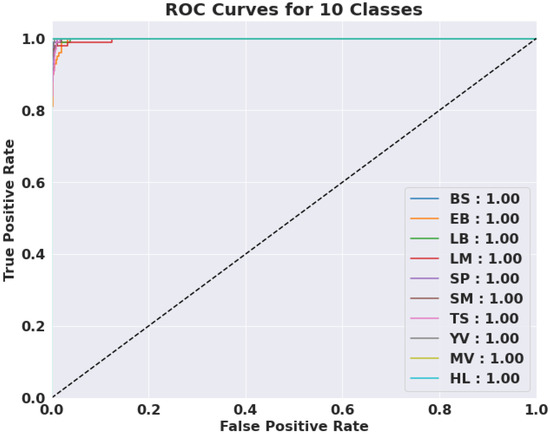

We evaluated the AUC ROC scores for each class to assess the efficiency of the proposed model (Figure 5). The average AUC ROC score across all classes was 1.0, demonstrating the model’s ability to correctly classify instances. The CNN performed well for all classes as per the AUC ROC values for individual classes. These outcomes show how successfully the model handled the multi-class categorization issue.

Figure 5.

The AUC-ROC results of the proposed model with an AUC score of 1.0.
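Per-class AUC ROC scores for a multi-class model are commonly obtained in a one-vs-rest fashion; the text does not say which scheme was used, so that choice is an assumption here. The sketch below computes the AUC as the probability that a positive sample receives a higher score than a negative one; the function names and the toy probabilities are hypothetical.

```python
def binary_auc(scores, labels):
    """AUC as the probability that a positive outranks a negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(probabilities, true_classes, n_classes):
    """One AUC per class, treating that class as positive and all others as negative."""
    return [
        binary_auc(
            [row[c] for row in probabilities],
            [1 if t == c else 0 for t in true_classes],
        )
        for c in range(n_classes)
    ]


# Hypothetical softmax outputs for four samples and three classes.
probs = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1], [0.1, 0.2, 0.7], [0.5, 0.4, 0.1]]
truth = [0, 1, 2, 0]
aucs = one_vs_rest_auc(probs, truth, n_classes=3)
print(aucs)  # [1.0, 1.0, 1.0]
```

An AUC of 1.0 for a class means that every sample of that class received a higher score for it than any sample of another class, which is a ranking statement and does not by itself imply 100% accuracy at a fixed threshold.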

5.3. Model Explanation with XAI

5.3.1. GradCAM

The GradCAM technique was employed to discern the salient regions of TLD images that were instrumental in the classification process by leveraging the spatial information retained by convolutional layers. To evaluate the efficacy of the proposed visual explanation techniques, a comprehensive examination of individual TLD samples from every category was conducted, which involved visual inspection of the heatmaps generated by the methodologies. The heatmap is shown in Table 5 as a result.

Table 5.

Explainable AI result interpretations for TLD.

Regarding the results of GradCAM for bacterial spot (Table 5, first row), the middle section of the leaf holds the greatest impact on the classification and heatmap positions on the center section. On the other hand, for early blight, the right portion in the image shows as more infectious, and the heatmap is plotted accordingly. For leaf mold, GradCAM is concentrated on the yellow section of the leaf. However, in the GradCAM heatmap for the class target spot (Table 5, fifth row), some background portion of the leaf images is also highlighted with the majority of the gradient concentrated on the center of the images. This shows that the model is also considering the background weight while making decisions; however, the impact of the background is negligible as seen while testing the model on the independent test images (refer to Section 6).

5.3.2. LIME

A matrix was created with 150 rows of randomly generated ones and zeros, where the columns were made up of superpixels. The matrix was split with a ratio of 0.2, and it was perturbed using a kernel size of 3 by 3 and a maximum distance of 100 units. The perturbations were applied to the top 20 numerical features, which were adjusted to align with the averages and standard deviation of the training data using a normal (0, 1) sampling method and by undoing the mean-centering and scaling operations. A robust binary feature was crafted using training-distribution-based sampling to generate categorical features. This method was executed to develop a feature that is unequivocally assigned the value of 1 when it corresponds to the instance being described. The individual segmentation of TLD is shown in Table 5.
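The perturbation step can be pictured with a small pure-Python sketch: each of the 150 rows is a random on/off mask over the superpixels, and applying a mask blanks out the pixels of every superpixel that is switched off. The number of superpixels, the toy segmentation, the fill value, and the function names are hypothetical; in practice the segmentation would come from a superpixel algorithm run on the leaf image.

```python
import random


def make_perturbations(n_samples, n_superpixels, seed=0):
    """Binary matrix: one row per perturbed sample, one column per superpixel."""
    rng = random.Random(seed)
    return [
        [rng.randint(0, 1) for _ in range(n_superpixels)] for _ in range(n_samples)
    ]


def apply_mask(segments, image, mask, fill=0):
    """Keep pixels whose superpixel is switched on; replace the others with fill."""
    return [
        [pix if mask[seg] else fill for pix, seg in zip(img_row, seg_row)]
        for img_row, seg_row in zip(image, segments)
    ]


masks = make_perturbations(150, n_superpixels=4)

# Toy 4 x 4 "image" split into four square superpixels labelled 0..3.
segments = [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]]
image = [[5] * 4 for _ in range(4)]
perturbed = apply_mask(segments, image, [1, 0, 0, 1])
print(perturbed[0], perturbed[3])  # [5, 5, 0, 0] [0, 0, 5, 5]
```

Each perturbed image is then passed through the classifier, and the resulting probabilities are used to fit the local surrogate model.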

Considering bacterial spot from Table 5, first row, the leaf holds the bacterial spot. The center portion of the leaf has the greatest impact on the classification, and LIME segments the portions accordingly. For early blight, in examining the LIME output, we can see that EfficientNetB5 activates toward the right section of the leaf. Accordingly, for leaf mold, the yellow section of the leaf is indicated as an important characteristic used by the network to classify the leaf as leaf mold.

5.4. Comparison with the State-of-the-Art Methods

Table 6 compares the classification performance of the proposed model with existing state-of-the-art approaches. To keep the comparison coherent and relevant, we chose the most recent disease detection models using deep-learning approaches for TLD categorization. To serve as a comparison population, we chose a total of seven contemporary DL methods. This comparative cohort employed both transfer learning-based DL models and custom CNN models that were created from scratch. Of the four publications that employed custom CNNs, only two used LIME as XAI. The TLD dataset was classified using the transfer learning technique in the other three studies; however, none of them employed XAI. The proposed method outperformed all other state-of-the-art methods.

Table 6.

Comparison of our model with the state-of-the-art methods on the TLD dataset.

6. Independent Validation

The use of test data independent of the training set is recommended in any model building. In this case, Noyan et al. [48] noted bias in the PlantVillage (PV) dataset associated with background color. It was, therefore, essential to test our model on tomato leaf images drawn from other sources. Five random images for each class were collected from [59], which holds 32,535 images for 11 different tomato leaf diseases. Resized to 250 × 200, the collected images (not used to train, test, or validate the proposed model) were fed into the model, and the predictions for individual categories were evaluated. The predicted probability for the sample images and the GradCAM results are tabulated in Table 7. It is seen that the GradCAM results identify the infected section, and no spots are present inside the healthy portion of the leaf. Table 8 shows the class-wise accuracy. Both leaf mold and spider mite achieved 80% accuracy, whereas the rest of the categories achieved 100%, giving an average accuracy of 96%.

Table 7.

Real-time results.

Table 8.

Class-wise accuracy for the independent dataset. ‘BS’, ‘EB’, ‘LB’, ‘LM’, ‘SP’, ‘SM’, ‘TS’, ‘YV’, ‘MV’, and ‘HL’ stand for bacterial spot, early blight, late blight, leaf mold, Septoria leaf spot, spider mite, target spot, yellow curl virus, mosaic virus, and healthy leaves, respectively.
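The reported average follows directly from the class-wise figures. In the sketch below, the per-class counts of correct predictions are derived from the stated accuracies (80% of five images is four correct); the variable names are hypothetical.

```python
IMAGES_PER_CLASS = 5

# Correct predictions per class, derived from the stated class-wise accuracies.
correct = {
    "BS": 5, "EB": 5, "LB": 5, "LM": 4, "SP": 5,
    "SM": 4, "TS": 5, "YV": 5, "MV": 5, "HL": 5,
}

class_accuracy = {c: 100 * n / IMAGES_PER_CLASS for c, n in correct.items()}
average_accuracy = sum(class_accuracy.values()) / len(class_accuracy)
print(average_accuracy)  # 96.0
```

With only five images per class, a single misclassification changes the class-wise accuracy by 20 percentage points, so these figures are best read as a coarse sanity check.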

7. Conclusions and Future Work

In this study, we proposed an explainable AI (XAI) framework together with a CNN model for categorizing tomato leaves into 10 classes (nine diseases and healthy). Transfer learning with EfficientNetB5 was used to create BotanicX-AI to deliver explanation-driven findings, and GradCAM and LIME produced a detailed explanation of the results. The proposed work was compared to existing pre-trained DL models for TLD detection with a common fine-tuned architecture, and ablative research to find the optimal DL model was conducted.

The test and training accuracies obtained with an XAI-based CNN model to predict TLD were 99.07% ± 0.38% and 99.84% ± 0.10%, respectively. The explanations generated with both GradCAM and LIME were able to identify the specific region that contributed to the categorization of TLD. The suggested model shows that XAI and EfficientNetB5 generated admissible explanations of the outcomes with high classification accuracy.

As a limitation, we observed that GradCAM did not always identify the regions of an image used for the model’s prediction, owing to its gradient-averaging step. HiResCAM, generative adversarial network (GAN)-based approaches, and explanation methods such as kernel SHapley Additive exPlanations (SHAP) are recommended as potential alternatives, and trials of these procedures are recommended. The further development of the PlantVillage dataset for tomato leaf disorders is also recommended to address the issue of bias associated with the background, as raised by [48]. Furthermore, as the proposed model is based on the EfficientNetB5 architecture, it may be further evaluated with datasets that have more information and images.

Author Contributions

Conceptualization, M.B. and T.B.S.; Investigation, M.B. and T.B.S.; Methodology, M.B. and T.B.S.; Project administration, A.N., T.B.S. and K.B.W.; Resources, M.B., T.B.S., A.N. and K.B.W.; Software and Coding, M.B. and T.B.S.; Supervision, A.N. and K.B.W.; Validation, M.B., T.B.S., A.N. and K.B.W.; Writing—original draft, M.B.; Writing—review and editing, M.B., T.B.S., A.N. and K.B.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available tomato leaf diseases dataset, https://www.kaggle.com/datasets/kaustubhb999/tomatoleaf (Accessed on 3 July 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bock, C.; Parker, P.; Cook, A.; Gottwald, T. Visual rating and the use of image analysis for assessing different symptoms of citrus canker on grapefruit leaves. Plant Dis. 2008, 92, 530–541. [Google Scholar] [CrossRef]

- Khakimov, A.; Salakhutdinov, I.; Omolikov, A.; Utaganov, S. Traditional and current-prospective methods of agricultural plant diseases detection: A review. IOP Conf. Ser. Earth Environ. Sci. 2022, 951, 012002. [Google Scholar] [CrossRef]

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349. [Google Scholar] [CrossRef]

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Deep learning techniques to classify agricultural crops through UAV imagery: A review. Neural Comput. Appl. 2022, 34, 9511–9536. [Google Scholar] [CrossRef]

- Rietra, R.; Heinen, M.; Oenema, O. A Review of Crop Husbandry and Soil Management Practices Using Meta-Analysis Studies: Towards Soil-Improving Cropping Systems. Land 2022, 11, 255. [Google Scholar] [CrossRef]

- Collinge, D.B.; Jensen, D.F.; Rabiey, M.; Sarrocco, S.; Shaw, M.W.; Shaw, R.H. Biological control of plant diseases–what has been achieved and what is the direction? Plant Pathol. 2022, 71, 1024–1047. [Google Scholar] [CrossRef]

- Elsakhawy, T.; Omara, A.E.D.; Abowaly, M.; El-Ramady, H.; Badgar, K.; Llanaj, X.; Törős, G.; Hajdú, P.; Prokisch, J. Green Synthesis of Nanoparticles by Mushrooms: A Crucial Dimension for Sustainable Soil Management. Sustainability 2022, 14, 4328. [Google Scholar] [CrossRef]

- Singh, A.; Goswami, S.; Vinutha, T.; Jain, R.; Ramesh, S.; Praveen, S. Retrotransposons-based genetic regulation underlies the cellular response to two genetically diverse viral infections in tomato. Physiol. Mol. Plant Pathol. 2022, 120, 101839. [Google Scholar] [CrossRef]

- Fidan, H.; Gonbadi, A.; Sarikaya, P.; Çaliş, Ö. Investigation of activity of Tobamovirus in pepper plants containing L4 resistance gene. Mediterr. Agric. Sci. 2022, 35, 83–90. [Google Scholar] [CrossRef]

- Kumar, M.S.; Ganesh, D.; Turukmane, A.V.; Batta, U.; Sayyadliyakat, K.K. Deep Convolution Neural Network Based solution for Detecting Plant Diseases. J. Pharm. Negat. Results 2022, 13, 464–471. [Google Scholar]

- Russel, N.S.; Selvaraj, A. Leaf species and disease classification using multiscale parallel deep CNN architecture. Neural Comput. Appl. 2022, 34, 19217–19237. [Google Scholar] [CrossRef]

- Trivedi, N.K.; Gautam, V.; Anand, A.; Aljahdali, H.M.; Villar, S.G.; Anand, D.; Goyal, N.; Kadry, S. Early Detection and Classification of Tomato Leaf Disease Using High-Performance Deep Neural Network. Sensors 2021, 21, 7987. [Google Scholar] [CrossRef] [PubMed]

- Munquad, S.; Si, T.; Mallik, S.; Das, A.B.; Zhao, Z. A Deep Learning–Based Framework for Supporting Clinical Diagnosis of Glioblastoma Subtypes. Front. Genet. 2022, 13, 855420. [Google Scholar] [CrossRef] [PubMed]

- Bhandari, M.; Shahi, T.B.; Siku, B.; Neupane, A. Explanatory classification of CXR images into COVID-19, Pneumonia and Tuberculosis using deep learning and XAI. Comput. Biol. Med. 2022, 150, 106156. [Google Scholar] [CrossRef] [PubMed]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Kinger, S.; Kulkarni, V. Explainable AI for deep learning based disease detection. In Proceedings of the 2021 Thirteenth International Conference on Contemporary Computing (IC3-2021), Noida, India, 5–7 August 2021; pp. 209–216.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Wu, X.; Liu, R.; Yang, H.; Chen, Z. An Xception Based Convolutional Neural Network for Scene Image Classification with Transfer Learning. In Proceedings of the 2020 Second International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 18–20 December 2020; pp. 262–267.

- Çinar, A.; Yildirim, M. Detection of tumors on brain MRI images using the hybrid convolutional neural network architecture. Med. Hypotheses 2020, 139, 109684.

- Nandhini, S.; Ashokkumar, K. An automatic plant leaf disease identification using DenseNet-121 architecture with a mutation-based henry gas solubility optimization algorithm. Neural Comput. Appl. 2022, 34, 5513–5534.

- Bhandari, M.; Neupane, A.; Mallik, S.; Gaur, L.; Qin, H. Auguring Fake Face Images Using Dual Input Convolution Neural Network. J. Imaging 2023, 9, 3.

- Masood, M.; Nawaz, M.; Malik, K.M.; Javed, A.; Irtaza, A.; Malik, H. Deepfakes Generation and Detection: State-of-the-art, open challenges, countermeasures, and way forward. Appl. Intell. 2022, 53, 3974–4026.

- McAllister, E.; Payo, A.; Novellino, A.; Dolphin, T.; Medina-Lopez, E. Multispectral satellite imagery and machine learning for the extraction of shoreline indicators. Coast. Eng. 2022, 174, 104102.

- Liu, J.; Wang, X.; Liu, G. Tomato pests recognition algorithm based on improved YOLOv4. Front. Plant Sci. 2022, 13, 1894.

- Arco, J.E.; Ortiz, A.; Ramírez, J.; Martínez-Murcia, F.J.; Zhang, Y.D.; Górriz, J.M. Uncertainty-driven ensembles of multi-scale deep architectures for image classification. Inf. Fusion 2023, 89, 53–65.

- Bhandari, M.; Parajuli, P.; Chapagain, P.; Gaur, L. Evaluating Performance of Adam Optimization by Proposing Energy Index. In Recent Trends in Image Processing and Pattern Recognition: Proceedings of the Fourth International Conference, RTIP2R 2021, Msida, Malta, 8–10 December 2021; Santosh, K., Hegadi, R., Pal, U., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 156–168.

- Alsaiari, A.O.; Moustafa, E.B.; Alhumade, H.; Abulkhair, H.; Elsheikh, A. A coupled artificial neural network with artificial rabbits optimizer for predicting water productivity of different designs of solar stills. Adv. Eng. Softw. 2023, 175, 103315.

- Shahi, T.B.; Sitaula, C. Natural language processing for Nepali text: A review. Artif. Intell. Rev. 2021, 55, 3401–3429.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35.

- Khanal, M.; Khadka, S.R.; Subedi, H.; Chaulagain, I.P.; Regmi, L.N.; Bhandari, M. Explaining the Factors Affecting Customer Satisfaction at the Fintech Firm F1 Soft by Using PCA and XAI. FinTech 2023, 2, 70–84.

- Chapagain, P.; Timalsina, A.; Bhandari, M.; Chitrakar, R. Intrusion Detection Based on PCA with Improved K-Means. In Proceedings of the Innovations in Electrical and Electronic Engineering, New Delhi, India, 8–9 January 2022; Mekhilef, S., Shaw, R.N., Siano, P., Eds.; Springer: Singapore, 2022; pp. 13–27.

- Bhandari, M.; Panday, S.; Bhatta, C.P.; Panday, S.P. Image Steganography Approach Based Ant Colony Optimization with Triangular Chaotic Map. In Proceedings of the 2022 Second International Conference on Innovative Practices in Technology and Management (ICIPTM), Pradesh, India, 23–25 February 2022; Volume 2, pp. 429–434.

- Chakraborty, S.; Mali, K. An overview of biomedical image analysis from the deep learning perspective. In Research Anthology on Improving Medical Imaging Techniques for Analysis and Intervention; IGI Global: Hershey, PA, USA, 2023; pp. 43–59.

- Lakshmanarao, A.; Babu, M.R.; Kiran, T.S.R. Plant Disease Prediction and classification using Deep Learning ConvNets. In Proceedings of the 2021 International Conference on Artificial Intelligence and Machine Vision (AIMV), Gandhinagar, India, 24–26 September 2021; pp. 1–6.

- Militante, S.V.; Gerardo, B.D.; Dionisio, N.V. Plant leaf detection and disease recognition using deep learning. In Proceedings of the 2019 IEEE Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 3–6 October 2019; pp. 579–582.

- Mattihalli, C.; Gedefaye, E.; Endalamaw, F.; Necho, A. Real time automation of agriculture land, by automatically detecting plant leaf diseases and auto medicine. In Proceedings of the 2018 32nd International Conference on Advanced Information Networking and Applications Workshops (WAINA), Krakow, Poland, 16–18 May 2018; pp. 325–330.

- Pinto, L.A.; Mary, L.; Dass, S. The Real-Time Mobile Application for Identification of Diseases in Coffee Leaves using the CNN Model. In Proceedings of the 2021 Second International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 4–6 August 2021; pp. 1694–1700.

- Gaur, L.; Bhandari, M.; Shikhar, B.S.; Nz, J.; Shorfuzzaman, M.; Masud, M. Explanation-Driven HCI Model to Examine the Mini-Mental State for Alzheimer’s Disease. ACM Trans. Multimed. Comput. Commun. Appl. 2022.

- Hughes, D.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

- Zhao, S.; Peng, Y.; Liu, J.; Wu, S. Tomato leaf disease diagnosis based on improved convolution neural network by attention module. Agriculture 2021, 11, 651.

- Bhujel, A.; Kim, N.E.; Arulmozhi, E.; Basak, J.K.; Kim, H.T. A lightweight Attention-based convolutional neural networks for tomato leaf disease classification. Agriculture 2022, 12, 228.

- Ozbılge, E.; Ulukok, M.K.; Toygar, O.; Ozbılge, E. Tomato Disease Recognition Using a Compact Convolutional Neural Network. IEEE Access 2022, 10, 77213–77224.

- Guerrero-Ibañez, A.; Reyes-Muñoz, A. Monitoring Tomato Leaf Disease through Convolutional Neural Networks. Electronics 2023, 12, 229.

- Suryawati, E.; Sustika, R.; Yuwana, R.; Subekti, A.; Pardede, H. Deep Structured Convolutional Neural Network for Tomato Diseases Detection. In Proceedings of the 2018 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Yogyakarta, Indonesia, 27–28 October 2018; pp. 385–390.

- Hong, H.; Lin, J.; Huang, F. Tomato Disease Detection and Classification by Deep Learning. In Proceedings of the 2020 International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Fuzhou, China, 12–14 June 2020; pp. 25–29.

- Vijay, N. Detection of Plant Diseases in Tomato Leaves: With Focus on Providing Explainability and Evaluating User Trust. Master’s Thesis, University of Skövde, Skövde, Sweden, September 2021.

- Noyan, M.A. Uncovering bias in the PlantVillage dataset. arXiv 2022, arXiv:2206.04374.

- Mzoughi, O.; Yahiaoui, I. Deep learning-based segmentation for disease identification. Ecol. Inform. 2023, 75, 102000.

- Kaur, P.; Harnal, S.; Tiwari, R.; Upadhyay, S.; Bhatia, S.; Mashat, A.; Alabdali, A.M. Recognition of leaf disease using hybrid convolutional neural network by applying feature reduction. Sensors 2022, 22, 575.

- Kaustubh, B. Tomato Leaf Disease Detection. Available online: https://www.kaggle.com/datasets/kaustubhb999/tomatoleaf (accessed on 3 July 2022).

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Sitaula, C.; Shahi, T.B. Monkeypox virus detection using pre-trained deep learning-based approaches. J. Med. Syst. 2022, 46, 1–9.

- Chollet, F. Keras. Available online: https://github.com/fchollet/keras (accessed on 3 July 2022).

- Van der Velden, B.; Kuijf, H.; Gilhuijs, K.; Viergever, M. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

- Carneiro, T.; Da Nóbrega, R.V.M.; Nepomuceno, T.; Bian, G.B.; De Albuquerque, V.H.C.; Filho, P.P.R. Performance Analysis of Google Colaboratory as a Tool for Accelerating Deep Learning Applications. IEEE Access 2018, 6, 61677–61685.

- Shahi, T.B.; Sitaula, C.; Neupane, A.; Guo, W. Fruit classification using attention-based MobileNetV2 for industrial applications. PLoS ONE 2022, 17, e0264586.

- Khan, Q. Tomato Disease Multiple Sources. Available online: https://www.kaggle.com/datasets/cookiefinder/tomato-disease-multiple-sources (accessed on 3 July 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).