Against that introductory backdrop, we foreshadow the intent of our paper: to discuss the scientific aspect of engineering design, which is based on treating requirements as sets of hypotheses about the structure and behavior of the system, and testing the implemented system accordingly. What will emerge is a distinction between “Verification”, “Evaluation”, and “Validation”. Through examples, we will discuss each of these terms by asking “What” it is, “How” we proceed, and “Why”.

Briefly stated, “Verification” is the process whereby each particular system requirement is checked to see whether or not the implemented system fulfils its specification (a binary, pass/fail measure). “Evaluation” measures how well the system can be used to fulfil a task. It is a performance measure, posed in terms of speed and accuracy (or task time and error rate) for a suitably well-posed task. “Validation” calls into play considerations of the internal vs. external validity of the experiments, the sources of cognitive bias that may corrupt these measures, and questions regarding the elusive abstract entities (i.e., the “Constructs”) which we wish to measure but which cannot be measured directly (e.g., “Surgical Skill”, “Anatomical Knowledge”, “Respect for Tissue”, etc.).
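To make the contrast concrete, the following minimal Python sketch (an illustration under our own assumptions, not a prescribed procedure) shows the different kinds of output: verification yields a single pass/fail value for one requirement, whereas evaluation yields performance figures. The function names `verify` and `evaluate`, and the choice of mean task time and pooled error rate as the performance figures, are hypothetical.

```python
from statistics import mean


def verify(measured: float, spec_min: float, spec_max: float) -> bool:
    """Verification: binary pass/fail check of one requirement against its specification."""
    return spec_min <= measured <= spec_max


def evaluate(task_times: list[float], errors: list[int], attempts: list[int]) -> tuple[float, float]:
    """Evaluation: performance as (mean task time, pooled error rate) over trials.

    Using these two summary measures is an illustrative assumption.
    """
    return mean(task_times), sum(errors) / sum(attempts)


# Hypothetical data: a requirement with a [0.0, 1.0] specification,
# and two trials of a task with 10 attempted actions each.
print(verify(0.8, 0.0, 1.0))
print(evaluate([30.0, 40.0], [1, 0], [10, 10]))
```

The point of the sketch is the type of the result: a Boolean for verification, and continuous measures that can be compared across systems for evaluation.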

2.2. An Example of the Validation of a Measurement System

One guiding principle that helps to define these terms properly is that “Validity” is an attribute of an inference. The measures we make are used to draw inferences, which in turn lead to conclusions; it is the validity of those inferences that we are striving for. An inference is based on evidence gathered from observations, and a quantitative measure is one example of an observation. One could search the literature for a small set of papers that provide examples of the validation of measurement systems; within the domain of biomedical engineering, we think that these are proper uses of the terms. These papers would share a common characteristic: each describes a system that is used to make physical measurements. We argue that this calls into play the notion of a ‘gold standard’, which is, in a sense, a ‘specification’ against which verification, evaluation, and validation all become synonymous terms, but only for systems that are designed to perform physical measurements.

A recent paper [7] describes a camera-based system to measure the motion of the hand in a virtual environment; the validity of their system was “evaluated” based on “repeatability”, “precision”, and “reproducibility”. Their system “reliability” was estimated using a correlation with manual methods, a separate intraclass correlation coefficient on the measures, and a paired t-test and bias test. Note that the authors used these methods to gather evidence on the consistency of repeated measures, and to test for any difference between the depth camera and manual measurement procedures. The first word in their paper’s title is “Validity”. To be sure, their comparisons against alternate measurement systems can appropriately be called “concurrent validity”. When their paper notes that the evidence was gathered “in the lab”, this implies that “out of the lab” measurements might differ. This raises the question of “internal validity” versus “external validity”. While this is described as a system “Validation”, there is no mention of ‘construct validity’, ‘content validity’, ‘external validity’, or even ‘face validity’. We suggest that this is because these extended and abstract forms of validity must be reserved for systems that are more complex than simple measurement systems.

We suspect that this is where much confusion enters the wider use of these terms. For systems that are designed to make physical measurements, ‘verification’, ‘evaluation’, and ‘validation’ all collapse more or less into synonymous terms. Let us now examine other domains in which systems are being designed as tools for accomplishing more complex tasks.

We have to take care, however: while most would agree that ‘verification’, ‘evaluation’, and ‘validation’ are three separate levels of empirical investigation that need to take place as part of the whole design process for complex systems, there is some confusion in the literature as to which terms should be used to refer to them. One does not need to search far for examples of the terms being used interchangeably. Therefore, as we move towards considerations of the use of “Validity”, let us explore it in conjunction with the related terms “Reproducibility”, “Replicability”, “Repeatability”, and “Reliability”.

2.3. Reproducibility, Replicability, Repeatability, and Reliability

In the context of the requirements and analysis of engineering systems, and in particular of medical devices, the concepts related to “Measurements” are “Accuracy”, “Precision”/“Recall”, “Repeatability”, and “Reliability”. For “Specifications” derived from empirical values set by clinicians, the relevant concepts are comparison against a specified “Ground Truth” and inter- and intra-“Observer Variability”, both of which fall under the more general term of “Uncertainty”. These terms are often confused, used as synonyms, or even misunderstood. We briefly define the terms here, and illustrate them with examples later. No matter how you slice or dice the terms, the following three seem to come up as synonyms: reproduce, replicate, and repeat.

The terms stack up (i.e., our “R”-terms stack up in a hierarchy, just as our “V”-terms do). Consider that if measurements are not repeatable, then they cannot be reliable. If measurements are not reliable, then experiments cannot be replicated, i.e., they are irreproducible.
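Repeatability, the bottom rung of this hierarchy, can be quantified in several ways. One common choice is the Bland-Altman coefficient of repeatability, which we present as an illustrative assumption rather than as the method used in this paper. It equals 1.96·√2 times the within-subject standard deviation, and it is the value that the absolute difference between two repeated measurements of the same subject is expected to stay below about 95% of the time. The helper name and the assumption of an equal number of repeats per subject are ours.

```python
import math
from statistics import mean, variance


def repeatability_coefficient(repeats: list[list[float]]) -> float:
    """Bland-Altman coefficient of repeatability.

    repeats[i] holds the repeated measurements of subject i; the same number
    of repeats (at least two) per subject is assumed.
    """
    within_var = mean(variance(r) for r in repeats)  # pooled within-subject variance
    return 1.96 * math.sqrt(2.0) * math.sqrt(within_var)


# Hypothetical: three subjects, each measured twice.
print(repeatability_coefficient([[10.1, 10.3], [12.0, 11.8], [9.5, 9.6]]))
```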

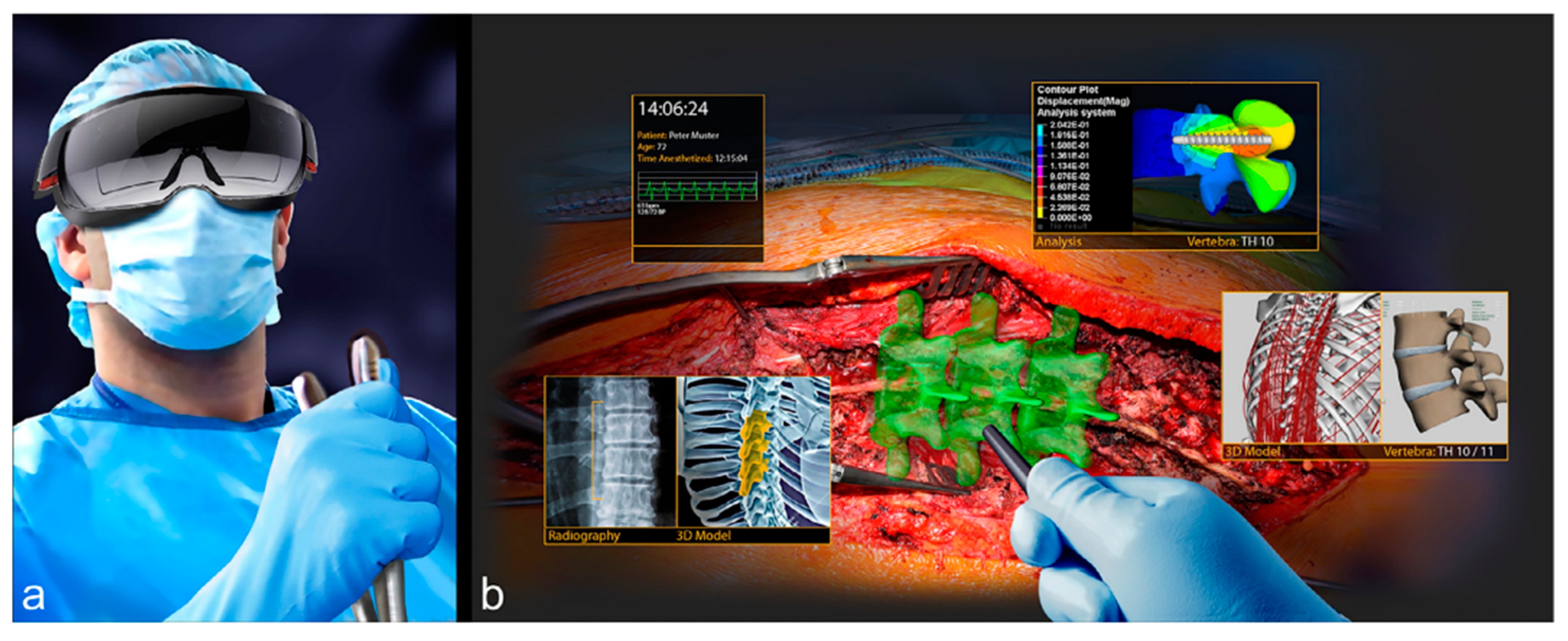

For example, for the AR/VR system for computer-assisted spine surgery described above (Figure 1), the main specification is the desired accuracy of the final screw position with respect to the preoperative plan (ground truth). Some of the main relevant measurements are: the position of the screws in the preoperative plan; the registration accuracy of the planned screw positions with respect to the acquired pedicle digital representation (previously validated); the accuracy and precision of the tracked instrument and of the alignment of the drilled borehole with the preoperative plan; and the postoperative screw position. The observer variability is the difference between the desired preoperative screw positions as defined by two or more clinicians, e.g., the pedicle screw angle and screw depth. The uncertainty is the target and range of values that are set as specifications by the system designer and the clinicians, e.g., the pedicle screw angle within one degree of the target value. Both the observer variability and the uncertainty can be either patient-specific (a single patient) or the mean over a set of patients (patient cohort).
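The following minimal sketch shows how observer variability might be summarized, first per patient and then over a cohort. It assumes that each clinician independently plans one pedicle screw angle per patient. Using the range (maximum minus minimum) of the planned angles, and averaging it across patients, are illustrative choices of ours; a standard deviation or an intraclass correlation coefficient would be equally defensible. The patient identifiers and angles are hypothetical.

```python
from statistics import mean


def inter_observer_range(angles: list[float]) -> float:
    """Patient-specific observer variability: spread of the angles planned by different clinicians."""
    return max(angles) - min(angles)


def cohort_observer_variability(planned: dict[str, list[float]]) -> float:
    """Cohort-level observer variability: mean of the per-patient ranges."""
    return mean(inter_observer_range(angles) for angles in planned.values())


# Hypothetical planned pedicle screw angles (degrees), several clinicians per patient.
planned = {"patient_1": [20.0, 21.0], "patient_2": [18.0, 20.0, 19.0]}
print(inter_observer_range(planned["patient_1"]))
print(cohort_observer_variability(planned))
```

If this variability is comparable to the width of the specification (here, two degrees for “within one degree of the target”), the specification itself is only as certain as the clinicians’ agreement.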

The terms accuracy and precision are well known and have precise definitions, cf. Wikipedia (Figure 3). Accuracy quantifies the difference between the observed or measured values and what was established as the true value, also called the ground truth. Precision is how close the measurements are to each other. Accuracy measures systematic errors, i.e., the statistical bias of the mean of the measurements from the true value. Precision measures the statistical variability of random errors. A measurement can be accurate and precise, accurate but not precise, precise but not accurate, or neither.
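The two definitions translate directly into two summary statistics. In this sketch, which assumes repeated measurements of a single quantity with a known true value, the bias (mean minus true value) captures accuracy and the sample standard deviation captures precision. The function name is hypothetical.

```python
from statistics import mean, stdev


def accuracy_and_precision(measurements: list[float], true_value: float) -> tuple[float, float]:
    """Return (bias, spread) of repeated measurements of one quantity.

    bias   = mean(measurements) - true_value  -> systematic error (accuracy)
    spread = sample standard deviation         -> random error (precision)
    """
    return mean(measurements) - true_value, stdev(measurements)


print(accuracy_and_precision([12.0, 12.1, 11.9], 10.0))  # precise but not accurate
print(accuracy_and_precision([8.0, 12.0, 10.0], 10.0))   # accurate but not precise
```

For the first set, the bias is 2.0 and the standard deviation is about 0.1; for the second, the bias is 0.0 and the standard deviation is 2.0.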

We now discuss the specifications and their relation to the ground truth, to uncertainty, and to accuracy. For this purpose, we use the analogy of an archer shooting at a target (Figure 3 and Figure 4). When the target ground truth is known and defined as the specification, with its exact mean value (the center of the target) and the deviations from it (the concentric circles), accuracy and precision are as defined before (Figure 4a). However, when the mean ground truth value is not precisely known, as is often the case for medical specifications owing to uncertainty or observer variability in defining them, the location of the ground truth target is shifted (Figure 4b). In this case, the uncertainty affects the accuracy. When the uncertainty of the target ground truth is larger than the accuracy, the specifications are always met (Figure 4c). In this case, improving the accuracy of the system or defining tighter specifications is unnecessary, as it will not affect the outcome. Conversely, when the uncertainty of the target ground truth is smaller than the accuracy, the specifications are not always met (Figure 4d). In this case, improving the accuracy of the system or defining tighter specifications does make a difference and should be carefully considered. Finally, when the accuracy is improved but has a bias, the specifications will never be met (Figure 4e).
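The scenarios of Figure 4 can be explored numerically. The sketch below assumes that the achieved values are normally distributed with a given bias and standard deviation, and that the specification is the target value plus or minus a tolerance that stands in for the target uncertainty. It returns the probability that a single outcome meets the specification. The function name and the numbers in the usage lines are hypothetical.

```python
from statistics import NormalDist


def prob_within_spec(target: float, tolerance: float, bias: float, sd: float) -> float:
    """Probability that one outcome falls in [target - tolerance, target + tolerance].

    Assumes outcomes ~ Normal(mean=target + bias, sd=sd) with sd > 0.
    """
    outcome = NormalDist(mu=target + bias, sigma=sd)
    return outcome.cdf(target + tolerance) - outcome.cdf(target - tolerance)


# Hypothetical target angle of 20 degrees.
wide = prob_within_spec(20.0, tolerance=3.0, bias=0.0, sd=0.5)    # tolerance much larger than error
narrow = prob_within_spec(20.0, tolerance=0.5, bias=0.0, sd=0.5)  # tolerance comparable to error
biased = prob_within_spec(20.0, tolerance=0.5, bias=3.0, sd=0.2)  # precise but biased
print(wide, narrow, biased)
```

With a tolerance much larger than the error, the probability is essentially 1 (roughly Figure 4c); with a tolerance of one standard deviation and no bias, it is about 0.68 (roughly Figure 4d); and for a precise but strongly biased system, it is essentially 0 (roughly Figure 4e).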

These scenarios have important consequences when analyzing a system and comparing it with a conventional procedure (Figure 4f). For simplicity, assume that we focus on a single real-valued parameter, e.g., the pedicle screw angle in the AR/VR system for spine surgery shown above (Figure 2). For a given patient and procedure, this angle has an ideal value, that is, the angle at which the pedicle screw is most beneficial, i.e., best performs its job. In most cases, this angle cannot be known precisely, as it depends on many complex and interrelated phenomena. Instead, we choose a target value: the empirical value that, in the opinion of the treating physicians, will yield the best results for the patient. The target value, in our case the target pedicle screw angle, is in fact a range, namely the interval defined by the minimum and maximum acceptable values. This interval reflects the uncertainty that is associated with the target value.

Now, consider two possible ways to achieve the target value: the conventional procedure, which in our example is the manual orientation of the pedicle screw hole drill (conventional), and the augmented reality navigated method (navigated). To establish the accuracy of each method independently, the values achieved in repeated trials with the method are summarized by their mean, standard deviation, and minimum and maximum values, and are compared to the target value. When the interval of measured values is fully included in the uncertainty interval of the target value, the method is deemed accurate. The overlap between the two intervals defines the measure of accuracy.
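The interval test described above can be sketched as follows. The sketch summarizes repeated trials and computes the overlap between the measured interval [min, max] and the target uncertainty interval. The text does not specify how the overlap is normalized, so dividing by the width of the measured interval (which gives 1.0 when it is fully included) is an assumption of this sketch, as are the function names.

```python
from statistics import mean, stdev


def summarize(trials: list[float]) -> dict[str, float]:
    """Summary statistics of the values achieved over repeated trials."""
    return {"mean": mean(trials), "sd": stdev(trials), "min": min(trials), "max": max(trials)}


def overlap_fraction(measured: tuple[float, float], target: tuple[float, float]) -> float:
    """Fraction of the measured interval (lo, hi) lying inside the target interval.

    1.0 means the measured interval is fully included (method deemed accurate).
    Normalizing by the measured interval's width is an assumption of this sketch.
    """
    lo = max(measured[0], target[0])
    hi = min(measured[1], target[1])
    width = measured[1] - measured[0]
    if width == 0:  # all trials gave the same value
        return 1.0 if target[0] <= measured[0] <= target[1] else 0.0
    return max(0.0, hi - lo) / width


# Hypothetical trials (degrees) against a target interval of [18, 22].
s = summarize([19.0, 20.0, 21.0])
print(s, overlap_fraction((s["min"], s["max"]), (18.0, 22.0)))
```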

Consider now the comparison between the conventional and the navigated procedures. One approach would be to directly compare the mean values and intervals of each method. However, this two-way comparison does not take into account the target value and its uncertainty interval. Thus, even when the interval of the navigated procedure lies inside the interval of the conventional procedure, which may indicate that the navigated procedure is more accurate than the conventional one, it can still be far from the target value and/or have little overlap with its uncertainty interval.

This illustration leads us to postulate that the correct way to compare the performance accuracy of two procedures, manual and navigated in our examples (cf. Figure 5 and Figure 6), is a three-way comparison between the target value and its uncertainty, the conventional procedure, and the navigated procedure, accounting for both the procedure accuracies and the measurement inaccuracies. Measures can be biased and/or uncertain. Distinguishing the two kinds of error is important, but we would also need the task time of each procedure in order to fully Evaluate its Performance. Note that ‘Performance’ is the product of speed and accuracy.
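Here is a minimal sketch of the three-way comparison, under several assumptions of our own: the target is a value with a symmetric tolerance, “accuracy” is the share of trials that fall inside the target interval, speed is the reciprocal of the mean task time, and Performance is their product. The data are hypothetical and chosen to mimic the situation described above. The navigated values are tighter and lie inside the conventional range, yet none of them falls inside the target interval.

```python
from statistics import mean, stdev


def three_way(target: float, tolerance: float,
              methods: dict[str, tuple[list[float], list[float]]]) -> dict[str, dict[str, float]]:
    """Compare each method against the target interval, not only against each other.

    methods maps a name to (achieved_values, task_times_seconds).
    """
    report = {}
    for name, (values, times) in methods.items():
        hits = sum(abs(v - target) <= tolerance for v in values)
        accuracy = hits / len(values)        # share of trials inside the target interval
        speed = 1.0 / mean(times)            # trials per second
        report[name] = {
            "bias": mean(values) - target,   # systematic offset from the target
            "sd": stdev(values),             # spread (precision)
            "accuracy": accuracy,
            "performance": speed * accuracy, # 'Performance' as speed x accuracy
        }
    return report


report = three_way(
    target=20.0,
    tolerance=1.0,
    methods={
        "conventional": ([18.5, 19.5, 20.5, 21.5, 20.0], [60.0, 70.0, 65.0, 60.0, 70.0]),
        "navigated": ([21.1, 21.3, 21.2, 21.4, 21.2], [50.0] * 5),
    },
)
for name, row in report.items():
    print(name, {k: round(v, 4) for k, v in row.items()})
```

A two-way comparison of spread and task time alone would favor the navigated procedure here; including the target interval reveals that it systematically misses the target.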

We now relate the concepts of uncertainty and accuracy to the three elements of the analysis: verification, evaluation, validation.

Verification: The ideal and target values, and their associated uncertainty, are directly relevant for verification, since they are the values to which the experimental results will be compared to determine the pass/fail criteria. Verification must take into account the uncertainty of the target value. Note that verification does not involve comparison with other ways of performing the procedure, e.g., the conventional one. Thus, when verifying the AR system described above (Figure 2), there is no need to collect and analyze results from the conventional procedure.

Evaluation: Since it measures how well a system can be used to fulfil a task, evaluation necessarily involves the three-way comparison with the conventional method. It must take into account both the target uncertainty and the accuracy of the conventional and the navigated procedures. Thus, when evaluating the AR/VR system for spine surgery described above (Figure 2), there is a need to collect and analyze results from both the conventional and the navigated procedures.

Validation: Since validation calls into play considerations of the internal vs. external validity of the experiments, the sources of cognitive bias that may corrupt these measures, and other user-related issues, e.g., surgical skills and anatomical knowledge, it mostly involves the new system and, to a lesser extent, the conventional one. Thus, a two-way comparison with the target, together with additional considerations, is in order. The uncertainty and accuracy are relevant and part of the validation, and come together with other criteria. Only when these criteria are stated with respect to the conventional procedure is the three-way comparison in order. For example, if an improvement of surgical skills or a reduction in operating time is claimed, then these measures must be determined for both the navigated and the conventional procedures.