Analysis of Real-Time Face-Verification Methods for Surveillance Applications

Abstract

1. Introduction

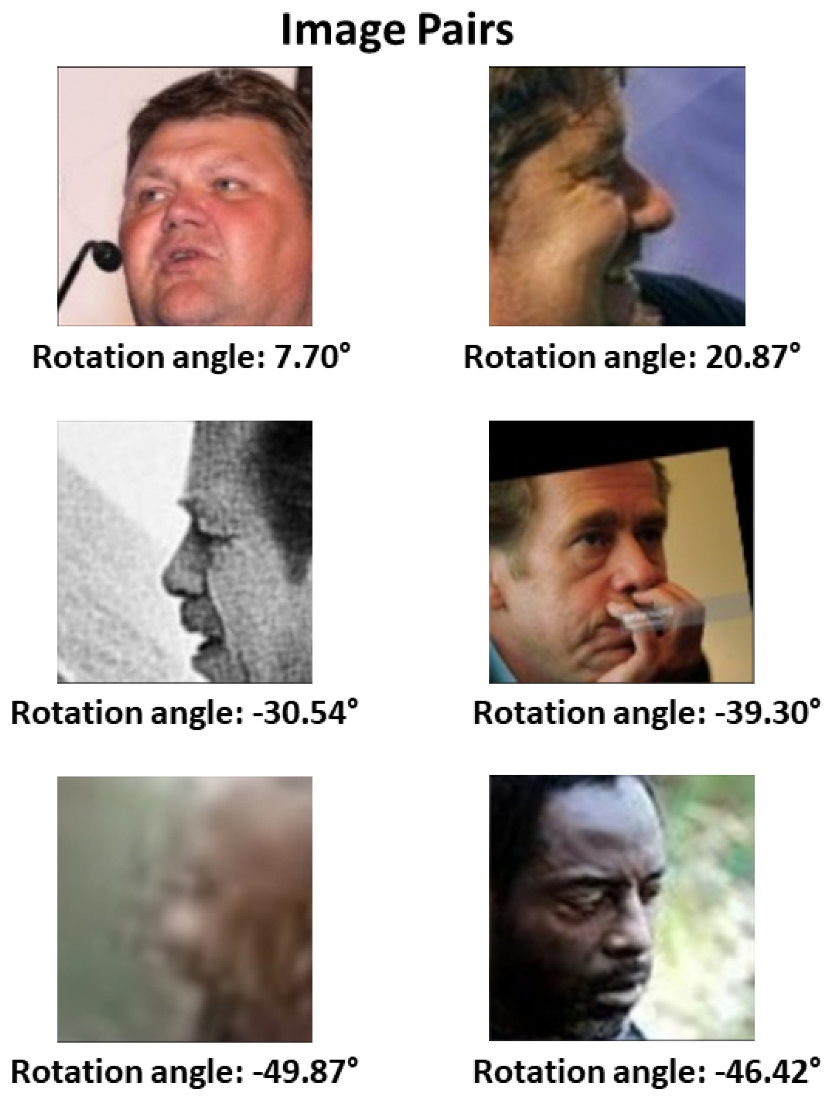

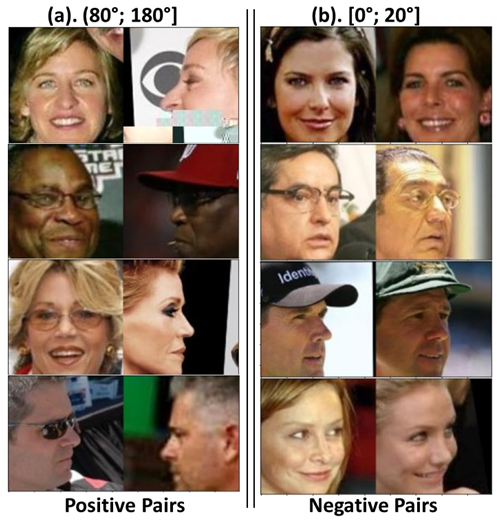

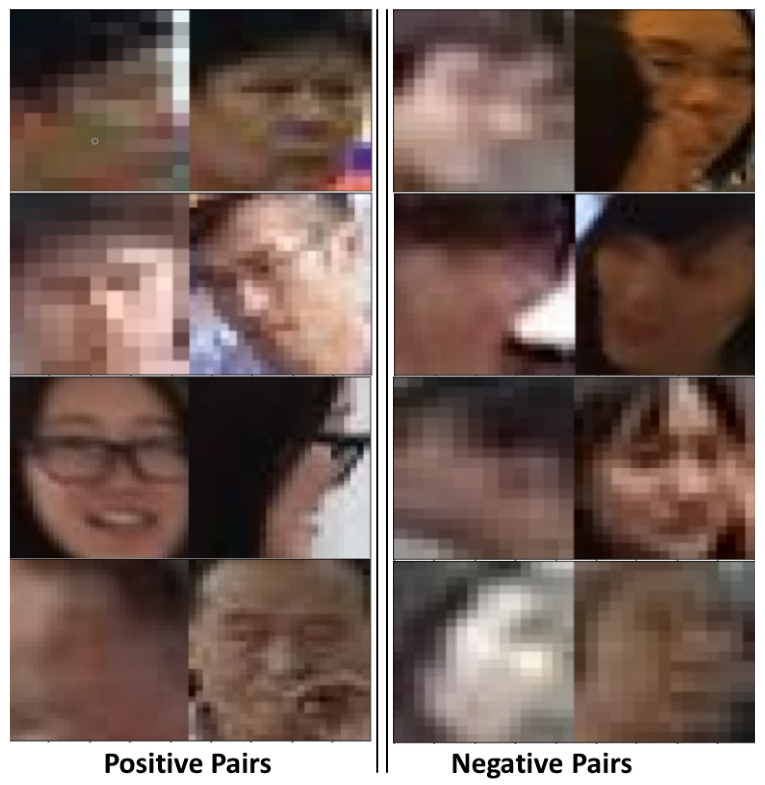

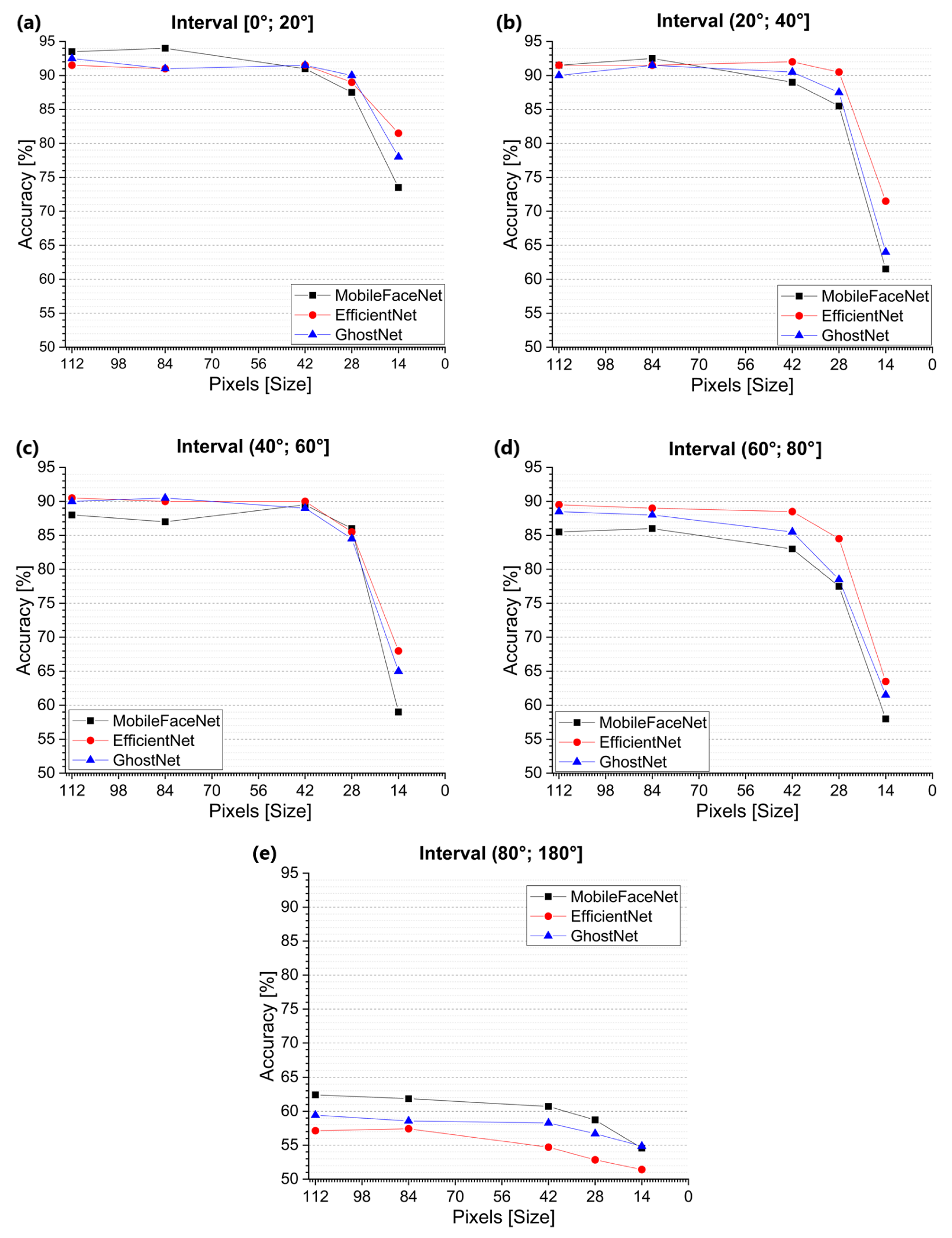

- A methodical analysis was conducted of how facial rotation and low resolution affect the face-verification performance of the three aforementioned architectures.

2. Related Work

2.1. Datasets

2.1.1. Training Datasets

2.1.2. Evaluation Datasets

2.2. Face-Recognition Methods

3. Face Recognition in Real-Time

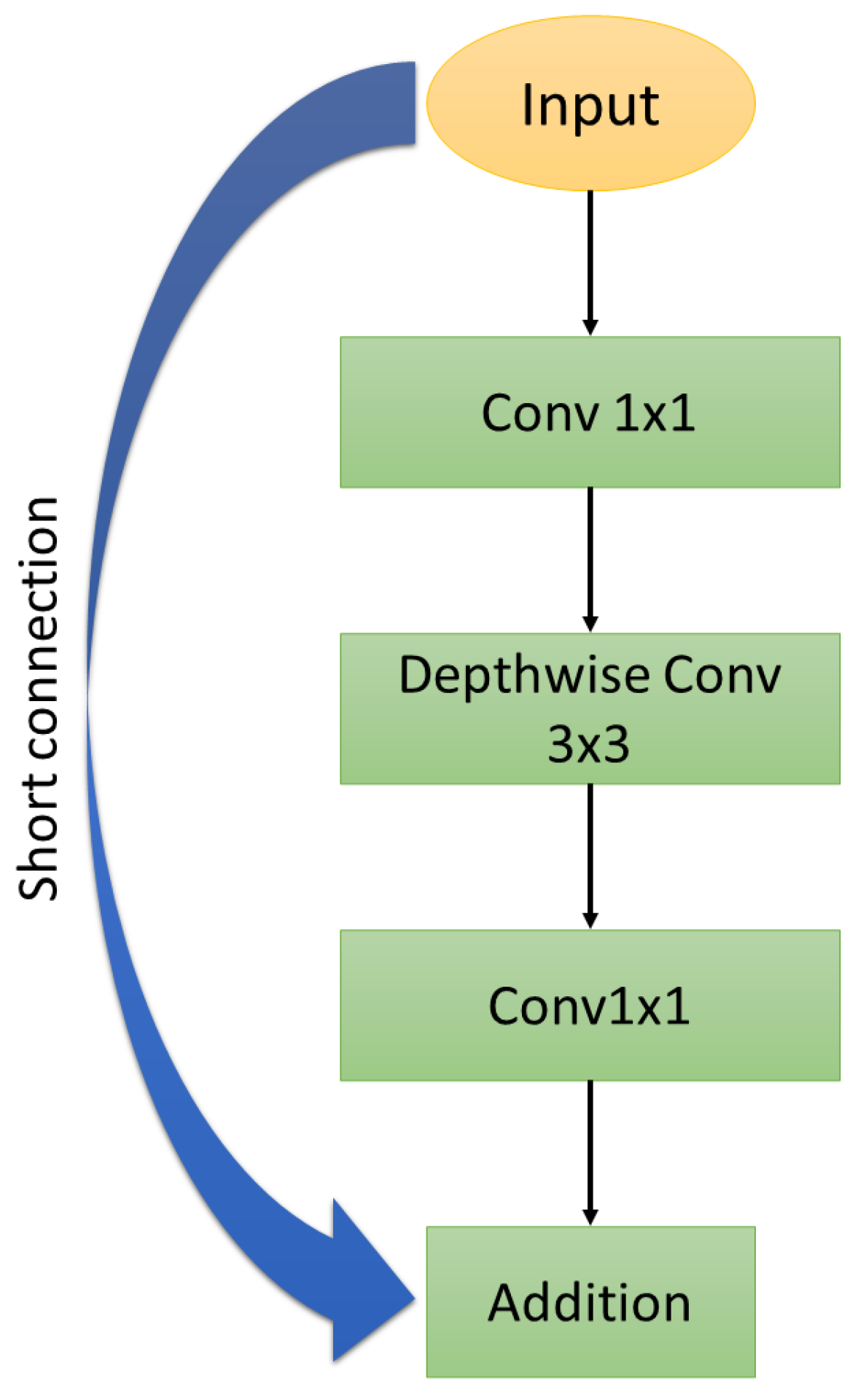

3.1. MobileFaceNet

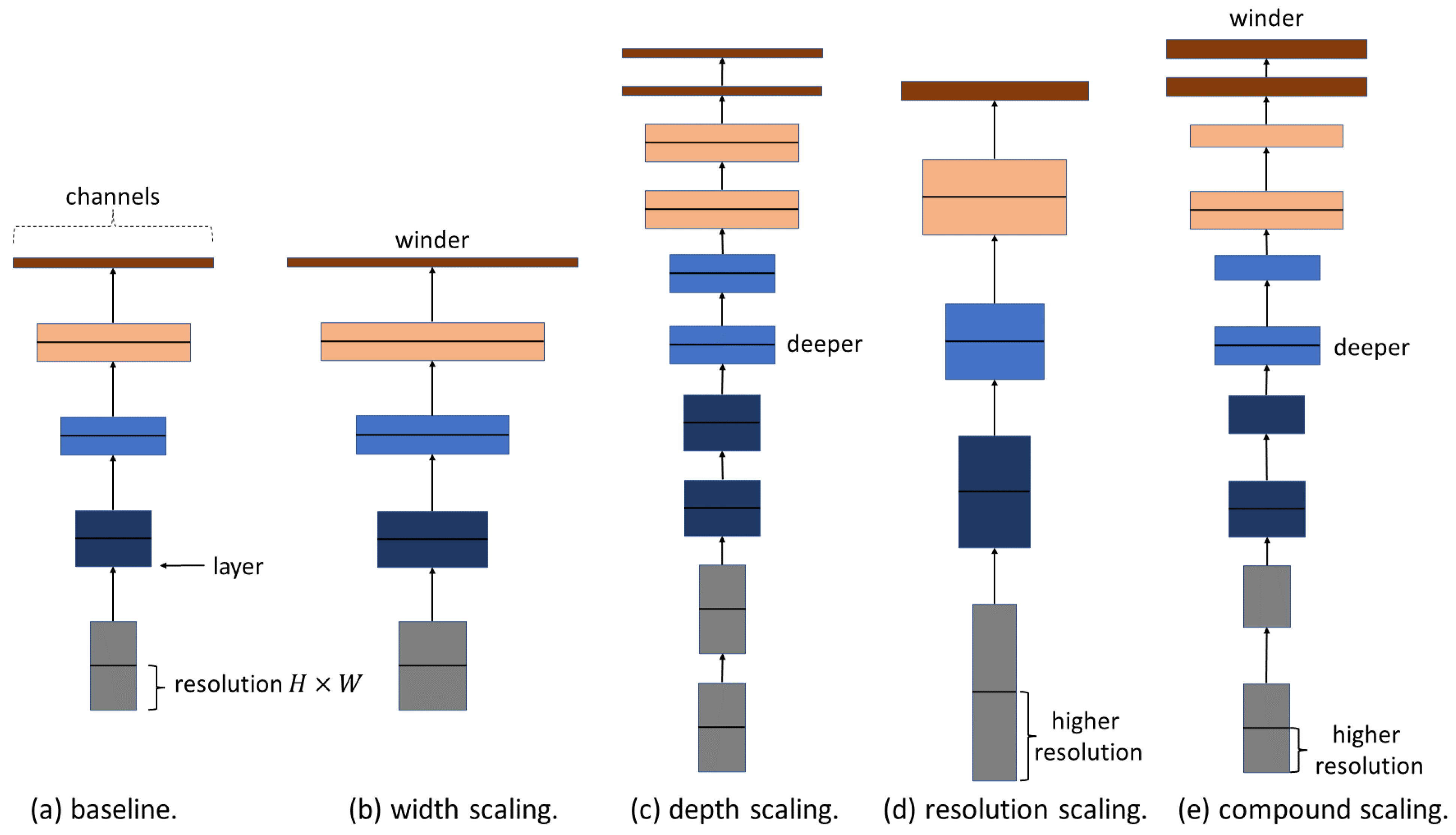

3.2. EfficientNet

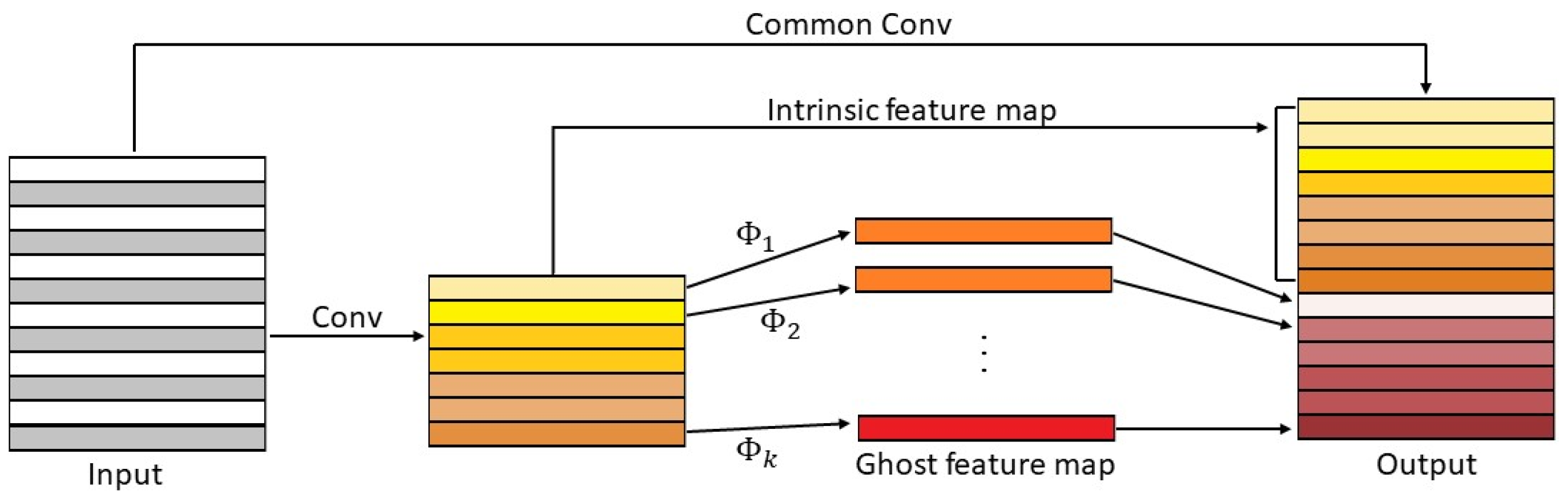

3.3. GhostNet

4. Experiment Setup

4.1. Implementation Details

4.2. Datasets

5. Experimental Results

5.1. Evaluation with Conventional Datasets

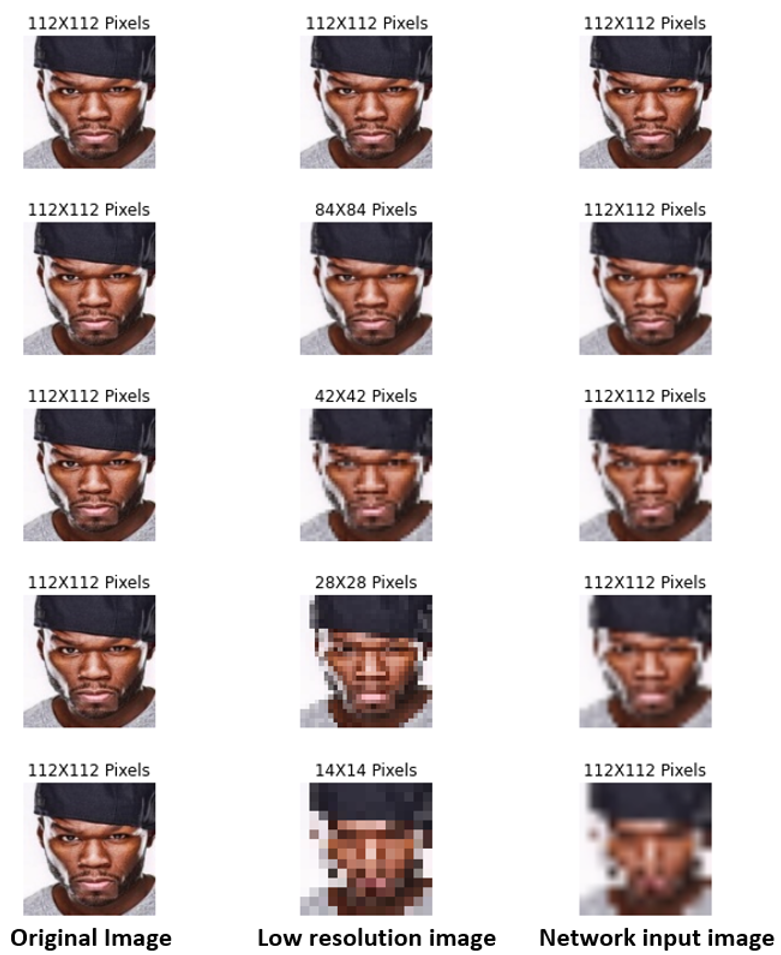

5.2. Evaluation with the Proposed Evaluation Subset

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input | Operator | t (expansion factor) | c (output channels) | n (repeats) | s (stride) |
|---|---|---|---|---|---|
| 112² × 3 | Conv3×3 | - | 64 | 1 | 2 |
| 56² × 64 | Depthwise Conv3×3 | - | 64 | 1 | 1 |
| 56² × 64 | Bottleneck | 2 | 64 | 5 | 2 |
| 28² × 64 | Bottleneck | 4 | 128 | 1 | 2 |
| 14² × 128 | Bottleneck | 2 | 128 | 6 | 1 |
| 14² × 128 | Bottleneck | 4 | 128 | 1 | 2 |
| 7² × 128 | Bottleneck | 2 | 128 | 2 | 1 |
| 7² × 128 | Conv1×1 | - | 512 | 1 | 1 |
| 7² × 512 | Linear GDConv7×7 | - | 512 | 1 | 1 |
| 1² × 512 | Linear Conv1×1 | - | 128 | 1 | 1 |
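The MobileFaceNet table follows the MobileNetV2 convention, in which each row is repeated n times and only the first repeat applies stride s. The minimal Python sketch below traces the feature-map side length through the listed stages, assuming square inputs and "same" padding so that a stride-s layer divides the side by s; it confirms that the linear GDConv7×7 receives a 7 × 7 map. The `STAGES` list is a transcription of the table and `trace_resolution` is a hypothetical helper, not the authors' code.

```python
# Transcription of the MobileFaceNet stages in the table above:
# (operator, t, c, n, s); t is None where the table shows "-".
STAGES = [
    ("Conv3x3", None, 64, 1, 2),
    ("Depthwise Conv3x3", None, 64, 1, 1),
    ("Bottleneck", 2, 64, 5, 2),
    ("Bottleneck", 4, 128, 1, 2),
    ("Bottleneck", 2, 128, 6, 1),
    ("Bottleneck", 4, 128, 1, 2),
    ("Bottleneck", 2, 128, 2, 1),
    ("Conv1x1", None, 512, 1, 1),
]


def trace_resolution(side: int, stages) -> list[tuple[str, int, int]]:
    """Return (operator, output side, output channels) after each stage.

    Assumes 'same' padding: the first of the n repeats applies stride s,
    the remaining n - 1 repeats use stride 1 and keep the side length.
    """
    trace = []
    for op, _t, c, _n, s in stages:
        side = side // s  # only the first block of the stage is strided
        trace.append((op, side, c))
    return trace


if __name__ == "__main__":
    for op, side, c in trace_resolution(112, STAGES):
        print(f"{op:<18} -> {side}x{side}x{c}")
```

Each printed output size matches the Input column of the following row, ending at 7 × 7 × 512 before the global depthwise convolution.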

| Stage | Operator | Resolution | Stride | # of Channels | Layers |
|---|---|---|---|---|---|
| 1 | Conv3×3 | 224 × 224 | 2 | 32 | 1 |
| 2 | MBConv1, k3×3 | 112 × 112 | 1 | 16 | 1 |
| 3 | MBConv6, k3×3 | 112 × 112 | 2 | 24 | 2 |
| 4 | MBConv6, k5×5 | 56 × 56 | 2 | 40 | 2 |
| 5 | MBConv6, k3×3 | 28 × 28 | 2 | 80 | 3 |
| 6 | MBConv6, k5×5 | 14 × 14 | 1 | 112 | 3 |
| 7 | MBConv6, k5×5 | 14 × 14 | 2 | 192 | 4 |
| 8 | MBConv6, k3×3 | 7 × 7 | 1 | 320 | 1 |
| 9 | Conv1×1 and Pooling and FC | 7 × 7 | 1 | 1280 | 1 |
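In the EfficientNet-B0 table, Resolution is the input size of each stage and Stride applies to the first layer of the stage, so a stage's output side is its input side divided by its stride; MBConv1 and MBConv6 denote inverted-residual blocks with expansion ratios 1 and 6. The short Python check below merely transcribes the table (the `STAGES` list and both helper names are illustrative, not from the authors' code), verifies that consecutive rows chain correctly, and counts the MBConv blocks.

```python
# (stage, operator, input side, stride, channels, layers), transcribed
# from the EfficientNet-B0 table above.
STAGES = [
    (1, "Conv3x3", 224, 2, 32, 1),
    (2, "MBConv1, k3x3", 112, 1, 16, 1),
    (3, "MBConv6, k3x3", 112, 2, 24, 2),
    (4, "MBConv6, k5x5", 56, 2, 40, 2),
    (5, "MBConv6, k3x3", 28, 2, 80, 3),
    (6, "MBConv6, k5x5", 14, 1, 112, 3),
    (7, "MBConv6, k5x5", 14, 2, 192, 4),
    (8, "MBConv6, k3x3", 7, 1, 320, 1),
    (9, "Conv1x1 and Pooling and FC", 7, 1, 1280, 1),
]


def check_resolutions(stages) -> bool:
    """True if every stage's output side (input // stride) equals the next stage's input."""
    for current, nxt in zip(stages, stages[1:]):
        if current[2] // current[3] != nxt[2]:
            return False
    return True


def count_mbconv_blocks(stages) -> int:
    """Total number of MBConv blocks summed over the MBConv stages."""
    return sum(stage[5] for stage in stages if stage[1].startswith("MBConv"))


if __name__ == "__main__":
    print("rows chain correctly:", check_resolutions(STAGES))
    print("MBConv blocks:", count_mbconv_blocks(STAGES))
```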

| Input | Operator | t (expansion size) | c (output channels) | SE (1 = used) | Stride |
|---|---|---|---|---|---|
| 224² × 3 | Conv2d3×3 | - | 16 | - | 2 |
| 112² × 16 | G-bneck | 16 | 16 | - | 1 |
| 112² × 16 | G-bneck | 48 | 24 | - | 2 |
| 56² × 24 | G-bneck | 72 | 24 | - | 1 |
| 56² × 24 | G-bneck | 72 | 40 | 1 | 2 |
| 28² × 40 | G-bneck | 120 | 40 | 1 | 1 |
| 28² × 40 | G-bneck | 240 | 80 | - | 2 |
| 14² × 80 | G-bneck | 200 | 80 | - | 1 |
| 14² × 80 | G-bneck | 184 | 80 | - | 1 |
| 14² × 80 | G-bneck | 184 | 80 | - | 1 |
| 14² × 80 | G-bneck | 480 | 112 | 1 | 1 |
| 14² × 112 | G-bneck | 672 | 112 | 1 | 1 |
| 14² × 112 | G-bneck | 672 | 160 | 1 | 2 |
| 7² × 160 | G-bneck | 960 | 160 | - | 1 |
| 7² × 160 | G-bneck | 960 | 160 | 1 | 1 |
| 7² × 160 | G-bneck | 960 | 160 | - | 1 |
| 7² × 160 | G-bneck | 960 | 160 | 1 | 1 |
| 7² × 160 | Conv2d1×1 | - | 960 | - | 1 |
| 7² × 960 | AvgPool7×7 | - | - | - | - |
| 1² × 960 | Conv2d1×1 | - | 1280 | - | 1 |
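Each G-bneck in the table is built from Ghost modules, which generate only a fraction 1/s of the output maps with an ordinary convolution and derive the remaining maps with cheap depthwise operations. The sketch below counts multiply-accumulates for an ordinary k × k convolution and for a Ghost module, following the cost analysis of the GhostNet paper; the settings k = 1, d = 3, s = 2 in the example are assumptions about the Ghost-module configuration, and all function names are hypothetical.

```python
def conv_macs(c_in: int, n_out: int, h: int, w: int, k: int) -> int:
    """Multiply-accumulates of an ordinary k x k convolution giving n_out maps of size h x w."""
    return n_out * h * w * c_in * k * k


def ghost_macs(c_in: int, n_out: int, h: int, w: int, k: int, d: int, s: int) -> int:
    """Multiply-accumulates of a Ghost module (identity branch ignored).

    n_out // s 'intrinsic' maps come from an ordinary k x k convolution; each is
    expanded by (s - 1) cheap d x d depthwise operations into the other maps.
    """
    intrinsic = n_out // s
    primary = conv_macs(c_in, intrinsic, h, w, k)
    cheap = (s - 1) * intrinsic * h * w * d * d
    return primary + cheap


def speedup(c_in: int, n_out: int, h: int, w: int, k: int, d: int, s: int) -> float:
    """Ratio of ordinary-convolution cost to Ghost-module cost for the same output shape."""
    return conv_macs(c_in, n_out, h, w, k) / ghost_macs(c_in, n_out, h, w, k, d, s)


if __name__ == "__main__":
    # Expansion layer of a 7^2 x 160 G-bneck with expansion size 960 (from the table).
    print(f"speed-up: {speedup(160, 960, 7, 7, 1, 3, 2):.3f}")
```

For this layer the ratio equals c·k²·s / (c·k² + (s − 1)·d²) = 320/169 ≈ 1.89, close to the ideal factor s = 2; the approximation improves as the input channel count c grows.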

| Intervals | # of CPLFW Images | # of CFPW Images | Total # of Images | # of Positive Pairs | # of Negative Pairs | Total # of Pairs |
|---|---|---|---|---|---|---|
|  | 326 | 74 | 400 | 100 | 100 | 200 |
|  | 400 | - | 400 | 100 | 100 | 200 |
|  | 400 | - | 400 | 100 | 100 | 200 |
|  | 400 | - | 400 | 100 | 100 | 200 |
|  | - | 1400 | 1400 | 350 | 350 | 700 |
| Total | 1526 | 1474 | 3000 | 750 | 750 | 1500 |
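The counts in the evaluation-subset table obey a few bookkeeping identities: each row's images come from CPLFW and CFPW, the pairs are balanced between positive and negative, and the image totals are exactly twice the pair totals, which suggests (an assumption, not stated in the table) that every pair uses two images. A short Python check with illustrative names; the interval labels are omitted because they are not recoverable from the table, and "-" is encoded as 0.

```python
# Rows of the evaluation-subset table: (CPLFW images, CFPW images, total images,
# positive pairs, negative pairs, total pairs). "-" is encoded as 0.
ROWS = [
    (326, 74, 400, 100, 100, 200),
    (400, 0, 400, 100, 100, 200),
    (400, 0, 400, 100, 100, 200),
    (400, 0, 400, 100, 100, 200),
    (0, 1400, 1400, 350, 350, 700),
]
TOTAL = (1526, 1474, 3000, 750, 750, 1500)


def row_is_consistent(row) -> bool:
    """Check the per-row identities suggested by the table."""
    cplfw, cfpw, images, pos, neg, pairs = row
    return (
        cplfw + cfpw == images      # images come from the two source datasets
        and pos + neg == pairs      # every pair is either genuine or impostor
        and pos == neg              # classes are balanced
        and images == 2 * pairs     # assumption: each pair uses two images
    )


def column_totals(rows) -> tuple:
    """Sum each column over all rows."""
    return tuple(sum(col) for col in zip(*rows))


if __name__ == "__main__":
    print(all(row_is_consistent(r) for r in ROWS), column_totals(ROWS) == TOTAL)
```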

| Model | Accuracy (%) |
|---|---|
| MobileFaceNet | 83.08 |
| EfficientNet-B0 | 85.16 |
| GhostNet | 83.51 |
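Verification accuracies such as those in the table above are commonly obtained by comparing the embeddings of the two faces in each pair with cosine similarity and declaring the pair genuine when the score reaches a threshold. The minimal sketch below illustrates that generic procedure; it is an assumption rather than the authors' evaluation code, the function names are hypothetical, and the threshold-selection rule shown (best threshold on the same pairs) is simpler than LFW-style protocols, which choose the threshold on held-out folds.

```python
import math


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def verification_accuracy(scores, labels, threshold) -> float:
    """Percentage of pairs whose decision (score >= threshold) matches the label.

    labels[i] is True when pair i shows the same identity.
    """
    correct = sum((s >= threshold) == same for s, same in zip(scores, labels))
    return 100.0 * correct / len(labels)


def best_threshold(scores, labels):
    """Return (threshold, accuracy) maximizing accuracy over the observed scores.

    Optimistic: the threshold is tuned on the very pairs being scored.
    """
    candidates = ((t, verification_accuracy(scores, labels, t)) for t in sorted(set(scores)))
    return max(candidates, key=lambda item: item[1])


if __name__ == "__main__":
    scores = [1.0, 0.8, 0.0, 0.6]
    labels = [True, True, False, False]
    print(best_threshold(scores, labels))
```

With the toy scores above, the best threshold is 0.8, which separates the two genuine pairs from the two impostor pairs.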

| Intervals | # of Pairs | MobileFaceNet (%) | EfficientNet-B0 (%) | GhostNet (%) |
|---|---|---|---|---|
|  | 1237 | 86.41 | 87.55 | 86.82 |
|  | 1127 | 88.55 | 89.17 | 87.57 |
|  | 1258 | 84.18 | 87.28 | 85.77 |
|  | 1017 | 80.13 | 83.77 | 82.10 |
|  | 1225 | 78.93 | 81.06 | 78.53 |

| Model | Accuracy (%) |
|---|---|
| MobileFaceNet | 63.78 |
| EfficientNet-B0 | 63.82 |
| GhostNet | 62.58 |

| Model | Accuracy (%) |
|---|---|
| MobileFaceNet | 76.93 |
| EfficientNet-B0 | 75.06 |
| GhostNet | 75.86 |

| Intervals | # of Pairs | MobileFaceNet (%) | EfficientNet-B0 (%) | GhostNet (%) |
|---|---|---|---|---|
|  | 200 | 93.50 | 91.50 | 82.50 |
|  | 200 | 91.50 | 91.50 | 90.00 |
|  | 200 | 88.00 | 90.50 | 90.00 |
|  | 200 | 85.00 | 89.50 | 88.50 |
|  | 700 | 62.42 | 57.14 | 59.42 |
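When accuracy is reported per interval, the accuracy over the union of intervals is the pair-weighted mean of the per-interval accuracies, provided every pair belongs to exactly one interval and the per-interval and overall figures come from the same per-pair decisions. Because the reported values are rounded to two decimals, such a reconstruction is only approximate. A minimal sketch with a hypothetical function name:

```python
def pooled_accuracy(pairs_per_interval, accuracy_per_interval) -> float:
    """Pair-weighted mean of per-interval accuracies (all in %).

    Equivalent to (total correct pairs) / (total pairs) * 100 when every pair
    falls in exactly one interval.
    """
    total_pairs = sum(pairs_per_interval)
    weighted = sum(n * acc for n, acc in zip(pairs_per_interval, accuracy_per_interval))
    return weighted / total_pairs


if __name__ == "__main__":
    # Toy example: two intervals of different sizes.
    print(pooled_accuracy([100, 300], [80.0, 60.0]))
```

Weighting by pair count matters whenever intervals differ in size: an unweighted mean of the toy values would give 70.0 instead of the pooled 65.0.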

| Model | 14-Pixel Accuracy (%) | 28-Pixel Accuracy (%) | 42-Pixel Accuracy (%) | 84-Pixel Accuracy (%) | 112-Pixel Accuracy (%) |
|---|---|---|---|---|---|
| MobileFaceNet | 59.06 | 72.26 | 75.33 | 76.80 | 76.93 |
| EfficientNet-B0 | 61.93 | 71.26 | 73.80 | 75.00 | 75.06 |
| GhostNet | 61.40 | 71.86 | 74.73 | 75.46 | 75.86 |
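The resolution table reports accuracy when faces are presented at 14 to 112 pixels. A common way to emulate such low-resolution probes, assumed here and not taken from the paper, is to downsample the face crop to the target size and upsample it back to the network input, which the 112-pixel column suggests is 112 × 112. The sketch below uses nearest-neighbour sampling on a list-of-lists grayscale image so that it stays dependency-free; a practical pipeline would more likely use bicubic or area interpolation from an image library. Function names are hypothetical.

```python
def resize_nearest(img, new_size):
    """Nearest-neighbour resize of a square grayscale image stored as a list of rows."""
    old = len(img)
    return [
        [img[r * old // new_size][c * old // new_size] for c in range(new_size)]
        for r in range(new_size)
    ]


def simulate_low_resolution(img, low_size):
    """Downsample to low_size x low_size, then upsample back to the original size.

    The output keeps the original shape but carries only low_size**2 distinct samples.
    """
    original = len(img)
    return resize_nearest(resize_nearest(img, low_size), original)


if __name__ == "__main__":
    face = [[r * 112 + c for c in range(112)] for r in range(112)]
    degraded = simulate_low_resolution(face, 14)
    print(len(degraded), len(degraded[0]), len({v for row in degraded for v in row}))
```

In the example, the 112 × 112 output contains only 14 × 14 = 196 distinct pixel values, each repeated in an 8 × 8 block.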

| Method | GPU (GTX 1060) | CPU (Intel Core i7) |
|---|---|---|
| MobileFaceNet | 27.0 ms (37.0 FPS) | 29.9 ms (33.4 FPS) |
| EfficientNet-B0 | 52.1 ms (19.2 FPS) | 55.6 ms (18.0 FPS) |
| GhostNet | 30.3 ms (32.9 FPS) | 33.5 ms (29.9 FPS) |
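The FPS figures in the timing table are the reciprocal of the per-frame latency, FPS = 1000 / latency in ms (for example, 27.0 ms corresponds to about 37.0 FPS). The sketch below shows that conversion together with a generic wall-clock measurement using warm-up calls; it is an illustration with hypothetical function names, not the authors' benchmarking code. When timing a GPU model, the device must be synchronized before each clock read (in PyTorch, `torch.cuda.synchronize()`), otherwise asynchronous kernel launches make the latency look shorter than it is.

```python
import statistics
import time


def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


def measure_latency_ms(fn, *args, warmup: int = 10, runs: int = 100) -> float:
    """Mean wall-clock latency of fn(*args) in milliseconds, measured after warm-up calls."""
    for _ in range(warmup):
        fn(*args)  # warm-up: fills caches and triggers any lazy initialization
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples)


if __name__ == "__main__":
    latency = measure_latency_ms(sorted, list(range(10_000)), warmup=2, runs=20)
    print(f"{latency:.3f} ms -> {fps_from_latency_ms(latency):.1f} FPS")
```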

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Perez-Montes, F.; Olivares-Mercado, J.; Sanchez-Perez, G.; Benitez-Garcia, G.; Prudente-Tixteco, L.; Lopez-Garcia, O. Analysis of Real-Time Face-Verification Methods for Surveillance Applications. J. Imaging 2023, 9, 21. https://doi.org/10.3390/jimaging9020021
