Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types

Abstract

1. Introduction

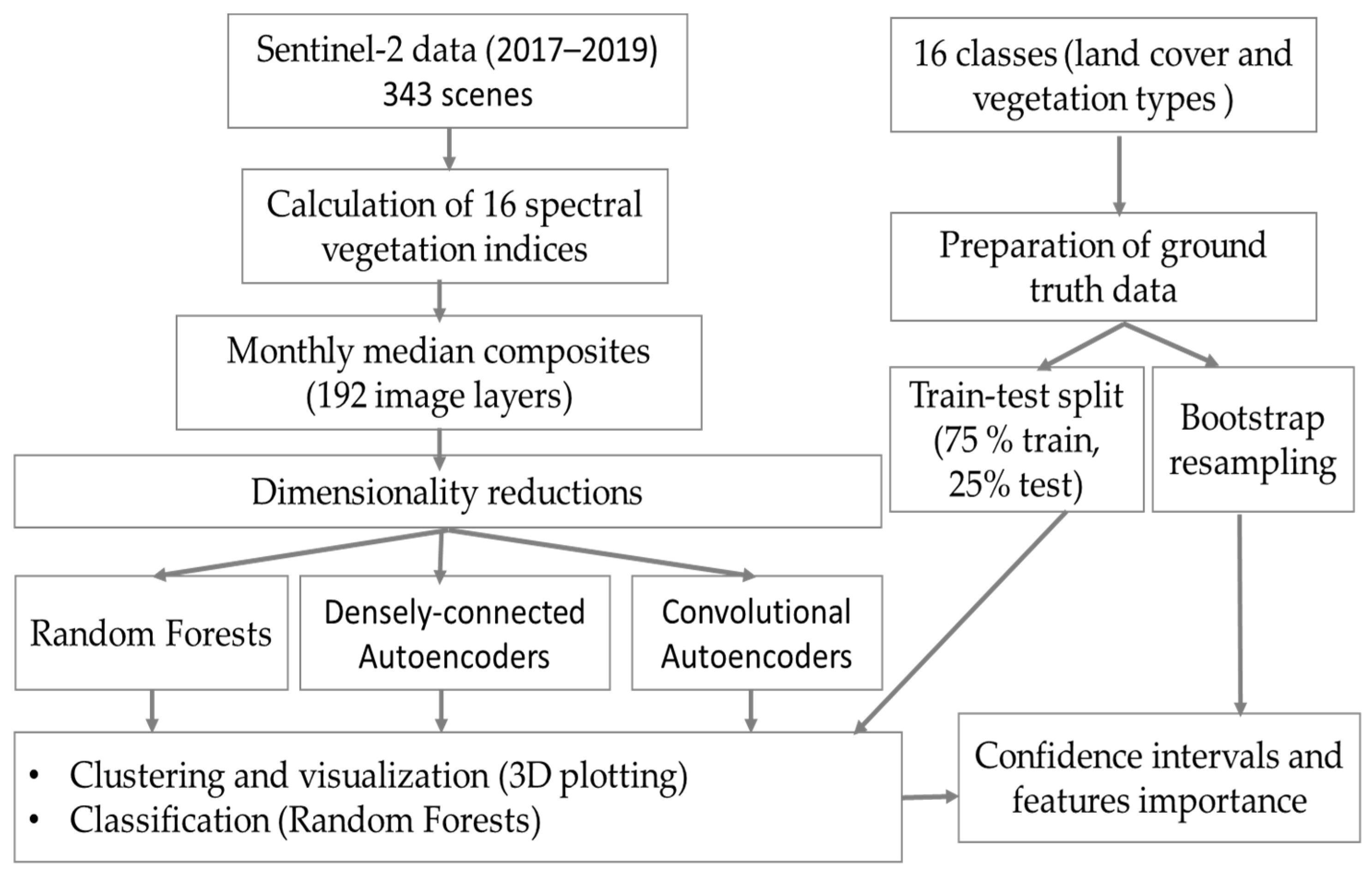

2. Materials and Methods

2.1. Study Area

2.2. Collection of Ground Truth Data

2.3. Processing of Satellite Data

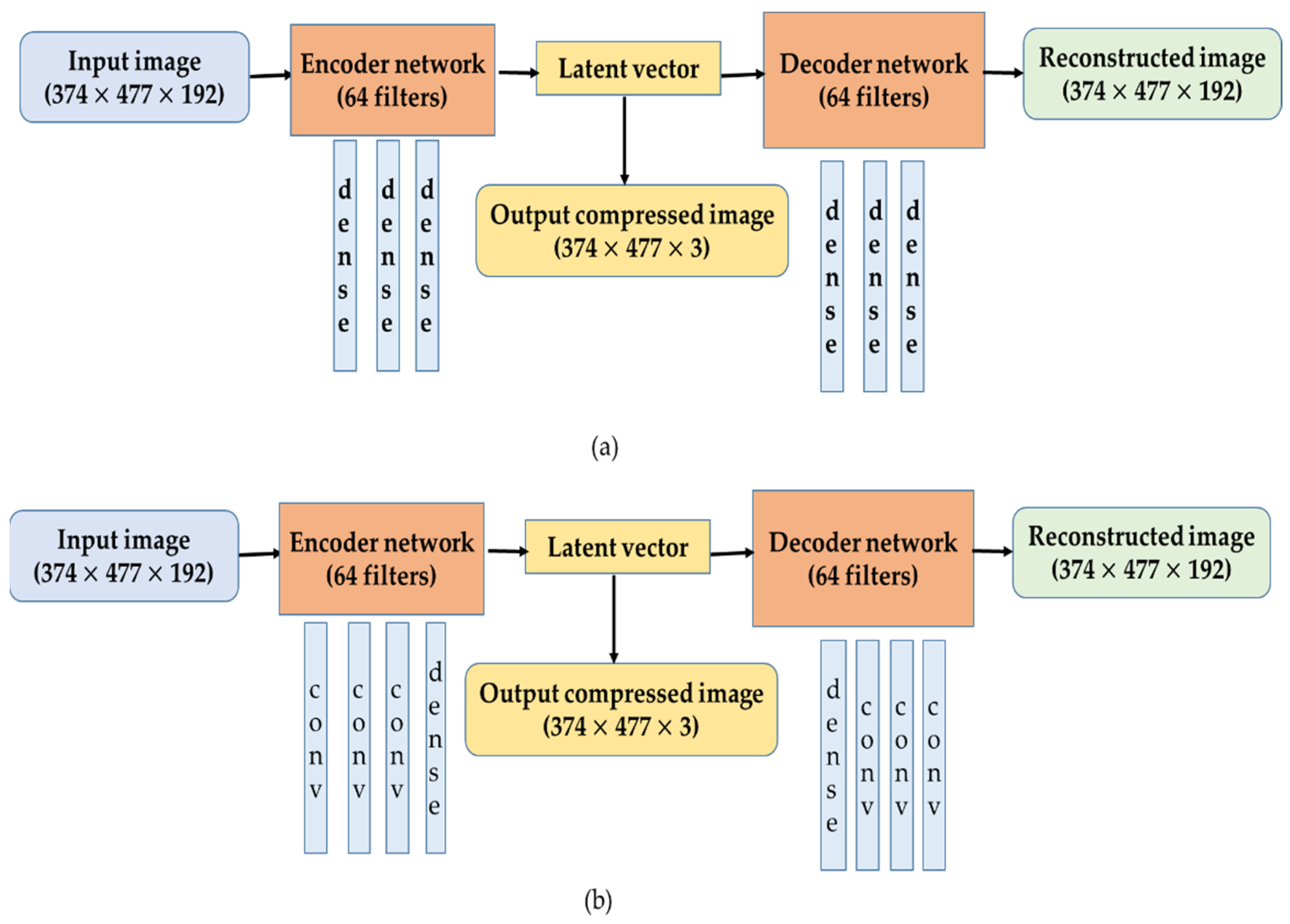

2.4. Dimensionality Reduction

2.5. Quantitative Evaluation

3. Results

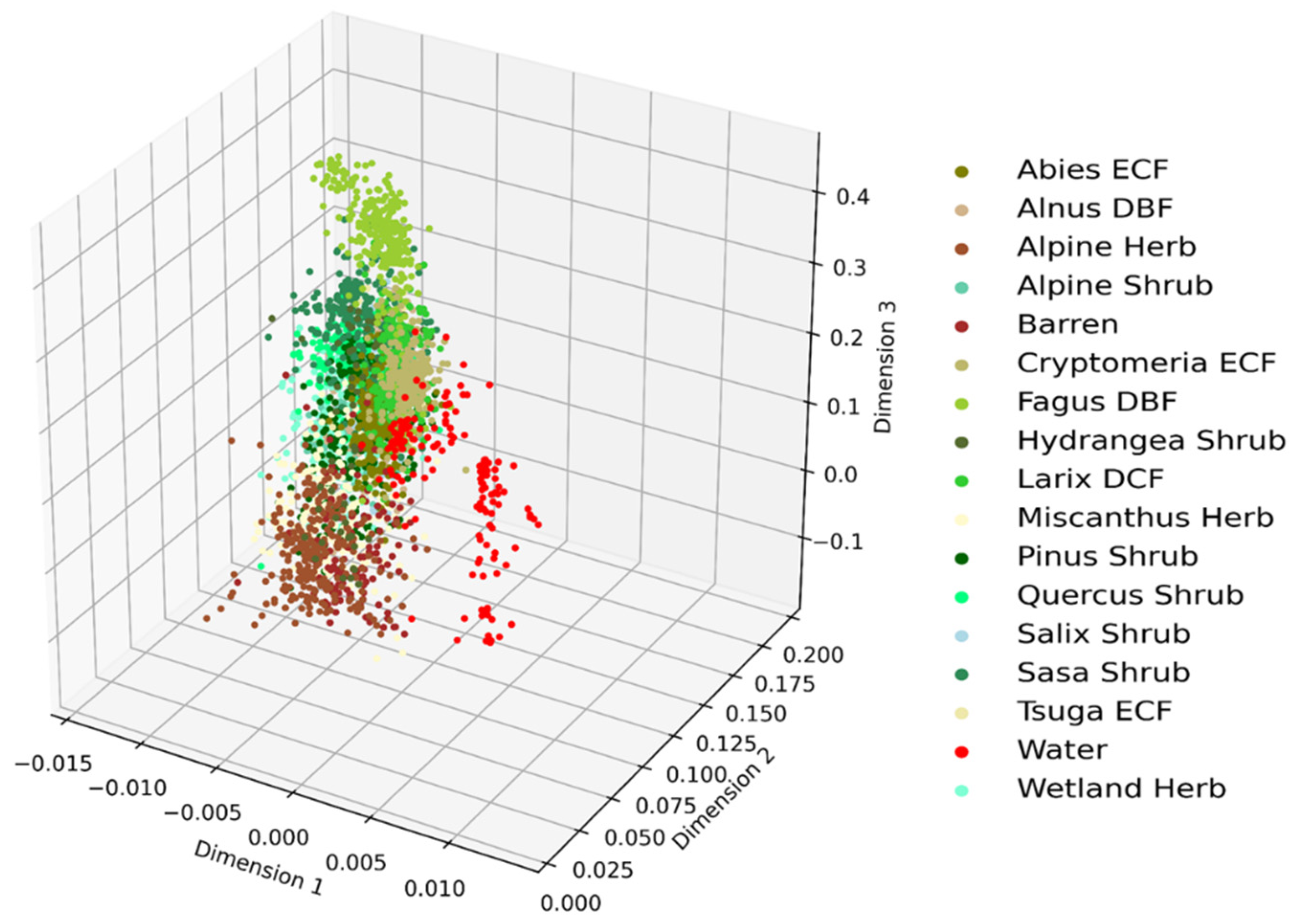

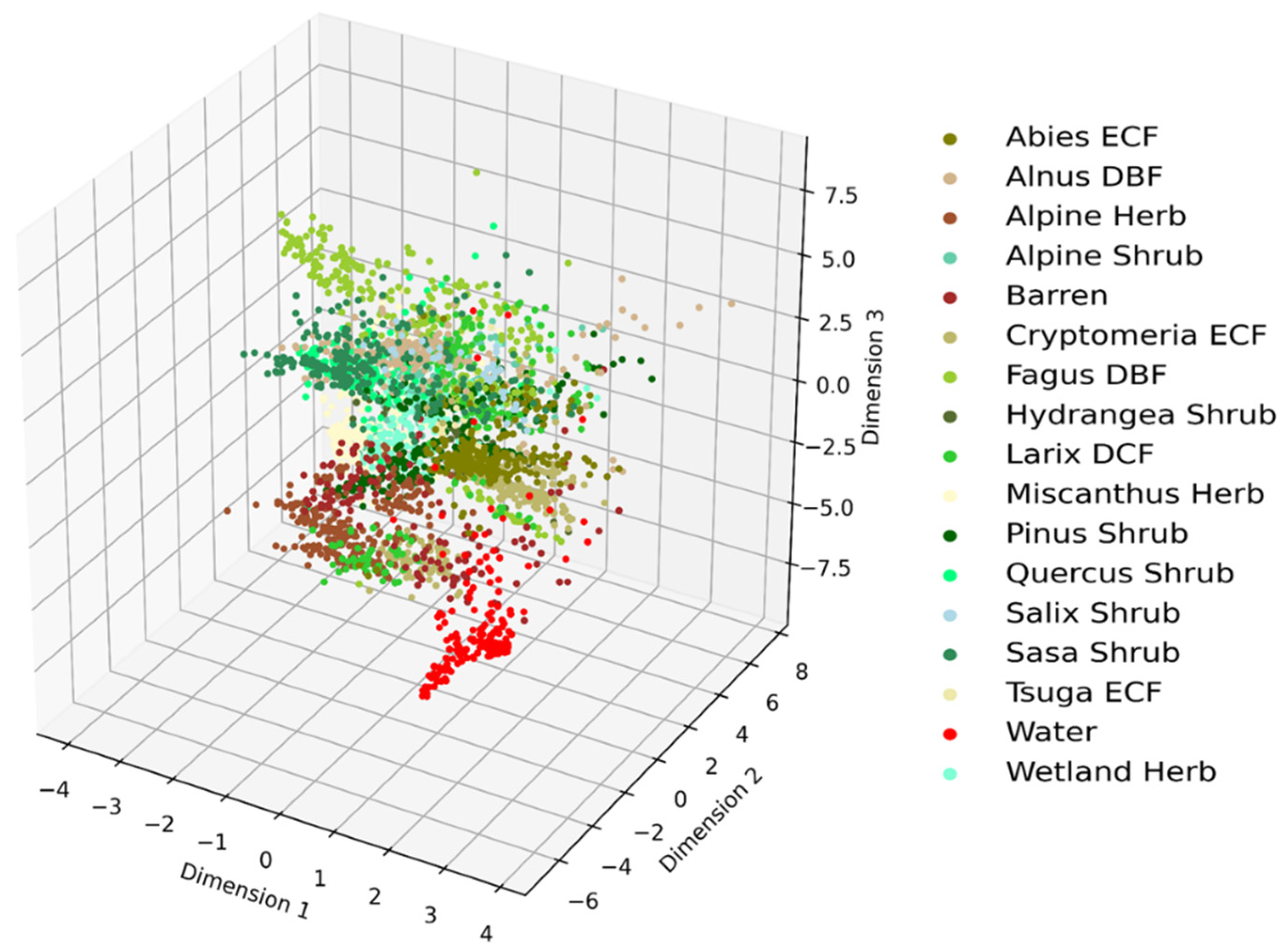

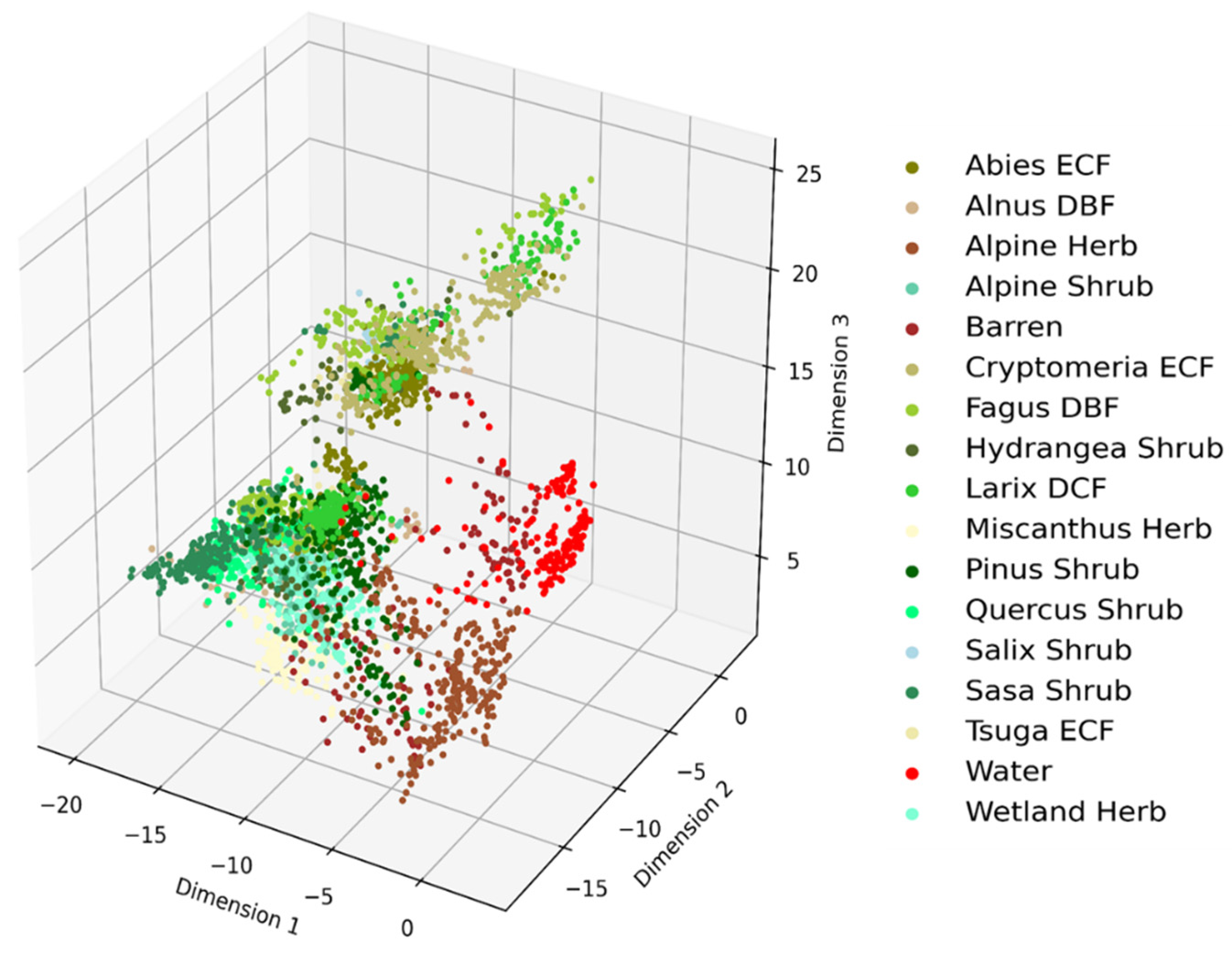

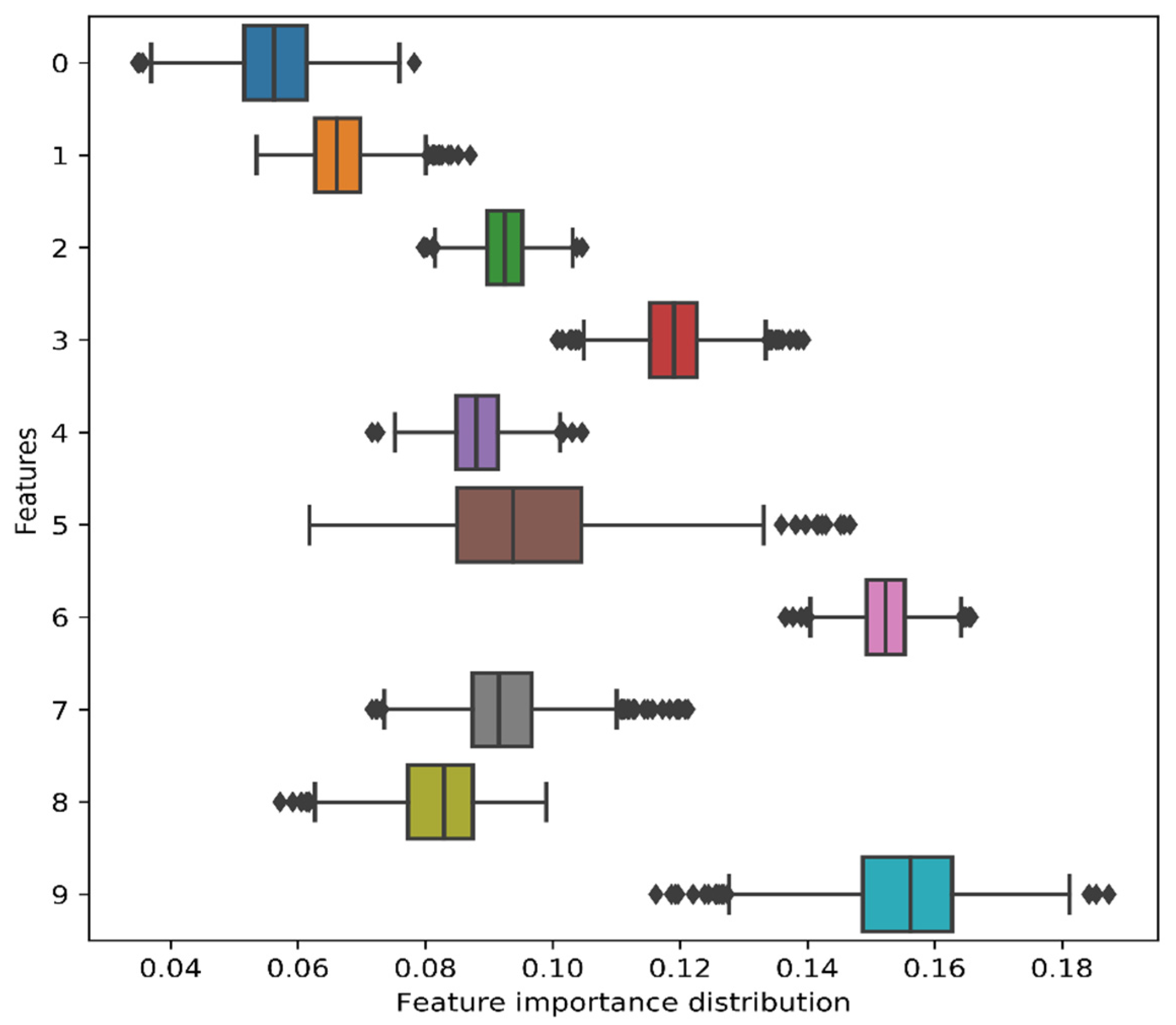

3.1. Clustering and Visualization

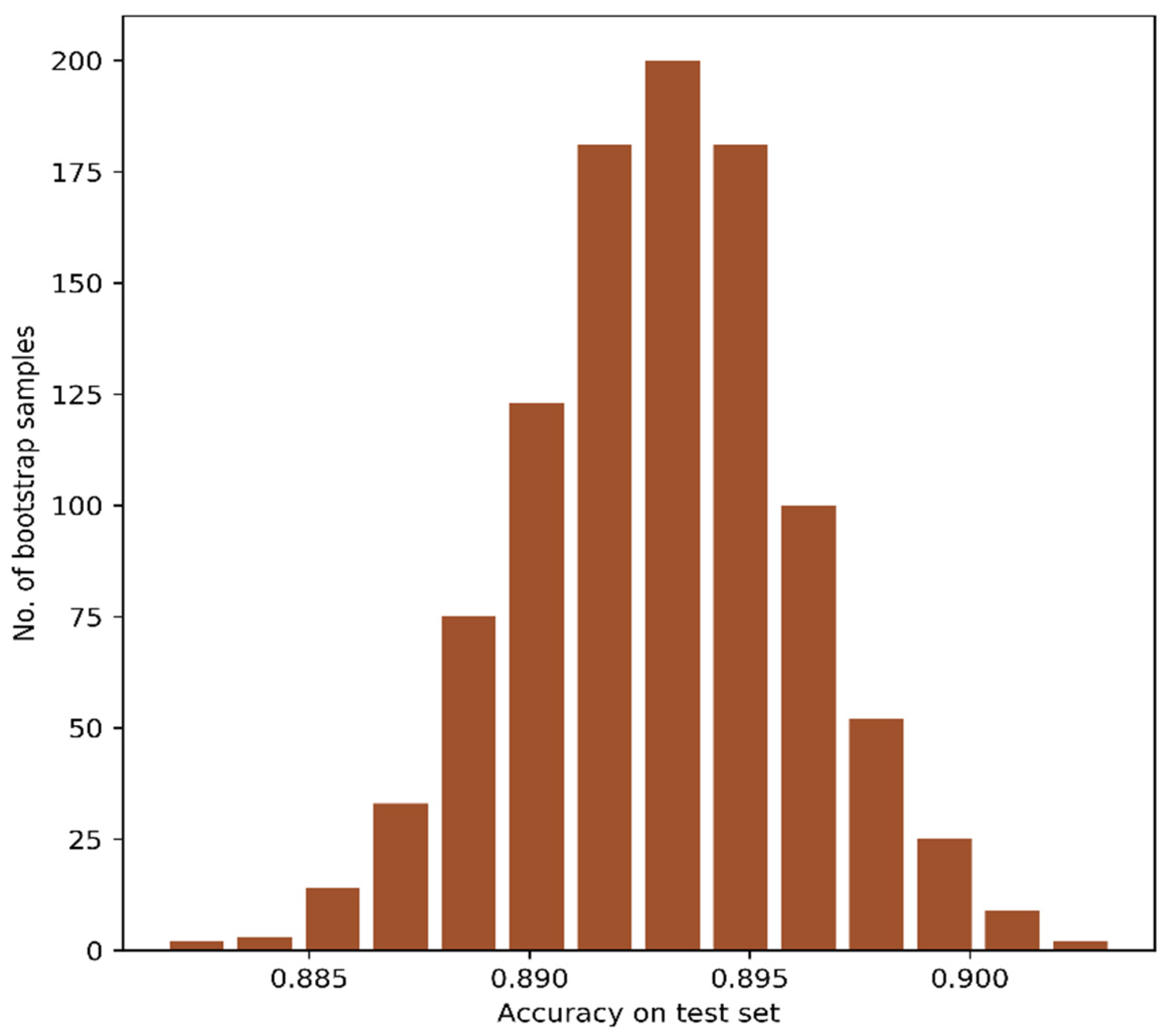

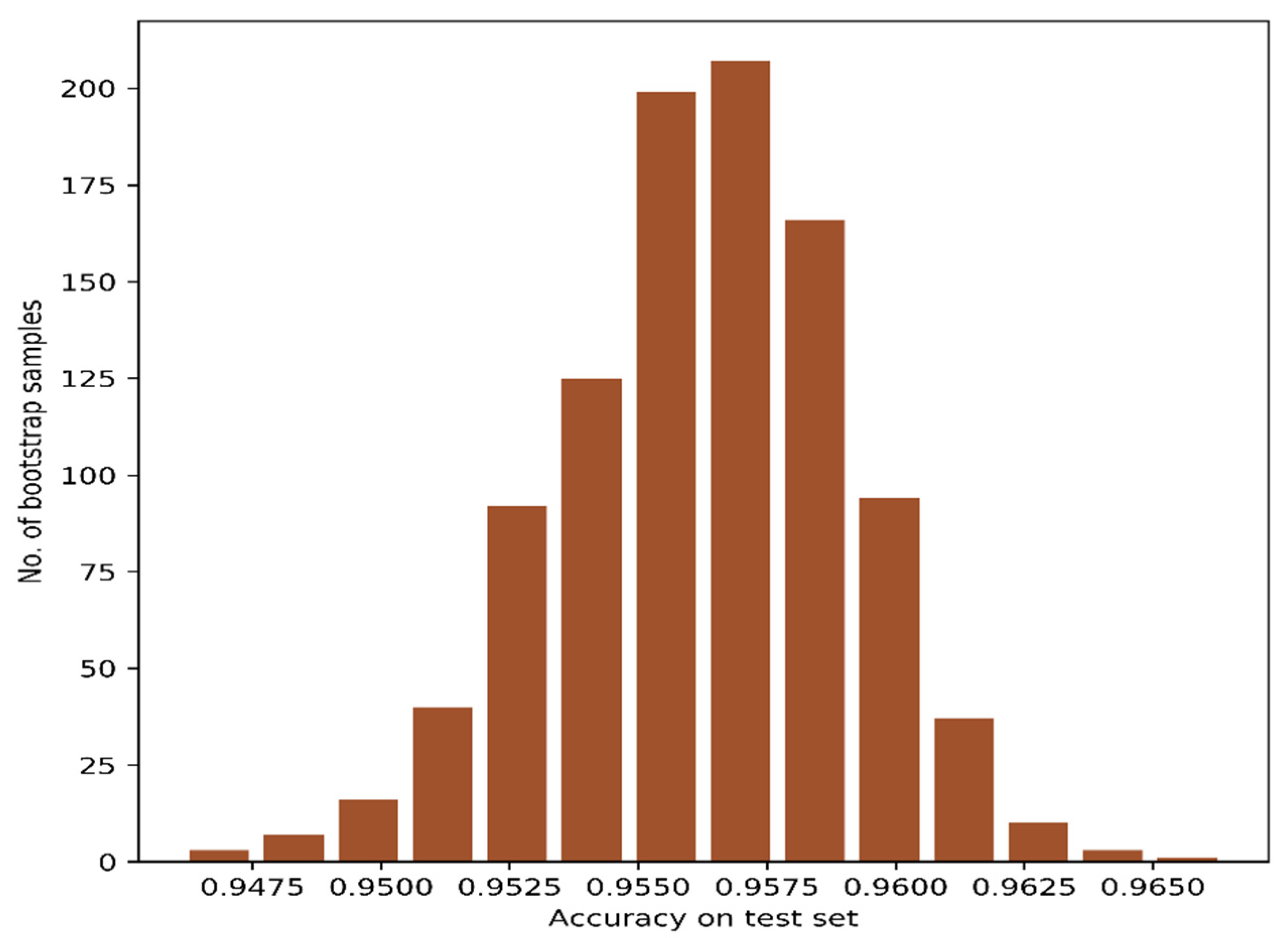

3.2. Confidence Intervals

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ustin, S.L.; Gamon, J.A. Remote sensing of plant functional types: Tansley review. New Phytol. 2010, 186, 795–816.

- Deepak, M.; Keski-Saari, S.; Fauch, L.; Granlund, L.; Oksanen, E.; Keinänen, M. Leaf canopy layers affect spectral reflectance in silver birch. Remote Sens. 2019, 11, 2884.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A.R. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 95–120.

- Teillet, P. Effects of spectral, spatial, and radiometric characteristics on remote sensing vegetation indices of forested regions. Remote Sens. Environ. 1997, 61, 139–149.

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1–17.

- Bach, F. Breaking the curse of dimensionality with convex neural networks. J. Mach. Learn. Res. 2017, 18, 629–681.

- Poggio, T.; Mhaskar, H.; Rosasco, L.; Miranda, B.; Liao, Q. Why and when can deep-but not shallow-networks avoid the curse of dimensionality: A review. Int. J. Autom. Comput. 2017, 14, 503–519.

- Yan, W.; Sun, Q.; Sun, H.; Li, Y.; Ren, Z. Multiple kernel dimensionality reduction based on linear regression virtual reconstruction for image set classification. Neurocomputing 2019, 361, 256–269.

- Reddy, G.T.; Reddy, M.P.K.; Lakshmanna, K.; Kaluri, R.; Rajput, D.S.; Srivastava, G.; Baker, T. Analysis of dimensionality reduction techniques on big data. IEEE Access 2020, 8, 54776–54788.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Biau, G. Analysis of a random forests model. J. Mach. Learn. Res. 2012, 13, 1063–1095.

- Archer, K.J.; Kimes, R.V. Empirical characterization of random forest variable importance measures. Comput. Stat. Data Anal. 2008, 52, 2249–2260.

- Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. Variable selection using random forests. Pattern Recognit. Lett. 2010, 31, 2225–2236.

- Behnamian, A.; Millard, K.; Banks, S.N.; White, L.; Richardson, M.; Pasher, J. A systematic approach for variable selection with random forests: Achieving stable variable importance values. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1988–1992.

- Poona, N.K.; Ismail, R. Reducing hyperspectral data dimensionality using random forest based wrappers. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium—IGARSS, Melbourne, VIC, Australia, 21–26 July 2013; pp. 1470–1473.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Lefkovits, L.; Lefkovits, S.; Emerich, S.; Vaida, M.F. Random Forest Feature Selection Approach for Image Segmentation; Verikas, A., Radeva, P., Nikolaev, D.P., Zhang, W., Zhou, J., Eds.; SPIE: Bellingham, WA, USA, 2017; p. 1034117.

- Gilbertson, J.K.; van Niekerk, A. Value of dimensionality reduction for crop differentiation with multi-temporal imagery and machine learning. Comput. Electron. Agric. 2017, 142, 50–58.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random forests for classification in ecology. Ecology 2007, 88, 2783–2792.

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Tipping, M.E.; Bishop, C.M. Probabilistic principal component analysis. J. R. Stat. Soc. Ser. 1999, 61, 611–622.

- Abdi, H.; Williams, L.J. Principal component analysis. WIREs Comp. Stat. 2010, 2, 433–459.

- van der Maaten, L.; Hinton, G. Visualizing high-dimensional data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Gisbrecht, A.; Schulz, A.; Hammer, B. Parametric nonlinear dimensionality reduction using kernel t-SNE. Neurocomputing 2015, 147, 71–82.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X. A modified stochastic neighbor embedding for multi-feature dimension reduction of remote sensing images. ISPRS J. Photogramm. Remote Sens. 2013, 83, 30–39.

- Oliveira, J.J.M.; Cordeiro, R.L.F. Unsupervised dimensionality reduction for very large datasets: Are we going to the right direction? Knowl. Based Syst. 2020, 196, 105777.

- Zhang, Z.; Verbeke, L.; De Clercq, E.; Ou, X.; De Wulf, R. Vegetation change detection using artificial neural networks with ancillary data in Xishuangbanna, Yunnan Province, China. Chin. Sci. Bull. 2007, 52, 232–243.

- Clark, J.Y.; Corney, D.P.A.; Tang, H.L. Automated plant identification using artificial neural networks. In Proceedings of the 2012 IEEE Symposium on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), San Diego, CA, USA, 9–12 May 2012; pp. 343–348.

- Pacifico, L.D.S.; Macario, V.; Oliveira, J.F.L. Plant classification using artificial neural networks. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–6.

- Hilbert, D. The utility of artificial neural networks for modelling the distribution of vegetation in past, present and future climates. Ecol. Model. 2001, 146, 311–327.

- Sharma, S.; Ochsner, T.E.; Twidwell, D.; Carlson, J.D.; Krueger, E.S.; Engle, D.M.; Fuhlendorf, S.D. Nondestructive estimation of standing crop and fuel moisture content in tallgrass prairie. Rangel. Ecol. Manag. 2018, 71, 356–362.

- Carpenter, G.A.; Gopal, S.; Macomber, S.; Martens, S.; Woodcock, C.E. A neural network method for mixture estimation for vegetation mapping. Remote Sens. Environ. 1999, 70, 138–152.

- Wang, Y.; Yao, H.; Zhao, S. Auto-Encoder based dimensionality reduction. Neurocomputing 2016, 184, 232–242.

- Mohbat; Mukhtar, T.; Khurshid, N.; Taj, M. Dimensionality reduction using discriminative autoencoders for remote sensing image retrieval. In Image Analysis and Processing—ICIAP 2019; Ricci, E., Rota Bulò, S., Snoek, C., Lanz, O., Messelodi, S., Sebe, N., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11751, pp. 499–508. ISBN 978-3-030-30641-0.

- Pinaya, W.H.L.; Vieira, S.; Garcia-Dias, R.; Mechelli, A. Autoencoders. In Machine Learning; Elsevier: Amsterdam, The Netherlands, 2020; pp. 193–208. ISBN 978-0-12-815739-8.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Al-Hmouz, R.; Pedrycz, W.; Balamash, A.; Morfeq, A. Logic-Driven autoencoders. Knowl. Based Syst. 2019, 183, 104874.

- Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

- Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70.

- Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Adv. Space Res. 1998, 22, 689–692.

- Falkowski, M.J.; Gessler, P.E.; Morgan, P.; Hudak, A.T.; Smith, A.M.S. Characterizing and mapping forest fire fuels using ASTER imagery and gradient modeling. For. Ecol. Manag. 2005, 217, 129–146.

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Gitelson, A.; Merzlyak, M.N. Spectral reflectance changes associated with autumn senescence of Aesculus hippocastanum L. and Acer platanoides L. leaves. Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 1994, 143, 286–292.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Penuelas, J.; Baret, F.; Filella, I. Semi-Empirical indices to assess carotenoids/chlorophyll-a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

- Gitelson, A.A.; Stark, R.; Grits, U.; Rundquist, D.; Kaufman, Y.; Derry, D. Vegetation and soil lines in visible spectral space: A concept and technique for remote estimation of vegetation fraction. Int. J. Remote Sens. 2002, 23, 2537–2562.

- Gregorutti, B.; Michel, B.; Saint-Pierre, P. Correlation and variable importance in random forests. Stat. Comput. 2017, 27, 659–678.

- Chi, M.; Plaza, A.; Benediktsson, J.A.; Sun, Z.; Shen, J.; Zhu, Y. Big data for remote sensing: Challenges and opportunities. Proc. IEEE 2016, 104, 2207–2219.

- Haut, J.M.; Paoletti, M.E.; Plaza, J.; Plaza, A. Fast dimensionality reduction and classification of hyperspectral images with extreme learning machines. J. Real Time Image Proc. 2018, 15, 439–462.

- Villoslada, M.; Bergamo, T.F.; Ward, R.D.; Burnside, N.G.; Joyce, C.B.; Bunce, R.G.H.; Sepp, K. Fine scale plant community assessment in coastal meadows using UAV based multispectral data. Ecol. Indic. 2020, 111, 105979.

- Kobayashi, N.; Tani, H.; Wang, X.; Sonobe, R. Crop classification using spectral indices derived from Sentinel-2A imagery. J. Inf. Telecommun. 2020, 4, 67–90.

- Wang, S.; Azzari, G.; Lobell, D.B. Crop type mapping without field-level labels: Random forest transfer and unsupervised clustering techniques. Remote Sens. Environ. 2019, 222, 303–317.

- Alaibakhsh, M.; Emelyanova, I.; Barron, O.; Sims, N.; Khiadani, M.; Mohyeddin, A. Delineation of riparian vegetation from Landsat multi-temporal imagery using PCA. Hydrol. Process. 2017, 31, 800–810.

- Dadon, A.; Mandelmilch, M.; Ben-Dor, E.; Sheffer, E. Sequential PCA-based classification of mediterranean forest plants using airborne hyperspectral remote sensing. Remote Sens. 2019, 11, 2800.

- Halladin-Dąbrowska, A.; Kania, A.; Kopeć, D. The t-SNE algorithm as a tool to improve the quality of reference data used in accurate mapping of heterogeneous non-forest vegetation. Remote Sens. 2019, 12, 39.

- Tasdemir, K.; Milenov, P.; Tapsall, B. Topology-Based hierarchical clustering of self-organizing maps. IEEE Trans. Neural Netw. 2011, 22, 474–485.

- Riese, F.M.; Keller, S.; Hinz, S. Supervised and semi-supervised self-organizing maps for regression and classification focusing on hyperspectral data. Remote Sens. 2019, 12, 7.

| Vegetation Types | Ground Truth Data Size |
|---|---|
| (1) Abies Evergreen Conifer Forest (ECF) | 300 |
| (2) Alnus Deciduous Broadleaf Forest (DBF) | 300 |
| (3) Alpine Herb | 300 |
| (4) Alpine Shrub | 300 |
| (5) Barren-Built-up area | 300 |
| (6) Cryptomeria-Chamaecyparis Evergreen Conifer Forest (ECF) | 300 |
| (7) Fagus-Quercus Deciduous Broadleaf Forest (DBF) | 300 |
| (8) Hydrangea Shrub | 165 |
| (9) Miscanthus Herb | 300 |
| (10) Pinus Shrub | 300 |
| (11) Quercus Shrub | 300 |
| (12) Salix Shrub | 108 |
| (13) Sasa Shrub | 300 |
| (14) Tsuga Evergreen Conifer Forest (ECF) | 107 |
| (15) Water | 300 |
| (16) Wetland Herb | 300 |

| Vegetation Indices | References |
|---|---|
| (1) Atmospherically Resistant Vegetation Index (ARVI) | Kaufman and Tanre [40] |
| (2) Enhanced Vegetation Index (EVI) | Huete et al. [41] |
| (3) Green Atmospherically Resistant Index (GARI) | Gitelson et al. [42] |
| (4) Green Chlorophyll Index (GCI) | Gitelson et al. [43] |
| (5) Green Leaf Index (GLI) | Louhaichi et al. [44] |
| (6) Green Normalized Difference Vegetation Index (GNDVI) | Gitelson and Merzlyak [45] |
| (7) Green Red Vegetation Index (GRVI) | Falkowski et al. [46] |
| (8) Modified Red Edge Normalized Difference Vegetation Index (MRENDVI) | Sims and Gamon [47] |
| (9) Modified Red Edge Simple Ratio (MRESR) | Sims and Gamon [47] |
| (10) Modified Soil Adjusted Vegetation Index (MSAVI) | Qi et al. [48] |
| (11) Normalized Difference Vegetation Index (NDVI) | Rouse et al. [49] |
| (12) Optimized Soil Adjusted Vegetation Index (OSAVI) | Rondeaux et al. [50] |
| (13) Red Edge Normalized Difference Vegetation Index (RENDVI) | Gitelson and Merzlyak [51] |
| (14) Soil-Adjusted Vegetation Index (SAVI) | Huete [52] |
| (15) Structure Insensitive Pigment Index (SIPI) | Penuelas et al. [53] |
| (16) Visible Atmospherically Resistant Index (VARI) | Gitelson et al. [54] |
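The indices in the table are band-arithmetic transforms of surface reflectance. As a hedged illustration, the Python sketch below computes eleven of them from blue, green, red and near-infrared reflectance using their commonly published forms. The constants are widely used defaults assumed here, not values confirmed from this paper: EVI gain 2.5 with coefficients 6, 7.5 and 1; SAVI soil factor L = 0.5; OSAVI offset 0.16; ARVI weighting γ = 1; and MSAVI in its closed form. The names `Reflectance` and `vegetation_indices` are hypothetical. GARI, GRVI and the three red-edge indices (MRENDVI, MRESR, RENDVI) are left out because this text does not state the band assignments or coefficients used for them.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Reflectance:
    """Surface reflectance of one pixel as unitless fractions in [0, 1].

    Band names are illustrative assumptions; map them to the sensor's
    actual blue, green, red and near-infrared bands.
    """
    blue: float
    green: float
    red: float
    nir: float


def _ratio(num: float, den: float) -> float:
    """Divide, returning NaN instead of raising when the denominator is 0."""
    return num / den if den != 0 else math.nan


def vegetation_indices(p: Reflectance) -> dict[str, float]:
    """Compute common vegetation indices (assumed textbook forms)."""
    b, g, r, n = p.blue, p.green, p.red, p.nir
    L = 0.5          # SAVI soil-brightness factor (assumed default)
    rb = 2 * r - b   # ARVI red-blue term, r - gamma*(b - r) with gamma = 1 (assumed)
    # MSAVI radicand equals (2n - 1)**2 + 8r, so it is non-negative when red >= 0.
    msavi = (2 * n + 1 - math.sqrt((2 * n + 1) ** 2 - 8 * (n - r))) / 2
    return {
        "ARVI": _ratio(n - rb, n + rb),
        "EVI": 2.5 * _ratio(n - r, n + 6 * r - 7.5 * b + 1),
        "GCI": _ratio(n, g) - 1,
        "GLI": _ratio(2 * g - r - b, 2 * g + r + b),
        "GNDVI": _ratio(n - g, n + g),
        "MSAVI": msavi,
        "NDVI": _ratio(n - r, n + r),
        "OSAVI": _ratio(n - r, n + r + 0.16),
        "SAVI": (1 + L) * _ratio(n - r, n + r + L),
        "SIPI": _ratio(n - b, n - r),
        "VARI": _ratio(g - r, g + r - b),
    }


# Usage with made-up reflectance values for a vegetated pixel.
pixel = Reflectance(blue=0.04, green=0.08, red=0.05, nir=0.40)
print(round(vegetation_indices(pixel)["NDVI"], 3))  # prints 0.778
```

Applying such a function to each ground-truth pixel would give one fixed-length index vector per sample. Stacking those vectors forms the feature matrix that a dimensionality-reduction step takes as input.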

| Features | CAEs | AEs | RFs |
|---|---|---|---|
| 3 | 88.7–89.9% | 81.2–85.2% | 76.7–81.2% |
| 5 | 92.7–93.8% | 87.9–91.4% | 84.4–88.6% |
| 10 | 95.0–96.2% | 91.5–94.6% | 90.2–93.7% |
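The last table compares CAEs, AEs and RFs at 3, 5 and 10 features. To make the idea of a learned low-dimensional feature set concrete, the sketch below trains a generic dense autoencoder. It compresses standardized inputs (one column per vegetation index) into k bottleneck features by minimizing mean squared reconstruction error with full-batch gradient descent. This is not the authors' architecture. The single tanh hidden layer, learning rate, epoch count, initialization and synthetic stand-in data are all assumptions, and `train_autoencoder` and `encode` are hypothetical names. The only dependency is NumPy.

```python
import numpy as np


def train_autoencoder(X, k=3, lr=0.1, epochs=3000, seed=0):
    """Fit a one-hidden-layer autoencoder by full-batch gradient descent.

    X: (n_samples, n_features) array, assumed standardized column-wise
       (e.g., one column per vegetation index).
    k: bottleneck width, i.e. the number of learned features.
    Returns (W1, b1, losses): encoder parameters and the loss per epoch.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.1, size=(d, k))  # encoder weights
    b1 = np.zeros(k)
    W2 = rng.normal(0.0, 0.1, size=(k, d))  # decoder weights
    b2 = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh encoder to k features, linear decoder back to d.
        H = np.tanh(X @ W1 + b1)
        X_hat = H @ W2 + b2
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backward pass for the loss mean((X_hat - X) ** 2).
        g_out = 2.0 * err / err.size
        g_W2 = H.T @ g_out
        g_b2 = g_out.sum(axis=0)
        g_pre = (g_out @ W2.T) * (1.0 - H ** 2)  # tanh'(z) = 1 - tanh(z)**2
        g_W1 = X.T @ g_pre
        g_b1 = g_pre.sum(axis=0)
        # Plain gradient-descent update of all parameters.
        W1 -= lr * g_W1
        b1 -= lr * g_b1
        W2 -= lr * g_W2
        b2 -= lr * g_b2
    return W1, b1, losses


def encode(X, W1, b1):
    """Map inputs to the k-dimensional bottleneck features."""
    return np.tanh(X @ W1 + b1)


# Usage on synthetic data standing in for 16 index columns (hypothetical values).
rng = np.random.default_rng(42)
latent = rng.normal(size=(500, 3))
X = latent @ rng.normal(size=(3, 16)) + 0.1 * rng.normal(size=(500, 16))
X = (X - X.mean(axis=0)) / X.std(axis=0)
W1, b1, losses = train_autoencoder(X, k=3)
features = encode(X, W1, b1)
print(features.shape, round(losses[0], 3), round(losses[-1], 3))
```

The training target is the input itself, so no vegetation-type labels enter the fit. Only after training would the k encoder outputs be clustered, plotted or scored against ground truth.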

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sharma, R.C.; Hara, K. Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types. J. Imaging 2021, 7, 30. https://doi.org/10.3390/jimaging7020030

Sharma RC, Hara K. Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types. Journal of Imaging. 2021; 7(2):30. https://doi.org/10.3390/jimaging7020030

Chicago/Turabian Style: Sharma, Ram C., and Keitarou Hara. 2021. "Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types" Journal of Imaging 7, no. 2: 30. https://doi.org/10.3390/jimaging7020030

APA Style: Sharma, R. C., & Hara, K. (2021). Self-Supervised Learning of Satellite-Derived Vegetation Indices for Clustering and Visualization of Vegetation Types. Journal of Imaging, 7(2), 30. https://doi.org/10.3390/jimaging7020030