Abstract

The perceptual quality of digital images is often deteriorated during storage, compression, and transmission. The most reliable way of assessing image quality is to ask people to provide their opinions on a number of test images. However, this is an expensive and time-consuming process which cannot be applied in real-time systems. In this study, a novel no-reference image quality assessment method is proposed. The introduced method uses a set of novel quality-aware features which globally characterizes the statistics of a given test image, such as extended local fractal dimension distribution feature, extended first digit distribution features using different domains, Bilaplacian features, image moments, and a wide variety of perceptual features. Experimental results are demonstrated on five publicly available benchmark image quality assessment databases: CSIQ, MDID, KADID-10k, LIVE In the Wild, and KonIQ-10k.

1. Introduction

As digital media takes a more central part in our daily lives and work, research on image and video quality assessment becomes more and more important. In many cases, the visual quality has to be optimized for content, like movies and sport games. In these cases, automatic assessment methods should take the actual image or video content into account to give the viewer the best experience. In medical imaging, a poor image quality may mean a misdiagnosis.

There are two ways of measuring image quality [1]. The obvious way is to ask people to give their opinions on a number of test images which is called subjective quality assessment. However, such a procedure can be very time-consuming and expensive to set up the experimental environment. That is why, objective image quality assessment has become a hot research topic because it deals with mathematical models and algorithms that are able to assess perceptual quality of digital images automatically. In the literature, objective image quality assessment algorithms are grouped according to the availability of the reference, pristine image. Specifically, full-reference image quality assessment (FR-IQA) algorithms possess full information for both the distorted image and the reference image, while no-reference image quality assessment (NR-IQA) methods predict perceptual quality exclusively based on the distorted image. Reduced-reference image quality assessment (RR-IQA) represents a middle course because it possess partial information about the reference image and full information about the distorted image.

1.1. Related Work

NR-IQA has gained a lot of attention in the recent decades. Although, the reference image is not available for NR-IQA algorithms, they can make assumptions about the distortions present in a given input image. Hence, they can be divided into distortion-specific and general-purpose groups. As the name indicates, distortion-specific methods assume the presence of certain distortions, such as JPEG [2] or JPEG2000 [3] compression noise. In contrast, general-purpose algorithms do not restrict themselves to specific distortions. General-purpose methods can be further divided into opinion-unaware [4,5] and opinion-aware [6] classes. Opinion-unaware ones do not require subjective quality scores in the training process, while opinion-aware algorithms usually rely on different regression frameworks trained on subjective scores.

Models based on natural scene statistics (NSS) have been very popular in opinion-aware NR-IQA. The main idea is that pristine (distortion-free) images obey certain statistical regularities and distorted images’ statistics deviate significantly from these regularities. As a consequence, these models contain three distinct stages: (1) feature extraction, (2) NSS modeling, and (3) regression. Hence, the main differences between NSS-based algorithms are connected to the above-mentioned three steps. For instance, blind image quality index (BIQI) [7] extracts features in the wavelet domain over three scales and three orientations. Moreover, generalized Gaussian distribution is fitted to the sub-band coefficients and the fitting parameters are utilized as quality-aware features. Finally, a trained support vector regressor (SVR) is applied to map features onto perceptual quality scores. In contrast, blind image integrity notator using DCT statistics (BLIINDS) [8] utilizes the statistics of local DCT coefficients. On the other hand, the mapping from features to quality scores is carried out by probabilistic prediction algorithms. In contrast, Liu et al. [9] utilized the orientation information from curvelet transform to determine correlation between scale and orientation energy distributions. Similarly to [7], an SVR is used to map the feature vectors onto quality scores. Gu et al. [10] combined NSS-based features with the free energy principle. He et al. [11] integrated NSS-based features and sparse representation. Mittal et al. [6] extracted NSS-based features from the spatial domain. Namely, mean subtracted contrast normalized (MSCN) coefficients were first determined from the raw pixel data. Subsequently, a generalized Gaussian distribution was fitted to MSCN coefficients. Moreover, an asymmetric generalized Gaussian distribution was also fitted to the products of neighboring MSCN coefficients. Similarly to BIQI [7], the fitting parameters were considered as quality-aware features and mapped to perceptual quality scores with a SVR. In [12], NSS features from multiple domains were combined. In contrast, Jenadeleh and Moghaddam [13] estimated the parameters of NSS features by a Wakeby distribution model.

Another line of papers extracts directly quality-aware statistical features from images and maps them to quality scores. Zhang et al. [14] generated quality-aware features from the joint generalized local binary pattern statistics. In contrast, Li et al. [15] proposed a gradient weighted histogram of local binary patterns for quality aware features. In [16], a set of quality aware statistical features (first digit distribution in the gradient magnitude and wavelet domain, color statistics) were combined with powerful perceptual features (colorfulness, global contrast factor, entropy, etc.) to train an Gaussian process regression (GPR) algorithm for quality prediction.

Recently, convolutional neural networks have become a prominent technology in the field of image processing. The deployment of CNNs in NR-IQA is gaining a lot of attention due to their representational power. Usually, CNNs consist of four types of components, such as convolutional, activation, pooling, and fully-connected layers stacked on each other. On the other hand, features extracted from CNNs trained on huge databases, such as ImageNet [17], have shown excellent representational power in many image processing tasks [18,19,20]. First, Kang et al. [21] proposed a CNN-based solution for NR-IQA. Specifically, the authors trained a CNN regression framework on non-overlapping image patches. The perceptual quality of the overall input image was determined by pooling the patches’ quality scores. Later, the proposed architecture was developed further by Kang et al. [22] to simultaneously estimate perceptual quality and image distortion types. Similarly, Kim et al. [23] proposed a regression CNN framework, but FR-IQA behavior was first imitated by generating a local quality map. Namely, the patches were first regressed onto quality scores obtained by a traditional FR-IQA metric. In contrast, Bianco et al. [24] applied fine-tuned AlexNet [25] to extract deep features from -sized image patches. Zeng et al. [26] developed the approach of [24] further. Namely, they extracted features with the help of a ResNet [27] architecture and elaborated a probabilistic representation of distorted images. In [28], an Inception-V3 [29] network was utilized as feature extracted and it was pointed out that considering the features of multiple layers is able to improve the performance of perceptual quality prediction. In contrast, Liu et al. [30] trained a Siamese CNN to rank images in terms of perceptual quality. Subsequently, the trained Siamese CNN was used to transfer knowledge into a traditional CNN. Lin and Wang [31] proposed a quality-aware generative network for reference image generation. To this end, a quality-aware loss function was also proposed. Moreover, the knowledge about the discrepancy between real and generated reference images was incorporated into a regression CNN which estimated the perceptual quality of distorted images.

Another line of NR-IQA algorithms focuses on combining the results of existing methods to improve prediction performance [32,33]. For instance, Ieremeiev et al. [34] trained a neural network on the results of eleven different NR-IQA algorithms to boost performance.

1.2. Contributions

In this study, an NR-IQA method is presented which relies on a novel feature vector containing a set of quality-aware features that globally characterizes the statistics of a given input image to be assessed. Specifically, the proposed feature vector partially improves further our previous work [16]. A set of shape descriptors is proposed to the local fractal dimension distribution and first digit distribution feature vectors to capture better image distortions. Moreover, we point out that besides the wavelet coefficients [16], discrete cosine transform coefficients and singular values of an image are also suitable to derive first digit distribution features based on Benford’s law. Motivated by the model of extended classical receptive field (ECRF), Bilaplacian quality-aware features are also incorporated into the introduced model. Unlike previous methods, the degradation of image edges are quantified by image moments. Experimental results and performance comparison to the state-of-the-art are presented on five publicly available IQA benchmark databases: CSIQ, MDID, KADID-10k, LIVE In the Wild, and KonIQ-10k.

1.3. Structure

The rest of this study is organized as follows. Section 2 describes our proposed method for NR-IQA. Next, Section 3 shows experimental results and analysis including the description of the applied IQA benchmark databases and evaluation protocol, a parameter study, and a comparison to other state-of-the-art algorithms. Finally, a conclusion is drawn in Section 4.

2. Proposed Method

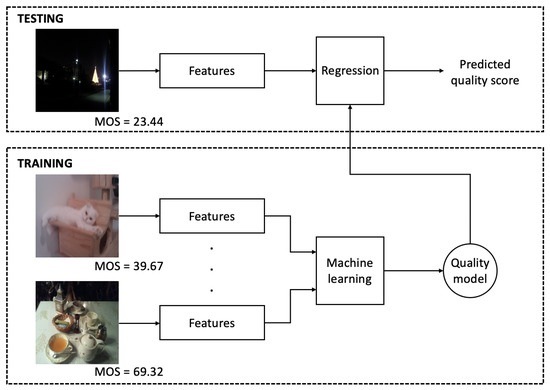

The general overview of the proposed NR-IQA method is shown in Figure 1. As the workflow indicates, a set of feature vectors is extracted from the training images to train a machine learning model which is applied in the testing phase for mapping feature vectors into perceptual quality scores. In Section 3, a detailed parameter study is presented to find the most suitable regression using five IQA benchmark databases.

Figure 1.

Block diagram of the proposed method.

As pointed out by Ghadiyaram and Bovik [35], a various set of features is necessary to accurately predict artificially and authentically distorted digital images’ perceptual quality. In this study, a novel set of quality-aware features is proposed that characterizes an image by taking into account its global statistics. The introduced method relies on a 132-dimensional feature vector including extended local fractal dimension distribution feature vector, extended first digit distribution (FDD) feature vectors, Bilaplacian features, image moments, histogram variances of relative gradient orientation (RO), gradient magnitude (RM), relative gradient magnitude (GM) maps, and perceptual features (colorfulness, sharpness, dark channel feature, contrast). The used features are summarized in Table 1 where quality-aware features proposed by this study are typed in bold.

Table 1.

Summary of features applied in the introduced no-reference image quality assessment (NR-IQA) algorithm. Quality-aware features proposed in this study are typed in bold.

2.1. Extended Local Fractal Dimension Distribution Feature Vector

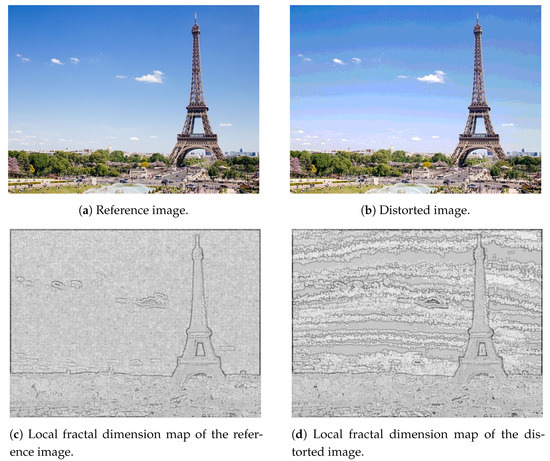

In [41], Pentland demonstrated that natural scenes, such as mountains, trees, clouds, etc., can be described by fractal surfaces because fractals look like as natural surfaces. Various image distortions often change the local regularities of digital images’ texture. Thus, distortions change the local fractal dimension distribution of a given test image. Consequently, the histogram of local fractal dimension distributions are quality-aware features [16]. As in our previous study [16], the local fractal dimension map of an image is created by considering each pixel in the original image as a center of a 7-by-7 rectangular neighborhood and the fractal dimension is calculated from this neighborhood. The box-counting method is applied to determine the fractal dimension of an image patch because it is able to represent complexity and easy to implement [42,43]. Similarly to our previous work [16], a 10-bin normalized histogram was calculated considering the values between and 3 from the local fractal dimension map. Figure 2 depicts the local fractal dimension maps of a reference-distorted image pair. It can be observed that distortions in texture appear very strongly in the local fractal dimension.

Figure 2.

Illustration of local fractal dimension maps.

Although the normalized histogram of local fractal dimension distribution is able to describe the irregularities of natural scene, the following statics are attached to the normalized histogram to construct an effective feature vector: skewness, kurtosis, entropy, median, spread, and standard deviation. The skewness is determined as

where stands for the mean of v and is the standard deviation of v. The kurtosis is obtained as

The entropy is obtained as

where stands for the histogram count of v.

2.2. Extended First Digit Distribution Feature Vectors

Benford’s distribution concerns the leading digit (the first non-zero digit, range: 1–9) of values in a data set. Frank Benford published an article entitled “The law of anomalous numbers” in 1938 [44] where he analyzed the leading digit values from diverse sources, such as populations of counties, length of rivers, or death rates. Benford conjectured that the distribution of the leading digit has probability mass function

Those data sets, that follows the particular pattern defined by Equation (4) for their leading digits, are said to satisfy Benford’s law. It was pointed out by Pérez-González et al. [45] that the luminance values of digital images do not satisfy Benford’s law. However, the discrete cosine transform (DCT) coefficients of a digital image produces a good match with Benford’s law [45].

In our previous work [16], we utilized the wavelet domain to obtain first digit distribution (FDD) feature vectors, since we pointed out that the FDD in the wavelet transform domain matches very well with the Benford’s law prediction in case of distortion-free, pristine images. On the other hand, various image distortions result in a significant deviation from the prediction of the Benford’s law in FDD. However, the discrete cosine transform (DCT) coefficients’ and singular values’ FDD shows similar properties to those of wavelet domain. In this study, normalized FDD feature vectors are extracted from the DCT coefficients [45] and the singular values, besides the wavelet transform domain. Moreover, the FDD distribution feature vectors are augmented by statistics as in the previous subsection, such as symmetric Kullback–Leibler divergence between the actual FDD and Benford’s law prediction, skewness, kurtosis, entropy, median, spread, and standard deviation. As already mentioned, symmetric Kullback–Leibler () divergence is determined between the actual FDD (denoted by ) and Benford’s distribution (denoted by ):

where the Kullback–Leibler (KL) divergence is given as:

In addition to , skewness, kurtosis, entropy, median, spread, and standard deviation were also attached to the normalized FDD to obtain the extended FDD feature vector. As a result, an extended FDD feature vector has a length of 17. Moreover, extended FDD feature vectors are extracted from the horizontal, vertical, and diagonal wavelet coefficients, DCT coefficients, and singular values.

Table 2 illustrates the average FDD of singular values in the KADID-10k [46] database with respect to the five different distortion levels found in this database. It can be observed that the between the actual FDD and the Benford’s distribution is roughly proportional with the level of distortion. Furthermore, the relative frequency of ones and twos are also roughly proportional with the level of image distortion. That is why, the FDDs in different domains were chosen as quality-aware descriptors and were extended with and histogram shape descriptors, such as skewness, kurtosis, entropy, median, spread, and standard deviation.

Table 2.

Average first digit distribution (FDD) of singular values in the KADID-10k [46] database with respect to the five different distortion levels of KADID-10k. Level 1 stands for the lowest level of distortion, while Level 5 denotes the highest distortion. The column indicates the symmetric Kullback–Leibler divergence between the actual FDD and the prediction of Benford’s law.

2.3. Bilaplacian Features

Gerhard et al. [47] pointed out that the human visual system (HVS) is adapted to the statistical regularities in images. Moreover, Marr [48] emphasized the importance of studying zero-crossings at multiple scales to interpret the intensity changes found in the image. At the same time, the extended classical receptive field (ECRF) of retinal ganglion cells can be modeled as a combination of three zero-mean Gaussians at three different scales [49]. These are equivalent to a Bilaplacian of the Gaussian filter [49,50]. On the other hand, Gaussian filtering introduces an undesirable distortion in IQA. In our method, color space is applied, since it is suggested by ITU-R BT.601 for video broadcasting to obtain Bilaplacian features. The direct conversion from color space to is the following:

where R, G, and B denote the red, green, and blue color channels, respectively.

Generally, the Laplacian filters are approximated by convolution kernels whose sum are zero [51]. In this paper, the following popular kernels are utilized:

An image can be converted to the Bilaplacian domain by convolving it with two Laplacian kernels, formally can be written as:

where ∗ stands for the operation of convolution. In our study, , , , , , , and masks are considered. As already mentioned, the channels of color space are used to obtain the Bilaplacian features. This means that Y, , and channels are convolved with the Bilaplacian masks independently from each other. As a consequence, seven Bilaplacian maps can be obtained for each color channel. Subsequently, the histogram variance of each channels is taken. The histogram variance is defined as

where stands for v’s normalized histogram to unit sum.

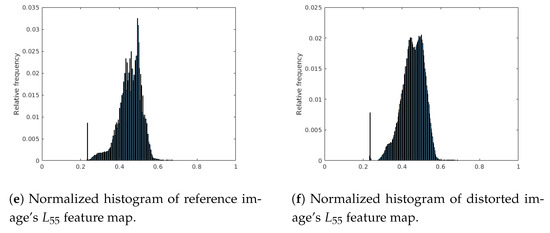

Figure 3 illustrates a reference, distortion-free image and its artificially distorted counterpart from the KADID-10k [46] database. It can be seen that even a moderate amount of noise can significantly distort the normalized histogram of Bilaplacian feature maps. That is why the histogram variances of the Bilaplacian feature maps were applied as quality-aware features.

Figure 3.

Illustration of Bilaplacian features. The first row contains a reference-distorted image pair. The second row consists of the Bilaplacian feature maps obtained by the filter. The third row contains the normalized histograms of the Bilaplacian feature maps.

2.4. Image Moments

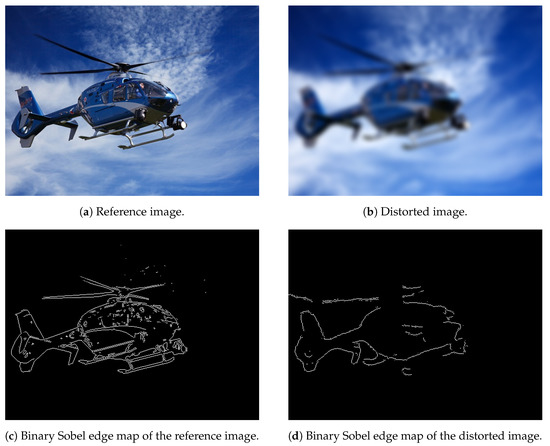

A number of IQA metrics have utilized that the structural distortions of digital images correlate well with the degradation of edges [52,53]. In this paper, we propose to use the global, binary Sobel edge map of a digital image and determine the eight central moments which are used as quality-aware features.

First, the Sobel operator computes an approximation of the gradient of an image. If is considered as the source image, and are determined as:

where ∗ stands for the convolution operator, and are the horizontal and vertical derivative approximations, respectively. The gradient magnitude approximations can be obtained:

The binary Sobel edge map is determined by thresholding using the quadruple of ’s mean as cutoff threshold. Finally, edge thinning is applied to remove spurious points from the edge map [54]. The central moments of the digital image are defined as

where and are the coordinates of the binary image’s centroid. By definition, the centroid of a binary image is the arithmetic mean of all coordinates. It can be shown that central moments are translational invariant [55] (Figure 4).

Figure 4.

Structural distortions correlate well with the degradation. Central moments are applied as quality-aware features to quantify edge degradation.

2.5. Gradient Features

Image gradient magnitude and orientation features have become very popular both in FR-IQA and NR-IQA since they are strong predictive factors of perceptual image quality [36]. In this study, the histogram variances of gradient magnitude (GM), relative gradient orientation (RO), and relative gradient magnitude (RM) are incorporated into our model to quantify the changes in gradient [36].

2.6. Perceptual Features

The following perceptual features are adopted in our model, since they are coherent with the HVS’s quality perception. Specifically, colorfulness [37], sharpness [38], dark channel feature [39], and contrast [40] were applied in our study.

Yendrikhovskij et al. [56] demonstrated that colorfulness plays an important role in human perceptual quality judgments, since humans like better more colorful images. In this study, the metric of Hasler and Suesstrunk [37] was adopted:

where and . Furthermore, R, G, and B stand for the red, green, and blue channels, respectively. Variables and denote the standard deviation and mean of the matrices given in the subscripts, respectively.

Image sharpness determines the amount of detail that is realized in the image. Sharpness can be observed most clearly on image edges and for that reason it is widely considered as an image quality factor. In this study, the metric of Bahrami and Kot [38]—maximum local variation (MLV)—was adopted to characterize the sharpness of an image because its low computational costs.

First, Tang et al. [57] proposed dark channel features for photo quality assessment. Dark channel features were designed originally for single image haze removal [39]. An image’s dark channel is defined as:

where is a color channel of I and is a neighborhood of pixel i. In our implementation, is a rectangular -sized patch. The dark channel feature of image I is defined as:

where S denotes the area of the input image.

There are many definitions of image contrast in the literature. The easiest way to explain contrast is the difference between the brightest and darkest pixel values. Therefore, the HVS’s capability to recognize and separate objects on an image heavily depends on image contrast. Consequently, contrast is an image quality factor. In this study, Matkovic et al.’s [40] global contrast factor (GCF) model was adopted which is defined as follows:

where , . Moreover, s are defined as

where w and h stand for the width and height of the input image, respectively, and

where the Ls denote the pixel values after gamma correction and assuming that the image is reshaped into a row-wise one dimensional array.

Table 3 illustrates the average values of the applied perceptual features(, sharpness, , and ) in the KADID-10k [46] database with respect to the five different distortion levels. It can be observed that the applied four perceptual features strongly correlate with the distortion levels.

Table 3.

The average values of perceptual features in the KADID-10k [46] database with respect to the five different distortion levels. Level 1 stands for the lowest level of distortion, while Level 5 denotes the highest distortion.

3. Experimental Results

In this section, our experimental results are presented. Section 3.1 gives a brief overview about the used publicly available IQA benchmark databases. Next, Section 3.2 describes the used experimental setup and evaluation metrics. Section 3.3 contains a parameter study in which our design choices are reasoned. Subsequently, Section 3.4 consists of a performance comparison to other state-of-the-art NR-IQA algorithms using publicly available IQA benchmark databases. Finally, Section 3.5 and Section 3.6 contain detailed results with respect to distortion types and levels.

3.1. Databases

Five publicly available benchmark IQA databases are used in this study to demonstrate and validate the results of the proposed method including CSIQ [58], KADID-10k [46], MDID [59], LIVE In the Wild [35], and KonIQ-10k [60] datasets.

CSIQ [58] has 30 reference images, each one distorted by one of six predefined distortion types at four or five different distortion levels. MDID [59] contains 20 reference images and 1600 distorted images derived from the reference images using multiple distortions of random types and distortion levels. Moreover, the authors [59] proposed a novel subjective rating method, called pair comparison sorting, to obtain more accurate data. KADID-10k [46] consists of 10,125 distorted images derived from 81 pristine (distortion free), reference images using 25 different distortion types at 5 different distortion levels. Moreover, each image is associated with a differential MOS value in the range of . In contrast, LIVE In the Wild [35] database contains images captured by mobile camera devices so the images are affected by an intricate mixture of different distortion types. In total, it contains 1162 authentically distorted images which were evaluated by 8100 human observers. Similarly, KonIQ-10k [60] database consists of digital images with authentic distortions. Specifically, 10,073 images were sampled from the YFCC100m [61] database using seven quality indicators, one content indicator, and machine tags. Moreover, 120 quality ratings were collected for all images using crowd sourcing platforms.

Table 4 presents a comparison of the applied IQA benchmark databases with respect to their main characteristics.

Table 4.

Publicly available IQA benchmark databases used in this paper.

3.2. Experimental Setup and Evaluation Metrics

To evaluate our model and other state-of-the-art algorithms, databases containing artificial distortions (CSIQ [58], MDID, and KADID-10k [46]) are divided into a training set and a test with respect to the pristine, reference images to avoid any semantic content overlapping between these two sets. Databases with authentic distortions (LIVE In the Wild) are simply divided into a training and a test set. Moreover, approximately 80% of images are in the training set and the remaining 20% are in the test. In this study, two widely applied correlation criteria are employed including Pearson’s linear correlation coefficient (PLCC) and Spearman’s rank order correlation coefficient (SROCC). For both PLCC and SROCC, a higher value indicates a better performance of the examined NR-IQA algorithm. Furthermore, we report average PLCC and SROCC values which were measured over 100 random train–test splits.

3.3. Parameter Study

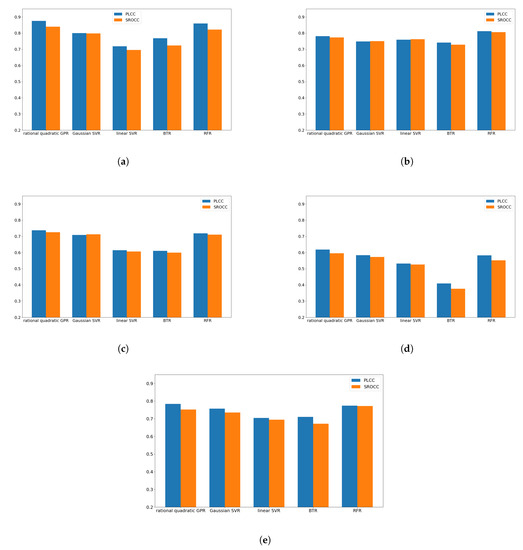

In this subsection, a parameter study is carried out to find an optimal regression technique for the proposed quality-aware global statistical features. Specifically, we made experiments with five different regression algorithms, such as rational quadratic Gaussian process regressor (GPR) [62], Gaussian support vector regressor (SVR) [63], linear SVR [63], binary tree regression (BTR) [64], and random forest regression (RFR) [65]. The results are summarized in Figure 5. It can be seen that rational quadratic GPR provides the best performance on CSIQ [58], KADID-10k [46], and LIVE In the Wild [35]. On MDID [59], RFR provides the best results, while rational quadratic GPR is the second best. On KonIQ-10k [60], rational quadratic GPR and RFR give similar results. As a consequence, rational quadratic GPR was chosen in our method. Moreover, this architecture is codenamed GSF-IQA in the following subsections and compared to the state-of-the-art.

Figure 5.

Performance comparison of rational quadratic Gaussian process regressor (GPR), Gaussian support vector regressor (SVR), linear SVR, binary tree regression (BTR), and random forest regression (RFR) techniques. Average Pearson’s linear correlation coefficient (PLCC) and Spearman’s rank order correlation coefficient (SROCC) values measured 100 random train–test splits are plotted. (a) CSIQ [58]. (b) MDID [59]. (c) KADID-10k [46]. (d) LIVE In the Wild [35]. (e) KonIQ-10k [60].

3.4. Comparison to the State-of-the-Art

To compare our GSF-IQA method to the state-of-the-art, several NR-IQA methods were collected whose original source codes are available online, including BLIINDS-II [66], BMPRI [67], BRISQUE [6], CurveletQA [9], DIIVINE [68], ENIQA [69], GRAD-LOG-CP [70], NBIQA [71], PIQE [4], OG-IQA [36], SPF-IQA [16], and SSEQ [72].

As already mentioned, five benchmark IQA databases are used in this study: CSIQ [58], MDID [59], KADID-10k [46], LIVE In the Wild [35], and KonIQ-10k [60]. The measured results of the proposed method and other state-of-the-art algorithms on artificial distortions (CSIQ [58], MDID [59], and KADID-10K [46]) can be seen in Table 5, while those on authentic distortions (LIVE In the Wild [35] and KonIQ-10k [60]) are summarized in Table 6. In addition to this, Table 7 presents the results of the one-sided t-test which was applied to give evidence for the statistical significance of GSF-IQA’s results on the used IQA benchmark databases. In this table, each record is encoded by two symbols. Namely, ‘1’ means that the proposed GSF-IQA method is statistically significantly better than the NR-IQA method in the row on the IQA benchmark database in the column. The ‘-’ symbol is adopted when there is no significant difference between GSF-IQA and another NR-IQA method. Table 8 illustrates the weighted and direct average of PLCC and SROCC values found in Table 5 and Table 6.

Table 5.

Comparison of GSF-IQA to the state-of-the-art on artificial distortions. Mean PLCC and SROCC are measured over 100 random train–test splits with respect to the reference images. Best results are typed in bold, second best results are typed in italic.

Table 6.

Comparison of GSF-IQA to the state-of-the-art on authentic distortions. Mean PLCC and SROCC are measured over 100 random train–test splits. Best results are typed in bold, second best results are typed in italic.

Table 7.

One-sided t-test. Symbol ‘1’ means that the proposed GSF-IQA method is statistically better than the NR-IQA method in the row on the IQA benchmark database in the column. Symbol ‘-’ is used when there is no significant difference.

Table 8.

Comparison of GSF-IQA to the state-of-the-art. Weighted and direct average of measured PLCC and SROCC values are reported. Best results are typed in bold, second best results are typed in italic.

From the results presented in Table 5, Table 6, Table 7 and Table 8, it can be seen that the proposed GSF-IQA provides the best results on four out of five IQA benchmark databases. Moreover, it gives the second best PLCC and SROCC values on LIVE In the Wild [35]. From the significance tests, it can be observed that the improvement is statistically significant on all databases containing artificial distortions. On the other hand, the difference between the best and the second best performing methods on LIVE In the Wild [35] and KonIQ-10k [60] is statistically not significant. It can be also observed from Table 8 that the proposed GSF-IQA method is able to outperform other state-of-the-art algorithms in terms of direct and weighted average PLCC and SROCC values. Specifically, GSF-IQA outperforms the second best method by approximately 0.02 both in terms of direct and weighted average PLCC and SROCC values.

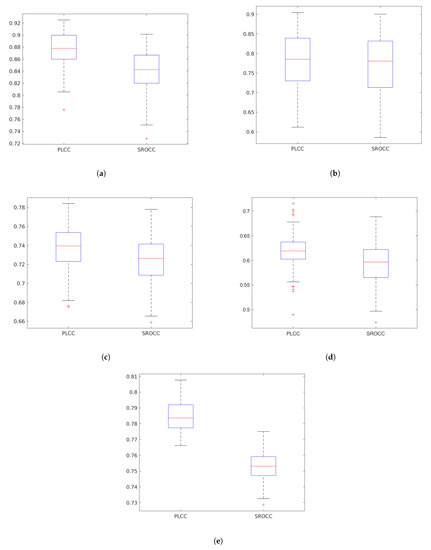

Figure 6 depicts the boxplots of PLCC and SROCC values produced by GSF-IQA on each applied IQA benchmark database. On every box, the red central mark stands for the median value, and the blue bottom and top edges of the box denote the 25th and 75th percentiles, respectively. In addition, the whiskers indicate the most extreme values which are not considered as outliers. The outliers are depicted by ’+’.

Figure 6.

Box plots of the PLCC and SROCC values produced by GSF-IQA on five IQA benchmark databases (CSIQ [58], MDID [59], KADID-10k [46], LIVE In the Wild [35], and KonIQ-10k [60]). Measured over 100 random train–test splits. (a) CSIQ [58]. (b) MDID [59]. (c) KADID-10k [46]. (d) LIVE In the Wild [35]. (e) KonIQ-10k [60].

3.5. Performance over Different Distortion Types

In this subsection, we examine the performance of the state-of-the-art NR-IQA methods over different distortion types. Specifically, we report on average SROCC values measured over the different distortion types of KADID-10k database [46]. As already mentioned, this database consists of images with 25 different distortion types, such as Gaussian blur (GB), lens blur (LB), motion blur (MB), color diffusion (CD), color shift (CS), color quantization (CQ), color saturation 1 (CSA1), color saturation 2 (CSA2), JPEG2000 compression noise (JP2K), JPEG compression noise (JPEG), white noise (WN), white noise in color component (WNCC), impulse noise (IN), multiplicative noise (MN), denoise, brighten, darken, mean shift (MS), jitter, non-eccentricity patch (NEP), pixelate, quantization, color block (CB), high sharpen (HS), and contrast change (CC). The results are summarized in Table 9. It can be seen that the proposed GSF-IQA algorithm is able to provide the best results on 12 out of 25 distortion types.

Table 9.

Mean SROCC value comparison on different distortion types of the KADID-10k [46] database. Measured over 100 random train–test splits with respect to the reference images. The best results are typed in bold.

3.6. Performance over Different Distortion Levels

In this subsection, we examine the performance of the state-of-the-art NR-IQA methods over different distortion levels. Specifically, we report on average SROCC values measured over the different distortion levels of the KADID-10k database [46]. The results are summarized in Table 10. As one can see from the results, the proposed GSF-IQA algorithm is able to outperform all the other state-of-the-art methods on all distortion levels.

Table 10.

Mean SROCC value comparison on different distortion levels of the KADID-10k [46] database. Measured over 100 random train–test splits with respect to the reference images. The best results are typed in bold.

4. Conclusions

In this paper, we proposed a novel NR-IQA algorithm based on a set of novel quality-aware features which globally characterizes the statistics of an image. First, we utilized that various image distortions change the local regularities of the texture. Thus, an extended local fractal dimension feature was proposed to quantify the texture’s degradation. Second, we demonstrated that first digit distributions of wavelet coefficients, DCT coefficients, and singular values can be used as quality-aware features and proposed extended first digit distribution feature vectors. This model was improved by Bilaplacian features which was inspired by the extended classical receptive field model of retinal ganglion cells. To quantify the degradation of edges, image moments were incorporated into the model. The proposed algorithm was tested on five publicly available benchmark databases including CSIQ, MDID, KADID-10k, LIVE In the Wild, and KonIQ-10k. It was demonstrated that our proposal is able to outperform other state-of-the-art methods both on artificial and authentic distortions. There are two main directions of future research. Beyond feature concatenation, it is worth to study the selection process of relevant attributes provided by different sources. Moreover, the incorporation of local statistical features provided by local feature descriptors may improve the performance, since some distortion types do not uniformly distribute in the image.

To facilitate the reproducibility of the presented results, the source code of the proposed method and test environments written in MATLAB R2020a environment are available at: https://github.com/Skythianos/GSF-IQA, accessed on 5 February 2021.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analysed in this study. Data sharing is not applicable to this article.

Acknowledgments

We thank the anonymous reviewers for their careful reading of our manuscript and their many insightful comments and suggestions.

Conflicts of Interest

The author declares no conflict of interest.

References

- Xu, L.; Lin, W.; Kuo, C.C.J. Visual Quality Assessment by Machine Learning; Springer: Berlin/Heidelberg, Germany, 2015. [Google Scholar]

- Zhan, Y.; Zhang, R. No-reference JPEG image quality assessment based on blockiness and luminance change. IEEE Signal Process. Lett. 2017, 24, 760–764. [Google Scholar] [CrossRef]

- Sazzad, Z.P.; Kawayoke, Y.; Horita, Y. No reference image quality assessment for JPEG2000 based on spatial features. Signal Process. Image Commun. 2008, 23, 257–268. [Google Scholar] [CrossRef]

- Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications (NCC), Mumbai, India, 27 February–1 March 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6. [Google Scholar]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212. [Google Scholar] [CrossRef]

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708. [Google Scholar] [CrossRef]

- Moorthy, A.K.; Bovik, A.C. A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 2010, 17, 513–516. [Google Scholar] [CrossRef]

- Saad, M.A.; Bovik, A.C.; Charrier, C. A DCT statistics-based blind image quality index. IEEE Signal Process. Lett. 2010, 17, 583–586. [Google Scholar] [CrossRef]

- Liu, L.; Dong, H.; Huang, H.; Bovik, A.C. No-reference image quality assessment in curvelet domain. Signal Process. Image Commun. 2014, 29, 494–505. [Google Scholar] [CrossRef]

- Gu, K.; Zhai, G.; Yang, X.; Zhang, W. Using free energy principle for blind image quality assessment. IEEE Trans. Multimed. 2014, 17, 50–63. [Google Scholar] [CrossRef]

- He, L.; Tao, D.; Li, X.; Gao, X. Sparse representation for blind image quality assessment. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1146–1153. [Google Scholar]

- Wu, Q.; Li, H.; Meng, F.; Ngan, K.N.; Luo, B.; Huang, C.; Zeng, B. Blind image quality assessment based on multichannel feature fusion and label transfer. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 425–440. [Google Scholar] [CrossRef]

- Jenadeleh, M.; Moghaddam, M.E. BIQWS: Efficient Wakeby modeling of natural scene statistics for blind image quality assessment. Multimed. Tools Appl. 2017, 76, 13859–13880. [Google Scholar] [CrossRef]

- Zhang, M.; Muramatsu, C.; Zhou, X.; Hara, T.; Fujita, H. Blind image quality assessment using the joint statistics of generalized local binary pattern. IEEE Signal Process. Lett. 2014, 22, 207–210. [Google Scholar] [CrossRef]

- Li, Q.; Lin, W.; Fang, Y. No-reference quality assessment for multiply-distorted images in gradient domain. IEEE Signal Process. Lett. 2016, 23, 541–545. [Google Scholar] [CrossRef]

- Varga, D. No-Reference Image Quality Assessment Based on the Fusion of Statistical and Perceptual Features. J. Imaging 2020, 6, 75. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255. [Google Scholar]

- Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops; IEEE Computer Society: Washington, DC, USA, 2014; pp. 806–813. [Google Scholar]

- Chen, Y. Exploring the Impact of Similarity Model to Identify the Most Similar Image from a Large Image Database. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1693, p. 012139. [Google Scholar]

- Wu, J.; Ma, J.; Liang, F.; Dong, W.; Shi, G. End-to-End Blind Image Quality Assessment with Cascaded Deep Features. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1858–1863. [Google Scholar]

- Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1733–1740. [Google Scholar]

- Kang, L.; Ye, P.; Li, Y.; Doermann, D. Simultaneous estimation of image quality and distortion via multi-task convolutional neural networks. In Proceedings of the 2015 IEEE international conference on image processing (ICIP), Quebec, QC, Canada, 27–30 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 2791–2795. [Google Scholar]

- Kim, J.; Lee, S. Fully deep blind image quality predictor. IEEE J. Sel. Top. Signal Process. 2016, 11, 206–220. [Google Scholar] [CrossRef]

- Bianco, S.; Celona, L.; Napoletano, P.; Schettini, R. On the use of deep learning for blind image quality assessment. Signal Image Video Process. 2018, 12, 355–362. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; University of Toronto: Toronto, ON, Canada, 2012; pp. 1097–1105. [Google Scholar]

- Zeng, H.; Zhang, L.; Bovik, A.C. A probabilistic quality representation approach to deep blind image quality prediction. arXiv 2017, arXiv:1708.08190. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Varga, D. Multi-Pooled Inception Features for No-Reference Image Quality Assessment. Appl. Sci. 2020, 10, 2186. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Liu, X.; van de Weijer, J.; Bagdanov, A.D. Rankiqa: Learning from rankings for no-reference image quality assessment. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1040–1049. [Google Scholar]

- Lin, K.Y.; Wang, G. Hallucinated-IQA: No-reference image quality assessment via adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 732–741. [Google Scholar]

- Fastowicz, J.; Lech, P.; Okarma, K. Combined Metrics for Quality Assessment of 3D Printed Surfaces for Aesthetic Purposes: Towards Higher Accordance with Subjective Evaluations. In Proceedings of the International Conference on Computational Science, Amsterdam, The Netherlands, 3–5 June 2020; Springer: Cham, Switzerland, 2020; pp. 326–339. [Google Scholar]

- Okarma, K.; Fastowicz, J.; Lech, P.; Lukin, V. Quality Assessment of 3D Printed Surfaces Using Combined Metrics Based on Mutual Structural Similarity Approach Correlated with Subjective Aesthetic Evaluation. Appl. Sci. 2020, 10, 6248. [Google Scholar] [CrossRef]

- Ieremeiev, O.; Lukin, V.; Ponomarenko, N.; Egiazarian, K. Combined no-reference IQA metric and its performance analysis. Electron. Imaging 2019, 2019, 260–261. [Google Scholar] [CrossRef]

- Ghadiyaram, D.; Bovik, A.C. Massive online crowdsourced study of subjective and objective picture quality. IEEE Trans. Image Process. 2015, 25, 372–387. [Google Scholar] [CrossRef] [PubMed]

- Liu, L.; Hua, Y.; Zhao, Q.; Huang, H.; Bovik, A.C. Blind image quality assessment by relative gradient statistics and adaboosting neural network. Signal Process. Image Commun. 2016, 40, 1–15. [Google Scholar] [CrossRef]

- Hasler, D.; Suesstrunk, S.E. Measuring colorfulness in natural images. Human Vision and Electronic Imaging VIII; International Society for Optics and Photonics: Bellingham, WA, USA, 2003; Volume 5007, pp. 87–95.

- Bahrami, K.; Kot, A.C. A fast approach for no-reference image sharpness assessment based on maximum local variation. IEEE Signal Process. Lett. 2014, 21, 751–755. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar] [PubMed]

- Matkovic, K.; Neumann, L.; Neumann, A.; Psik, T.; Purgathofer, W. Global contrast factor-a new approach to image contrast. Comput. Aesthet. 2005, 2005, 1. [Google Scholar]

- Pentland, A.P. Fractal-based description of natural scenes. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 6, 661–674. [Google Scholar] [CrossRef] [PubMed]

- Al-Kadi, O.S.; Watson, D. Texture analysis of aggressive and nonaggressive lung tumor CE CT images. IEEE Trans. Biomed. Eng. 2008, 55, 1822–1830. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Du, Q.; Sun, C. An improved box-counting method for image fractal dimension estimation. Pattern Recognit. 2009, 42, 2460–2469. [Google Scholar] [CrossRef]

- Benford, F. The law of anomalous numbers. Proc. Am. Philos. Soc. 1938, 78, 551–572. [Google Scholar]

- Pérez-González, F.; Heileman, G.L.; Abdallah, C.T. Benford’s lawin image processing. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16 September–19 October 2007; IEEE: Piscataway, NJ, USA, 2007; Volume 1, pp. I–405. [Google Scholar]

- Lin, H.; Hosu, V.; Saupe, D. KADID-10k: A large-scale artificially distorted IQA database. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–3. [Google Scholar]

- Gerhard, H.E.; Wichmann, F.A.; Bethge, M. How sensitive is the human visual system to the local statistics of natural images? PLoS Comput. Biol. 2013, 9, e1002873. [Google Scholar] [CrossRef]

- Marr, D.; Hildreth, E. Theory of edge detection. Proc. R. Soc. London. Ser. B. Biol. Sci. 1980, 207, 187–217. [Google Scholar]

- Ghosh, K.; Sarkar, S.; Bhaumik, K. A possible mechanism of zero-crossing detection using the concept of the extended classical receptive field of retinal ganglion cells. Biol. Cybern. 2005, 93, 1–5. [Google Scholar] [CrossRef] [PubMed]

- Ghosh, K.; Sarkar, S.; Bhaumik, K. Understanding image structure from a new multi-scale representation of higher order derivative filters. Image Vis. Comput. 2007, 25, 1228–1238. [Google Scholar] [CrossRef]

- Krig, S. Computer Vision Metrics; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Martini, M.G.; Hewage, C.T.; Villarini, B. Image quality assessment based on edge preservation. Signal Process. Image Commun. 2012, 27, 875–882. [Google Scholar] [CrossRef]

- Sadykova, D.; James, A.P. Quality assessment metrics for edge detection and edge-aware filtering: A tutorial review. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2366–2369. [Google Scholar]

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698. [Google Scholar] [CrossRef]

- Flusser, J. On the independence of rotation moment invariants. Pattern Recognit. 2000, 33, 1405–1410. [Google Scholar] [CrossRef]

- Yendrikhovskij, S.; Blommaert, F.J.; de Ridder, H. Optimizing color reproduction of natural images. In Proceedings of the Color and Imaging Conference, Scottsdale, AZ, USA, 17–20 November 1998; Society for Imaging Science and Technology: Painted Post, NY, USA, 1998; Volume 1998, pp. 140–145. [Google Scholar]

- Tang, X.; Luo, W.; Wang, X. Content-based photo quality assessment. IEEE Trans. Multimed. 2013, 15, 1930–1943. [Google Scholar] [CrossRef]

- Larson, E.C.; Chandler, D.M. Most apparent distortion: Full-reference image quality assessment and the role of strategy. J. Electron. Imaging 2010, 19, 011006. [Google Scholar]

- Sun, W.; Zhou, F.; Liao, Q. MDID: A multiply distorted image database for image quality assessment. Pattern Recognit. 2017, 61, 153–168. [Google Scholar] [CrossRef]

- Lin, H.; Hosu, V.; Saupe, D. KonIQ-10K: Towards an ecologically valid and large-scale IQA database. arXiv 2018, arXiv:1803.08489. [Google Scholar]

- Thomee, B.; Shamma, D.A.; Friedland, G.; Elizalde, B.; Ni, K.; Poland, D.; Borth, D.; Li, L.J. YFCC100M: The new data in multimedia research. Commun. ACM 2016, 59, 64–73. [Google Scholar] [CrossRef]

- Seeger, M. Gaussian processes for machine learning. Int. J. Neural Syst. 2004, 14, 69–106. [Google Scholar] [CrossRef] [PubMed]

- Drucker, H.; Burges, C.J.; Kaufman, L.; Smola, A.; Vapnik, V. Support vector regression machines. Adv. Neural Inf. Process. Syst. 1996, 9, 155–161. [Google Scholar]

- Loh, W.Y. Classification and regression trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23. [Google Scholar] [CrossRef]

- Cutler, A.; Cutler, D.R.; Stevens, J.R. Random forests. In Ensemble Machine Learning; Springer: Berlin/Heidelberg, Germany, 2012; pp. 157–175. [Google Scholar]

- Saad, M.A.; Bovik, A.C.; Charrier, C. Blind image quality assessment: A natural scene statistics approach in the DCT domain. IEEE Trans. Image Process. 2012, 21, 3339–3352. [Google Scholar] [CrossRef]

- Min, X.; Zhai, G.; Gu, K.; Liu, Y.; Yang, X. Blind image quality estimation via distortion aggravation. IEEE Trans. Broadcast. 2018, 64, 508–517. [Google Scholar] [CrossRef]

- Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2011, 20, 3350–3364. [Google Scholar] [CrossRef]

- Chen, X.; Zhang, Q.; Lin, M.; Yang, G.; He, C. No-reference color image quality assessment: From entropy to perceptual quality. EURASIP J. Image Video Process. 2019, 2019, 77. [Google Scholar] [CrossRef]

- Xue, W.; Mou, X.; Zhang, L.; Bovik, A.C.; Feng, X. Blind image quality assessment using joint statistics of gradient magnitude and Laplacian features. IEEE Trans. Image Process. 2014, 23, 4850–4862. [Google Scholar] [CrossRef] [PubMed]

- Ou, F.Z.; Wang, Y.G.; Zhu, G. A novel blind image quality assessment method based on refined natural scene statistics. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1004–1008. [Google Scholar]

- Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).