Abstract

Image super-resolution (SR) models based on generative adversarial networks (GANs) often restore facial details unnaturally and leave local regions blurred. This paper proposes an improved GAN-based model to address these issues. First, a Multi-scale Hybrid Attention Residual Block (MHARB) is designed, which dynamically enhances feature representation in critical face regions through dual-branch convolution and channel-spatial attention. Second, an Edge-guided Enhancement Block (EEB) is introduced, which generates adaptive detail residuals by combining edge masks and channel attention to recover high-frequency textures accurately. Third, a multi-scale discriminator with a weighted sub-discriminator loss is developed to balance the quality of global structure and local detail. Finally, a phase-wise training strategy that dynamically adjusts the learning rate (Lr) and the loss-function weights is implemented to improve the realism of super-resolved face images. Experiments on the CelebA-HQ dataset show that the proposed model achieves a PSNR of 23.35 dB, an SSIM of 0.7424, and an LPIPS of 24.86, outperforming classical models and delivering superior visual quality in high-frequency regions. Notably, the model also surpasses SwinIR (PSNR: 23.28 dB → 23.35 dB; SSIM: 0.7340 → 0.7424; LPIPS: 30.48 → 24.86), validating the effectiveness of the improved model and the training strategy in preserving facial details.

1. Introduction

Face super-resolution (FSR) focuses on synthesizing high-resolution (HR) facial textures from low-resolution (LR) inputs. This task has gained considerable attention in computer vision research to address real-world challenges [1,2,3]. This field holds significant application value in security surveillance [4], medical image enhancement [5], and remote sensing image analysis [6]. However, due to the unique anatomical structure of human faces and the high sensitivity of the human visual system to facial details, FSR presents greater technical challenges compared to general image super-resolution. These challenges primarily include the precise restoration of high-frequency texture details and the maintenance of natural facial structure coherence. The inherent loss of high-frequency information in LR images further impedes conventional methods from generating outputs that meet human visual perception standards for high-quality reconstruction.

Early image SR methods relied primarily on interpolation algorithms [7,8,9] or reconstruction-based approaches [10,11], but these techniques often produced local blurring and artifacts at high magnification factors. The advent of the convolutional neural network (CNN) [12] significantly advanced SR technology. Dong et al. [13] pioneered SRCNN, an end-to-end model that uses a CNN to map LR inputs to HR outputs; however, it struggled to capture intricate image details. To accelerate reconstruction, FSRCNN [14] employed deconvolution and parameter-sharing strategies. In 2016, following the introduction of ResNet [15], Kim et al. [16] proposed the Very Deep Super-Resolution (VDSR) model, which enhanced feature extraction by increasing network depth and accelerated training via residual learning. However, it demands substantial computational resources and has limited ability to recover intricate textures. Lim et al. [17] introduced the EDSR model, which leverages deep residual networks for SR, but its large parameter count and high computational cost limit practical deployment. Subsequently, RCAN [18] incorporated the channel attention mechanism [19] to enhance feature representation, achieving breakthrough performance in PSNR. Nevertheless, methods optimized via mean squared error (MSE) generally produce over-smoothed images.

To enhance image SR quality, Ledig et al. pioneered the integration of generative adversarial network (GAN) [20] into SR tasks by proposing the SRGAN model [21]. ESRGAN [22] further optimized network architecture through Residual-in-Residual Dense Blocks (RRDB) and a relativistic average discriminator, significantly improving detail recovery capabilities. Park et al. proposed the SRFeat [23] model, which incorporates two discriminators to mitigate high-frequency noise during the super-resolution process. The RankSRGAN [24] model proposed by Zhang et al. performs super-resolution reconstruction by leveraging image contrast information. In 2020, Shang et al. introduced the RFB-ESRGAN model [25], which incorporates a receptive field block to enhance feature extraction capabilities. The SPSR model [26] proposed by Ma et al. integrates a gradient-based branch structure into the GAN framework to alleviate geometric distortions in image reconstruction. Wang et al. extended this framework by incorporating RRDBs and skip connections to develop Real-ESRGAN [27], enabling end-to-end image inpainting and achieving superior SR performance. Nevertheless, GAN-based methods still face challenges such as training instability, geometric distortions (e.g., facial misalignment), and high-frequency detail degradation. Furthermore, existing approaches typically assume known degradation processes (e.g., Bicubic), whereas real-world LR images exhibit complex degradation patterns, leading to diminished generalization performance. Some studies have attempted to incorporate facial priors for localized detail enhancement [28]. However, errors in prior estimation directly propagate into the SR reconstruction process, compromising model robustness.

To overcome these limitations, we introduce an enhanced GAN for FSR incorporating Multi-scale Hybrid Attention Residual Blocks (MHARB) and an Edge-guided Enhancement Block (EEB). Key contributions are as follows:

(1) We propose a multi-scale hybrid residual block with attention mechanisms (MHARB), which incorporates dual-branch convolution and channel-spatial attention (CBAM). CBAM dynamically adjusts feature weights through channel-wise and spatial attention, thereby improving the feature extraction capability.

(2) To address high-frequency blurring in critical regions (e.g., eyes and teeth), we design an Edge-guided Enhancement Block (EEB). This module first generates a spatial weight map via an edge detection convolution layer (activating edge regions) and then employs channel attention and a Tanh activation function to derive an adaptive detail fusion strategy. This approach achieves localized enhancement of high-frequency regions while mitigating gradient explosion risks.

(3) We propose an improved multi-scale discriminator architecture. It processes inputs at three distinct scales and employs a weighted sub-discriminator loss that forces the generator to preserve both global consistency and local texture authenticity.

(4) The training process adopts a phased, fine-grained training mechanism. The generator’s Lr is dynamically adjusted using a decreasing strategy (initially large, then small). Simultaneously, the weights of the L1 loss and the multi-scale adversarial loss are adjusted phase by phase to balance the generation quality of face images with model stability.

2. Related Work

2.1. GAN

GAN, proposed by Goodfellow et al. in 2014 [20], revolutionized generative modeling by fitting real data distributions through adversarial training. This framework introduced a novel paradigm of “generator-discriminator” competition, thereby redefining the design principles of traditional generative models.

The original GAN has only two sub-networks: a generator (G) and a discriminator (D). The generator takes random noise $z$ as input and produces synthesized images $G(z)$, aiming to learn a mapping that approximates the target data distribution $p_{\mathrm{data}}(x)$. Conversely, the discriminator receives either real images $x$ or generated images $G(z)$ as input and outputs a discriminative probability. Adversarial training optimizes the discriminator’s ability to distinguish accurately between real and generated images. Mathematically, GAN is formulated as follows:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right] \qquad (1)$$

During model training, the generator is initially fixed while the discriminator parameters are updated to maximize $V(D, G)$. Subsequently, the discriminator is held constant as the generator parameters are optimized to minimize $V(D, G)$. This adversarial process is repeated iteratively until the distribution of generated images converges to or approximates that of the real images.
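The alternating objective can be checked numerically. The following is a minimal sketch, assuming the standard GAN value function V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))] estimated over a small batch; the function name `gan_value` and the probability values are hypothetical and chosen only for illustration.

```python
import math


def gan_value(d_real, d_fake):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real images, each in (0, 1)
    d_fake: discriminator outputs on generated images, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Hypothetical discriminator outputs (not taken from the paper).
confident = gan_value(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])  # D separates well
fooled = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])     # D cannot tell

print(confident, fooled)
```

A discriminator that separates real from generated samples yields a higher value than one that outputs 0.5 everywhere, where the value equals −log 4. The discriminator update pushes toward the former, and the generator update works against it.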

2.2. Attention Mechanisms

2.2.1. Spatial Attention

Spatial attention [29] dynamically adjusts the weights of spatial location information in feature maps to enhance the focus on critical regions. Assuming the input feature map , the spatial attention weight matrix is formulated as follows:

where and denote Average-Pooling and Max-Pooling, respectively, while represents a convolution layer with a kernel size. The derived feature map is represented as:

where denotes element-wise multiplication. During the SR reconstruction of face images, spatial attention enhances feature extraction capabilities in critical regions (e.g., eyes and mouth) while suppressing background noise.
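For reference, the spatial attention described above can be sketched in the CBAM-style form that matches the operations named in the text (channel-wise average pooling and max pooling, a convolution, and element-wise multiplication). The symbols $F$, $M_s$, $f^{k \times k}$, and the sigmoid $\sigma$ are assumed notation, the equation numbers are inferred from the later references to Formulas (6) to (9), and the kernel size $k$ is deliberately left symbolic.

```latex
\begin{align}
M_s(F) &= \sigma\!\left( f^{k \times k}\!\left( \left[ \mathrm{AvgPool}(F);\ \mathrm{MaxPool}(F) \right] \right) \right),
  \qquad F \in \mathbb{R}^{C \times H \times W},\quad M_s(F) \in \mathbb{R}^{1 \times H \times W} \tag{2} \\
F' &= M_s(F) \otimes F \tag{3}
\end{align}
```

Here both pooling operations act along the channel axis, so their concatenation has two channels, and $M_s(F)$ is broadcast over the $C$ channels of $F$ in the product.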

2.2.2. Channel Attention

Channel attention models inter-channel relationships to adaptively adjust the weights of individual channels. Given an input feature map , the mathematical formulation of the channel attention weight vector is expressed as follows:

where and denote the parameters of the Fully Connected Layers. And the output is expressed as:

During face image reconstruction, channel attention enhances the response to high-frequency detail channels (e.g., eyes and teeth) by emphasizing critical regions.
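One common form consistent with the two fully connected layers mentioned above is the squeeze-and-excitation style weighting sketched below. The symbols $W_0$, $W_1$, the ReLU $\delta$, the reduction ratio $r$, and the use of global average pooling alone are assumptions; CBAM-style variants additionally pass a max-pooled descriptor through the same two layers and sum the results before the sigmoid.

```latex
\begin{align}
M_c(F) &= \sigma\!\left( W_1\, \delta\!\left( W_0\, \mathrm{AvgPool}(F) \right) \right),
  \qquad W_0 \in \mathbb{R}^{\frac{C}{r} \times C},\quad W_1 \in \mathbb{R}^{C \times \frac{C}{r}} \tag{4} \\
F'' &= M_c(F) \otimes F \tag{5}
\end{align}
```

In this sketch $\mathrm{AvgPool}(F) \in \mathbb{R}^{C}$ is the global spatial average of each channel, and $M_c(F) \in \mathbb{R}^{C}$ is broadcast over the spatial dimensions in the product.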

2.2.3. Hybrid Attention Mechanism

The hybrid attention mechanism typically combines spatial and channel attention architectures in either serial or parallel configurations to jointly model spatial localization and channel-wise feature importance. A representative implementation, CBAM [30], processes input feature maps through a two-stage pipeline: initially applying channel attention to emphasize critical channels, followed by spatial attention to refine localized features, ultimately yielding refined feature representations.

3. Proposed Method

3.1. Network Architecture

Our proposed SR GAN comprises a generator and a multi-scale discriminator. To address the challenges of unnatural facial detail restoration and local blurring in existing GAN-based face super-resolution (FSR) methods, our model introduces three key architectural innovations:

(1) The multi-scale hybrid attention residual block (MHARB) can dynamically enhance key facial regions (such as eyes and mouth) while suppressing irrelevant background noise.

(2) The edge-guided enhancement block (EEB) can adaptively generate high-frequency detail, effectively restoring sharp textures in contour areas (such as teeth, hair) without introducing artifacts.

(3) The multi-scale discriminator with weighted loss forces the generator to balance the consistency of the global structure and the authenticity of local details through the weighted sub-discriminator loss.

3.1.1. Generator Design

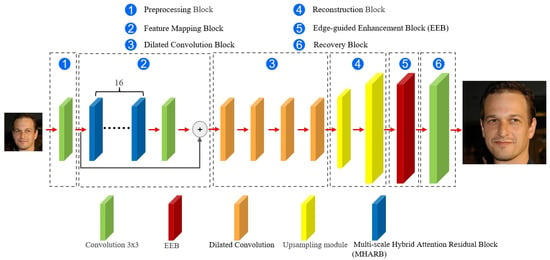

The generator employs a cascaded architecture to progressively recover high-frequency details through Preprocessing Block, Feature Mapping Block, Dilated Convolution Block, Reconstruction Block, Edge-guided Enhancement Block (EEB), and Recovery Block, as illustrated in Figure 1.

Figure 1.

Architecture of the generator.

(1) Preprocessing Block

The LR face image is first passed through a 3 × 3 convolutional layer. A LeakyReLU activation function is then applied to extract initial shallow features.

(2) Feature Mapping Block

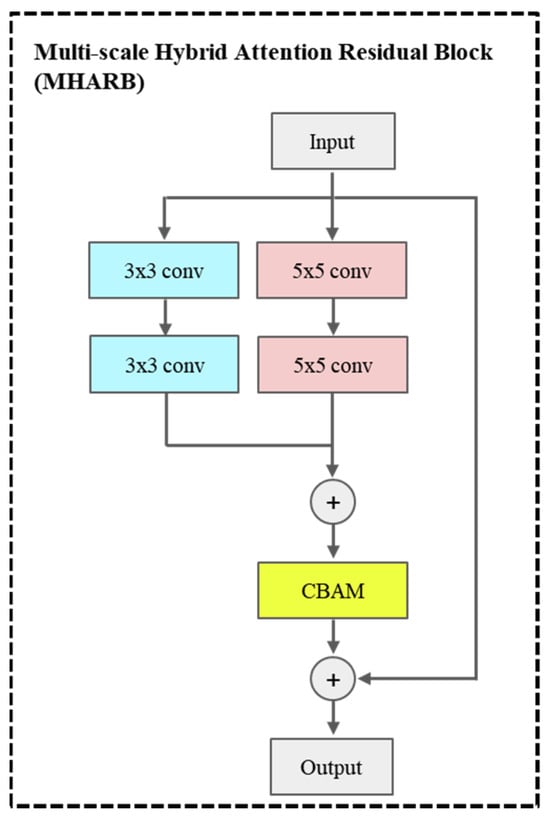

Feature Mapping Block uses 16 MHARBs and adds a residual link. The improved residual block, namely, MHARB, incorporates two parallel convolutional pathways with kernel sizes of 3 × 3 and 5 × 5, as illustrated in Figure 2. The outputs of the dual pathways are combined through element-wise summation and subsequently calibrated by the hybrid attention module CBAM. CBAM sequentially performs channel attention and spatial attention to generate a spatial-channel joint weight map, enhancing responses in critical regions. Assuming the input image is denoted as , the mathematical formulation is expressed as follows:

where denotes the convolution operation at the i-th layer. The convolution kernel in Formula (6) is 3 × 3, and both padding and stride are 1. While the convolution kernel in Formula (7) is 5 × 5 in size, its padding is 2, and the stride size remains 1. Their number of output channels is 64. For Equations (8) and (9), no convolution operation is performed. Therefore, the number of channels in the feature map is 64 for both.
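The padding choices above keep both branches at the same spatial size, which is what allows their outputs to be summed element-wise. A quick check using the standard convolution output-size formula follows; the helper name `conv_out_size` and the 32-pixel input size are assumptions for illustration.

```python
def conv_out_size(n, kernel, padding, stride=1, dilation=1):
    """Output length along one axis for a standard 2-D convolution."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (n + 2 * padding - effective_kernel) // stride + 1


size = 32  # hypothetical spatial size of the input feature map

branch_3x3 = conv_out_size(size, kernel=3, padding=1, stride=1)
branch_5x5 = conv_out_size(size, kernel=5, padding=2, stride=1)

# Both branches preserve the input size, so they can be added directly.
print(branch_3x3, branch_5x5)
```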

The residual block’s output is derived as:

The generator incorporates 16 stacked residual blocks to progressively deepen feature representations.
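The MHARB computation described above can be summarized as follows. This is a sketch under assumed notation ($X$ for the block input, $\mathrm{Conv}_i$ for the convolution of the i-th branch, $Y$ for the block output); the equation numbers follow the references to Formulas (6) to (9) in the text, and the placement of any activation functions is not specified here.

```latex
\begin{align}
F_1 &= \mathrm{Conv}_1(X) && \text{($3 \times 3$ kernel, padding 1, stride 1, 64 channels)} \tag{6} \\
F_2 &= \mathrm{Conv}_2(X) && \text{($5 \times 5$ kernel, padding 2, stride 1, 64 channels)} \tag{7} \\
F_{\mathrm{sum}} &= F_1 + F_2 && \text{(element-wise summation)} \tag{8} \\
F_{\mathrm{att}} &= \mathrm{CBAM}(F_{\mathrm{sum}}) && \text{(channel attention, then spatial attention)} \tag{9} \\
Y &= X + F_{\mathrm{att}} && \text{(residual connection)} \tag{10}
\end{align}
```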

(3) Dilated Convolution Block

The architecture cascades four groups of dilated convolutional layers with progressively expanding dilation rates (). This design captures long-range dependencies in face images through incrementally enlarged receptive fields, thereby mitigating detail loss.
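The effect of cascading dilated convolutions on the receptive field can be worked out directly. The sketch below assumes stride-1 layers with 3 × 3 kernels and uses the dilation rates (1, 2, 4, 8) purely as a hypothetical example; neither the kernel size nor these rates are taken from the text.

```python
def receptive_field(dilations, kernel=3):
    """Receptive field (per axis) of a stack of stride-1 dilated convolutions."""
    rf = 1
    for d in dilations:
        # Each layer widens the field by (kernel - 1) * dilation pixels.
        rf += (kernel - 1) * d
    return rf


# Hypothetical rates for illustration only.
dilated = receptive_field((1, 2, 4, 8))
plain = receptive_field((1, 1, 1, 1))

print(dilated, plain)
```

With the same number of layers and parameters, the dilated stack in this example covers a much wider neighborhood than four ordinary convolutions, which is the mechanism behind the long-range dependencies mentioned above.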

(4) Reconstruction Block

This block employs two groups of upsampling units to upscale the feature map resolution to .

Figure 2.

Improved residual block.

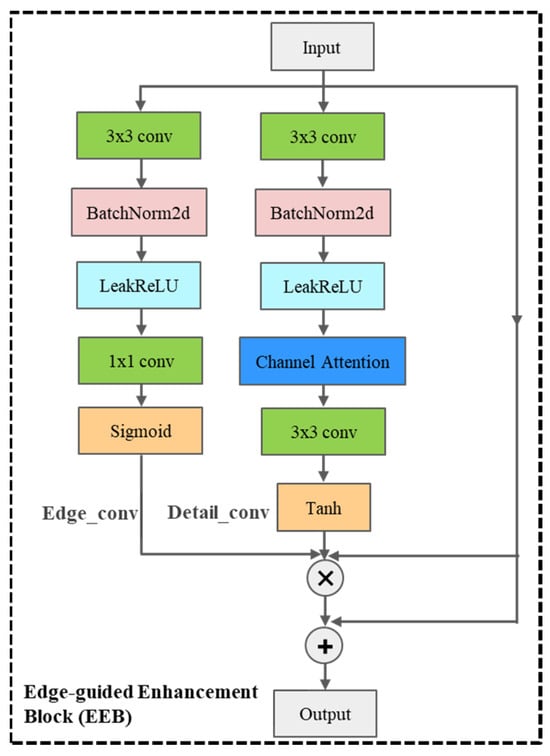

(5) Edge-guided Enhancement Block (EEB)

The EEB incorporates an edge detection branch and a detail enhancement branch, structured as two parallel pathways, as illustrated in Figure 3.

Figure 3.

Edge-guided Enhancement Block.

The two branches work together to restore high-frequency texture details through an adaptive fusion strategy. The edge detection branch generates a mask of the edge regions (such as facial contours, eyes, and teeth), while the detail enhancement branch controls the enhancement amplitude of high-frequency details.

Edge Detection Branch: A 1 × 1 convolution compresses the input feature map to a single channel, which is then passed through a Sigmoid function to generate an edge mask. Here, high-frequency regions correspond to contours.

Detail Enhancement Branch: A 3 × 3 convolutional layer and channel attention module collaboratively generate channel-wise weights, which are subsequently activated via the Tanh function to output enhancement coefficients for high-frequency regions, emphasizing fine structural details.

The edge mask and the detail enhancement coefficients are combined to enhance high-frequency details in edge regions, with the formulation expressed as:

where and denote the edge mask and detail enhancement coefficient, respectively. The former controls the spatial extent of enhancement (i.e., where to amplify), while the latter regulates the intensity of amplification (i.e., how much to amplify). The symbol denotes element-wise multiplication between tensors.
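One plausible reading of this fusion is sketched below on a tiny single-channel map in plain Python. The residual form `out = x + x * mask * alpha`, the function names, and the toy values are assumptions for illustration and are not the authors' exact formulation.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def eeb_fuse(feature, edge_logits, detail_logit):
    """Assumed fusion: out = x + x * sigmoid(edge) * tanh(detail).

    feature:      2-D list, one channel of the input feature map
    edge_logits:  2-D list, pre-sigmoid output of the edge branch (where to amplify)
    detail_logit: scalar, pre-tanh channel weight (how much to amplify)
    """
    alpha = math.tanh(detail_logit)  # enhancement coefficient in (-1, 1)
    fused = []
    for f_row, e_row in zip(feature, edge_logits):
        fused.append([f + f * sigmoid(e) * alpha for f, e in zip(f_row, e_row)])
    return fused


# Toy example: the left pixel is flat (mask near 0), the right pixel is an edge (mask near 1).
out = eeb_fuse(feature=[[1.0, 2.0]], edge_logits=[[-20.0, 20.0]], detail_logit=0.5)
print(out)
```

Because the Sigmoid mask lies in (0, 1) and the Tanh coefficient lies in (−1, 1), the added residual in this sketch can never exceed the magnitude of the feature itself, which illustrates how bounded activations keep the enhancement from growing without limit.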

(6) Recovery Block

This block has only one convolutional layer. The feature map is reconstructed as a 3-channel HR image after a 3 × 3 convolutional layer.

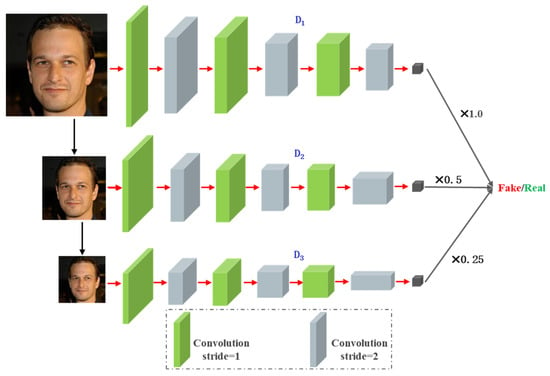

3.1.2. Discriminator Design

The multi-scale discriminator comprises three sub-discriminators () structured hierarchically to process face images at resolutions of , respectively, as shown in Figure 4.

Figure 4.

Architecture of the discriminator.

Each sub-discriminator adopts a similar infrastructure, and they all use three downsamplings with a stride of 2. After progressive downsampling, the input images are fed into discriminators for multi-scale analysis. The outputs of these multi-scale discriminators are combined through a weighted summation with weights , enabling comprehensive supervision of generator training. This design forces the generator to approximate the real data distribution across both global structures (emphasized by ) and local textures (emphasized by ).

3.2. Loss Functions

The discriminator comprises three sub-discriminators , where each sub-discriminator corresponds to an input face image downscaled by a factor of relative to the original resolution. The generator’s multi-scale adversarial loss aims to deceive all discriminators into classifying their inputs as “real,” whereas the discriminator’s multi-scale adversarial loss strives to distinguish between the real and generated images. Consequently, the adversarial losses for both the generator and discriminators are formally defined as follows:

where represents the LR input image, denotes the original HR image, and is the weight coefficient for the k-th sub-discriminator. The weight coefficients for different scales are defined according to the following rules:

Consequently, the weight coefficients are assigned as , where higher-resolution discriminators receive greater weights, while lower-resolution ones exhibit progressively decreasing weights.
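The weighted combination of sub-discriminator losses can be sketched as follows. The binary cross-entropy form of the adversarial loss, the scalar scores standing in for each sub-discriminator's output, and the weights (1.0, 0.5, 0.25) are all assumptions for illustration; only the ordering, with larger weights for higher-resolution discriminators, follows the text.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one probability p in (0, 1)."""
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def generator_adv_loss(fake_scores, weights):
    """Generator wants every sub-discriminator to label its output as real (1)."""
    return sum(w * bce(p, 1.0) for p, w in zip(fake_scores, weights))


def discriminator_adv_loss(real_scores, fake_scores, weights):
    """Each sub-discriminator should score real inputs as 1 and generated ones as 0."""
    return sum(
        w * (bce(r, 1.0) + bce(f, 0.0))
        for r, f, w in zip(real_scores, fake_scores, weights)
    )


weights = (1.0, 0.5, 0.25)  # hypothetical; ordered from highest to lowest resolution

g_loss = generator_adv_loss(fake_scores=[0.5, 0.5, 0.5], weights=weights)
d_loss = discriminator_adv_loss(
    real_scores=[0.9, 0.8, 0.7], fake_scores=[0.1, 0.2, 0.3], weights=weights
)
print(g_loss, d_loss)
```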

In practical training, the generator is augmented with a perceptual loss function and an L1 loss function to enable joint optimization:

In the above equation, are designated as the weighting coefficients for the multi-scale adversarial loss, perceptual loss, and L1 loss function, respectively.
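The joint generator objective described above has the form of a weighted sum of the three terms. The sketch below uses assumed symbol names ($\lambda_{\mathrm{adv}}$, $\lambda_{\mathrm{per}}$, $\lambda_{1}$ for the weights; $x$ for the LR input and $y$ for the HR target); the exact definition of the perceptual term is not reproduced here.

```latex
\begin{align}
\mathcal{L}_{G} &= \lambda_{\mathrm{adv}}\, \mathcal{L}_{\mathrm{adv}}^{G}
  + \lambda_{\mathrm{per}}\, \mathcal{L}_{\mathrm{per}}
  + \lambda_{1}\, \mathcal{L}_{1}, \\
\mathcal{L}_{1} &= \mathbb{E}_{(x,\,y)}\left[ \left\lVert G(x) - y \right\rVert_{1} \right]
\end{align}
```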

The total loss of the discriminator is defined as:

4. Experiments and Results

4.1. Datasets and Experimental Settings

The CelebA-HQ dataset is a publicly available dataset for computer vision research, comprising 30,000 face images with varying resolutions. The dataset includes images at five distinct scales: , catering to diverse application requirements. For this study, we curated a subset of 15,000 images with resolutions of and , with images designated as the test set.

The model was trained on a server equipped with eight NVIDIA GeForce RTX 3090 Ti GPUs (NVIDIA, Santa Clara, CA, USA), running Ubuntu 18.04 and PyTorch 2.1.0. All other hyperparameters are listed in Table 1.

Table 1.

Hyperparameters of the training.

During the initial phase of generator training, a larger learning rate (Lr) is employed to move rapidly toward a minimum of the loss function. Because face image SR requires the restoration of high-frequency details, the Lr is progressively reduced during the later training stages to prevent parameter oscillations near the optimum, thereby ensuring stable convergence. The Lr for the generator is defined as follows:
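A minimal sketch of a decreasing, phase-wise schedule of this kind is given below. The base rate, the milestone epochs, and the decay factor are hypothetical values chosen for illustration and are not the settings used in the experiments.

```python
def generator_lr(epoch, base_lr=2e-4, milestones=(50, 100), factor=0.5):
    """Piecewise-constant Lr: multiply by `factor` at each milestone epoch reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * factor ** drops


# Large steps early, smaller steps later (all values are assumptions).
schedule = [generator_lr(e) for e in (0, 49, 50, 99, 100, 150)]
print(schedule)
```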

The generator employs a loss function that integrates three components: a perceptual loss, an L1 loss, and a multi-scale adversarial loss. Among these, L1 loss emphasizes pixel-level errors; however, insufficient weight allocation to this term may fail to adequately constrain the generator’s output. Conversely, the multi-scale adversarial loss drives the generator to produce realistic facial images, but excessive weighting may induce color artifacts in localized regions. To address this, the training process dynamically adjusts loss weights through a phase-wise focus on distinct objectives (structural fidelity followed by detail refinement), thereby balancing L1 and multi-scale adversarial loss contributions for high-fidelity facial reconstruction. The mathematical formulations are defined as follows:

for , , and . As epochs exceed , progressively increases while decreases during training.
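The phase-wise weight adjustment can be sketched in the same way. In the example below the weights stay fixed up to a switch epoch, after which the L1 weight rises and the adversarial weight falls linearly, following the direction of adjustment reported in the discussion of Table 5. The switch epoch, the total number of epochs, and the start and end values are hypothetical.

```python
def loss_weights(epoch, switch_epoch=50, total_epochs=100,
                 l1_range=(1.0, 2.0), adv_range=(0.01, 0.005)):
    """Return (l1_weight, adv_weight) for the given epoch.

    Phase 1 (epoch <= switch_epoch): constant initial weights.
    Phase 2 (epoch >  switch_epoch): linear ramp toward the final weights.
    """
    if epoch <= switch_epoch:
        return l1_range[0], adv_range[0]
    t = min(1.0, (epoch - switch_epoch) / (total_epochs - switch_epoch))
    l1_w = l1_range[0] + t * (l1_range[1] - l1_range[0])
    adv_w = adv_range[0] + t * (adv_range[1] - adv_range[0])
    return l1_w, adv_w


early = loss_weights(10)
middle = loss_weights(75)
late = loss_weights(100)
print(early, middle, late)
```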

4.2. Comparative Experiments

To validate the SR performance on face images, our proposed model was compared against classical approaches, including Bicubic interpolation, SRCNN, EDSR, SRGAN, ESRGAN, Real-ESRGAN, and SwinIR. All baseline models were retrained from scratch on the same dataset without pretrained weights. Their architectures followed the specifications in the original papers, with minor hyperparameter adjustments to accommodate hardware constraints on our server (e.g., GPU memory limitations). Quantitative evaluation used the widely adopted PSNR, SSIM [31], and LPIPS [32] metrics. Higher PSNR and SSIM values indicate greater similarity between the super-resolved images and the original HR images, whereas lower LPIPS values indicate greater perceptual similarity.
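As a concrete reference for the first metric, PSNR is computed from the mean squared error between the HR image and the super-resolved image as 10 · log10(MAX² / MSE). The sketch below works on small grayscale images stored as nested lists; the function name `psnr`, the 8-bit peak value of 255, and the toy pixel values are assumptions for illustration.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    mse = sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


hr = [[10, 200], [128, 64]]
sr_close = [[11, 199], [129, 63]]   # every pixel off by 1
sr_far = [[20, 190], [138, 54]]     # every pixel off by 10

print(psnr(hr, sr_close), psnr(hr, sr_far))
```

A uniform error of one gray level gives an MSE of 1, so the PSNR equals 20 · log10(255); a tenfold larger error lowers the score by exactly 20 dB.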

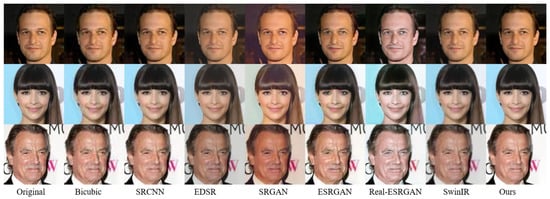

Figure 5 presents a visual comparison of SR results for face images. The first column shows the original HR images, followed by the super-resolved outputs of seven benchmark models: Bicubic interpolation, SRCNN, EDSR, SRGAN, ESRGAN, Real-ESRGAN, and SwinIR. The final column displays the proposed model’s results.

Figure 5.

Visual comparison of super-resolved images.

Bicubic interpolation, a traditional interpolation-based method, generates new pixel values using a 16-pixel neighborhood. While computationally efficient, it introduces significant distortions in high-frequency face regions and yields the lowest performance among all methods. SRCNN, the first CNN designed for SR, employs only three convolutional layers, which limits its receptive field and its restoration capability for complex face textures. EDSR incorporates a deep residual architecture, substantially improving reconstruction quality. However, its high computational complexity and tendency to over-smooth super-resolved face images remain critical limitations. SRGAN pioneered the integration of GAN into image SR, demonstrating enhanced performance relative to conventional interpolation-based approaches. However, its generated high-frequency textures exhibit overly stylized and repetitive patterns, with noticeable blurring around face regions such as the mouth. The ESRGAN model enhances the generator architecture through improved feature fusion mechanisms, yet it still suffers from structural distortions in complex face images. Real-ESRGAN employs spectral normalization and a U-Net architecture to boost discriminative capability, but the absence of perceptual loss constraints leads to blurred details in critical regions like the eyes. SwinIR introduces the Swin Transformer into the super-resolution task, improving computational efficiency through “window-level” self-attention while maintaining good modeling ability for local and global information. Its results are superior to those of the preceding methods. In contrast, our proposed model delivers the best reconstruction of both global structures and fine details, in line with human visual perception.

For a rigorous comparison, this study quantitatively evaluates our proposed model against the seven baseline models. To test stability, all models were evaluated independently on three different test sets, and the means and standard deviations of PSNR, SSIM, and LPIPS are reported in Table 2. Among these, Bicubic interpolation exhibits the poorest scores, consistent with the qualitative comparisons shown in Figure 5. As the first GAN-based SR model, SRGAN performs relatively poorly because of its simple architecture: it surpasses only the earlier baseline methods and trails more advanced GAN-based approaches such as ESRGAN. Our improvements to the generator and discriminator, together with the phased and refined training strategy, yield the highest PSNR and SSIM values and the lowest LPIPS among all evaluated models. In addition, our method shows the smallest standard deviations of PSNR, SSIM, and LPIPS across the three independent tests, indicating the best stability.
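The mean and standard deviation over three independent tests can be computed as in the sketch below. The three PSNR values are made up for illustration and are not results from Table 2, and the use of the sample standard deviation (dividing by n − 1) is an assumption, since the text does not state which estimator was used.

```python
import statistics

# Hypothetical PSNR values (dB) from three independent test sets.
psnr_runs = [23.1, 23.4, 23.7]

mean_psnr = statistics.mean(psnr_runs)
std_psnr = statistics.stdev(psnr_runs)  # sample standard deviation (n - 1)

print(f"{mean_psnr:.2f} ± {std_psnr:.2f} dB")
```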

Table 2.

Quantitative comparison of super-resolved images.

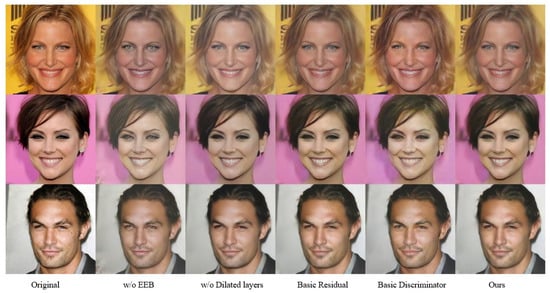

This study implemented four key improvements to the model architecture: the incorporation of an Edge-guided Enhancement Block (EEB) and dilated convolution block, the redesign of standard residual blocks into Multi-scale Hybrid Attention Residual Blocks (MHARB), and the enhancement of the standard discriminator to a multi-scale discriminator. To systematically evaluate the contribution of each component in face image SR tasks, an ablation study was conducted. Qualitative comparisons of the ablation study are visualized in Figure 6, with quantitative metrics provided in Table 3.

Figure 6.

Visual comparison of ablation experiments.

Table 3.

Quantitative comparison of ablation experiments.

The EEB employs a detail-adaptive enhancement strategy to reduce noise while restoring high-frequency textures. The experimental results show that removing the EEB leads to severe distortions in high-frequency details (e.g., ocular regions) of complex face images, causing visual discomfort. The multi-scale discriminator, through feature extraction across multiple scales, balances the generation quality of global structures and local details, thereby better constraining the generator to produce realistic face images. Its removal results in blurring artifacts in regions such as the eyes. The MHARB enhances the generator’s modeling capacity for critical facial features via dual-branch convolution fused with CBAM. Accordingly, removing the dilated convolution block or reverting to the original residual blocks lowers the PSNR and SSIM values.

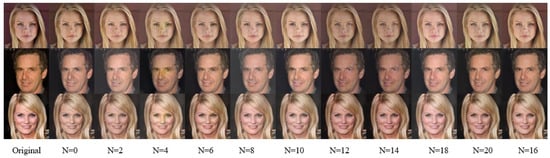

The generator incorporates 16 improved residual blocks following the standard convolutional layers. This study assesses how the number of residual blocks, N, affects SR performance through comparative experiments. The results in Figure 7 and Table 4 show that increasing N gradually improves global color fidelity and texture sharpness in super-resolved face images. However, when N exceeds 16, the quantitative metrics decline instead of improving. Furthermore, excessive residual blocks lead to unacceptably high computational overhead. Therefore, N = 16 is selected as the optimal configuration.

Figure 7.

Visual comparison of residual block quantity variations.

Table 4.

Quantitative comparison of residual block quantity variations.

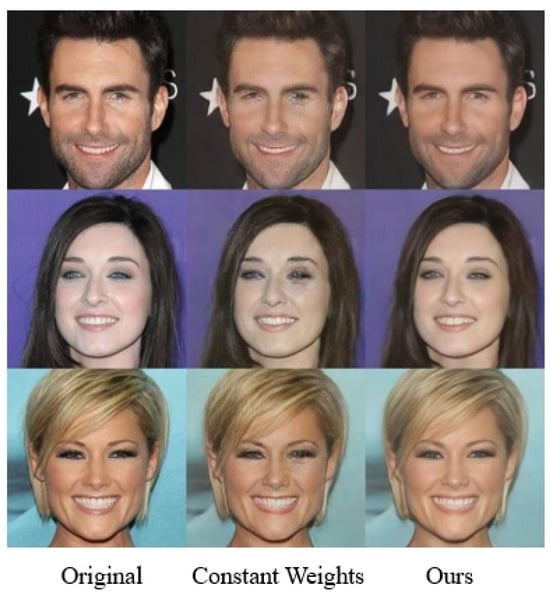

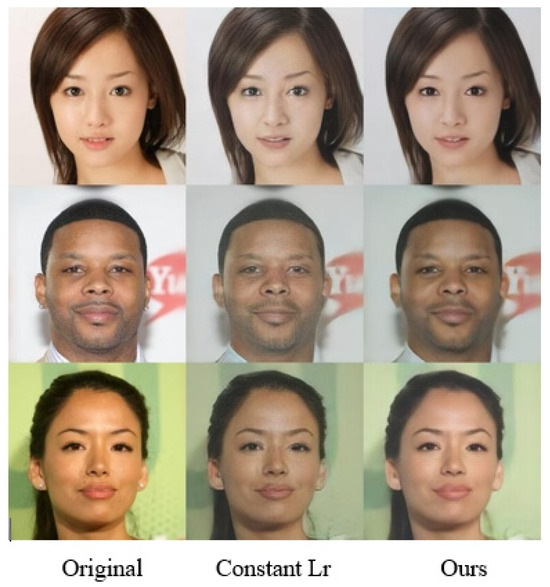

During model training, the weights of the multi-scale adversarial loss function and L1 loss function were dynamically adjusted. By progressively reducing the multi-scale adversarial loss’s weight and increasing the L1 loss’s weight, the reconstruction of high-frequency components in face images was enhanced. In Figure 8, the eyes in the second column of facial images exhibit noticeable scar-like artifacts, showing significant discrepancies compared to the original HR counterparts. Table 5 illustrates the performance improvements in PSNR, SSIM, and LPIPS. The results indicate an enhancement of 1.56 dB for PSNR, 0.0398 for SSIM, and 2.1 for LPIPS.

Figure 8.

Visual comparison of dynamic weight adjustment in loss functions.

Table 5.

Quantitative comparison of dynamic weight adjustment in loss functions.

Furthermore, during model training, a progressive reduction strategy was applied to the generator’s Lr, achieving superior SR results. Figure 9 demonstrates that the decreasing Lr strategy better preserves texture details in reconstructed images. Table 6 illustrates the performance improvements. The PSNR shows an enhancement of 2.67 dB; LPIPS is decreased by 0.49, while the SSIM rises by 0.0306, confirming the effectiveness of the proposed Lr adaptation.

Figure 9.

Visual comparison of dynamic adjustment in Lr.

Table 6.

Quantitative comparison of dynamic adjustment in Lr.

To further demonstrate the generalization ability of the proposed method on different image distributions, we conducted a cross-dataset evaluation experiment on the DIV2K dataset. DIV2K is a widely-used benchmark dataset in image super-resolution tasks, featuring high-resolution images with rich texture details and complex degradation patterns. The details of the experiment retain the same hyperparameters and training settings as described in Section 4.1. The evaluation metrics also follow the same evaluation protocol (PSNR, SSIM, and LPIPS) as CelebA-HQ.

As shown in Figure 10 and Table 7, the proposed model achieved competitive performance on DIV2K compared to other methods, demonstrating its robustness to diverse image characteristics.

Figure 10.

Visual comparison on DIV2K dataset.

Table 7.

Quantitative comparison on DIV2K dataset.

5. Conclusions

This study proposes a novel GAN model incorporating multi-scale attention mechanisms and the Edge-guided Enhancement Block to address the insufficient restoration of high-frequency details and poor training stability in face image super-resolution tasks. Through systematic architectural improvements and a phase-wise training strategy, our model significantly enhances the quality and perceptual appeal of super-resolved images, outperforming other classical SR frameworks in experimental evaluations.

In future work, we will focus on further optimizing the model architecture through the systematic exploration of lightweight designs to reduce computational overhead. Additionally, training of the model with augmented and diverse datasets will be conducted to enhance generalization capabilities and robustness against variations in real-world scenarios.

Author Contributions

Conceptualization, methodology, and writing, Q.L.; validation, L.L.; project administration, L.C.; and funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported in part by the Key Projects of Natural Science Research in Anhui Colleges and Universities under Grant 2023AH051546, in part by the University Natural Science Foundation of Anhui Province under Grant 2022AH010085, in part by the Opening Foundation of State Key Laboratory of Cognitive Intelligence under Grant COGOS-2023HE02, and in part by Program of Anhui Education Department under Grant 2024jsqygz83.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data utilized in this study are sourced from publicly available datasets, accessed at the link https://gitcode.com/Resource-Bundle-Collection/8a929/tree/main (accessed on 14 December 2023).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Deng, X.; Zhang, H.; Li, X. HPG-GAN: High-Quality Prior-Guided Blind Face Restoration Generative Adversarial Network. Electronics 2023, 12, 3418. [Google Scholar] [CrossRef]

- Yekeben, Y.; Cheng, S.; Du, A. CGFTNet: Content-Guided Frequency Domain Transform Network for Face Super-Resolution. Information 2024, 15, 765. [Google Scholar] [CrossRef]

- Lu, X.; Gao, X.; Chen, Y.; Chen, G.; Yang, T.; Chen, Y. PEFormer: A pixel-level enhanced CNN-transformer hybrid network for face image super-resolution. Signal Image Video Process. 2024, 18, 7303–7317. [Google Scholar] [CrossRef]

- Farooq, M.; Dailey, M.N.; Mahmood, A.; Moonrinta, J.; Ekpanyapong, M. Human face super-resolution on poor quality surveillance video footage. Neural Comput. Appl. 2021, 33, 13505–13523. [Google Scholar] [CrossRef]

- Li, Y.; Sixou, B.; Peyrin, F. A review of the deep learning methods for medical images super resolution problems. IRBM 2021, 42, 120–133. [Google Scholar] [CrossRef]

- Wang, X.; Yi, J.; Guo, J.; Song, Y.; Lyu, J.; Xu, J.; Min, H. A review of image super-resolution approaches based on deep learning and applications in remote sensing. Remote Sens. 2022, 14, 5423. [Google Scholar] [CrossRef]

- Khaledyan, D.; Amirany, A.; Jafari, K.; Moaiyeri, M.H.; Khuzani, A.Z.; Mashhadi, N. Low-cost implementation of bilinear and bicubic image interpolation for real-time image super-resolution. In Proceedings of the IEEE Global Humanitarian Technology Conference (GHTC), Seattle, WA, USA, 29 October–1 November 2020; pp. 1–5. [Google Scholar]

- Huang, W.; Xue, Y.; Hu, L.; Liuli, H. S-EEGNet: Electroencephalogram signal classification based on a separable convolution neural network with bilinear interpolation. IEEE Access 2020, 8, 131636–131646. [Google Scholar] [CrossRef]

- Triwijoyo, B.K.; Adil, A. Analysis of medical image resizing using bicubic interpolation algorithm. J. Ilmu Komput. 2021, 14, 20.

- Rhee, S.; Kang, M.G. Discrete cosine transform based regularized high-resolution image reconstruction algorithm. Opt. Eng. 1999, 38, 1348–1356.

- Ye, D.J.; Zhou, B.; Zhong, B.Y.; Wei, W.; Duan, X.M. POCS-based super-resolution image reconstruction using local gradient constraint. In Proceedings of the Third International Symposium on Image Computing and Digital Medicine, New York, NY, USA, 24–26 August 2019; pp. 274–277.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 391–407.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Shi, W. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Park, S.J.; Son, H.; Cho, S.; Hong, K.S.; Lee, S. SRFeat: Single image super-resolution with feature discrimination. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 439–455.

- Zhang, W.; Liu, Y.; Dong, C.; Qiao, Y. RankSRGAN: Generative adversarial networks with ranker for image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3096–3105.

- Shang, T.; Dai, Q.; Zhu, S.; Yang, T.; Guo, Y. Perceptual extreme super-resolution network with receptive field block. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 440–441.

- Ma, C.; Rao, Y.; Cheng, Y.; Chen, C.; Lu, J.; Zhou, J. Structure-Preserving Super Resolution with Gradient Guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Wang, X.; Xie, L.; Dong, C.; Shan, Y. Real-ESRGAN: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1905–1914.

- Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering realistic texture in image super-resolution by deep spatial feature transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 606–615.

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Setiadi, D.R.I.M. PSNR vs SSIM: Imperceptibility quality assessment for image steganography. Multimed. Tools Appl. 2021, 80, 8423–8444.

- Ghildyal, A.; Liu, F. Shift-tolerant perceptual similarity metric. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 91–107.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).