Research on Digital Orthophoto Production Technology for Indoor Murals in the Context of Climate Change and Environmental Protection

Abstract

1. Introduction

2. Experimental Methods and Workflow

3. Overview of the Study Area

4. Experimental Design

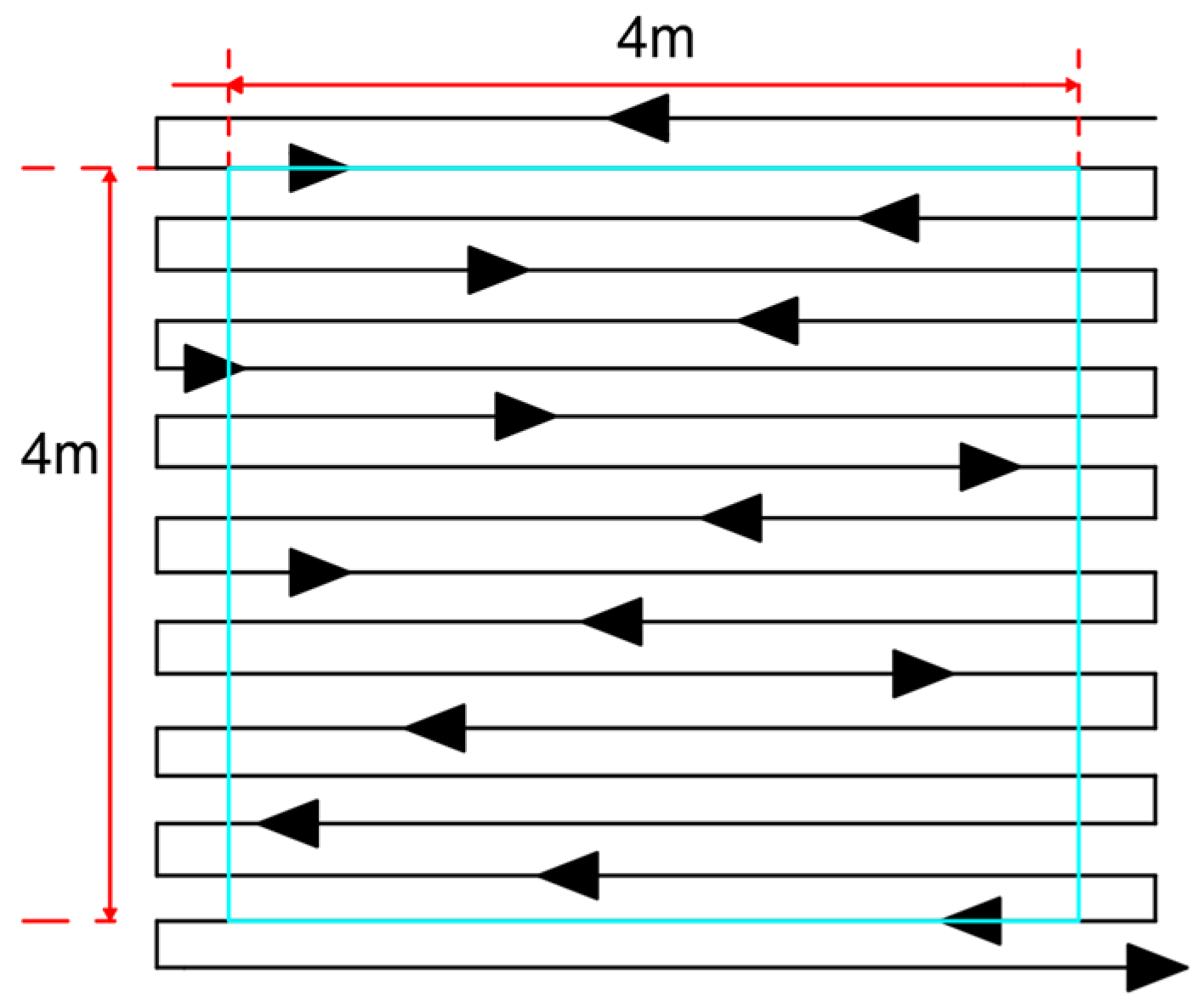

4.1. Image Acquisition Route Planning

4.2. Control Point Distribution

5. Data Acquisition and Processing

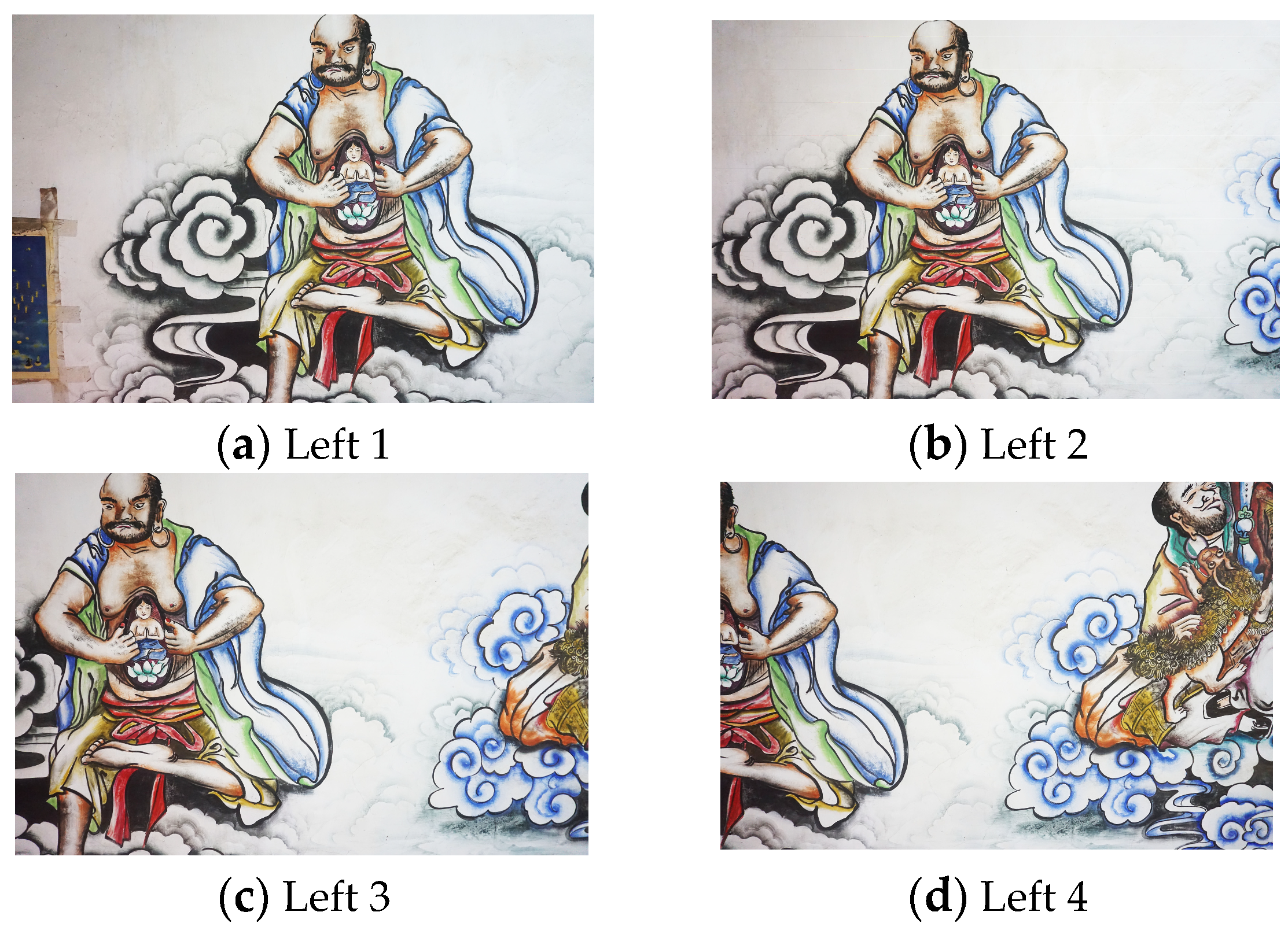

5.1. Digital Image Acquisition

5.2. Control Point Acquisition

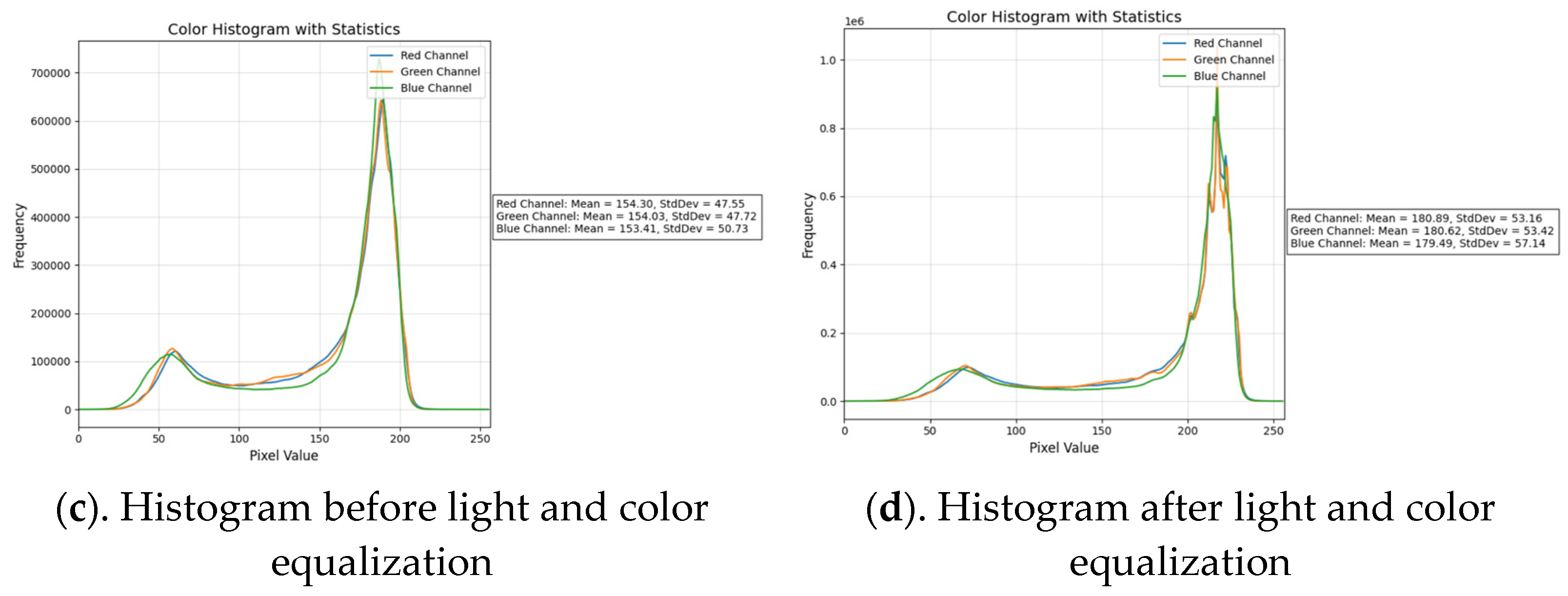

5.3. Image Preprocessing

5.4. Control Point Coordinate Transformation

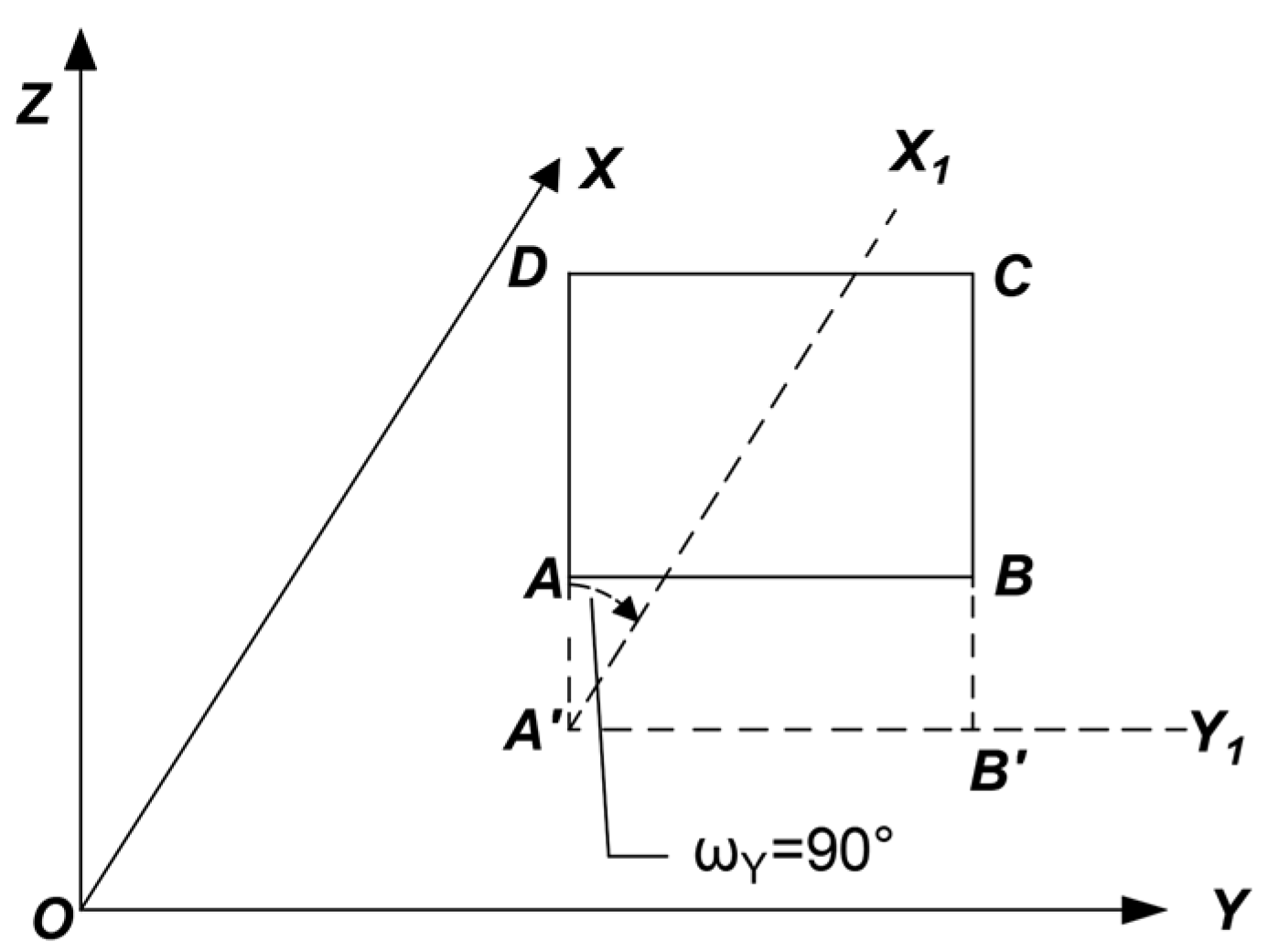

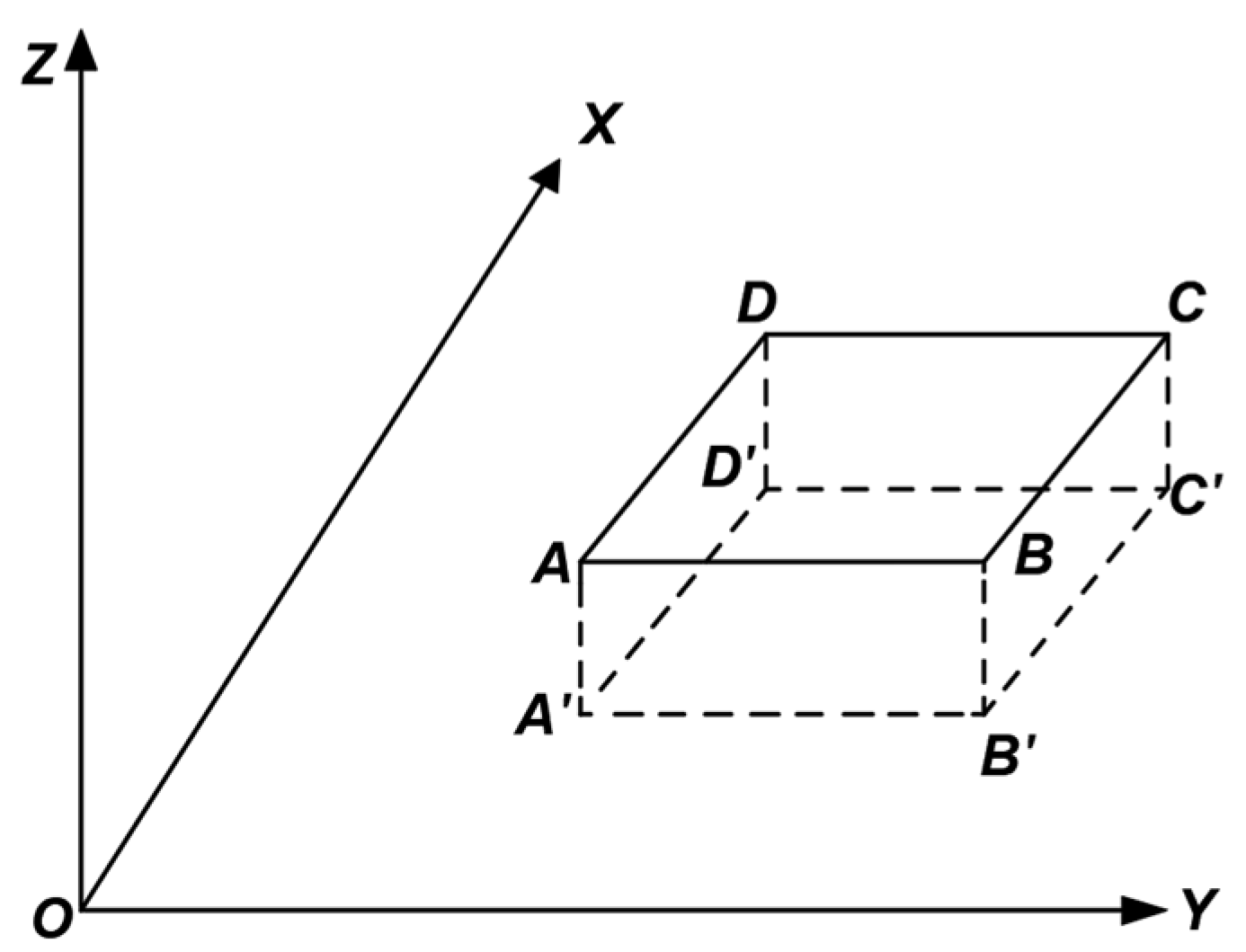

5.4.1. Rotation Around the Y-Axis

5.4.2. Translation Parameters

5.4.3. Coordinate Transformation

5.5. Orthophoto Generation

- (1) Aerial Triangulation Processing

- (2) Control Point Import and Processing

- (3) Point Cloud Densification

- (4) Construction of the 3D Mesh

- (5) Texture Mapping

- (6) Orthophoto Generation

6. Experimental Results and Discussion

6.1. Image Accuracy

6.2. Sustainability Benefits

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Wang, Y.; Wu, X. Current progress on murals: Distribution, conservation and utilization. Herit. Sci. 2023, 11, 61.

2. Learner, T.; Sanchez-Pons, M.; Shank, W. (Eds.) Conservation Issues in Modern and Contemporary Murals; Cambridge Scholars Publishing: Newcastle Upon Tyne, UK, 2015.

3. Shank, W.; Norris, D.H. Giving contemporary murals a longer life: The challenges for muralists and conservators. Stud. Conserv. 2008, 53 (Suppl. S1), 12–16.

4. Smith, J.; Brown, T.; Lee, S.; Wilson, R. Low-carbon solutions for heritage documentation. Sustainability 2023, 15, 112.

5. Laohaviraphap, N.; Waroonkun, T. Integrating Artificial Intelligence and the Internet of Things in Cultural Heritage Preservation: A Systematic Review of Risk Management and Environmental Monitoring Strategies. Buildings 2024, 14, 3979.

6. Colley, S. Ethics and digital heritage. In The Ethics of Cultural Heritage; Springer: New York, NY, USA, 2014; pp. 13–32.

7. Petracek, P.; Kratky, V.; Baca, T.; Petrlik, M.; Saska, M. New era in cultural heritage preservation: Cooperative aerial autonomy for fast digitalization of difficult-to-access interiors of historical monuments. IEEE Robot. Autom. Mag. 2024, 31, 8–25.

8. Gao, Z.; Du, M.; Cao, N.; Hou, M.; Wang, W.; Lyu, S. Application of hyperspectral imaging technology to digitally protect murals in the Qutan temple. Herit. Sci. 2023, 11, 8.

9. Molloy, L. Low-resource/No-resource: Lowering the Barriers to Sustainable Digital Preservation in the Contemporary Art Professions. In Proceedings of the iPRES 2024 Papers—International Conference on Digital Preservation, Ghent, Belgium, 16–20 September 2024.

10. Muthuswamy, V.V.; Esakki, S. Exploring Sustainable Cultural Preservation: Strategies, Challenges, and Community Engagement in Heritage Conservation Efforts. Rita Rev. Indexada Textos Academicos 2024, 21, 129–146. Available online: https://redfundamentos.com/menu-script/index.php/rita/article/view/412 (accessed on 21 March 2025).

11. Hou, M.; Lei, Y.; Lu, X.; Zhang, X.; Han, X. Extraction of Mural Sketch Information Based on Hyperspectral Data. Sci. Surv. Mapp. 2014, 39, 89–92+101.

12. Sun, P.; Hou, M.; Lyu, S.; Wang, W.; Li, S.; Mao, J.; Li, S. Enhancement and restoration of scratched murals based on hyperspectral imaging—A case study of murals in the Baoguang Hall of Qutan Temple, Qinghai, China. Sensors 2022, 22, 9780.

13. Luo, X.; Luo, X.J.; Guo, X.; Zheng, X. Application of Close-Range Photogrammetry in 3D Model Reconstruction of Cultural Relics. Cult. Relics Identif. Apprec. 2022, 17, 136–139.

14. Yang, W.; Tang, X.; Zhang, P.; Hu, B.; Jin, Z. Research on Virtual Restoration Method for Tomb Mural Pigment Colors Based on Fusion Spectrum Analysis. Sci. Conserv. Archaeol. 2023, 35, 11–23.

15. Liu, X.W.; Dong, G.Y.; Mu, X.R. Digital Restoration of Fragmented Cultural Relics Based on Close-Range Photogrammetry. Beijing Surv. Mapp. 2024, 38, 868–873.

16. Wang, L.W. Application of Point Cloud Image Maps in Facade Mapping of Historical Preservation Buildings. Surv. Mapp. Stand. 2024, 40, 134–139.

17. Soto-Martin, O.; Fuentes-Porto, A.; Martin-Gutierrez, J. A digital reconstruction of a historical building and virtual reintegration of mural paintings to create an interactive and immersive experience in virtual reality. Appl. Sci. 2020, 10, 597.

18. Muralidhar, S.; Bhardwaj, A. Preservation and Archiving of Historic Murals Using a Digital Non-Metric Camera. Eng. Proc. 2024, 82, 60.

19. Rizzi, A.; Voltolini, F.; Girardi, S.; Gonzo, L.; Remondino, F. Digital preservation, documentation and analysis of paintings, monuments and large cultural heritage with infrared technology, digital cameras and range sensors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2007, 36, 631–636.

20. Majid, Z.; Ariff, M.F.M.; Idris, K.M.; Yusoff, A.R.; Idris, K.M.; Aspuri, A.; Abbas, M.A.; Zainuddin, K.; Ghani, A.R.A.; Saeman, A.A.B. Three-dimensional mapping of an ancient cave paintings using close-range photogrammetry and terrestrial laser scanning technologies. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 453–457.

21. McClinton, E.K. Applications of Photogrammetric Modeling to Roman Wall Painting: A Case Study in the House of Marcus Lucretius. Arts 2019, 8, 89.

22. Yilmaz, H.; Yakar, M.; Gulec, S.; Dulgerler, O. Importance of digital close-range photogrammetry in documentation of cultural heritage. J. Cult. Herit. 2007, 8, 428–433.

23. Feng, W. Essential Techniques of Close-Range Photogrammetry. Sci. Surv. Mapp. 2000, 25, 26–30+1.

24. Bornstein, D.; Keep, J.T. New Dimensions in Conservation Imaging: Combining Photogrammetry and Photometric Stereo for 3D Documentation of Heritage Artefacts. AICCM Bull. 2023, 44, 148–162.

25. Chen, Z.; Du, W.J.; Ni, J.G. Production Process and Technology of Digital Orthophoto Maps. China Land Sci. 2009, 23, 51–55.

26. Zhu, S.; Qiang, S.; Zou, X.; Xu, X. Study on Aerial Control Layout Schemes for Digital Camera (DMC). Mod. Surv. Mapp. 2008, 31, 9–10.

27. Yang, F.; Li, G.Y.; Wang, L. Research on 3D Coordinate Transformation Methods. Bull. Surv. Mapp. 2010, 6, 5–7+15.

28. Wu, J.Z.; Wang, A.Y. A Unified Model for Spatial Cartesian Coordinate Transformation. J. Geod. Geodyn. 2015, 35, 1046–1048+105.

29. Pan, Y.; Xiao, G.; Wang, X.; Sun, C. A Method for Obtaining Orthophoto Images of Dangerous Rock Faces Based on Coordinate Transformation. Explor. Sci. Technol. 2019, 2, 5–9.

30. Wang, L.; Cao, Z. Solution to Mixed Additive and Multiplicative Error Models for Seven-Parameter Transformation. Geospat. Inf. 2024, 49, 59–63.

31. Zhang, L.; Chen, W.; Gupta, A. GPU-accelerated photogrammetry for sustainable heritage conservation. Sustainability 2022, 14, 5210.

32. Zhang, X.; Zhen, H.; Mike, J.R.; Yang, W.; Zhang, S.; Li, H. Optimization of 3D Reconstruction Parameters for Runoff Plots Based on PhotoScan. Trans. Chin. Soc. Agric. Mach. 2019, 50, 144–150+230.

33. Tang, C.; Yang, X. Application of Agisoft PhotoScan Multi-View 3D Modeling Software in the Protection of Historical and Cultural Villages. Comput. Era 2023, 3, 63–66.

34. Zhao, H.; Tong, G.; Yan, X. Application of Agisoft PhotoScan in 3D Modeling of Stone Tools. Acta Anthropol. Sin. 2021, 40, 40–48.

35. Apple Inc. iPhone 15 Pro Environmental Report; Apple Inc.: Cupertino, CA, USA, 2023.

36. Polycam Inc. Polycam: LiDAR & 3D Scanner (Version 5.2) [Mobile Application Software]. Apple App Store. 2023. Available online: https://apps.apple.com/us/app/polycam-lidar-3d-%E6%89%AB%E6%8F%8F%E4%BB%AA/id1532482376?l=zh (accessed on 21 March 2025).

37. Sony. α6000 Camera Technical Manual; Sony: Tokyo, Japan, 2023.

38. FARO. Focus S Laser Scanner Product Manual; FARO: Lake Mary, FL, USA, 2023.

39. De Santoli, L. Guidelines on energy efficiency of cultural heritage. Energy Build. 2015, 86, 534–540.

40. Ecoinvent Association. Ecoinvent Database v3.8 [Life Cycle Inventory Database]; Ecoinvent: Zurich, Switzerland, 2023. Available online: https://ecoinvent.org/the-ecoinvent-database/ (accessed on 21 March 2025).

41. National Development and Reform Commission of China. Provincial Power Grid Carbon Emission Factors; National Development and Reform Commission of China: Beijing, China, 2023.

42. Phase One. iXM Camera Environmental Report; Phase One: Copenhagen, Denmark, 2023.

43. UNESCO. Digital Heritage and Climate Action; UNESCO: Paris, France, 2021.

| Equipment | Specifications |
|---|---|
| Camera | Sony A6000 |
| Lens | SELP1650 |
| Tape Measure | 10 m |
| Measuring Tape | 10 m |
| Tripod | 1.78 m–6.1 m |

| Imaging Parameters | Specifications |
|---|---|
| Focal Length | 16 mm |
| Sensor Size | 23.5 mm × 15.6 mm |
| Image Resolution | 6000 × 4000 pixels |
| Pixel Size | 0.00392 mm |
| Effective Shooting Distance | ≥25 cm |
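The imaging parameters above are enough to check the listed pixel size and to estimate the object-space footprint of one pixel on the wall. The following is a minimal sketch under a simple pinhole-camera assumption; the function names are hypothetical, and the 500 mm and 1000 mm shooting distances are illustrative values, not distances reported in the table.

```python
def pixel_size_mm(sensor_width_mm: float, image_width_px: int) -> float:
    """Physical width of one sensor pixel, in millimetres."""
    return sensor_width_mm / image_width_px


def ground_sample_distance_mm(pixel_mm: float, focal_mm: float, distance_mm: float) -> float:
    """Footprint of one pixel on a surface at the given distance (pinhole model)."""
    return pixel_mm * distance_mm / focal_mm


# Values taken from the imaging-parameter table.
SENSOR_WIDTH_MM = 23.5
IMAGE_WIDTH_PX = 6000
FOCAL_MM = 16.0

pixel = pixel_size_mm(SENSOR_WIDTH_MM, IMAGE_WIDTH_PX)
print(f"pixel size: {pixel:.5f} mm")

# 250 mm is the table's minimum effective shooting distance;
# the other two distances are assumptions for illustration only.
for distance_mm in (250.0, 500.0, 1000.0):
    gsd = ground_sample_distance_mm(pixel, FOCAL_MM, distance_mm)
    print(f"distance {distance_mm:6.0f} mm -> {gsd:.4f} mm per pixel")
```

Because the footprint grows linearly with distance, halving the shooting distance halves the size of the smallest resolvable detail, at the cost of more images to cover the same wall area.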

| Parameter Name | Parameter Value |
|---|---|
| Number of Channels | 800 |
| Horizontal Static Accuracy | ±(2.5 mm + 0.5 × 10⁻⁶ D) |
| Vertical Static Accuracy | ±(5.0 mm + 0.5 × 10⁻⁶ D) |
| Horizontal RTK Accuracy | ±(5.0 mm + 0.5 × 10⁻⁶ D) |
| Vertical RTK Accuracy | ±(5.0 mm + 0.5 × 10⁻⁶ D) |
| Data Update Rate | 5 Hz |
| Initialization Time | 10 s |
| Supported Coordinate Systems | WGS-84, CGCS2000, etc. |
| Price | ¥15,000 |

| Parameter Name | Parameter Value |
|---|---|
| Minimum Sight Distance | 1.0 m |
| Distance Measurement Accuracy | 2 mm + 2 × 10⁻⁶ D |
| Measuring Range | 1000 m (prismless), 1200 m (reflector sheet), 5000 m (single prism) |
| Angle Measurement Accuracy | 2″ |
| Compensation Accuracy | 1″ |
| Price | ¥30,000 |
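Both instrument tables above state accuracy in the form a + b × 10⁻⁶ D, a constant part plus a part proportional to the measured distance D. The short sketch below evaluates that expression; the function name is hypothetical, and the 1000 m and 10 m distances are illustrative assumptions rather than distances used in the experiment.

```python
def nominal_accuracy_mm(constant_mm: float, ppm: float, distance_m: float) -> float:
    """Evaluate a + b * 1e-6 * D, with D converted from metres to millimetres."""
    distance_mm = distance_m * 1000.0
    return constant_mm + ppm * 1e-6 * distance_mm


# Horizontal static accuracy row (2.5 mm + 0.5 ppm) over an assumed 1000 m baseline.
print(f"{nominal_accuracy_mm(2.5, 0.5, 1000.0):.2f} mm")

# Distance measurement accuracy row (2 mm + 2 ppm) over an assumed 10 m indoor sight line.
print(f"{nominal_accuracy_mm(2.0, 2.0, 10.0):.2f} mm")
```

At indoor ranges of a few metres the distance-dependent term is negligible, so the constant term dominates the nominal error budget.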

| Serial Number | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| PT1 | 2,833,609.1640 | 621,640.9092 | 1990.2913 |
| PT2 | 2,833,609.1650 | 621,640.1129 | 1989.5557 |
| PT3 | 2,833,609.1650 | 621,640.8998 | 1989.4680 |
| ........ | ........ | ........ | ........ |
| PT16 | 2,833,609.1600 | 621,643.9145 | 1988.1582 |
| PT17 | 2,833,609.1590 | 621,643.1151 | 1988.2473 |
| PT18 | 2,833,609.1610 | 621,643.1779 | 1988.7685 |
| ........ | ........ | ........ | ........ |
| PT37 | 2,833,609.1620 | 621,643.6559 | 1990.7750 |
| PT38 | 2,833,609.1670 | 621,639.7280 | 1989.7507 |
| PT39 | 2,833,609.1690 | 621,639.6791 | 1990.1572 |

| Serial Number | X1 (m) | Y1 (m) | Z1 (m) |
|---|---|---|---|
| PT1 | 2,833,607.5849 | 621,643.1158 | 1988.7122 |
| PT2 | 2,833,608.3205 | 621,643.9121 | 1988.7112 |
| PT3 | 2,833,608.4082 | 621,643.1252 | 1988.7112 |
| ........ | ........ | ........ | ........ |
| PT16 | 2,833,609.7180 | 621,640.1105 | 1988.7162 |
| PT17 | 2,833,609.6289 | 621,640.9099 | 1988.7172 |
| PT18 | 2,833,609.1077 | 621,640.8471 | 1988.7152 |
| ........ | ........ | ........ | ........ |
| PT37 | 2,833,607.1012 | 621,640.3691 | 1988.7142 |
| PT38 | 2,833,608.1255 | 621,644.2970 | 1988.7092 |
| PT39 | 2,833,607.7190 | 621,644.3459 | 1988.7072 |
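Sections 5.4.1 to 5.4.3 name a rotation about the Y-axis followed by a translation, and in the transformed table the Z1 values of the rows shown differ by only about a centimetre, which is consistent with the wall plane having been turned into a near-horizontal plane. The sketch below shows the general form of such a transformation. It is not the authors' parameter set: the function names, the reduction to a local origin, the 90° angle, and the zero translation are all assumptions for illustration, so the output is not expected to reproduce the transformed table.

```python
import math

Point = tuple[float, float, float]


def rotate_about_y(p: Point, theta_rad: float) -> Point:
    """Rotate a point about the Y-axis; Y itself is unchanged."""
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    x, y, z = p
    return (c * x + s * z, y, -s * x + c * z)


def transform(p: Point, origin: Point, theta_rad: float, translation: Point) -> Point:
    """Reduce to a local origin, rotate about Y, then translate."""
    # Subtracting the origin first keeps the numbers small, which avoids
    # losing millimetre-level precision on seven-digit coordinates.
    x, y, z = p
    ox, oy, oz = origin
    rx, ry, rz = rotate_about_y((x - ox, y - oy, z - oz), theta_rad)
    tx, ty, tz = translation
    return (rx + tx, ry + ty, rz + tz)


# PT1 and PT2 from the untransformed control-point table.
pt1 = (2833609.1640, 621640.9092, 1990.2913)
pt2 = (2833609.1650, 621640.1129, 1989.5557)

# Assumed parameters: PT1 as local origin, 90 degree rotation, no translation.
local = transform(pt2, origin=pt1, theta_rad=math.pi / 2, translation=(0.0, 0.0, 0.0))
print(tuple(round(v, 4) for v in local))
```

With the true rotation angle and translation parameters substituted, the same two functions would map every control point in one pass.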

| Serial Number | X (m) | Y (m) | X1 (m) | Y1 (m) | ΔX (m) | ΔY (m) |
|---|---|---|---|---|---|---|
| 1 | 2,833,608.4082 | 621,643.1252 | 2,833,608.4035 | 621,643.1236 | 0.0047 | 0.0016 |
| 2 | 2,833,610.4777 | 621,643.0476 | 2,833,610.4843 | 621,643.0528 | −0.0066 | −0.0052 |
| 3 | 2,833,609.8795 | 621,641.8945 | 2,833,609.8792 | 621,641.8935 | 0.0003 | 0.0010 |
| 4 | 2,833,609.1077 | 621,640.8471 | 2,833,609.1059 | 621,640.8489 | 0.0018 | −0.0018 |
| 5 | 2,833,608.2907 | 621,642.1375 | 2,833,608.2926 | 621,642.1344 | −0.0019 | 0.0031 |
| 6 | 2,833,608.7893 | 621,641.5604 | 2,833,608.7905 | 621,641.5620 | −0.0012 | −0.0016 |
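The ΔX and ΔY columns above can be condensed into a root-mean-square error, a common single-number summary of planimetric accuracy. The following is a minimal sketch that uses the six tabulated residuals directly; the helper name `rmse` and the choice to combine the two axes as the square root of the sum of squares are assumptions, and the accuracy statistic actually reported in Section 6.1 may be defined differently.

```python
import math

# (dX, dY) residuals in metres, copied from the check-point table.
residuals = [
    (0.0047, 0.0016),
    (-0.0066, -0.0052),
    (0.0003, 0.0010),
    (0.0018, -0.0018),
    (-0.0019, 0.0031),
    (-0.0012, -0.0016),
]


def rmse(values: list[float]) -> float:
    """Root-mean-square of a list of residuals."""
    return math.sqrt(sum(v * v for v in values) / len(values))


rmse_x = rmse([dx for dx, _ in residuals])
rmse_y = rmse([dy for _, dy in residuals])
rmse_xy = math.hypot(rmse_x, rmse_y)  # combined planimetric error
max_abs = max(max(abs(dx), abs(dy)) for dx, dy in residuals)

print(f"RMSE X  : {rmse_x * 1000:.2f} mm")
print(f"RMSE Y  : {rmse_y * 1000:.2f} mm")
print(f"RMSE XY : {rmse_xy * 1000:.2f} mm")
print(f"max |residual|: {max_abs * 1000:.1f} mm")
```

With only six check points, a single large residual such as point 2 noticeably influences the result, so the maximum absolute residual is printed alongside the RMSE.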

| Indicator | iPhone 15 Pro + Polycam | This Method | Phase One iXM | Faro Focus S | Data Source |
|---|---|---|---|---|---|
| Energy Consumption per Task (Wh) | 16 | 50.55 | 220.0 | 160.0 | [35,36,37,38] |
| Carbon Emissions per Task (kg CO₂) | 0.31 | 0.56 | 2.41 | 8.59 | [39,40,41] |
| Equipment Cost (10,000 CNY) | 1 | 4.5 | 50.0 | 60.0 | Manufacturer’s Official Price |
| Community Accessibility | High | High | Low | Medium | [42] |
| Public Education Potential | Supports Online Sharing | Supports Online Sharing | Limited | Limited | [43] |
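The quantitative rows of the comparison table can be turned into relative savings of the proposed method against the two high-end systems. The sketch below is simple arithmetic on the tabulated values only; the dictionary layout and the `percent_reduction` helper are hypothetical names introduced for illustration.

```python
# Per-task figures copied from the comparison table.
energy_wh = {"This Method": 50.55, "Phase One iXM": 220.0, "Faro Focus S": 160.0}
carbon_kg = {"This Method": 0.56, "Phase One iXM": 2.41, "Faro Focus S": 8.59}


def percent_reduction(baseline: float, value: float) -> float:
    """Relative saving of `value` against `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


for system in ("Phase One iXM", "Faro Focus S"):
    energy_saving = percent_reduction(energy_wh[system], energy_wh["This Method"])
    carbon_saving = percent_reduction(carbon_kg[system], carbon_kg["This Method"])
    print(f"vs {system}: energy -{energy_saving:.1f} %, carbon -{carbon_saving:.1f} %")
```

The same helper gives a negative value when the comparison system consumes less than the proposed method, as is the case for the smartphone workflow in the first column.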

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, X.; Yang, Y.; Yan, D. Research on Digital Orthophoto Production Technology for Indoor Murals in the Context of Climate Change and Environmental Protection. J. Imaging 2025, 11, 140. https://doi.org/10.3390/jimaging11050140
