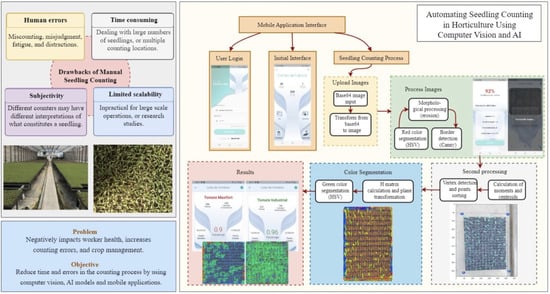

Automating Seedling Counts in Horticulture Using Computer Vision and AI

Abstract

1. Introduction

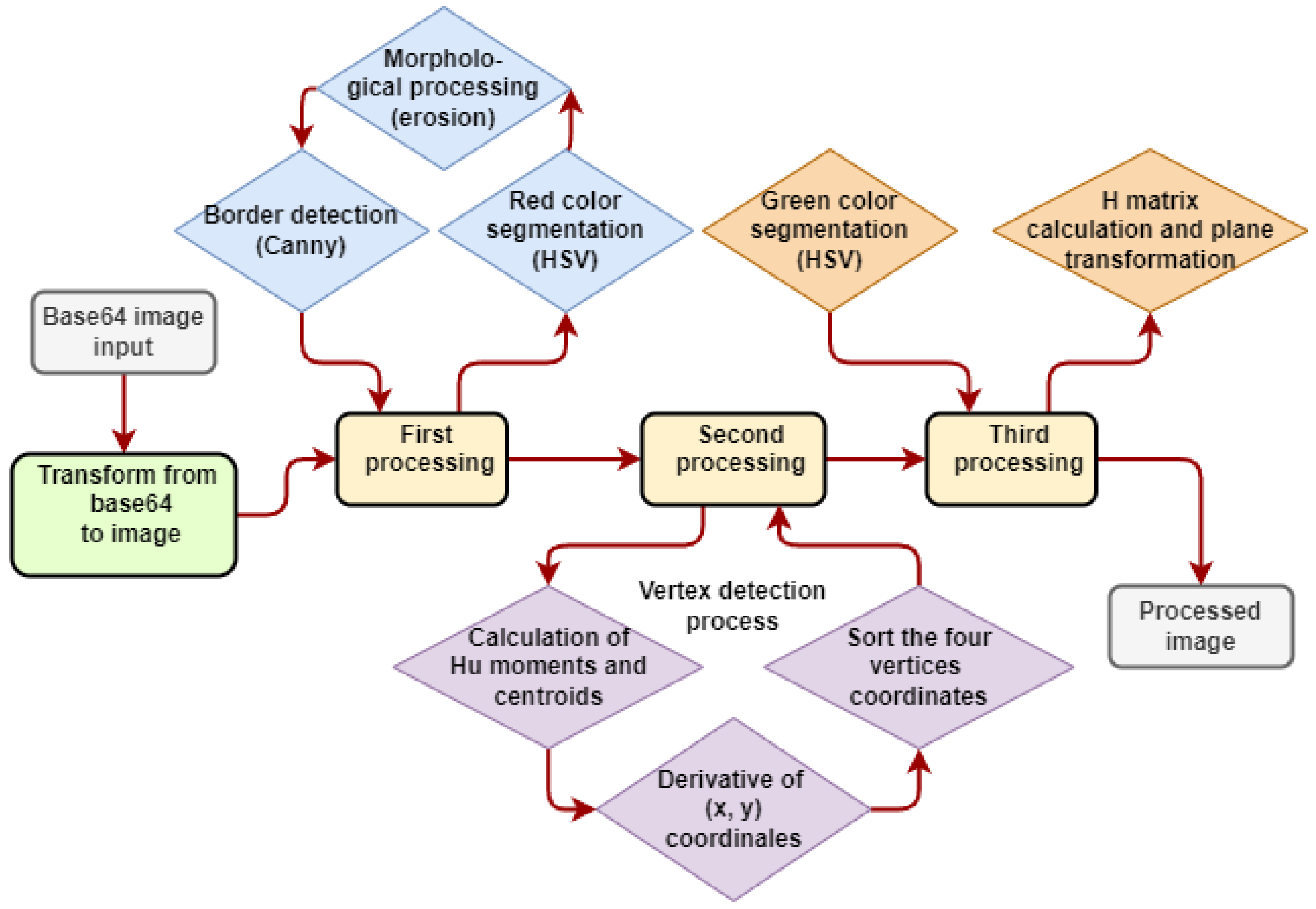

2. Materials and Methods

2.1. Seedling Growing Process and Traditional Seedling Counting Method

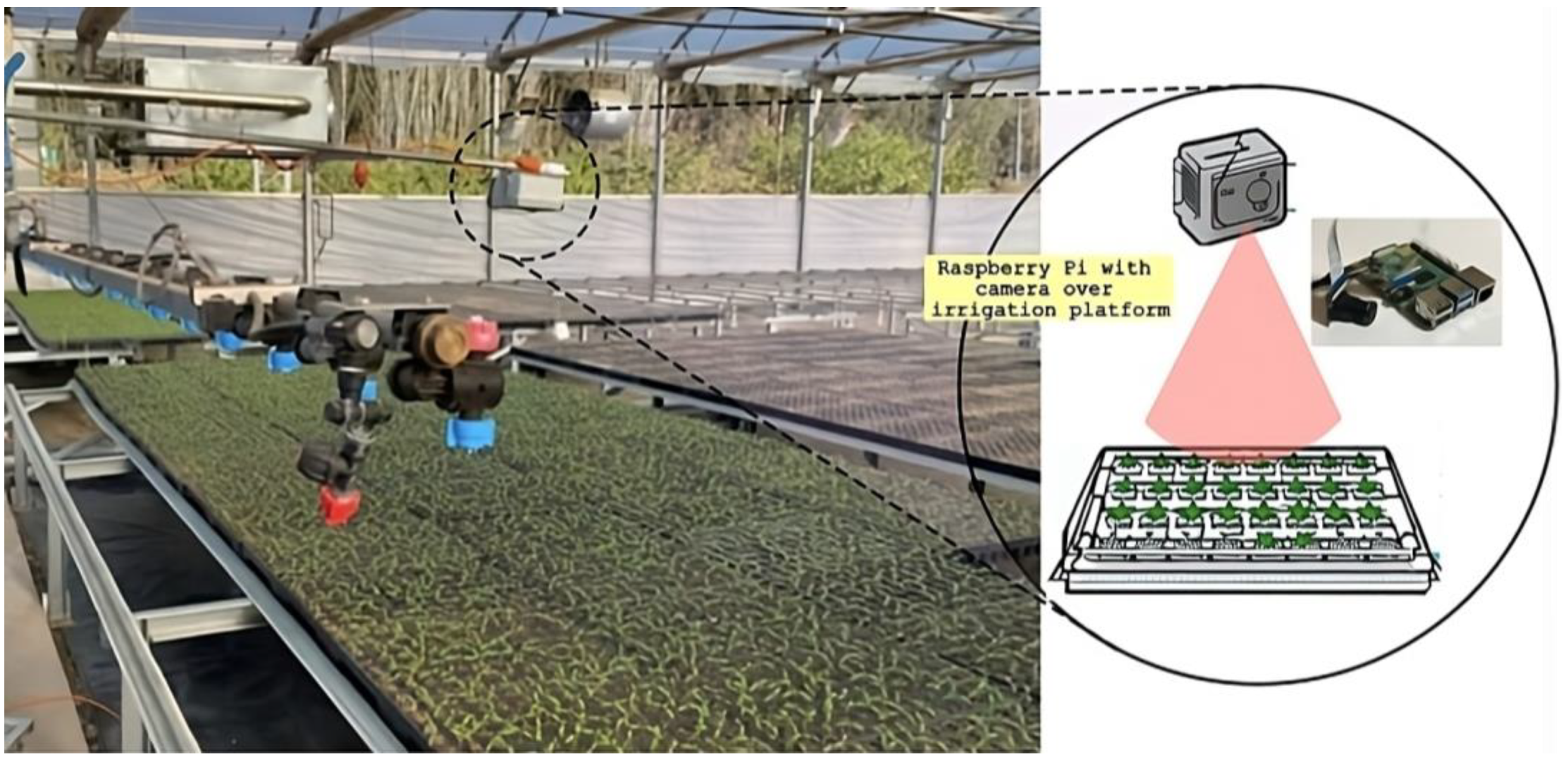

2.2. Hardware Development

    from picamera import PiCamera
    from time import sleep
    import datetime

    # Timestamped file name so that successive captures do not overwrite each other
    now = datetime.datetime.now()
    path = "/home/pi/Desktop/imagenes/" + str(now) + ".jpg"

    camera = PiCamera()
    camera.start_preview()
    sleep(5)  # let the sensor settle before taking the picture
    camera.capture(path)
    camera.stop_preview()
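The capture script above builds its file name from `str(now)`, which yields text such as `2023-05-01 10:30:00.123456`, including a space and colons. A minimal sketch of a variant that avoids those characters, assuming a fixed `strftime` pattern is acceptable; the helper name `build_image_path` and the pattern itself are illustrative choices, not part of the original script:

```python
import datetime
import os

IMAGE_DIR = "/home/pi/Desktop/imagenes/"  # directory used by the capture script


def build_image_path(now, image_dir=IMAGE_DIR):
    """Return a .jpg path whose file name has no spaces or colons (hypothetical helper)."""
    stamp = now.strftime("%Y%m%d_%H%M%S")  # assumed pattern, e.g. 20230501_103000
    return os.path.join(image_dir, stamp + ".jpg")


example = build_image_path(datetime.datetime(2023, 5, 1, 10, 30, 0))
print(example)
```

Names of this form also sort chronologically, which may simplify later batch processing of the images.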

Crontab was used [41] with the following entries:

    */5 * * * * python /home/pi/Desktop/capture_images.py
    * */4 * * * python /home/pi/Desktop/send_images_to_server.py
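To make the timing of the two schedule entries above concrete, the following sketch checks whether a five-field cron expression fires at a given minute. It assumes the entries read `*/5 * * * *` and `* */4 * * *`, and it is a deliberately small matcher written for illustration (supporting only `*`, `*/n`, and a single integer per field), not the logic of cron itself:

```python
import datetime


def field_matches(field, value, start=0):
    """Match one cron field; supports only '*', '*/n' and a plain integer."""
    if field == "*":
        return True
    if field.startswith("*/"):
        # Steps are counted from the start of the field's range
        return (value - start) % int(field[2:]) == 0
    return value == int(field)


def cron_matches(expr, when):
    """Return True if the five-field expression fires at the minute `when`."""
    minute, hour, day, month, weekday = expr.split()
    return (
        field_matches(minute, when.minute)
        and field_matches(hour, when.hour)
        and field_matches(day, when.day, start=1)
        and field_matches(month, when.month, start=1)
        # cron counts Sunday as 0; isoweekday() counts Sunday as 7
        and field_matches(weekday, when.isoweekday() % 7)
    )


CAPTURE = "*/5 * * * *"  # assumed reading of the first entry
UPLOAD = "* */4 * * *"   # assumed reading of the second entry

t = datetime.datetime(2023, 5, 1, 8, 10)
print(cron_matches(CAPTURE, t))  # minute 10 is a multiple of 5
print(cron_matches(UPLOAD, t))   # hour 8 is a multiple of 4
```

Under this reading, the first entry fires every five minutes, while the second fires every minute during hours 0, 4, 8, and so on. A schedule that runs once every four hours would usually fix the minute field, for example `0 */4 * * *`.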

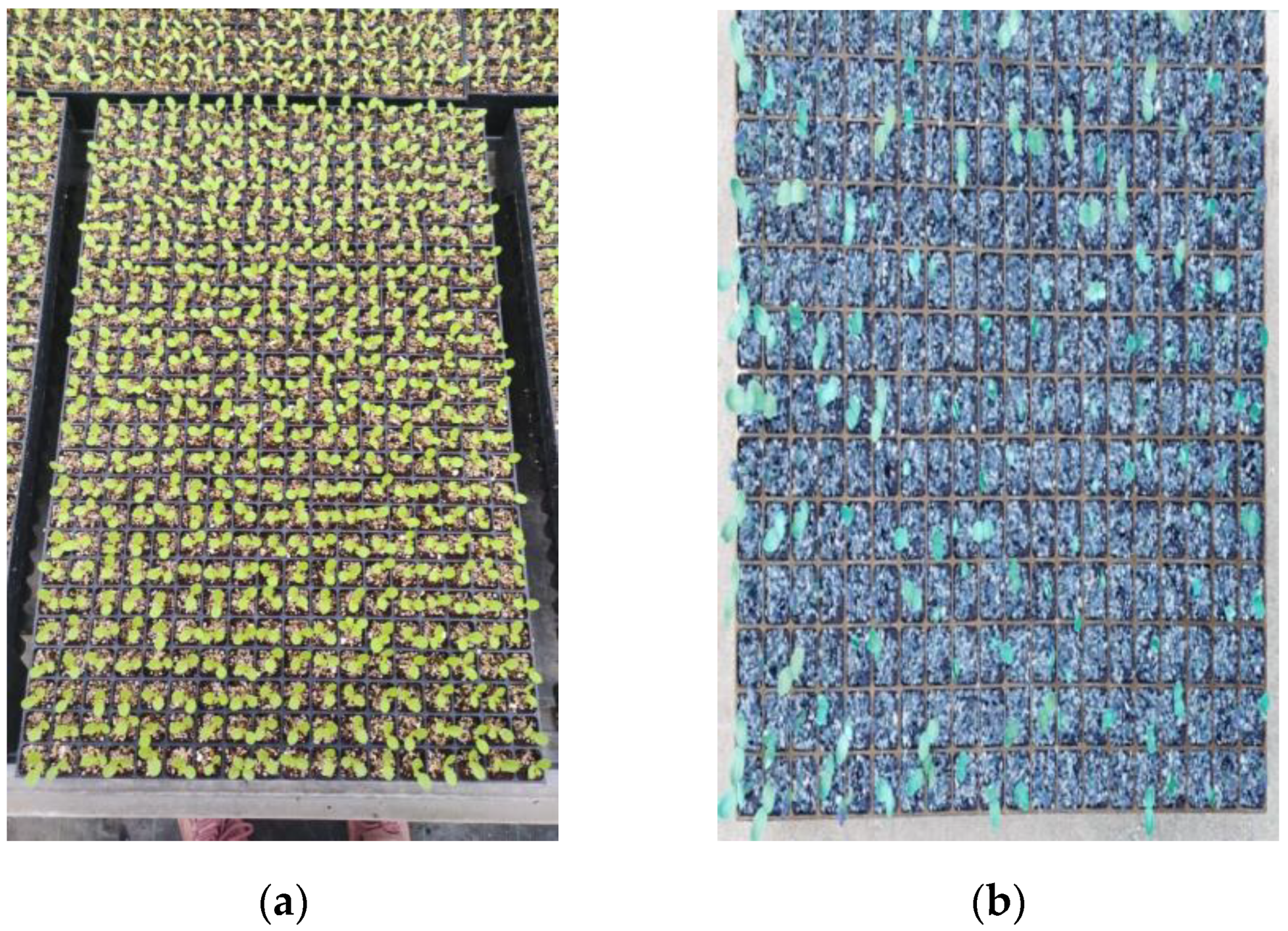

2.3. Description of the Dataset, Initial Filters, and Increasing Dataset Quality

2.4. Color Space

2.5. Morphological Transformation

2.6. Canny Technique to Detect Tray Borders

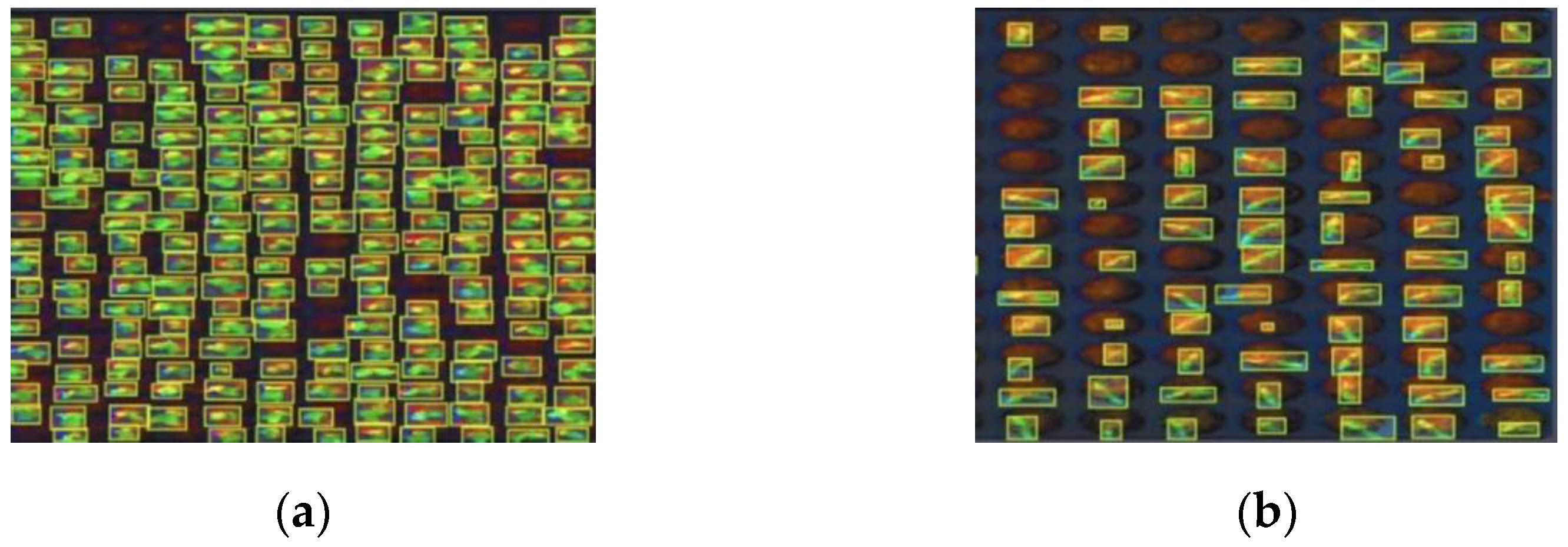

2.7. Local and Global Descriptors

2.8. Perspective Transformation and Homography

2.9. Machine Learning Processing

    item {
      name: 'crop'
      id: 1
    }
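The fragment above resembles a label map in the text format used by the TensorFlow Object Detection API, which ties each numeric class `id` to a `name`. A minimal sketch, assuming that format, of reading such text into a dictionary; `parse_label_map` is a hypothetical helper based on regular expressions and is not the API's own loader:

```python
import re

# Assumed label-map text with a single class, as in the fragment above
LABEL_MAP_TEXT = """
item {
  name: 'crop'
  id: 1
}
"""

ITEM_RE = re.compile(r"item\s*\{(.*?)\}", re.DOTALL)


def parse_label_map(text):
    """Return {id: name} for every item block found in `text`."""
    labels = {}
    for body in ITEM_RE.findall(text):
        name = re.search(r"\bname\s*:\s*['\"]([^'\"]+)['\"]", body)
        class_id = re.search(r"\bid\s*:\s*(\d+)", body)
        if name and class_id:
            labels[int(class_id.group(1))] = name.group(1)
    return labels


print(parse_label_map(LABEL_MAP_TEXT))  # {1: 'crop'}
```

With a single class, every detection returned by the model maps to `crop`, so counting seedlings reduces to counting detections above a chosen confidence threshold.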

2.10. Data Science Methodology Applied

2.11. Model Evaluation

2.12. Tools and Frameworks

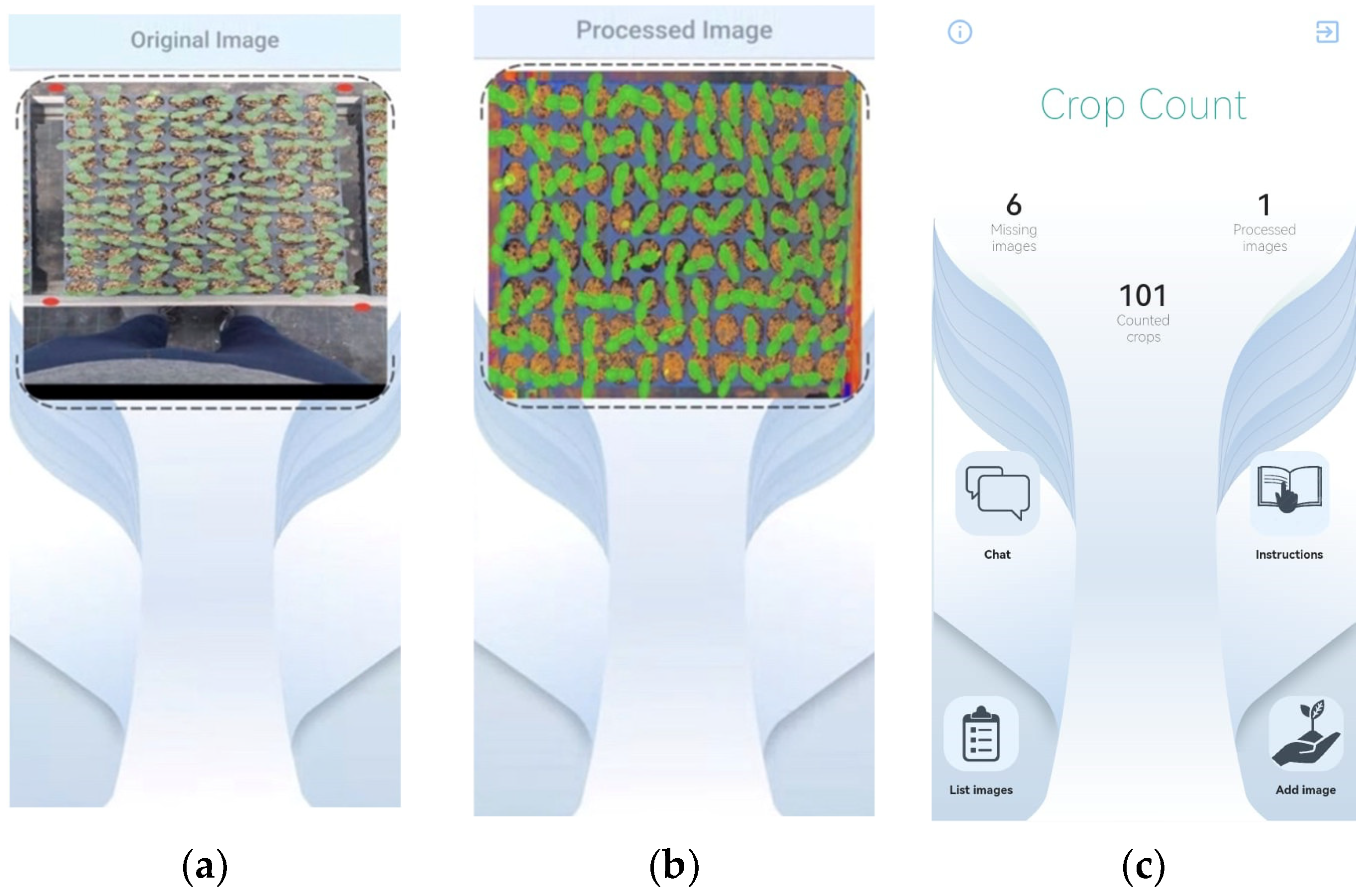

3. Results

3.1. Hardware Mounting and Field Deployment

3.2. Initial Dataset

3.3. Data Pre-Processing

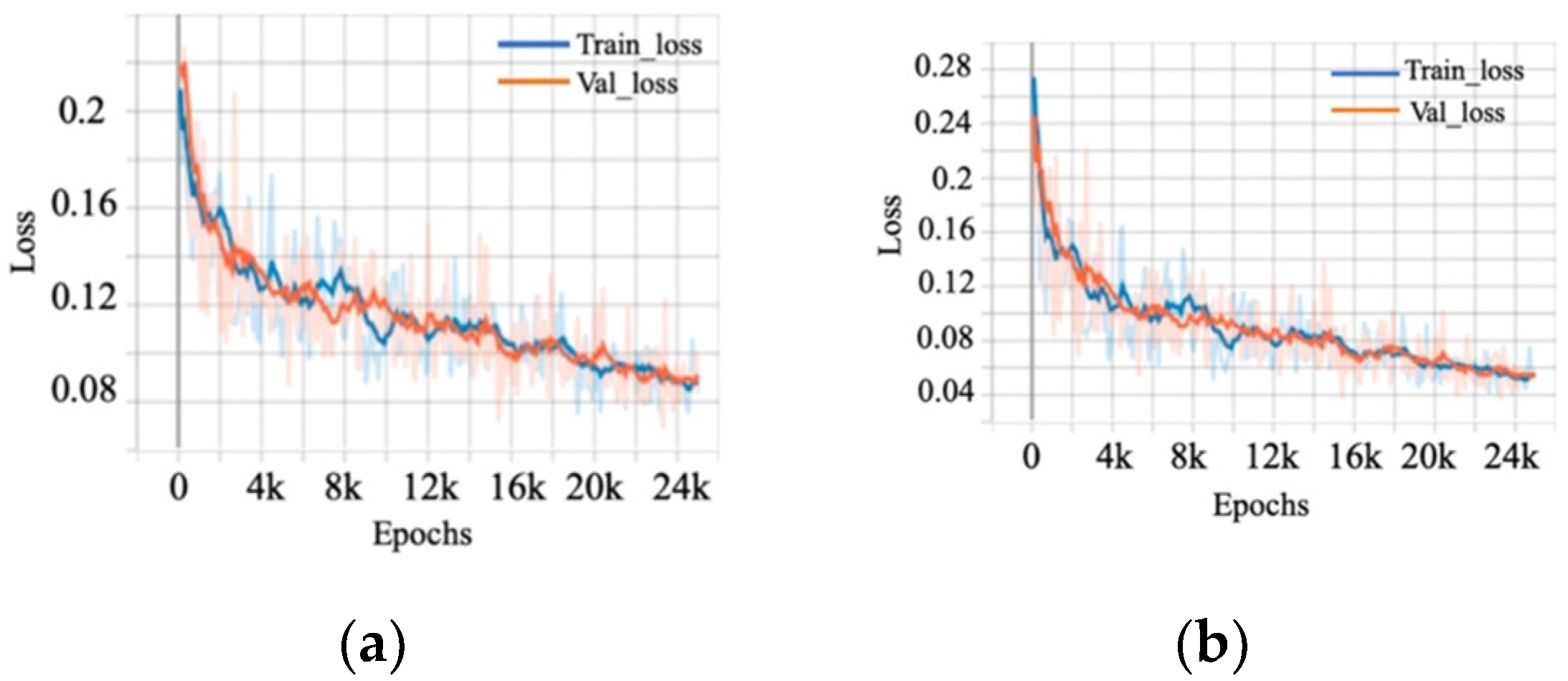

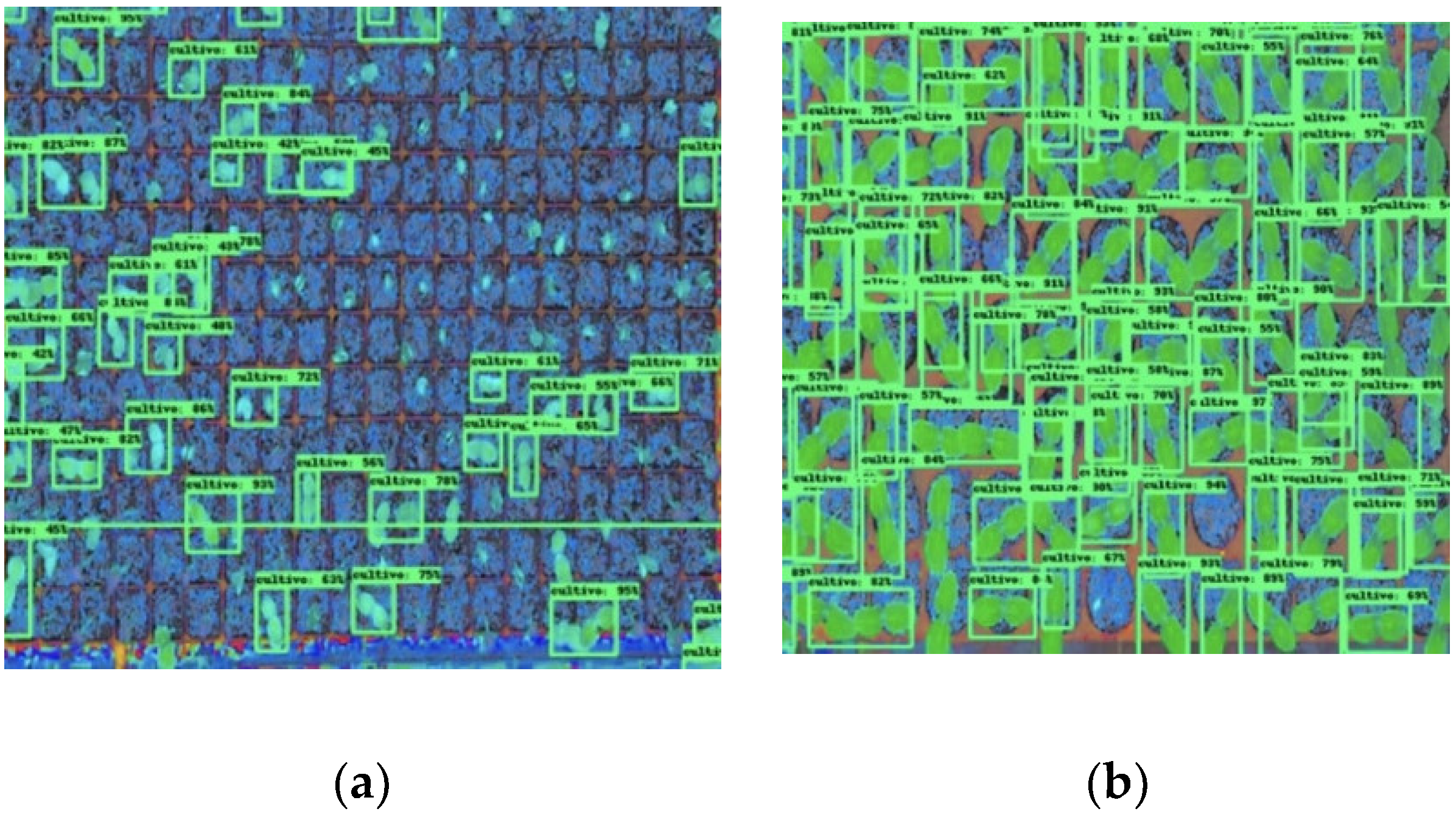

3.4. Detection Model Evaluation

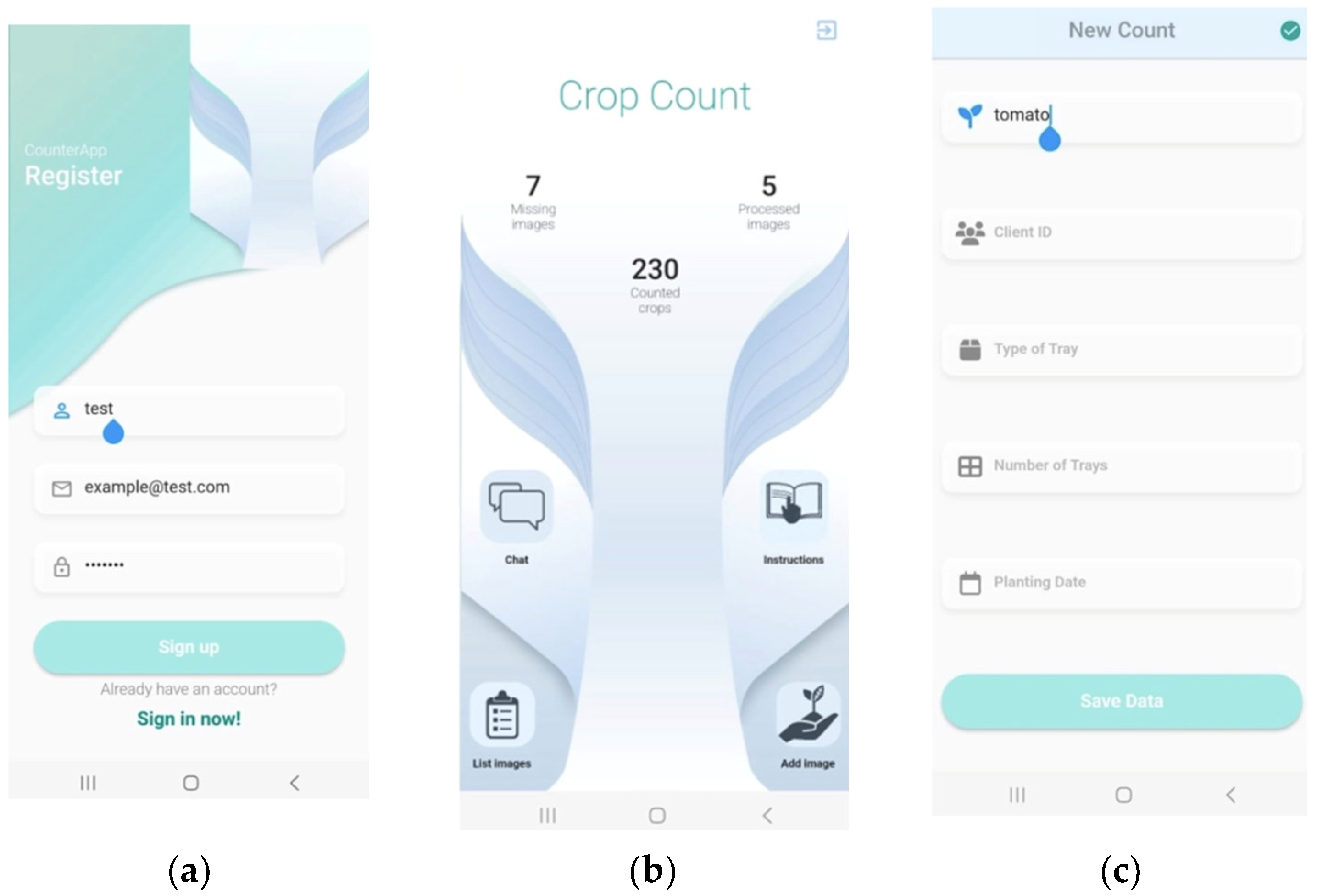

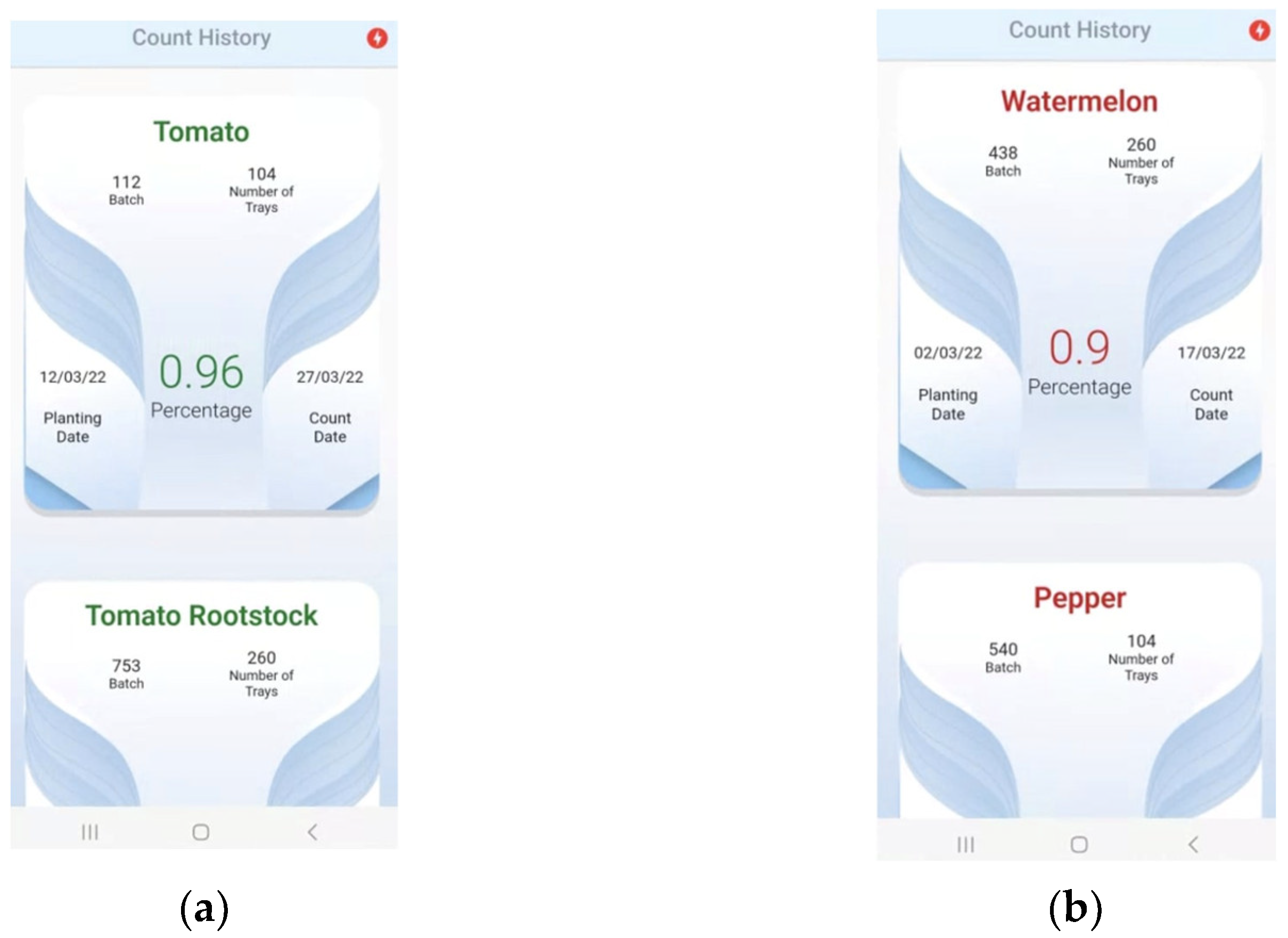

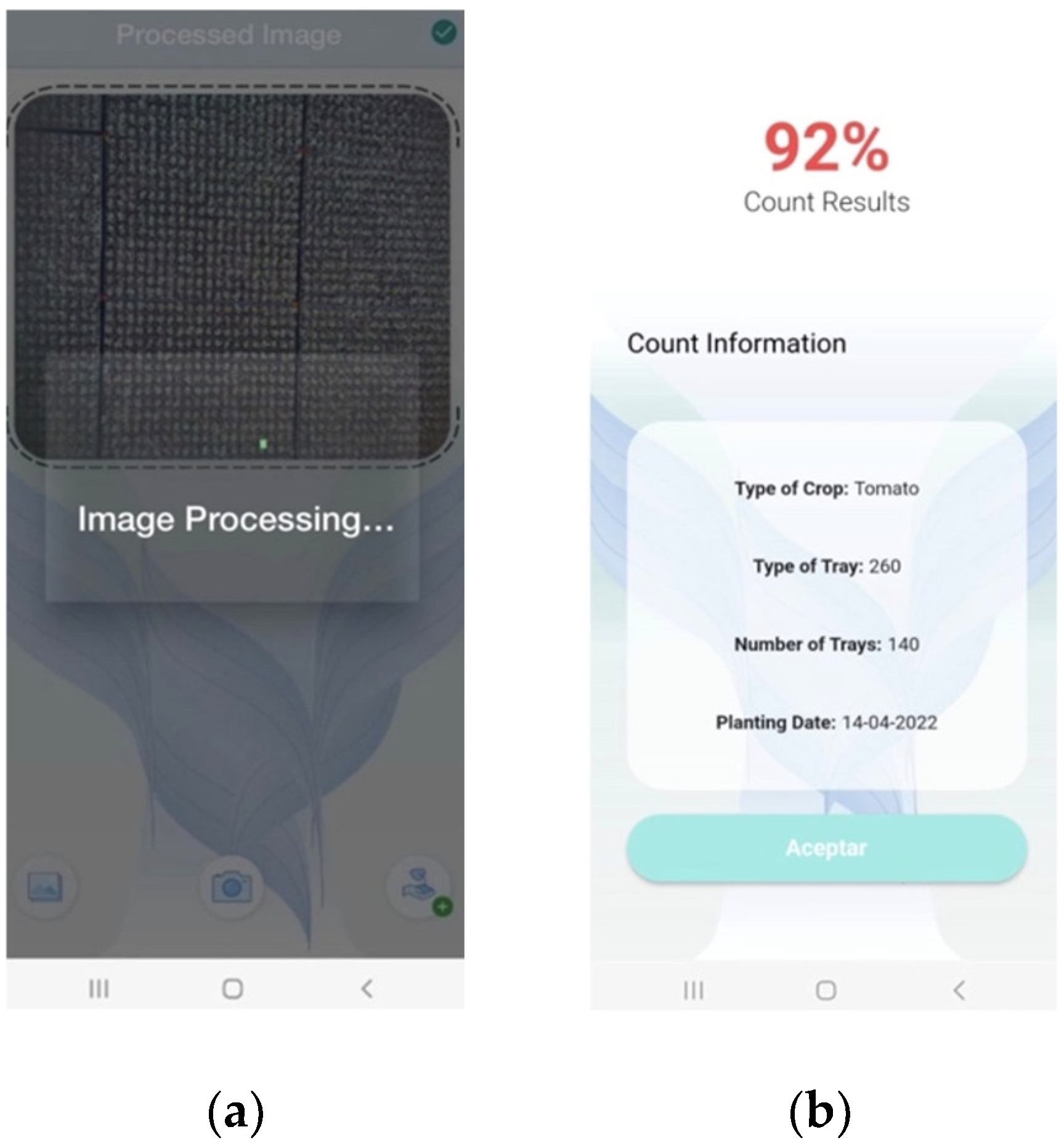

3.5. Development of a Mobile Application

3.6. Deployment and Final Field Testing

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Khan, M.M.; Akram, M.T.; Janke, R.; Qadri, R.W.K.; Al-Sadi, A.M.; Farooque, A.A. Urban Horticulture for Food Secure Cities through and beyond COVID-19. Sustainability 2020, 12, 9592.

2. Boretti, A.; Rosa, L. Reassessing the Projections of the World Water Development Report. NPJ Clean Water 2019, 2, 15.

3. Woolston, C. Healthy People, Healthy Planet: The Search for a Sustainable Global Diet. Nature 2020, 588, S54.

4. Huang, K.; Li, X.; Liu, X.; Seto, K.C. Projecting Global Urban Land Expansion and Heat Island Intensification through 2050. Environ. Res. Lett. 2019, 14, 114037.

5. Dixon, T.J.; Tewdwr-Jones, M. Urban Futures: Planning for City Foresight and City Visions. In Urban Futures; Policy Press: Bristol, UK, 2021; pp. 1–16.

6. Perkins-Kirkpatrick, S.E.; Stone, D.A.; Mitchell, D.M.; Rosier, S.; King, A.D.; Lo, Y.T.E.; Pastor-Paz, J.; Frame, D.; Wehner, M. On the Attribution of the Impacts of Extreme Weather Events to Anthropogenic Climate Change. Environ. Res. Lett. 2022, 17, 024009.

7. Beacham, A.M.; Vickers, L.H.; Monaghan, J.M. Vertical Farming: A Summary of Approaches to Growing Skywards. J. Hortic. Sci. Biotechnol. 2019, 94, 277–283.

8. Gómez, C.; Currey, C.J.; Dickson, R.W.; Kim, H.-J.; Hernández, R.; Sabeh, N.C.; Raudales, R.E.; Brumfield, R.G.; Laury-Shaw, A.; Wilke, A.K.; et al. Controlled Environment Food Production for Urban Agriculture. HortScience 2019, 54, 1448–1458.

9. O’Sullivan, C.A.; Bonnett, G.D.; McIntyre, C.L.; Hochman, Z.; Wasson, A.P. Strategies to Improve the Productivity, Product Diversity and Profitability of Urban Agriculture. Agric. Syst. 2019, 174, 133–144.

10. Durmus, D. Real-Time Sensing and Control of Integrative Horticultural Lighting Systems. J. Multidiscip. Sci. J. 2020, 3, 266–274.

11. Halgamuge, M.N.; Bojovschi, A.; Fisher, P.M.J.; Le, T.C.; Adeloju, S.; Murphy, S. Internet of Things and Autonomous Control for Vertical Cultivation Walls towards Smart Food Growing: A Review. Urban For. Urban Green. 2021, 61, 127094.

12. Cusworth, S.J.; Davies, W.J.; McAinsh, M.R.; Stevens, C.J. Sustainable Production of Healthy, Affordable Food in the UK: The Pros and Cons of Plasticulture. Food Energy Secur. 2022, 11, e404.

13. Wunderlich, S.M.; Feldman, C.; Kane, S.; Hazhin, T. Nutritional Quality of Organic, Conventional, and Seasonally Grown Broccoli Using Vitamin C as a Marker. Int. J. Food Sci. Nutr. 2008, 59, 34–45.

14. Carrasco, G.; Fuentes-Penailillo, F.; Perez, R.; Rebolledo, P.; Manriquez, P. An Approach to a Vertical Farming Low-Cost to Reach Sustainable Vegetable Crops. In Proceedings of the 2022 IEEE International Conference on Automation/XXV Congress of the Chilean Association of Automatic Control (ICA-ACCA), Curico, Chile, 24–28 October 2022; IEEE: Piscataway, NJ, USA; pp. 1–6.

15. Haase, D.L.; Bouzza, K.; Emerton, L.; Friday, J.B.; Lieberg, B.; Aldrete, A.; Davis, A.S. The High Cost of the Low-Cost Polybag System: A Review of Nursery Seedling Production Systems. Land 2021, 10, 826.

16. Saleem, M.H.; Potgieter, J.; Arif, K.M. Automation in Agriculture by Machine and Deep Learning Techniques: A Review of Recent Developments. Precis. Agric. 2021, 22, 2053–2091.

17. Zhou, C.; Ye, H.; Hu, J.; Shi, X.; Hua, S.; Yue, J.; Xu, Z.; Yang, G. Automated Counting of Rice Panicle by Applying Deep Learning Model to Images from Unmanned Aerial Vehicle Platform. Sensors 2019, 19, 3106.

18. Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep Learning Based Oil Palm Tree Detection and Counting for High-Resolution Remote Sensing Images. Remote Sens. 2017, 9, 22.

19. Mekhalfi, M.L.; Nicolò, C.; Bazi, Y.; Al Rahhal, M.M.; Alsharif, N.A.; Al Maghayreh, E. Contrasting YOLOv5, Transformer, and EfficientDet Detectors for Crop Circle Detection in Desert. IEEE Geosci. Remote Sens. Lett. 2022, 19, 3003205.

20. Loukatos, D.; Kondoyanni, M.; Kyrtopoulos, I.-V.; Arvanitis, K.G. Enhanced Robots as Tools for Assisting Agricultural Engineering Students’ Development. Electronics 2022, 11, 755.

21. Loukatos, D.; Templalexis, C.; Lentzou, D.; Xanthopoulos, G.; Arvanitis, K.G. Enhancing a Flexible Robotic Spraying Platform for Distant Plant Inspection via High-Quality Thermal Imagery Data. Comput. Electron. Agric. 2021, 190, 106462.

22. Moraitis, M.; Vaiopoulos, K.; Balafoutis, A.T. Design and Implementation of an Urban Farming Robot. Micromachines 2022, 13, 250.

23. Psiroukis, V.; Espejo-Garcia, B.; Chitos, A.; Dedousis, A.; Karantzalos, K.; Fountas, S. Assessment of Different Object Detectors for the Maturity Level Classification of Broccoli Crops Using UAV Imagery. Remote Sens. 2022, 14, 731.

24. Kasimati, A.; Espejo-García, B.; Darra, N.; Fountas, S. Predicting Grape Sugar Content under Quality Attributes Using Normalized Difference Vegetation Index Data and Automated Machine Learning. Sensors 2022, 22, 3249.

25. Singh, A.; Arora, M. CNN Based Detection of Healthy and Unhealthy Wheat Crop. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; pp. 121–125.

26. Fei, S.; Hassan, M.A.; Xiao, Y.; Su, X.; Chen, Z.; Cheng, Q.; Duan, F.; Chen, R.; Ma, Y. UAV-Based Multi-Sensor Data Fusion and Machine Learning Algorithm for Yield Prediction in Wheat. Precis. Agric. 2023, 24, 187–212.

27. Darwin, B.; Dharmaraj, P.; Prince, S.; Popescu, D.E.; Hemanth, D.J. Recognition of Bloom/Yield in Crop Images Using Deep Learning Models for Smart Agriculture: A Review. Agronomy 2021, 11, 646.

28. Wiley, V.; Lucas, T. Computer Vision and Image Processing: A Paper Review. Int. J. Artif. Intell. Res. 2018, 2, 22.

29. Bhargava, A.; Bansal, A. Fruits and Vegetables Quality Evaluation Using Computer Vision: A Review. J. King Saud Univ. Comput. Inf. Sci. 2021, 33, 243–257.

30. Dubrofsky, E. Homography Estimation. Master’s Thesis, The University of British Columbia, Vancouver, BC, Canada, 2009.

31. Finlayson, G.; Gong, H.; Fisher, R.B. Color Homography: Theory and Applications. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 20–33.

32. Li, J.; Allinson, N.M. A Comprehensive Review of Current Local Features for Computer Vision. Neurocomputing 2008, 71, 1771–1787.

33. Khan, A.; Laghari, A.; Awan, S. Machine Learning in Computer Vision: A Review. ICST Trans. Scalable Inf. Syst. 2018, 8, 169418.

34. Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors 2017, 17, 905.

35. Mu, Y.; Chen, T.-S.; Ninomiya, S.; Guo, W. Intact Detection of Highly Occluded Immature Tomatoes on Plants Using Deep Learning Techniques. Sensors 2020, 20, 2984.

36. Praveen Kumar, J.; Domnic, S. Image Based Leaf Segmentation and Counting in Rosette Plants. Inf. Process. Agric. 2019, 6, 233–246.

37. Wu, J.; Yang, G.; Yang, X.; Xu, B.; Han, L.; Zhu, Y. Automatic Counting of in Situ Rice Seedlings from UAV Images Based on a Deep Fully Convolutional Neural Network. Remote Sens. 2019, 11, 691.

38. Tseng, H.-H.; Yang, M.-D.; Saminathan, R.; Hsu, Y.-C.; Yang, C.-Y.; Wu, D.-H. Rice Seedling Detection in UAV Images Using Transfer Learning and Machine Learning. Remote Sens. 2022, 14, 2837.

39. Bai, Y.; Nie, C.; Wang, H.; Cheng, M.; Liu, S.; Yu, X.; Shao, M.; Wang, Z.; Wang, S.; Tuohuti, N.; et al. A Fast and Robust Method for Plant Count in Sunflower and Maize at Different Seedling Stages Using High-Resolution UAV RGB Imagery. Precis. Agric. 2022, 23, 1720–1742.

40. Moharram, D.; Yuan, X.; Li, D. Tree Seedlings Detection and Counting Using a Deep Learning Algorithm. Appl. Sci. 2023, 13, 895.

41. Cron. Expert Shell Scripting; Apress: Berkeley, CA, USA, 2009; pp. 81–85.

42. Auliasari, R.N.; Novamizanti, L.; Ibrahim, N. Identifikasi Kematangan Daun Teh Berbasis Fitur Warna Hue Saturation Intensity (HSI) Dan Hue Saturation Value (HSV). JUITA J. Inform. 2020, 8, 217.

43. Lesiangi, F.S.; Mauko, A.Y.; Djahi, B.S. Feature Extraction Hue, Saturation, Value (HSV) and Gray Level Cooccurrence Matrix (GLCM) for Identification of Woven Fabric Motifs in South Central Timor Regency. J. Phys. Conf. Ser. 2021, 2017, 012010.

44. Wu, Y.; Wang, J.; Wang, Y.; Zhao, Y.; Zhang, S. Field Crop Extraction Based on Machine Vision. In Proceedings of the 2021 IEEE International Conference on Mechatronics and Automation (ICMA), Takamatsu, Japan, 8–11 August 2021; IEEE: Piscataway, NJ, USA; pp. 1–5.

45. Wilson, J.N.; Ritter, G.X. Handbook of Computer Vision Algorithms in Image Algebra; CRC Press: Boca Raton, FL, USA, 2000; ISBN 9780429115059.

46. Vizilter, Y.V.; Pyt’ev, Y.P.; Chulichkov, A.I.; Mestetskiy, L.M. Morphological Image Analysis for Computer Vision Applications. Intell. Syst. Ref. Libr. 2015, 73, 9–58.

47. Soille, P. Erosion and Dilation. In Morphological Image Analysis; Springer: Berlin/Heidelberg, Germany, 2004; pp. 63–103.

48. Chen, S.; Haralick, R.M. Recursive Erosion, Dilation, Opening, and Closing Transforms. IEEE Trans. Image Process. 1995, 4, 335–345.

49. Mokrzycki, W.; Samko, M. Canny Edge Detection Algorithm Modification. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). Int. Conf. Comput. Vis. Graph. 2012, 7594, 533–540.

50. Patrício, D.I.; Rieder, R. Computer Vision and Artificial Intelligence in Precision Agriculture for Grain Crops: A Systematic Review. Comput. Electron. Agric. 2018, 153, 69–81.

51. Tripathi, M.K.; Maktedar, D.D. A Role of Computer Vision in Fruits and Vegetables among Various Horticulture Products of Agriculture Fields: A Survey. Inf. Process. Agric. 2020, 7, 183–203.

52. Zhang, W.; Li, X.; Yu, J.; Kumar, M.; Mao, Y. Remote Sensing Image Mosaic Technology Based on SURF Algorithm in Agriculture. EURASIP J. Image Video Process. 2018, 2018, 85.

53. Stanhope, T.P.; Adamchuk, V.I. Feature-Based Visual Tracking for Agricultural Implements. IFAC-PapersOnLine 2016, 49, 359–364.

54. Nagar, H.; Sharma, R.S. Pest Detection on Leaf Using Image Processing. In Proceedings of the 2021 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 27–29 January 2021; IEEE: Piscataway, NJ, USA; pp. 1–5.

55. Hu, M.K. Visual Pattern Recognition by Moment Invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.

56. Alam, M.; Alam, M.S.; Roman, M.; Tufail, M.; Khan, M.U.; Khan, M.T. Real-Time Machine-Learning Based Crop/Weed Detection and Classification for Variable-Rate Spraying in Precision Agriculture. In Proceedings of the 2020 7th International Conference on Electrical and Electronics Engineering (ICEEE), Antalya, Turkey, 14–16 April 2020; IEEE: Piscataway, NJ, USA; pp. 273–280.

57. Ramirez-Paredes, J.-P.; Hernandez-Belmonte, U.-H. Visual Quality Assessment of Malting Barley Using Color, Shape and Texture Descriptors. Comput. Electron. Agric. 2020, 168, 105110.

58. Gómez-Reyes, J.K.; Benítez-Rangel, J.P.; Morales-Hernández, L.A.; Resendiz-Ochoa, E.; Camarillo-Gomez, K.A. Image Mosaicing Applied on UAVs Survey. Appl. Sci. 2022, 12, 2729.

59. Kharismawati, D.E.; Akbarpour, H.A.; Aktar, R.; Bunyak, F.; Palaniappan, K.; Kazic, T. CorNet: Unsupervised Deep Homography Estimation for Agricultural Aerial Imagery. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2020, 12540, 400–417.

60. Janiesch, C.; Zschech, P.; Heinrich, K. Machine Learning and Deep Learning. Electron. Mark. 2021, 31, 685–695.

61. Greener, J.G.; Kandathil, S.M.; Moffat, L.; Jones, D.T. A Guide to Machine Learning for Biologists. Nat. Rev. Mol. Cell Biol. 2021, 23, 40–55.

62. Joseph, F.J.J.; Nonsiri, S.; Monsakul, A. Keras and TensorFlow: A Hands-On Experience. EAI/Springer Innov. Commun. Comput. 2021, 85–111.

63. Ajit, A.; Acharya, K.; Samanta, A. A Review of Convolutional Neural Networks. In Proceedings of the International Conference on Emerging Trends in Information Technology and Engineering, ic-ETITE, Vellore, India, 24–25 February 2020.

64. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

65. Schröer, C.; Kruse, F.; Gómez, J.M. A Systematic Literature Review on Applying CRISP-DM Process Model. Procedia Comput. Sci. 2021, 181, 526–534.

66. Fuentes-Penailillo, F.; Ortega-Farias, S.; de la Fuente-Saiz, D.; Rivera, M. Digital Count of Sunflower Plants at Emergence from Very Low Altitude Using UAV Images. In Proceedings of the 2019 IEEE CHILEAN Conference on Electrical, Electronics Engineering, Information and Communication Technologies (CHILECON), Valparaíso, Chile, 13–27 November 2019; IEEE: Piscataway, NJ, USA; pp. 1–5.

67. Yang, B.; Xu, Y. Applications of Deep-Learning Approaches in Horticultural Research: A Review. Hortic. Res. 2021, 8, 123.

68. Fukuda, M.; Okuno, T.; Yuki, S. Central Object Segmentation by Deep Learning to Continuously Monitor Fruit Growth through RGB Images. Sensors 2021, 21, 6999.

69. Saedi, S.I.; Khosravi, H. A Deep Neural Network Approach towards Real-Time on-Branch Fruit Recognition for Precision Horticulture. Expert Syst. Appl. 2020, 159, 113594.

70. Behera, S.K.; Jena, J.J.; Rath, A.K.; Sethy, P.K. Horticultural Approach for Detection, Categorization and Enumeration of on Plant Oval Shaped Fruits. Adv. Intell. Syst. Comput. 2019, 813, 71–84.

71. Yin, H.; Yang, C.; Lu, J. Research on Remote Sensing Image Classification Algorithm Based on EfficientNet. In Proceedings of the 2022 7th International Conference on Intelligent Computing and Signal Processing, ICSP, Virtual, 15–17 April 2022; pp. 1757–1761.

72. Koonce, B. EfficientNet. In Convolutional Neural Networks with Swift for Tensorflow; Apress: Berkeley, CA, USA, 2021; pp. 109–123.

73. Abedi, A.; Khan, S.S. Improving State-of-the-Art in Detecting Student Engagement with Resnet and TCN Hybrid Network. In Proceedings of the 2021 18th Conference on Robots and Vision, CRV, Burnaby, BC, Canada, 26–28 May 2021; pp. 151–157.

74. Dzhurov, Y.; Krasteva, I.; Ilieva, S. Personal Extreme Programming–An Agile Process for Autonomous Developers. In Proceedings of the International Conference on Software, Services & Semantic Technologies, Sofia, Bulgaria, 28–29 October 2009; pp. 252–259.

75. Hanan, J.J. Greenhouses: Advanced Technology for Protected Horticulture; CRC Press: Boca Raton, FL, USA, 2017; pp. 1–684.

76. Lin, K.; Chen, J.; Si, H.; Wu, J. A Review on Computer Vision Technologies Applied in Greenhouse Plant Stress Detection. Commun. Comput. Inf. Sci. 2013, 363, 192–200.

77. Tian, Z.; Ma, W.; Yang, Q.; Duan, F. Application Status and Challenges of Machine Vision in Plant Factory—A Review. Inf. Process. Agric. 2022, 9, 195–211.

78. Xu, T.; Qi, X.; Lin, S.; Zhang, Y.; Ge, Y.; Li, Z.; Dong, J.; Yang, X. A Neural Network Structure with Attention Mechanism and Additional Feature Fusion Layer for Tomato Flowering Phase Detection in Pollination Robots. Machines 2022, 10, 1076.

79. Zhou, C.; Hu, J.; Xu, Z.; Yue, J.; Ye, H.; Yang, G. A Novel Greenhouse-Based System for the Detection and Plumpness Assessment of Strawberry Using an Improved Deep Learning Technique. Front. Plant Sci. 2020, 11, 559.

80. Wang, X.; Liu, J.; Liu, G. Diseases Detection of Occlusion and Overlapping Tomato Leaves Based on Deep Learning. Front. Plant Sci. 2021, 12, 2812.

81. Blehm, C.; Vishnu, S.; Khattak, A.; Mitra, S.; Yee, R.W. Computer Vision Syndrome: A Review. Surv. Ophthalmol. 2005, 50, 253–262.

82. Kaiser, E.; Ouzounis, T.; Giday, H.; Schipper, R.; Heuvelink, E.; Marcelis, L.F.M. Adding Blue to Red Supplemental Light Increases Biomass and Yield of Greenhouse-Grown Tomatoes, but Only to an Optimum. Front. Plant Sci. 2019, 9, 2002.

83. Paradiso, R.; Proietti, S. Light-Quality Manipulation to Control Plant Growth and Photomorphogenesis in Greenhouse Horticulture: The State of the Art and the Opportunities of Modern LED Systems. J. Plant Growth Regul. 2022, 41, 742–780.

84. Hemming, J.; Rath, T. PA—Precision Agriculture. J. Agric. Eng. Res. 2001, 78, 233–243.

85. Fonteijn, H.; Afonso, M.; Lensink, D.; Mooij, M.; Faber, N.; Vroegop, A.; Polder, G.; Wehrens, R. Automatic Phenotyping of Tomatoes in Production Greenhouses Using Robotics and Computer Vision: From Theory to Practice. Agronomy 2021, 11, 1599.

86. Afonso, M.; Fonteijn, H.; Fiorentin, F.S.; Lensink, D.; Mooij, M.; Faber, N.; Polder, G.; Wehrens, R. Tomato Fruit Detection and Counting in Greenhouses Using Deep Learning. Front. Plant Sci. 2020, 11, 1759.

87. Benavides, M.; Cantón-Garbín, M.; Sánchez-Molina, J.A.; Rodríguez, F. Automatic Tomato and Peduncle Location System Based on Computer Vision for Use in Robotized Harvesting. Appl. Sci. 2020, 10, 5887.

| Crop | Tray Type |
|---|---|
| Tomato | 72, 104, 260, 486 |
| Broccoli | 260 |
| Watermelon | 260 |
| Pepper | 104 |
| Lettuce | 260 |
| Cabbage | 104 |

| Metric | EfficientNet | SSD MobileNet | ResNet |
|---|---|---|---|
| Classification loss | 0.10 | 0.08 | 0.13 |
| Localization loss | 0.13 | 0.05 | 0.07 |
| Mean Average Precision (mAP) | 0.617 | 0.567 | 0.554 |

| Test | Average Time | Number of Trays Counted | Number of Seedlings per Tray | Percentage Obtained |
|---|---|---|---|---|
| Industrial worker | 12 min 40 s | 30 trays | 89 | 85.5% |
| Our proposal | 9 min 35 s | 30 trays | 89 | 91.3% |
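The timing figures in the field-test table above can be turned into per-tray values with simple arithmetic. The sketch below assumes that each reported time covers all 30 trays; that reading, and the helper `to_seconds`, are assumptions made for illustration:

```python
def to_seconds(minutes, seconds):
    """Convert a 'min s' duration to seconds (hypothetical helper)."""
    return minutes * 60 + seconds


TRAYS = 30                      # trays counted in each test
worker = to_seconds(12, 40)     # industrial worker: 760 s
proposal = to_seconds(9, 35)    # proposed system: 575 s

worker_per_tray = worker / TRAYS
proposal_per_tray = proposal / TRAYS
reduction = (worker - proposal) / worker  # relative time saved

print(f"{worker_per_tray:.1f} s per tray (worker)")      # 25.3 s per tray (worker)
print(f"{proposal_per_tray:.1f} s per tray (proposal)")  # 19.2 s per tray (proposal)
print(f"{reduction:.1%} less time")                      # 24.3% less time
```

Under that assumption, the proposed system needs about 6 s less per tray than manual counting.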

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fuentes-Peñailillo, F.; Carrasco Silva, G.; Pérez Guzmán, R.; Burgos, I.; Ewertz, F. Automating Seedling Counts in Horticulture Using Computer Vision and AI. Horticulturae 2023, 9, 1134. https://doi.org/10.3390/horticulturae9101134
