Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm

Abstract

1. Introduction

2. Materials and Methods

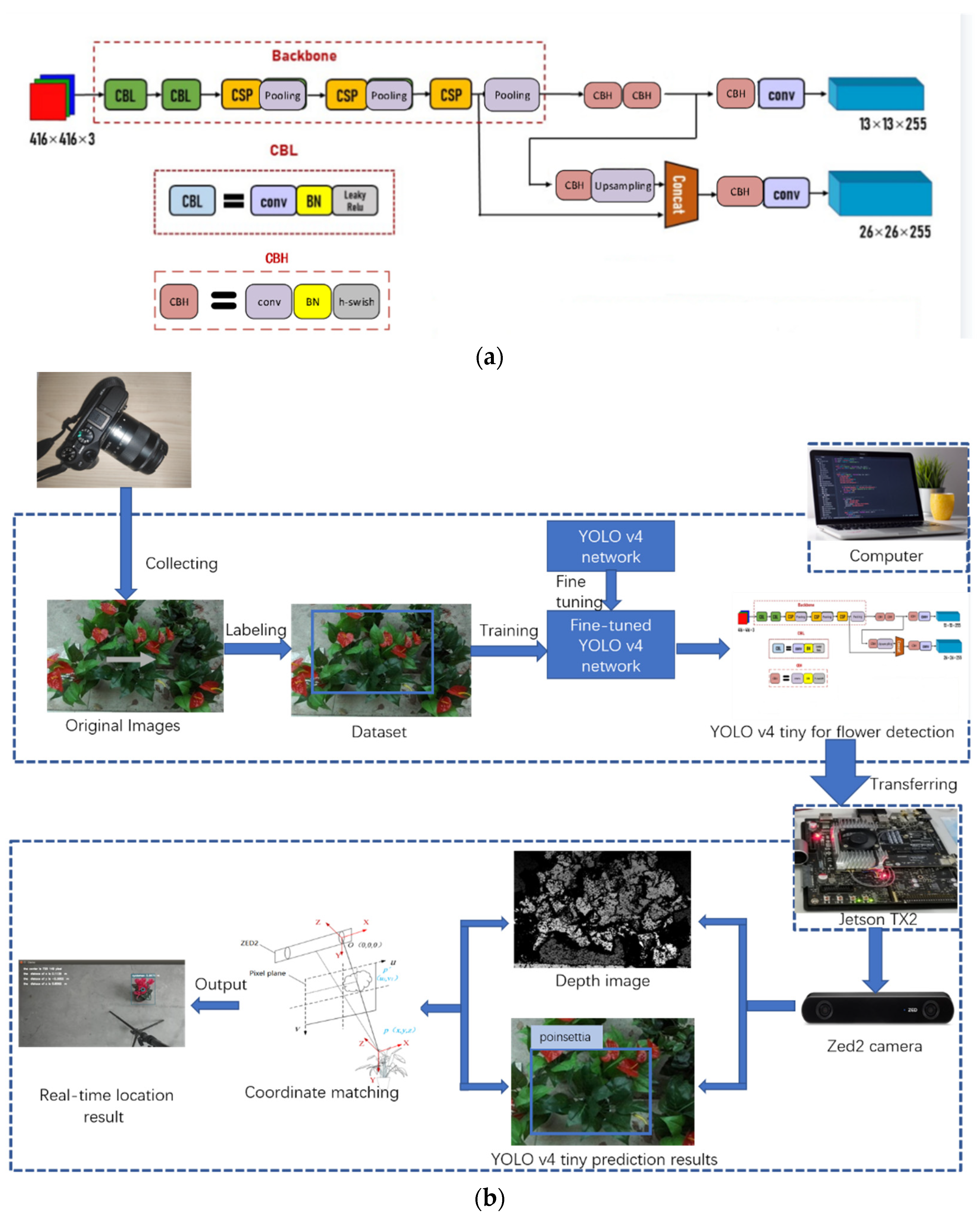

2.1. Process of the Flower Detection and Location Based on the ZED 2 Stereo Camera and the YOLO V4-Tiny Model

2.2. Potted Flower Detection Based on the YOLO V4-Tiny Model

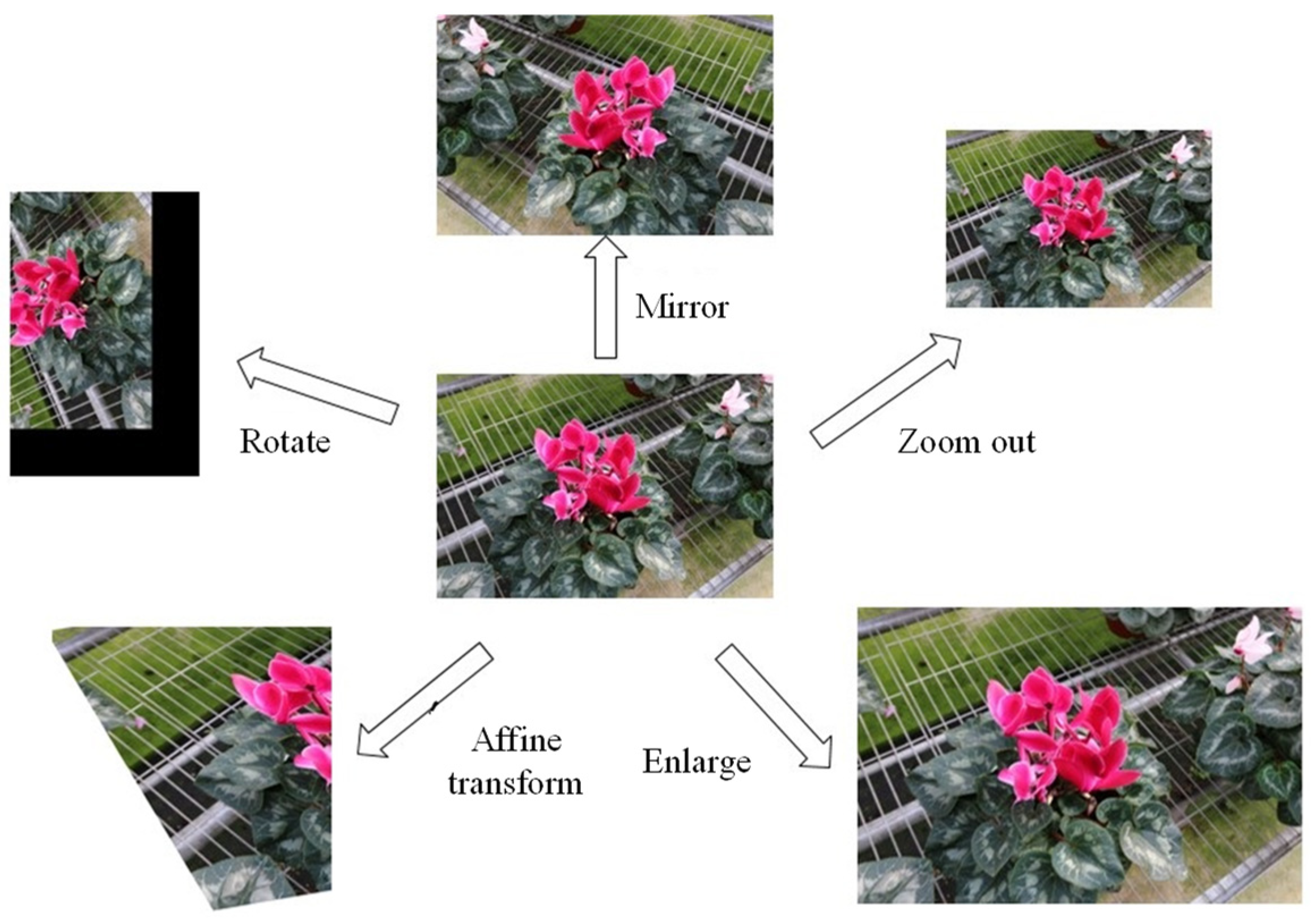

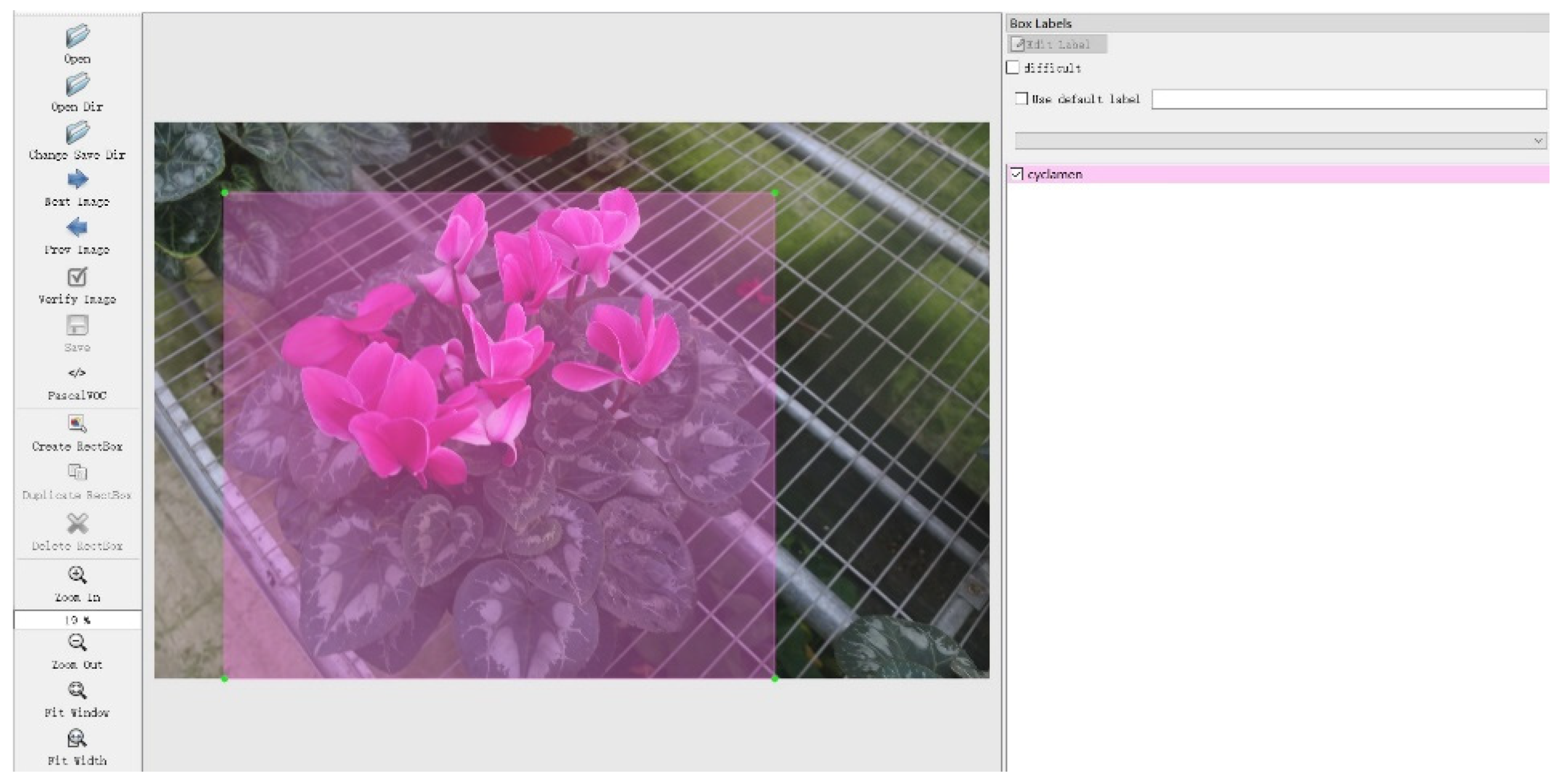

2.2.1. Data Collection for YOLO V4-Tiny Model Training

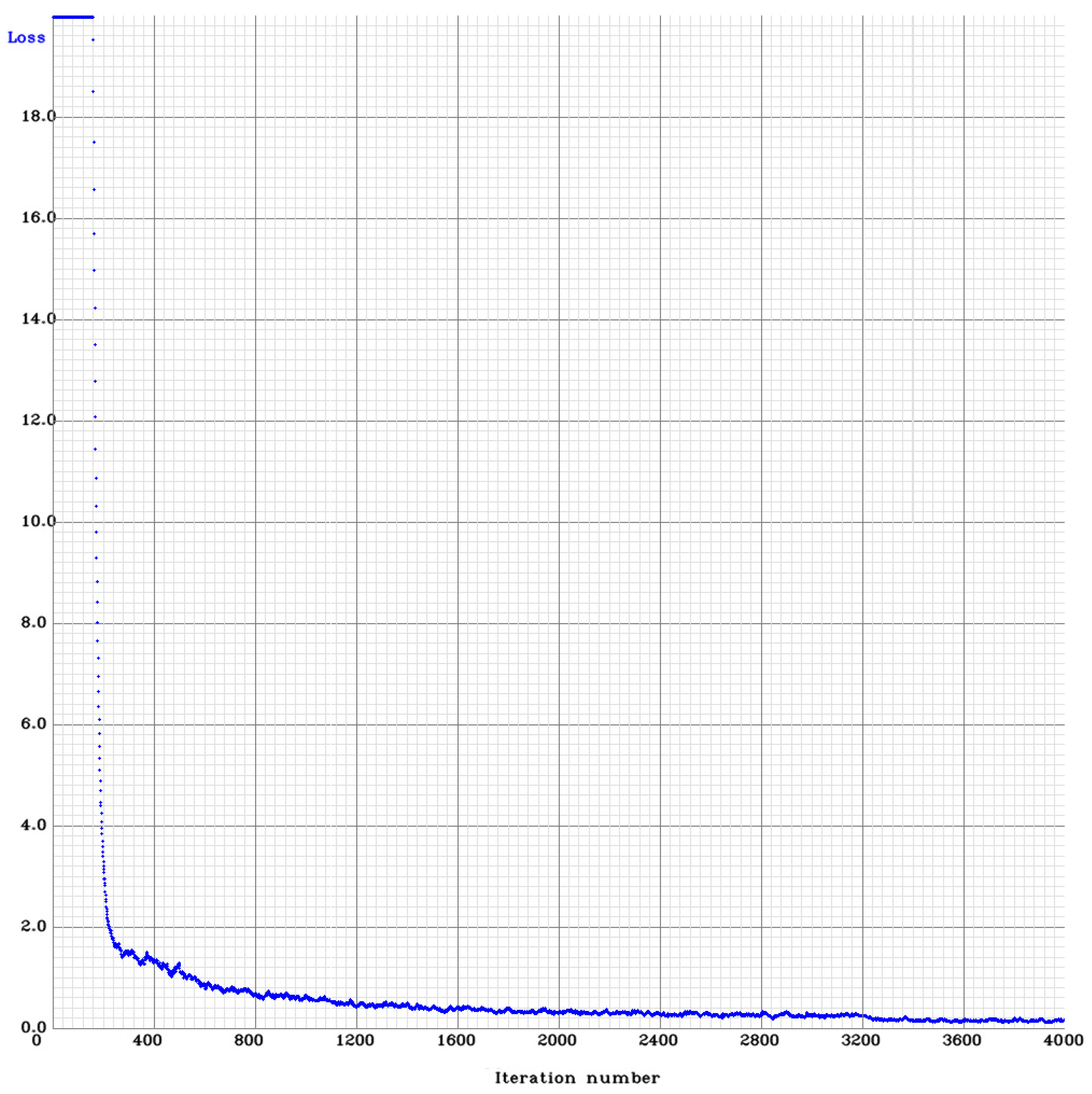

2.2.2. Model Training

2.3. Real-Time Detection Based on the ZED 2 Camera and the Jetson TX2

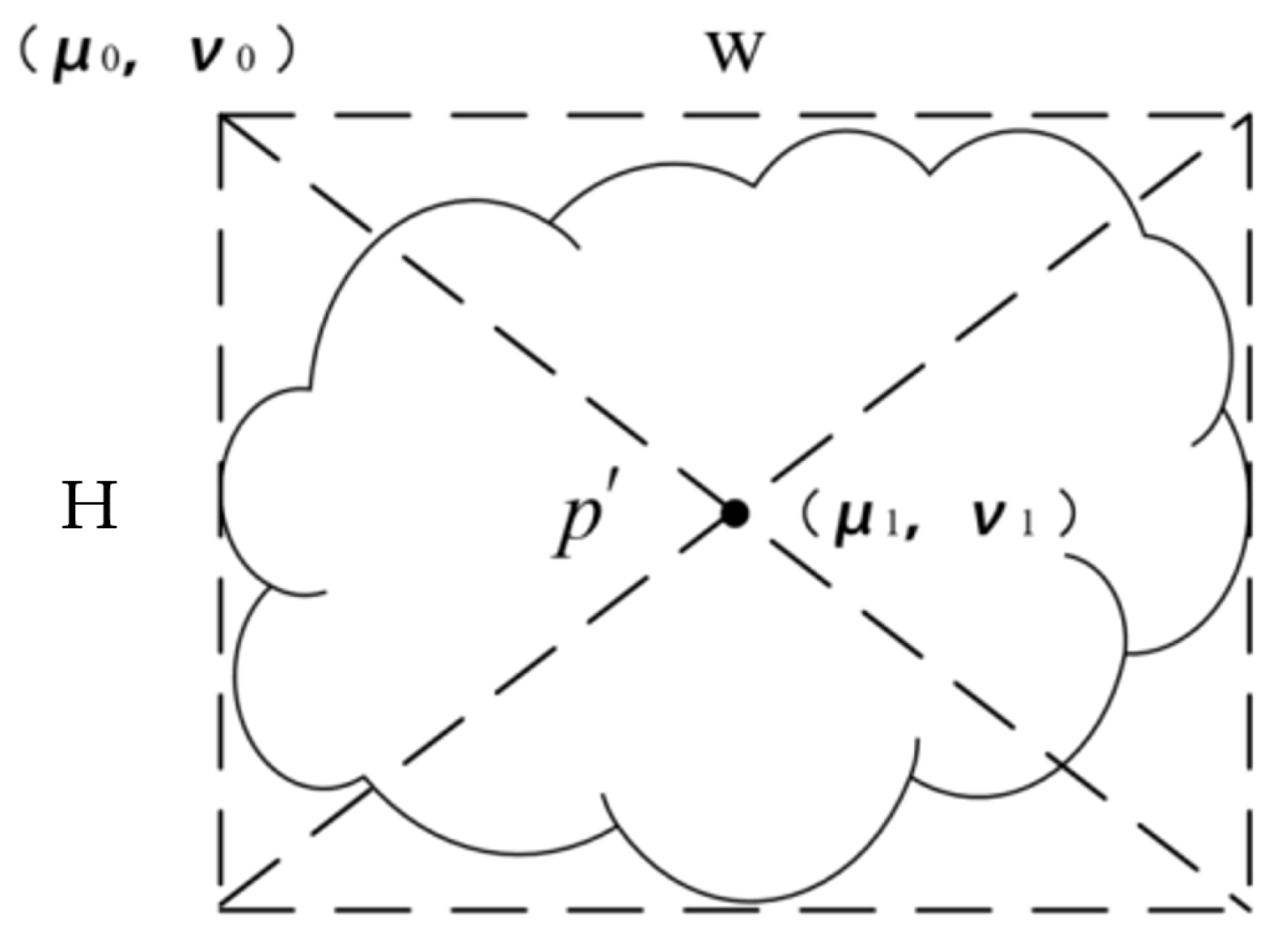

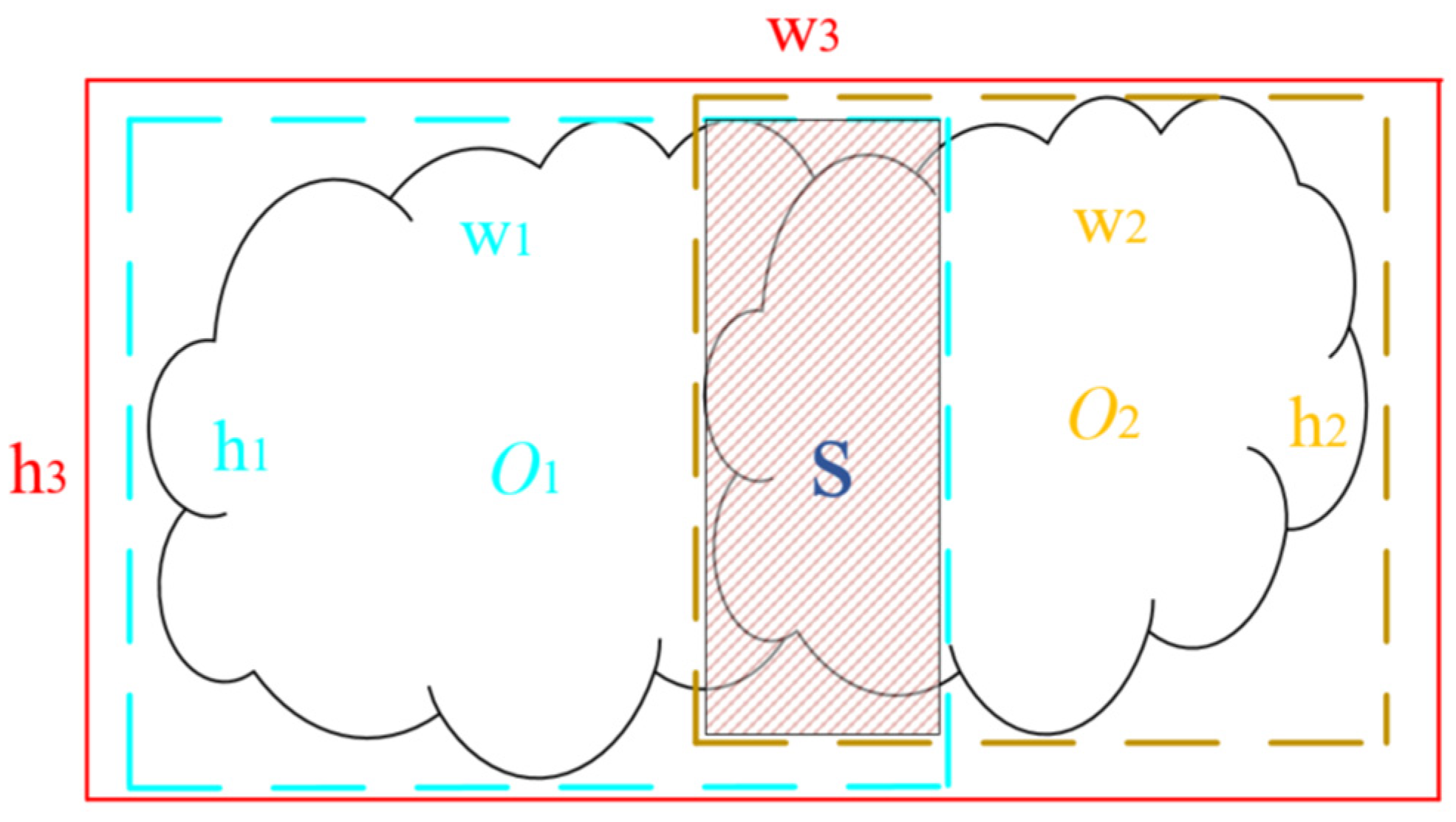

2.3.1. Plane Location Based on the YOLO V4-Tiny Detection Result

2.3.2. Spatial Location Based on the ZED 2 Stereo Camera

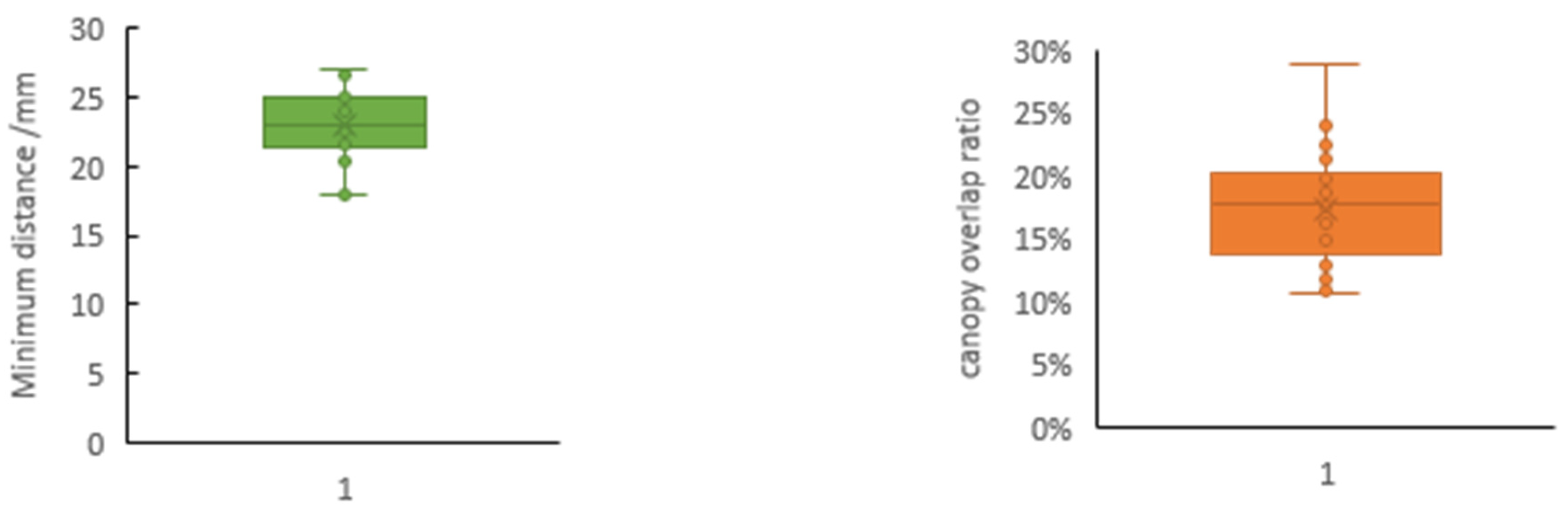

2.4. Detection Accuracy Affected by Different Overlap Ratios

2.5. Detection Accuracy Affected by Natural Light

3. Results

3.1. Training Results of the YOLO V4-Tiny Model

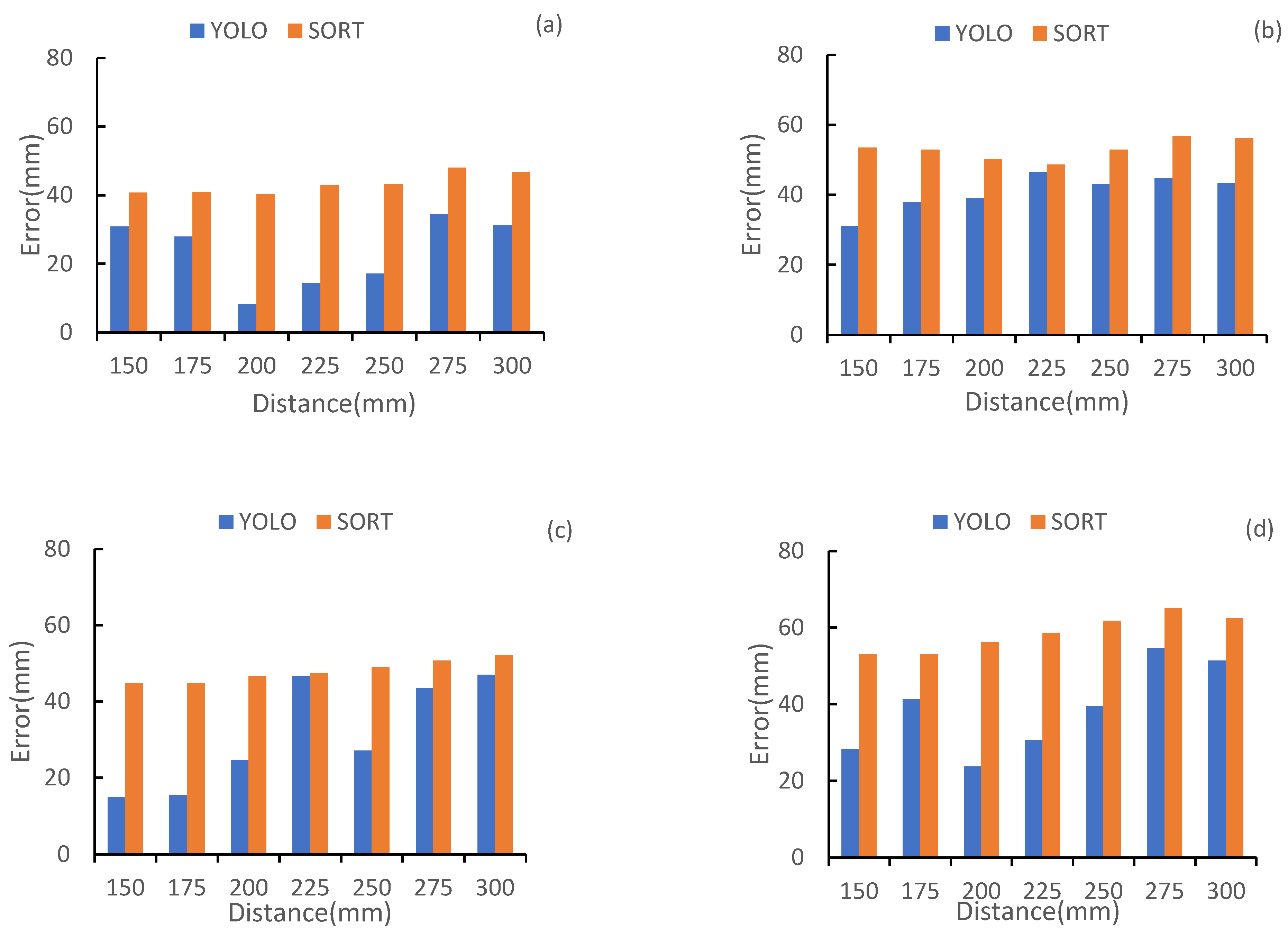

3.2. Spatial Location Results

3.3. Detection Results for Different Overlap Ratios

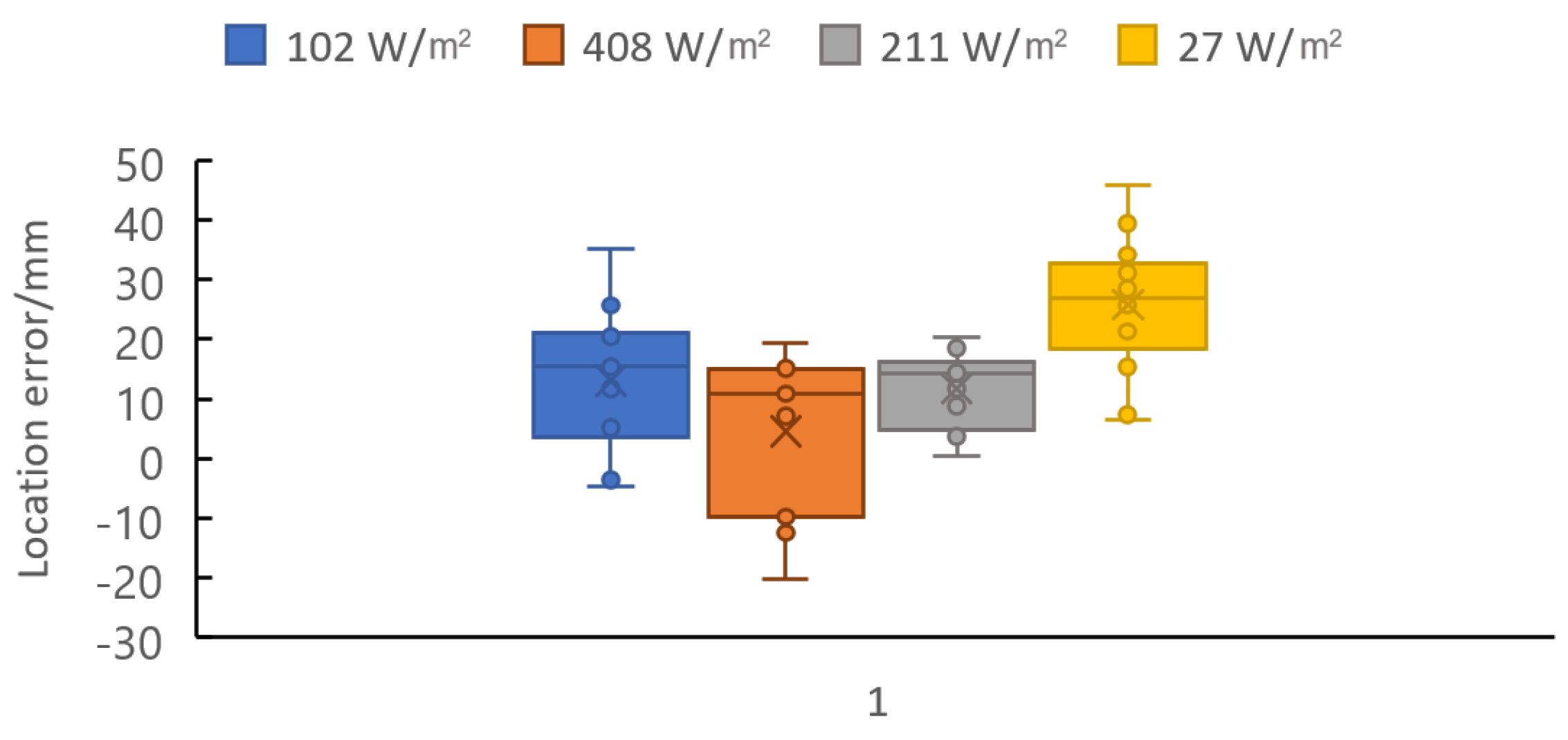

3.4. Detection and Location Results under Different Light Conditions

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Average Precision (AP): Poinsettia | Average Precision (AP): Cyclamen | Average Precision (AP): Average | Recall | Intersection over Union (IoU) | Mean Average Precision (mAP) | Average Detection Frame Rate |
|---|---|---|---|---|---|---|---|
| IoU = 0.5 | 90.56% | 88.89% | 89.00% | 87.00% | 68.18% | 89.72% | 16 FPS |
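The mAP figure in this table can be cross-checked against the two per-class AP values. The snippet below is a minimal sketch of that arithmetic, assuming mAP is the unweighted mean of the per-class APs (the usual definition). The function name `mean_average_precision` and the dictionary layout are illustrative and do not come from the source.

```python
# Per-class AP values at IoU = 0.5, copied from the table above (percent).
ap_percent = {"Poinsettia": 90.56, "Cyclamen": 88.89}


def mean_average_precision(per_class_ap):
    """Return the unweighted mean of the per-class AP values.

    Hypothetical helper for illustration; assumes every class
    contributes equally to the mean.
    """
    if not per_class_ap:
        raise ValueError("at least one class is required")
    return sum(per_class_ap.values()) / len(per_class_ap)


map_percent = mean_average_precision(ap_percent)
print(f"mAP = {map_percent:.3f}%")
```

Under this assumption the mean of 90.56% and 88.89% is 89.725%, which agrees with the reported 89.72% to within rounding of the two-decimal AP values.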

| Time | 9:00 | 13:00 | 15:00 | 17:00 |
|---|---|---|---|---|
| Radiation (W/m²) | 102 | 408 | 211 | 27 |
| Detected numbers | 14 | 15 | 15 | 13 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2022, 8, 21. https://doi.org/10.3390/horticulturae8010021