Monitoring Chrysanthemum Cultivation Areas Using Remote Sensing Technology

Abstract

1. Introduction

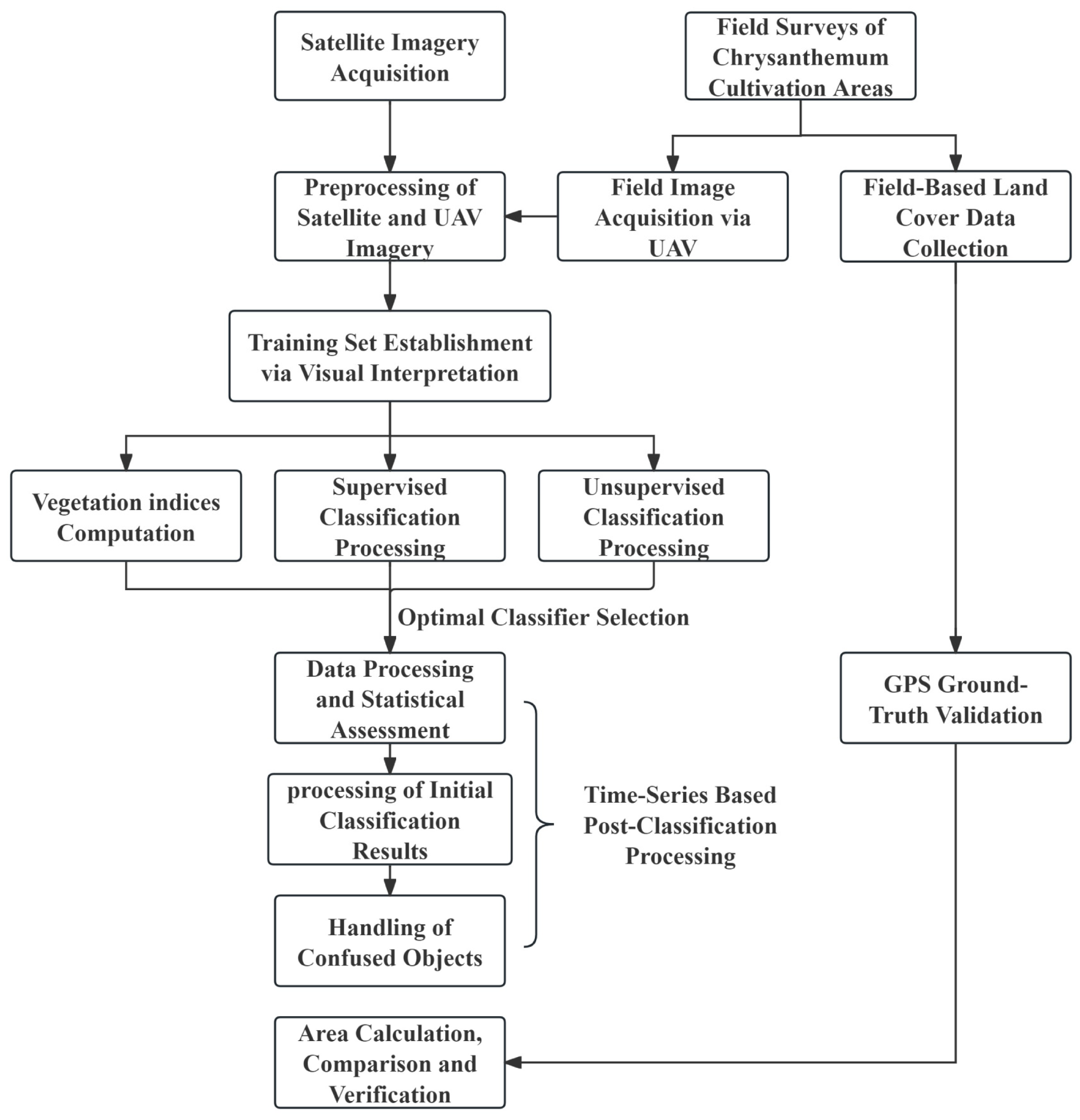

2. Materials and Methods

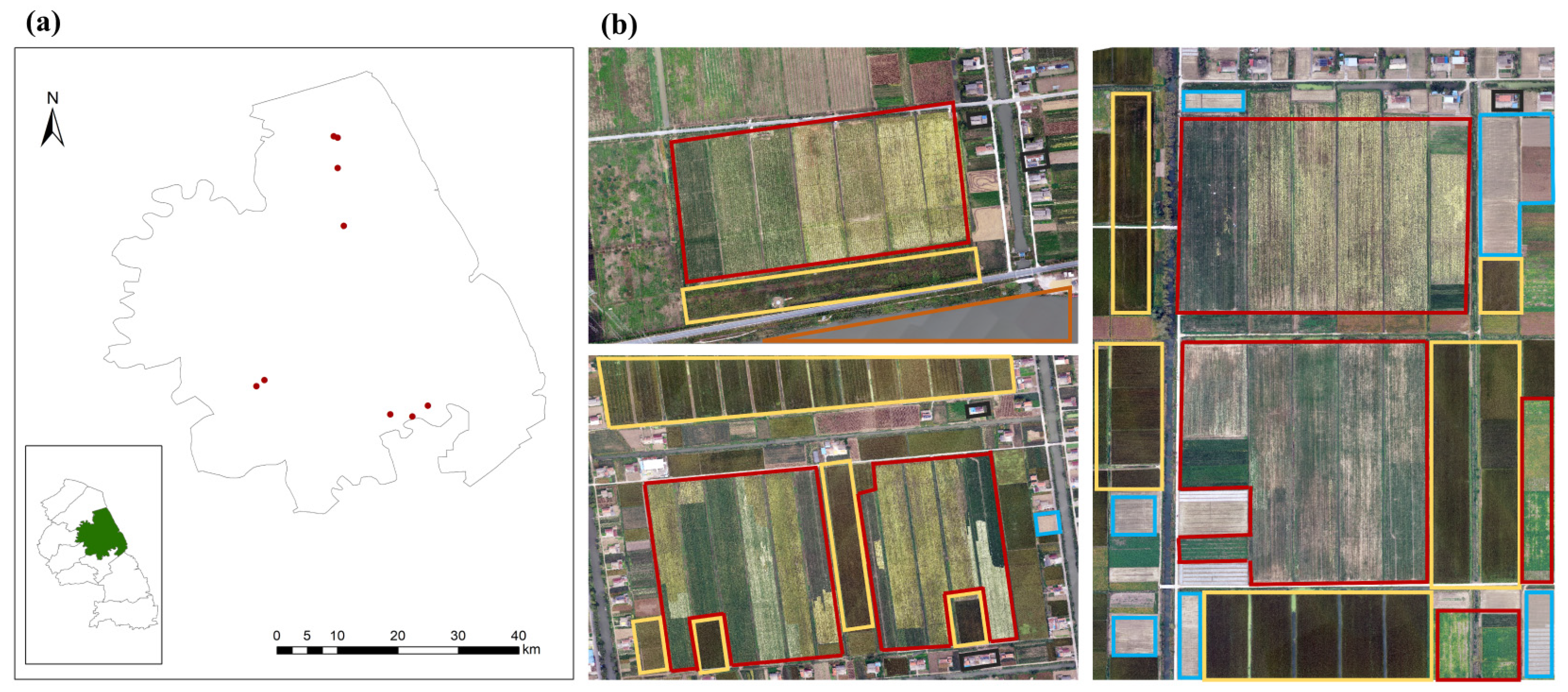

2.1. Study Area Description

2.2. Acquisition of Remote Sensing Data

2.3. Preprocessing of Remote Sensing Data

2.4. Calculation of the Vegetation Indices (VIs)
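
The vegetation indices listed under Abbreviations (NDVI, GNDVI, EVI2, CIgreen and SR) have standard band-ratio definitions. The sketch below implements those textbook formulas. It is not the study's code, and it makes a few assumptions:

- Inputs are surface reflectance scaled to 0 to 1. The EVI2 constants assume this scaling.
- The parameter names `nir`, `red` and `green` are illustrative.
- The specific sensor bands the study used are not stated in this section.

```python
"""Textbook vegetation-index formulas (a sketch, not the study's code).

Inputs are assumed to be surface reflectance scaled to 0..1; the EVI2
constants assume that scaling. Because only arithmetic operators are used,
the same functions also work element-wise on NumPy band arrays.
"""


def ndvi(nir: float, red: float) -> float:
    # Normalized Difference Vegetation Index
    return (nir - red) / (nir + red)


def gndvi(nir: float, green: float) -> float:
    # Green NDVI: the red band is replaced by the green band
    return (nir - green) / (nir + green)


def evi2(nir: float, red: float) -> float:
    # Two-band Enhanced Vegetation Index (no blue band required)
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1.0)


def ci_green(nir: float, green: float) -> float:
    # Green Chlorophyll Index
    return nir / green - 1.0


def simple_ratio(nir: float, red: float) -> float:
    # Simple Ratio
    return nir / red


if __name__ == "__main__":
    # Hypothetical reflectances for a single vegetated pixel
    nir, red, green = 0.45, 0.05, 0.08
    print(f"NDVI    {ndvi(nir, red):.3f}")
    print(f"GNDVI   {gndvi(nir, green):.3f}")
    print(f"EVI2    {evi2(nir, red):.3f}")
    print(f"CIgreen {ci_green(nir, green):.3f}")
    print(f"SR      {simple_ratio(nir, red):.3f}")
```

With plain floats, a zero denominator raises `ZeroDivisionError`. With NumPy arrays it yields `inf` or `nan` instead, so masking no-data pixels before computing the indices is advisable.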

2.5. Unsupervised Classification and Supervised Classification
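
The supervised classifiers compared in this study include minimum distance classification (MDC), alongside MLC, MaDC, PC, NNC and SVM (see Abbreviations). The hedged sketch below illustrates the supervised versus unsupervised distinction with two simple methods:

- A minimum-distance classifier, which needs labelled training pixels.
- A Lloyd-style k-means clustering, which does not.

This section does not say which unsupervised algorithm was used. The k-means variant, its seeding, and the class names and band values are all illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Pixel = Sequence[float]


def mean_vector(pixels: Sequence[Pixel]) -> List[float]:
    # Band-wise mean of a list of pixel spectra
    n = len(pixels)
    return [sum(p[b] for p in pixels) / n for b in range(len(pixels[0]))]


def distance(a: Pixel, b: Pixel) -> float:
    # Euclidean distance in spectral (band) space
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def train_minimum_distance(samples: Dict[str, List[Pixel]]) -> Dict[str, List[float]]:
    # Supervised: each class is represented only by the mean of its training pixels
    return {label: mean_vector(pixels) for label, pixels in samples.items()}


def classify_minimum_distance(pixel: Pixel, means: Dict[str, List[float]]) -> str:
    # Assign the class whose mean spectrum is closest to the pixel
    return min(means, key=lambda label: distance(pixel, means[label]))


def kmeans(pixels: List[Pixel], k: int, iterations: int = 20) -> Tuple[List[int], List[List[float]]]:
    # Unsupervised: Lloyd-style k-means seeded with the first k pixels (deterministic)
    centroids = [list(p) for p in pixels[:k]]
    labels: List[int] = []
    for _ in range(iterations):
        # Assignment step: nearest centroid for every pixel
        labels = [min(range(k), key=lambda j: distance(p, centroids[j])) for p in pixels]
        # Update step: move each centroid to the mean of its members
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster empties
                centroids[j] = mean_vector(members)
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical (red, NIR) reflectances, not data from the study
    training = {
        "chrysanthemum": [[0.05, 0.45], [0.06, 0.50]],
        "other": [[0.20, 0.25], [0.22, 0.27]],
    }
    means = train_minimum_distance(training)
    print(classify_minimum_distance([0.07, 0.47], means))  # chrysanthemum
    labels, _ = kmeans([[0.05, 0.45], [0.20, 0.25], [0.06, 0.50], [0.22, 0.27]], k=2)
    print(labels)  # [0, 1, 0, 1]
```

The clusters returned by k-means carry no class names. An analyst still has to label them afterwards, which is the main practical difference from the supervised route.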

2.6. Accuracy Validation
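
The result tables report overall accuracy (OA), the Kappa coefficient, producer's accuracy (PA) and user's accuracy (UA). The Abbreviations also list omission errors (OE) and commission errors (CE). All of these follow from a confusion matrix by their standard definitions.

Below is a minimal sketch. It assumes rows hold reference classes and columns hold predicted classes; orientation conventions vary between tools. The two-class matrix is hypothetical.

```python
from typing import Dict, List, Sequence


def accuracy_report(cm: Sequence[Sequence[int]]) -> Dict[str, object]:
    """Accuracy statistics from a square confusion matrix.

    cm[i][j] counts validation samples whose reference class is i and whose
    predicted class is j (rows = reference, columns = classification).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(k)]                        # reference totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]   # predicted totals
    diag = [cm[i][i] for i in range(k)]

    overall = sum(diag) / n
    # Agreement expected by chance, from the marginal totals
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (overall - expected) / (1.0 - expected)

    producers: List[float] = [d / r if r else float("nan") for d, r in zip(diag, row_totals)]
    users: List[float] = [d / c if c else float("nan") for d, c in zip(diag, col_totals)]
    return {
        "OA": overall,
        "Kappa": kappa,
        "PA": producers,
        "UA": users,
        "OE": [1.0 - p for p in producers],  # omission error = 1 - PA
        "CE": [1.0 - u for u in users],      # commission error = 1 - UA
    }


if __name__ == "__main__":
    # Hypothetical matrix: class 0 = chrysanthemum, class 1 = other
    report = accuracy_report([[50, 5], [10, 35]])
    for key, value in report.items():
        print(key, value)
```

For this matrix, OA is 0.85 and Kappa is (0.85 − 0.51) / (1 − 0.51) ≈ 0.69. A high UA with a low PA, or the reverse, shows whether a method tends to miss the class or to over-assign it.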

3. Results

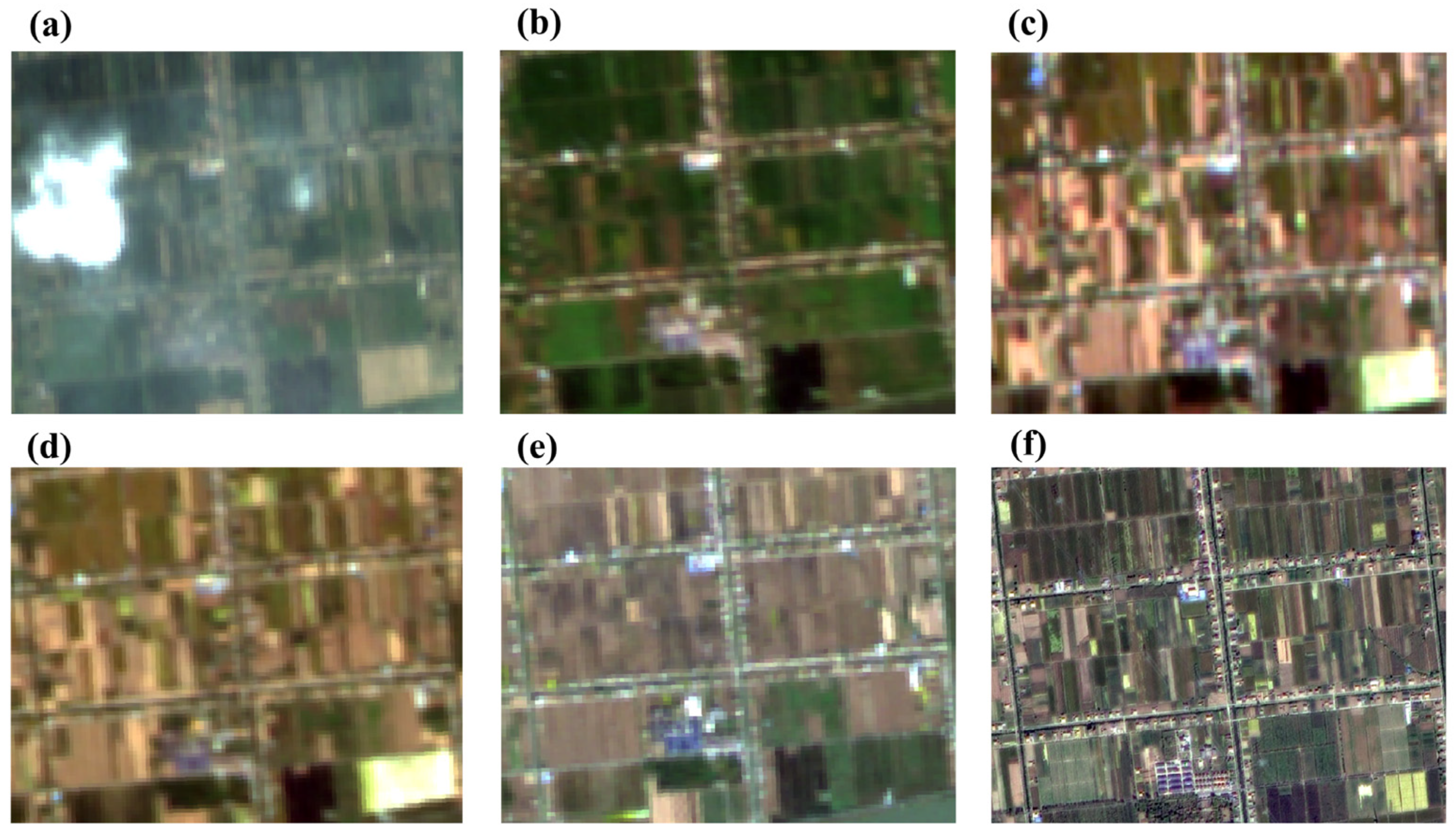

3.1. Analysis of Drone Image Features

3.2. Analysis of Satellite Remote Sensing Image Features

3.3. VI Calculation for Sheyang County

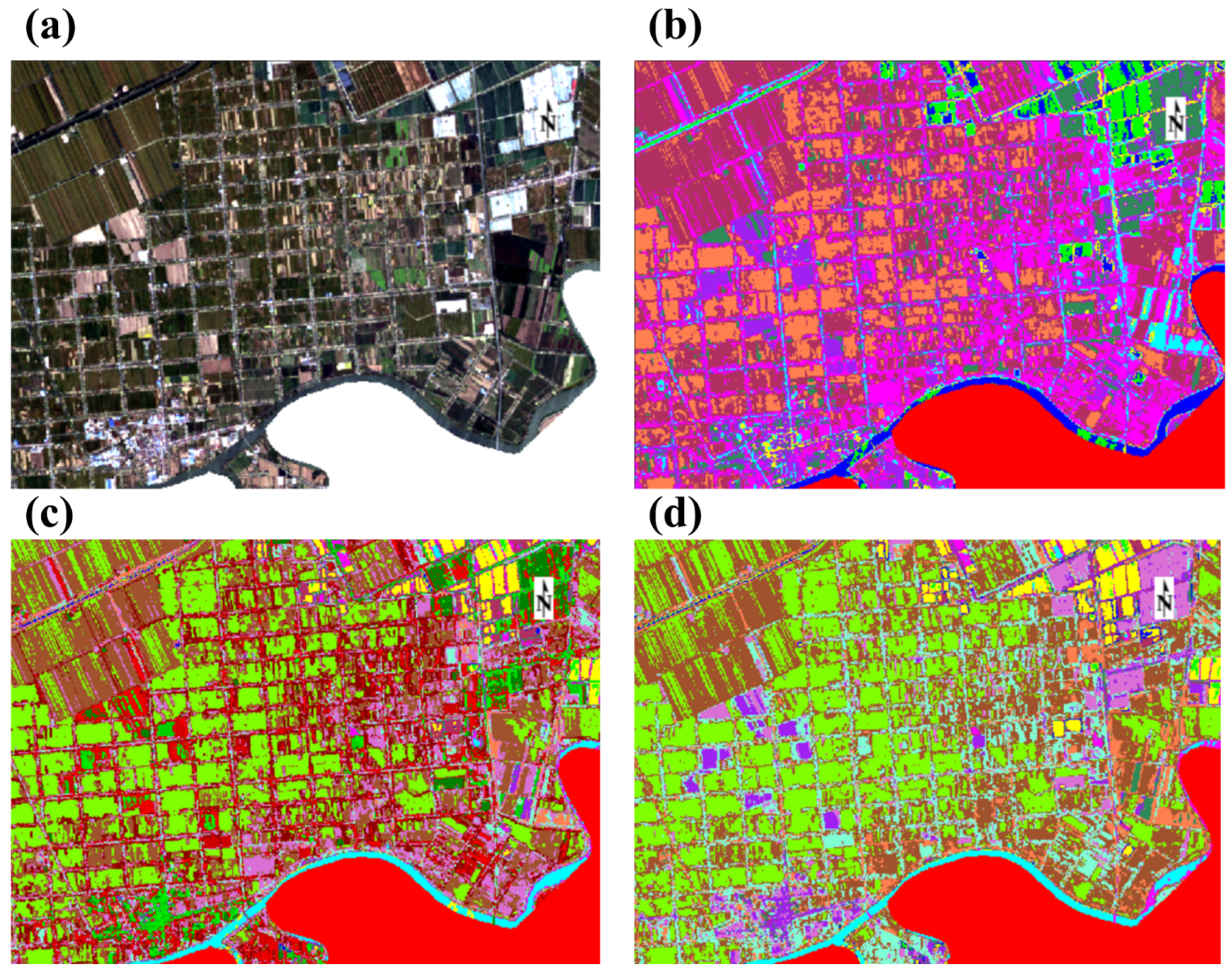

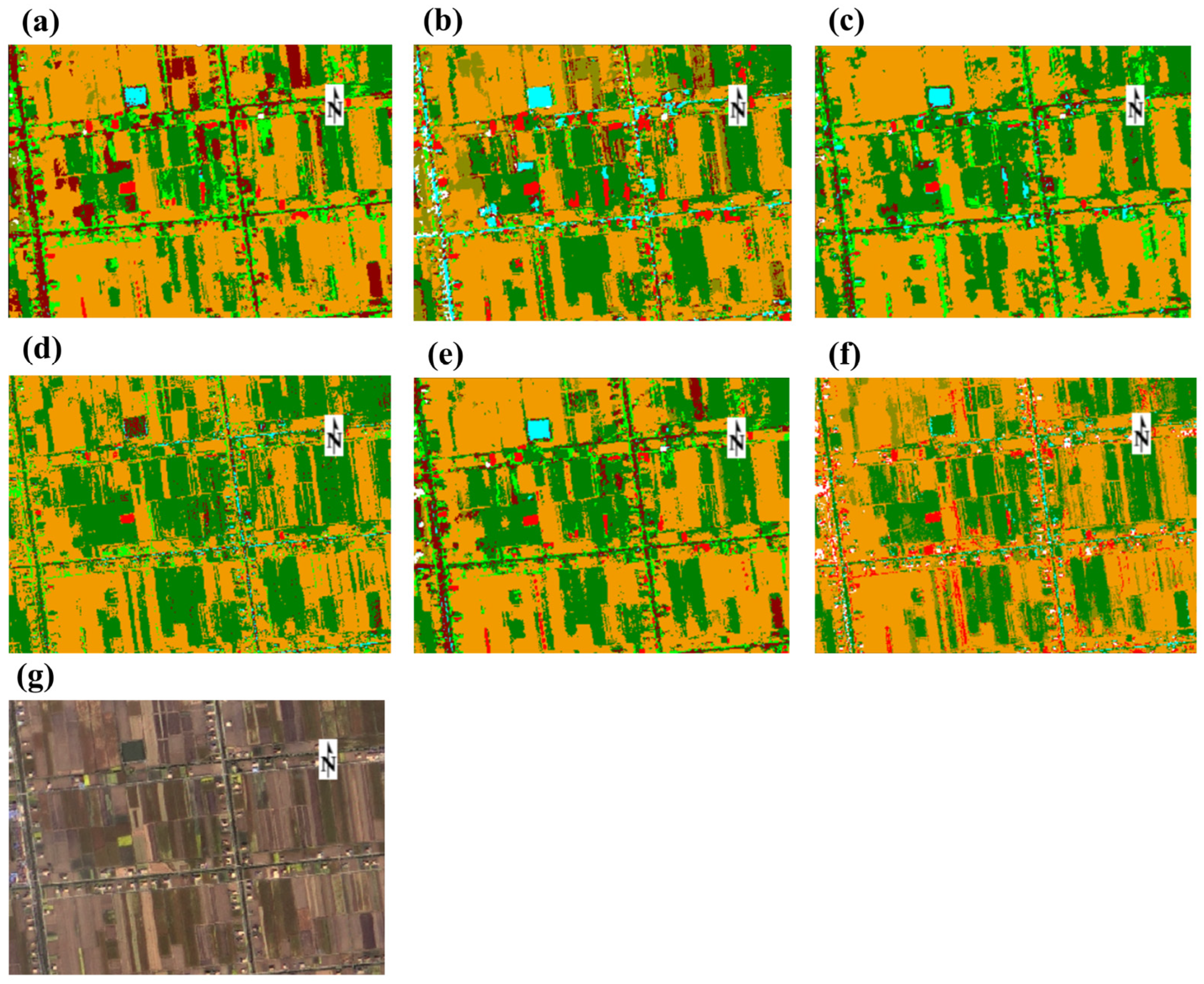

3.4. Assessment Results of Unsupervised and Supervised Classification Methods

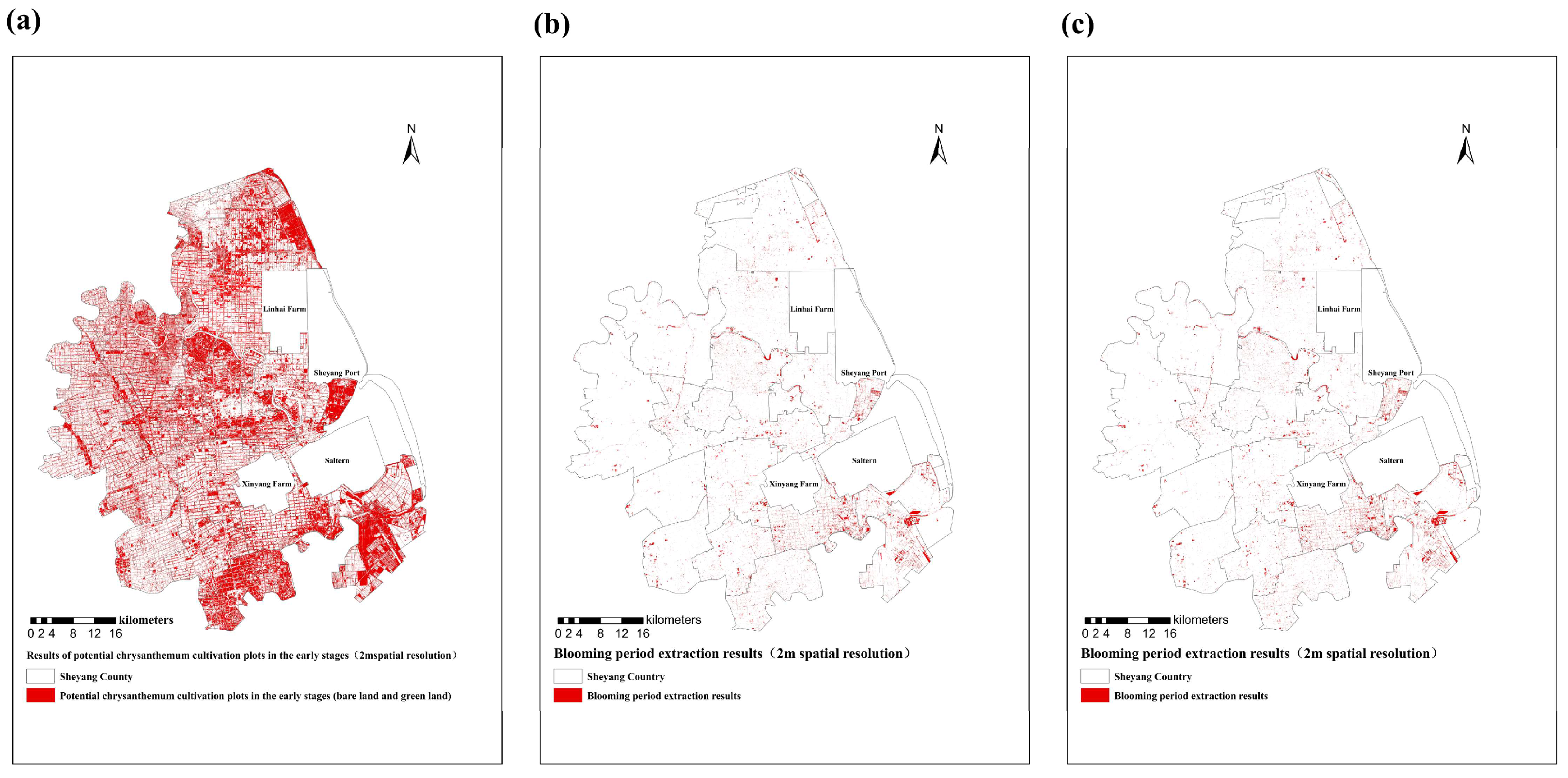

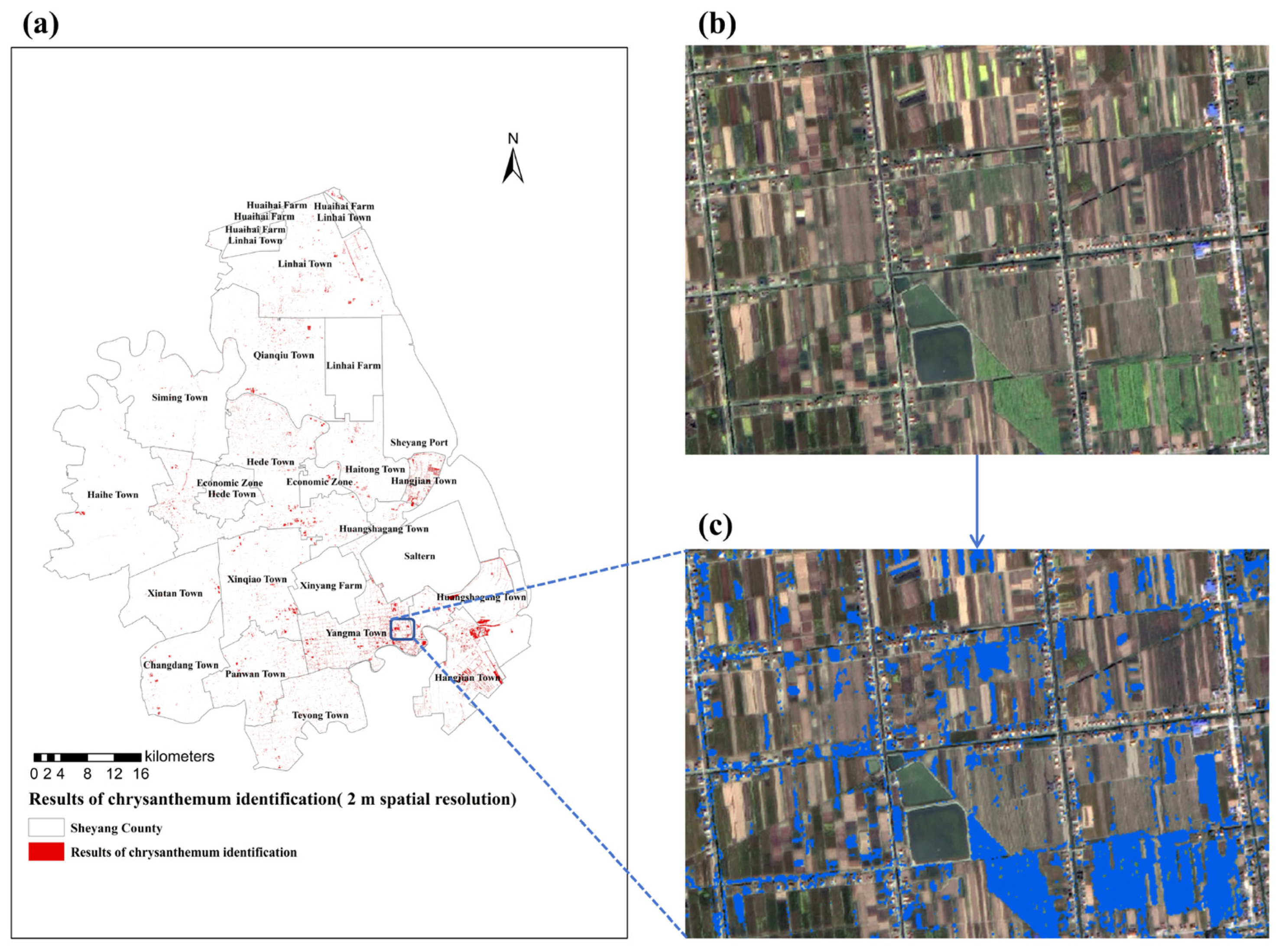

3.5. Time-Series-Based Post-Classification Processing

3.5.1. Processing of Initial Classification Results

3.5.2. Accuracy Assessment

3.5.3. Handling of Confused Objects

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CE | Commission Errors |
| CIgreen | Green Chlorophyll Index |
| EVI2 | Enhanced Vegetation Index 2 |
| GNDVI | Green Normalized Difference Vegetation Index |
| MaDC | Mahalanobis Distance Classification |
| MDC | Minimum Distance Classification |
| MLC | Maximum Likelihood Classification |
| NDVI | Normalized Difference Vegetation Index |
| NNC | Neural Network Classification |
| OA | Overall Accuracy |
| OE | Omission Errors |
| PA | Producer’s Accuracy |
| PC | Parallelepiped Classification |
| SR | Simple Ratio |
| SVM | Support Vector Machine Classification |
| UA | User’s Accuracy |
| UAV | Unmanned Aerial Vehicle |
| VIs | Vegetation Indices |


| Classification Methods | Overall Accuracy (OA)/% | Kappa Coefficient | Producer’s Accuracy (PA)/% | User’s Accuracy (UA)/% | Chrysanthemum Area at 2 m Spatial Resolution/m² | Processing Time for 2 m Spatial Resolution Imagery/s | Processing Time for 16 m Spatial Resolution Imagery/s |
|---|---|---|---|---|---|---|---|
| Maximum Likelihood Classification (MLC) | 95.45 | 0.94 | 97.9 | 99.71 | 685,579.03 | 37 | 2 |
| Neural Network Classification (NNC) | 95.34 | 0.93 | 97.5 | 95.17 | 2,697,192.58 | 9192 | 226 |
| Mahalanobis Distance Classification (MaDC) | 91.68 | 0.88 | 88.72 | 99.68 | 392,595.25 | 37 | 1 |
| Parallelepiped Classification (PC) | 88.85 | 0.84 | 90.01 | 56.66 | 5,699,213.42 | 12 | 1 |
| Minimum Distance Classification (MDC) | 85.87 | 0.80 | 60.02 | 100 | 102,925.11 | 13 | 1 |
| Support Vector Machine Classification (SVM) | 97.55 | 0.97 | 99.02 | 99.48 | 707,741.69 | 20,276 | 8 |

| Regions | Month | OA/% | Kappa | PA/% | UA/% |
|---|---|---|---|---|---|
| Linhai Town | August | 99.34 | 0.99 | 97.94 | 89.15 |
| | September | 97.48 | 0.96 | 98.88 | 68.10 |
| | October | 88.95 | 0.84 | 93.48 | 53.50 |
| | November | 93.80 | 0.91 | 98.16 | 19.99 |
| Yangma Town | August | 86.92 | 0.82 | 96.12 | 91.99 |
| | October | 96.25 | 0.95 | 96.70 | 98.08 |
| | November | 96.01 | 0.94 | 97.78 | 100.00 |
| Huangjian Town | August | 92.00 | 0.88 | 96.93 | 93.01 |
| | October | 96.40 | 0.94 | 96.30 | 60.69 |
| | November | 88.14 | 0.83 | 97.20 | 19.43 |
| Teyong Town | August | 94.62 | 0.92 | 65.14 | 88.95 |
| | October | 95.37 | 0.91 | 62.67 | 74.04 |
| | November | 94.99 | 0.92 | 95.96 | 100.00 |
| Hede Town | August | 87.15 | 0.81 | 95.47 | 84.93 |
| | September | 97.77 | 0.97 | 99.98 | 56.75 |
| | October | 91.86 | 0.83 | 66.28 | 51.94 |
| | November | 98.59 | 0.98 | 87.83 | 46.26 |
| Huangshagang Town | August | 90.92 | 0.86 | 98.37 | 74.42 |
| | October | 98.22 | 0.97 | 83.51 | 92.60 |
| | November | 89.20 | 0.82 | 100.00 | 97.18 |
| Haitong Town | August | 93.48 | 0.88 | 96.02 | 90.77 |
| | October | 96.05 | 0.94 | 86.19 | 29.53 |
| | November | 93.51 | 0.89 | 95.80 | 17.94 |
| Xinqiao Town, Panwan Town | August | 96.61 | 0.95 | 92.25 | 55.15 |
| | October | 93.98 | 0.87 | 86.37 | 57.65 |
| | November | 87.58 | 0.81 | 93.97 | 31.84 |
| Changdang Town | August | 91.34 | 0.87 | 99.26 | 97.85 |
| | September | 96.40 | 0.94 | 99.53 | 65.02 |
| | October | 97.84 | 0.92 | 99.23 | 35.22 |
| | November | 97.62 | 0.96 | 92.30 | 25.03 |
| Xintan Town, Haihe Town, Siming Town | August | 88.17 | 0.83 | 98.57 | 89.71 |
| | October | 92.84 | 0.89 | 85.83 | 74.62 |
| | November | 93.69 | 0.89 | 91.61 | 44.48 |
| Qianqiu Town | August | 97.97 | 0.97 | 99.17 | 99.75 |
| | October | 89.35 | 0.80 | 85.32 | 20.04 |
| | November | 91.20 | 0.86 | 84.55 | 40.49 |

| Regions | Chrysanthemum Area at 2 m Spatial Resolution/m² | Chrysanthemum Area at 16 m Spatial Resolution/m² | Conversion Ratio/% |
|---|---|---|---|
| Linhai Town | 2,592,552.13 | 2,776,735.73 | 93.37 |
| Yangma Town | 10,603,930.93 | 9,956,659.343 | 106.50 |
| Huangjian Town | 9,244,783.37 | 5,358,070.392 | 172.54 |
| Teyong Town | 2,342,952.75 | 3,237,189.983 | 72.38 |
| Hede Town | 7,214,260.95 | 4,050,060.313 | 178.13 |
| Huangshagang Town | 3,478,411.90 | 3,221,309.184 | 107.98 |
| Haitong Town | 1,307,883.31 | 2,880,202.84 | 45.41 |
| Xinqiao Town, Panwan Town | 5,085,690.69 | 5,072,248.433 | 100.27 |
| Changdang Town | 1,066,312.37 | 2,053,597.21 | 51.92 |
| Xintan Town, Haihe Town, Siming Town | 1,613,960.99 | 5,390,912.34 | 29.94 |
| Qianqiu Town | 2,399,604.05 | 2,335,552.518 | 102.74 |
| Total | 46,950,343.44 | 46,332,538.29 | / |
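
The Conversion Ratio column matches the 2 m area divided by the 16 m area for each region. For example, for Linhai Town 2,592,552.13 / 2,776,735.73 ≈ 93.37%. Reading the column this way is inferred from the table's own numbers rather than stated in this section.

The sketch below recomputes the ratios and both totals from the values above.

```python
# (area from 2 m imagery, area from 16 m imagery) in m², copied from the table above
AREAS = {
    "Linhai Town": (2_592_552.13, 2_776_735.73),
    "Yangma Town": (10_603_930.93, 9_956_659.343),
    "Huangjian Town": (9_244_783.37, 5_358_070.392),
    "Teyong Town": (2_342_952.75, 3_237_189.983),
    "Hede Town": (7_214_260.95, 4_050_060.313),
    "Huangshagang Town": (3_478_411.90, 3_221_309.184),
    "Haitong Town": (1_307_883.31, 2_880_202.84),
    "Xinqiao Town, Panwan Town": (5_085_690.69, 5_072_248.433),
    "Changdang Town": (1_066_312.37, 2_053_597.21),
    "Xintan Town, Haihe Town, Siming Town": (1_613_960.99, 5_390_912.34),
    "Qianqiu Town": (2_399_604.05, 2_335_552.518),
}


def conversion_ratio(area_2m: float, area_16m: float) -> float:
    """Ratio of the 2 m area to the 16 m area, as a percentage."""
    return area_2m / area_16m * 100.0


ratios = {region: conversion_ratio(a2, a16) for region, (a2, a16) in AREAS.items()}
total_2m = sum(a2 for a2, _ in AREAS.values())
total_16m = sum(a16 for _, a16 in AREAS.values())

if __name__ == "__main__":
    for region, ratio in ratios.items():
        print(f"{region}: {ratio:.2f}%")
    print(f"Total: {total_2m:,.2f} m² (2 m) vs {total_16m:,.2f} m² (16 m)")
```

A ratio above 100% means the 16 m imagery under-detected chrysanthemum relative to the 2 m imagery in that region. A ratio below 100% means it over-detected.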

| Regions | Area Before Conversion in 2021/m² | Area After Conversion in 2021/m² | Area Before Conversion in 2023/m² | Area After Conversion in 2023/m² |
|---|---|---|---|---|
| Linhai Town | 3,207,711.59 | 2,994,940.94 | 3,267,248.00 | 3,050,528.24 |
| Yangma Town | 8,438,880.70 | 8,987,483.15 | 11,338,732.64 | 12,075,851.31 |
| Huangjian Town | 3,872,972.99 | 6,682,404.98 | 1,706,032.06 | 2,943,577.75 |
| Teyong Town | 2,466,405.83 | 1,785,089.03 | 4,103,990.97 | 2,970,309.74 |
| Hede Town | 4,530,806.73 | 8,070,601.30 | 5,689,998.01 | 10,135,436.83 |
| Huangshagang Town | 1,225,233.69 | 1,323,023.40 | 2,108,460.24 | 2,276,743.02 |
| Haitong Town | 2,051,333.97 | 931,498.79 | 1,776,734.57 | 806,804.80 |
| Xinqiao Town, Panwan Town | 3,437,713.15 | 3,446,823.63 | 7,506,761.21 | 7,526,655.31 |
| Changdang Town | 1,143,205.88 | 593,599.64 | 1,368,774.40 | 710,724.13 |
| Xintan Town, Haihe Town, Siming Town | 4,553,457.80 | 1,363,239.24 | 7,495,043.56 | 2,243,907.36 |
| Qianqiu Town | 2,226,428.92 | 2,287,487.79 | 2,729,306.55 | 2,804,156.62 |
| Total | 37,154,151.24 | 38,466,191.89 | 49,091,082.22 | 47,546,718.12 |
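
The "After Conversion" values are consistent with one scaling rule. Each region's "Before Conversion" area is multiplied by that region's unrounded 2 m / 16 m area ratio from the conversion-ratio table.

For example, Linhai Town in 2021 gives 3,207,711.59 × (2,592,552.13 / 2,776,735.73) ≈ 2,994,940.94 m². Note that using the rounded 93.37% would shift the result by about 100 m².

The sketch below reproduces three cells under that reading. The rule is inferred from the numbers; it is not a quoted method.

```python
# (area from 2 m imagery, area from 16 m imagery) in m², from the conversion-ratio table
REFERENCE = {
    "Linhai Town": (2_592_552.13, 2_776_735.73),
    "Yangma Town": (10_603_930.93, 9_956_659.343),
    "Haitong Town": (1_307_883.31, 2_880_202.84),
}

# A subset of "Area Before Conversion" values (m²) from the table above
BEFORE = {
    ("Linhai Town", 2021): 3_207_711.59,
    ("Yangma Town", 2021): 8_438_880.70,
    ("Haitong Town", 2023): 1_776_734.57,
}


def convert_area(area_before: float, area_2m: float, area_16m: float) -> float:
    """Scale an area by the region's unrounded 2 m / 16 m area ratio."""
    return area_before * (area_2m / area_16m)


after = {key: convert_area(area, *REFERENCE[key[0]]) for key, area in BEFORE.items()}

if __name__ == "__main__":
    for (region, year), area in after.items():
        print(f"{region} {year}: {area:,.2f} m²")
```

Keeping the ratio unrounded matters here, because the areas are in the millions of square metres.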

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ye, Y.; Wu, M.-T.; Pu, C.-J.; Chen, J.-M.; Jing, Z.-X.; Shi, T.-T.; Zhang, X.-B.; Yan, H. Monitoring Chrysanthemum Cultivation Areas Using Remote Sensing Technology. Horticulturae 2025, 11, 933. https://doi.org/10.3390/horticulturae11080933

Ye Y, Wu M-T, Pu C-J, Chen J-M, Jing Z-X, Shi T-T, Zhang X-B, Yan H. Monitoring Chrysanthemum Cultivation Areas Using Remote Sensing Technology. Horticulturae. 2025; 11(8):933. https://doi.org/10.3390/horticulturae11080933

Chicago/Turabian Style: Ye, Yin, Meng-Ting Wu, Chun-Juan Pu, Jing-Mei Chen, Zhi-Xian Jing, Ting-Ting Shi, Xiao-Bo Zhang, and Hui Yan. 2025. "Monitoring Chrysanthemum Cultivation Areas Using Remote Sensing Technology" Horticulturae 11, no. 8: 933. https://doi.org/10.3390/horticulturae11080933

APA Style: Ye, Y., Wu, M.-T., Pu, C.-J., Chen, J.-M., Jing, Z.-X., Shi, T.-T., Zhang, X.-B., & Yan, H. (2025). Monitoring Chrysanthemum Cultivation Areas Using Remote Sensing Technology. Horticulturae, 11(8), 933. https://doi.org/10.3390/horticulturae11080933