A Review of Intelligent Orchard Sprayer Technologies: Perception, Control, and System Integration

Abstract

1. Introduction

2. Perception and Intelligent Control

2.1. Sensor Technologies

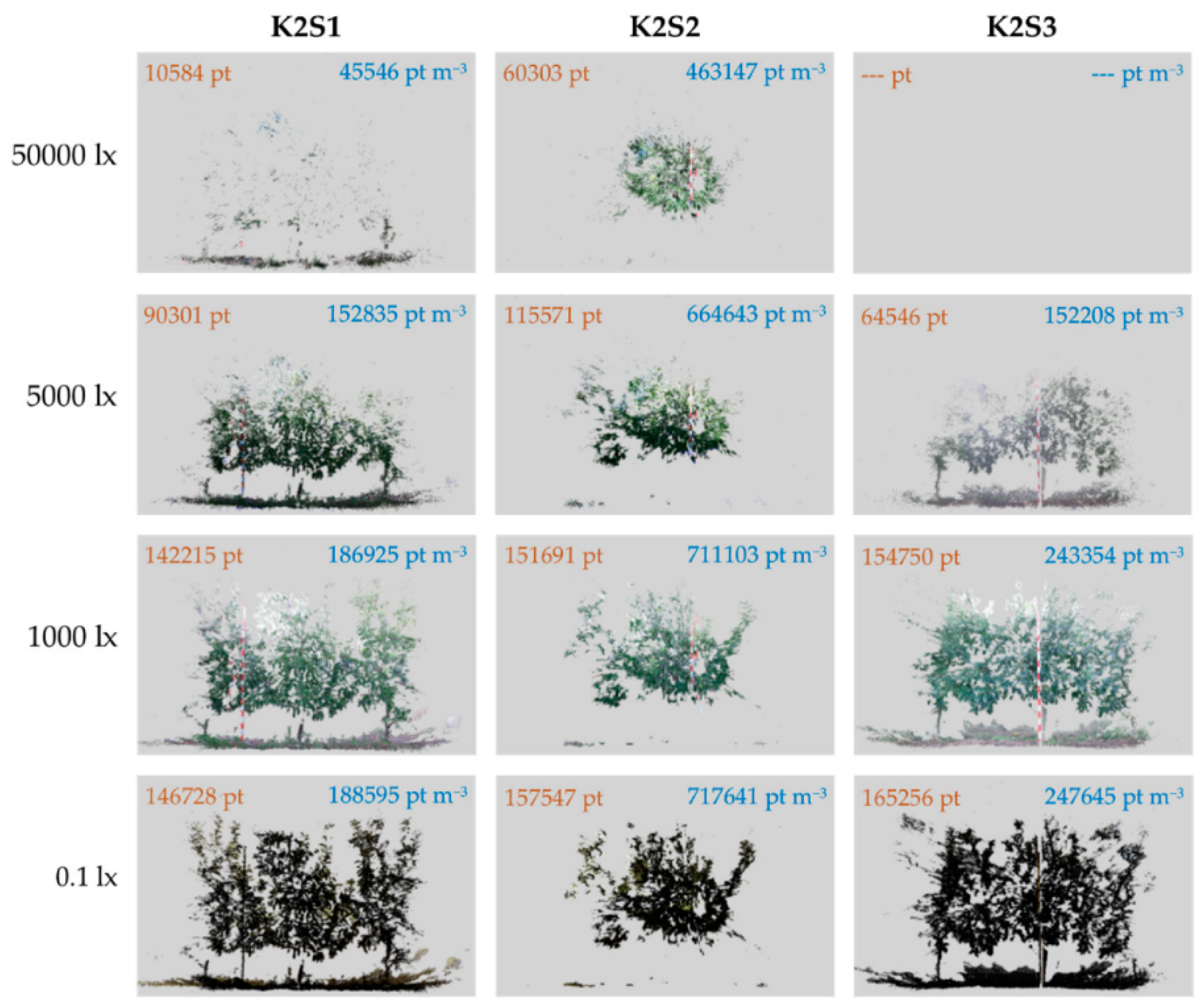

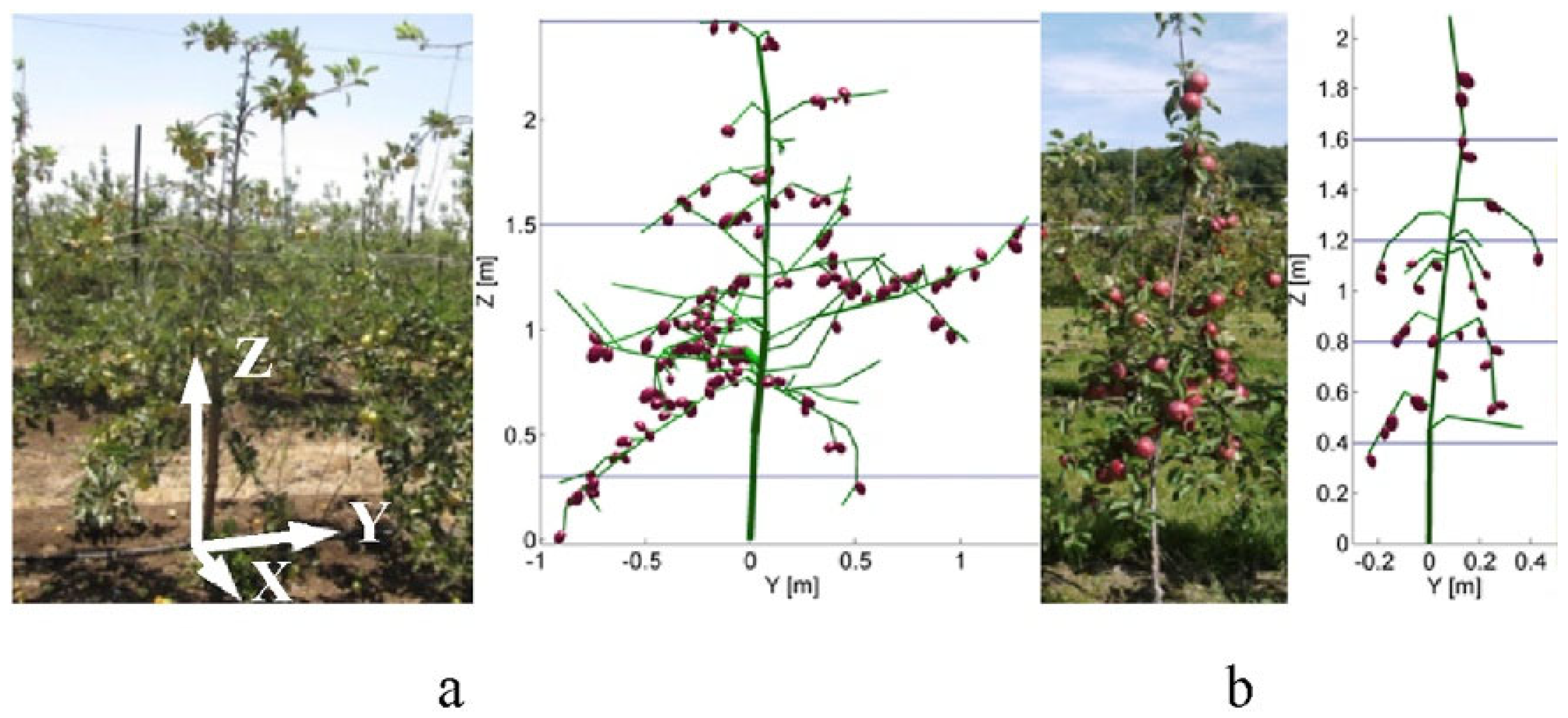

2.1.1. RGB-D Sensor

2.1.2. LiDAR Sensor

2.1.3. Ultrasonic Sensor

2.1.4. Comparative Evaluation of Orchard Sensor Technologies

2.1.5. Multi-Sensor Fusion

2.2. Object Detection Algorithms

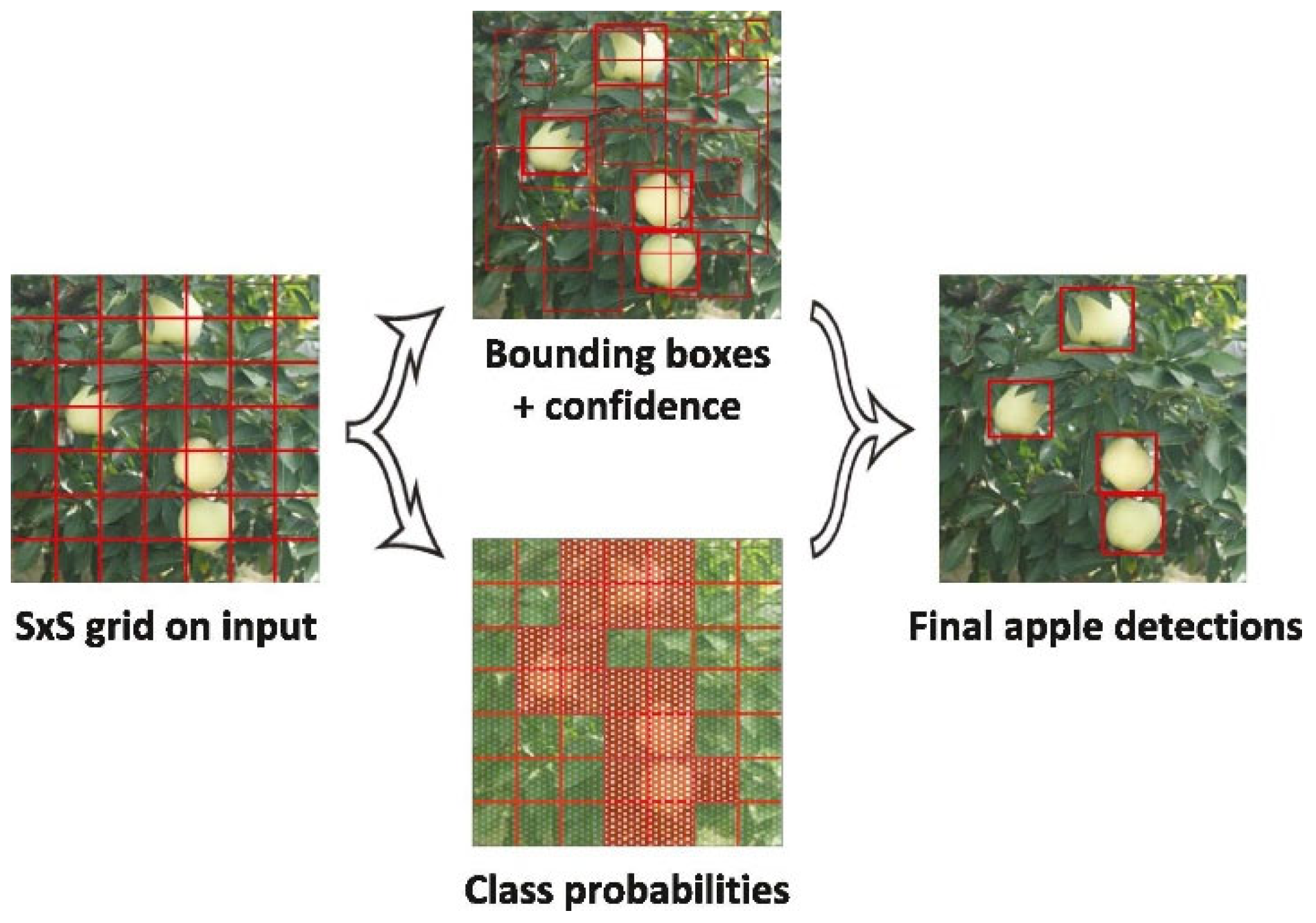

2.2.1. General Architectures—YOLO Series Algorithms

2.2.2. Adaptive Mechanisms—Attention Mechanisms

2.2.3. Customized Detection Architectures and Fusion Strategies

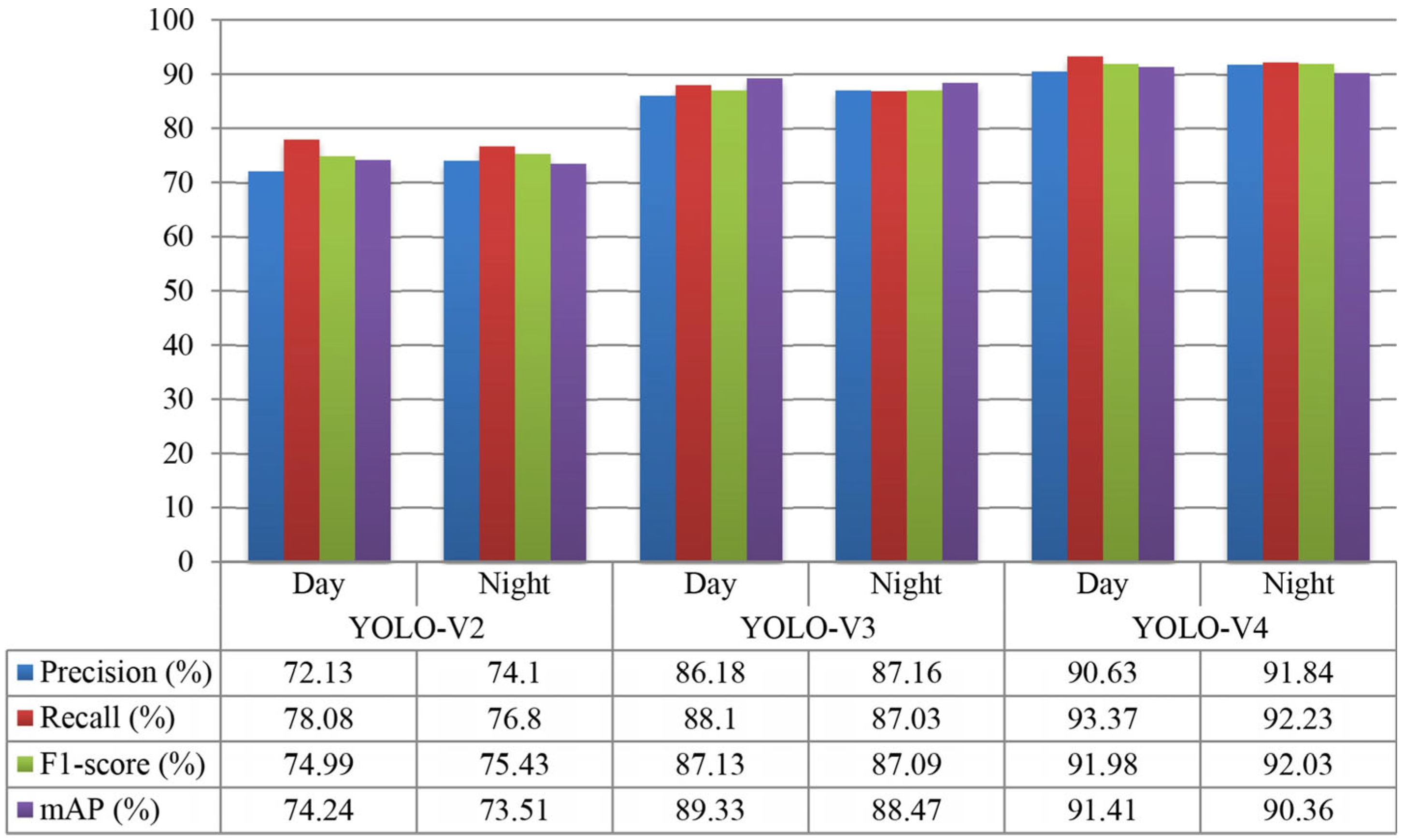

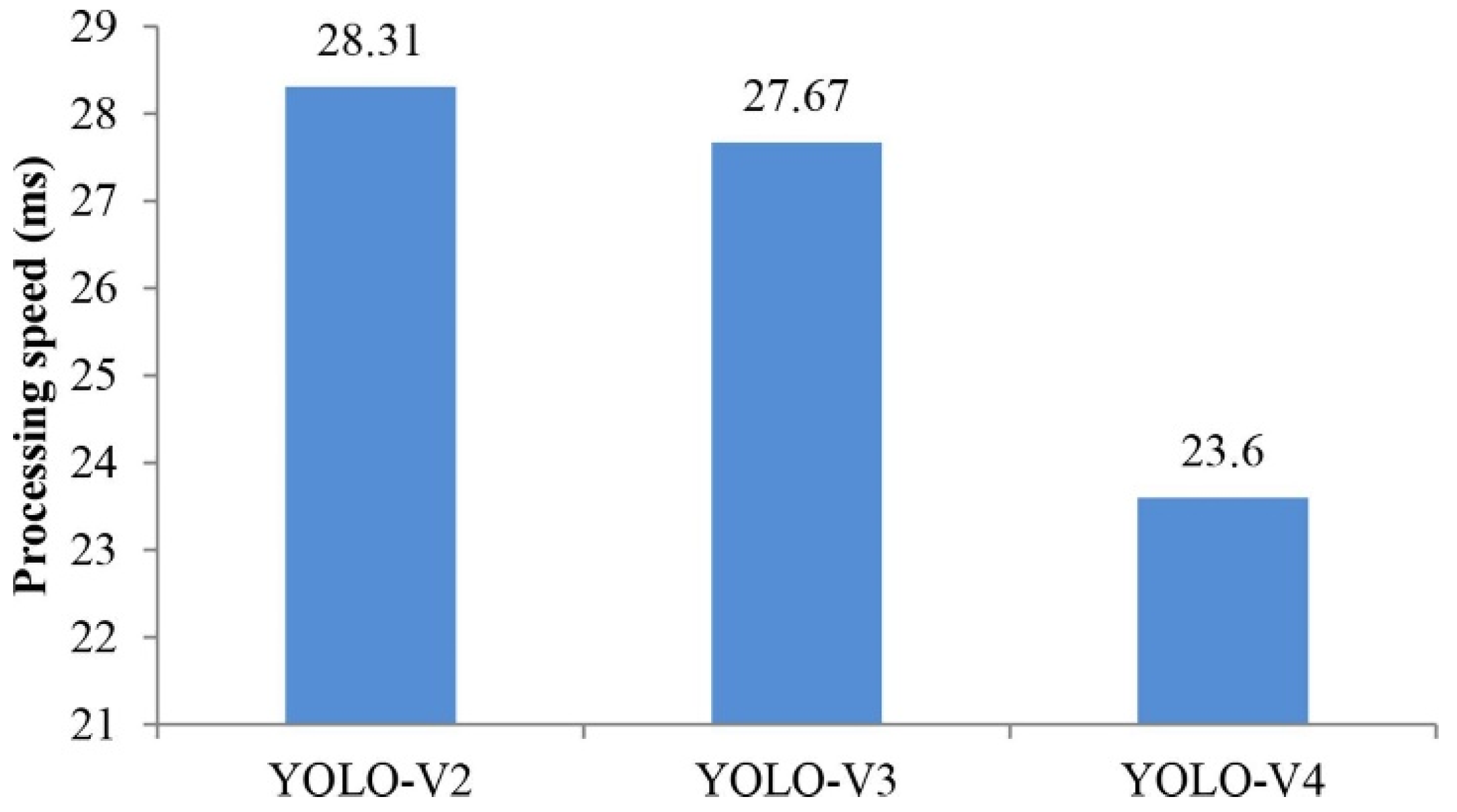

2.2.4. Performance of YOLO Models Under Complex Orchard Conditions

2.3. Real-Time Control Strategies for Orchard Spraying Systems

2.3.1. Key Factors Affecting Deposition Efficiency

2.3.2. Spray Control Strategies and Algorithms

3. Spray Deposition and Pesticide Drift Control

3.1. Key Factors Affecting Spray Deposition Efficiency

3.2. Mechanisms and Mitigation Techniques of Pesticide Drift

3.3. Variable Rate Spraying Strategies

4. Autonomous Navigation and System Integration

4.1. Autonomous Navigation in Orchard Environments

4.2. Modular Integration of Intelligent Spraying Systems

5. Challenges and Future Perspectives

5.1. Current Technological Bottlenecks

5.1.1. Limitations in Perception and Intelligent Control

5.1.2. Limitations in Orchard Navigation

5.2. Barriers to Application and Adoption

5.3. Future Trends and Research Priorities

- (1) Development of robust and cost-effective perception systems. Because varying illumination, target occlusion, and morphological differences are common in orchards, future perception systems must become more robust in complex environments. Emphasis should be placed on developing cost-effective multimodal sensor fusion technologies, such as integrated perception schemes combining RGB-D, LiDAR, and ultrasonic sensors, to improve the recognition accuracy of tree structures and fruit targets.

- (2) Synergistic optimization of intelligent navigation and spraying control systems. Current systems suffer from task segmentation and response delays. Future efforts should focus on establishing a linkage mechanism between path planning and spraying execution, enabling real-time dynamic path optimization and task scheduling. Additionally, navigation systems should incorporate multi-source perception fusion and self-learning capabilities to handle complex terrains and path interferences, thereby improving operational efficiency and spray coverage accuracy.

- (3) Environment-aware adaptive spraying strategies. Considering the variation in crop types, growth stages, and environmental conditions, deep learning and big data analytics should be leveraged to enable adaptive adjustment of spraying parameters. Causal inference models linking environment, crop characteristics, and spraying outcomes should be established to enable need-based and differentiated spraying, thereby reducing pesticide usage and improving environmental sustainability.

- (4) Interdisciplinary collaboration and system integration innovation. The inherent complexity of intelligent orchard spraying systems necessitates the deep integration of multidisciplinary technologies. Future efforts should enhance cross-domain collaboration among agricultural engineering, artificial intelligence, robotics, and the Internet of Things, achieving technological synergy in hardware platforms, algorithm optimization, and system integration to drive full-process intelligent spraying operations.

- (5) Agricultural data sharing and intelligent service platform development. Establishing a unified agricultural big data platform and open service system is crucial to improving the operational efficiency and intelligence level of orchard systems. Standardized acquisition and sharing of sensor data, remote sensing imagery, and operational information should be promoted to enhance data connectivity and technical interoperability among farmers, enterprises, and research institutions, thereby supporting intelligent decision-making.

- (6) Dual promotion through policy guidance and market mechanisms. The successful implementation of technology ultimately depends on both governmental support and market forces. On the one hand, governments should introduce targeted support policies for smart agriculture, fostering pilot programs and industry standardization. On the other hand, enterprises should leverage technological leadership and product commercialization to accelerate the adoption of intelligent spraying systems and increase user acceptance and coverage.
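To make the link between multimodal perception in item (1) and need-based spraying in item (3) more concrete, the following is a minimal sketch rather than a method taken from the reviewed literature. It assumes that each sensor reports a canopy-thickness estimate together with a noise variance, fuses the estimates by inverse-variance weighting, and maps the fused value to a nozzle PWM duty cycle with a simple linear rule. The names `Reading`, `fuse_thickness`, and `duty_cycle`, as well as the default values for `max_thickness_m` and `min_duty`, are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One canopy-thickness estimate (metres) with its assumed noise variance."""
    sensor: str
    thickness_m: float
    variance: float


def fuse_thickness(readings):
    """Inverse-variance weighted mean: noisier sensors receive less weight."""
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    return sum(w * r.thickness_m for w, r in zip(weights, readings)) / total


def duty_cycle(thickness_m, max_thickness_m=2.0, min_duty=0.1):
    """Map fused canopy thickness to a PWM duty cycle in [0, 1].

    No canopy closes the nozzle; otherwise the duty cycle scales linearly
    between min_duty and 1.0. This is an illustrative rule, not a
    calibrated dose model.
    """
    if thickness_m <= 0.0:
        return 0.0
    fraction = min(thickness_m / max_thickness_m, 1.0)
    return min_duty + (1.0 - min_duty) * fraction


if __name__ == "__main__":
    # Hypothetical readings: the LiDAR value is trusted more than the ultrasonic one.
    readings = [
        Reading("lidar", 1.0, 0.01),
        Reading("ultrasonic", 2.0, 0.04),
    ]
    fused = fuse_thickness(readings)
    print(f"fused thickness: {fused:.2f} m, duty cycle: {duty_cycle(fused):.2f}")
```

In a real system the variances would have to be estimated per sensor and per operating condition (for example, degraded RGB-D depth under strong sunlight), and the linear mapping would be replaced by a dose model validated in field trials.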

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Shen, L.; Zenan, S.; Man, H. The impact of digital literacy on farmers’ pro-environmental behavior: An analysis with the Theory of Planned Behavior. Front. Sustain. Food Syst. 2024, 8, 1432184. [Google Scholar] [CrossRef]

- Xiao, L.; Liu, J.; Ge, J. Dynamic game in agriculture and industry cross-sectoral water pollution governance in developing countries. Agric. Water Manag. 2021, 243, 106417. [Google Scholar] [CrossRef]

- Jin, Y.; Liu, J.; Xu, Z.; Yuan, S.; Li, P.; Wang, J. Development status and trend of agricultural robot technology. Int. J. Agric. Biol. Eng. 2021, 14, 1–19. [Google Scholar] [CrossRef]

- Zhou, J.; Zou, X.; Song, S.; Chen, G. Quantum Dots Applied to Methodology on Detection of Pesticide and Veterinary Drug Residues. J. Agric. Food Chem. 2018, 66, 1307–1319. [Google Scholar] [CrossRef] [PubMed]

- Gao, Q.; Wang, Y.; Li, Y.; Yang, W.; Jiang, W.; Liang, Y.; Zhang, Z. Residue behaviors of six pesticides during apple juice production and storage. Food Res. Int. 2024, 177, 113894. [Google Scholar] [CrossRef]

- Lykogianni, M.; Bempelou, E.; Karamaouna, F.; Aliferis, K.A. Do pesticides promote or hinder sustainability in agriculture? The challenge of sustainable use of pesticides in modern agriculture. Sci. Total Environ. 2021, 795, 148625. [Google Scholar] [CrossRef]

- Xu, Y.; Hassan, M.; Sharma, A.S.; Li, H.; Chen, Q. Recent advancement in nano-optical strategies for detection of pathogenic bacteria and their metabolites in food safety. Crit. Rev. Food Sci. Nutr. 2023, 63, 486–504. [Google Scholar] [CrossRef] [PubMed]

- Jiang, L.; Hassan, M.; Ali, S.; Li, H.; Sheng, R.; Chen, Q. Evolving trends in SERS-based techniques for food quality and safety: A review. Trends Food Sci. Technol. 2021, 112, 225–240. [Google Scholar] [CrossRef]

- Wei, Z.; Xue, X.; Salcedo, R.; Zhang, Z.; Gil, E.; Sun, Y.; Li, Q.; Shen, J.; He, Q.; Dou, Q.; et al. Key Technologies for an Orchard Variable-Rate Sprayer: Current Status and Future Prospects. Agronomy 2022, 13, 59. [Google Scholar] [CrossRef]

- Louisa, P. Digital Agriculture and Labor A Few Challenges for Social Sustainability. Sustainability 2021, 13, 5980. [Google Scholar] [CrossRef]

- Li, F.; Zhang, W. Research on the Effect of Digital Economy on Agricultural Labor Force Employment and Its Relationship Using SEM and fsQCA Methods. Agriculture 2023, 13, 566. [Google Scholar] [CrossRef]

- Xi, T.; Li, C.; Qiu, W.; Wang, H.; Lv, X.; Han, C.; Ahmad, F. Droplet Deposition Behavior on a Pear Leaf Surface under Wind-Induced Vibration. Appl. Eng. Agric. 2020, 36, 913–926. [Google Scholar] [CrossRef]

- Ye, L.; Wu, F.; Zou, X.; Li, J. Path planning for mobile robots in unstructured orchard environments: An improved kinematically constrained bi-directional RRT approach. Comput. Electron. Agric. 2023, 215, 108453. [Google Scholar] [CrossRef]

- Shepherd, M.; Turner, J.A.; Small, B.; Wheeler, D. Priorities for science to overcome hurdles thwarting the full promise of the ‘digital agriculture’ revolution. J. Sci. Food Agric. 2020, 100, 5083–5092. [Google Scholar] [CrossRef] [PubMed]

- Taseer, A.; Han, X. Advancements in variable rate spraying for precise spray requirements in precision agriculture using Unmanned aerial spraying Systems: A review. Comput. Electron. Agric. 2024, 219, 108841. [Google Scholar] [CrossRef]

- Dou, H.; Zhang, C.; Li, L.; Hao, G.; Ding, B.; Gong, W.; Huang, P. Application of variable spray technology in agriculture. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2018; p. 012007. [Google Scholar] [CrossRef]

- Chen, P.; Ding, X.; Chen, M.; Song, H.; Imran, M. The Impact of Resource Spatial Mismatch on the Configuration Analysis of Agricultural Green Total Factor Productivity. Agriculture 2025, 15, 23. [Google Scholar] [CrossRef]

- Calatrava, J.; Martínez-Granados, D.; Zornoza, R.; Gonzalez-Rosado, M.; Lozano-Garcia, B.; Vega-Zamora, M.; Gómez-López, M.D. Barriers and Opportunities for the Implementation of Sustainable Farming Practices in Mediterranean Tree Orchards. Agronomy 2021, 11, 821. [Google Scholar] [CrossRef]

- Bahlol, H.Y.; Chandel, A.K.; Hoheisel, G.A.; Khot, L.R. The smart spray analytical system: Developing understanding of output air-assist and spray patterns from orchard sprayers. Crop Prot. 2020, 127, 104977. [Google Scholar] [CrossRef]

- Tang, Y.; Qiu, J.; Zhang, Y.; Wu, D.; Cao, Y.; Zhao, K.; Zhu, L. Optimization strategies of fruit detection to overcome the challenge of unstructured background in field orchard environment: A review. Precis. Agric. 2023, 24, 1183–1219. [Google Scholar] [CrossRef]

- Liu, Y.; Li, L.; Liu, Y.; He, X.; Song, J.; Zeng, A.; Wang, Z. Assessment of spray deposition and losses in an apple orchard with an unmanned agricultural aircraft system in China. Trans. Asabe 2020, 63, 619–627. [Google Scholar] [CrossRef]

- Peng, Y.; Wang, A.; Liu, J.; Faheem, M. A Comparative Study of Semantic Segmentation Models for Identification of Grape with Different Varieties. Agriculture 2021, 11, 997. [Google Scholar] [CrossRef]

- Salcedo, R.; Zhu, H.; Ozkan, E.; Falchieri, D.; Zhang, Z.; Wei, Z. Reducing ground and airborne drift losses in young apple orchards with PWM-controlled spray systems. Comput. Electron. Agric. 2021, 189, 106389. [Google Scholar] [CrossRef]

- Zhou, H.; Jia, W.; Li, Y.; Ou, M. Method for Estimating Canopy Thickness Using Ultrasonic Sensor Technology. Agriculture 2021, 11, 1011. [Google Scholar] [CrossRef]

- Niu, Z.; Huang, T.; Xu, C.; Sun, X.; Taha, M.F.; He, Y.; Qiu, Z. A Novel Approach to Optimize Key Limitations of Azure Kinect DK for Efficient and Precise Leaf Area Measurement. Agriculture 2025, 15, 173. [Google Scholar] [CrossRef]

- De Bortoli, L.; Marsi, S.; Marinello, F.; Carrato, S.; Ramponi, G.; Gallina, P. Structure from Linear Motion (SfLM): An On-the-Go Canopy Profiling System Based on Off-the-Shelf RGB Cameras for Effective Sprayers Control. Agronomy 2022, 12, 1276. [Google Scholar] [CrossRef]

- Xue, X.; Luo, Q.; Bu, M.; Li, Z.; Lyu, S.; Song, S. Citrus Tree Canopy Segmentation of Orchard Spraying Robot Based on RGB-D Image and the Improved DeepLabv3+. Agronomy 2023, 13, 2059. [Google Scholar] [CrossRef]

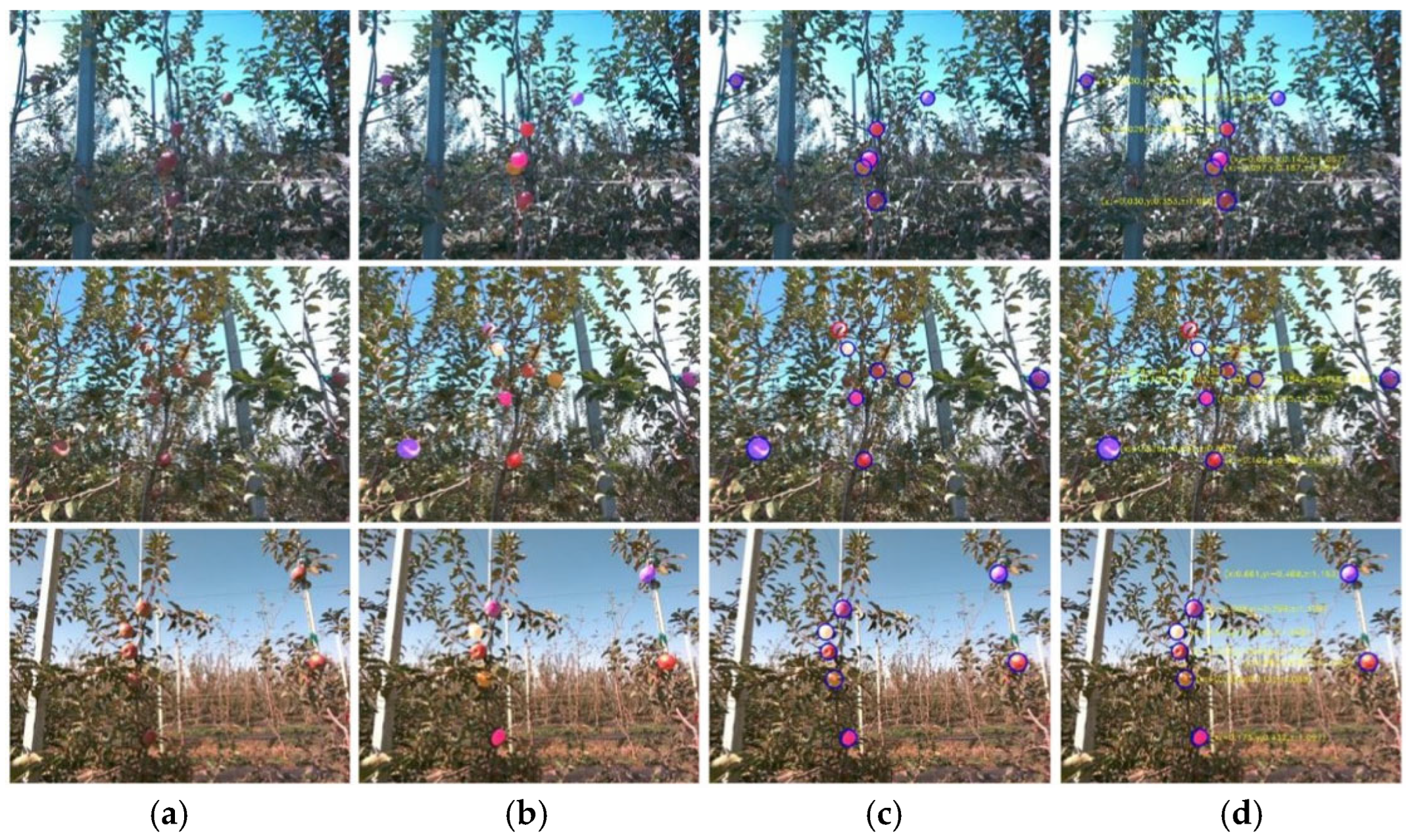

- Tang, S.; Xia, Z.; Gu, J.; Wang, W.; Huang, Z.; Zhang, W. High-precision apple recognition and localization method based on RGB-D and improved SOLOv2 instance segmentation. Front. Sustain. Food Syst. 2024, 8, 1403872. [Google Scholar] [CrossRef]

- Murcia, H.F.; Tilaguy, S.; Ouazaa, S. Development of a Low-Cost System for 3D Orchard Mapping Integrating UGV and LiDAR. Plants 2021, 10, 2804. [Google Scholar] [CrossRef]

- Liu, H.; Zhu, H. Evaluation of a Laser Scanning Sensor in Detection of Complex-Shaped Targets for Variable-Rate Sprayer Development. Trans. ASABE 2016, 59, 1181–1192. [Google Scholar] [CrossRef]

- Liu, L.; Liu, Y.; He, X.; Liu, W. Precision Variable-Rate Spraying Robot by Using Single 3D LIDAR in Orchards. Agronomy 2022, 12, 2509. [Google Scholar] [CrossRef]

- Jiang, A.; Ahamed, T. Navigation of an Autonomous Spraying Robot for Orchard Operations Using LiDAR for Tree Trunk Detection. Sensors 2023, 23, 4808. [Google Scholar] [CrossRef]

- Yang, H.; Wang, X.; Sun, G. Three-Dimensional Morphological Measurement Method for a Fruit Tree Canopy Based on Kinect Sensor Self-Calibration. Agronomy 2019, 9, 741. [Google Scholar] [CrossRef]

- Bargoti, S.; Underwood, J.P.; Nieto, J.I.; Sukkarieh, S. A Pipeline for Trunk Localisation Using LiDAR in Trellis Structured Orchards. Springer Tracts Adv. Robot. 2015, 105, 455–468. [Google Scholar] [CrossRef]

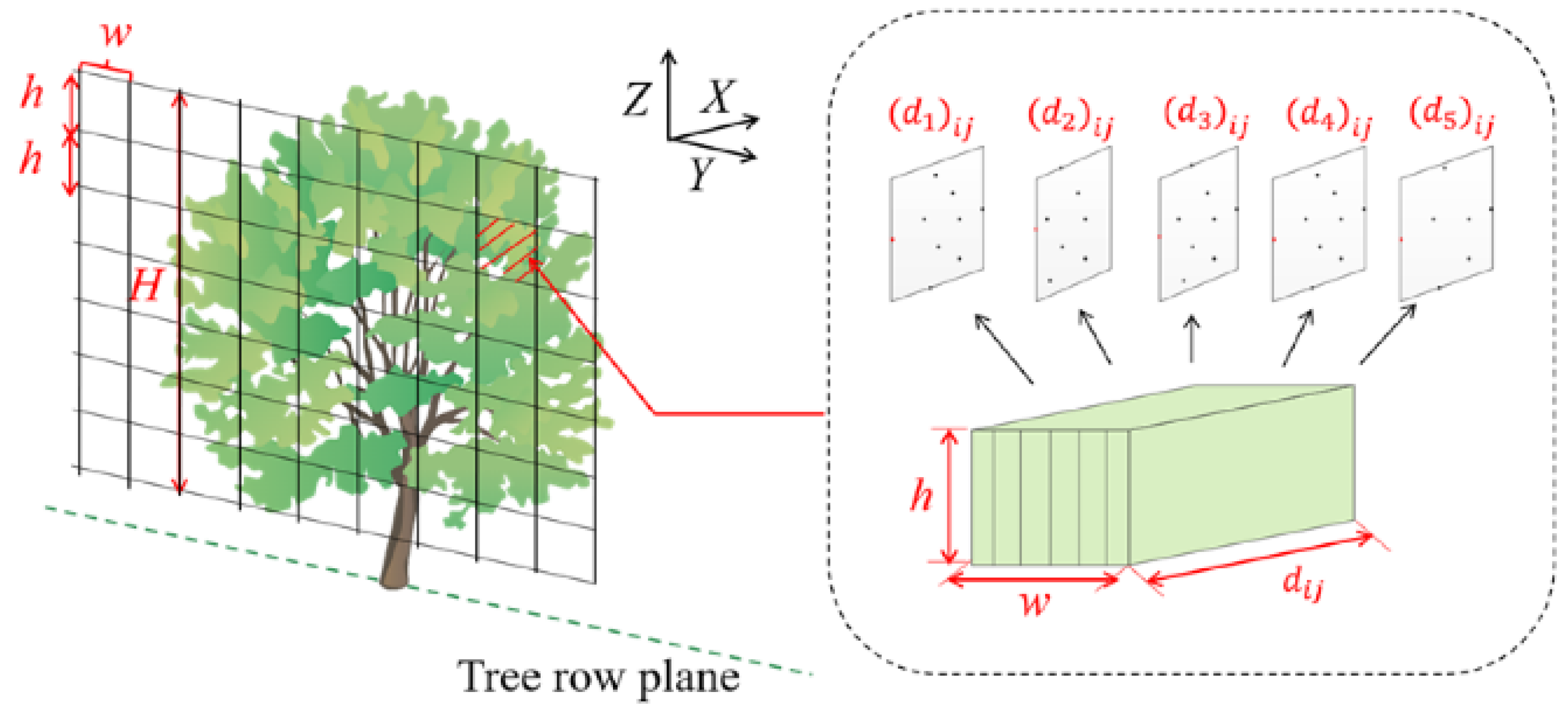

- Wang, M.; Dou, H.; Sun, H.; Zhai, C.; Zhang, Y.; Yuan, F. Calculation Method of Canopy Dynamic Meshing Division Volumes for Precision Pesticide Application in Orchards Based on LiDAR. Agronomy 2023, 13, 1077. [Google Scholar] [CrossRef]

- Zhmud, V.A.; Kondratiev, N.O.; Kuznetsov, K.A.; Trubin, V.G.; Dimitrov, L.V. Application of ultrasonic sensor for measuring distances in robotics. J. Phys. Conf. Ser. 2018, 1015, 032189. [Google Scholar] [CrossRef]

- Qiu, Z.; Lu, Y.; Qiu, Z. Review of Ultrasonic Ranging Methods and Their Current Challenges. Micromachines 2022, 13, 520. [Google Scholar] [CrossRef]

- Palleja, T.; Landers, A.J. Real time canopy density estimation using ultrasonic envelope signals in the orchard and vineyard. Comput. Electron. Agric. 2015, 115, 108–117. [Google Scholar] [CrossRef]

- Mahmud, S.; He, L.; Heinemann, P.; Choi, D.; Zhu, H. Unmanned aerial vehicle based tree canopy characteristics measurement for precision spray applications. Smart Agric. Technol. 2023, 4, 100153. [Google Scholar] [CrossRef]

- Gu, C.; Zhao, C.; Zou, W.; Yang, S.; Dou, H.; Zhai, C. Innovative Leaf Area Detection Models for Orchard Tree Thick Canopy Based on LiDAR Point Cloud Data. Agriculture 2022, 12, 1241. [Google Scholar] [CrossRef]

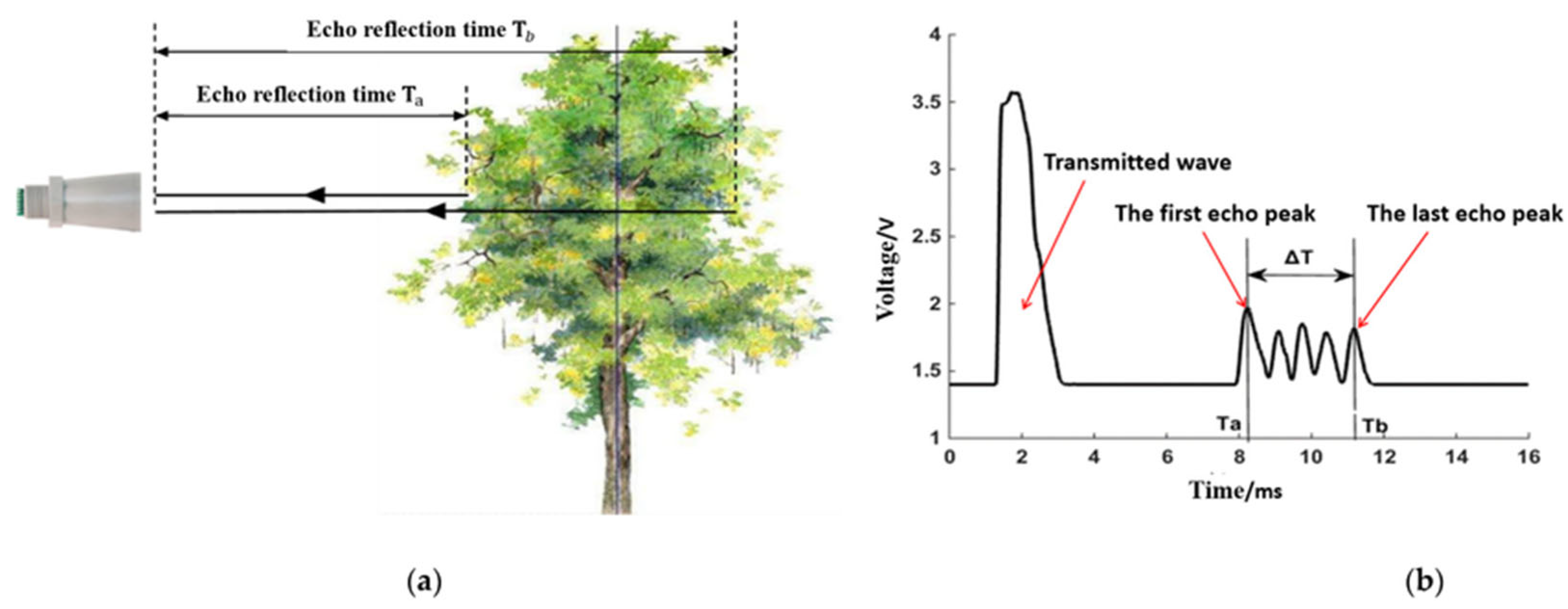

- Ou, M.; Hu, T.; Hu, M.; Yang, S.; Jia, W.; Wang, M.; Jiang, L.; Wang, X.; Dong, X. Experiment of Canopy Leaf Area Density Estimation Method Based on Ultrasonic Echo Signal. Agriculture 2022, 12, 1569. [Google Scholar] [CrossRef]

- Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating maize plant height using a crop surface model constructed from UAV RGB images. Biosyst. Eng. 2024, 241, 56–67. [Google Scholar] [CrossRef]

- Wei, L.; Yang, H.; Niu, Y.; Zhang, Y.; Xu, L.; Chai, X. Wheat biomass, yield, and straw-grain ratio estimation from multi-temporal UAV-based RGB and multispectral images. Biosyst. Eng. 2023, 234, 187–205. [Google Scholar] [CrossRef]

- Li, L.; Xie, S.; Ning, J.; Chen, Q.; Zhang, Z. Evaluating green tea quality based on multisensor data fusion combining hyperspectral imaging and olfactory visualization systems. J. Sci. Food Agric. 2019, 99, 1787–1794. [Google Scholar] [CrossRef]

- Ali, M.M.; Hashim, N.; Aziz, S.A.; Lasekan, O. Utilisation of Deep Learning with Multimodal Data Fusion for Determination of Pineapple Quality Using Thermal Imaging. Agronomy 2023, 13, 401. [Google Scholar] [CrossRef]

- Lu, X.; Li, W.; Xiao, J.; Zhu, H.; Yang, D.; Yang, J.; Xu, X.; Lan, Y.; Zhang, Y. Inversion of Leaf Area Index in Citrus Trees Based on Multi-Modal Data Fusion from UAV Platform. Remote Sens. 2023, 15, 3523. [Google Scholar] [CrossRef]

- Zhou, X.; Chen, W.; Wei, X. Improved Field Obstacle Detection Algorithm Based on YOLOv8. Agriculture 2024, 14, 2263. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, Y.; Zhao, Y.; Pan, Q.; Jin, K.; Xu, G.; Hu, Y. TS-YOLO: An All-Day and Lightweight Tea Canopy Shoots Detection Model. Agronomy 2023, 13, 1411. [Google Scholar] [CrossRef]

- Ji, W.; Pan, Y.; Xu, B.; Wang, J. A Real-Time Apple Targets Detection Method for Picking Robot Based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856. [Google Scholar] [CrossRef]

- Ji, W.; Gao, X.; Xu, B.; Pan, Y.; Zhang, Z.; Zhao, D. Apple target recognition method in complex environment based on improved YOLOv4. J. Food Process Eng. 2021, 44, e13866. [Google Scholar] [CrossRef]

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426. [Google Scholar] [CrossRef]

- Mirhaji, H.; Soleymani, M.; Asakereh, A.; Mehdizadeh, S.A. Fruit detection and load estimation of an orange orchard using the YOLO models through simple approaches in different imaging and illumination conditions. Comput. Electron. Agric. 2021, 191, 106533. [Google Scholar] [CrossRef]

- Ranjan, S.; Dawood, A.; Martin, C.; Manoj, K. Immature Green Apple Detection and Sizing in Commercial Orchards Using YOLOv8 and Shape Fitting Techniques. IEEE Access 2024, 12, 43436–43452. [Google Scholar]

- Li, A.; Wang, C.; Ji, T.; Wang, Q.; Zhang, T. D3-YOLOv10: Improved YOLOv10-Based Lightweight Tomato Detection Algorithm Under Facility Scenario. Agriculture 2024, 14, 2268. [Google Scholar] [CrossRef]

- Guo, M.; Xu, T.; Liu, J.; Liu, Z.; Jiang, P.; Mu, T.; Zhang, S.; Martin, R.; Cheng, M.; Hu, S. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368. [Google Scholar] [CrossRef]

- Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient Multi-Scale Attention Module with Cross-Spatial Learning. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Processing, Rhodes Island, Greece, 4–10 June 2023. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Wang, J.; Ding, J.; Ran, S.; Qin, S.; Liu, B.; Li, X. Automatic Pear Extraction from High-Resolution Images by a Visual Attention Mechanism Network. Remote Sens. 2023, 15, 3283. [Google Scholar] [CrossRef]

- Yang, Y.; Su, L.; Zong, A.; Tao, W.; Xu, X.; Chai, Y.; Mu, W. A New Kiwi Fruit Detection Algorithm Based on an Improved Lightweight Network. Agriculture 2024, 14, 1823. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 3–19. [Google Scholar]

- Jiang, M.; Song, L.; Wang, Y.; Li, Z.; Song, H. Fusion of the YOLOv4 network model and visual attention mechanism to detect low-quality young apples in a complex environment. Precis. Agric. 2022, 23, 559–577. [Google Scholar] [CrossRef]

- Akdoğan, C.; Özer, T.; Oğuz, Y. PP-YOLO: Deep learning based detection model to detect apple and cherry trees in orchard based on Histogram and Wavelet preprocessing techniques. Comput. Electron. Agric. 2025, 232, 110052. [Google Scholar] [CrossRef]

- Huang, Z.; Zhang, X.; Wang, H.; Wei, H.; Zhang, Y.; Zhou, G. Pear Fruit Detection Model in Natural Environment Based on Lightweight Transformer Architecture. Agriculture 2024, 15, 24. [Google Scholar] [CrossRef]

- Tao, K.; Wang, A.; Shen, Y.; Lu, Z.; Peng, F.; Wei, X. Peach Flower Density Detection Based on an Improved CNN Incorporating Attention Mechanism and Multi-Scale Feature Fusion. Horticulturae 2022, 8, 904. [Google Scholar] [CrossRef]

- Zhang, B.; Wang, R.; Zhang, H.; Yin, C.; Xia, Y.; Fu, M.; Fu, W. Dragon fruit detection in natural orchard environment by integrating lightweight network and attention mechanism. Front. Plant Sci. 2022, 13, 1040923. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Li, G.; Chen, W.; Liu, B.; Chen, M.; Lu, S. Detection of Dense Citrus Fruits by Combining Coordinated Attention and Cross-Scale Connection with Weighted Feature Fusion. Appl. Sci. 2022, 12, 6600. [Google Scholar] [CrossRef]

- Ji, W.; Wang, J.; Xu, B.; Zhang, T. Apple Grading Based on Multi-Dimensional View Processing and Deep Learning. Foods 2023, 12, 2117. [Google Scholar] [CrossRef] [PubMed]

- Hu, T.; Wang, W.; Gu, J.; Xia, Z.; Zhang, J.; Wang, B. Research on Apple Object Detection and Localization Method Based on Improved YOLOX and RGB-D Images. Agronomy 2023, 13, 1816. [Google Scholar] [CrossRef]

- Jiang, L.; Wang, Y.; Wu, C.; Wu, H. Fruit Distribution Density Estimation in YOLO-Detected Strawberry Images: A Kernel Density and Nearest Neighbor Analysis Approach. Agriculture 2024, 14, 1848. [Google Scholar] [CrossRef]

- Zhang, F.; Chen, Z.; Ali, S.; Yang, N.; Fu, S.; Zhang, Y. Multi-class detection of cherry tomatoes using improved YOLOv4-Tiny. Int. J. Agric. Biol. Eng. 2023, 16, 225–231. [Google Scholar] [CrossRef]

- Xu, Z.; Liu, J.; Wang, J.; Cai, L.; Jin, Y.; Zhao, S.; Xie, B. Realtime Picking Point Decision Algorithm of Trellis Grape for High-Speed Robotic Cut-and-Catch Harvesting. Agronomy 2023, 13, 1618. [Google Scholar] [CrossRef]

- Ji, W.; Zhang, T.; Xu, B.; He, G. Apple recognition and picking sequence planning for harvesting robot in a complex environment. J. Agric. Eng. 2024, 55, 1. [Google Scholar]

- Xie, H.; Zhang, Z.; Zhang, K.; Yang, L.; Zhang, D.; Yu, Y. Research on the visual location method for strawberry picking points under complex conditions based on composite models. J. Sci. Food Agric. 2024, 104, 8566–8579. [Google Scholar] [CrossRef]

- Rovira-Más, F.; Saiz-Rubio, V.; Cuenca, A.; Ortiz, C.; Teruel, M.P.; Ortí, E. Open-Format Prescription Maps for Variable Rate Spraying in Orchard Farming. J. Asabe 2024, 67, 243–257. [Google Scholar] [CrossRef]

- Hu, K.; Feng, X. Research on the Variable Rate Spraying System Based on Canopy Volume Measurement. J. Inf. Process. Syst. 2019, 15, 1131–1140. [Google Scholar]

- Li, J.; Nie, Z.; Chen, Y.; Ge, D.; Li, M. Development of Boom Posture Adjustment and Control System for Wide Spray Boom. Agriculture 2023, 13, 2162. [Google Scholar] [CrossRef]

- Zou, W.; Wang, X.; Deng, W.; Su, S.; Wang, S.; Fan, P. Design and test of automatic toward-target sprayer used in orchard. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 697–702. [Google Scholar]

- Bin Motalab, M.; Al-Mallahi, A. Development of a flexible electronic control unit for seamless integration of machine vision to CAN-enabled boom sprayers for spot application technology. Smart Agric. Technol. 2024, 9, 100618. [Google Scholar] [CrossRef]

- Zhang, C.; Zhai, C.; Zhang, M.; Zhang, C.; Zou, W.; Zhao, C. Staggered-Phase Spray Control: A Method for Eliminating the Inhomogeneity of Deposition in Low-Frequency Pulse-Width Modulation (PWM) Variable Spray. Agriculture 2024, 14, 465. [Google Scholar] [CrossRef]

- Ma, C.; Li, G.; Peng, Q. Design and Test of a Jet Remote Control Spraying Machine for Orchards. Agriengineering 2021, 3, 797–814. [Google Scholar] [CrossRef]

- Wen, S.; Zhang, Q.; Deng, J.; Lan, Y.; Yin, X.; Shan, J. Design and Experiment of a Variable Spray System for Unmanned Aerial Vehicles Based on PID and PWM Control. Appl. Sci. 2018, 8, 2482. [Google Scholar] [CrossRef]

- Shi, Y.; Zhang, C.; Liang, A.; Yuan, H. Fuzzy Control of the Spraying Medicine Control System; Springer: York, Nork, NY, USA, 2008; pp. 1087–1094. [Google Scholar]

- Vatavuk, I.; Vasiljević, G.; Kovačić, Z. Task Space Model Predictive Control for Vineyard Spraying with a Mobile Manipulator. Agriculture 2022, 12, 381. [Google Scholar] [CrossRef]

- Li, J.; Cui, H.; Ma, Y.; Xun, L.; Li, Z.; Yang, Z.; Lu, H. Orchard Spray Study: A Prediction Model of Droplet Deposition States on Leaf Surfaces. Agronomy 2020, 10, 747. [Google Scholar]

- Berk, P.; Stajnko, D.; Hočevar, M.; Malneršič, A.; Jejčič, V.; Belšak, A. Plant protection product dose rate estimation in apple orchards using a fuzzy logic system. PLoS ONE 2019, 14, e0214315. [Google Scholar] [CrossRef]

- Song, L.; Huang, J.; Liang, X.; Yang, S.X.; Hu, W.; Tang, D. An Intelligent Multi-Sensor Variable Spray System with Chaotic Optimization and Adaptive Fuzzy Control. Sensors 2020, 20, 2954. [Google Scholar]

- Liao, J.; Hewitt, A.J.; Wang, P.; Luo, X.; Zang, Y.; Zhou, Z.; Lan, Y.; O’Donnell, C. Development of droplet characteristics prediction models for air induction nozzles based on wind tunnel tests. Int. J. Agric. Biol. Eng. 2019, 12, 1–6. [Google Scholar] [CrossRef]

- Shi, Q.; Mao, H.; Guan, X. Numerical Simulation and Experimental Verification of the Deposition Concentration of an Unmanned Aerial Vehicle. Appl. Eng. Agric. 2019, 35, 367–376. [Google Scholar] [CrossRef]

- Gong, C.; Li, D.; Kang, C. Visualization of the evolution of bubbles in the spray sheet discharged from the air--induction nozzle. Pest Manag. Sci. 2022, 78, 1850–1860. [Google Scholar] [CrossRef] [PubMed]

- Pan, X.; Yang, S.; Gao, Y.; Wang, Z.; Zhai, C.; Qiu, W. Evaluation of Spray Drift from an Electric Boom Sprayer: Impact of Boom Height and Nozzle Type. Agronomy 2025, 15, 160. [Google Scholar] [CrossRef]

- Liu, J.; Liu, X.; Zhu, X.; Yuan, S. Droplet characterisation of a complete fluidic sprinkler with different nozzle dimensions. Biosyst. Eng. 2016, 148, 90–100. [Google Scholar] [CrossRef]

- Guo, S.; Yao, W.; Xu, T.; Ma, H.; Sun, M.; Chen, C.; Lan, Y. Assessing the application of spot spray in Nanguo pear orchards: Effect of nozzle type, spray volume rate and adjuvant. Pest Manag. Sci. 2022, 78, 3564–3575. [Google Scholar] [CrossRef]

- Amaya, K.; Bayat, A. Innovating an electrostatic charging unit with an insulated induction electrode for air-assisted orchard sprayers. Crop Prot. 2024, 181, 106701. [Google Scholar] [CrossRef]

- Hu, Y.; Chen, Y.; Wei, W.; Hu, Z.; Li, P. Optimization Design of Spray Cooling Fan Based on CFD Simulation and Field Experiment for Horticultural Crops. Agriculture 2021, 11, 566. [Google Scholar] [CrossRef]

- Lin, J.; Cai, J.; Xiao, L.; Liu, K.; Chen, J.; Ma, J.; Qiu, B. An angle correction method based on the influence of angle and travel speed on deposition in the air-assisted spray. Crop Prot. 2024, 175, 106444. [Google Scholar] [CrossRef]

- Guo, J.; Dong, X.; Qiu, B. Analysis of the Factors Affecting the Deposition Coverage of Air-Assisted Electrostatic Spray on Tomato Leaves. Agronomy 2024, 14, 1108. [Google Scholar] [CrossRef]

- Feng, F.; Dou, H.; Zhai, C.; Zhang, Y.; Zou, W.; Hao, J. Design and Experiment of Orchard Air-Assisted Sprayer with Airflow Graded Control. Agronomy 2024, 15, 95. [Google Scholar] [CrossRef]

- Bourodimos, G.; Koutsiaras, M.; Psiroukis, V.; Balafoutis, A.; Fountas, S. Development and Field Evaluation of a Spray Drift Risk Assessment Tool for Vineyard Spraying Application. Agriculture 2019, 9, 181. [Google Scholar] [CrossRef]

- Qi, H.; Lin, Z.; Zhou, J.; Li, J.; Chen, P.; Ouyang, F. Effect of temperature and humidity on droplet deposition of unmanned agricultural aircraft system. Int. J. Precis. Agric. Aviat. 2018, 1, 41–49. [Google Scholar] [CrossRef]

- Wang, Z.; Lan, L.; He, X.; Herbst, A. Dynamic evaporation of droplet with adjuvants under different environment conditions. Int. J. Agric. Biol. Eng. 2020, 13, 1–6. [Google Scholar] [CrossRef]

- Zhou, Q.; Xue, X.; Chen, C.; Cai, C.; Jiao, Y. Canopy deposition characteristics of different orchard pesticide dose models. Int. J. Agric. Biol. Eng. 2023, 16, 1–6. [Google Scholar] [CrossRef]

- Ma, J.; Liu, K.; Dong, X.; Huang, X.; Ahmad, F.; Qiu, B. Force and motion behaviour of crop leaves during spraying. Biosyst. Eng. 2023, 235, 83–99. [Google Scholar] [CrossRef]

- Jiang, S.; Yang, S.; Xu, J.; Li, W.; Zheng, Y.; Liu, X.; Tan, Y. Wind field and droplet coverage characteristics of air--assisted sprayer in mango--tree canopies. Pest Manag. Sci. 2022, 78, 4892–4904. [Google Scholar] [CrossRef]

- Sarwar, A.; Peters, T.R.; Shafeeque, M.; Mohamed, A.; Arshad, A.; Ullah, A.; Saddique, A.; Muzammil, M.; Aslam, R.A. Accurate measurement of wind drift and evaporation losses could improve water application efficiency of sprinkler irrigation systems—A comparison of measuring techniques. Agric. Water Manag. 2021, 258, 107209. [Google Scholar]

- Blanco, M.N.; Fenske, R.A.; Kasner, E.J.; Yost, M.G.; Seto, E.; Austin, E. Real-Time Monitoring of Spray Drift from Three Different Orchard Sprayers. Chemosphere 2019, 222, 46–55. [Google Scholar] [CrossRef]

- Chen, P.; Lan, Y.; Huang, X.; Qi, H.; Wang, G.; Wang, J.; Wang, L.; Xiao, H. Droplet Deposition and Control of Planthoppers of Different Nozzles in Two-Stage Rice with a Quadrotor Unmanned Aerial Vehicle. Agronomy 2020, 10, 303. [Google Scholar] [CrossRef]

- Lin, J.; Cai, J.; Ouyang, J.; Xiao, L.; Qiu, B. The Influence of Electrostatic Spraying with Waist-Shaped Charging Devices on the Distribution of Long-Range Air-Assisted Spray in Greenhouses. Agronomy 2024, 14, 2278. [Google Scholar] [CrossRef]

- Appah, S.; Wang, P.; Ou, M.; Gong, C.; Jia, W. Review of electrostatic system parameters, charged droplets characteristics and substrate impact behavior from pesticides spraying. Int. J. Agric. Biol. Eng. 2019, 12, 1–9. [Google Scholar] [CrossRef]

- Vigo-Morancho, A.; Videgain, M.; Boné, A.; Vidal, M.; García-Ramos, F.J. Characterization and Evaluation of an Electrostatic Knapsack Sprayer Prototype for Agricultural Crops. Agronomy 2024, 14, 2343. [Google Scholar] [CrossRef]

- Song, Y. Analysis of air curtain system flow field and droplet drift characteristics of high clearance sprayer based on CFD. Int. J. Agric. Biol. Eng. 2024, 17, 38–45. [Google Scholar] [CrossRef]

- Ellis, M.C.B.; Lane, A.G.; O’Sullivan, C.M.; Jones, S. Wind tunnel investigation of the ability of drift-reducing nozzles to provide mitigation measures for bystander exposure to pesticides. Biosyst. Eng. 2021, 202, 152–164. [Google Scholar] [CrossRef]

- Liao, J.; Luo, X.; Wang, P.; Zhou, Z.; O’Donnell, C.C.; Zang, Y.; Hewitt, A.J. Analysis of the Influence of Different Parameters on Droplet Characteristics and Droplet Size Classification Categories for Air Induction Nozzle. Agronomy 2020, 10, 256. [Google Scholar] [CrossRef]

- Koc, C.; Duran, H.; Koc, D.G. Orchard Sprayer Design for Precision Pesticide Application. Erwerbs-Obstbau 2023, 65, 1819–1828. [Google Scholar] [CrossRef]

- Gil, E.; Campos, J.; Salcedo, R.; García-Ruiz, F. Variable Rate Application in fruit orchards and vineyards in Europe: Canopy characterization and system improvement. In Proceedings of the 2023 ASABE Annual Meeting, Omaha, Nebraska, 8–12 July 2023; Volume 1. [Google Scholar]

- Li, L.; He, X.; Song, J.; Liu, Y.; Zeng, A.; Liu, Y.; Liu, C.; Liu, Z. Design and experiment of variable rate orchard sprayer based on laser scanning sensor. Int. J. Agric. Biol. Eng. 2018, 11, 101–108. [Google Scholar] [CrossRef]

- Fessler, L.; Fulcher, A.; Lockwood, D.; Wright, W.; Zhu, H. Advancing Sustainability in Tree Crop Pest Management: Refining Spray Application Rate with a Laser-guided Variable-rate Sprayer in Apple Orchards. HortScience 2020, 55, 1522–1530. [Google Scholar] [CrossRef]

- Nackley, L.L.; Warneke, B.; Fessler, L.; Pscheidt, J.W.; Lockwood, D.; Wright, W.C.; Sun, X.; Fulcher, A. Variable-rate Spray Technology Optimizes Pesticide Application by Adjusting for Seasonal Shifts in Deciduous Perennial Crops. HortTechnology 2021, 31, 479–489. [Google Scholar] [CrossRef]

- Nan, Y.; Zhang, H.; Zheng, J.; Yang, K.; Ge, Y. Low-volume precision spray for plant pest control using profile variable rate spraying and ultrasonic detection. Front. Plant Sci. 2023, 13, 1042769. [Google Scholar] [CrossRef] [PubMed]

- Cui, B.; Cui, X.; Wei, X.; Zhu, Y.; Ma, Z.; Zhao, Y.; Liu, Y. Design and Testing of a Tractor Automatic Navigation System Based on Dynamic Path Search and a Fuzzy Stanley Model. Agriculture 2024, 14, 2136. [Google Scholar] [CrossRef]

- Zhu, F.; Chen, J.; Guan, Z.; Zhu, Y.; Shi, H.; Cheng, K. Development of a combined harvester navigation control system based on visual simultaneous localization and mapping-inertial guidance fusion. J. Agric. Eng. 2024, 55. [Google Scholar] [CrossRef]

- Cui, L.; Mao, H.; Xue, X.; Ding, S.; Qiao, B. Optimized design and test for a pendulum suspension of the crop spray boom in dynamic conditions based on a six DOF motion simulator. Int. J. Agric. Biol. Eng. 2018, 11, 76–85. [Google Scholar]

- Su, Z.; Zou, W.; Zhai, C.; Tan, H.; Yang, S.; Qin, X. Design of an Autonomous Orchard Navigation System Based on Multi-Sensor Fusion. Agronomy 2024, 14, 2825. [Google Scholar] [CrossRef]

- Liu, W.; Hu, J.; Liu, J.; Yue, R.; Zhang, T.; Yao, M.; Li, J. Method for the navigation line recognition of the ridge without crops via machine vision. Int. J. Agric. Biol. Eng. 2024, 17, 230–239.

- Jiang, S.; Qi, P.; Han, L.; Liu, L.; Li, Y.; Huang, Z.; Liu, Y.; He, X. Navigation system for orchard spraying robot based on 3D LiDAR SLAM with NDT_ICP point cloud registration. Comput. Electron. Agric. 2024, 220, 108870.

- Wang, W.; Qin, J.; Huang, D.; Zhang, F.; Liu, Z.; Wang, Z.; Yang, F. Integrated Navigation Method for Orchard-Dosing Robot Based on LiDAR/IMU/GNSS. Agronomy 2024, 14, 2541.

- Guevara, J.; Fernando, A.; Cheein, A.; Gené-Mola, J.; Gregorio, E. Analyzing and overcoming the effects of GNSS error on LiDAR based orchard parameters estimation. Comput. Electron. Agric. 2020, 170, 105255.

- Vu, C.T.; Chen, H.C.; Liu, Y.C. Toward Autonomous Navigation for Agriculture Robots in Orchard Farming. In Proceedings of the 2024 IEEE International Conference on Recent Advances in Systems Science and Engineering (RASSE), Taichung, Taiwan, 6–8 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–8.

- Zhang, Y.; Zhang, B.; Shen, C.; Liu, H.; Huang, J.; Tian, K.; Tang, Z. Review of the field environmental sensing methods based on multi-sensor information fusion technology. Int. J. Agric. Biol. Eng. 2024, 17, 1–13.

- Jon, M.; Ander, A.; Inaki, M.; Aitor, G.; David, O.; Oskar, C. A Generic ROS-Based Control Architecture for Pest Inspection and Treatment in Greenhouses Using a Mobile Manipulator. IEEE Access 2021, 9, 94981–94995.

- Wang, S.; Song, J.; Qi, P.; Yuan, C.; Wu, H.; Zhang, L.; Liu, W.; Liu, Y.; He, X. Design and development of orchard autonomous navigation spray system. Front. Plant Sci. 2022, 13, 960686.

- Gené-Mola, J.; Llorens, J.; Rosell-Polo, J.R.; Gregorio, E.; Arnó, J.; Solanelles, F.; Martínez-Casasnovas, J.; Escolà, A. Assessing the Performance of RGB-D Sensors for 3D Fruit Crop Canopy Characterization under Different Operating and Lighting Conditions. Sensors 2020, 20, 7072.

- Zhu, X.; Chen, F.; Zheng, Y.; Chen, C.; Peng, X. Detection of Camellia oleifera fruit maturity in orchards based on modified lightweight YOLO. Comput. Electron. Agric. 2024, 226, 109471.

- Zhong, W.; Yang, W.; Zhu, J.; Jia, W.; Dong, X.; Ou, M. An Improved UNet-Based Path Recognition Method in Low-Light Environments. Agriculture 2024, 14, 1987.

- Ma, Z.; Yang, S.; Li, J.; Qi, J. Research on SLAM Localization Algorithm for Orchard Dynamic Vision Based on YOLOD-SLAM2. Agriculture 2024, 14, 1622.

- Jiang, A.; Ahamed, T. Development of an autonomous navigation system for orchard spraying robots integrating a thermal camera and LiDAR using a deep learning algorithm under low- and no-light conditions. Comput. Electron. Agric. 2025, 235, 110359.

- Ji, X.; Wang, A.; Wei, X. Precision Control of Spraying Quantity Based on Linear Active Disturbance Rejection Control Method. Agriculture 2021, 11, 761.

- Xue, R.; Zhang, C.; Yan, H.; Disasa, K.N.; Lakhiar, I.A.; Akhlaq, M.; Hameed, M.U.; Li, J.; Ren, J.; Deng, S.; et al. Determination of the optimal frequency and duration of micro-spray patterns for high-temperature environment tomatoes based on the Fuzzy Borda model. Agric. Water Manag. 2025, 307, 109240.

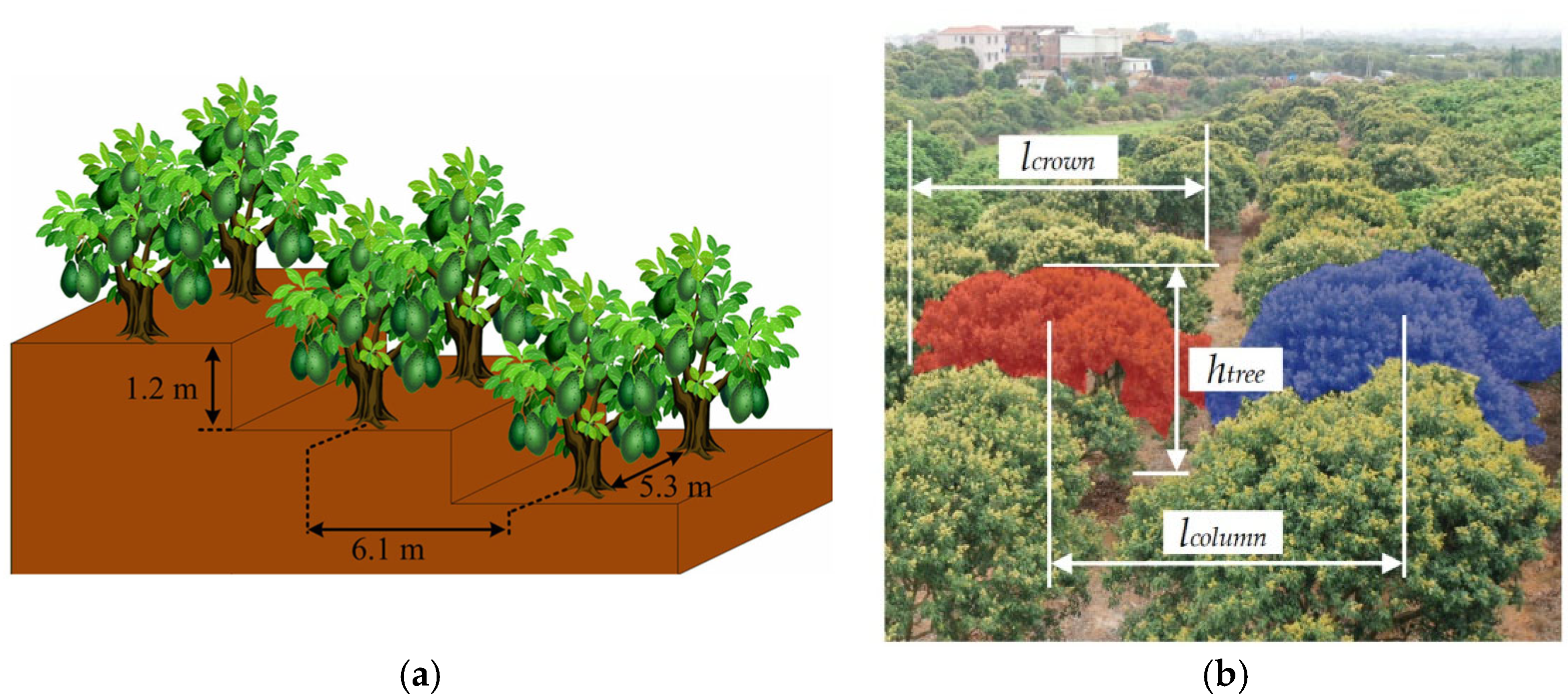

- Li, Z.; Li, C.; Zeng, Y.; Mai, C.; Jiang, R.; Li, J. Design and Realization of an Orchard Operation-Aid Platform: Based on Planting Patterns and Topography. Agriculture 2024, 15, 48.

- Bloch, V.; Degani, A.; Bechar, A. A methodology of orchard architecture design for an optimal harvesting robot. Biosyst. Eng. 2018, 166, 126–137.

| Sensor | Feature | Orchard | Relative Error | Shortcoming |
|---|---|---|---|---|
| RGB-D | Canopy segmentation | Citrus | 2.94% | Detection is slower than with RGB [27]. |
| RGB | Canopy volume | Apple | 6.64% | Strongly affected by backlighting, leading to overestimation of canopy volume [39]. |
| LiDAR | Canopy height | Citrus | 26.80% | Affected by environmental noise such as weather and reflections [29]. |
| LiDAR | Leaf area | Apple | 13.90% | Greatly affected by branches and trunks [40]. |
| Ultrasonic | Leaf area density | Osmanthus | 2.84% | Greatly affected by leaf occlusion and irregular distribution [41]. |
| Ultrasonic | Canopy thickness | Osmanthus | 18.8% | Strongly affected by lighting and denser canopies, with only 8.8% error in lab tests [24]. |

| Data Source | Mean Squared Error | Mean Absolute Error | R² |
|---|---|---|---|
| RGB data | 0.087 | 0.013 | 0.814 |
| Point cloud data | 0.079 | 0.01 | 0.846 |
| Multi-data | 0.062 | 0.005 | 0.914 |
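The table above does not state how its three metrics were computed. As a minimal sketch, assuming the standard definitions of mean squared error, mean absolute error, and the coefficient of determination, they could be evaluated as follows. The function name `regression_metrics` and the sample values are hypothetical and are not taken from the cited study.

```python
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Return MSE, MAE and R^2 for paired reference and predicted values."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    # Mean squared error: average of squared residuals.
    mse = sum(r * r for r in residuals) / n
    # Mean absolute error: average of absolute residuals.
    mae = sum(abs(r) for r in residuals) / n
    # R^2 = 1 - SS_res / SS_tot, undefined when the reference values are constant.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = sum(r * r for r in residuals)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return {"mse": mse, "mae": mae, "r2": r2}


# Hypothetical values for illustration only (not data from the table).
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 5.0]
metrics = regression_metrics(reference, predicted)
```

Under these definitions, a lower MSE and MAE together with a higher R² indicate a closer fit, which is the pattern the multi-data row shows relative to the single-source rows.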

| YOLO Version | Environmental Challenge | Task | Reported Metric | Notes |
|---|---|---|---|---|
| YOLOv8n | High fruit density | Density estimation | mAP: 87.3%; accuracy: 98.7% | Accurate cluster segmentation [69]. |
| YOLOv4-Tiny + FEN | Occlusion + day/night | Multi-class tomato detection | mAP: 94.72% at night | Maintained performance under occlusion [70]. |
| YOLOv4-SE | Fruit overlap + depth | Grape detection + picking point | Average recognition success rate: 97% | Depth fusion improved precision [71]. |
| EF-YOLOv5s | Lighting variation + occlusion | Apple detection + clustering | Precision: 98.84% | 2.86 s per pick [72]. |
| YOLOv8s-seg-CBAM | Small target + occlusion | Strawberry peduncle detection | Accuracy: 86.2% | 30.6 ms/image [73]. |

| Nozzle Type | Description | Manufacturer | Mean Deposition (μL/cm²) |
|---|---|---|---|
| SX | Flat-fan | DJI (Shenzhen, China) | 0.442 |
| XR | Extended range flat-fan | TeeJet (Wheaton, IL, USA) | 0.410 |
| IDK | Air-induction flat-fan | Lechler (Metzingen, Germany) | 0.488 |
| TR | Hollow cone | Lechler (Metzingen, Germany) | 0.284 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, M.; Liu, S.; Li, Z.; Ou, M.; Dai, S.; Dong, X.; Wang, X.; Jiang, L.; Jia, W. A Review of Intelligent Orchard Sprayer Technologies: Perception, Control, and System Integration. Horticulturae 2025, 11, 668. https://doi.org/10.3390/horticulturae11060668