A Blueberry Maturity Detection Method Integrating Attention-Driven Multi-Scale Feature Interaction and Dynamic Upsampling

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Collection

2.2. Data Processing

2.3. Model Selection and Enhancement

2.3.1. AIFI

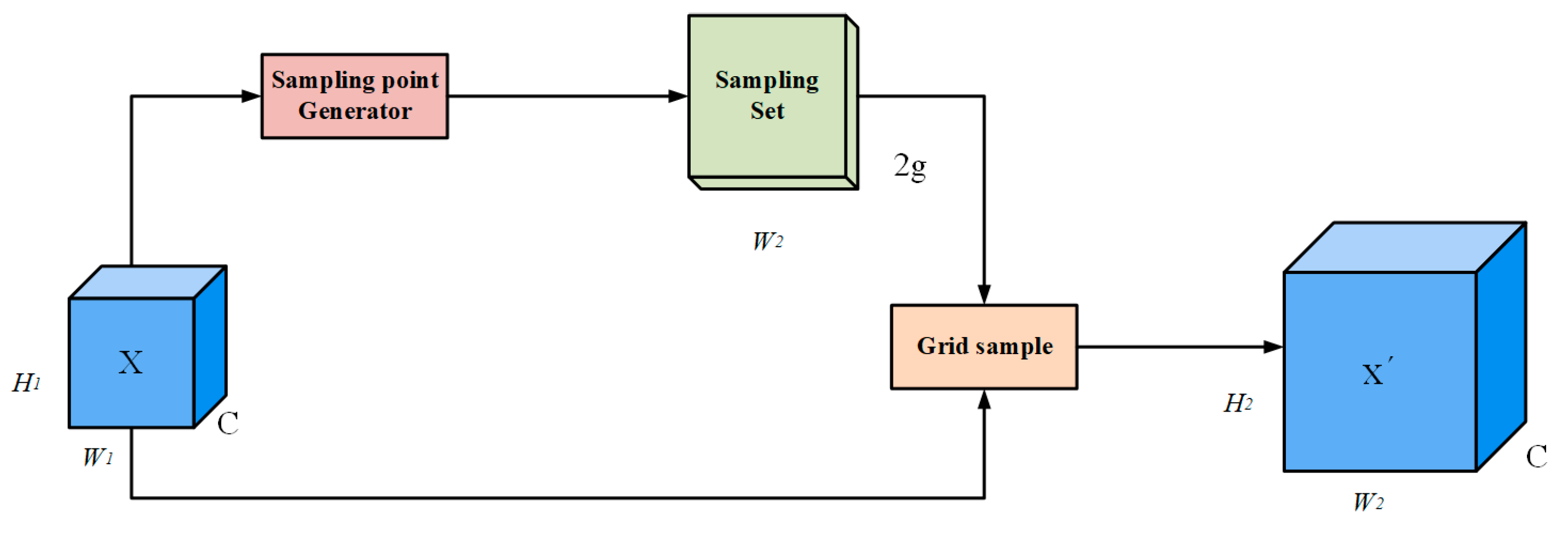

2.3.2. DySample

- Static scope factor: the feature map is upsampled by combining a linear transformation with a pixel shuffle operation, and the result is scaled by a fixed scope factor (0.25).

- Dynamic scope factor: the constant is replaced by a dynamic scope factor (0.5σ, where σ denotes the sigmoid function) computed from the input features, so that the upsampling process adapts better to spatial and content changes in the input.
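
The two scope factors can be illustrated with a deliberately simplified one-dimensional sketch. It is not the DySample implementation: the real module works on 2-D feature maps with learned linear layers and pixel shuffle, whereas here `raw` and `scope_logit` are hypothetical stand-ins for the outputs of those layers, and `upsample_1d` is an illustrative helper that samples a signal at shifted positions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def static_offset(raw: float, scope: float = 0.25) -> float:
    """Static scope factor: scale the raw offset by a fixed constant."""
    return scope * raw


def dynamic_offset(raw: float, scope_logit: float) -> float:
    """Dynamic scope factor: scale the raw offset by 0.5 * sigmoid(scope_logit)."""
    return 0.5 * sigmoid(scope_logit) * raw


def sample_1d(signal, pos):
    """Linearly interpolate `signal` at a fractional position, clamped to its range."""
    pos = min(max(pos, 0.0), len(signal) - 1.0)
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(signal) - 1)
    frac = pos - lo
    return (1.0 - frac) * signal[lo] + frac * signal[hi]


def upsample_1d(signal, offsets, scale=2):
    """Sample one output value per offset; expects len(offsets) == len(signal) * scale."""
    out = []
    for j, off in enumerate(offsets):
        base = (j + 0.5) / scale - 0.5  # regular upsampling grid position
        out.append(sample_1d(signal, base + off))
    return out


signal = [0.0, 2.0]
plain = upsample_1d(signal, [0.0] * 4)                    # zero offsets: plain linear upsampling
shifted = upsample_1d(signal, [static_offset(1.0)] * 4)   # every sample shifted by 0.25
```

With zero offsets the sketch reduces to ordinary linear interpolation; non-zero offsets move each sampling point. When `scope_logit` is 0 the dynamic factor equals 0.25, so the two variants coincide at that point.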

2.3.3. EIOU

- Faster convergence: By directly penalizing the center-point distance and the width and height differences between the predicted and target boxes, EIOU converges faster, especially when the boxes overlap only slightly. This reduces unnecessary iterations during training and improves efficiency.

- Higher positioning accuracy: Because EIOU penalizes width and height errors separately, the model predicts the scale of the target box more accurately. This reduces errors in both width and height, which makes the loss particularly effective for targets with significant scale variations.

- Better robustness: Even when the predicted box does not perfectly overlap with the target box, EIOU provides effective gradients, preventing the training process from stalling and ensuring stable learning.
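
A minimal sketch of an EIOU-style loss for a single pair of axis-aligned boxes may make these properties concrete. It follows the commonly stated form (1 − IoU plus a center-distance term and separate width and height terms, each normalized by the smallest enclosing box); the function name, the `(x1, y1, x2, y2)` box format, and the `eps` constant are assumptions for illustration, not the authors' training code.

```python
def eiou_loss(pred, target, eps=1e-9):
    """EIOU-style loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Widths and heights of both boxes.
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between box centers, normalized by the enclosing diagonal.
    dx = (px1 + px2) / 2.0 - (tx1 + tx2) / 2.0
    dy = (py1 + py2) / 2.0 - (ty1 + ty2) / 2.0
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Separate width and height penalties.
    width_term = (pw - tw) ** 2 / (cw * cw + eps)
    height_term = (ph - th) ** 2 / (ch * ch + eps)

    return 1.0 - iou + center_term + width_term + height_term


same = eiou_loss((0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 1.0, 1.0))
apart = eiou_loss((0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0))
```

For the two non-overlapping boxes the IoU is zero, yet the center-distance term still contributes to the loss, which is the source of the useful gradient in the low-overlap case described above.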

2.4. Experimental Environment

2.5. Evaluation Criteria

3. Experiments and Analysis of Results

3.1. Experimental Comparison Before and After Model Improvement

3.2. Ablation Experiments

3.3. Optimization of Spatial Pyramid Pooling

3.4. Comparison of Loss Functions

3.5. Comparison Test

3.6. Model Detection Test

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Setup |
|---|---|
| Epochs | 200 |
| Batch Size | 16 |
| Optimizer | SGD |
| Initial Learning Rate | 0.01 |
| Final Learning Rate | 0.01 |
| Momentum | 0.937 |
| Weight Decay | 5 × 10⁻⁴ |
| Close Mosaic | Last ten epochs |
| Image Size | 640 |
| Workers | 8 |
| Mosaic | 1.0 |
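
As a rough illustration, the settings in this table could be collected in a small configuration object. The class and field names below are hypothetical, chosen only for readability, and the reading of "Mosaic 1.0" as an augmentation probability is an assumption; none of this is taken from the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings copied from the table; field names are illustrative."""
    epochs: int = 200
    batch_size: int = 16
    optimizer: str = "SGD"
    initial_lr: float = 0.01
    final_lr: float = 0.01
    momentum: float = 0.937
    weight_decay: float = 5e-4
    close_mosaic_epochs: int = 10  # mosaic is switched off for the last ten epochs
    image_size: int = 640
    workers: int = 8
    mosaic_prob: float = 1.0       # assumed to be a probability

    def mosaic_active(self, epoch: int) -> bool:
        """Return True if mosaic augmentation is applied in this 0-indexed epoch."""
        return epoch < self.epochs - self.close_mosaic_epochs


cfg = TrainConfig()
```

Under this reading, mosaic augmentation is applied during epochs 0 to 189 and disabled for epochs 190 to 199.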

| Level | Model | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|---|---|
| Mature | YOLOv8 | 97.62 | 94.44 | 98.62 | 78.15 |
| Mature | ADE-YOLOv8 | 98.33 | 97.76 | 99.18 | 79.95 |
| Undermature | YOLOv8 | 94.51 | 93.81 | 96.30 | 77.01 |
| Undermature | ADE-YOLOv8 | 95.72 | 94.42 | 97.37 | 79.95 |
| Immature | YOLOv8 | 93.61 | 89.49 | 94.06 | 74.53 |
| Immature | ADE-YOLO | 95.42 | 93.95 | 96.14 | 77.36 |
| ALL | YOLOv8 | 95.25 | 92.58 | 96.33 | 76.57 |
| ALL | ADE-YOLOv8 | 96.49 | 95.38 | 97.56 | 79.25 |
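
The overall gain of the improved model over the baseline can be read off the "ALL" rows with simple subtraction. The short sketch below does this arithmetic; the variable and function names are illustrative, and the values are copied from the table.

```python
# Values from the "ALL" rows of the table (in percent).
baseline = {"precision": 95.25, "recall": 92.58, "mAP50": 96.33, "mAP50-95": 76.57}
improved = {"precision": 96.49, "recall": 95.38, "mAP50": 97.56, "mAP50-95": 79.25}


def gains(base, new):
    """Return the per-metric difference in percentage points, rounded to two decimals."""
    return {name: round(new[name] - base[name], 2) for name in base}


delta = gains(baseline, improved)
for name, value in delta.items():
    print(f"{name}: +{value:.2f} percentage points")
```

The differences are 1.24 (precision), 2.80 (recall), 1.23 (mAP50), and 2.68 (mAP50-95) percentage points.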

| YOLOv8n | AIFI | DySample | EIOU | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) | Parameters (M) | Weight (MB) |
|---|---|---|---|---|---|---|---|---|---|
| √ | | | | 95.25 | 92.58 | 96.33 | 76.57 | 3.01 | 6.3 |
| √ | √ | | | 95.98 | 95.2 | 97.27 | 78.23 | 2.93 | 6.2 |
| √ | | √ | | 96.01 | 93.36 | 97.07 | 77.17 | 3.02 | 6.3 |
| √ | | | √ | 94.97 | 92.69 | 96.50 | 77.20 | 3.01 | 6.3 |
| √ | √ | √ | | 95.16 | 94.22 | 97.04 | 78.13 | 2.94 | 6.2 |
| √ | √ | | √ | 94.28 | 94.63 | 97.02 | 77.16 | 2.94 | 6.2 |
| √ | | √ | √ | 95.67 | 94.25 | 97.34 | 77.28 | 2.80 | 6.3 |
| √ | √ | √ | √ | 96.49 | 95.38 | 97.56 | 79.25 | 2.95 | 6.2 |

| Attention Mechanism | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) |
|---|---|---|---|---|
| SPPF | 95.67 | 94.25 | 97.34 | 77.28 |
| SPPF-LSKA | 95.66 | 94.81 | 97.32 | 77.80 |
| Focal Modulation | 94.64 | 95.20 | 96.56 | 77.69 |
| SPPELAN | 94.19 | 89.96 | 94.99 | 76.31 |
| AIFI | 96.49 | 95.38 | 97.56 | 79.25 |

| Model | Precision (%) | Recall (%) | mAP50 (%) | mAP50-95 (%) | Parameters (M) | Weight (MB) | FLOPs (G) | FPS (frames/s) |
|---|---|---|---|---|---|---|---|---|
| SSD | 82.45 | 78.67 | 87.3 | 66.74 | 14.34 | 48.10 | 15.10 | 77.06 |
| YOLOv3 | 80.71 | 77.19 | 85.03 | 70.42 | 61.5 | 123.60 | 154.60 | 45.84 |
| YOLOv3-Tiny | 88.2 | 80.51 | 87.80 | 60.32 | 8.67 | 17.50 | 12.90 | 85.11 |
| YOLOv5n | 94.49 | 89.53 | 94.44 | 70.10 | 1.78 | 3.90 | 4.10 | 150.6 |
| YOLOv5s | 92.0 | 85.72 | 91.07 | 72.62 | 7.03 | 14.50 | 15.80 | 90.48 |
| YOLOv7 | 93.40 | 91.39 | 95.31 | 73.63 | 37.21 | 74.80 | 105.10 | 60.00 |
| YOLOv7-Tiny | 94.43 | 92.30 | 96.22 | 70.19 | 6.02 | 12.30 | 13.20 | 97.16 |
| YOLOv8n | 95.25 | 92.58 | 96.33 | 76.57 | 3.01 | 6.30 | 8.20 | 130.05 |
| YOLOv9t | 92.02 | 85.71 | 91.04 | 72.62 | 2.62 | 5.70 | 11.00 | 107.56 |
| YOLOv10n | 94.11 | 91.08 | 96.24 | 76.42 | 2.70 | 5.70 | 8.40 | 121.43 |
| ADE-YOLO | 96.49 | 95.38 | 97.56 | 79.25 | 2.95 | 6.20 | 8.10 | 136.99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

You, H.; Li, Z.; Wei, Z.; Zhang, L.; Bi, X.; Bi, C.; Li, X.; Duan, Y. A Blueberry Maturity Detection Method Integrating Attention-Driven Multi-Scale Feature Interaction and Dynamic Upsampling. Horticulturae 2025, 11, 600. https://doi.org/10.3390/horticulturae11060600
