A Review of Environmental Sensing Technologies for Targeted Spraying in Orchards

Abstract

1. Introduction

2. Environmental Sensing Technology

2.1. Definition and Principles of Environmental Sensing Technology

2.2. Environmental Sensing Technology for Orchards

3. Key Sensing Technologies for Orchards

3.1. Visual Sensors: Imaging Technologies and Orchard Applications

3.1.1. Monocular and Binocular (Multi-Camera) Visual Sensors

| Researcher | Collection Equipment | Image Data | Data Usage | Ref. |
|---|---|---|---|---|
| Zhang et al. | Binocular camera | Apple fruit | Fruit detection and localization | [65] |
| Wang et al. | MV-VD120SC | Litchi fruit | Localization of overlapping fruits | [66] |
| Zheng et al. | MV-SUA134GC | Tomato fruit | Localization of overlapping fruits | [67] |
| Liu et al. | MV-CA060-10GC | Pineapple fruit | Localization of overlapping fruits | [68] |
| Pan et al. | ZED 2i | Pear fruit | Segmentation of overlapping fruits | [69] |
| Sun et al. | ZED 2i | Pear tree trunk | Trunk detection and distance measurement | [70] |
| Tang et al. | ZED 2i | Oil-seed camellia fruit | Localization of overlapping fruits | [71] |
| Zeng et al. | ZED 2i | Fruit tree branches | Three-dimensional reconstruction of branches | [72] |

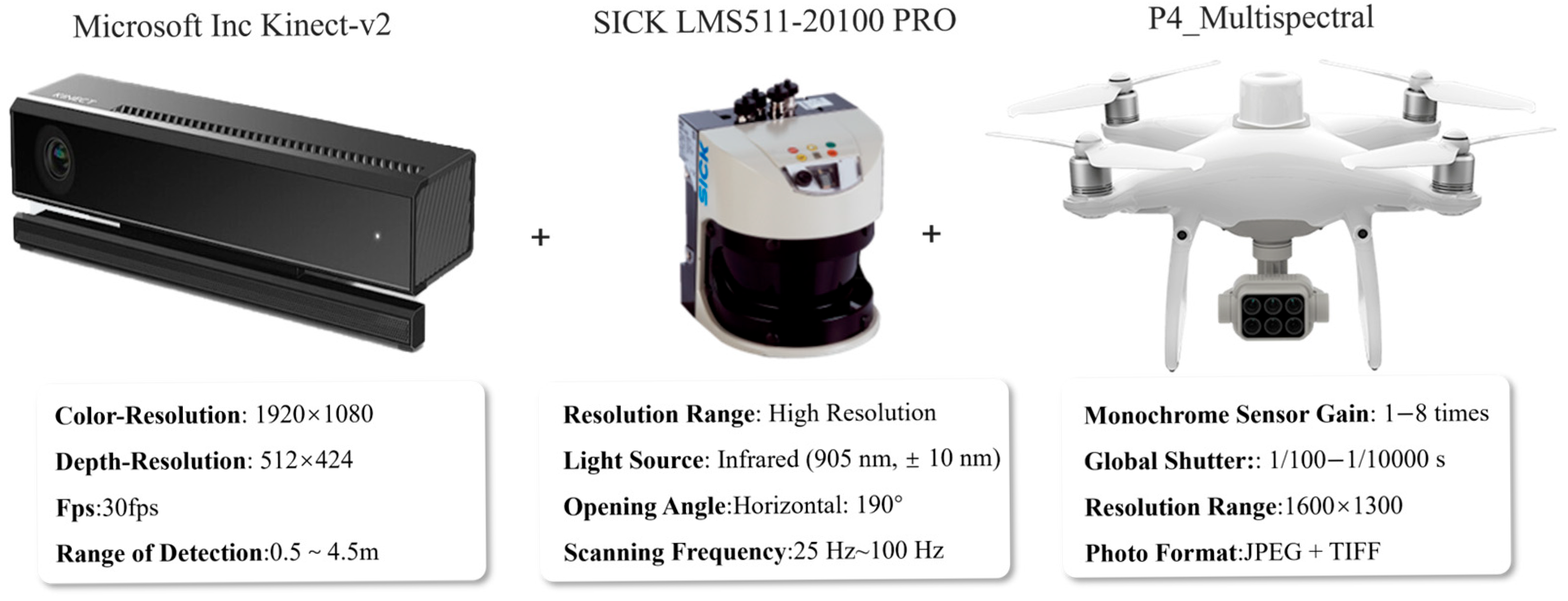

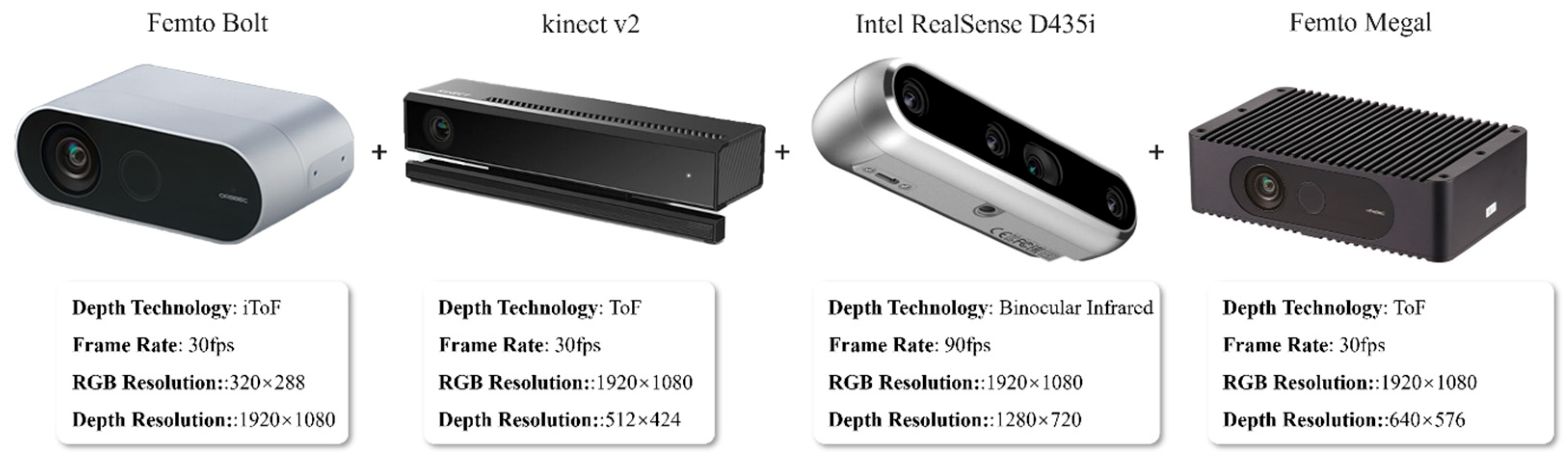

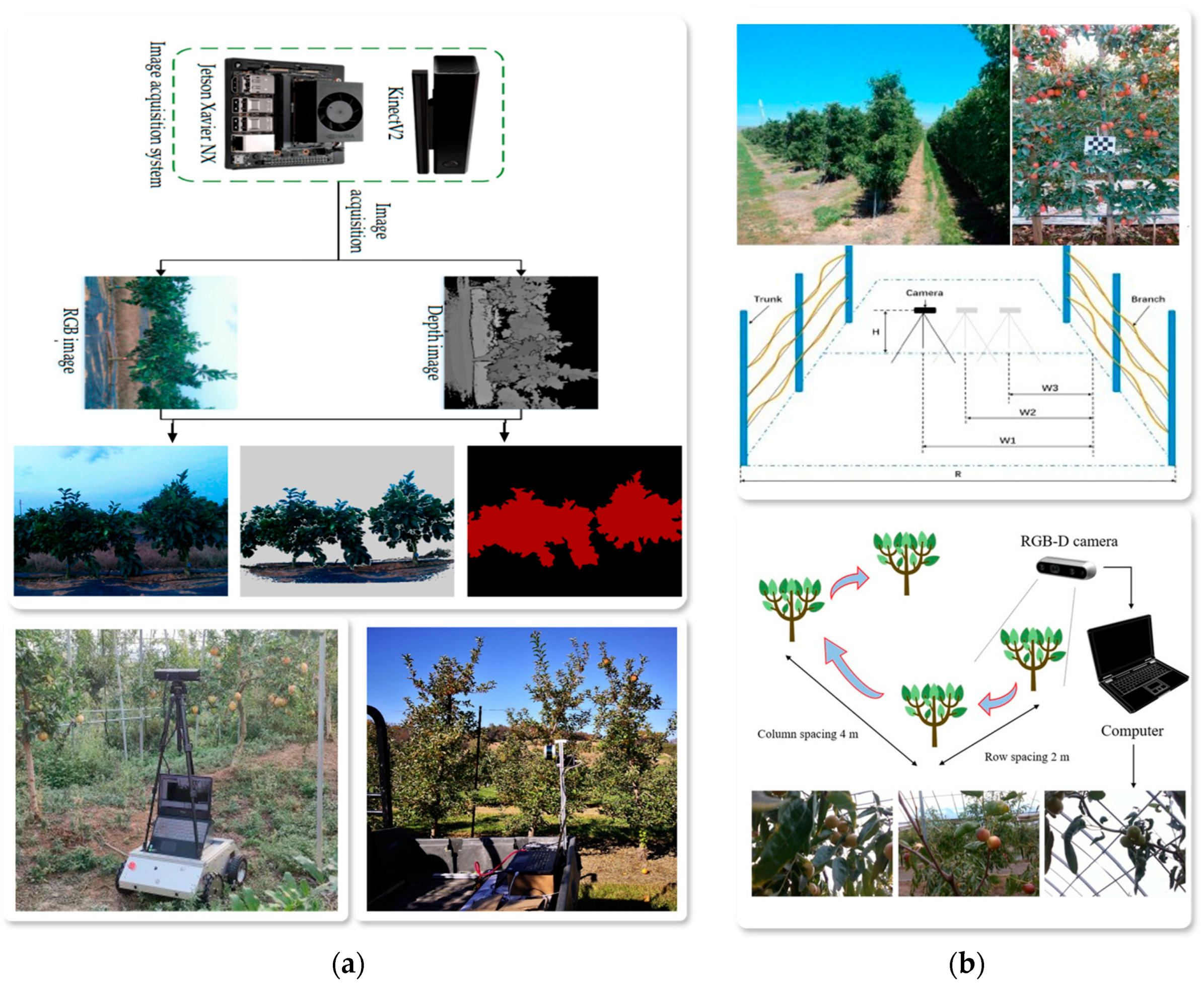

3.1.2. RGB-D and Dynamic Visual Sensors

3.2. LiDAR: Orchard Environmental Perception

3.2.1. Working Principle and Composition

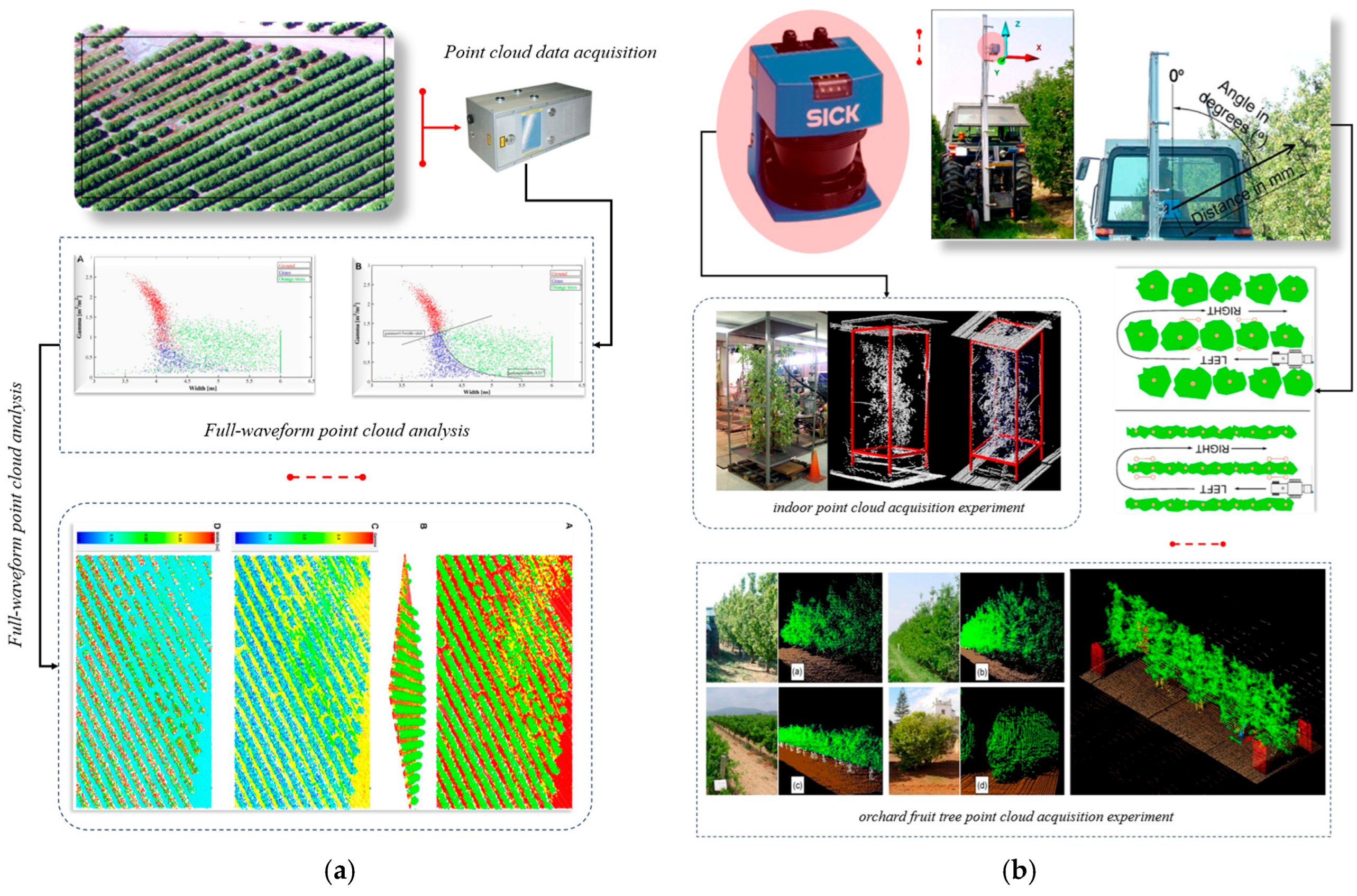

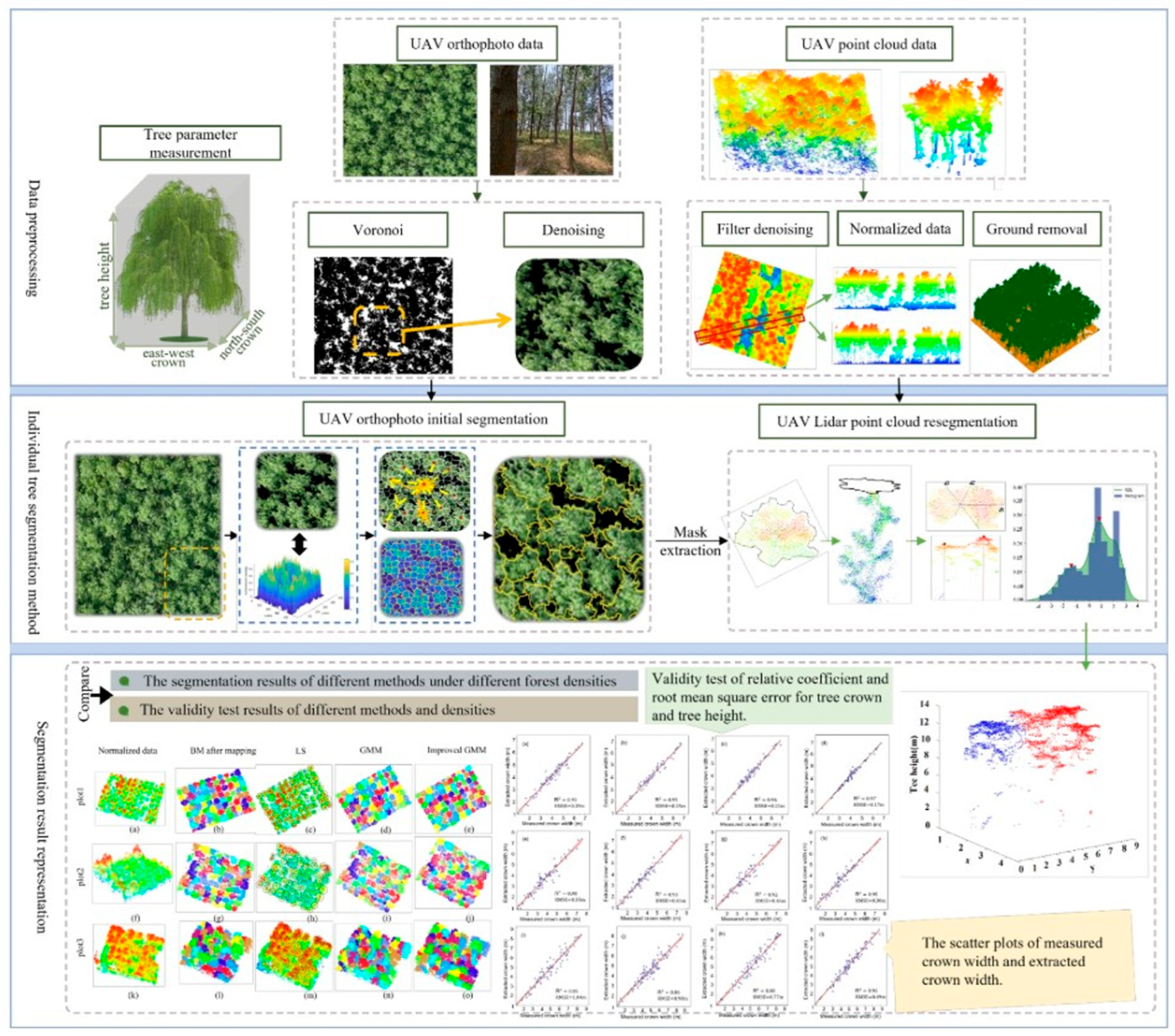

3.2.2. Application of LiDAR in Orchard Environmental Perception

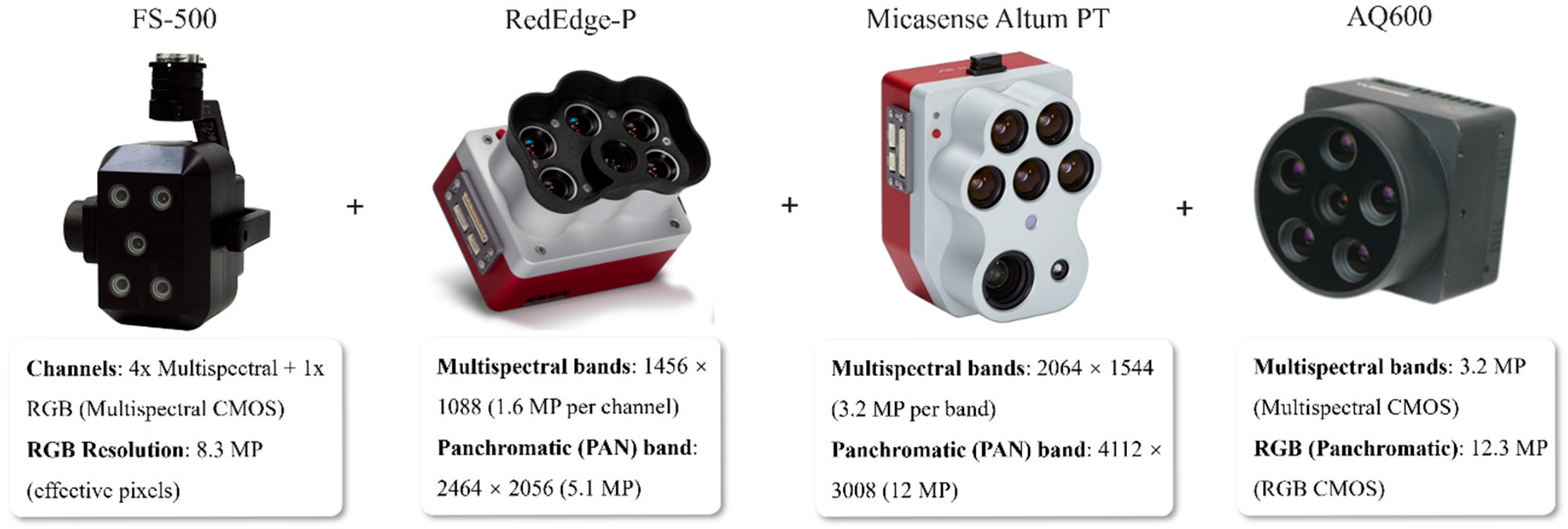

3.3. Multispectral and Hyperspectral Sensors: Crop Health Monitoring and Pest Detection

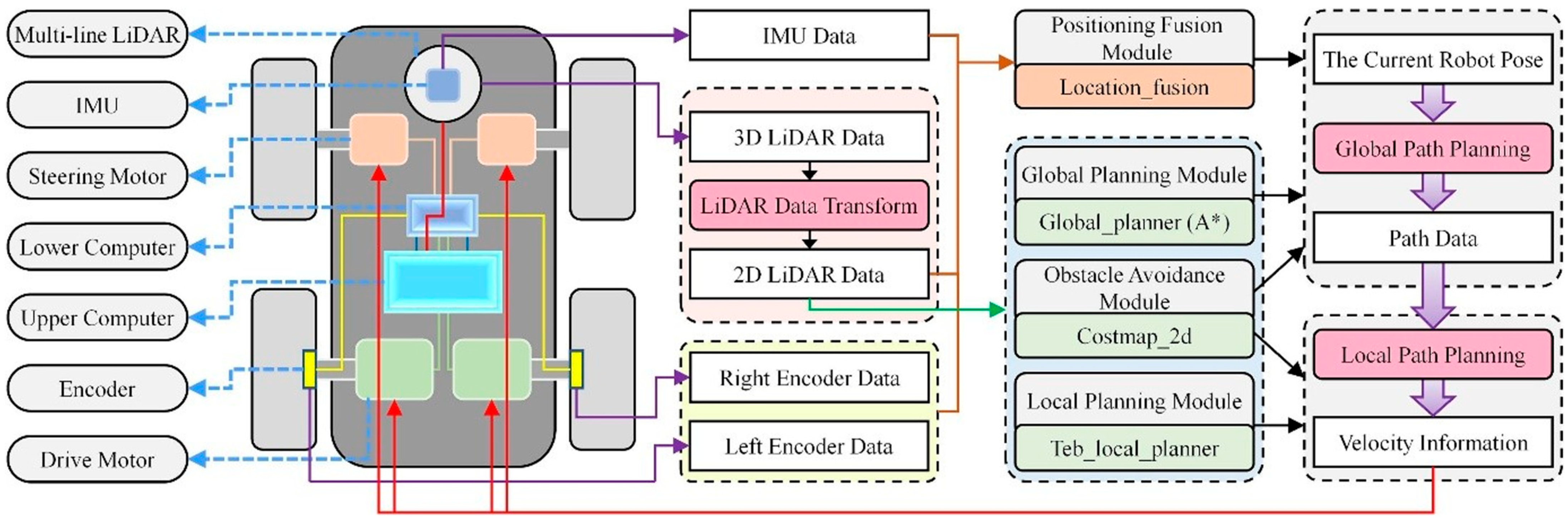

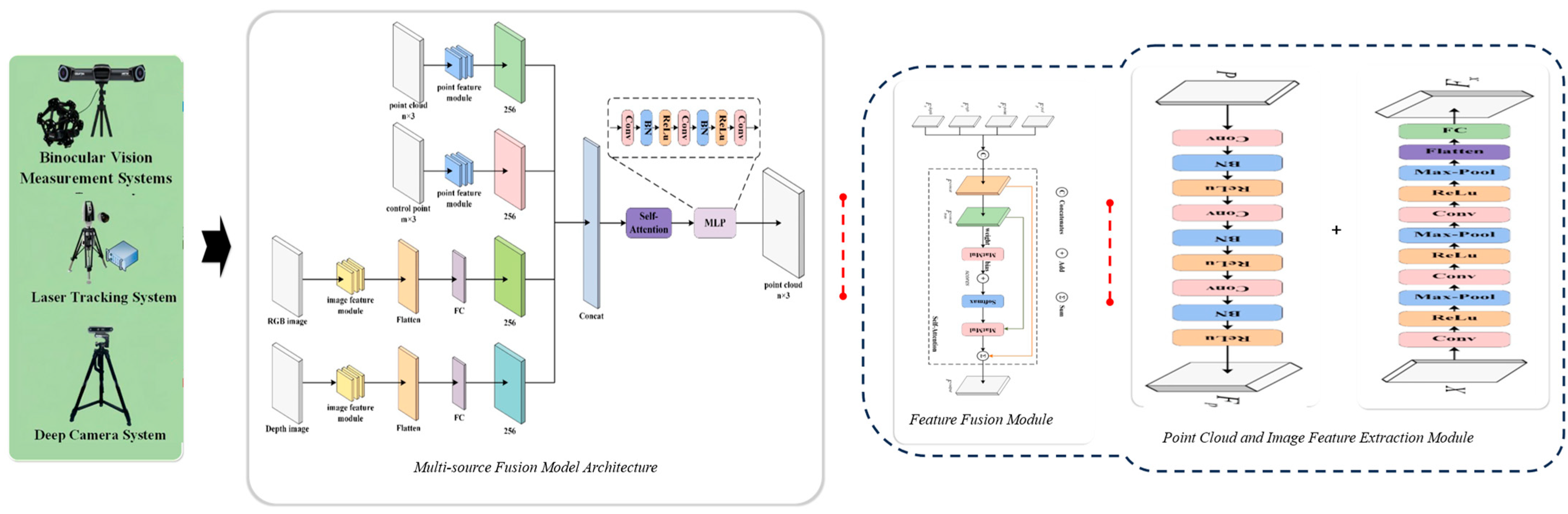

3.4. Sensor Data Fusion: Enhancing the Accuracy and Real-Time Performance of Orchard Environmental Perception

3.4.1. Principles and Methods of Sensor Data Fusion

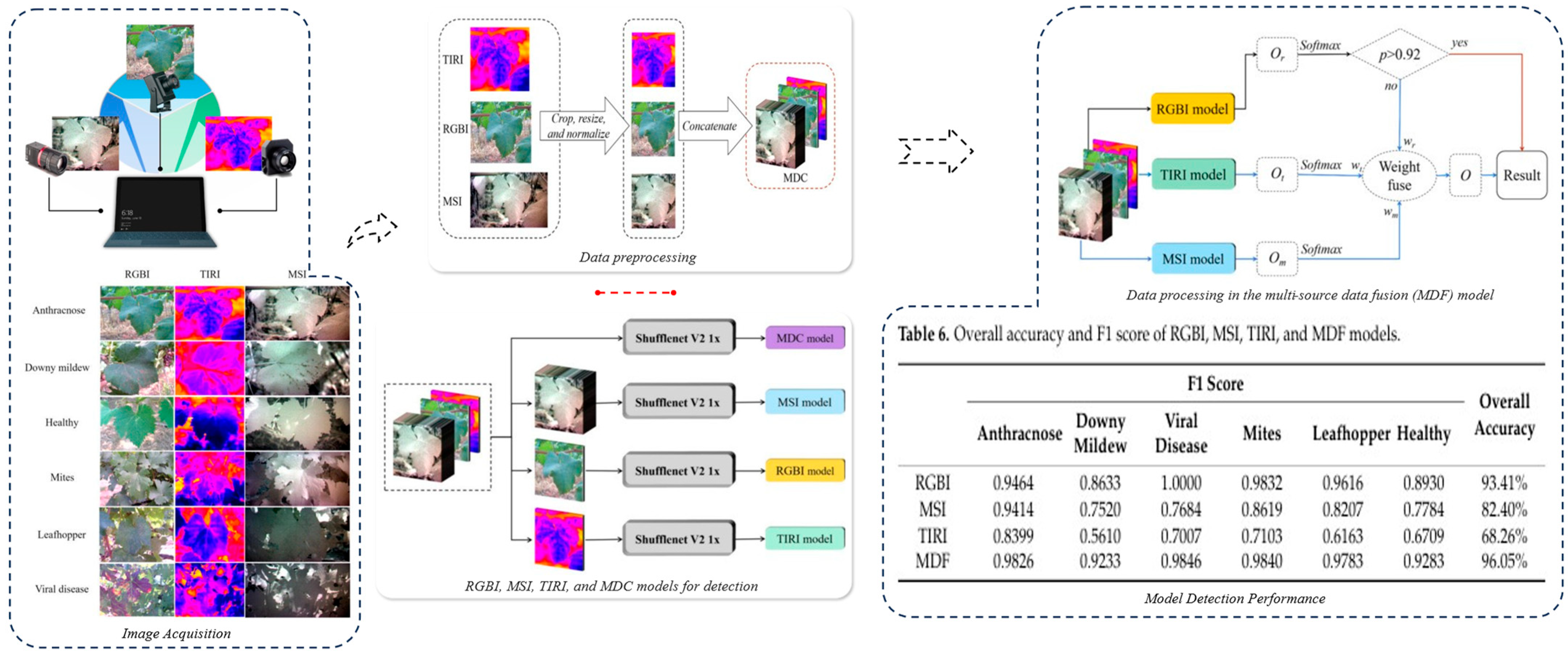

3.4.2. Sensor Data Fusion Combinations and Methods in Orchard Perception

4. Environmental Perception for Orchard Targeted Spraying Technology

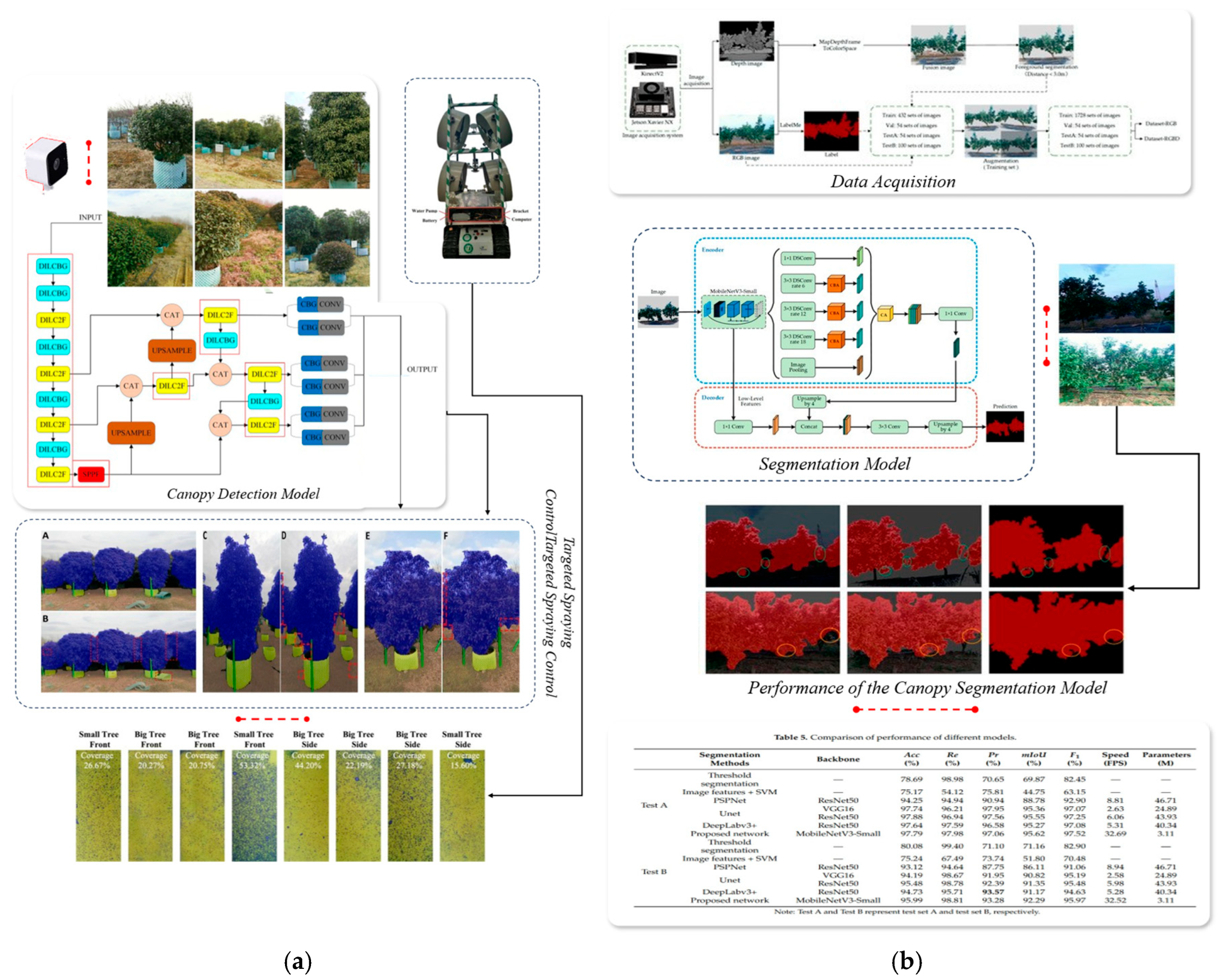

4.1. Canopy Perception and Targeted Spraying

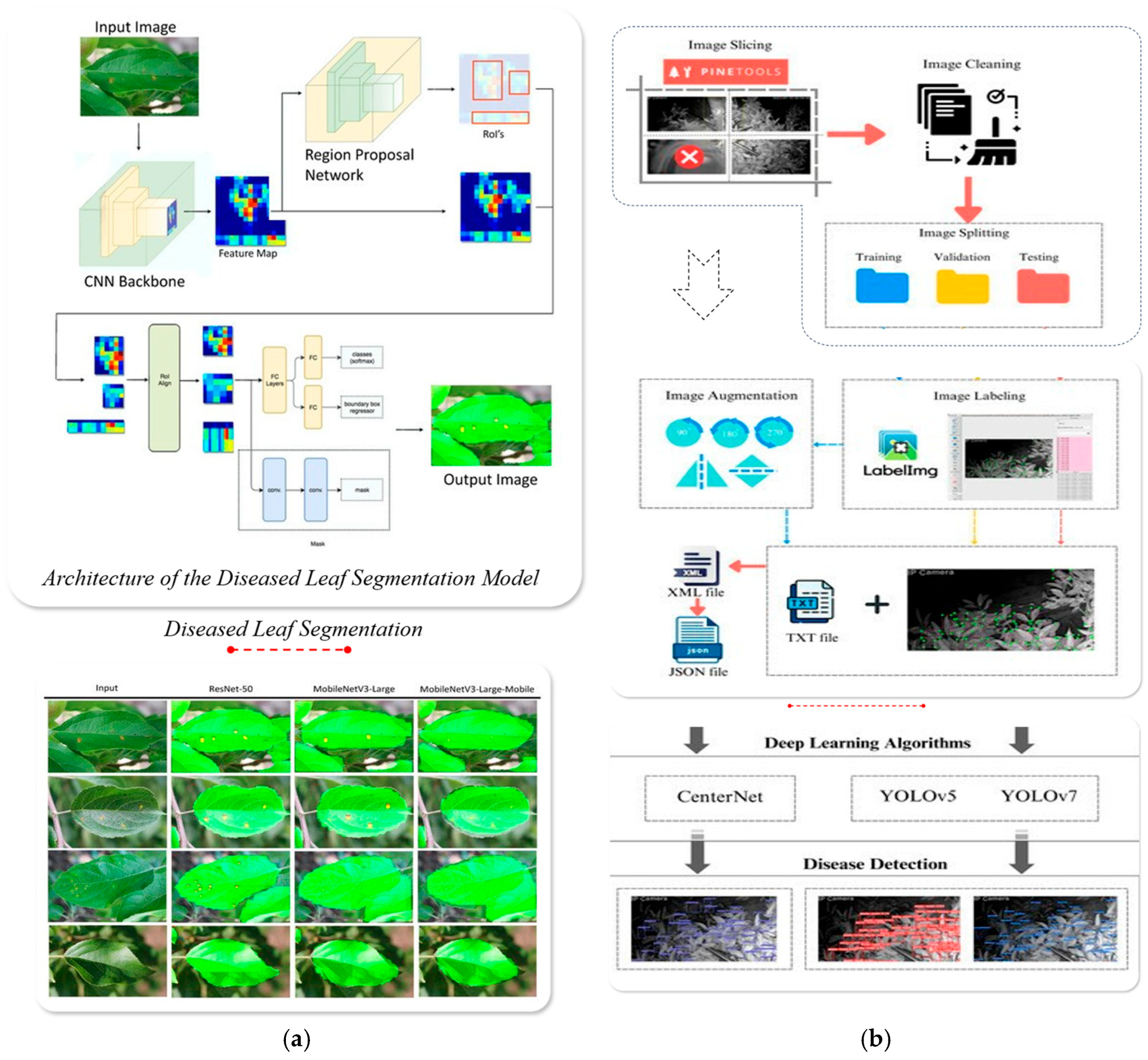

4.2. Pest and Disease Area Detection and Precision Application

| Researcher | Research Object | Research Method | Research Objective | Ref. |
|---|---|---|---|---|
| Zhang et al. | Orchard pests | YOLOv5 + GhostNet | Real-time pest detection | [152] |
| Luo et al. | Citrus pests and diseases | Light-SA YOLOV8 | Real-time pest and disease recognition | [153] |
| Chao et al. | Apple tree leaf diseases | XDNet | Disease identification | [154] |
| Sun et al. | Apple tree leaf diseases | MEAN-SSD | Real-time disease detection | [155] |

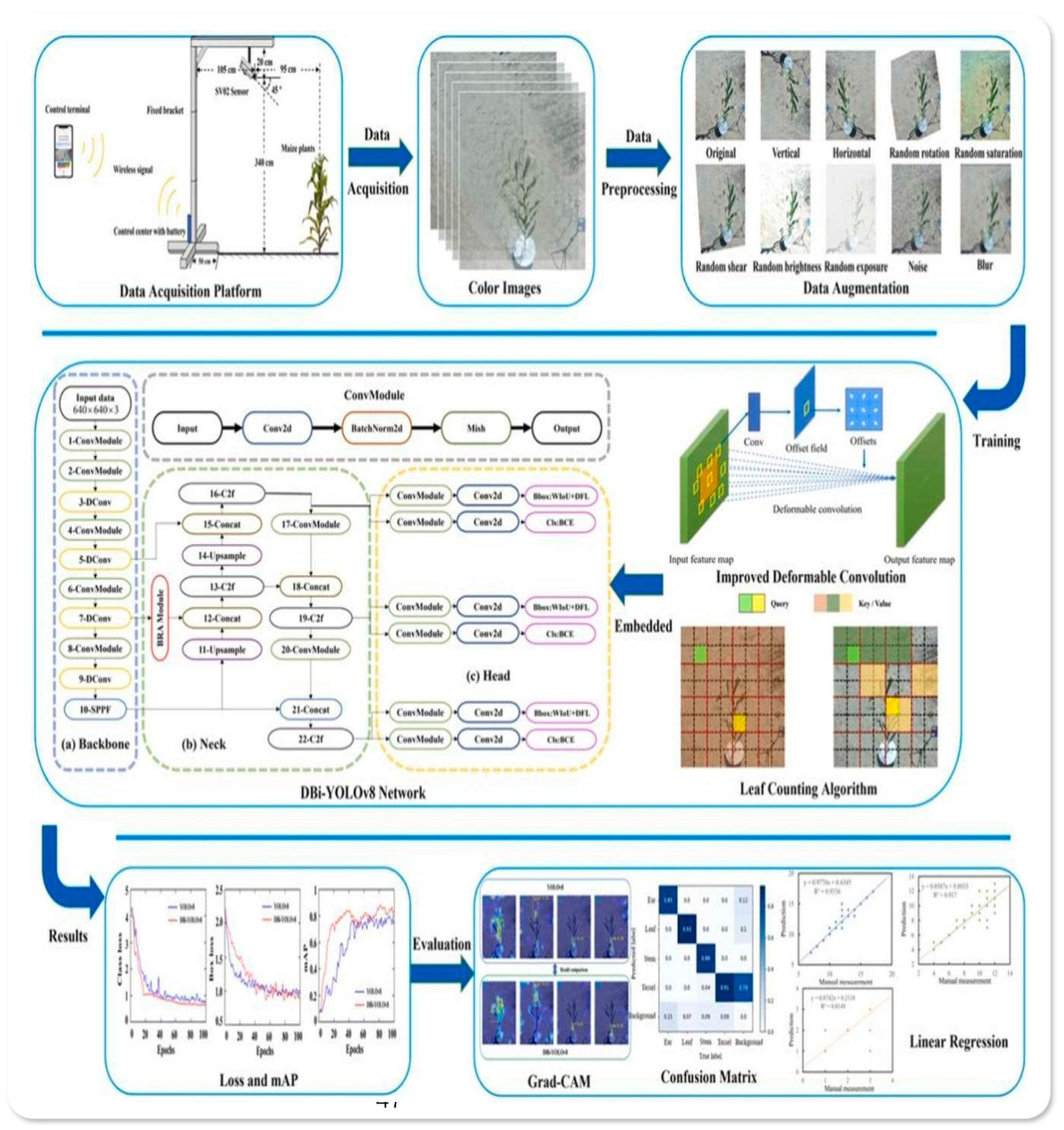

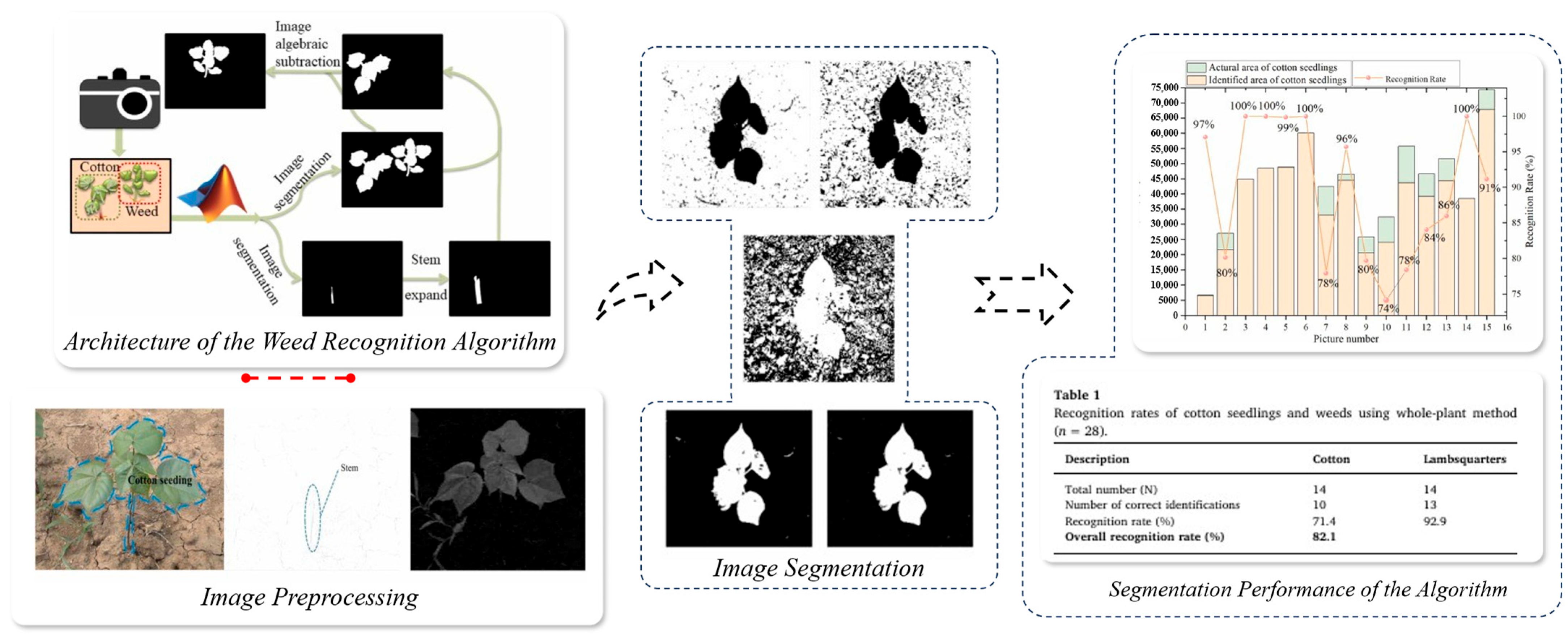

4.3. Weed and Non-Target Area Recognition and Targeted Weed Control

5. Challenges and Future Development Directions

5.1. Technical Adaptability in Complex Orchard Environments

5.2. Real-Time Data Processing and Big Data Analysis Challenges

5.3. Technological Innovation and Future Outlook in Sustainable Agricultural Development

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Endalew, A.M.; Debaer, C.; Rutten, N.; Vercammen, J.; Delele, M.A.; Ramon, H.; Verboven, P. Modelling pesticide flow and deposition from air-assisted orchard spraying in orchards: A new integrated CFD approach. Agric. For. Meteorol. 2010, 150, 1383–1392.

- Xun, L.; Campos, J.; Salas, B.; Fabregas, F.X.; Zhu, H.; Gil, E. Advanced spraying systems to improve pesticide saving and reduce spray drift for apple orchards. Precis. Agric. 2023, 24, 1526–1546.

- Brown, D.L.; Giles, D.K.; Oliver, M.N.; Klassen, P. Targeted spray technology to reduce pesticide in runoff from dormant orchards. Crop Prot. 2008, 27, 545–552.

- Lin, J.; Cai, J.; Ouyang, J.; Xiao, L.; Qiu, B. The Influence of Electrostatic Spraying with Waist-Shaped Charging Devices on the Distribution of Long-Range Air-Assisted Spray in Greenhouses. Agronomy 2024, 14, 2278.

- Giles, D.K.; Klassen, P.; Niederholzer, F.J.; Downey, D. “Smart” sprayer technology provides environmental and economic benefits in California orchards. Calif. Agric. 2011, 65, 85–89.

- Wang, G.; Lan, Y.; Qi, H.; Chen, P.; Hewitt, A.; Han, Y. Field evaluation of an unmanned aerial vehicle (UAV) sprayer: Effect of spray volume on deposition and the control of pests and disease in wheat. Pest Manag. Sci. 2019, 75, 1546–1555.

- Schatke, M.; Ulber, L.; Kämpfer, C.; Redwitz, C. Estimation of weed distribution for site-specific weed management—Can Gaussian copula reduce the smoothing effect? Precis. Agric. 2025, 26, 37.

- Xie, Q.; Song, M.; Wen, T.; Cao, W.; Zhu, Y.; Ni, J. An intelligent spraying system for weeds in wheat fields based on a dynamic model of droplets impacting wheat leaves. Front. Plant Sci. 2024, 15, 1420649.

- Owen-Smith, P.; Perry, R.; Wise, J.; Jamil, R.Z.R.; Gut, L.; Sundin, G.; Grieshop, M. Spray coverage and pest management efficacy of a solid set canopy delivery system in high density apples. Pest Manag. Sci. 2019, 75, 3050–3059.

- Grella, M.; Gallart, M.; Marucco, P.; Balsari, P.; Gil, E. Ground Deposition and Airborne Spray Drift Assessment in Vineyard and Orchard: The Influence of Environmental Variables and Sprayer Settings. Sustainability 2017, 9, 728.

- Wang, S.; Song, J.; Qi, P.; Yuan, C.; Wu, H.; Zhang, L.; He, X. Design and development of orchard autonomous navigation spray system. Front. Plant Sci. 2022, 13, 960686.

- Seol, J.; Kim, J.; Son, H.I. Field evaluations of a deep learning-based intelligent spraying robot with flow control for pear orchards. Precis. Agric. 2022, 23, 712–732.

- Osterman, A.; Godeša, T.; Hočevar, M.; Širok, B.; Stopar, M. Real-time positioning algorithm for variable-geometry air-assisted orchard sprayer. Comput. Electron. Agric. 2013, 98, 175–182.

- Zheng, K.; Zhao, X.; Han, C.; He, Y.; Zhai, C.; Zhao, C. Design and Experiment of an Automatic Row-Oriented Spraying System Based on Machine Vision for Early-Stage Maize Corps. Agriculture 2023, 13, 691.

- Zhu, C.; Hao, S.; Liu, C.; Wang, Y.; Jia, X.; Xu, J.; Guo, S.; Huo, J.; Wang, W. An Efficient Computer Vision-Based Dual-Face Target Precision Variable Spraying Robotic System for Foliar Fertilisers. Agronomy 2024, 14, 2770.

- Román, C.; Peris, M.; Esteve, J.; Tejerina, M.; Cambray, J.; Vilardell, P.; Planas, S. Pesticide dose adjustment in fruit and grapevine orchards by DOSA3D: Fundamentals of the system and on-farm validation. Sci. Total Environ. 2022, 808, 152158.

- Wang, S.; Wang, W.; Lei, X.; Wang, S.; Li, X.; Norton, T. Canopy Segmentation Method for Determining the Spray Deposition Rate in Orchards. Agronomy 2022, 12, 1195.

- Holterman, H.J.; Zande, J.C.; Huijsmans, J.F.; Wenneker, M. An empirical model based on phenological growth stage for predicting pesticide spray drift in pome fruit orchards. Biosyst. Eng. 2017, 154, 46–61.

- Ma, J.; Liu, K.; Dong, X.; Huang, X.; Ahmad, F.; Qiu, B. Force and motion behaviour of crop leaves during spraying. Biosyst. Eng. 2023, 235, 83–99.

- Wang, A.; Li, W.; Men, X.; Gao, B.; Xu, Y.; Wei, X. Vegetation detection based on spectral information and development of a low-cost vegetation sensor for selective spraying. Pest Manag. Sci. 2022, 78, 2467–2476.

- Liu, L.; Liu, Y.; He, X.; Liu, W. Precision Variable-Rate Spraying Robot by Using Single 3D LIDAR in Orchards. Agronomy 2022, 12, 2509.

- Li, L.; He, X.; Song, J.; Liu, Y.; Zeng, A.; Liu, Y.; Liu, Z. Design and experiment of variable rate orchard sprayer based on laser scanning sensor. Int. J. Agric. Biol. Eng. 2018, 11, 101–108.

- Salas, B.; Salcedo, R.; Garcia-Ruiz, F.; Gil, E. Design, implementation and validation of a sensor-based precise airblast sprayer to improve pesticide applications in orchards. Precis. Agric. 2024, 25, 865–888.

- Berk, P.; Hocevar, M.; Stajnko, D.; Belsak, A. Development of alternative plant protection product application techniques in orchards, based on measurement sensing systems: A review. Comput. Electron. Agric. 2016, 124, 273–288.

- Dang, F.; Chen, D.; Lu, Y.; Li, Z. YOLOWeeds: A novel benchmark of YOLO object detectors for multi-class weed detection in cotton production systems. Comput. Electron. Agric. 2023, 205, 107655.

- Pantazi, X.E.; Moshou, D.; Tamouridou, A.A. Automated leaf disease detection in different crop species through image features analysis and One Class Classifiers. Comput. Electron. Agric. 2019, 156, 96–104.

- Gu, C.; Zou, W.; Wang, X.; Chen, L.; Zhai, C. Wind loss model for the thick canopies of orchard trees based on accurate variable spraying. Front. Plant Sci. 2022, 13, 1010540.

- Liu, H.; Du, Z.; Shen, Y.; Du, W.; Zhang, X. Development and evaluation of an intelligent multivariable spraying robot for orchards and nurseries. Comput. Electron. Agric. 2024, 222, 109056.

- Chen, Q.; Xie, Y.; Guo, S.; Bai, J.; Shu, Q. Sensing system of environmental perception technologies for driverless vehicle: A review of state of the art and challenges. Sens. Actuators A Phys. 2021, 319, 112566.

- Wang, D.; Li, W.; Liu, X.; Li, N.; Zhang, C. UAV environmental perception and autonomous obstacle avoidance: A deep learning and depth camera combined solution. Comput. Electron. Agric. 2020, 175, 105523.

- Ye, L.; Wu, F.; Zou, X.; Li, J. Path planning for mobile robots in unstructured orchard environments: An improved kinematically constrained bi-directional RRT approach. Comput. Electron. Agric. 2023, 215, 108453.

- Tang, Y.; Qiu, J.; Zhang, Y.; Wu, D.; Cao, Y.; Zhao, K.; Zhu, L. Optimization strategies of fruit detection to overcome the challenge of unstructured background in field orchard environment: A review. Precis. Agric. 2023, 24, 1183–1219.

- Chen, W.; Lu, S.; Liu, B.; Li, G.; Qian, T. Detecting citrus in orchard environment by using improved YOLOv4. Sci. Program. 2020, 2020, 8859237.

- Kang, H.; Chen, C. Fruit Detection and Segmentation for Apple Harvesting Using Visual Sensor in Orchards. Sensors 2019, 19, 4599.

- Jiang, A.; Ahamed, T. Navigation of an Autonomous Spraying Robot for Orchard Operations Using LiDAR for Tree Trunk Detection. Sensors 2023, 23, 4808.

- Zheng, C.; Abd-Elrahman, A.; Whitaker, V.M.; Dalid, C. Deep learning for strawberry canopy delineation and biomass prediction from high-resolution images. Plant Phenomics 2022, 2022, 9850486.

- Sun, G.; Wang, X.; Ding, Y.; Lu, W.; Sun, Y. Remote Measurement of Apple Orchard Canopy Information Using Unmanned Aerial Vehicle Photogrammetry. Agronomy 2019, 9, 774.

- Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131.

- Liu, Y.; You, H.; Tang, X.; You, Q.; Huang, Y.; Chen, J. Study on Individual Tree Segmentation of Different Tree Species Using Different Segmentation Algorithms Based on 3D UAV Data. Forests 2023, 14, 1327.

- Zhang, Y.; Zhang, B.; Shen, C.; Liu, H.; Huang, J.; Tian, K.; Tang, Z. Review of the field environmental sensing methods based on multi-sensor information fusion technology. Int. J. Agric. Biol. Eng. 2024, 17, 1–13.

- Ren, Y.; Huang, X.; Aheto, J.H.; Wang, C.; Ernest, B.; Tian, X.; Wang, C. Application of volatile and spectral profiling together with multimode data fusion strategy for the discrimination of preserved eggs. Food Chem. 2021, 343, 128515.

- Li, L.; Xie, S.; Ning, J.; Chen, Q.; Zhang, Z. Evaluating green tea quality based on multisensor data fusion combining hyperspectral imaging and olfactory visualization systems. J. Sci. Food Agric. 2019, 99, 1787–1794.

- Zhou, X.; Sun, J.; Tian, Y.; Wu, X.; Dai, C.; Li, B. Spectral classification of lettuce cadmium stress based on information fusion and VISSA-GOA-SVM algorithm. J. Food Process Eng. 2019, 42, e13085.

- Zhu, W.; Feng, Z.; Dai, S.; Zhang, P.; Wei, X. Using UAV Multispectral Remote Sensing with Appropriate Spatial Resolution and Machine Learning to Monitor Wheat Scab. Agriculture 2022, 12, 1785.

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143.

- Zhu, W.; Li, J.; Li, L.; Wang, A.; Wei, X.; Mao, H. Nondestructive diagnostics of soluble sugar, total nitrogen and their ratio of tomato leaves in greenhouse by polarized spectra–hyperspectral data fusion. Int. J. Agric. Biol. Eng. 2020, 13, 189–197.

- Guan, H.; Deng, H.; Ma, X.; Zhang, T.; Zhang, Y.; Zhu, T.; Lu, Y. A corn canopy organs detection method based on improved DBi-YOLOv8 network. Eur. J. Agron. 2024, 154, 127076.

- Li, H.; Tan, B.; Sun, L.; Liu, H.; Zhang, H.; Liu, B. Multi-Source Image Fusion Based Regional Classification Method for Apple Diseases and Pests. Appl. Sci. 2024, 14, 7695.

- Duan, Y.; Han, W.; Guo, P.; Wei, X. YOLOv8-GDCI: Research on the Phytophthora Blight Detection Method of Different Parts of Chili Based on Improved YOLOv8 Model. Agronomy 2024, 14, 2734.

- Ji, W.; Gao, X.; Xu, B.; Pan, Y.; Zhang, Z.; Zhao, D. Apple target recognition method in complex environment based on improved YOLOv4. J. Food Process Eng. 2021, 44, e13866.

- Ji, W.; Pan, Y.; Xu, B.; Wang, J. A real-time apple targets detection method for picking robot based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856.

- Zhang, F.; Chen, Z.; Ali, S.; Yang, N.; Fu, S.; Zhang, Y. Multi-class detection of cherry tomatoes using improved Yolov4-tiny model. Int. J. Agric. Biol. Eng. 2023, 16, 225–231.

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed Detection in Maize Fields by UAV Images Based on Crop Row Preprocessing and Improved YOLOv4. Agriculture 2022, 12, 975.

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2025, 15, 57.

- Liu, Q.; Lv, J.; Zhang, C. MAE-YOLOv8-based small object detection of green crisp plum in real complex orchard environments. Comput. Electron. Agric. 2024, 226, 109458.

- Zhang, W.; Chen, X.; Qi, J.; Yang, S. Automatic instance segmentation of orchard canopy in unmanned aerial vehicle imagery using deep learning. Front. Plant Sci. 2022, 13, 1041791.

- Cheng, Z.; Cheng, Y.; Li, M.; Dong, X.; Gong, S.; Min, X. Detection of Cherry Tree Crown Based on Improved LA-dpv3+ Algorithm. Forests 2023, 14, 2404.

- Mahmud, M.S.; He, L.; Zahid, A.; Heinemann, P.; Choi, D.; Krawczyk, G.; Zhu, H. Detection and infected area segmentation of apple fire blight using image processing and deep transfer learning for site-specific management. Comput. Electron. Agric. 2023, 209, 107862.

- Anagnostis, A.; Tagarakis, A.C.; Asiminari, G.; Papageorgiou, E.; Kateris, D.; Moshou, D.; Bochtis, D. A deep learning approach for anthracnose infected trees classification in walnut orchards. Comput. Electron. Agric. 2021, 182, 105998.

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep learning-based identification system of weeds and crops in strawberry and pea fields for a precision agriculture sprayer. Precis. Agric. 2021, 22, 1711–1727.

- Zhang, X.; Xun, Y.; Chen, Y. Automated identification of citrus diseases in orchards using deep learning. Biosyst. Eng. 2022, 223, 249–258.

- Liu, J.; Abbas, I.; Noor, R.S. Development of Deep Learning-Based Variable Rate Agrochemical Spraying System for Targeted Weeds Control in Strawberry Crop. Agronomy 2021, 11, 1480.

- Zhao, G.; Yang, R.; Jing, X.; Zhang, H.; Wu, Z.; Sun, X.; Fu, L. Phenotyping of individual apple tree in modern orchard with novel smartphone-based heterogeneous binocular vision and YOLOv5s. Comput. Electron. Agric. 2023, 209, 107814.

- Mirbod, O.; Choi, D.; Heinemann, P.H.; Marini, R.P.; He, L. On-tree apple fruit size estimation using stereo vision with deep learning-based occlusion handling. Biosyst. Eng. 2023, 226, 27–42.

- Zhang, L.; Hao, Q.; Mao, Y.; Su, J.; Cao, J. Beyond Trade-Off: An Optimized Binocular Stereo Vision Based Depth Estimation Algorithm for Designing Harvesting Robot in Orchards. Agriculture 2023, 13, 1117.

- Wang, C.; Zou, X.; Tang, Y.; Luo, L.; Feng, W. Localisation of litchi in an unstructured environment using binocular stereo vision. Biosyst. Eng. 2016, 145, 39–51.

- Zheng, S.; Liu, Y.; Weng, W.; Jia, X.; Yu, S.; Wu, Z. Tomato Recognition and Localization Method Based on Improved YOLOv5n-seg Model and Binocular Stereo Vision. Agronomy 2023, 13, 2339.

- Liu, T.H.; Nie, X.N.; Wu, J.M.; Zhang, D.; Liu, W.; Cheng, Y.F.; Qi, L. Pineapple (Ananas comosus) fruit detection and localization in natural environment based on binocular stereo vision and improved YOLOv3 model. Precis. Agric. 2023, 24, 139–160.

- Pan, S.; Ahamed, T. Pear Recognition in an Orchard from 3D Stereo Camera Datasets to Develop a Fruit Picking Mechanism Using Mask R-CNN. Sensors 2022, 22, 4187.

- Sun, H.; Xue, J.; Zhang, Y.; Li, H.; Liu, R.; Song, Y.; Liu, S. Novel method of rapid and accurate tree trunk location in pear orchard combining stereo vision and semantic segmentation. Measurement 2025, 242, 116127.

- Tang, Y.; Zhou, H.; Wang, H.; Zhang, Y. Fruit detection and positioning technology for a Camellia oleifera C. Abel orchard based on improved YOLOv4-tiny model and binocular stereo vision. Expert Syst. Appl. 2023, 211, 118573.

- Zeng, X.; Wan, H.; Fan, Z.; Yu, X.; Guo, H. MT-MVSNet: A lightweight and highly accurate convolutional neural network based on mobile transformer for 3D reconstruction of orchard fruit tree branches. Expert Syst. Appl. 2025, 268, 126220.

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature pomegranate fruit detection and location combining improved F-PointNet with 3D point cloud clustering in orchard. Comput. Electron. Agric. 2022, 200, 107233.

- Xue, X.; Luo, Q.; Bu, M.; Li, Z.; Lyu, S.; Song, S. Citrus Tree Canopy Segmentation of Orchard Spraying Robot Based on RGB-D Image and the Improved DeepLabv3+. Agronomy 2023, 13, 2059.

- Zhang, J.; He, L.; Karkee, M.; Zhang, Q.; Zhang, X.; Gao, Z. Branch detection for apple trees trained in fruiting wall architecture using depth features and Regions-Convolutional Neural Network (R-CNN). Comput. Electron. Agric. 2018, 155, 386–393.

- Xiao, K.; Ma, Y.; Gao, G. An intelligent precision orchard pesticide spray technique based on the depth-of-field extraction algorithm. Comput. Electron. Agric. 2017, 133, 30–36.

- Yu, C.; Shi, X.; Luo, W.; Feng, J.; Zheng, Z.; Yorozu, A.; Guo, J. MLG-YOLO: A Model for Real-Time Accurate Detection and Localization of Winter Jujube in Complex Structured Orchard Environments. Plant Phenomics 2024, 6, 0258.

- Sun, X.; Fang, W.; Gao, C.; Fu, L.; Majeed, Y.; Liu, X.; Li, R. Remote estimation of grafted apple tree trunk diameter in modern orchard with RGB and point cloud based on SOLOv2. Comput. Electron. Agric. 2022, 199, 107209.

- Tong, S.; Zhang, J.; Li, W.; Wang, Y.; Kang, F. An image-based system for locating pruning points in apple trees using instance segmentation and RGB-D images. Biosyst. Eng. 2023, 236, 277–286.

- Lin, G.; Tang, Y.; Zou, X.; Xiong, J.; Li, J. Guava Detection and Pose Estimation Using a Low-Cost RGB-D Sensor in the Field. Sensors 2019, 19, 428.

- Wang, X.; Kang, H.; Zhou, H.; Au, W.; Chen, C. Geometry-aware fruit grasping estimation for robotic harvesting in apple orchards. Comput. Electron. Agric. 2022, 193, 106716.

- Qi, Z.; Hua, W.; Zhang, Z.; Deng, X.; Yuan, T.; Zhang, W. A novel method for tomato stem diameter measurement based on improved YOLOv8-seg and RGB-D data. Comput. Electron. Agric. 2024, 226, 109387.

- Ahmed, D.; Sapkota, R.; Churuvija, M.; Karkee, M. Estimating optimal crop-load for individual branches in apple tree canopies using YOLOv8. Comput. Electron. Agric. 2025, 229, 109697.

- Feng, Y.; Ma, W.; Tan, Y.; Yan, H.; Qian, J.; Tian, Z.; Gao, A. Approach of Dynamic Tracking and Counting for Obscured Citrus in Smart Orchard Based on Machine Vision. Appl. Sci. 2024, 14, 1136.

- Abeyrathna, R.M.R.D.; Nakaguchi, V.M.; Minn, A.; Ahamed, T. Recognition and Counting of Apples in a Dynamic State Using a 3D Camera and Deep Learning Algorithms for Robotic Harvesting Systems. Sensors 2023, 23, 3810.

- Underwood, J.P.; Jagbrant, G.; Nieto, J.I.; Sukkarieh, S. Lidar-based tree recognition and platform localization in orchards. J. Field Robot. 2015, 32, 1056–1074.

- Shen, Y.; Zhu, H.; Liu, H.; Chen, Y.; Ozkan, E. Development of a laser-guided, embedded-computer-controlled, air-assisted precision sprayer. Trans. ASABE 2017, 60, 1827–1838.

- Berk, P.; Stajnko, D.; Belsak, A.; Hocevar, M. Digital evaluation of leaf area of an individual tree canopy in the apple orchard using the LIDAR measurement system. Comput. Electron. Agric. 2020, 169, 105158.

- Lu, H.; Xu, S.; Cao, S. SGTBN: Generating dense depth maps from single-line LiDAR. IEEE Sens. J. 2021, 21, 19091–19100.

- Liu, J.; Liang, H.; Wang, Z.; Chen, X. A Framework for Applying Point Clouds Grabbed by Multi-Beam LIDAR in Perceiving the Driving Environment. Sensors 2015, 15, 21931–21956.

- Yan, T.; Zhu, H.; Sun, L.; Wang, X.; Ling, P. Investigation of an experimental laser sensor-guided spray control system for greenhouse variable-rate applications. Trans. ASABE 2019, 62, 899–911.

- Gu, W.; Wen, W.; Wu, S.; Zheng, C.; Lu, X.; Chang, W.; Xiao, P.; Guo, X. 3D Reconstruction of Wheat Plants by Integrating Point Cloud Data and Virtual Design Optimization. Agriculture 2024, 14, 391.

- Sun, Y.; Luo, Y.; Zhang, Q.; Xu, L.; Wang, L.; Zhang, P. Estimation of Crop Height Distribution for Mature Rice Based on a Moving Surface and 3D Point Cloud Elevation. Agronomy 2022, 12, 836.

- Fieber, K.D.; Davenport, I.J.; Ferryman, J.M.; Gurney, R.J.; Walker, J.P.; Hacker, J.M. Analysis of full-waveform LiDAR data for classification of an orange orchard scene. ISPRS J. Photogramm. Remote Sens. 2013, 82, 63–82.

- Rosell, J.R.; Llorens, J.; Sanz, R.; Arnó, J.; Ribes-Dasi, M.; Masip, J.; Palacín, J. Obtaining the three-dimensional structure of tree orchards from remote 2D terrestrial LIDAR scanning. Agric. For. Meteorol. 2009, 149, 1505–1515.

- Underwood, J.P.; Hung, C.; Whelan, B.; Sukkarieh, S. Mapping almond orchard canopy volume, flowers, fruit and yield using lidar and vision sensors. Comput. Electron. Agric. 2016, 130, 83–96.

- Sanz, R.; Rosell, J.R.; Llorens, J.; Gil, E.; Planas, S. Relationship between tree row LIDAR-volume and leaf area density for fruit orchards and vineyards obtained with a LIDAR 3D Dynamic Measurement System. Agric. For. Meteorol. 2013, 171, 153–162.

- Gu, C.; Zhao, C.; Zou, W.; Yang, S.; Dou, H.; Zhai, C. Innovative Leaf Area Detection Models for Orchard Tree Thick Canopy Based on LiDAR Point Cloud Data. Agriculture 2022, 12, 1241.

- Wang, K.; Zhou, J.; Zhang, W.; Zhang, B. Mobile LiDAR Scanning System Combined with Canopy Morphology Extracting Methods for Tree Crown Parameters Evaluation in Orchards. Sensors 2021, 21, 339.

- Murray, J.; Fennell, J.T.; Blackburn, G.A.; Whyatt, J.D.; Li, B. The novel use of proximal photogrammetry and terrestrial LiDAR to quantify the structural complexity of orchard trees. Precis. Agric. 2020, 21, 473–483.

- Wang, M.; Dou, H.; Sun, H.; Zhai, C.; Zhang, Y.; Yuan, F. Calculation Method of Canopy Dynamic Meshing Division Volumes for Precision Pesticide Application in Orchards Based on LiDAR. Agronomy 2023, 13, 1077.

- Luo, S.; Wen, S.; Zhang, L.; Lan, Y.; Chen, X. Extraction of crop canopy features and decision-making for variable spraying based on unmanned aerial vehicle LiDAR data. Comput. Electron. Agric. 2024, 224, 109197.

- Mahmud, M.S.; Zahid, A.; He, L.; Choi, D.; Krawczyk, G.; Zhu, H. LiDAR-sensed tree canopy correction in uneven terrain conditions using a sensor fusion approach for precision sprayers. Comput. Electron. Agric. 2021, 191, 106565.

- Chen, C.; Cao, G.Q.; Li, Y.B.; Liu, D.; Ma, B.; Zhang, J.L.; Li, L.; Hu, J.P. Research on monitoring methods for the appropriate rice harvest period based on multispectral remote sensing. Discret. Dyn. Nat. Soc. 2022, 2022, 1519667.

- Zhang, S.; Xue, X.; Chen, C.; Sun, Z.; Sun, T. Development of a low-cost quadrotor UAV based on ADRC for agricultural remote sensing. Int. J. Agric. Biol. Eng. 2019, 12, 82–87.

- Meng, L.; Audenaert, K.; Van Labeke, M.C.; Höfte, M. Detection of Botrytis cinerea on strawberry leaves upon mycelial infection through imaging technique. Sci. Hortic. 2024, 330, 113071.

- Wei, L.; Yang, H.; Niu, Y.; Zhang, Y.; Xu, L.; Chai, X. Wheat biomass, yield, and straw-grain ratio estimation from multi-temporal UAV-based RGB and multispectral images. Biosyst. Eng. 2023, 234, 187–205.

- Zhang, L.; Wang, A.; Zhang, H.; Zhu, Q.; Zhang, H.; Sun, W.; Niu, Y. Estimating Leaf Chlorophyll Content of Winter Wheat from UAV Multispectral Images Using Machine Learning Algorithms under Different Species, Growth Stages, and Nitrogen Stress Conditions. Agriculture 2024, 14, 1064.

- Johansen, K.; Raharjo, T.; McCabe, M.F. Using multi-spectral UAV imagery to extract tree crop structural properties and assess pruning effects. Remote Sens. 2018, 10, 854.

- Chandel, A.K.; Khot, L.R.; Sallato, B. Apple powdery mildew infestation detection and mapping using high-resolution visible and multispectral aerial imaging technique. Sci. Hortic. 2021, 287, 110228.

- Yu, J.; Zhang, Y.; Song, Z.; Jiang, D.; Guo, Y.; Liu, Y.; Chang, Q. Estimating Leaf Area Index in Apple Orchard by UAV Multispectral Images with Spectral and Texture Information. Remote Sens. 2024, 16, 3237.

- Noguera, M.; Aquino, A.; Ponce, J.M.; Cordeiro, A.; Silvestre, J.; Arias-Calderón, R.; Andújar, J.M. Nutritional status assessment of olive crops by means of the analysis and modelling of multispectral images taken with UAVs. Biosyst. Eng. 2021, 211, 1–18.

- Zhao, X.; Zhao, Z.; Zhao, F.; Liu, J.; Li, Z.; Wang, X.; Gao, Y. An Estimation of the Leaf Nitrogen Content of Apple Tree Canopies Based on Multispectral Unmanned Aerial Vehicle Imagery and Machine Learning Methods. Agronomy 2024, 14, 552.

- Sarabia, R.; Aquino, A.; Ponce, J.M.; López, G.; Andújar, J.M. Automated Identification of Crop Tree Crowns from UAV Multispectral Imagery by Means of Morphological Image Analysis. Remote Sens. 2020, 12, 748.

- Adade, S.Y.S.S.; Lin, H.; Johnson, N.A.N.; Nunekpeku, X.; Aheto, J.H.; Ekumah, J.N.; Chen, Q. Advanced Food Contaminant Detection through Multi-Source Data Fusion: Strategies, Applications, and Future Perspectives. Trends Food Sci. Technol. 2024, 156, 104851.

- Cheng, J.; Sun, J.; Shi, L.; Dai, C. An effective method fusing electronic nose and fluorescence hyperspectral imaging for the detection of pork freshness. Food Biosci. 2024, 59, 103880.

- Xu, S.; Xu, X.; Zhu, Q.; Meng, Y.; Yang, G.; Feng, H.; Wang, B. Monitoring leaf nitrogen content in rice based on information fusion of multi-sensor imagery from UAV. Precis. Agric. 2023, 24, 2327–2349.

- Jiang, S.; Qi, P.; Han, L.; Liu, L.; Li, Y.; Huang, Z.; He, X. Navigation system for orchard spraying robot based on 3D LiDAR SLAM with NDT_ICP point cloud registration. Comput. Electron. Agric. 2024, 220, 108870.

- Lin, X.; Chao, S.; Yan, D.; Guo, L.; Liu, Y.; Li, L. Multi-Sensor Data Fusion Method Based on Self-Attention Mechanism. Appl. Sci. 2023, 13, 11992.

- Basir, O.; Yuan, X. Engine fault diagnosis based on multi-sensor information fusion using Dempster–Shafer evidence theory. Inf. Fusion 2007, 8, 379–386.

- Ahmed, S.; Qiu, B.; Ahmad, F.; Kong, C.W.; Xin, H. A state-of-the-art analysis of obstacle avoidance methods from the perspective of an agricultural sprayer UAV’s operation scenario. Agronomy 2021, 11, 1069. [Google Scholar] [CrossRef]

- Chen, R.; Zhang, C.; Xu, B.; Zhu, Y.; Zhao, F.; Han, S.; Yang, H. Predicting individual apple tree yield using UAV multi-source remote sensing data and ensemble learning. Comput. Electron. Agric. 2022, 201, 107275. [Google Scholar] [CrossRef]

- Tang, S.; Xia, Z.; Gu, J.; Wang, W.; Huang, Z.; Zhang, W. High-precision apple recognition and localization method based on RGB-D and improved SOLOv2 instance segmentation. Front. Sustain. Food Syst. 2024, 8, 1403872. [Google Scholar] [CrossRef]

- Sun, J.; Zhang, L.; Zhou, X.; Yao, K.; Tian, Y.; Nirere, A. A method of information fusion for identification of rice seed varieties based on hyperspectral imaging technology. J. Food Process Eng. 2021, 44, e13797. [Google Scholar] [CrossRef]

- Li, Y.; Qi, X.; Cai, Y.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, X. A Rice Leaf Area Index Monitoring Method Based on the Fusion of Data from RGB Camera and Multi-Spectral Camera on an Inspection Robot. Remote Sens. 2024, 16, 4725. [Google Scholar] [CrossRef]

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2022, 8, 21.

- Wang, R.; Hu, C.; Han, J.; Hu, X.; Zhao, Y.; Wang, Q.; Xie, Y. A Hierarchic Method of Individual Tree Canopy Segmentation Combing UAV Image and LiDAR. Arab. J. Sci. Eng. 2025, 50, 7567–7585.

- Liu, H.; Zhu, H. Evaluation of a laser scanning sensor in detection of complex-shaped targets for variable-rate sprayer development. Trans. ASABE 2016, 59, 1181–1192.

- Wang, J.; Zhang, Y.; Gu, R. Research status and prospects on plant canopy structure measurement using visual sensors based on three-dimensional reconstruction. Agriculture 2020, 10, 462.

- Mahmud, M.S.; Zahid, A.; He, L.; Choi, D.; Krawczyk, G.; Zhu, H.; Heinemann, P. Development of a LiDAR-guided section-based tree canopy density measurement system for precision spray applications. Comput. Electron. Agric. 2021, 182, 106053.

- Zhang, Z.; Yang, M.; Pan, Q.; Jin, X.; Wang, G.; Zhao, Y.; Hu, Y. Identification of tea plant cultivars based on canopy images using deep learning methods. Sci. Hortic. 2025, 339, 113908.

- Zhang, Z.; Lu, Y.; Zhao, Y.; Pan, Q.; Jin, K.; Xu, G.; Hu, Y. Ts-yolo: An all-day and lightweight tea canopy shoots detection model. Agronomy 2023, 13, 1411.

- You, J.; Li, D.; Wang, Z.; Chen, Q.; Ouyang, Q. Prediction and visualization of moisture content in Tencha drying processes by computer vision and deep learning. J. Sci. Food Agric. 2024, 104, 5486–5494.

- Zhang, T.; Zhou, J.; Liu, W.; Yue, R.; Yao, M.; Shi, J.; Hu, J. Seedling-YOLO: High-Efficiency Target Detection Algorithm for Field Broccoli Seedling Transplanting Quality Based on YOLOv7-Tiny. Agronomy 2024, 14, 931.

- Ma, J.; Zhao, Y.; Fan, W.; Liu, J. An Improved YOLOv8 Model for Lotus Seedpod Instance Segmentation in the Lotus Pond Environment. Agronomy 2024, 14, 1325.

- Khan, Z.; Liu, H.; Shen, Y.; Zeng, X. Deep learning improved YOLOv8 algorithm: Real-time precise instance segmentation of crown region orchard canopies in natural environment. Comput. Electron. Agric. 2024, 224, 109168.

- Churuvija, M.; Sapkota, R.; Ahmed, D.; Karkee, M. A pose-versatile imaging system for comprehensive 3D modeling of planar-canopy fruit trees for automated orchard operations. Comput. Electron. Agric. 2025, 230, 109899.

- Xu, S.; Zheng, S.; Rai, R. Dense object detection based canopy characteristics encoding for precise spraying in peach orchards. Comput. Electron. Agric. 2025, 232, 110097.

- Vinci, A.; Brigante, R.; Traini, C.; Farinelli, D. Geometrical Characterization of Hazelnut Trees in an Intensive Orchard by an Unmanned Aerial Vehicle (UAV) for Precision Agriculture Applications. Remote Sens. 2023, 15, 541.

- Zhu, Y.; Zhou, J.; Yang, Y.; Liu, L.; Liu, F.; Kong, W. Rapid Target Detection of Fruit Trees Using UAV Imaging and Improved Light YOLOv4 Algorithm. Remote Sens. 2022, 14, 4324.

- Bing, Q.; Zhang, R.; Zhang, L.; Li, L.; Chen, L. UAV-SfM Photogrammetry for Canopy Characterization Toward Unmanned Aerial Spraying Systems Precision Pesticide Application in an Orchard. Drones 2025, 9, 151.

- Guo, Y.; Gao, J.; Tunio, M.H.; Wang, L. Study on the identification of mildew disease of cuttings at the base of mulberry cuttings by aeroponics rapid propagation based on a BP neural network. Agronomy 2022, 13, 106.

- Zuo, Z.; Gao, S.; Peng, H.; Xue, Y.; Han, L.; Ma, G.; Mao, H. Lightweight Detection of Broccoli Heads in Complex Field Environments Based on LBDC-YOLO. Agronomy 2024, 14, 2359.

- Zhou, X.; Chen, W.; Wei, X. Improved Field Obstacle Detection Algorithm Based on YOLOv8. Agriculture 2024, 14, 2263.

- Storey, G.; Meng, Q.; Li, B. Leaf Disease Segmentation and Detection in Apple Orchards for Precise Smart Spraying in Sustainable Agriculture. Sustainability 2022, 14, 1458.

- Apacionado, B.V.; Ahamed, T. Sooty Mold Detection on Citrus Tree Canopy Using Deep Learning Algorithms. Sensors 2023, 23, 8519.

- Zhou, X.; Sun, J.; Mao, H.; Wu, X.; Zhang, X.; Yang, N. Visualization research of moisture content in leaf lettuce leaves based on WT-PLSR and hyperspectral imaging technology. J. Food Process Eng. 2018, 41, e12647.

- Wang, A.; Song, Z.; Xie, Y.; Hu, J.; Zhang, L.; Zhu, Q. Detection of Rice Leaf SPAD and Blast Disease Using Integrated Aerial and Ground Multiscale Canopy Reflectance Spectroscopy. Agriculture 2024, 14, 1471.

- Di Nisio, A.; Adamo, F.; Acciani, G.; Attivissimo, F. Fast Detection of Olive Trees Affected by Xylella Fastidiosa from UAVs Using Multispectral Imaging. Sensors 2020, 20, 4915.

- Zhang, Y.; Li, X.; Wang, M.; Xu, T.; Huang, K.; Sun, Y.; Lv, X. Early detection and lesion visualization of pear leaf anthracnose based on multi-source feature fusion of hyperspectral imaging. Front. Plant Sci. 2024, 15, 1461855.

- Yang, R.; Lu, X.; Huang, J.; Zhou, J.; Jiao, J.; Liu, Y.; Liu, F.; Su, B.; Gu, P. A Multi-Source Data Fusion Decision-Making Method for Disease and Pest Detection of Grape Foliage Based on ShuffleNet V2. Remote Sens. 2021, 13, 5102.

- Zhang, Y.; Cai, W.; Fan, S.; Song, R.; Jin, J. Object Detection Based on YOLOv5 and GhostNet for Orchard Pests. Information 2022, 13, 548.

- Luo, D.; Xue, Y.; Deng, X.; Yang, B.; Chen, H.; Mo, Z. Citrus diseases and pests detection model based on self-attention YOLOV8. IEEE Access 2023, 11, 139872–139881.

- Chao, X.; Sun, G.; Zhao, H.; Li, M.; He, D. Identification of apple tree leaf diseases based on deep learning models. Symmetry 2020, 12, 1065.

- Sun, H.; Xu, H.; Liu, B.; He, D.; He, J.; Zhang, H.; Geng, N. MEAN-SSD: A novel real-time detector for apple leaf diseases using improved light-weight convolutional neural networks. Comput. Electron. Agric. 2021, 189, 106379.

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Memon, N. Automatic visual recognition, detection and classification of weeds in cotton fields based on machine vision. Crop Prot. 2025, 187, 106966.

- Chen, S.; Memon, M.S.; Shen, B.; Guo, J.; Du, Z.; Tang, Z.; Memon, H. Identification of weeds in cotton fields at various growth stages using color feature techniques. Ital. J. Agron. 2024, 19, 100021.

- Su, J.; Yi, D.; Coombes, M.; Liu, C.; Zhai, X.; McDonald-Maier, K.; Chen, W.H. Spectral analysis and mapping of blackgrass weed by leveraging machine learning and UAV multispectral imagery. Comput. Electron. Agric. 2022, 192, 106621.

- Zisi, T.; Alexandridis, T.K.; Kaplanis, S.; Navrozidis, I.; Tamouridou, A.-A.; Lagopodi, A.; Moshou, D.; Polychronos, V. Incorporating Surface Elevation Information in UAV Multispectral Images for Mapping Weed Patches. J. Imaging 2018, 4, 132.

- Tao, T.; Wei, X. STBNA-YOLOv5: An Improved YOLOv5 Network for Weed Detection in Rapeseed Field. Agriculture 2025, 15, 22.

- Zhao, Y.; Zhang, X.; Sun, J.; Yu, T.; Cai, Z.; Zhang, Z.; Mao, H. Low-cost lettuce height measurement based on depth vision and lightweight instance segmentation model. Agriculture 2024, 14, 1596.

- Wang, Y.; Zhang, X.; Ma, G.; Du, X.; Shaheen, N.; Mao, H. Recognition of weeds at asparagus fields using multi-feature fusion and backpropagation neural network. Int. J. Agric. Biol. Eng. 2021, 14, 190–198.

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Wu, X.; Zhuang, H.; Li, H. Research on improved YOLOx weed detection based on lightweight attention module. Crop Prot. 2024, 177, 106563.

- Sampurno, R.M.; Liu, Z.; Abeyrathna, R.M.R.D.; Ahamed, T. Intrarow Uncut Weed Detection Using You-Only-Look-Once Instance Segmentation for Orchard Plantations. Sensors 2024, 24, 893.

- Jin, T.; Liang, K.; Lu, M.; Zhao, Y.; Xu, Y. WeedsSORT: A weed tracking-by-detection framework for laser weeding applications within precision agriculture. Smart Agric. Technol. 2025, 11, 100883.

- Fan, X.; Chai, X.; Zhou, J.; Sun, T. Deep learning based weed detection and target spraying robot system at seedling stage of cotton field. Comput. Electron. Agric. 2023, 214, 108317.

- Xu, Y.; Gao, Z.; Khot, L.; Meng, X.; Zhang, Q. A real-time weed mapping and precision herbicide spraying system for row crops. Sensors 2018, 18, 4245.

- Yang, S.; Cui, Z.; Li, M.; Li, J.; Gao, D.; Ma, F.; Wang, Y. A grapevine trunks and intra-plant weeds segmentation method based on improved Deeplabv3 Plus. Comput. Electron. Agric. 2024, 227, 109568.

- Sabarina, K.; Priya, N. Lowering data dimensionality in big data for the benefit of precision agriculture. Procedia Comput. Sci. 2015, 48, 548–554.

- San Emeterio de la Parte, M.; Lana Serrano, S.; Muriel Elduayen, M.; Martínez-Ortega, J.-F. Spatio-Temporal Semantic Data Model for Precision Agriculture IoT Networks. Agriculture 2023, 13, 360.

| Researcher | Collection Equipment | Image Data | Data Usage | Ref. |
|---|---|---|---|---|
| Cheng et al. | Sony EXMOR 1/2.3 | Cherry tree crown | Canopy detection | [57] |
| Mahmud et al. | Logitech C920 | Apple tree crown | Canopy segmentation | [58] |
| Anagnostis et al. | RX100 II | Leaf diseases and pests | Disease and pest area segmentation | [59] |
| Khan et al. | DJI Spark Camera | Weeds in the strawberry orchard | Weed detection | [60] |
| Zhang et al. | DSC-W170 | Citrus diseases | Disease classification | [61] |
| Liu et al. | Aluratek AWC01F | Weeds in the strawberry orchard | Weed detection | [62] |

| Researcher | Collection Equipment | Image Data | Data Usage | Ref. |
|---|---|---|---|---|
| Sun et al. | Kinect V2 | RGB and depth images of apple trees | Phenotypic analysis of fruit trees | [78] |
| Tong et al. | Realsense D435i | RGB and depth images of apple trees | Pruning point localization of fruit trees | [79] |
| Zhang et al. | Kinect V2 | RGB and depth images of guava | Detection and localization of guava | [80] |
| Wang et al. | Realsense D435 | RGB and depth images of apple trees | Fruit localization and pose detection | [81] |
| Qiu et al. | Microsoft Azure DK | RGB and depth images of tomato plants | Branch and trunk dimension analysis | [82] |
| Sun et al. | Azure Kinect DK AI | RGB and depth images of apple trees | Branch diameter estimation | [83] |

| Product Name | Line Count | Range Capability | Accuracy | Frame Rate |
|---|---|---|---|---|
| Sick LMS111-10100 | 1 | 0.5 m~20 m | ±30 mm | 25 Hz/50 Hz |
| RS-16 LiDAR | 16 | 0.4 m~150 m | ±2 cm | 5 Hz/10 Hz/20 Hz |
| Sick LMS511-20100 PRO | 1 | 0.2 m~80 m | ±12 mm | 25 Hz/35 Hz/50 Hz/75 Hz/100 Hz |
| Helios 16 | 16 | 0.2 m~150 m | 1 cm | 5 Hz/10 Hz/20 Hz |

| Researcher | Collection Equipment | Collected Data | Data Usage | Ref. |
|---|---|---|---|---|
| Underwood et al. | SICK LMS-291 | Apricot tree point cloud data | Orchard yield assessment | [96] |
| Sanz et al. | Sick LMS200 | Pear tree point cloud data | Leaf area density (LAD) calculation | [97] |
| Gu et al. | Sick LMS111-10100 | Apple tree canopy point cloud data | Canopy leaf area calculation | [98] |
| Wang et al. | RoboSense RS-16 | Fruit tree canopy point cloud data | Canopy morphological parameter measurement | [99] |
| Qiu et al. | Riegl LMS Z210ii | Fruit tree point cloud data | Tree structure quantification | [100] |
| Sun et al. | Sick LMS111-10100 | Fruit tree canopy point cloud data | Canopy volume calculation | [101] |

| Researcher | Collection Equipment | Image Data | Data Usage | Ref. |
|---|---|---|---|---|
| Yu et al. | CI-110 | Apple canopy LAI image | Apple orchard leaf area index calculation | [111] |
| Noguera et al. | MicaSense RedEdge-M | Olive tree multispectral data | Nutritional status assessment of olive crops | [112] |
| Zhao et al. | Micro-MCA Snap | Multispectral images of apple tree canopies | Leaf nitrogen content estimation (LNCE) | [113] |
| Sarabia et al. | FLIR C3 | Multispectral images of apple trees | Canopy detection | [114] |

| Researcher | Research Object | Research Method | Research Objective | Ref. |
|---|---|---|---|---|
| Churuvija et al. | Cherry tree canopy | Pose-versatile imaging system | Analysis of canopy structural parameters | [137] |
| Xu et al. | Peach tree canopy | Depth-enhanced object detection algorithm | Canopy detection and precision spraying | [138] |
| Vinci et al. | Hazelnut tree canopy | Point cloud reconstruction + DSM analysis + NDVI fusion | Canopy identification | [139] |
| Zhu et al. | Fruit tree canopy | YOLOv4 | Canopy detection and counting | [140] |
| Bing et al. | Mango tree canopy | SfM modeling + LiDAR point cloud comparative analysis | Canopy parameter extraction and variable spray map generation | [141] |

| Researcher | Research Object | Research Method | Research Objective | Ref. |
|---|---|---|---|---|
| Jin et al. | Field weeds | YOLOv11-TA + SuperPoint + SuperGlue + Adaptive EKF | Weed detection and laser weeding | [165] |
| Fan et al. | Field weeds | Improved Faster R-CNN (CBAM + BiFPN) | Weed detection and precision spraying | [166] |
| Xu et al. | Field weeds | IPSO algorithm + Horizontal histogram | Weed detection and variable spraying | [167] |
| Yang et al. | Vineyard weeds | Improved Deeplabv3 Plus | Weed detection | [168] |

| Technology Type | Low-Cost Solution | High-Cost Solution | Core Factors Affecting Price Difference (Low-Cost → High-Cost) |
|---|---|---|---|
| Monocular Vision | USD 70–USD 420 | USD 1120–USD 4200 | Resolution (60 fps → 200 fps), low-light performance (20 dB → 50 dB) |
| Binocular Vision | USD 350–USD 1120 | USD 2800–USD 7000 | Depth calculation accuracy (5% → 1%), effective coverage (3 m → 20 m) |
| RGB-D | USD 252–USD 840 | USD 2100–USD 5600 | Point cloud density (50 K points → 1 M points) |
| LiDAR | USD 210–USD 1680 | USD 7000–USD 42,000 | Angular resolution (1° → 0.1°), penetration rate (30% → 90%) |
| Multispectral | USD 1120–USD 4900 | USD 8400–USD 35,000 | Number of bands (5 → 12), accuracy (±8% → ±1%) |
| Hyperspectral | USD 1400–USD 11,200 | USD 28,000–USD 140,000 | Spectral channels (50 → 500), sampling speed (1 fps → 100 fps) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zhang, Z.; Jia, W.; Ou, M.; Dong, X.; Dai, S. A Review of Environmental Sensing Technologies for Targeted Spraying in Orchards. Horticulturae 2025, 11, 551. https://doi.org/10.3390/horticulturae11050551
