A Two-Stage Canopy Extraction Method Utilizing Multispectral Images to Enhance the Estimation of Canopy Nitrogen Content in Pear Orchards with Full Grass Cover

Abstract

1. Introduction

2. Materials and Methods

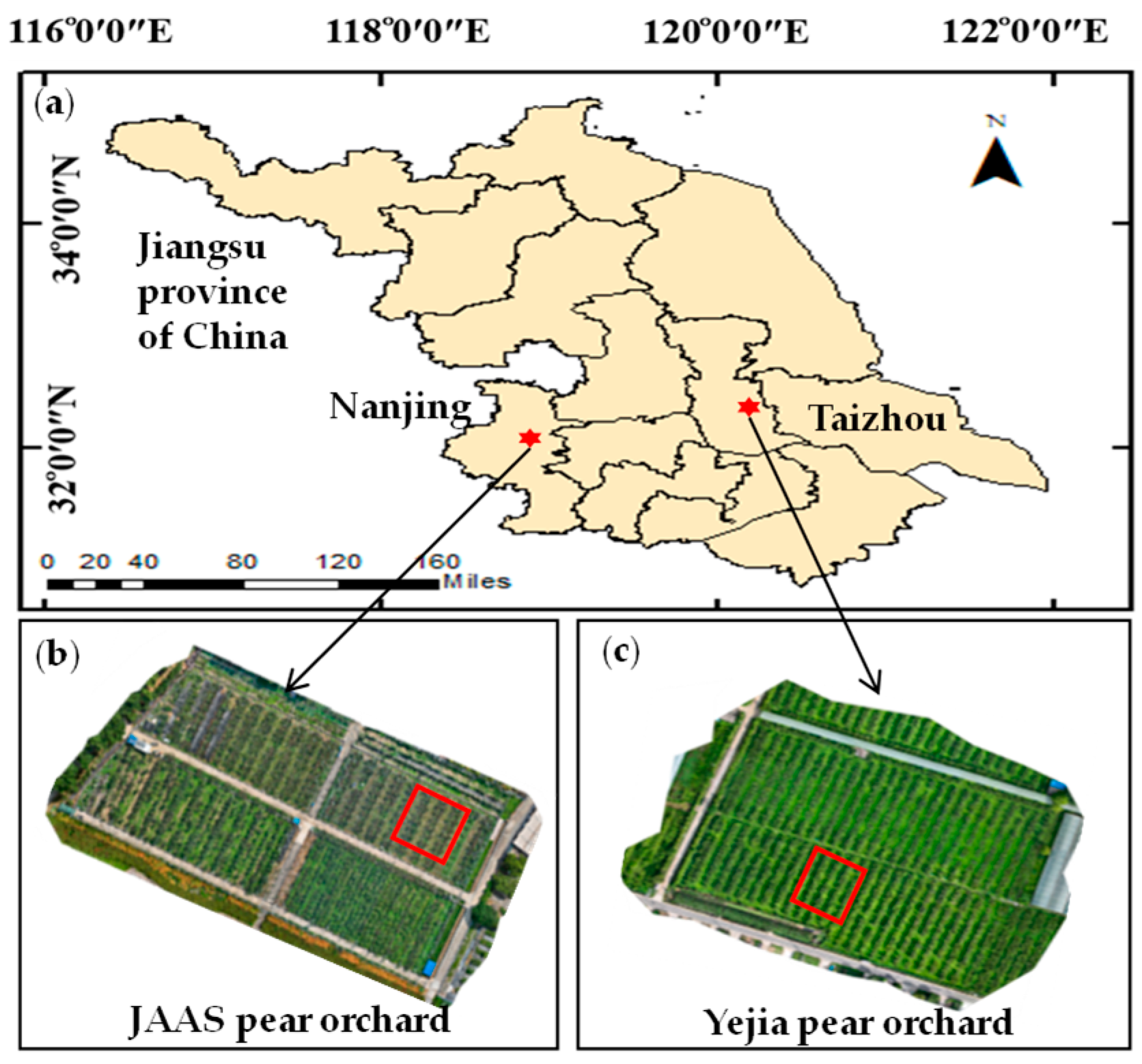

2.1. Study Areas

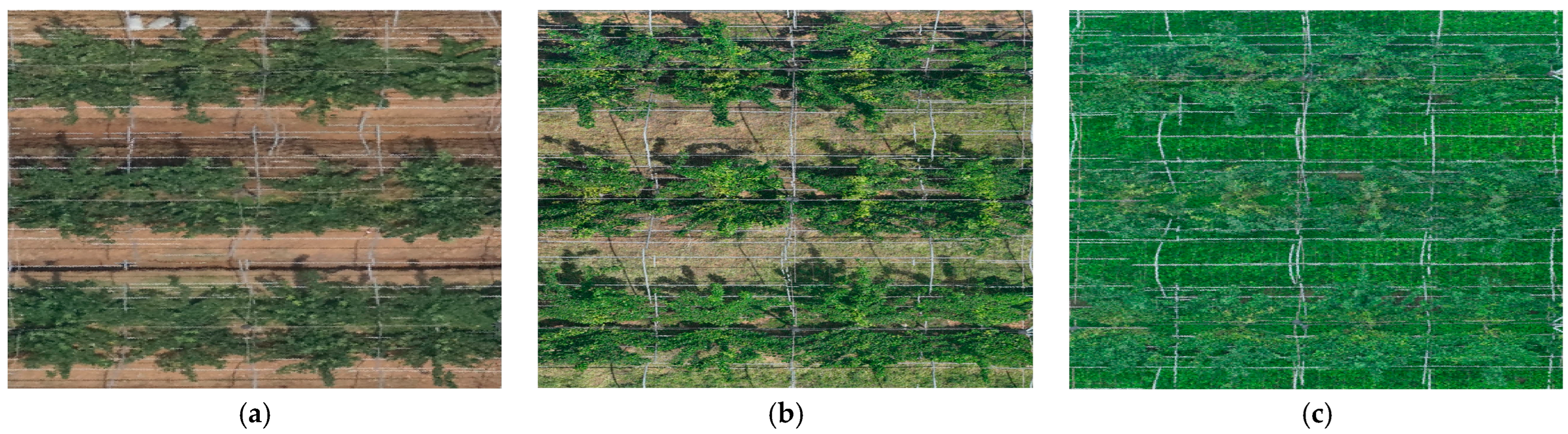

2.2. Data Collection and Preprocessing

2.2.1. Canopy-Scale Data Collection and Preprocessing

2.2.2. Leaf-Scale Data Collection and Preprocessing

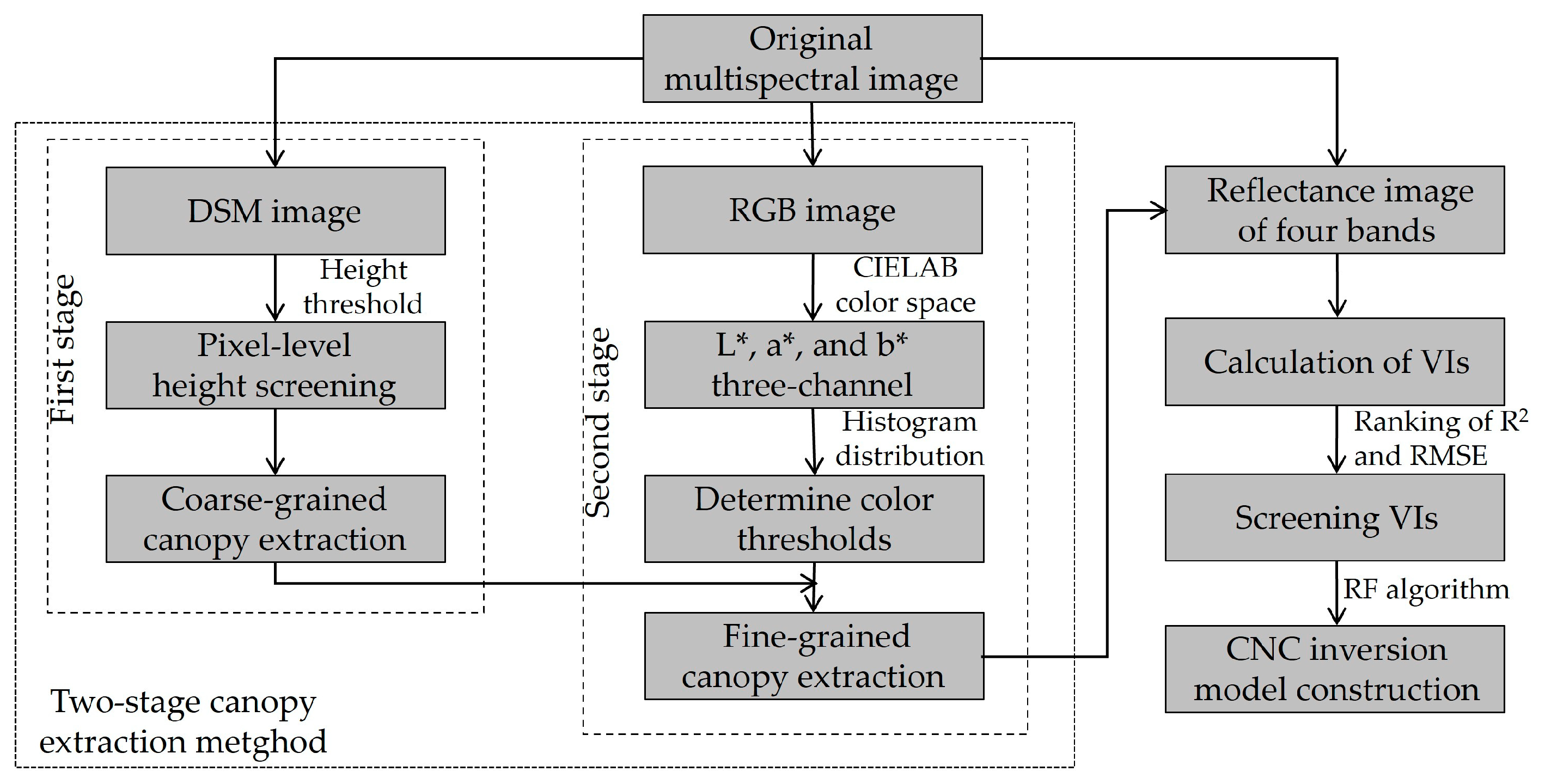

2.3. Principle of Two-Stage Canopy Extraction Method

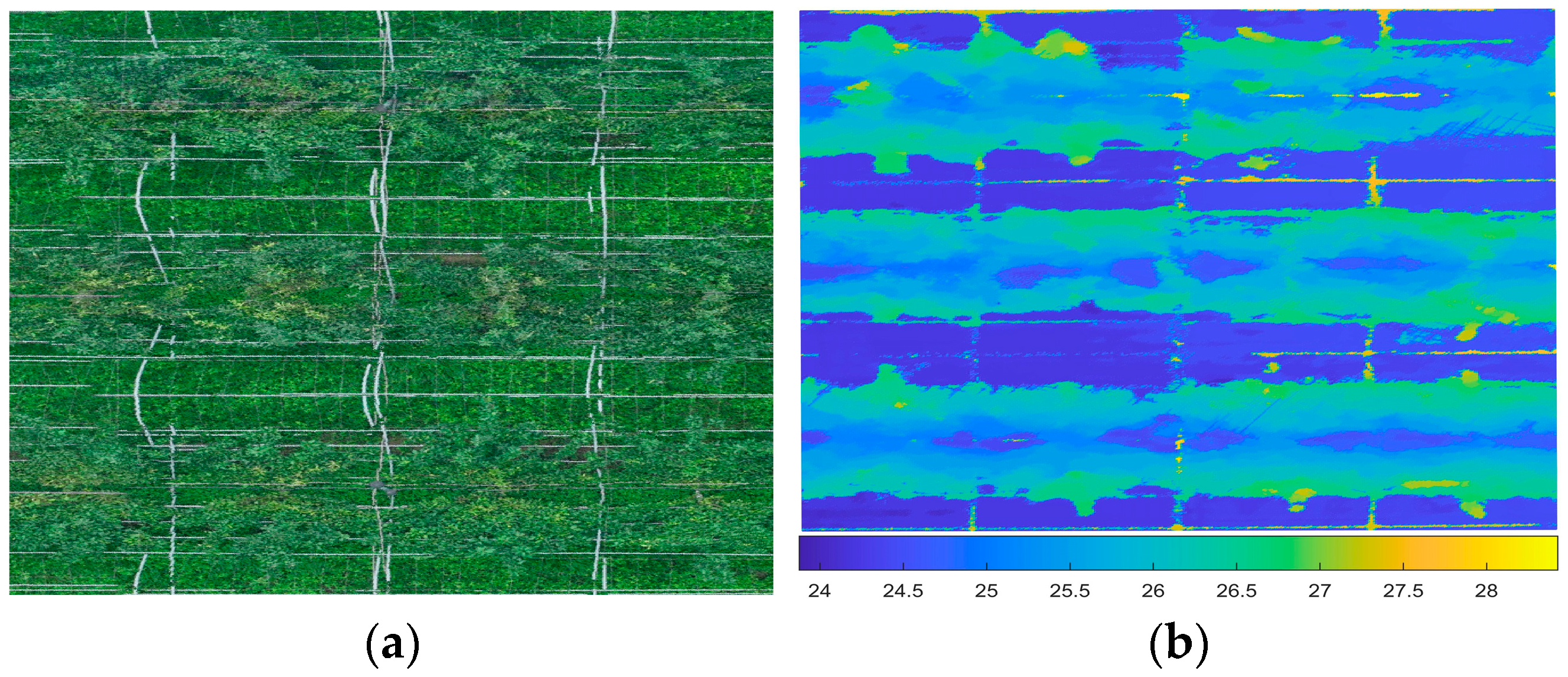

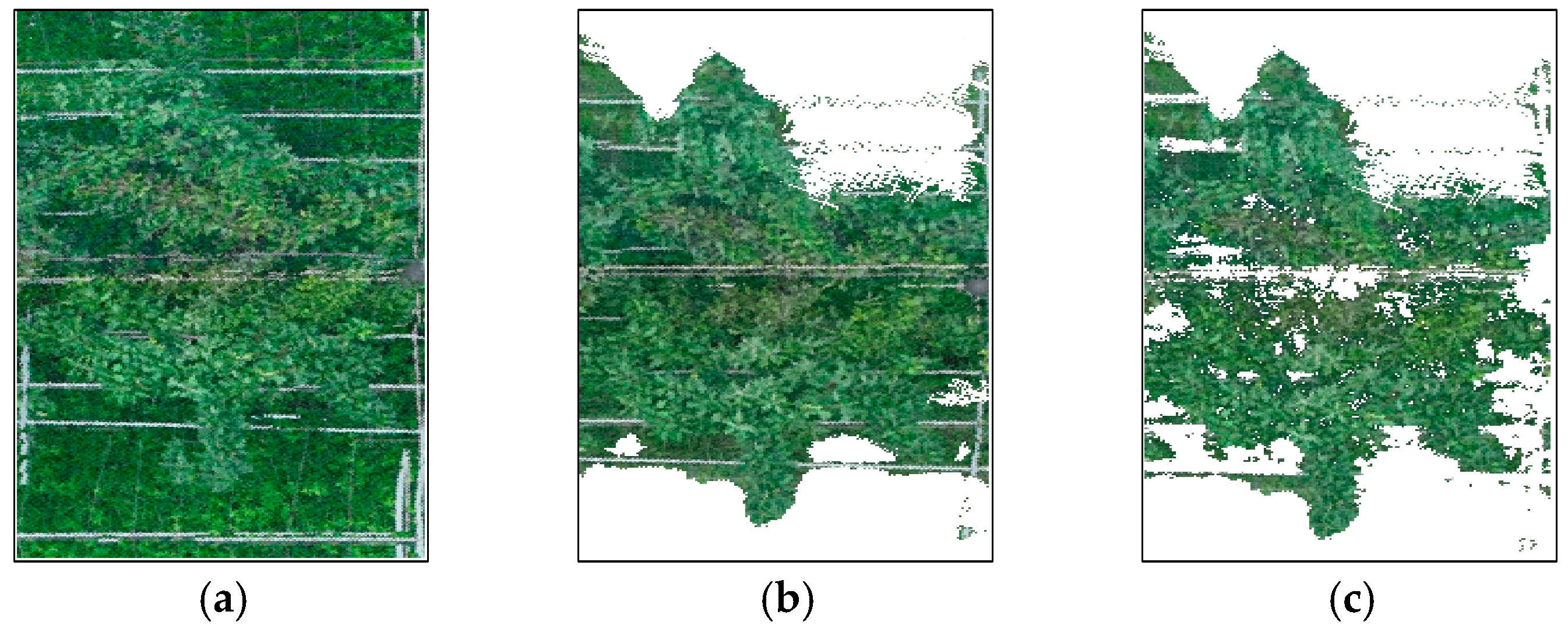

2.3.1. Coarse-Grained Canopy Extraction Based on Height Difference

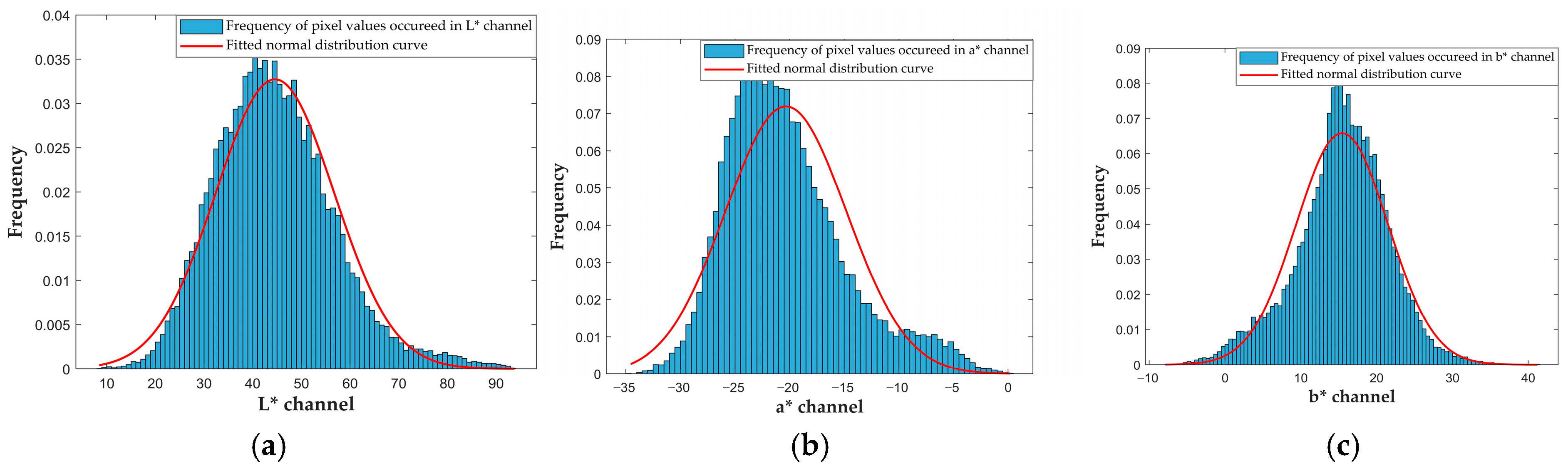
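The heading gives only the principle: crowns are separated from the grass layer by height. The sketch below is a minimal version of that idea. It assumes the height difference is taken between a digital surface model (DSM) and a digital terrain model (DTM) of the same area, and that one fixed cut-off is applied. The function name `coarse_canopy_mask` and the 1.0 m default are hypothetical, not values from the study.

```python
import numpy as np


def coarse_canopy_mask(dsm, dtm, min_height=1.0):
    """Flag pixels standing more than `min_height` metres above the ground.

    dsm, dtm: co-registered elevation rasters in metres with the same shape.
    min_height: hypothetical crown/grass cut-off; tune it for the orchard.
    """
    dsm = np.asarray(dsm, dtype=float)
    dtm = np.asarray(dtm, dtype=float)
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must share the same grid")
    height = dsm - dtm  # height above ground (canopy height model)
    # Comparisons with NaN (no-data) evaluate to False, so such pixels are dropped.
    return height > min_height


# Usage: a 2 x 2 toy scene with one grass pixel (0.3 m) and one no-data pixel.
dsm = np.array([[2.0, 0.3], [3.5, np.nan]])
dtm = np.zeros((2, 2))
print(coarse_canopy_mask(dsm, dtm))
```

Full grass cover can look spectrally similar to leaves while standing far lower than the crowns. A height cut-off of this kind can therefore remove most of the grass before any colour rule is applied. A suitable cut-off depends on grass height and on noise in the DSM.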

2.3.2. Fine-Grained Canopy Extraction Based on Color Thresholds

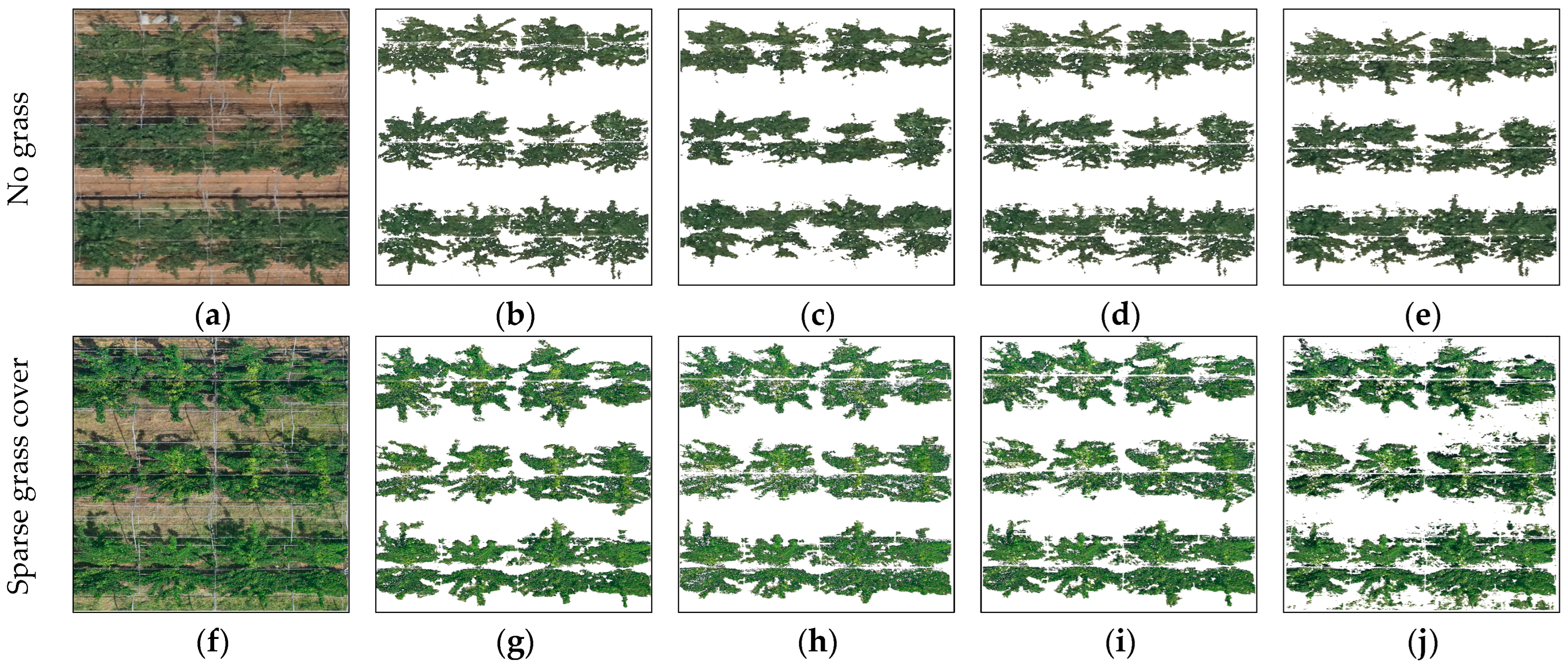
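The colour stage works on the CIELAB channels L*, a* and b*, which are the channels used for the thresholds. The sketch below is a minimal version of this stage under two assumptions. First, an sRGB composite scaled to [0, 1] is available for the conversion. Second, the fine stage keeps only those pixels of the coarse (height-based) mask whose three channels all fall inside per-channel limits. The conversion uses the standard sRGB (D65) to CIELAB formulas. `srgb_to_lab` and `fine_canopy_mask` are hypothetical helpers, not the authors' code.

```python
import numpy as np

# Linear sRGB -> CIE XYZ matrix and D65 reference white (standard values)
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_WHITE = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb):
    """Convert an (..., 3) sRGB array with values in [0, 1] to (..., 3) CIELAB."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB transfer curve to get linear RGB
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE  # XYZ normalised by the reference white
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def fine_canopy_mask(rgb, coarse_mask, limits):
    """Keep coarse-mask pixels whose L*, a*, b* all lie inside `limits`.

    limits: ((L_lo, L_hi), (a_lo, a_hi), (b_lo, b_hi)).
    """
    lab = srgb_to_lab(rgb)
    coarse_mask = np.asarray(coarse_mask, dtype=bool)
    inside = np.ones(coarse_mask.shape, dtype=bool)
    for ch, (lo, hi) in enumerate(limits):
        inside &= (lab[..., ch] >= lo) & (lab[..., ch] <= hi)
    return coarse_mask & inside  # both stages must accept the pixel
```

Combining the two masks with a logical AND is one plausible reading of "two-stage". The height mask discards low grass, and the colour limits then remove non-leaf pixels, such as branches or shadowed soil, that survived the height test.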

2.3.3. Baseline Methods

2.3.4. Accuracy Evaluation for Canopy Extraction

2.4. Construction of CNC Inversion Model

2.4.1. Calculation and Screening of VIs

2.4.2. Establishment and Verification of CNC Inversion Model

2.4.3. Accuracy Evaluation for CNC Inversion

2.5. Technical Routes

3. Results

3.1. Determination of Color Thresholds

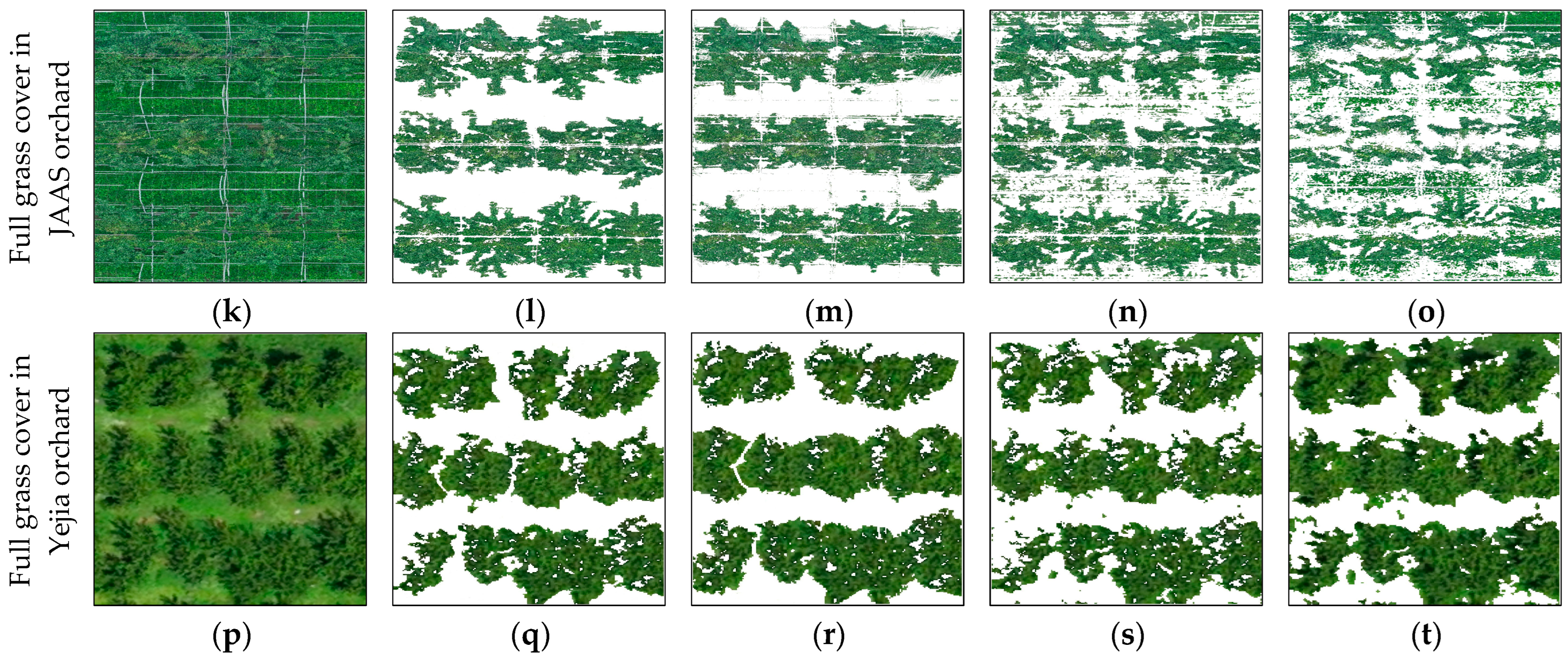

3.2. Results of Canopy Extraction

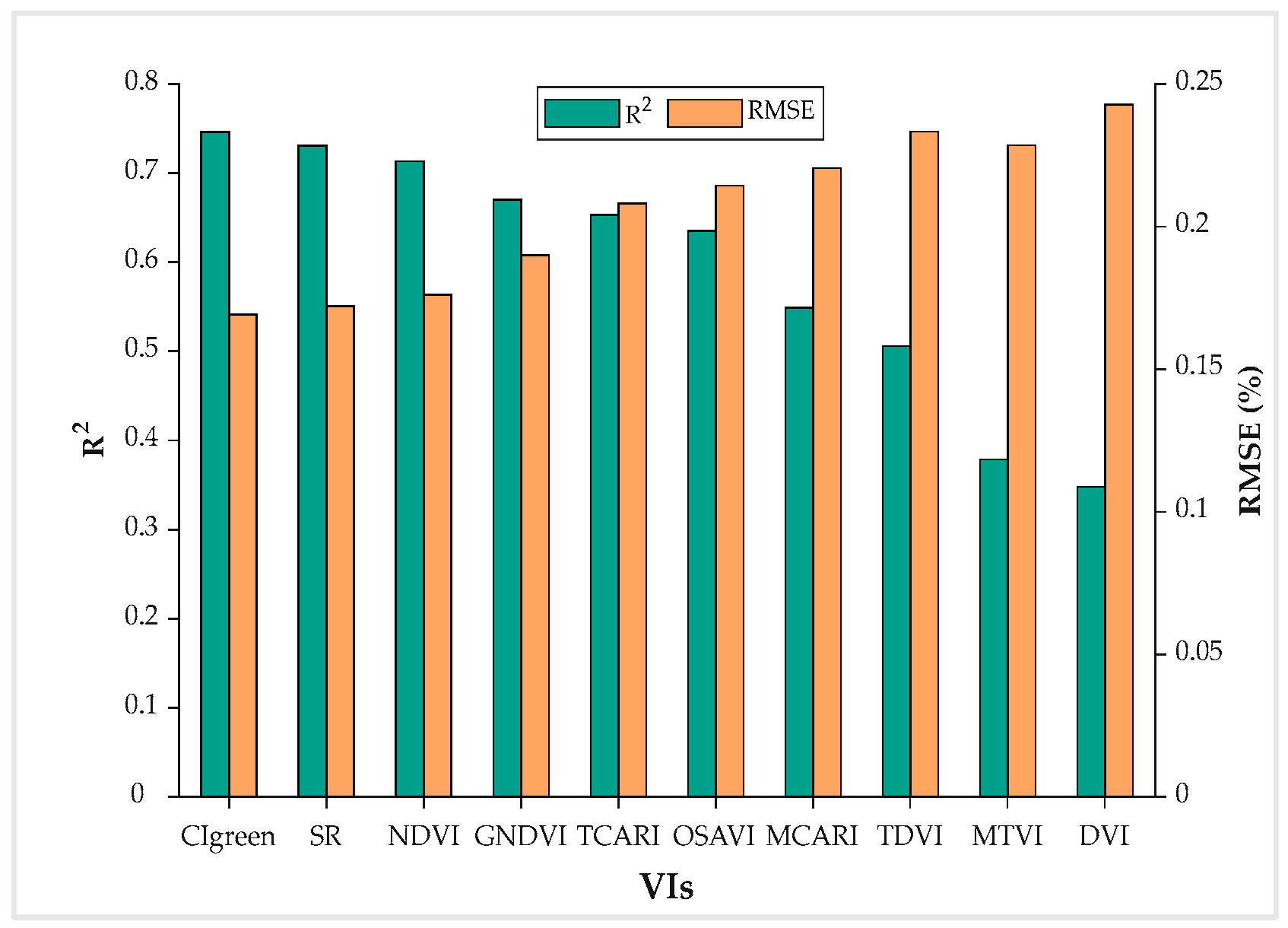

3.3. Result of VI Screening

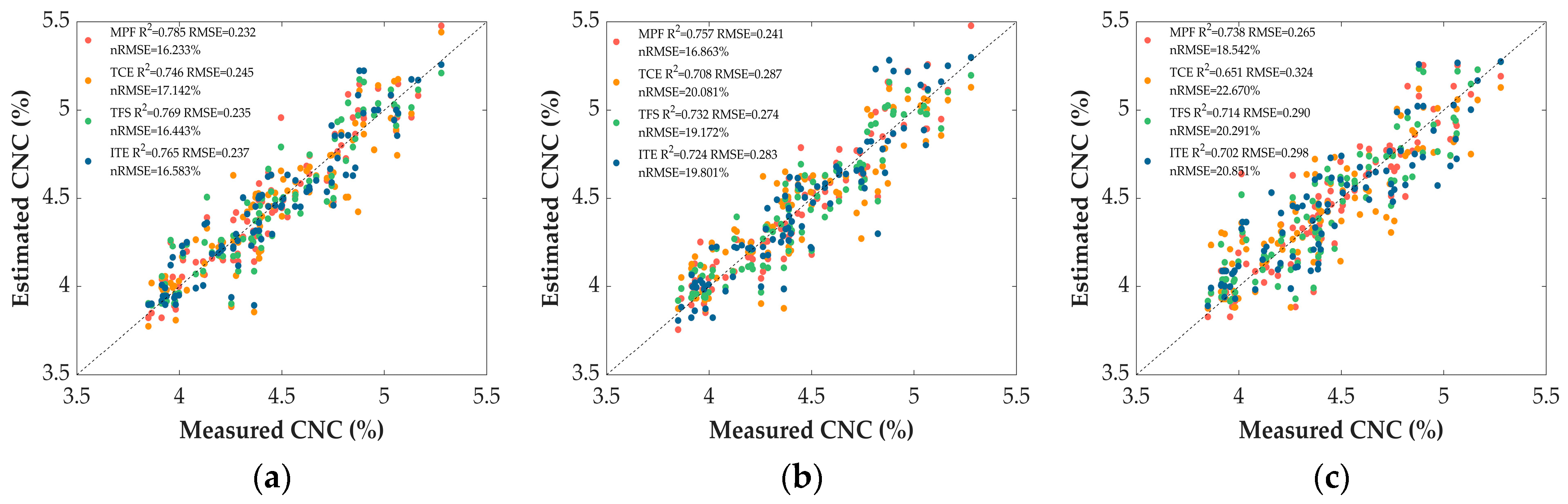

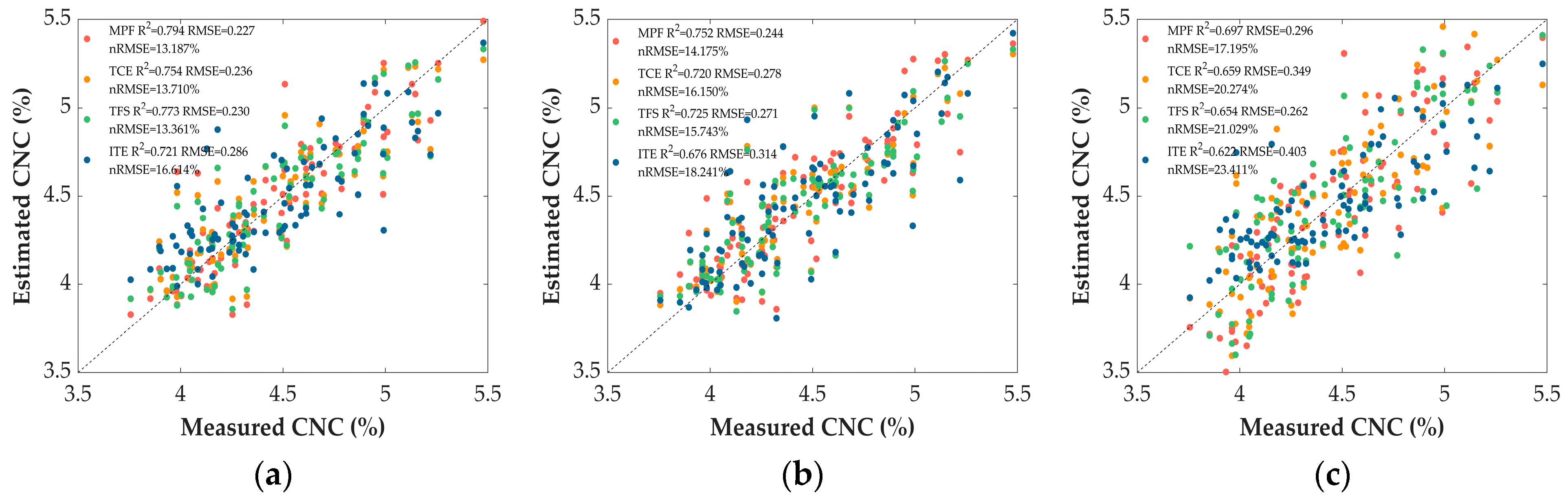

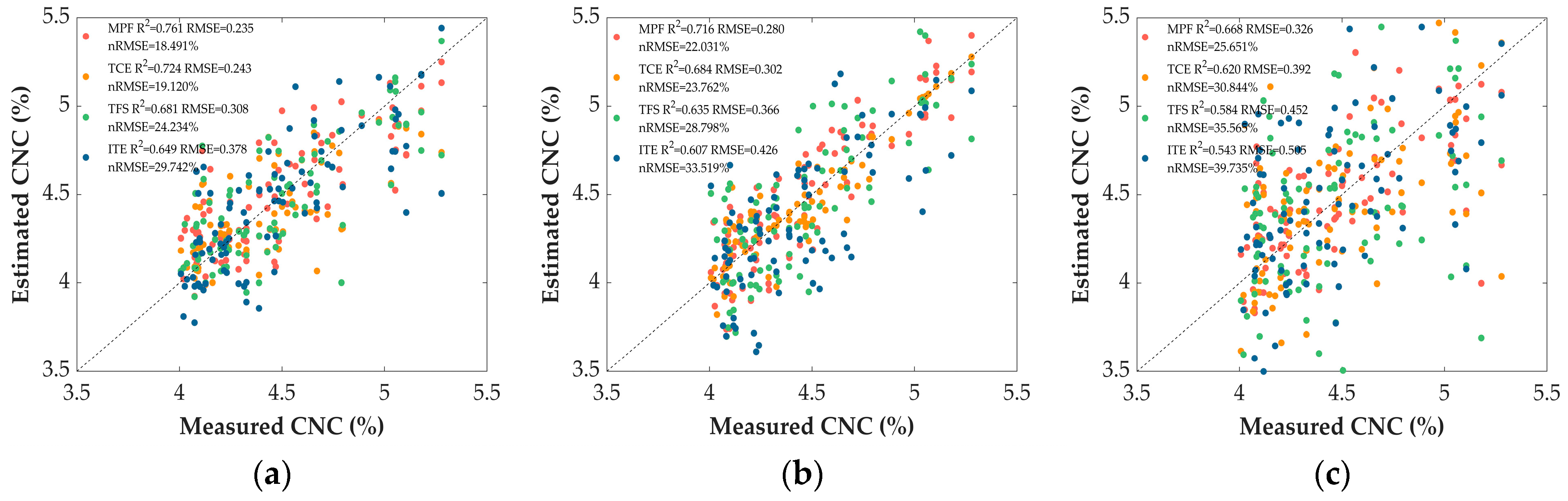

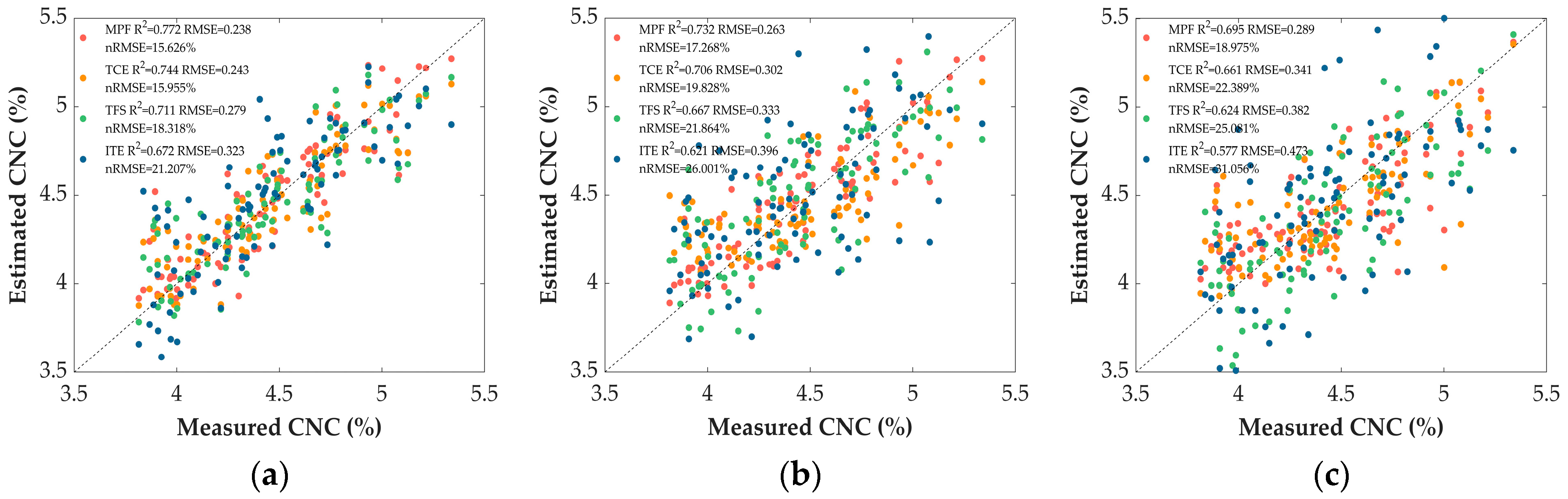

3.4. Comparison of CNC Inversion Models

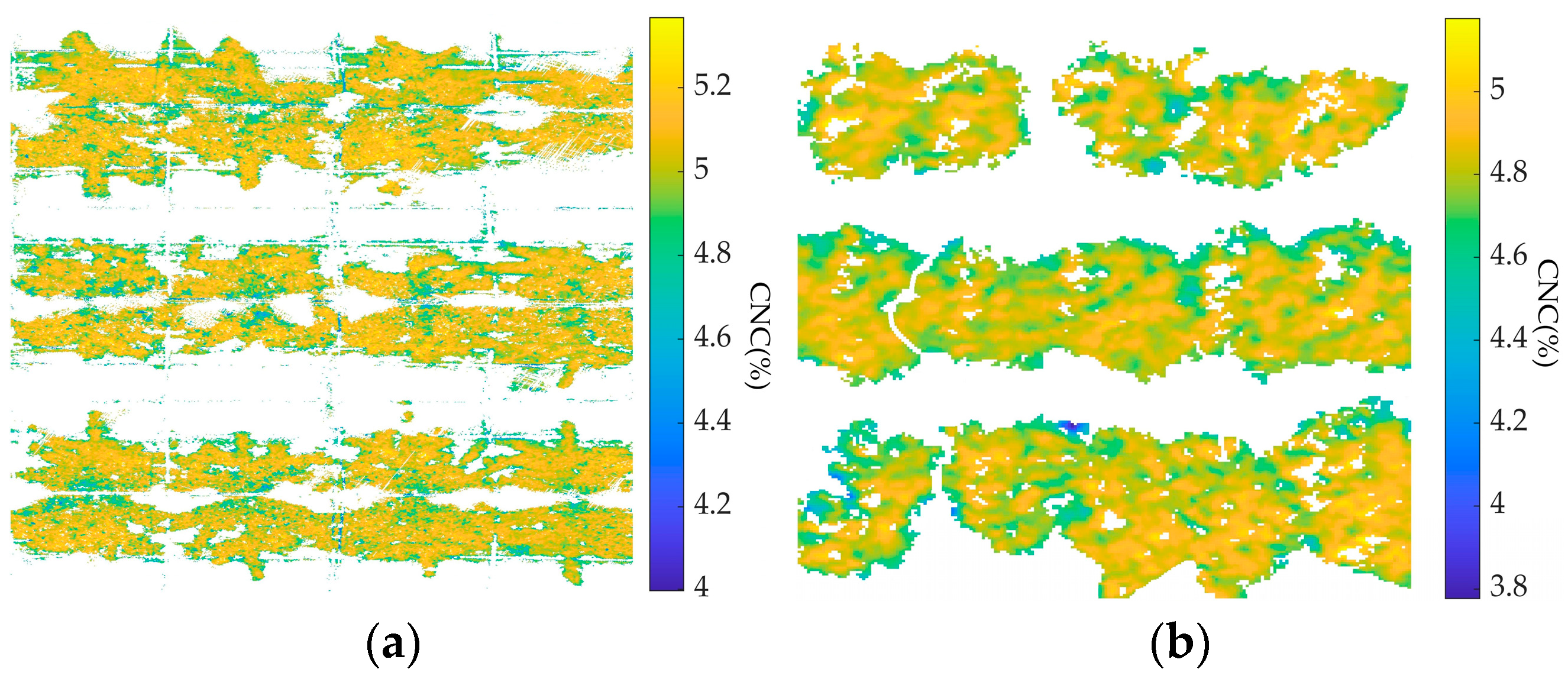

3.5. Spatial Inversion Mapping of CNC

4. Discussion

4.1. Analysis of Coarse-Grained Canopy Extraction Based on Height Difference

4.2. Analysis of Fine-Grained Canopy Extraction Based on Color Thresholds

4.3. Construction of CNC Inversion Model and Visualization of CNC Distribution

4.4. Performance Comparison of Canopy Extraction and CNC Inversion Based on TCE in the Two Orchards

4.5. Future Prospects and Challenges

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, H.; Zhang, J.; Xu, K.; Jiang, X.; Zhu, Y.; Cao, W.; Ni, J. Spectral monitoring of wheat leaf nitrogen content based on canopy structure information compensation. Comput. Electron. Agric. 2021, 190, 106434.

2. Zhang, C.; Zhu, X.; Li, M.; Xue, Y.; Qin, A.; Gao, G.; Wang, M.; Jiang, Y. Utilization of the fusion of ground-space remote sensing data for canopy nitrogen content inversion in apple orchards. Horticulturae 2023, 9, 1085.

3. Saez-Plaza, P.; Navas, M.J.; Wybraniec, S.; Michalowski, T.; Asuero, A.G. An Overview of the Kjeldahl Method of Nitrogen Determination. Part II. Sample Preparation, Working Scale, Instrumental Finish, and Quality Control. Crit. Rev. Anal. Chem. 2013, 43, 224–272.

4. Avioz, D.; Linker, R.; Raveh, E.; Baram, S.; Paz-Kagan, T. Multi-scale remote sensing for sustainable citrus farming: Predicting canopy nitrogen content using UAV-satellite data fusion. Smart Agric. Technol. 2025, 11, 100906.

5. Wang, L.; Chen, S.; Peng, Z.; Huang, J.; Wang, C.; Jiang, H.; Zheng, Q.; Li, D. Phenology effects on physically based estimation of paddy rice canopy traits from UAV hyperspectral imagery. Remote Sens. 2021, 13, 1792.

6. Liao, Z.; Dai, Y.; Wang, H.; Ketterings, Q.; Lu, J.; Zhang, F.; Li, Z.; Fan, J. A double-layer model for improving the estimation of wheat canopy nitrogen content from unmanned aerial vehicle multispectral imagery. J. Integr. Agric. 2023, 22, 2248–2270.

7. Tang, Y.; Li, F.; Hu, Y.; Yu, K. Exploring the optimal wavelet function and wavelet feature for estimating maize leaf chlorophyll content. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4400812.

8. Azadnia, R.; Rajabipour, A.; Jamshidi, B.; Omid, M. New approach for rapid estimation of leaf nitrogen, phosphorus, and potassium contents in apple-trees using Vis/NIR spectroscopy based on wavelength selection coupled with machine learning. Comput. Electron. Agric. 2023, 207, 107746.

9. Hasan, U.; Jia, K.; Wang, L.; Wang, C.; Shen, Z.; Yu, W.; Sun, Y.; Jiang, H.; Zhang, Z.; Guo, J.; et al. Retrieval of leaf chlorophyll contents (LCCs) in litchi based on fractional order derivatives and VCPA-GA-ML algorithms. Plants 2023, 12, 501.

10. Kong, W.; Ma, L.; Ye, H.; Wang, J.; Nie, C.; Chen, B.; Zhou, X.; Huang, W.; Fan, Z. Nondestructive estimation of leaf chlorophyll content in banana based on unmanned aerial vehicle hyperspectral images using image feature combination methods. Front. Plant Sci. 2025, 16, 1536177.

11. Dong, X.; Zhang, Z.; Yu, R.; Tian, Q.; Zhu, X. Extraction of information about individual trees from high-spatial-resolution UAV-acquired images of an orchard. Remote Sens. 2020, 12, 133.

12. Cheng, Z.; Qi, L.; Cheng, Y. Cherry tree crown extraction from natural orchard images with complex backgrounds. Agriculture 2021, 11, 431.

13. Lu, Z.; Qi, L.; Zhang, H.; Wan, J.; Zhou, J. Image segmentation of UAV fruit tree canopy in a natural illumination environment. Agriculture 2022, 12, 1039.

14. Zhang, C.; Chen, Z.; Yang, G.; Xu, B.; Feng, H.; Chen, R.; Qi, N.; Zhang, W.; Zhao, D.; Cheng, J.; et al. Removal of canopy shadows improved retrieval accuracy of individual apple tree crowns LAI and chlorophyll content using UAV multispectral imagery and PROSAIL model. Comput. Electron. Agric. 2024, 221, 108959.

15. Li, Z.; Deng, X.; Lan, Y.; Liu, C.; Qing, J. Fruit tree canopy segmentation from UAV orthophoto maps based on a lightweight improved U-Net. Comput. Electron. Agric. 2024, 217, 108538.

16. Wei, P.; Yan, X.; Yan, W.; Sun, L.; Xu, J.; Yuan, H. Precise extraction of targeted apple tree canopy with YOLO-Fi model for advanced UAV spraying plans. Comput. Electron. Agric. 2024, 226, 109425.

17. Wang, L.; Zhang, R.; Zhang, L.; Yi, T.; Zhang, D.; Zhu, A. Research on individual tree canopy segmentation of Camellia oleifera based on a UAV-LiDAR system. Agriculture 2024, 14, 364.

18. Yang, Y.; Zeng, T.; Li, L.; Fang, J.; Fu, W.; Gu, Y. Canopy extraction of mango trees in hilly and plain orchards using UAV images: Performance of machine learning vs deep learning. Ecol. Inform. 2025, 87, 103101.

19. Chen, L.; Bao, Y.; He, X.; Yang, J.; Wu, Q.; Lv, J. Nature-based accumulation of organic carbon and nitrogen in citrus orchard soil with grass coverage. Soil Tillage Res. 2025, 248, 106419.

20. Wang, Z.; Liu, R.; Fu, L.; Tao, S.; Bao, J. Effects of orchard grass on soil fertility and nutritional status of fruit trees in Korla fragrant pear orchard. Horticulturae 2023, 9, 903.

21. Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

22. Gong, Y.; Liu, G.; Xue, Y.; Li, R.; Meng, L. A survey on dataset quality in machine learning. Inf. Softw. Technol. 2023, 162, 107268.

23. Shen, W.; Peng, Z.; Wang, X.; Wang, H.; Cen, J.; Jiang, D.; Xie, L.; Yang, X.; Tian, Q. A survey on label-efficient deep image segmentation: Bridging the gap between weak supervision and dense prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9284–9305.

24. Polat, N.; Memduhoğlu, A.; Kaya, Y. Accurate Terrain Modeling After Dark: Evaluating Nighttime Thermal UAV-Derived DSMs. Drones 2025, 9, 430.

25. Xi, R.; Gu, Y.; Zhang, X.; Ren, Z. Nitrogen monitoring and inversion algorithms of fruit trees based on spectral remote sensing: A deep review. Front. Plant Sci. 2024, 15, 1489151.

26. Wrat, G.; Ranjan, P.; Mishra, S.K.; Jose, J.T.; Das, J. Neural network-enhanced internal leakage analysis for efficient fault detection in heavy machinery hydraulic actuator cylinders. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2025, 239, 1021–1031.

27. Li, W.; Zhu, X.; Yu, X.; Li, M.; Tang, X.; Zhang, J.; Xue, Y.; Zhang, C.; Jiang, Y. Inversion of nitrogen concentration in apple canopy based on UAV hyperspectral images. Sensors 2022, 22, 3503.

28. Li, M.X.; Zhu, X.C.; Li, W.; Tang, X.Y.; Yu, X.Y.; Jiang, Y.M. Retrieval of Nitrogen Content in Apple Canopy Based on Unmanned Aerial Vehicle Hyperspectral Images Using a Modified Correlation Coefficient Method. Sustainability 2022, 14, 1992.

29. Jia, Y.; Li, Y.; He, J.; Biswas, A.; Siddique, K.H.; Hou, Z.; Luo, H.; Wang, C.; Xie, X. Enhancing precision nitrogen management for cotton cultivation in arid environments using remote sensing techniques. Field Crops Res. 2025, 321, 109689.

30. Donmez, C.; Villi, O.; Berberoglu, S.; Cilek, A. Computer vision-based citrus tree detection in a cultivated environment using UAV imagery. Comput. Electron. Agric. 2021, 187, 106273.

31. Din, M.; Zheng, W.; Rashid, M.; Wang, S.; Shi, Z. Evaluating hyperspectral vegetation indices for leaf area index estimation of Oryza sativa L. at diverse phenological stages. Front. Plant Sci. 2017, 8, 820.

32. Cheng, J.; Yang, H.; Qi, J.; Sun, Z.; Han, S.; Feng, H.; Jiang, J.; Xu, W.; Li, Z.; Yang, G.; et al. Estimating canopy-scale chlorophyll content in apple orchards using a 3D radiative transfer model and UAV multispectral imagery. Comput. Electron. Agric. 2022, 202, 107401.

33. Gribble, C.; Ize, T.; Kensler, A.; Wald, I.; Parker, S. A coherent grid traversal approach to visualizing particle-based simulation data. IEEE Trans. Vis. Comput. Graph. 2007, 13, 758–768.

34. Lillotte, T.; Joester, M.; Frindt, B.; Berghaus, A.; Lammens, R.F.; Wagner, K.G. UV–VIS spectra as potential process analytical technology (PAT) for measuring the density of compressed materials: Evaluation of the CIELAB color space. Int. J. Pharm. 2021, 603, 120668.

35. Zhang, Y.; Li, X.; Wang, M.; Xu, T.; Huang, K.; Sun, Y.; Yuan, Q.; Lei, X.; Qi, Y.; Lv, X. Early detection and lesion visualization of pear leaf anthracnose based on multi-source feature fusion of hyperspectral imaging. Front. Plant Sci. 2024, 15, 1461855.

36. Sabha, M.; Saffarini, M. Selecting optimal k for K-means in image segmentation using GLCM. Multimed. Tools Appl. 2024, 83, 55587–55603.

37. Zhao, Z.; Lu, C.; Tonooka, H.; Wu, L.; Lin, H.; Jiang, X. Dynamic monitoring of vegetation phenology on the Qinghai-Tibetan plateau from 2001 to 2020 via the MSAVI and EVI. Sci. Rep. 2025, 15, 25698.

38. Rouse, J. Monitoring vegetation systems in the great plains with ERTS. In Third NASA Earth Resources Technology Satellite Symposium; NASA: Washington, DC, USA, 1973; Volume 1, pp. 309–317.

39. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

40. Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

41. Gitelson, A.; Kaufman, Y.; Merzlyak, M. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

42. Carter, G. Ratios of leaf reflectances in narrow wavebands as indicators of plant stress. Int. J. Remote Sens. 1994, 15, 697–703.

43. Gitelson, A.; Gritz, Y.; Merzlyak, M. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

44. Daughtry, C.; Walthall, C.; Kim, M.; De Colstoun, E.; McMurtrey, J.E., III. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.

45. De Grave, C.; Verrelst, J.; Morcillo-Pallarés, P.; Pipia, L.; Rivera-Caicedo, J.P.; Amin, E.; Belda, S.; Moreno, J. Quantifying vegetation biophysical variables from the Sentinel-3/FLEX tandem mission: Evaluation of the synergy of OLCI and FLORIS data sources. Remote Sens. Environ. 2020, 251, 112101.

46. Darvishzadeh, R.; Skidmore, A.; Schlerf, M.; Atzberger, C. Inversion of a radiative transfer model for estimating vegetation LAI and chlorophyll in a heterogeneous grassland. Remote Sens. Environ. 2008, 112, 2592–2604.

47. Haboudane, D.; Miller, J.; Pattey, E.; Zarco-Tejada, P.; Strachan, I. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

48. Hosmer, D.; Lemeshow, S. Confidence interval estimation of interaction. Epidemiology 1992, 3, 452–456.

49. Shi, C.; Zhu, J.; Shen, Y.; Luo, S.; Zhu, H.; Song, R. Off-policy confidence interval estimation with confounded Markov decision process. J. Am. Stat. Assoc. 2024, 119, 273–284.

50. Rao, L.; Yuan, Y.; Shen, X.; Yu, G.; Chen, X. Designing nanotheranostics with machine learning. Nat. Nanotechnol. 2024, 19, 1769–1781.

51. Mienye, I.; Swart, T. A comprehensive review of deep learning: Architectures, recent advances, and applications. Information 2024, 15, 755.

52. Chen, P.; Liu, S.; Xu, J.; Liu, M. Stability control of a wheel-legged mobile platform used in hilly orchards. Biosyst. Eng. 2025, 256, 104195.

53. Malounas, I.; Lentzou, D.; Xanthopoulos, G.; Fountas, S. Testing the suitability of automated machine learning, hyperspectral imaging and CIELAB color space for proximal in situ fertilization level classification. Smart Agric. Technol. 2024, 8, 100437.

54. Wang, B.; Gu, S.; Wang, J.; Chen, B.; Wen, W.; Guo, X.; Zhao, C. Maximizing the radiation use efficiency by matching the leaf area and leaf nitrogen vertical distributions in a maize canopy: A simulation study. Plant Phenomics 2024, 6, 0217.

| Reference | Year | Fruit Species | Extraction Method | Grass Cover Density |
|---|---|---|---|---|
| [11] | 2020 | Apple and Pear | IT | Sparse cover |
| [12] | 2021 | Cherry | IT | No grass |
| [13] | 2022 | Apple | IT | No grass |
| [14] | 2024 | Apple | IT | No grass |
| [15] | 2024 | Lychee | TC | Sparse cover |
| [16] | 2024 | Apple | IT & TC | Sparse cover |
| [17] | 2024 | Sasanqua | TC | Sparse cover |
| [18] | 2025 | Mango | TC | Sparse cover |

| Parameters | Max | Min | Mean | Standard Deviation |
|---|---|---|---|---|
| LNC (%) | 2.032 | 1.374 | 1.647 | 0.263 |
| LAI (m²/m²) | 3.677 | 1.443 | 2.590 | 0.542 |
| CNC (%) | 5.742 | 3.357 | 4.468 | 0.475 |

| VIs | Equation |
|---|---|
| Normalized Difference Vegetation Index (NDVI) [38] | |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) [39] | |
| Difference Vegetation Index (DVI) [40] | |
| Green Normalized Difference Vegetation Index (GNDVI) [41] | |
| Simple Ratio (SR) [42] | |
| Green Chlorophyll Index (CIgreen) [43] | |
| Modified Chlorophyll Absorption Ratio Index (MCARI) [44] | |
| Transformed Chlorophyll Absorption in Reflectance Index (TCARI) [45] | |
| Transformed Difference Vegetation Index (TDVI) [46] | |
| Modified Triangular Vegetation Index (MTVI) [47] | |
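The commonly published forms of the listed indices can be written in terms of green (G), red (R), red-edge (RE) and near-infrared (NIR) reflectance. The sketch below computes them. The band assignments are assumptions. For MTVI it uses MTVI1 from the cited Haboudane et al. paper, which also defines MTVI2, so the study's exact variant and band choices may differ. `vegetation_indices` is a hypothetical helper.

```python
import numpy as np


def vegetation_indices(g, r, re, nir):
    """Textbook forms of the indices in the table, from reflectance arrays.

    g, r, re, nir: green, red, red-edge and near-infrared reflectance
    (scalars or arrays of the same shape). Band choices are assumptions.
    """
    g, r, re, nir = (np.asarray(x, dtype=float) for x in (g, r, re, nir))
    return {
        "NDVI": (nir - r) / (nir + r),
        "OSAVI": (nir - r) / (nir + r + 0.16),
        "DVI": nir - r,
        "GNDVI": (nir - g) / (nir + g),
        "SR": nir / r,
        "CIgreen": nir / g - 1,
        "MCARI": ((re - r) - 0.2 * (re - g)) * (re / r),
        "TCARI": 3 * ((re - r) - 0.2 * (re - g) * (re / r)),
        "TDVI": 1.5 * (nir - r) / np.sqrt(nir ** 2 + r + 0.5),
        "MTVI1": 1.2 * (1.2 * (nir - g) - 2.5 * (r - g)),
    }


# Usage with illustrative (not measured) reflectances for one canopy pixel
print(vegetation_indices(g=0.08, r=0.05, re=0.2, nir=0.4)["NDVI"])
```

Applied to whole band rasters after masking, each index is usually averaged over the canopy pixels of a tree. This is why removing grass pixels first matters for the index values.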

| Channel | μ | σ | Lower Limit Threshold (μ − 2σ) | Upper Limit Threshold (μ + 2σ) |
|---|---|---|---|---|
| L* | 45.672 | 11.023 | 23.626 | 67.718 |
| a* | −19.705 | 5.864 | −31.433 | −7.977 |
| b* | 14.757 | 6.278 | 2.201 | 27.313 |
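Each limit in the table is the channel mean plus or minus two standard deviations. The sketch below reproduces that arithmetic and shows how such limits could be estimated from canopy sample pixels. The study does not state whether it used the sample or the population standard deviation, so `ddof=1` is an assumption. Both function names are hypothetical.

```python
import numpy as np


def limits_from_stats(mu, sigma, k=2.0):
    """Return one (lower, upper) row per channel: mu - k*sigma, mu + k*sigma."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    return np.stack([mu - k * sigma, mu + k * sigma], axis=1)


def limits_from_samples(lab_samples, k=2.0):
    """Estimate limits from an (n, 3) array of canopy L*, a*, b* samples."""
    lab_samples = np.asarray(lab_samples, dtype=float)
    mu = lab_samples.mean(axis=0)
    sigma = lab_samples.std(axis=0, ddof=1)  # sample SD; ddof is an assumption
    return limits_from_stats(mu, sigma, k)


# Channel statistics (L*, a*, b*) reported in the table above
table_limits = limits_from_stats([45.672, -19.705, 14.757], [11.023, 5.864, 6.278])
print(table_limits)
```

If a channel were normally distributed, μ ± 2σ would span about 95.4% of canopy values. Under that assumption, roughly 4.6% of canopy pixels would fall outside any single channel's limits.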

| Scenarios | Methods | Accuracy (%) | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|---|---|
| No grass | TCE | 92.406 | 95.282 | 92.348 | 93.792 |
| | TFS | 95.344 | 97.127 | 95.303 | 96.206 |
| | ITE | 94.647 | 96.980 | 94.319 | 95.631 |
| Sparse grass cover | TCE | 93.859 | 97.306 | 93.201 | 95.209 |
| | TFS | 96.374 | 98.270 | 96.148 | 97.197 |
| | ITE | 93.064 | 94.616 | 94.791 | 94.703 |
| Full grass cover in JAAS orchard | TCE | 91.725 | 95.789 | 91.284 | 93.482 |
| | TFS | 82.361 | 87.121 | 85.514 | 86.310 |
| | ITE | 73.205 | 80.091 | 78.216 | 79.142 |
| Full grass cover in Yejia orchard | TCE | 91.555 | 93.453 | 93.392 | 92.919 |
| | TFS | 86.806 | 91.314 | 86.209 | 88.688 |
| | ITE | 81.475 | 86.953 | 81.327 | 84.046 |
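The four metrics in the table are standard pixel-level confusion-matrix measures. The sketch below assumes predicted and reference canopy masks of equal shape, and assumes that accuracy counts both canopy and background pixels. The function names are hypothetical. F1 is the harmonic mean of precision and recall, so it can be checked from those two columns. For example, the no-grass TCE row gives 2 × 92.348 × 95.282 / (92.348 + 95.282) ≈ 93.792.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-level accuracy, recall, precision and F1 (in %) for boolean masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)    # canopy predicted as canopy
    tn = np.sum(~pred & ~truth)  # background predicted as background
    fp = np.sum(pred & ~truth)   # background (e.g. grass) predicted as canopy
    fn = np.sum(~pred & truth)   # canopy missed
    accuracy = (tp + tn) / pred.size
    recall = tp / (tp + fn)      # NaN (with a warning) if truth has no canopy
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    scores = {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}
    return {name: 100 * float(value) for name, value in scores.items()}


def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


print(round(f1_from_pr(92.348, 95.282), 3))
```

Because grass wrongly kept as canopy counts as a false positive, it lowers precision directly. This makes precision the column most sensitive to grass cover.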

| Indicator | CIgreen | SR | NDVI | GNDVI | TCARI | OSAVI | MCARI | TDVI | MTVI | DVI |
|---|---|---|---|---|---|---|---|---|---|---|
| R² | 0.746 | 0.731 | 0.713 | 0.670 | 0.653 | 0.635 | 0.549 | 0.506 | 0.379 | 0.348 |
| RMSE | 0.169 | 0.172 | 0.176 | 0.190 | 0.208 | 0.214 | 0.220 | 0.233 | 0.229 | 0.243 |
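One common way to screen single indices is to fit CNC against each VI with a least-squares line and then compare R² and RMSE. The sketch below follows that approach. The model form behind the table is not specified here, so this is an assumption. `fit_single_vi` and `rank_indices` are hypothetical. For a straight-line fit with an intercept, R² equals the squared Pearson correlation between the VI and CNC.

```python
import numpy as np


def fit_single_vi(vi, cnc):
    """Least-squares line cnc ~ slope * vi + intercept, returning R^2 and RMSE."""
    vi = np.asarray(vi, dtype=float)
    cnc = np.asarray(cnc, dtype=float)
    slope, intercept = np.polyfit(vi, cnc, 1)
    pred = slope * vi + intercept
    ss_res = np.sum((cnc - pred) ** 2)
    ss_tot = np.sum((cnc - cnc.mean()) ** 2)
    r2 = 1 - ss_res / ss_tot
    rmse = np.sqrt(np.mean((cnc - pred) ** 2))
    return slope, intercept, r2, rmse


def rank_indices(vi_table, cnc):
    """Return index names sorted by descending R^2.

    vi_table: dict mapping an index name to its per-sample values.
    """
    r2 = {name: fit_single_vi(values, cnc)[2] for name, values in vi_table.items()}
    return sorted(r2, key=r2.get, reverse=True)


# Usage with toy data (not the study's samples)
print(rank_indices({"a": [0, 1, 2, 3], "b": [3, 0, 2, 1]}, [1, 3, 5, 7]))
```

If R² and RMSE are computed on a separate validation set rather than on the fitting samples, the two rankings need not agree exactly.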

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Y.; Huang, K.; Yuan, Q.; Lei, X.; Lv, X. A Two-Stage Canopy Extraction Method Utilizing Multispectral Images to Enhance the Estimation of Canopy Nitrogen Content in Pear Orchards with Full Grass Cover. Horticulturae 2025, 11, 1419. https://doi.org/10.3390/horticulturae11121419
