Determination of Non-Digestible Parts in Dairy Cattle Feces Using U-NET and F-CRN Architectures

Simple Summary

Abstract

1. Introduction

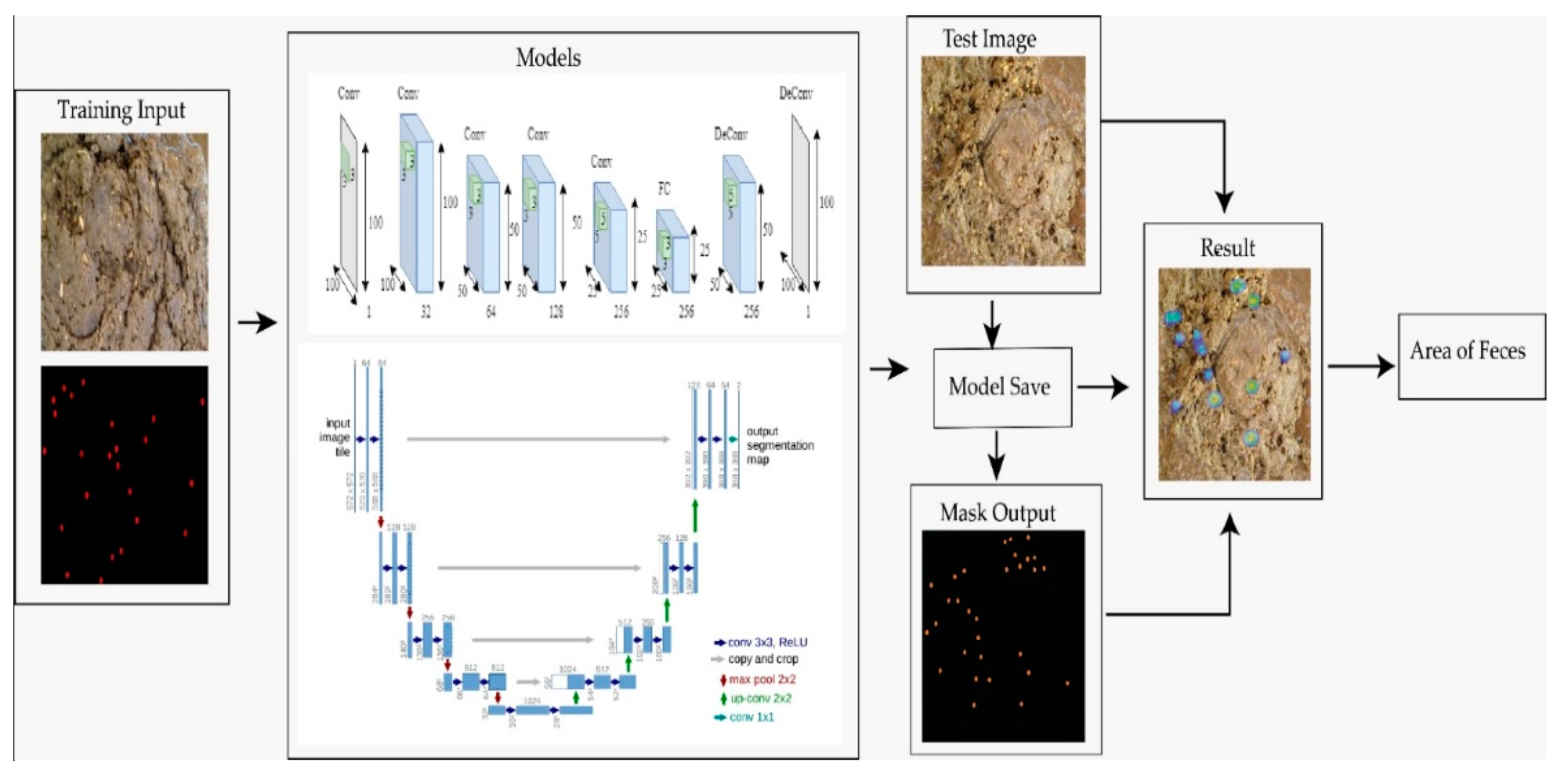

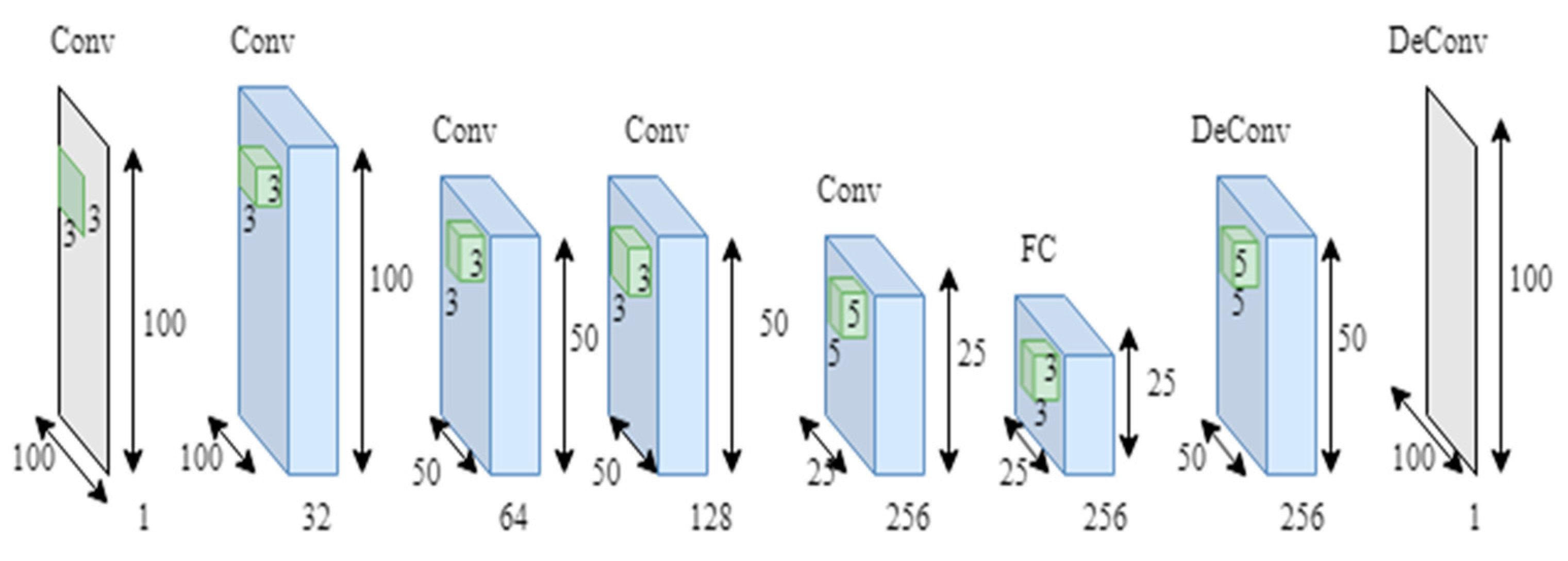

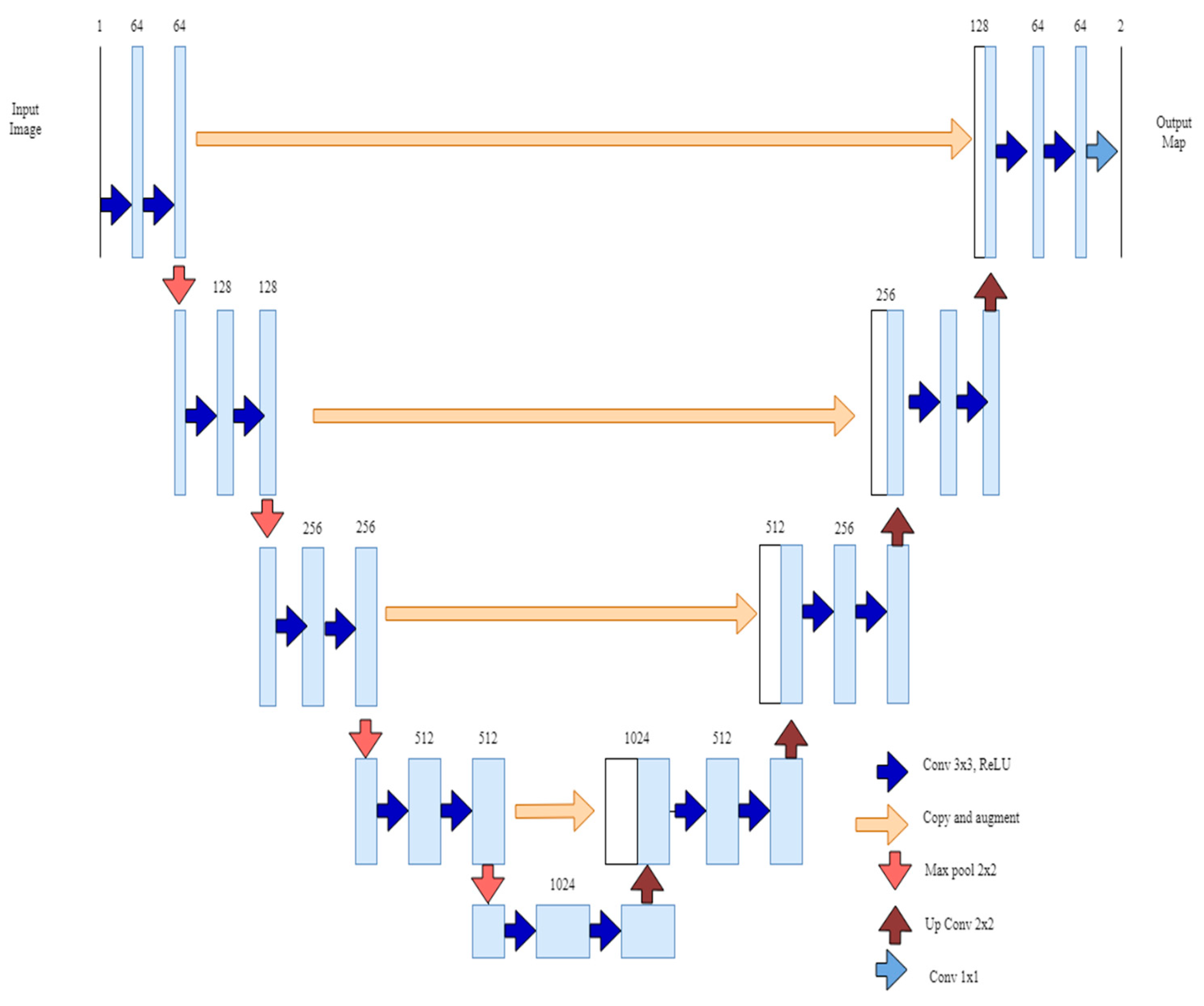

2. Materials and Methods

2.1. Material

2.2. Method

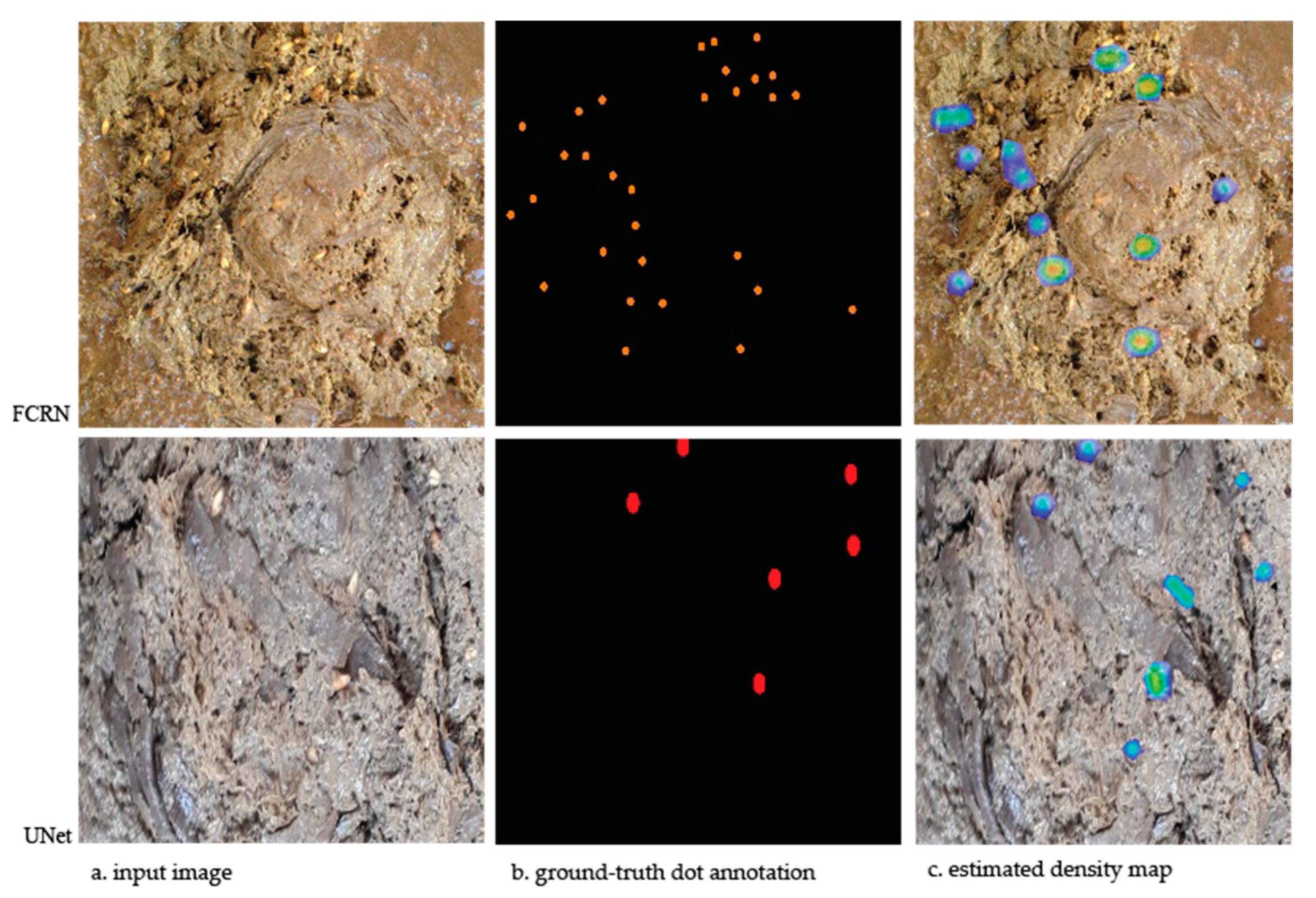

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Model Summary for FCRN

| Layer (Type) | Output Shape | Param # |
|---|---|---|
| Conv2d-1 | [−1, 32, 256, 256] | 864 |
| BatchNorm2d-2 | [−1, 32, 256, 256] | 64 |
| ReLU-3 | [−1, 32, 256, 256] | 0 |
| MaxPool2d-4 | [−1, 32, 128, 128] | 0 |
| Conv2d-5 | [−1, 64, 128, 128] | 18,432 |
| BatchNorm2d-6 | [−1, 64, 128, 128] | 128 |
| ReLU-7 | [−1, 64, 128, 128] | 0 |
| MaxPool2d-8 | [−1, 64, 64, 64] | 0 |
| Conv2d-9 | [−1, 128, 64, 64] | 73,728 |
| BatchNorm2d-10 | [−1, 128, 64, 64] | 256 |
| ReLU-11 | [−1, 128, 64, 64] | 0 |
| MaxPool2d-12 | [−1, 128, 32, 32] | 0 |
| Conv2d-13 | [−1, 512, 32, 32] | 589,824 |
| BatchNorm2d-14 | [−1, 512, 32, 32] | 1024 |
| ReLU-15 | [−1, 512, 32, 32] | 0 |
| Upsample-16 | [−1, 512, 64, 64] | 0 |
| Conv2d-17 | [−1, 128, 64, 64] | 589,824 |
| BatchNorm2d-18 | [−1, 128, 64, 64] | 256 |
| ReLU-19 | [−1, 128, 64, 64] | 0 |
| Upsample-20 | [−1, 128, 128, 128] | 0 |
| Conv2d-21 | [−1, 64, 128, 128] | 73,728 |
| BatchNorm2d-22 | [−1, 64, 128, 128] | 128 |
| ReLU-23 | [−1, 64, 128, 128] | 0 |
| Upsample-24 | [−1, 64, 256, 256] | 0 |
| Conv2d-25 | [−1, 1, 256, 256] | 576 |
| BatchNorm2d-26 | [−1, 1, 256, 256] | 2 |
| ReLU-27 | [−1, 1, 256, 256] | 0 |

Total params: 1,348,834. Trainable params: 1,348,834.
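The per-layer counts in Appendix A can be cross-checked with a little arithmetic. The sketch below is not the authors' code; it assumes every convolution uses a 3 × 3 kernel with no bias term and a 3-channel input, which is an inference from the listed counts (for example, 3 × 32 × 3 × 3 = 864), and that each batch-normalization layer contributes one scale and one shift parameter per channel. The helper names are hypothetical.

```python
# Assumption (inferred from the table, not stated in the source):
# 3x3 convolutions without bias, each followed by BatchNorm2d.

def conv_params(c_in, c_out, k=3, bias=False):
    """Weights of a k x k convolution, plus optional bias."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def bn_params(channels):
    """BatchNorm2d learns one scale and one shift per channel."""
    return 2 * channels


# (in_channels, out_channels) of each Conv2d row in Appendix A, in order.
FCRN_CONVS = [
    (3, 32), (32, 64), (64, 128), (128, 512),  # downsampling path
    (512, 128), (128, 64), (64, 1),            # upsampling path
]


def fcrn_param_count(convs=FCRN_CONVS):
    """Sum conv and batch-norm parameters over all stages."""
    return sum(conv_params(i, o) + bn_params(o) for i, o in convs)


if __name__ == "__main__":
    print(f"{fcrn_param_count():,}")  # prints 1,348,834
```

Under these assumptions the sum reproduces the "Total params" figure of 1,348,834; ReLU, MaxPool2d, and Upsample rows add nothing because they have no learnable parameters.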

Appendix B. Model Summary for U-Net

| Layer (Type) | Output Shape | Param # |
|---|---|---|
| Conv2d-1 | [−1, 64, 256, 256] | 1728 |
| BatchNorm2d-2 | [−1, 64, 256, 256] | 128 |
| ReLU-3 | [−1, 64, 256, 256] | 0 |
| Conv2d-4 | [−1, 64, 256, 256] | 36,864 |
| BatchNorm2d-5 | [−1, 64, 256, 256] | 128 |
| ReLU-6 | [−1, 64, 256, 256] | 0 |
| Conv2d-7 | [−1, 64, 128, 128] | 36,864 |
| BatchNorm2d-8 | [−1, 64, 128, 128] | 128 |
| ReLU-9 | [−1, 64, 128, 128] | 0 |
| Conv2d-10 | [−1, 64, 128, 128] | 36,864 |
| BatchNorm2d-11 | [−1, 64, 128, 128] | 128 |
| ReLU-12 | [−1, 64, 128, 128] | 0 |
| Conv2d-13 | [−1, 64, 64, 64] | 36,864 |
| BatchNorm2d-14 | [−1, 64, 64, 64] | 128 |
| ReLU-15 | [−1, 64, 64, 64] | 0 |
| Conv2d-16 | [−1, 64, 64, 64] | 36,864 |
| BatchNorm2d-17 | [−1, 64, 64, 64] | 128 |
| ReLU-18 | [−1, 64, 64, 64] | 0 |
| Conv2d-19 | [−1, 64, 32, 32] | 36,864 |
| BatchNorm2d-20 | [−1, 64, 32, 32] | 128 |
| ReLU-21 | [−1, 64, 32, 32] | 0 |
| Conv2d-22 | [−1, 64, 32, 32] | 36,864 |
| BatchNorm2d-23 | [−1, 64, 32, 32] | 128 |
| ReLU-24 | [−1, 64, 32, 32] | 0 |
| Upsample-25 | [−1, 64, 64, 64] | 0 |
| ConvCat-26 | [−1, 128, 64, 64] | 0 |
| Conv2d-27 | [−1, 64, 64, 64] | 73,728 |
| BatchNorm2d-28 | [−1, 64, 64, 64] | 128 |
| ReLU-29 | [−1, 64, 64, 64] | 0 |
| Conv2d-30 | [−1, 64, 64, 64] | 36,864 |
| BatchNorm2d-31 | [−1, 64, 64, 64] | 128 |
| ReLU-32 | [−1, 64, 64, 64] | 0 |
| Upsample-33 | [−1, 64, 128, 128] | 0 |
| ConvCat-34 | [−1, 128, 128, 128] | 0 |
| Conv2d-35 | [−1, 64, 128, 128] | 73,728 |
| BatchNorm2d-36 | [−1, 64, 128, 128] | 128 |
| ReLU-37 | [−1, 64, 128, 128] | 0 |
| Conv2d-38 | [−1, 64, 128, 128] | 36,864 |
| BatchNorm2d-39 | [−1, 64, 128, 128] | 128 |
| ReLU-40 | [−1, 64, 128, 128] | 0 |
| Upsample-41 | [−1, 64, 256, 256] | 0 |
| ConvCat-42 | [−1, 128, 256, 256] | 0 |
| Conv2d-43 | [−1, 64, 256, 256] | 73,728 |
| BatchNorm2d-44 | [−1, 64, 256, 256] | 128 |
| ReLU-45 | [−1, 64, 256, 256] | 0 |
| Conv2d-46 | [−1, 64, 256, 256] | 36,864 |
| BatchNorm2d-47 | [−1, 64, 256, 256] | 128 |
| ReLU-48 | [−1, 64, 256, 256] | 0 |
| Conv2d-49 | [−1, 1, 256, 256] | 64 |

Total params: 593,408. Trainable params: 593,408.
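The U-Net total in Appendix B can be checked the same way. This is a rough sketch rather than the authors' implementation: it assumes 3 × 3 bias-free convolutions followed by batch normalization throughout, a 3-channel input, skip connections that concatenate 64 + 64 channels before each decoder stage (the ConvCat rows), and a final 1 × 1 bias-free convolution, all inferred from the listed counts. Function names are hypothetical.

```python
# Assumptions (inferred from the table, not stated in the source):
# 3x3 bias-free convolutions with BatchNorm2d, and a final 1x1 convolution.

def conv_params(c_in, c_out, k=3):
    """Weights of a bias-free k x k convolution."""
    return c_in * c_out * k * k


def bn_params(channels):
    """BatchNorm2d learns one scale and one shift per channel."""
    return 2 * channels


def unet_param_count():
    # Encoder: first conv maps 3 -> 64, followed by seven 64 -> 64 convs.
    layers = [(3, 64)] + [(64, 64)] * 7
    # Decoder: three stages, each fed by a 128-channel concatenation.
    for _ in range(3):
        layers += [(128, 64), (64, 64)]
    total = sum(conv_params(i, o) + bn_params(o) for i, o in layers)
    # Output head: 1x1 convolution to one channel, no batch norm.
    total += conv_params(64, 1, k=1)
    return total


if __name__ == "__main__":
    print(f"{unet_param_count():,}")  # prints 593,408
```

With these assumptions the count matches the 593,408 parameters reported in the table, so the U-Net listed here has fewer than half the parameters of the FCRN in Appendix A, mainly because it never widens beyond 64 feature channels.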

| Network | Batch Size | MAE | RMSE |
|---|---|---|---|
| FCRN | 1 | 23.65 | 36.69 |
| FCRN | 8 | 37.78 | 45.76 |
| FCRN | 16 | 158.71 | 201.46 |
| U-Net | 1 | 16.69 | 22.48 |
| U-Net | 8 | 24.42 | 34.64 |
| U-Net | 16 | 144.28 | 198.34 |
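For readers unfamiliar with the two error measures in the table, the sketch below computes them under their standard definitions, assuming they are evaluated over per-image object counts (the section does not spell out the exact evaluation procedure). The counts in the example are made up for illustration and are not taken from the study.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between true and predicted counts."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large misses more than MAE."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


if __name__ == "__main__":
    # Hypothetical per-image counts, for illustration only.
    true_counts = [100, 120, 80]
    pred_counts = [90, 125, 95]
    print(mae(true_counts, pred_counts))             # 10.0
    print(round(rmse(true_counts, pred_counts), 2))  # 10.8
```

RMSE is never smaller than MAE on the same data, and the gap widens when a few images have very large errors, which is consistent with every row of the table showing RMSE above MAE.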

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Özden, C.; Bulut, M.; Çanga Boğa, D.; Boğa, M. Determination of Non-Digestible Parts in Dairy Cattle Feces Using U-NET and F-CRN Architectures. Vet. Sci. 2023, 10, 32. https://doi.org/10.3390/vetsci10010032
