1. Introduction

General practitioners (GPs) often perform physical abdominal examinations on patients to identify physical signs that test or confirm diagnostic possibilities that have arisen from their patient’s history. Abdominal palpation is one of the most widely used examination procedures, which is difficult to learn as it involves multiple feedback modalities and sensory coordination, i.e., touch, hearing, and vision. Hence, the technique requires years of experience for practitioners to sharpen their skills and sensitivity for a reliable diagnosis. This is currently obtained through repetitive training on patients with real diagnoses, who give instant feedback about any discomfort through verbal communication [

1,

2], facial expression [

3,

4], and physical reactions such as muscle guarding [

5,

6]. However, repetitive practice on real patients is very challenging as patients have few stays of short duration in the hospital.

An elegant alternative to practicing on patients is simulation-based education (SBE) [

7], whereby a proto-professional experience can be provided via utilizing manikin simulators [

8] or virtual reality (VR) platforms [

9]. The fabrication of internal organs is the key contribution of a physical manikin simulator. Multiple diseased liver models made of silicone can be designed as replacement units in a human-like physical abdominal simulator [

10]. Soft robotic livers with tunable stiffness tumors made of granular jamming nodules have also been proposed to improve the controllability of the organ with more diverse pathological conditions rendered [

8].

Alternatively, VR training systems allow higher flexibility in the simulated conditions, although the level of interactions is limited. Burdea et al. [

11] implemented a haptic device with visual supplements on a computer screen to establish a virtual environment for digital rectal examination training. Haptic devices that render single-point interaction with six degrees-of-freedom actuators have been widely used to reassemble the input palpation force and location to output tissue compliance in VR-based training [

12,

13]. However, simulating the haptic sensing of touch based on VR images can be complex for palpation training, as it takes years of experience for trainees and clinicians to grasp accurate haptic sensation for diagnosis and decision-making [

14].

Combining VR or augmented reality (AR) with a physical simulator provides advantages in gaining high-level interaction in a physical simulator and flexible feature rendering in virtual simulation as additional information for more effective training [

15,

16,

17]. By incorporating visual information into physical training, medical trainees benefit from being able to associate haptic perception with the stress levels at an organ level. Such internal models mapping haptic perception to visual representations of mechanical stress during palpation will further enhance the ability to discriminate among different physiological events, such as the edge of the liver passing under the fingers during a breathing cycle, touching a swollen area of the intestine, etc.

This visual information will also provide trainees immediate insight on how the palpation would affect the internal abdominal tissue (e.g., too much pressure may cause discomfort to patients or impact on already damaged tissues), besides being an added potential for experienced clinicians to calibrate their examination technique. This is particularly advantageous in scenarios in which trainees are yet to be familiarised with the different multi-sensory perceptions, e.g., body language, facial expressions, and verbal signals shown by patients, from palpating on patients with actual conditions. However, currently there is no abdominal simulator for physical examination training that has the capability to provide such visual information to trainees especially in real time.

Computational modelling can be used to produce this important layer of information for the simulator. By using finite element modelling (FEM), we can predict tissue deformation under mechanical loading, such as palpation. Several FE human body models have been developed. For instance, a complete human body model named the total human model for safety (THUMS) model [

18] by Toyota Motor Corporation was developed and validated for injury reconstruction and crash analyses, but it lacks details of the abdominal tissues. The Global Human Body Model Consortium (GHBMC) [

19] has also developed a full human body model for impact studies in road traffic accidents. However, this model has a complex description of abdominal tissues and has been developed for high rate loading; hence, it is not suitable for palpation simulation. Therefore, we constructed a new model from CT scans of the human abdomen, allowing us to model different abdominal tissues for different physiological conditions, such as different stages of the respiratory cycle. Performing FE simulations for palpation requires a long run time and high computation, which is not desirable especially when real-time information is needed for immediate training feedback.

Surrogate models based on machine learning (ML) methods can potentially help us build accurate and fast models to integrate with a physical setup for real-time palpation training. Machine learning has been used previously to build fast and accurate surrogate models by using data obtained from FEM simulations. An artificial neural network (ANN) was trained in [

20] to estimate stress distribution in the aorta, and in [

21,

22] to simulate deformation in soft tissue in real time. Other studies implemented a convolution neural network (CNN) technique for fast visualisation of soft tissue behaviour from FEM simulations, such as liver deformation [

23,

24]. These previous studies have developed surrogate models for real-time surgical planning, computer-guided surgery, and guided tumour irradiation. However, there are currently no surrogate models available for palpation.

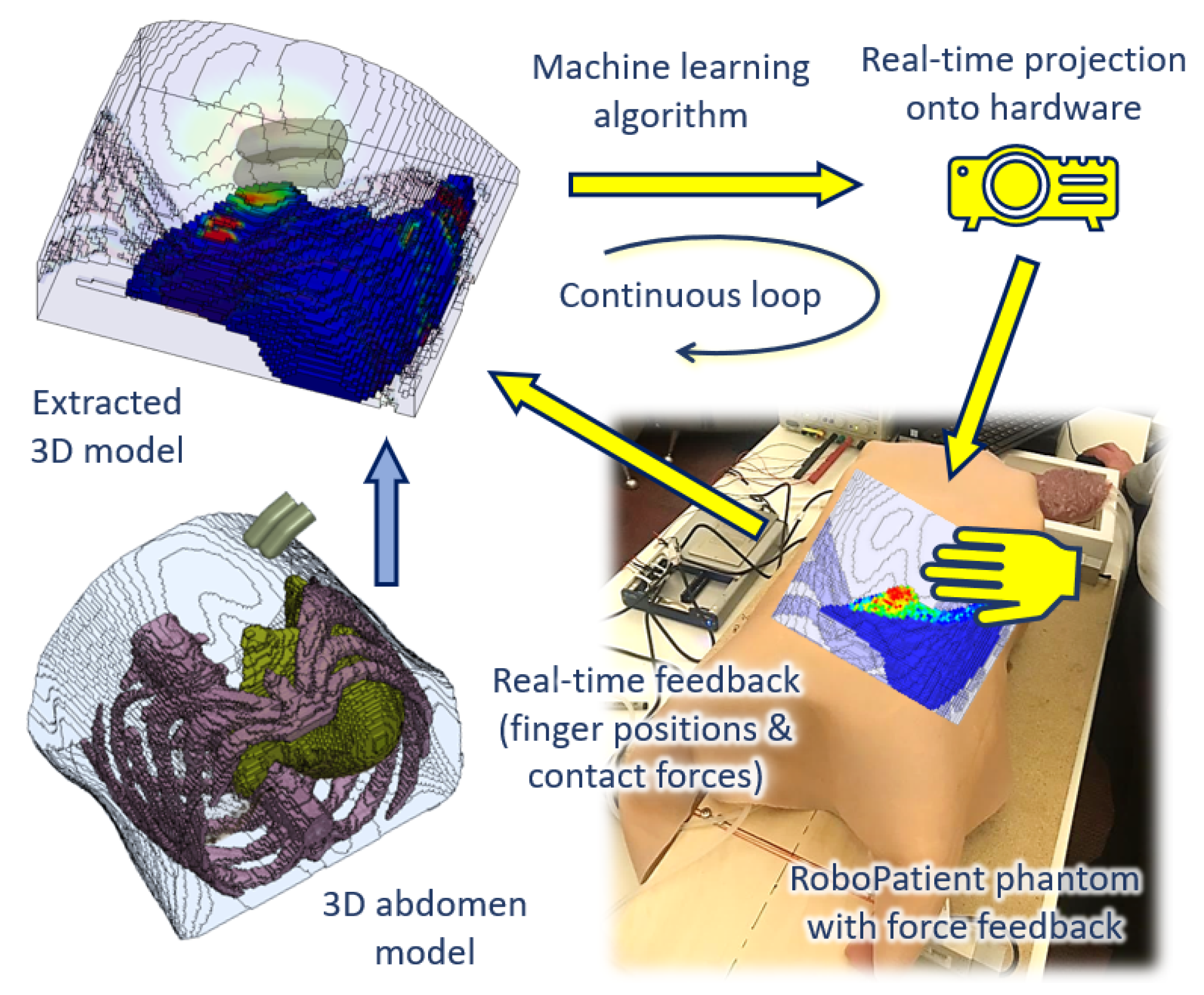

In this paper, we proposed a new ANN-trained surrogate model based on FEM simulations as shown in

Figure 1. The FE simulations were performed by using a new model of the abdomen developed from CT scans. We tested whether the surrogate model could accurately predict the stress distribution at the edge of the liver, which is a key area in a physical examination. We also showed that the surrogate model could provide real-time predictions. Finally, we demonstrated that the surrogate model could be integrated with a physical palpation platform to provide visual information on tissue deformation.

2. Methodology

The development of the RoboPatient simulator involves the integration between the hardware platform with force feedback, and the software, i.e., the construction of a surrogate model based on FEM simulations of abdominal palpation. These stages are described in the following subsections.

2.1. Finite Element Model Setup

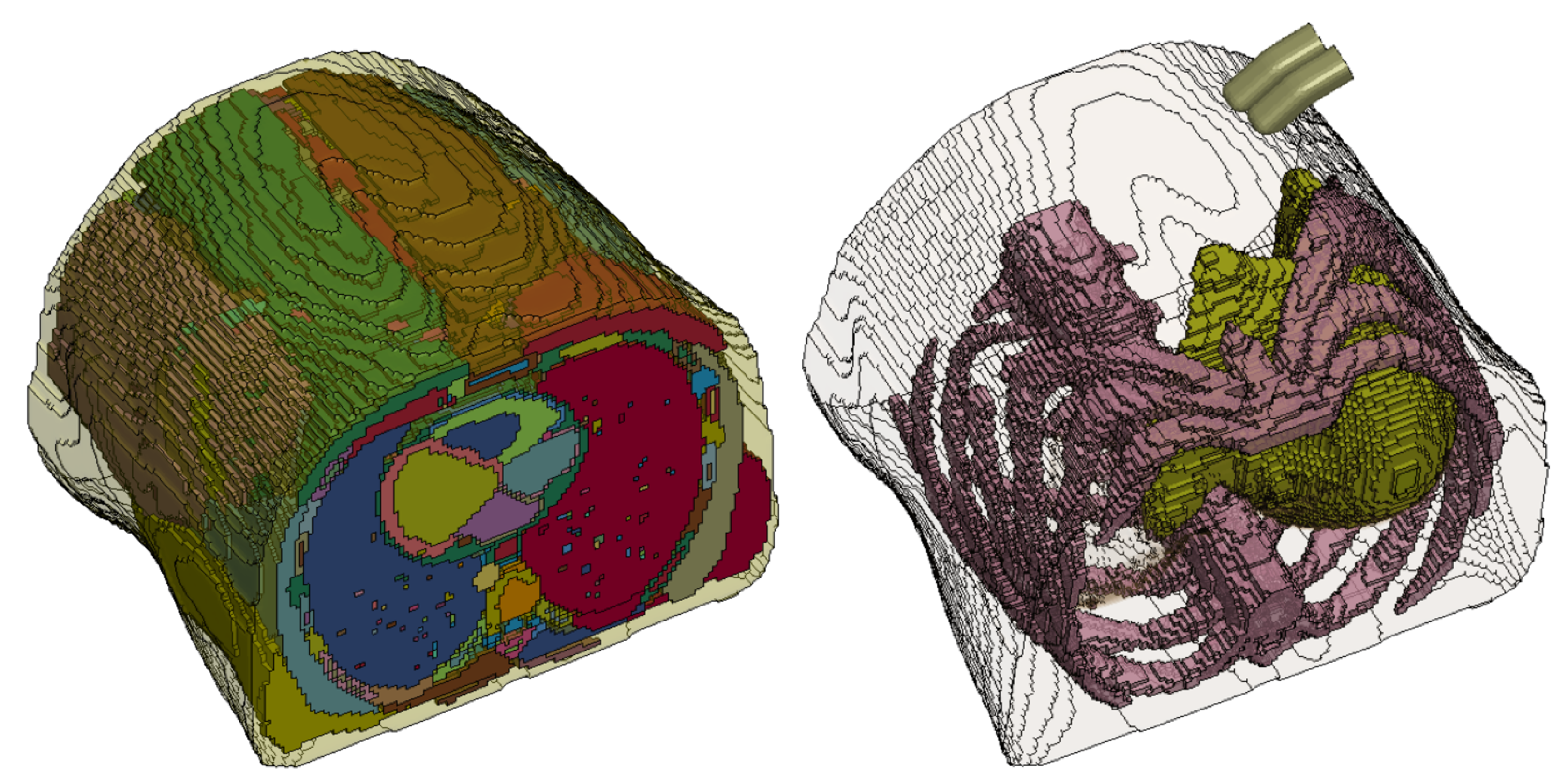

An anatomically detailed 3D model of the abdomen was extracted from computerized tomography (CT) scans of the human abdomen by using 4D Extended Cardiac-Torso (XCAT) Phantom software [

25] configured to capture the scan images with respiratory motion at full exhalation. The generated scan sequences were then post-processed in ImageJ software to produce 32-bit real image sequences at a resolution of

pixels to be converted into a 3D human abdomen model. This full abdomen model was then segmented and meshed (

Figure 2a) for FEM simulations.

We used an image-based meshing algorithm as described in Ghajari et. al. [

26] to develop the FE model. In this method, the coordinates of each voxel of the image was used to generate a hexahedral FE. Different anatomical regions of the image were assigned different part numbers in the mesh file. This meshing process led to jagged edges on the surface of the model. The mesh-smoothing algorithm, explained in Ghajari et. al. [

26] was used to smooth the mesh. The model was then simplified by grouping all internal organs, except the ribs and liver, into a "lumped" abdominal model (see

Figure 2b) with the focus on liver deformation during palpation in this study. This was done to reduce the complexity of materials and contact interactions among the many organs within the abdomen in the model.

The simulations were set up in LS-Prepost (Livermore Software Technology Corporation—LSTC, Livermore, CA, USA) by using material properties validated for the flesh (i.e., simplified rubber/foam) in [

27,

28,

29] to represent the lumped abdominal tissue, and those for the ribs (i.e., piecewise linear plasticity) in [

29,

30,

31] (see

Table 1). The liver was modelled as a visco-hyperelastic material with parameters reported in [

32,

33]. The Ogden strain energy function in Equation (

1) was used to model the hyperelastic part of the liver tissue response,

where

are the principal stretches with

, and

and

are the material constants, which we obtained by fitting the model to experimental stress/strain curve provided in [

32]. Equation (

2) incorporates the strain rate dependency of the tissue into the model,

where

is the long-term second Piola–Kirchhoff stress tensor,

E is the Green–Lagrange strain tensor, and the relaxation function

is represented by the Prony series given by Equation (

3),

in which

and

are the shear moduli and the decay constant, respectively. The material constants for the visco-hyperelastic liver tissue are given in

Table 2.

An FE model of two fingers were added to the abdomen model to simulate palpation. As the deformation of the finger is negligible compared with the soft abdominal tissue, they are modelled as a rigid part. The surface-to-surface penalty contact algorithm was used to define the contact between the fingers and abdomen.

2.2. Finite Element Simulations

FE simulations often require long run-time and high computation especially when the model is highly complex with respect to the number of parts, contact surfaces, and elements. We explored several ways to further simplify the abdomen model for simulations with the aim to reduce computational time.

We cropped out a reduced model comprising the top half of the liver and the surrounding abdominal tissue (

Figure 3), reducing the number of elements from 547,250 elements to 112,955 elements. We simulated 15 mm of finger indentation onto both the newly reduced model and the abdomen model depicted in

Figure 2b to verify the difference in the behaviour of both the full simplified model and the reduced model. We found a negligible difference between the models in terms of the contact force (less than 0.12 N), but there was a significant reduction in the simulation time from about 18.5 h down to 7.5 h.

We also performed a simulation on the reduced model with indentation over a longer duration of 500 ms (simulation duration = 57 h) to observe the difference in the tissue response with the simulation at 25 ms. The effect of the palpation speed on the tissue response was also found to be negligible. It should be noted that we focused on the transient response during indentation, thus not taking into account tissue relaxation.

The palpation simulations for our application were then performed with finger indentations up to 15 mm onto the abdominal locations above the liver region, depicted by the red crosses in

Figure 4a. We developed a framework in Matlab to generate palpation FE models for each of these indentation locations. The Matlab code generates the FE models, runs FE simulations, and saves the results in text files. Each simulation took approximately 1.5 h on our high performance computing (HPC) unit with 20 cores and 24 gigabytes of memory. The final datasets generated by using this framework contained the stress/strain of every element of the region of interest (ROI), as shown in

Figure 4, as a function of the indentation locations and the fingers contact forces for each simulation time stamp. The information in the datasets forms the basis of the surrogate model for the ANN training in the next step.

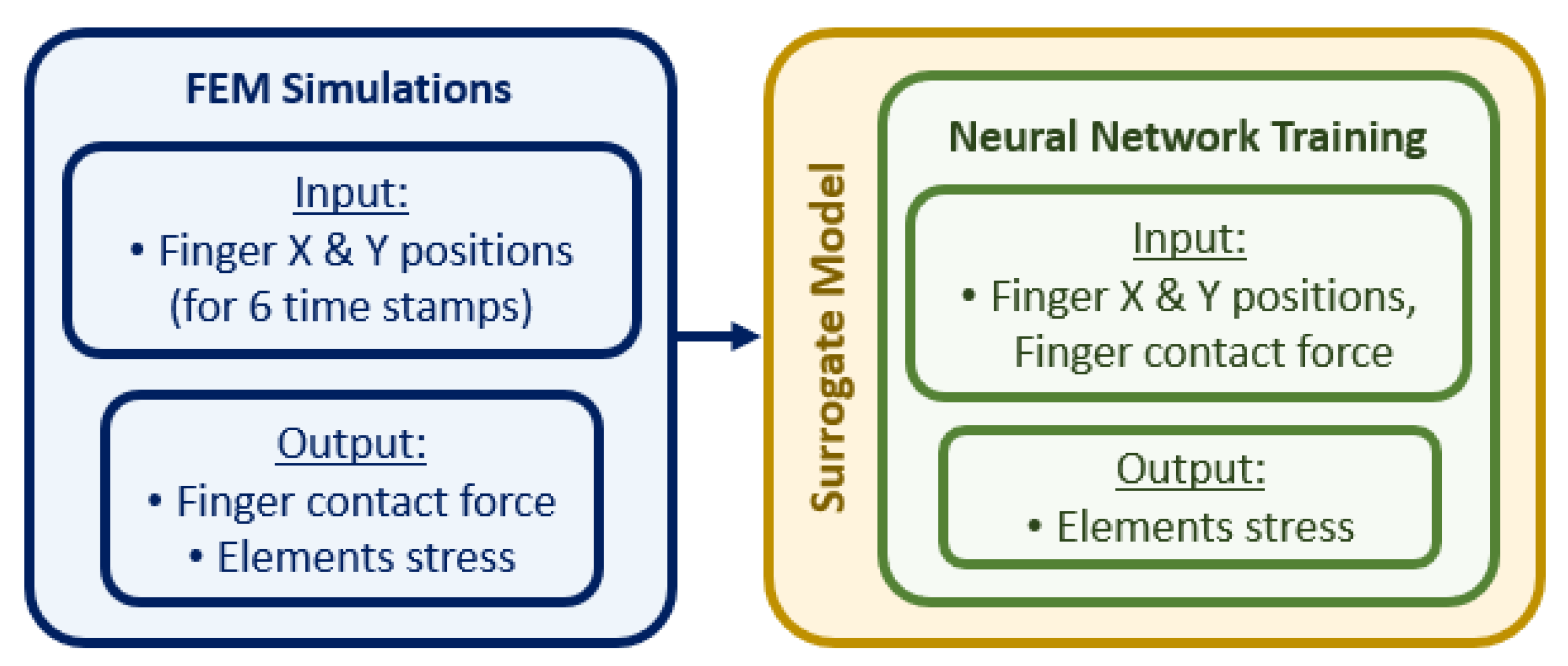

2.3. Construction of the Surrogate Model

The surrogate model links the input parameters in the FE simulations, i.e., the positions of the fingers in the x and y axes and the contact force on the tip of the fingers, to the output parameters of the simulation, i.e., the stress tensor of the elements in the liver, as shown in the flowchart in

Figure 5. As our aim is to predict the stress distribution of the liver tissue at indentation locations that have not been simulated with the FE model, we trained an ANN described in the next section by using the information we obtained from our simulations.

We focused on the edge of the liver as the region of interest, which has 377 elements (see

Figure 4b). The stress tensors of each solid element at each time step was used to compute the maximum principal stress (Equation (

4)):

where

is the stress tensor, and its eigenvalue gives a diagonal matrix consisting of

,

, and

, which are the minimum, intermediate, and maximum principal stresses, respectively. In this study, we used the maximum principal stress,

.

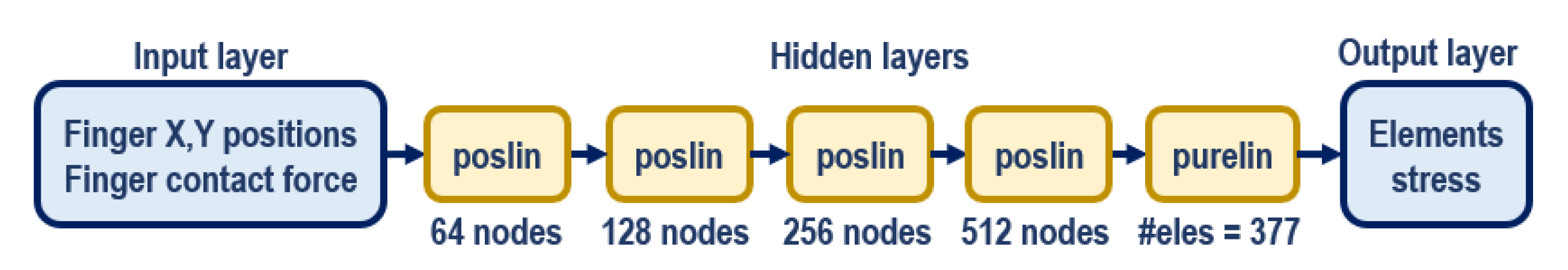

2.4. Surrogate Model Training

The surrogate model was trained with 150 datasets consisting of 25 palpation locations (depicted by red crosses in

Figure 4a) at six time stamps (0 ms, 5 ms, 10 ms, 15 ms, 20 ms and 25 ms). The input to the model were location and force of indentation, and output was the stress in the liver elements. Data from three other palpation locations (depicted by green crosses in

Figure 4a) were used to test the network.

An ANN with multi-layer perceptron was developed in Matlab for the surrogate training by using the ANN training toolbox. The network (

Figure 6) has five hidden layers which use positive linear (poslin) activation functions, and the final hidden layer uses a hard-limit linear (purelin) function. The training implements gradient descent, specifically that with momentum and adaptive learning rate backpropagation algorithm. This network architecture was chosen based on the balance between the network complexity and its performance. The training process took approximately 16 min to complete with the datasets extracted from all the FEM simulation on a desktop computer (Intel(R) Xeon(R) CPU E5-2623 v4 @ 2.60 GHz—dual processors, 128 GB RAM). The trained network was then tested with the test dataset, and its the accuracy was evaluated.

2.5. Integration of the Surrogate Model and Force Sensing Platform

The surrogate model was then integrated with a force-sensing platform. The overall experimental setup is shown in

Figure 7. The force-sensing platform is constructed from perspex pieces of 400 mm × 400 mm × 10 mmin dimensions. The platform is equipped with four Robotous RA80-6A01 force-torque sensors, each attached to one corner of the platform. These sensors are connected together by CAN buses via SeeedStudio CAN Bus Shield V2 to Arduino Mega 2560 for measurement readings. The readings from each sensor are combined to provide force measurements when fingers are in contact with the platform.

Calibrations are also performed by using the readings of each sensor to determine the positions of the fingers during palpation. These position and force readings are obtained continuously in real time and are sent to the surrogate model to predict the stress/strain field at the edge of the liver. The stress distribution is mapped to a colour scheme and projected onto the platform for real time visualisation of the changes by using an AAXA P300 NEO Pico LED Video Projector.

3. Results and Discussion

3.1. Fe Predictions of Liver Stress Due to Palpation

Figure 8 shows the maximum principal stress distribution (in GPa) within the liver at the maximum indentation (15 mm) at the final simulation time stamp (25 ms). The simulation results here are shown for selected simulation cases, i.e., the first and last indentation locations in each horizontal line according to

Figure 4a for illustration due to space constraint. The range of the maximum principal stress displayed in the legend covers the maximum and minimum values of the overall 25 sets of simulation data across all six time stamps, i.e.,

KPa and

KPa, respectively. As expected, the maximum stress was predicted near the edge of the liver and when the fingers were passing across this region.

The new FEM of the human abdomen we constructed provides us with detailed insight of the internal tissue behaviour for palpation as opposed to the existing models which are established for specific purposes, such as high-impact analyses [

18,

19]. However, FEM simulations are highly time-consuming, at least 1.5 h for each palpation location, rendering them impractical for real-time predictions.

3.2. Surrogate Model Validation and Performance

We validated the performance of the surrogate model against the FEM simulations. The validation showed comparable stress distribution onto the liver edge across the ROI, with correct prediction of areas of high stresses (see

Figure 9). The performance of the surrogate model was evaluated on the test dataset by using Equation (

5),

where

y,

and

are the actual, estimated, and average stress values in testing stage respectively, and

k is the element number in the FE model. The performance of the surrogate model was 92.64%. The training performance is shown in

Figure 10, with the mean squared error of all 377 elements within the ROI approaching the best threshold at epoch 20,000.

The entire ANN training process on the surrogate model only took approximately 16 min, and the following predictions of the stress distributions across the ROI occur instantly with respect to the forces and positions of the fingers when in contact with the force-sensing platform, without having to execute FE simulations each time. This process provides us with the advantage of immediate visual feedback for palpation training.

3.3. Implementation on Force-Sensing Platform

Upon the integration of the surrogate model with the hardware platform, the ANN takes in real-time contact force and x–y position readings from the force sensors incorporated on the platform (see

Figure 7) as input. The changes in the internal element stress distribution within the ROI are then projected onto the hardware platform for real-time visualization during user training.

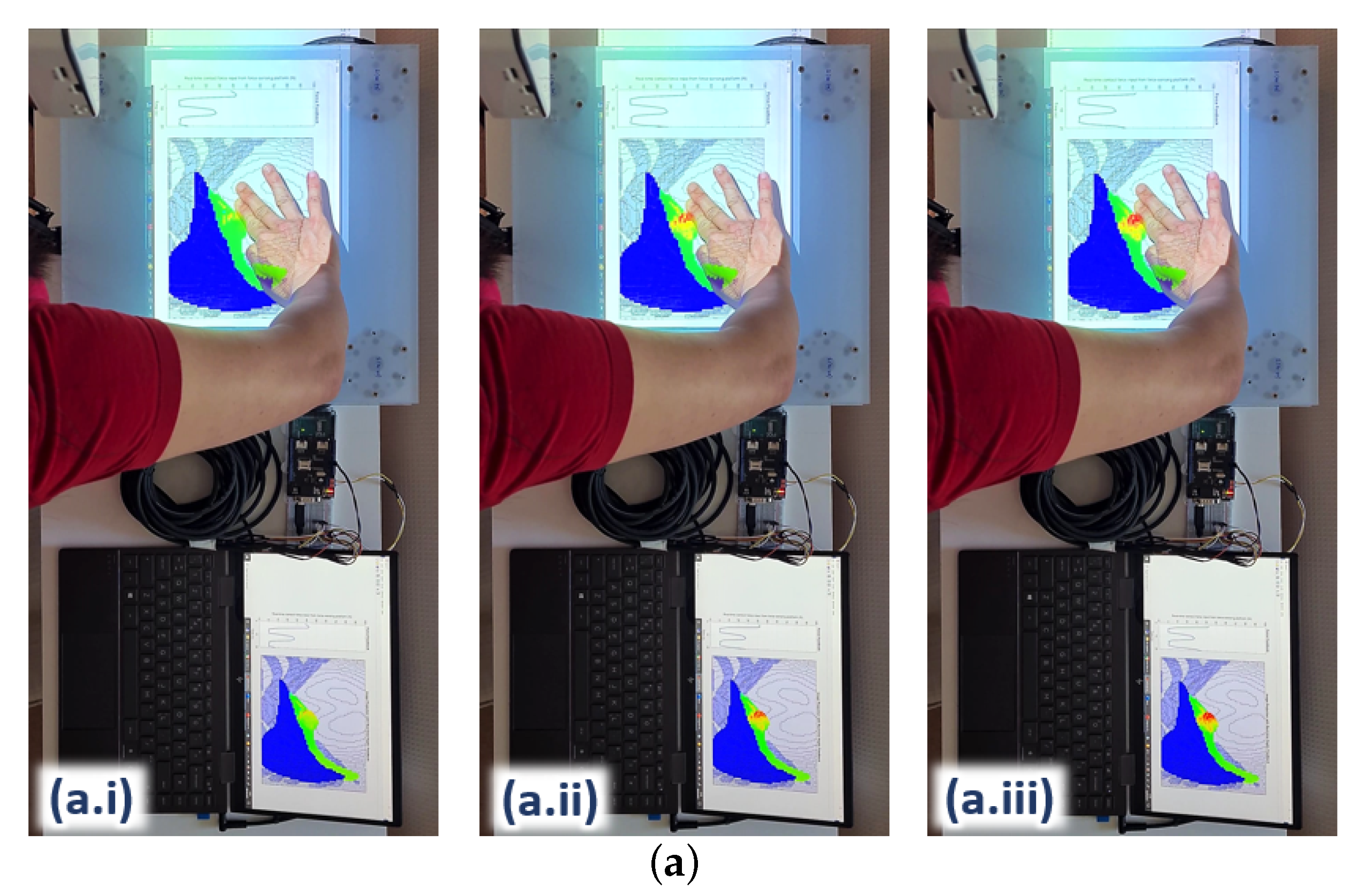

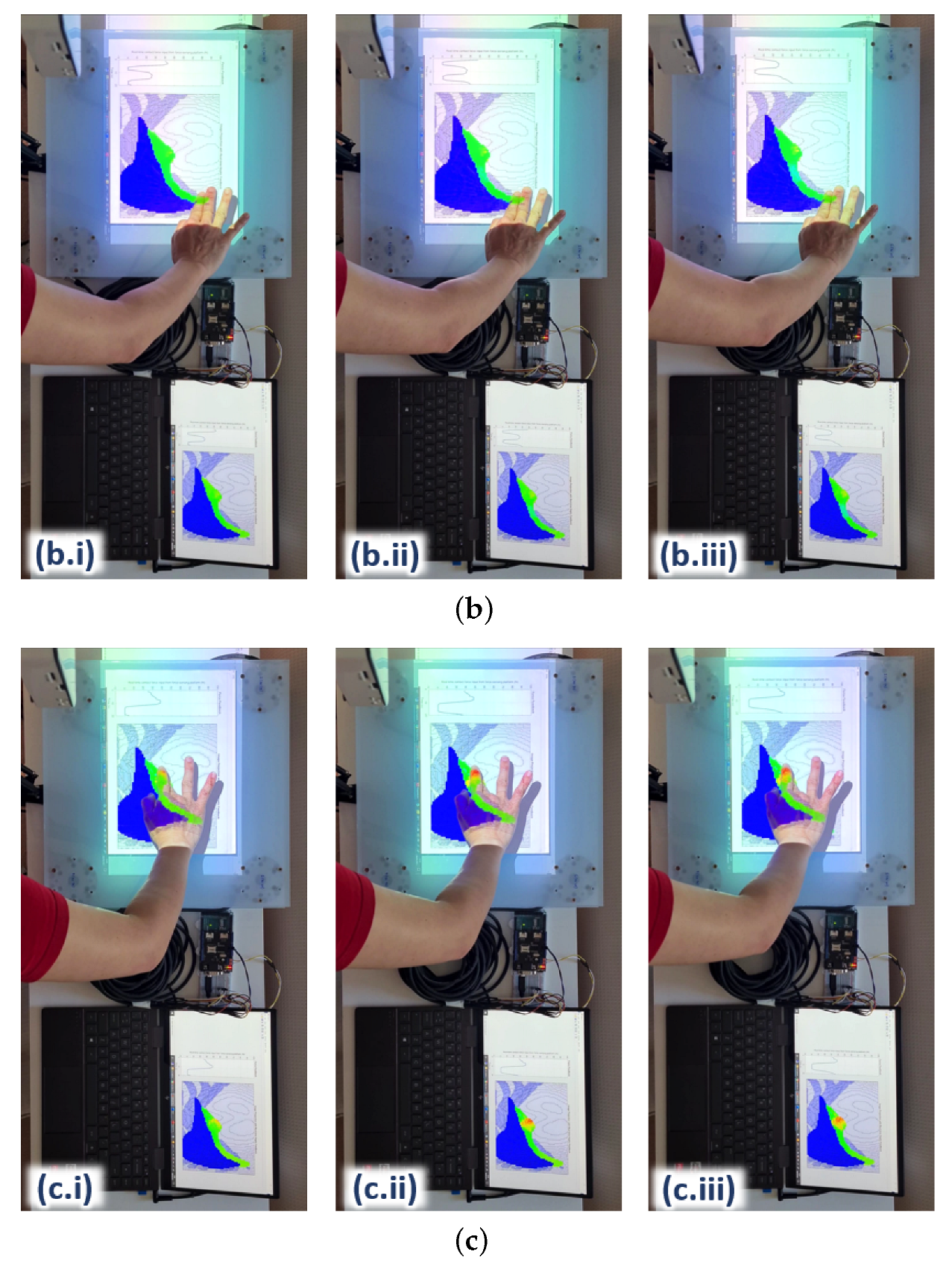

Figure 11 shows three examples of the 2D rendering of the stress distribution from the trained ANN on the hardware platform during real-time force feedback. The image frames are taken at three different palpation locations, i.e., A, B and C, at three approximately similar contact forces, i.e., (i), (ii) and (iii), respectively, to observe the stress distribution on the liver edge.

The stress distribution on the liver tissue is highly dependent on the 3D model of the liver within the abdomen. For instance, some parts of the liver are closer to the surface of the abdomen and not covered by the ribcage (e.g., where palpation at location A is); hence, this part of the liver tissue is more responsive to palpation, visualising a higher stress distribution around the vicinity. The part of the liver tissue, where location B is, appears to be deeper in the 3D abdomen model; hence, it would need stronger palpation or higher force to reach the same stress distribution on the liver as it is with case C. In addition, the force would have been attenuated to a certain degree by the thick abdominal tissue above that part of the liver.

As for the liver region around location C, most of the liver tissue is located beneath the rib cage in the 3D model; hence, more force would also be needed to reach a higher stress distribution on the liver tissue. These differences in the stress distributions around the abdomen clearly demonstrate that the surrogate model trained by the ANN matches the behaviours of the FE model in the aspects of palpation locations as well as the 3D of the model (e.g., the tissue depth within the model).

The integration of the surrogate model with the force-sensing platform to provide real-time visual feedback provides us with several benefits. Our new abdomen FEM has the capability to generate high-fidelity tissue behaviours comparable to existing FE models [

18,

19]. In addition, our ANN-trained surrogate model based on the FEM enables us to augment information from the visual-contact force interaction as seen in high-level interventions such as surgical planning and computer-aided surgical procedure [

22,

23,

24]. This is a promising application for palpation training so medical trainees and practitioners have an added visual aid to help regulate their palpation behaviours with respect to the impact onto the internal organs during palpation.

3.4. Limitations and Future Work

Currently, the RoboPatient simulator is tested only with the perspex force-sensing plate for feasibility study. With the surrogate model trained based on the FEM simulations results, the force and position feedback on the force-sensing platform are sufficient to reflect the stress distribution on the 2D image projection. An abdomen phantom, moulded with a liver phantom within (both can be made by casting EcoFlex silicone resin) to match the FE model in 2D or 3D configuration can be conveniently included for a more realistic feel. The phantom can also be customised to emulate various pathological conditions for diverse training experience, such as added tumorous tissues of different stiffness levels by using a modular granular jamming design [

8].

The FE model of our abdomen was generated by using XCAT software from a single male human body based on the 3D Visible Human Project [

34]; hence, it does not take into account physiological variations, such as the visceral fat in different human bodies. More investigation is required to include these variations into our FE model configurations to provide a more realistic abdominal model for a larger range of palpation training. In addition, the FE model was extracted from the CT scan at a single respiratory frame. Even though this model provides a sufficient representation of the abdominal tissue dynamics during palpation for our feasibility study, it would be more realistic to take into account the respiratory cycle in our simulations. Customised tissue abnormalities, such as tumours within the liver tissue with different severity can also be included into the FE models for more advanced training programmes. These features can then be added into the surrogate model and ANN training, as well as the abdomen phantom to produce a more authentic visualisation and palpation feedback.

When palpating onto the force-sensing platform, a slight latency in the range of 200–500 ms during the visual projections was observed, taking into account the duration of 150 ms our brains take to process visual information [

35]. This slight latency does not affect the feedback of the force sensor input (i.e., contact force and fingers location) to the surrogate model and the tissue deformation rendering, but it could be improved for a smoother visual projection. This slight latency could be due to the communications within the hardware and software integration (i.e., Arduino, SeeedStudio CAN Bus Shield, Matlab) and the refresh rate in the projector. It can be improved by hardware enhancement (e.g., a better processor) and optimization of the algorithms. We can also consider designing a more modular ANN or a cluster of localised networks that is more region-oriented to provide faster response, and, hence, a faster training rate. This would be advantageous in allowing us to expand the ROI to the entire liver or abdomen.

A user study will be performed with the involvements of medical trainees and practitioners to assess the level of improvements in their palpation skills when given the added visual feedback. We are interested to observe the changes in their palpation skills, such as the reconditioning of fingers’ configuration and regulating palpation forces with respect to the real-time projection of internal stress distribution, as well as the enhancement in their perception when other feedback (e.g., haptic or facial expressions) are involved.

4. Conclusions

In this paper, we present a neural network-trained surrogate model for real-time visualisation of internal abdominal stress distribution during manual palpation of the abdomen. This surrogate model can be used to assist medical trainees to use a robotic patient phantom to associate their palpation forces with the internal organ deformation. Such visual feedback can be potentially used as a gender-ethnicity neutral intervention to train medical students to mitigate biases in other inputs such as facial or auditory expressions of pain during physical examination. It is beneficial for trainees who have not yet attained much experience involving multiple motor-sensory systems when palpating on actual patients, for instance visual and auditory signals (i.e., to appropriately understand facial and verbal expressions of patients) and the sense of touch (i.e., in feeling the different textures and stiffness of tissue as well as patient’s motions such as muscle contractions). Moreover, the added visual feedback on internal tissue can add potential for experienced clinicians to calibrate their examination techniques to improve their skills in diagnosis and decision-making. The benefits of this RoboPatient simulator with the added visual feedback will be evaluated through a user study involving medical trainees and practitioners.

A high-fidelity FE model of the abdomen was generated and used to predict tissue dynamics during palpation. We constructed a surrogate model based on FEM simulation results, and trained it with an ANN to produce rapid results with a performance fit accuracy of . This enables accurate real-time estimation of the internal tissue stress given a real-time palpation force. The ability of the surrogate model, which represents the 3D high fidelity FE abdomen model to take in real-time physical palpation input from the hardware platform gives us the advantage of an augmented visuo-haptic feedback as compared to a pure virtual interaction. The slight latency during real-time projection can be improved by further optimising the code implementation, as well as the hardware integration.

The ultimate aim, which we are currently working toward, is to incorporate a layered abdominal tissue phantom, moulded with liver phantom with the force-sensing platform, for a more realistic feel during manual palpation as shown in

Figure 1. To enhance our FE abdomen model, we are also working on incorporating respiratory cycles into the FEM simulation to provide a better understanding on tissue behaviour when the abdomen is being palpated in normal breathing conditions. Different tissue conditions, such as a liver with tumours of different stiffness and sizes at various locations, can also be added into the FEM simulations and the ANN surrogate model for a better sense of finger-tissue interaction, hence providing a more complete palpation training experience.