Toward Sensor-to-Text Generation: Leveraging LLM-Based Video Annotations for Stroke Therapy Monitoring

Abstract

1. Introduction

- We propose an automated video-based annotation pipeline that integrates large language models (LLMs) and machine learning (ML) techniques to detect and classify patient activities during therapy sessions.

- We explore the feasibility of identifying task-specific distinctive features from accelerometer signals.

- We outline a vision for a deep learning-based framework that delivers text-based feedback to patients and clinicians from multimodal data, with the goal of enhancing stroke rehabilitation monitoring.

2. Literature Review

3. Problem Description

4. Methodology

4.1. Data Sources

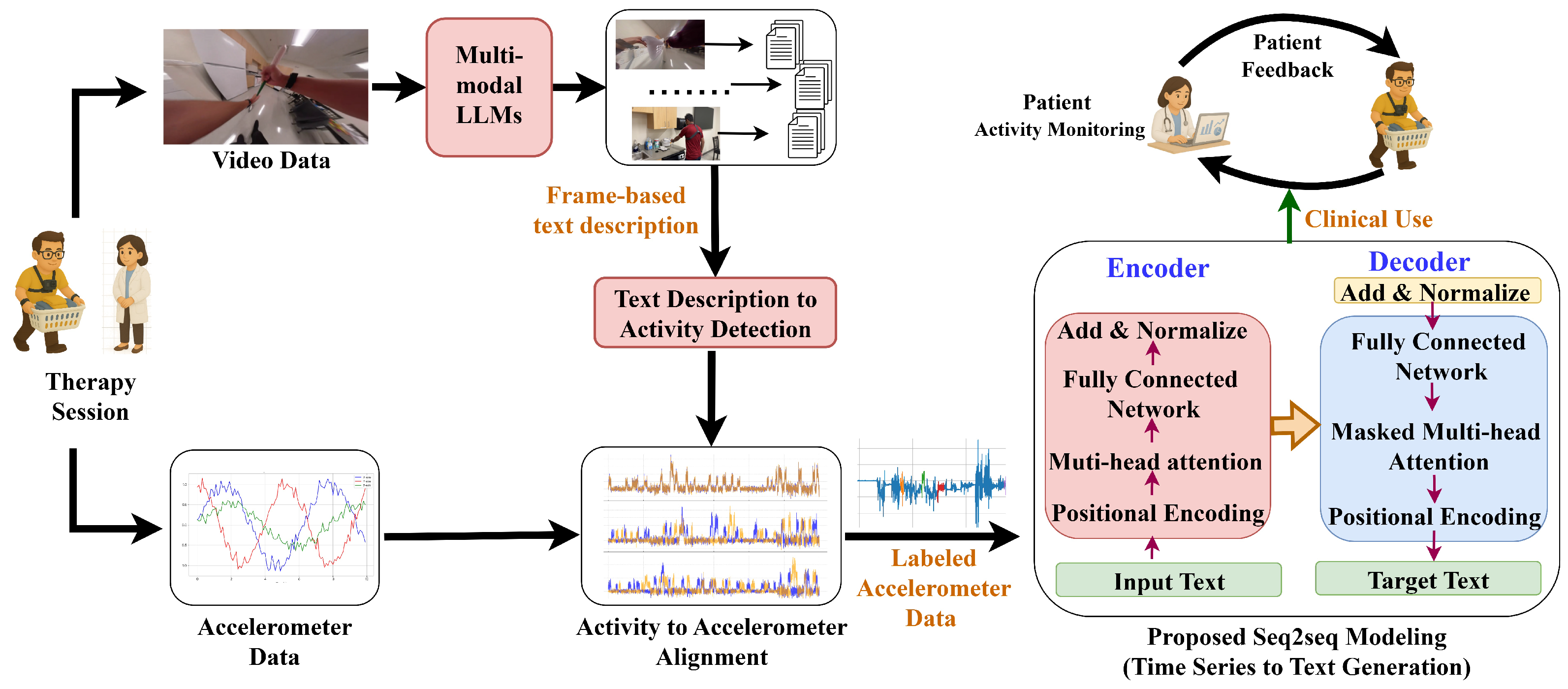

4.2. Text Annotation from Video

4.3. Seq2seq Learning Model

5. Experimental Results

- How effectively can relevant activity descriptions be generated from videos using locally deployed LLMs? (Section 5.1)

- What are the strengths and limitations of cloud-based LLMs in generating structured activity descriptions from video inputs? (Section 5.2)

- To what extent are certain human activities detectable using text descriptions of video frames generated by LLMs? (Section 5.3)

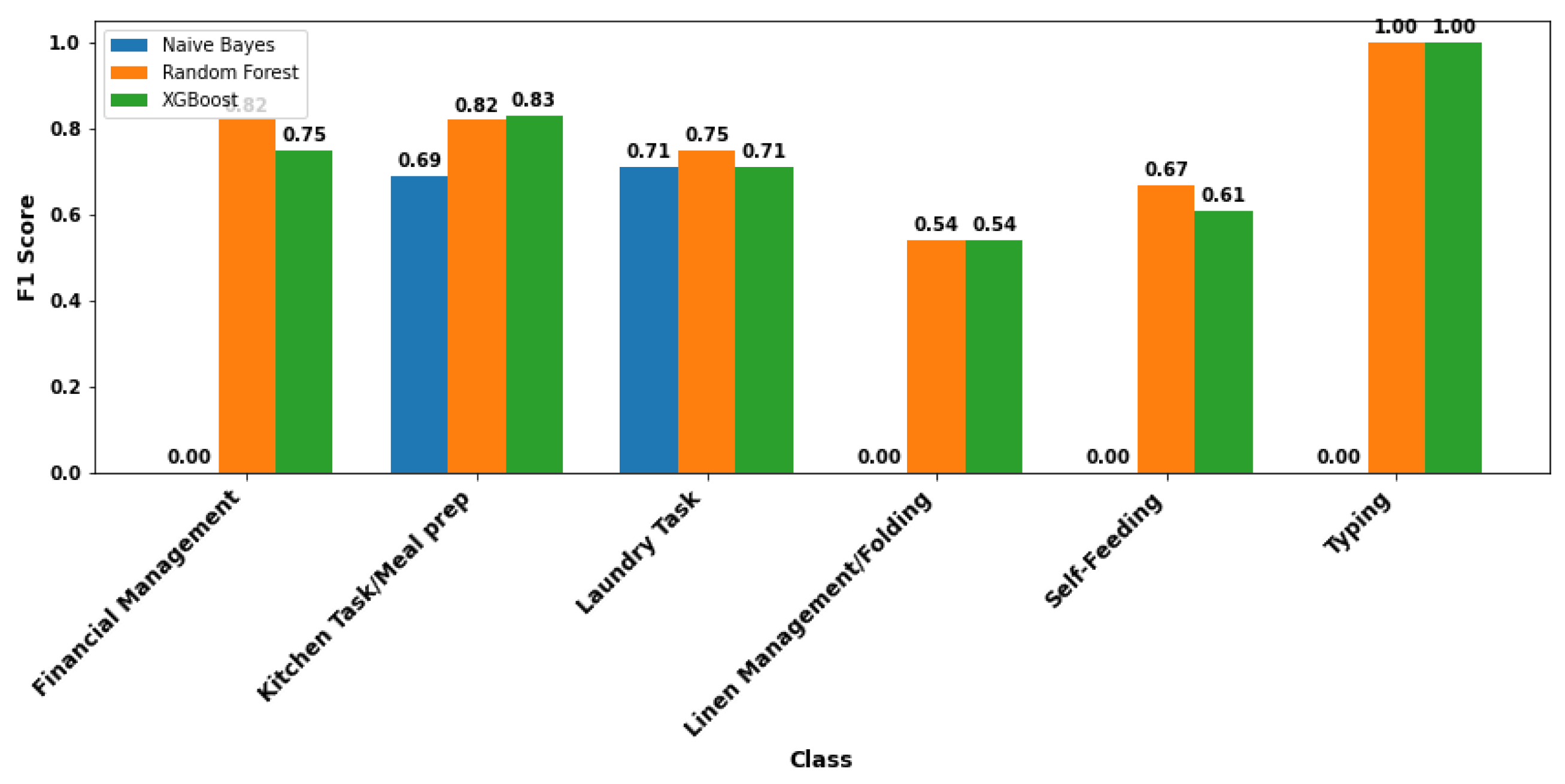

- To what extent can accelerometer data be used to identify different human activities? (Sections 5.4 and 5.5)

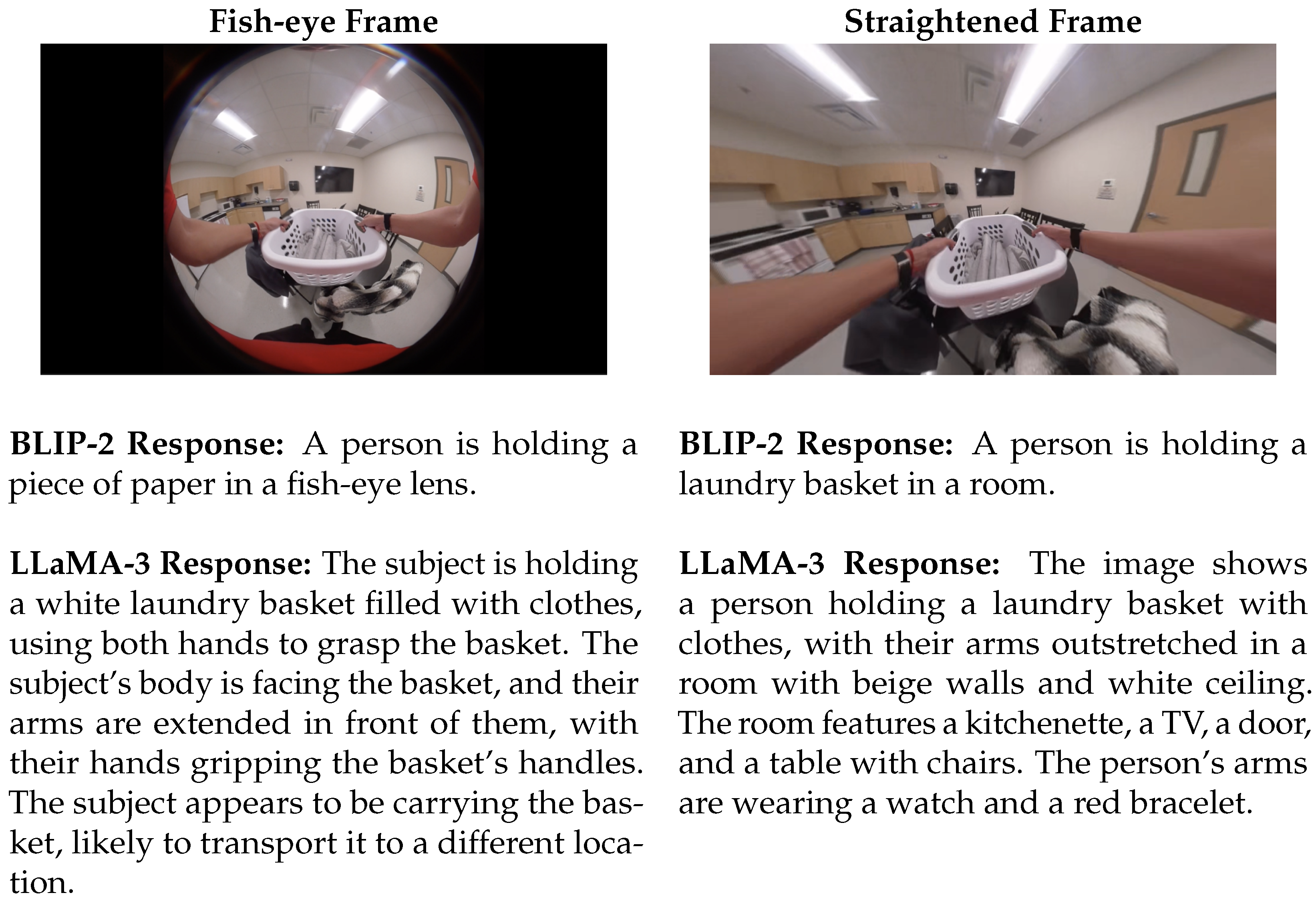

5.1. Generating Activity Descriptions Using Locally Deployed LLMs

5.2. Generating Activity Descriptions Using Cloud-Based LLMs

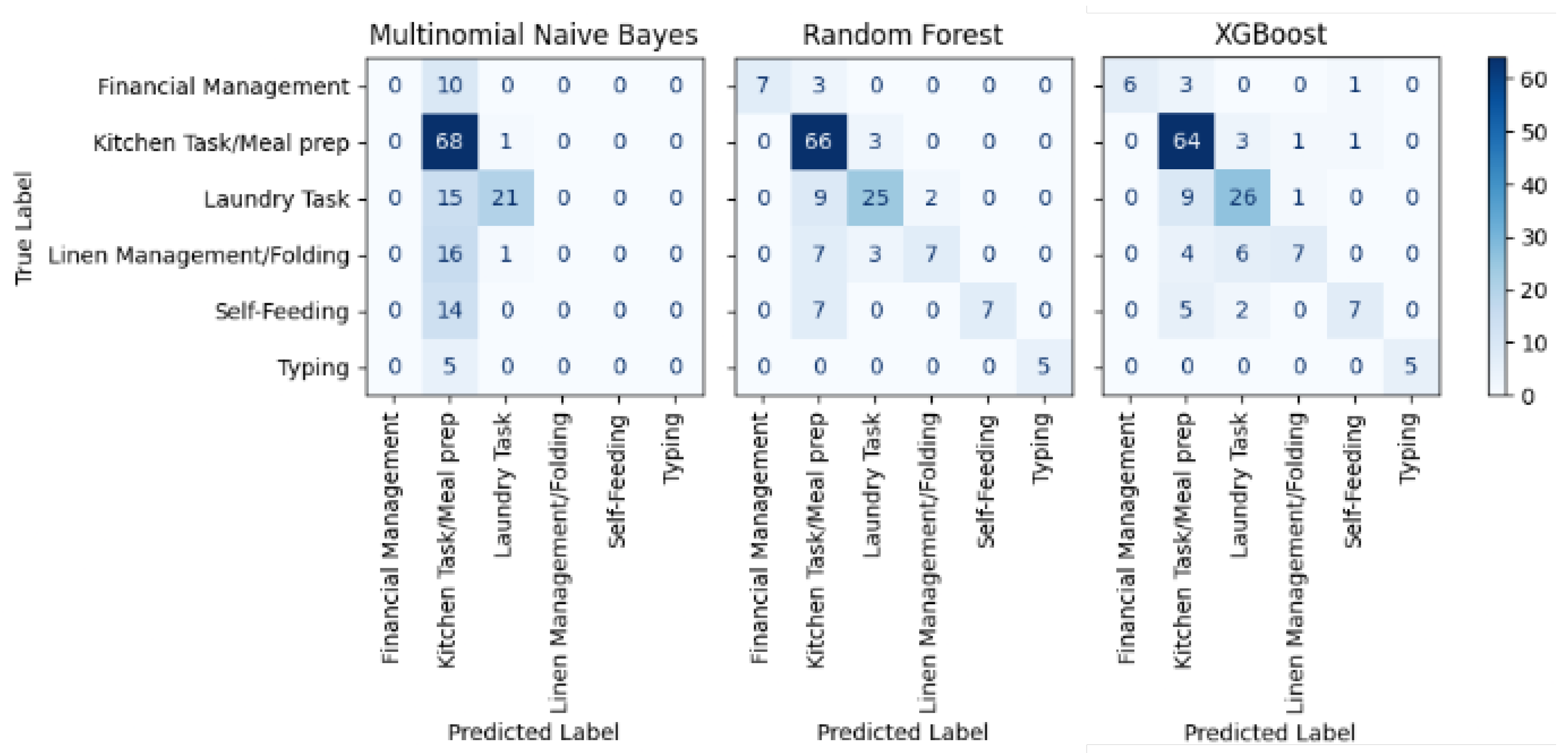

5.3. Automating Task Annotations

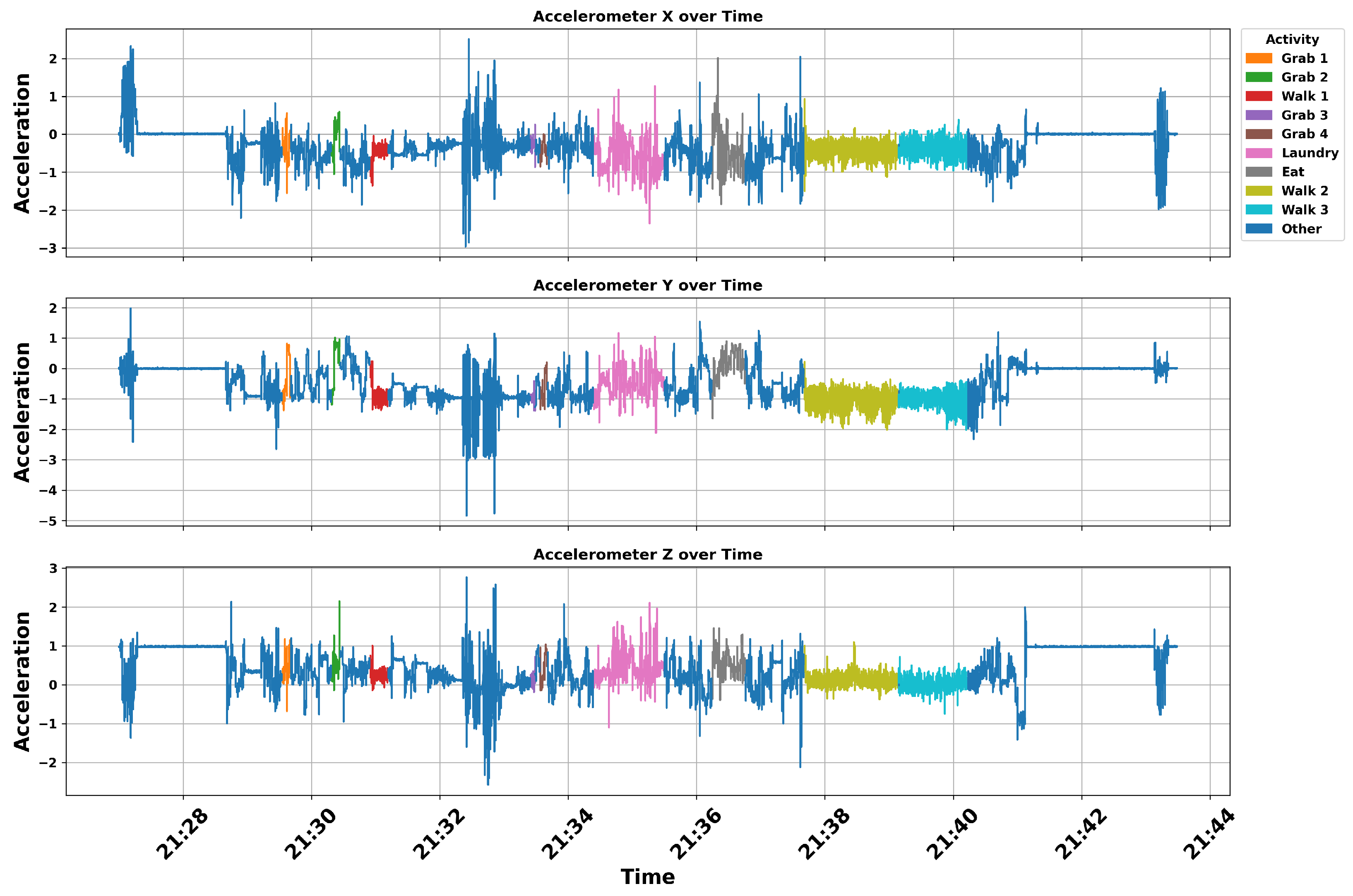

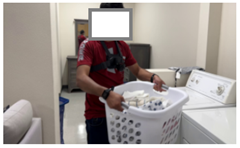

5.4. Annotating Accelerometer Data and Finding Temporal Features

5.5. Additional Findings from the Data: Case Series Report

6. Discussion

7. Summary

8. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kleindorfer, D.O.; Towfighi, A.; Chaturvedi, S.; Cockroft, K.M.; Gutierrez, J.; Lombardi-Hill, D.; Kamel, H.; Kernan, W.N.; Kittner, S.J.; Leira, E.C.; et al. 2021 Guideline for the Prevention of Stroke in Patients with Stroke and Transient Ischemic Attack: A Guideline from the American Heart Association/American Stroke Association. Stroke 2021, 52, e364–e467.

2. Virani, S.S.; Alonso, A.; Benjamin, E.J.; Bittencourt, M.S.; Callaway, C.W.; Carson, A.P.; Chamberlain, A.M.; Chang, A.R.; Cheng, S.; Delling, F.N.; et al. Heart Disease and Stroke Statistics-2020 Update: A Report from the American Heart Association. Circulation 2020, 141, e139–e596.

3. Geed, S.; Feit, P.; Edwards, D.F.; Dromerick, A.W. Why Are Stroke Rehabilitation Trial Recruitment Rates in Single Digits? Front. Neurol. 2021, 12, 674237.

4. Simpson, L.A.; Hayward, K.S.; McPeake, M.; Field, T.S.; Eng, J.J. Challenges of Estimating Accurate Prevalence of Arm Weakness Early After Stroke. Neurorehabil. Neural Repair 2021, 35, 15459683211028240.

5. Young, B.M.; Holman, E.A.; Cramer, S.C. Rehabilitation Therapy Doses Are Low After Stroke and Predicted by Clinical Factors. Stroke 2023, 54, 831–839.

6. Barth, J.; Geed, S.; Mitchell, A.; Brady, K.P.; Giannetti, M.L.; Dromerick, A.W.; Edwards, D.F. The Critical Period After Stroke Study (CPASS) Upper Extremity Treatment Protocol. Arch. Rehabil. Res. Clin. Transl. 2023, 5, 100282.

7. Kwakkel, G.; Stinear, C.; Essers, B.; Munoz-Novoa, M.; Branscheidt, M.; Cabanas-Valdés, R.; Lakičević, S.; Lampropoulou, S.; Luft, A.R.; Marque, P. Motor rehabilitation after stroke: European Stroke Organisation (ESO) consensus-based definition and guiding framework. Eur. Stroke J. 2023, 8, 880–894.

8. Bernhardt, J.; Hayward, K.S.; Kwakkel, G.; Ward, N.S.; Wolf, S.L.; Borschmann, K.; Krakauer, J.W.; Boyd, L.A.; Carmichael, S.T.; Corbett, D.; et al. Agreed definitions and a shared vision for new standards in stroke recovery research: The Stroke Recovery and Rehabilitation Roundtable taskforce. Int. J. Stroke 2017, 12, 444–450.

9. Wolf, S.L.; Kwakkel, G.; Bayley, M.; McDonnell, M.N. Best practice for arm recovery post stroke: An international application. Physiotherapy 2016, 102, 1–4.

10. Steins, D.; Dawes, H.; Esser, P.; Collett, J. Wearable accelerometry-based technology capable of assessing functional activities in neurological populations in community settings: A systematic review. J. Neuroeng. Rehabil. 2014, 11, 36.

11. Jim, E.A.; Utsha, M.A.H.; Aurna, F.N.; Choudhury, A.; Hoque, M.A. Towards Safer Aging: A Comprehensive Edge Computing Approach to Unconsciousness and Fall Detection. In Proceedings of the 2025 International Conference on Electrical, Computer and Communication Engineering (ECCE), Chittagong, Bangladesh, 13–15 February 2025; pp. 1–6.

12. Geed, S. Towards measuring the desired neurorehabilitation outcomes directly with accelerometers and machine learning. Dev. Med. Child Neurol. 2024, 66, 1274–1275.

13. Geed, S.; Grainger, M.L.; Mitchell, A.; Anderson, C.C.; Schmaulfuss, H.L.; Culp, S.A.; McCormick, E.R.; McGarry, M.R.; Delgado, M.N.; Noccioli, A.D.; et al. Concurrent validity of machine learning-classified functional upper extremity use from accelerometry in chronic stroke. Front. Physiol. 2023, 14, 1116878.

14. Sequeira, S.B.; Grainger, M.L.; Mitchell, A.M.; Anderson, C.C.; Geed, S.; Lum, P.; Giladi, A.M. Machine Learning Improves Functional Upper Extremity Use Capture in Distal Radius Fracture Patients. Plast. Reconstr. Surg. Glob. Open 2022, 10, e4472.

15. Barth, J.; Geed, S.; Mitchell, A.; Lum, P.S.; Edwards, D.F.; Dromerick, A.W. Characterizing upper extremity motor behavior in the first week after stroke. PLoS ONE 2020, 15, e0221668.

16. Mathew, S.P.; Dawe, J.; Musselman, K.E.; Petrevska, M.; Zariffa, J.; Andrysek, J.; Biddiss, E. Measuring functional hand use in children with unilateral cerebral palsy using accelerometry and machine learning. Dev. Med. Child Neurol. 2024, 66, 1380–1389.

17. Tran, T.; Chang, L.C.; Lum, P. Functional Arm Movement Classification in Stroke Survivors Using Deep Learning with Accelerometry Data. In Proceedings of the IEEE International Conference on Big Data, Sorrento, Italy, 15–18 December 2023.

18. Dobkin, B.H.; Martinez, C. Wearable Sensors to Monitor, Enable Feedback, and Measure Outcomes of Activity and Practice. Curr. Neurol. Neurosci. Rep. 2018, 18, 87.

19. Aziz, O.; Park, E.J.; Mori, G.; Robinovitch, S.N. Distinguishing the causes of falls in humans using an array of wearable tri-axial accelerometers. Gait Posture 2014, 39, 506–512.

20. Gebruers, N.; Vanroy, C.; Truijen, S.; Engelborghs, S.; De Deyn, P.P. Monitoring of physical activity after stroke: A systematic review of accelerometry-based measures. Arch. Phys. Med. Rehabil. 2010, 91, 288–297.

21. Clark, E.; Podschun, L.; Church, K.; Fleagle, A.; Hull, P.; Ohree, S.; Springfield, M.; Wood, S. Use of accelerometers in determining risk of falls in individuals post-stroke: A systematic review. Clin. Rehabil. 2023, 37, 1467–1478.

22. Peters, D.M.; O’Brien, E.S.; Kamrud, K.E.; Roberts, S.M.; Rooney, T.A.; Thibodeau, K.P.; Balakrishnan, S.; Gell, N.; Mohapatra, S. Utilization of wearable technology to assess gait and mobility post-stroke: A systematic review. J. Neuroeng. Rehabil. 2021, 18, 67.

23. Nieto, E.M.; Lujan, E.; Mendoza, C.A.; Arriaga, Y.; Fierro, C.; Tran, T.; Chang, L.-C.; Gurovich, A.N.; Lum, P.S.; Geed, S. Accelerometry and the Capacity–Performance Gap: Case Series Report in Upper-Extremity Motor Impairment Assessment Post-Stroke. Bioengineering 2025, 12, 615.

24. Urbin, M.A.; Waddell, K.J.; Lang, C.E. Acceleration metrics are responsive to change in upper extremity function of stroke survivors. Arch. Phys. Med. Rehabil. 2015, 96, 854–861.

25. Lum, P.S.; Shu, L.; Bochniewicz, E.M.; Tran, T.; Chang, L.C.; Barth, J.; Dromerick, A.W. Improving Accelerometry-Based Measurement of Functional Use of the Upper Extremity After Stroke: Machine Learning Versus Counts Threshold Method. Neurorehabil. Neural Repair 2020, 34, 1078–1087.

26. Srinivasan, S.; Amonkar, N.; Kumavor, P.D.; Bubela, D. Measuring Upper Extremity Activity of Children with Unilateral Cerebral Palsy Using Wrist-Worn Accelerometers: A Pilot Study. Am. J. Occup. Ther. 2024, 78, 7802180050.

27. Dawe, J.; Yang, J.F.; Fehlings, D.; Likitlersuang, J.; Rumney, P.; Zariffa, J.; Musselman, K.E. Validating Accelerometry as a Measure of Arm Movement for Children with Hemiplegic Cerebral Palsy. Phys. Ther. 2019, 99, 721–729.

28. Bailey, D.P.; Ahmed, I.; Cooper, D.L.; Finlay, K.A.; Froome, H.M.; Nightingale, T.E.; Romer, L.M.; Goosey-Tolfrey, V.L.; Ferrandino, L. Validity of a wrist-worn consumer-grade wearable for estimating energy expenditure, sedentary behaviour, and physical activity in manual wheelchair users with spinal cord injury. Disabil. Rehabil. Assist. Technol. 2025, 20, 708–714.

29. Khan, M.U.G.; Zhang, L.; Gotoh, Y. Human Focused Video Description. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 1480–1487.

30. Hanckmann, P.; Schutte, K.; Burghouts, G.J. Automated Textual Descriptions for a Wide Range of Video Events with 48 Human Actions. In Proceedings of the Computer Vision—ECCV 2012. Workshops and Demonstrations, Florence, Italy, 7–13 October 2012; pp. 372–380.

31. Ramanishka, V.; Das, A.; Park, D.H.; Venugopalan, S.; Hendricks, L.A.; Rohrbach, M.; Saenko, K. Multimodal Video Description. In Proceedings of the 24th ACM International Conference on Multimedia (MM ’16), Amsterdam, The Netherlands, 15–19 October 2016; pp. 1092–1096.

32. Hori, C.; Hori, T.; Lee, T.-Y.; Zhang, Z.; Harsham, B.; Hershey, J.R.; Marks, T.K.; Sumi, K. Attention-Based Multimodal Fusion for Video Description. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4193–4202.

33. Thomason, J.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Mooney, R. Integrating Language and Vision to Generate Natural Language Descriptions of Videos in the Wild. In Proceedings of the COLING 2014: The 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 1218–1227.

34. Wang, X.; Chen, W.; Wu, J.; Wang, Y.-F.; Wang, W.Y. Video Captioning via Hierarchical Reinforcement Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4213–4222.

35. Li, L.; Hu, K. Text Description Generation from Videos via Deep Semantic Models. In Proceedings of the 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Tokyo, Japan, 14–17 December 2021; pp. 1551–1555.

36. Chen, L.; Wei, X.; Li, J.; Dong, X.; Zhang, P.; Zang, Y.; Chen, Z.; Duan, H.; Tang, Z.; Yuan, L.; et al. ShareGPT4Video: Improving Video Understanding and Generation with Better Captions. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2024; pp. 19472–19495.

37. Allappa, S.S.; Thenkanidiyoor, V.; Dinesh, D.A. Video Activity Recognition Using Sequence Kernel Based Support Vector Machines. In Proceedings of the International Conference on Pattern Recognition Applications and Methods, Madeira, Portugal, 16–18 January 2018; pp. 164–185.

38. de Almeida Maia, H.; Ttito Concha, D.; Pedrini, H.; Tacon, H.; de Souza Brito, A.; de Lima Chaves, H.; Bernardes Vieira, M.; Moraes Villela, S. Action Recognition in Videos Using Multi-Stream Convolutional Neural Networks. In Deep Learning Applications; Springer: New York, NY, USA, 2020; pp. 95–111.

39. Kulbacki, M.; Segen, J.; Chaczko, Z.; Rozenblit, J.W.; Kulbacki, M.; Klempous, R.; Wojciechowski, K. Intelligent Video Analytics for Human Action Recognition: The State of Knowledge. Sensors 2023, 23, 4258.

40. Ullah, H.A.; Letchmunan, S.; Zia, M.S.; Butt, U.M.; Hassan, F.H. Analysis of Deep Neural Networks for Human Activity Recognition in Videos—A Systematic Literature Review. IEEE Access 2021, 9, 126366–126387.

41. Soleimani, F.; Khodabandelou, G.; Chibani, A.; Amirat, Y. Activity Recognition via Multimodal Large Language Models and Riemannian Optimization. HAL Preprint. 2025. Available online: https://hal.science/hal-04943796v1/file/Main_V0.pdf (accessed on 18 August 2025).

42. Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition Using Wearable Sensors. IEEE Commun. Surv. Tutor. 2012, 15, 1192–1209.

43. Shakya, S.R.; Zhang, C.; Zhou, Z. Comparative Study of Machine Learning and Deep Learning Architecture for Human Activity Recognition Using Accelerometer Data. Int. J. Mach. Learn. Comput. 2018, 8, 577–582.

44. Chernbumroong, S.; Atkins, A.S.; Yu, H. Activity Classification Using a Single Wrist-Worn Accelerometer. In Proceedings of the 2011 5th International Conference on Software, Knowledge Information, Industrial Management and Applications (SKIMA) Proceedings, Benevento, Italy, 8–11 September 2011; pp. 1–6.

45. Panwar, M.; Dyuthi, S.R.; Prakash, K.C.; Biswas, D.; Acharyya, A.; Maharatna, K.; Gautam, A.; Naik, G.R. CNN Based Approach for Activity Recognition Using a Wrist-Worn Accelerometer. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Republic of Korea, 11–15 July 2017; pp. 2438–2441.

46. Uswatte, G.; Taub, E.; Morris, D.P.P.T.; Light, K.P.P.T.; Thompson, P.A. The Motor Activity Log-28: Assessing Daily Use of the Hemiparetic Arm after Stroke. Neurology 2006, 67, 1189–1194.

47. Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-Training with Frozen Image Encoders and Large Language Models. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 19730–19742.

48. Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The LLaMA 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

49. Jin, Y.; Li, J.; Zhang, J.; Hu, J.; Gan, Z.; Tan, X.; Liu, Y.; Wang, Y.; Wang, C.; Ma, L. LLaVA-VSD: Large Language-and-Vision Assistant for Visual Spatial Description. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 11420–11425.

50. Wang, J.; Yang, Z.; Hu, X.; Li, L.; Lin, K.; Gan, Z.; Liu, Z.; Liu, C.; Wang, L. GIT: A Generative Image-to-Text Transformer for Vision and Language. arXiv 2022, arXiv:2205.14100.

51. Ding, N.; Tang, Y.; Fu, Z.; Xu, C.; Han, K.; Wang, Y. GPT4IMAGE: Can Large Pre-Trained Models Help Vision Models on Perception Tasks? arXiv 2023, arXiv:2306.00693.

52. Xia, M.; Wu, Z. Dual-Encoder-Based Image-Text Fusion Algorithm. In Proceedings of the International Conference on Image Processing and Artificial Intelligence (ICIPAI 2024), Suzhou, China, 19–21 April 2024; pp. 204–210.

53. Tran, M.-N.; To, T.-A.; Thai, V.-N.; Cao, T.-D.; Nguyen, T.-T. AGAIN: A Multimodal Human-Centric Event Retrieval System Using Dual Image-to-Text Representations. In Proceedings of the 12th International Symposium on Information and Communication Technology, Hochiminh City, Vietnam, 7–8 December 2023; pp. 931–937.

54. Hu, E.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

55. Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 11106–11115.

| Model | Primary Modality | Multimodal Support | Key Features |
|---|---|---|---|
| BLIP-2 [47] | Vision-Language | Yes | Pre-trained for vision-language bridging, strong captioning performance |
| LLaMA-3 [48] | Language | Indirect (via integration) | Can be paired with vision encoders for multimodal applications |
| LLaVA [49] | Language (with vision encoder) | Yes | Chat-style multimodal model with instruction-following capability |
| GIT [50] | Vision-Language | Yes | Unified model for image captioning and VQA using transformer |
| GPT-4V [51] | Language (closed weights) | Yes | Strong zero-shot capabilities on visual reasoning tasks |
| DUAL-LLM [52] | Vision-Language | Yes | Two-stream architecture optimized for image captioning |
| AGAIN [53] | Vision-Language | Yes | Focuses on grounding and alignment between vision and text |

| Frame | Hand Activity | Background | Activity Description |
|---|---|---|---|
| (image) | The subject’s hands are grasping a piece of fabric, possibly a shirt or dress, as they move through a room. | The background of the image is a white room with a bed and dresser, suggesting a bedroom or hospital room. | The subject appears to be in the process of putting on the garment, with their hands holding the fabric and their body moving through the room, possibly indicating that they are getting dressed or changing clothes. |
| (image) | The hands are holding a laundry basket with a grey garment in it. | The hands are holding a laundry basket with a grey garment in it, in a room with white walls and a white ceiling. | The subject is in a home setting, doing a daily task of folding laundry, as they hold a laundry basket with a grey garment in it. |
| (image) | The first chunk describes the hand motion as “two hands reaching out towards a table from the bottom of the frame.” | The second chunk describes the background associated with the hands as “the hands are positioned in front of a round table in a room.” | The third chunk describes the subject’s activity in a home setting as “the subject is preparing a meal, likely in a kitchen, with the hands reaching for a bowl.” |
| (image) | The first chunk describes the hand’s action: “The hand is holding a pen and writing on a piece of paper.” | The background shows a room with a white ceiling, a black TV, and a white wall. | The third chunk describes the activity in a home setting: “The subject is writing on a piece of paper, likely doing homework or taking notes in a home setting.” |
| (image) | The subject is holding a green object in their hand, with their wrist bent in a way that suggests they are using it to point at something. | The background appears to be a room with white walls and a white ceiling, possibly a classroom or office setting. | The subject is holding a green object in their hand, with their wrist bent in a way that suggests they are using it to point at something..... |

| Frame | Prompt 1 | Prompt 2 |
|---|---|---|
| (image) | The person in the image appears to be engaged in household activities, specifically opening a plastic container at a table. | Left hand: Grabbing. What is the left hand holding: A container lid. Right hand: Grabbing. What is the right hand holding: A container |
| (image) | The person is doing laundry. They are carrying a laundry basket filled with clothes. | Left hand: Grabbing. What is the left hand holding: A laundry basket. Right hand: Grabbing. What is the right hand holding: A laundry basket. |
| (image) | The person in the image appears to be engaged in a kitchen-related activity. They are standing at a counter, placing or handling a bottle. This setup, with cleaning supplies and dishware present, suggests they might be tidying up or preparing the area for another task. The presence of a camera harness indicates that their actions are being recorded, likely for documenting or broadcasting their activities. | Left hand: Grabbing. What is the left hand holding: A transparent bottle. Right hand: Not grabbing. What is the right hand holding: Nothing. |
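
The Prompt 2 responses in the table above follow a fixed "field: value" pattern, which makes them easier to turn into labels than the free-text Prompt 1 responses. The following is a minimal sketch of how such a response might be parsed, assuming the model always uses the exact wording shown in the table. The function name `parse_hand_response` and the output field names are hypothetical and are not taken from the paper.

```python
import re

# Pattern mirrors the wording of the Prompt 2 responses shown in the table.
# Assumption: every response uses exactly these four fields in this order.
HAND_PATTERN = re.compile(
    r"Left hand:\s*(?P<left_state>.+?)\.\s*"
    r"What is the left hand holding:\s*(?P<left_object>.+?)\.\s*"
    r"Right hand:\s*(?P<right_state>.+?)\.\s*"
    r"What is the right hand holding:\s*(?P<right_object>.+?)\.?\s*$",
    re.IGNORECASE,
)


def parse_hand_response(text):
    """Convert one structured response into a dict, or None if it does not match."""
    match = HAND_PATTERN.search(text.strip())
    if match is None:
        return None  # free-text or malformed response; caller decides what to do
    fields = match.groupdict()
    return {
        "left_grabbing": fields["left_state"].strip().lower() == "grabbing",
        "left_object": fields["left_object"].strip(),
        "right_grabbing": fields["right_state"].strip().lower() == "grabbing",
        "right_object": fields["right_object"].strip(),
    }


example = (
    "Left hand: Grabbing. What is the left hand holding: A transparent bottle. "
    "Right hand: Not grabbing. What is the right hand holding: Nothing."
)
parsed = parse_hand_response(example)
```

Returning `None` for non-matching text is a deliberate choice in this sketch: it lets a caller count or inspect responses that drift from the expected format instead of silently mislabeling them.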

| Task | X Mean | X Std | X Median | X ZCR | Y Mean | Y Std | Y Median | Y ZCR | Z Mean | Z Std | Z Median | Z ZCR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Grab 1 | −0.358 | 0.344 | −0.346 | 2.125 | −0.311 | 0.685 | −0.626 | 0.250 | 0.418 | 0.333 | 0.358 | 0.125 |
| Grab 2 | −0.096 | 0.376 | 0.069 | 2.500 | 0.209 | 0.690 | 0.627 | 0.375 | 0.550 | 0.297 | 0.526 | 0.125 |
| Grab 3 | −0.274 | 0.139 | −0.276 | 0.805 | −0.983 | 0.181 | −0.953 | 0.000 | 0.128 | 0.163 | 0.119 | 0.201 |
| Grab 4 | −0.375 | 0.190 | −0.399 | 0.286 | −0.634 | 0.428 | −0.787 | 0.857 | 0.427 | 0.314 | 0.393 | 0.143 |
| Laundry | −0.562 | 0.376 | −0.580 | 1.394 | −0.435 | 0.433 | −0.448 | 0.758 | 0.409 | 0.332 | 0.354 | 0.015 |
| Eat | −0.454 | 0.515 | −0.566 | 1.160 | 0.144 | 0.415 | 0.145 | 1.837 | 0.553 | 0.277 | 0.530 | 0.032 |
| Walk 1 | −0.470 | 0.206 | −0.425 | 0.000 | −0.823 | 0.311 | −0.887 | 0.529 | 0.244 | 0.139 | 0.249 | 0.059 |
| Walk 2 | −0.408 | 0.207 | −0.396 | 0.284 | −1.027 | 0.287 | −0.999 | 0.023 | 0.120 | 0.173 | 0.106 | 0.011 |
| Walk 3 | −0.376 | 0.188 | −0.369 | 0.800 | −0.998 | 0.253 | −0.965 | 0.000 | −0.009 | 0.156 | −0.027 | 0.015 |
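
The table above reports four summary statistics per accelerometer axis for each task segment. The sketch below shows one way such per-axis features could be computed from a segment of tri-axial samples. Several details are assumptions, since this section does not specify them: the zero-crossing rate (ZCR) is taken as sign changes per second, the standard deviation is the population form, and the names `axis_features`, `zero_crossing_rate`, and `fs_hz` are illustrative only.

```python
from statistics import mean, median, pstdev


def zero_crossing_rate(samples, fs_hz):
    """Sign changes per second (assumed definition of ZCR)."""
    # Drop exact zeros so a sample sitting on zero is not counted as two crossings.
    signs = [1 if value > 0 else -1 for value in samples if value != 0]
    crossings = sum(1 for a, b in zip(signs, signs[1:]) if a != b)
    duration_s = len(samples) / fs_hz
    return crossings / duration_s


def axis_features(segment, fs_hz):
    """Compute mean, std, median, and ZCR for each axis.

    segment: non-empty sequence of (x, y, z) acceleration samples for one task.
    fs_hz:   sampling rate in Hz (hypothetical parameter; use the device's rate).
    """
    features = {}
    for axis_name, values in zip("XYZ", zip(*segment)):
        features[axis_name] = {
            "mean": mean(values),
            "std": pstdev(values),  # population std; the paper's choice is not stated
            "median": median(values),
            "zcr": zero_crossing_rate(values, fs_hz),
        }
    return features
```

A per-second ZCR is used here because several values in the table exceed 1, which a per-sample fraction could not produce; a different normalization may have been used in the original analysis.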

| Patient ID | p006 | p007 | p008 | p009 |
|---|---|---|---|---|
| Age (years) | 76 | 68 | 46 | 71 |
| Sex | Female | Male | Male | Male |
| Race | AA | White | White | White |
| Ethnicity | Hispanic | Hispanic | Hispanic | Hispanic |
| Stroke type | Ischemic | Ischemic | Ischemic | Ischemic |
| Months post-stroke | 126.9 | 7.5 | 26.2 | 37.5 |
| Affected arm | Dominant | Dominant | Dominant | Dominant |
| Concordance | Concordant | Concordant | Concordant | Concordant |
| NIHSS motor arm (Impaired) | 3 | 2 | 1 | 1 |
| NIHSS motor arm (Unimpaired) | 0 | 0 | 0 | 0 |
| ARAT (Impaired) | 9 | 40 | 56 | 50 |
| ARAT (Unimpaired) | 56 | 57 | 57 | 57 |
| UEFM (Impaired) | 22 | 48 | 60 | 58 |
| UEFM (Unimpaired) | 66 | 66 | 66 | 66 |

| Patient ID | Std Dev (Working Hand) | Std Dev (Impaired Hand) | Difference |
|---|---|---|---|
| p006 | 0.754 | 0.409 | 0.344 |
| p007 | 0.498 | 0.428 | 0.070 |
| p008 | 0.502 | 0.449 | 0.053 |
| p009 | 0.478 | 0.427 | 0.050 |
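
The Difference column in the table above is the working-hand standard deviation minus the impaired-hand standard deviation. As a rough illustration, the sketch below computes that asymmetry from two signals and then recomputes it from the rounded table values. The function name `std_asymmetry` is hypothetical, the population standard deviation is an assumption, and which signal the standard deviation was taken over is not stated in this section.

```python
from statistics import pstdev


def std_asymmetry(working, impaired):
    """Return (std_working, std_impaired, difference) for two sample sequences.

    Assumption: population standard deviation over whatever acceleration
    signal is chosen for each wrist.
    """
    sd_working = pstdev(working)
    sd_impaired = pstdev(impaired)
    return sd_working, sd_impaired, sd_working - sd_impaired


# Rounded values copied from the table: (working hand, impaired hand).
reported = {
    "p006": (0.754, 0.409),
    "p007": (0.498, 0.428),
    "p008": (0.502, 0.449),
    "p009": (0.478, 0.427),
}

# Differences recomputed from rounded inputs can be off by 0.001 relative to
# the table, which was presumably computed before rounding.
differences = {
    pid: round(working - impaired, 3)
    for pid, (working, impaired) in reported.items()
}
largest_gap = max(differences, key=differences.get)
```

In the table, p006 shows the largest gap between hands and also has the lowest impaired-side ARAT and UEFM scores among the four participants.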

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hoque, M.A.; Ehsan, S.; Choudhury, A.; Lum, P.; Akbar, M.; Geed, S.; Hossain, M.S. Toward Sensor-to-Text Generation: Leveraging LLM-Based Video Annotations for Stroke Therapy Monitoring. Bioengineering 2025, 12, 922. https://doi.org/10.3390/bioengineering12090922
