1. Introduction

Brain tumor segmentation is clinically indispensable for treatment planning and research in neuro-oncology. Following radiological diagnosis, accurate tumor segmentation enables precise boundary delineation, volumetric quantification, and therapy response assessment. These tasks directly inform clinical management strategies, including surgical resection margins, radiation targeting, and chemotherapy regimen optimization [

1,

2], while manual segmentation performed by expert radiologists provides high-quality results, it is labor-intensive, time-consuming, and highly dependent on the expertise of the annotator. As the volume of medical imaging data increases due to advances in imaging technologies and the need for more frequent monitoring, manual segmentation becomes increasingly impractical. Furthermore, in regions with limited access to specialized medical personnel, manual segmentation is often not feasible, exacerbating the problem in clinical practice.

In response to these challenges, automatic brain tumor segmentation using deep learning (DL) techniques has become an active area of research. Deep learning-based methods, particularly convolutional neural networks (CNNs), have shown remarkable success in medical image segmentation tasks, outperforming traditional image processing techniques [

3,

4,

5,

6,

7,

8,

9,

10]. These methods are capable of automatically learning hierarchical feature representations from raw image data, making them more adaptable to diverse segmentation tasks involving different anatomical structures and imaging modalities. In particular, U-Net [

11] and its variants [

12,

13,

14,

15] have become the go-to models for medical image segmentation due to their ability to work effectively with relatively small datasets, a common limitation in medical imaging.

Despite the successes of CNN-based models, several challenges remain in the field of medical image segmentation. One of the most significant hurdles is the lack of large, diverse annotated datasets. Medical image segmentation models are typically trained on limited datasets, which can lead to over-fitting and poor generalization [

16,

17]. Additionally, medical images often suffer from variations in acquisition conditions (e.g., scanner type, acquisition protocol) that can make it difficult for models trained on one dataset to generalize to another. To mitigate these issues, researchers have turned to transfer learning and pre-trained models [

18] to leverage larger, more diverse datasets, such as those from natural images, as a starting point.

The recent introduction of the “Segment Anything Model” (SAM) has demonstrated significant potential in general image segmentation tasks, though its clinical applicability requires further validation. SAM is a foundation model trained on an enormous dataset of 11 million images and 1 billion masks, which provides it with significant versatility. SAM can handle multiple types of input prompts, including points, boxes, and text, making it highly adaptable to a wide range of segmentation tasks [

19]. However, despite its success in natural image segmentation, SAM has limitations when applied to medical images, particularly due to the substantial differences between natural and medical image modalities. Medical images, such as MRI scans, contain complex structures with significant variations in anatomical and pathological features across patients, which SAM may not be equipped to handle directly [

20].

To address these challenges, a medical variant of SAM, called MedSAM, was developed [

21]. MedSAM is fine-tuned on a large-scale medical dataset consisting of over a million annotated medical images, and it shows significant improvements over the original SAM model when applied to medical image segmentation tasks. By leveraging domain-specific features and anatomical priors, MedSAM performs better in tasks such as brain tumor segmentation, where multi-modal data is often required to capture all relevant features. Despite these advances, the application of SAM-based models to multi-modal medical imaging remains an open challenge, particularly when dealing with missing modalities.

To effectively apply the SAM model to multimodal data, effective feature fusion strategies play a crucial role in improving the performance of multi-modal segmentation models. Feature fusion methods aim to combine complementary information from multiple modalities in a way that enhances the model’s ability to make accurate predictions. Attention mechanisms have proven to be particularly effective in this regard. For instance, the Squeeze-and-Excitation (SE) module [

22] allows the network to adaptively recalibrate feature channels, while cross-attention mechanisms can focus on the most relevant features for segmentation. These methods help ensure that the network learns to prioritize the most informative features from each modality, which is particularly beneficial when working with multi-modal MRI data [

23].

In clinical practice, MRI data is often incomplete due to missing modalities (e.g., T1, T2, FLAIR, or T1gd), which may result from image corruption, artifacts, acquisition protocols, patient allergies to contrast agents, or cost considerations [

24]. This poses a significant challenge for multi-modal learning, where the model needs to handle missing data during training and inference without compromising the segmentation performance. Several strategies have been proposed to address the missing modality problem. One approach is to simply replace missing modalities with zeros or random values [

25], though this does not provide meaningful information and may result in poor performance. More advanced methods aim to synthesize the missing modality using generative models [

26,

27], but generating high-quality synthetic data remains a significant challenge. Another approach is to rely on feature sharing between modalities, where models learn shared representations from existing modalities to minimize the loss of information due to missing data [

23].

This paper proposes a multi-modal SAM model (MSAM) for brain tumor segmentation, with the following key contributions:

Extension of SAM to Multi-Modal MRI Data: We extend the SAM model to work with multi-modal MRI data, experimentally validating the effectiveness of different modality combinations for tumor segmentation. Our results show that structural MRI modalities (T1, T2, T1gd, FLAIR) significantly improve segmentation performance, particularly for enhancing tumors segmentation.

Robust Feature Fusion Strategy: We propose a robust feature fusion strategy within the MSAM framework, which is capable of integrating information from multiple modalities effectively. The model is able to maintain high segmentation accuracy even when one modality is missing, making it more adaptable to real-world clinical scenarios where modality availability is often inconsistent.

Missing Modality Training Strategy: We introduce a novel training strategy that improves the model’s ability to handle missing modalities. By simulating missing modalities during training, our model learns to compensate for the absence of critical information and achieves improved performance in real-world scenarios where data may be incomplete.

By addressing the challenges posed by multi-modal data and missing modalities, MSAM advances the state-of-the-art in brain tumor segmentation and demonstrates the potential of SAM-based models for medical image analysis.

2. Methods

2.1. Introduction to SAM

The Segment Anything Model (SAM), proposed by Meta AI, is an advanced image segmentation model consisting of three core components: the image encoder, the prompt encoder, and the mask decoder. The image encoder transforms the input image into feature maps, the prompt encoder encodes various types of prompts (such as points, boxes, text, etc.), and the decoder generates the segmentation mask for the input image based on the image feature embeddings and prompt encoding embeddings.

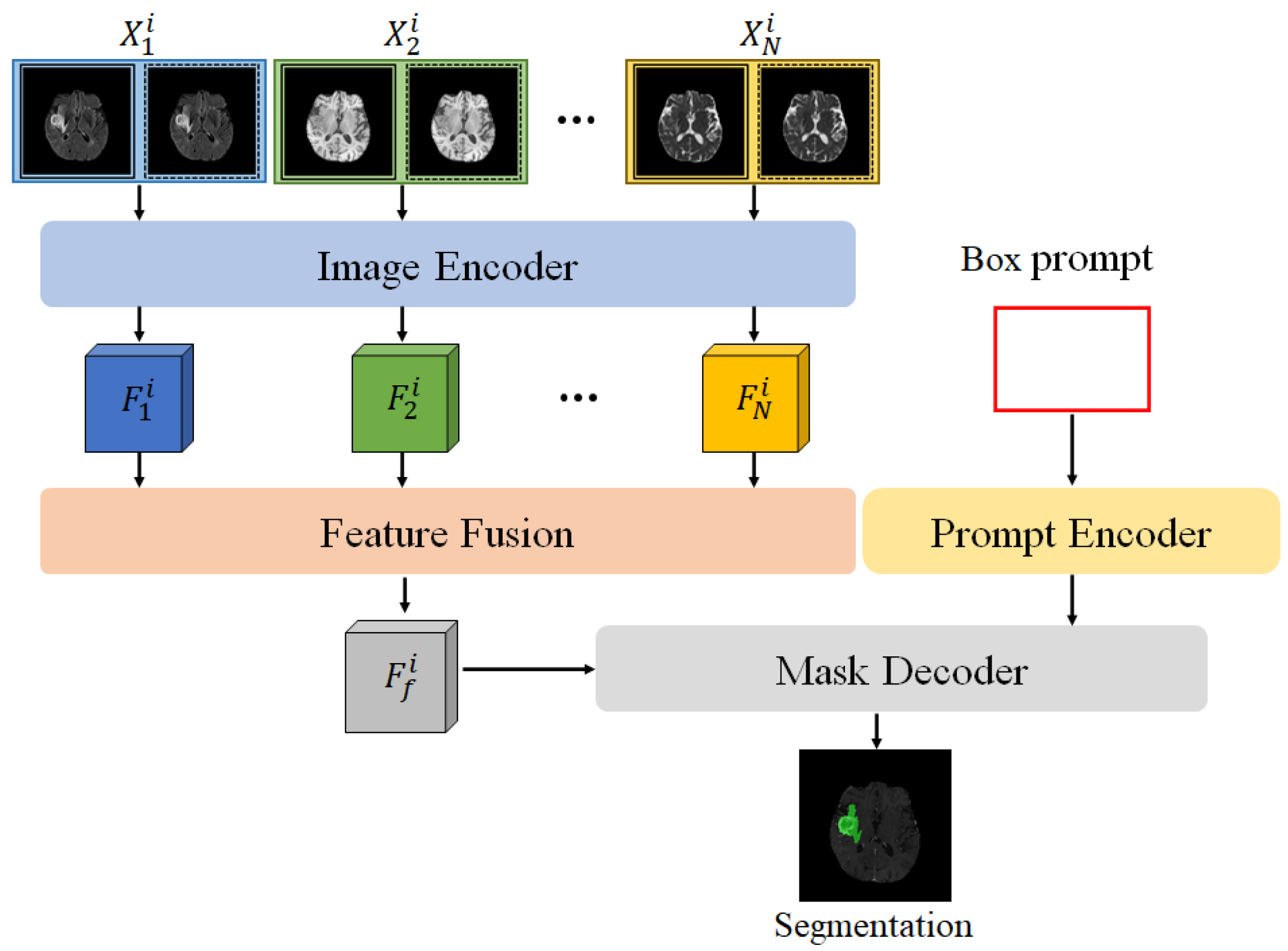

In the multi-modal SAM model (MSAM) proposed in this paper, the SAM framework is extended, as shown in

Figure 1. Unlike the original SAM, MSAM first applies the encoder to perform feature encoding on multi-modal MRI data (

) to obtain feature representations for each modality, denoted as

. To effectively fuse the multi-modal features and adapt them for SAM’s mask decoder, we design a Feature Fusion Block (FFB), which combines the multiple feature maps

into a comprehensive multi-modal fused feature

.

2.2. Feature Fusion Block

The Feature Fusion Block aims to integrate multi-modal features from the SAM encoder, emphasizing tumor-relevant features and enhancing segmentation accuracy in the decoder. Common fusion techniques, such as average and max pooling, are limited: average pooling may dilute important features, while max pooling overlooks subtle yet crucial information.

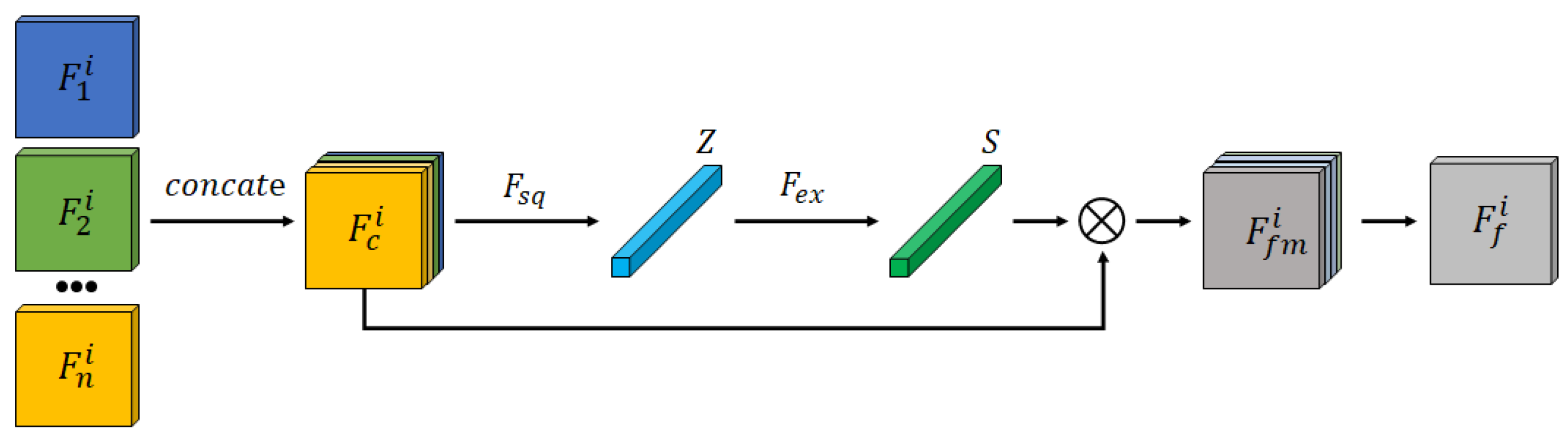

The Feature Fusion Block structure is depicted in

Figure 2. Encoded features from different modalities are first concatenated and input into a channel attention mechanism to generate a fused feature representation,

. This recalibrates feature weights to enhance tumor segmentation accuracy.

To effectively capture the inter-dependencies between different feature channels and optimize fusion outcomes, a channel attention mechanism is incorporated into the Feature Fusion Module, as illustrated in

Figure 2. Specifically, the different modality features extracted by the encoder,

, where

, are concatenated into a new feature map

. Global Average Pooling is then applied over the spatial dimensions

of the feature map to generate a weight vector

, where the

k-th element is calculated as follows:

Next, a two-layer fully connected network captures channel correlation:

where

is the ReLU activation function,

denotes Sigmoid, and

are weight matrices. This network generates channel attention weights

s, applied as follows:

The final fused feature map is then obtained using a residual layer, yielding a comprehensive feature representation optimized for segmentation.

This fusion approach effectively combines features from different modalities, generating a more expressive fused feature representation than traditional mean or max fusion methods, thereby significantly improving segmentation performance.

2.3. Training and Prediction Methods

In multi-modal model training, the conventional approach is referred to as Full Modality Training (FT). To better handle segmentation tasks with missing modalities, we propose a specialized training method called Missing Modality Training (MT). The Full Modality Training (FT) approach trains on all modalities, whereas the Missing Modality Training (MT) approach adapts the model to perform under conditions of missing data, enhancing its robustness.This approach significantly improves tumor segmentation performance under missing modality conditions without altering the model structure. Through MT training, the Feature Fusion Block can more effectively extract features from available modalities, thereby providing more stable and reliable segmentation features to the subsequent decoder.

For data with missing modality

, we construct substitute data

, where each element

is independently sampled from a uniform distribution between 0 and 1, satisfying:

This random substitution aids the model in generalizing across different missing modality scenarios by providing surrogate data for absent features.

During training, when a modality

is missing, it is not completely replaced by the randomly generated

; instead, it is replaced based on a probability

, as follows:

Here, determines the likelihood of using real versus randomly generated data for a missing modality, enabling controlled adaptation to partial modality information.

In model prediction, we differentiate between Full Modality Prediction (FP) and Missing Modality Prediction (MP). Under FI mode, the model receives input from all modalities; in MI mode, any missing modality is entirely replaced by the corresponding random data to ensure the model’s adaptability to missing modalities.

2.4. Compared Methods

For brain tumor segmentation, several state-of-the-art deep learning approaches have been proposed, including nnU-Net, UNETR, and SwinUNETR, which have demonstrated promising results in medical image segmentation tasks.

nnU-Net (no-new-U-Net) is a fully automated and task-independent framework designed to achieve high performance in medical image segmentation [

14]. nnU-Net adapts its architecture, preprocessing pipeline, and training strategy based on the characteristics of the dataset, including the image modality, size, and the number of classes. This adaptability has allowed nnU-Net to outperform many other methods in various challenges without requiring task-specific fine-tuning. Its robustness and simplicity make it a reliable choice for a wide range of segmentation problems, including brain tumor segmentation in multi-modal MRI datasets.

UNETR (U-Net Transformer) leverages the strengths of both U-Net and Transformer architectures [

28]. By replacing the conventional convolutional blocks in U-Net with self-attention mechanisms from transformers, UNETR is capable of capturing long-range dependencies in the image, making it particularly effective for volumetric data such as MRI scans. The model excels in segmenting complex structures by learning global context and fine-grained local details simultaneously. This hybrid architecture has proven to be effective in various medical imaging tasks, including brain tumor segmentation.

SwinUNETR (Swin Transformer U-Net) integrates the Swin Transformer, a hierarchical vision Transformer that uses shifted windows for efficient image processing, with the U-Net architecture [

29]. This approach takes advantage of the Swin Transformer’s ability to learn both global and local contextual information through hierarchical representations, enhancing segmentation performance in complex medical imaging tasks. SwinUNETR has demonstrated superior performance in brain tumor segmentation, especially when using multi-modal MRI data, by capturing both fine details and high-level features in the images.

These methods represent the cutting edge of deep learning architectures in medical image segmentation, each combining different strengths of convolutional networks, transformers, and self-attention mechanisms to improve the accuracy and efficiency of brain tumor segmentation.

4. Results

To assess the performance of the proposed MSAM model, we conducted a series of comparative experiments, focusing on multi-modal glioma segmentation tasks. These experiments aimed to evaluate the effectiveness of different modality combinations, as well as compare the segmentation performance of MSAM with that of the widely-used U-Net, nnU-Net, UNETR, and SwinUNETR.

4.1. Segmentation Performance

We first investigated the impact of different modality combinations on segmentation performance, particularly the integration of structural modalities and Diffusion Tensor Imaging (DTI) modalities. The goal was to identify the optimal combination of modalities for glioma segmentation.

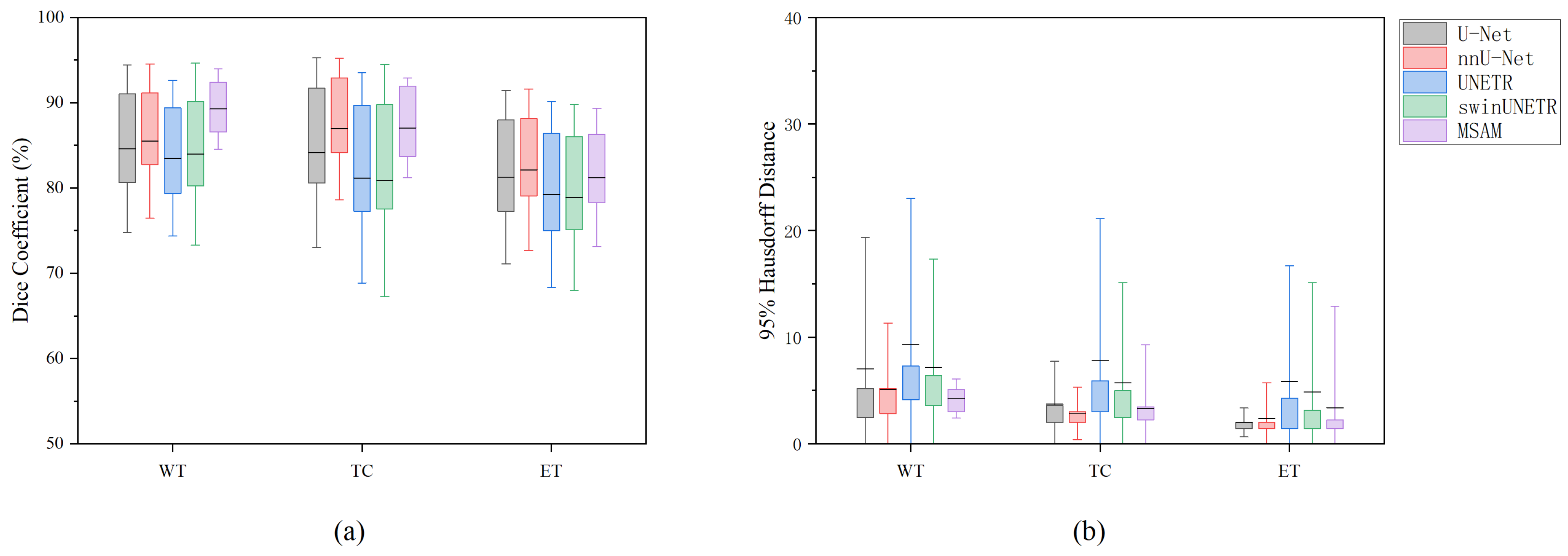

Subsequently, we compared the performance of MSAM with U-Net under various modality combinations. The segmentation performance was evaluated using two key metrics: Dice Similarity Coefficient (DSC) and 95% Hausdorff Distance (HD95). The suffix “-SD” indicates that the method uses both structural modalities and DTI modalities; the suffix “-S” and “-D”indicates that the method just uses the structural Modalities and DTI modalities, respectively. The results are summarized in

Table 1 and

Figure 3.

Under identical modality input conditions, MSAM consistently outperformed U-Net, UNETR, and SwinUNETR add across all three segmentation tasks, as measured by both DSC and HD95. However, for TC and ET segmentation, MSAM performs slightly worse than nnU-Net. Specifically, for the Whole Tumor (WT) and Tumor Core (TC) regions, MSAM exhibited superior segmentation accuracy. The performance difference in the Enhancing Tumor (ET) region was relatively smaller, but MSAM still showed an edge, particularly under the DTI modality condition (MSAM-D). While both models performed poorly when only DTI data were used, MSAM showed a clear advantage in segmenting the ET region under this condition.

The standard deviation of MSAM’s segmentation results was notably lower than that of the compared methods, indicating greater stability and reliability in tumor segmentation tasks. These findings suggest that MSAM provides more consistent and robust performance compared to the others, especially for the segmentation of ET regions.

The experimental results further revealed that the choice of modality input significantly influences segmentation performance. For the WT and TC segmentation tasks, MSAM-S achieved the highest DSC of 89.27% and 87.05%, respectively, with HD95 values of 4.24 and 3.34. For the ET segmentation task, MSAM-S achieved the highest DSC of 81.23%, while MSAM-SD and MSAM-D showed a comparable HD95 value of 2.00. Overall, MSAM-S demonstrated the best performance across all tasks.

When only DTI modality data were used, segmentation performance was poor, particularly for the TC and ET tasks. The performance of the methods under the DTI-only condition was suboptimal, with both models showing significant degradation in segmentation accuracy. This highlights the importance of integrating structural modalities for accurate tumor segmentation.

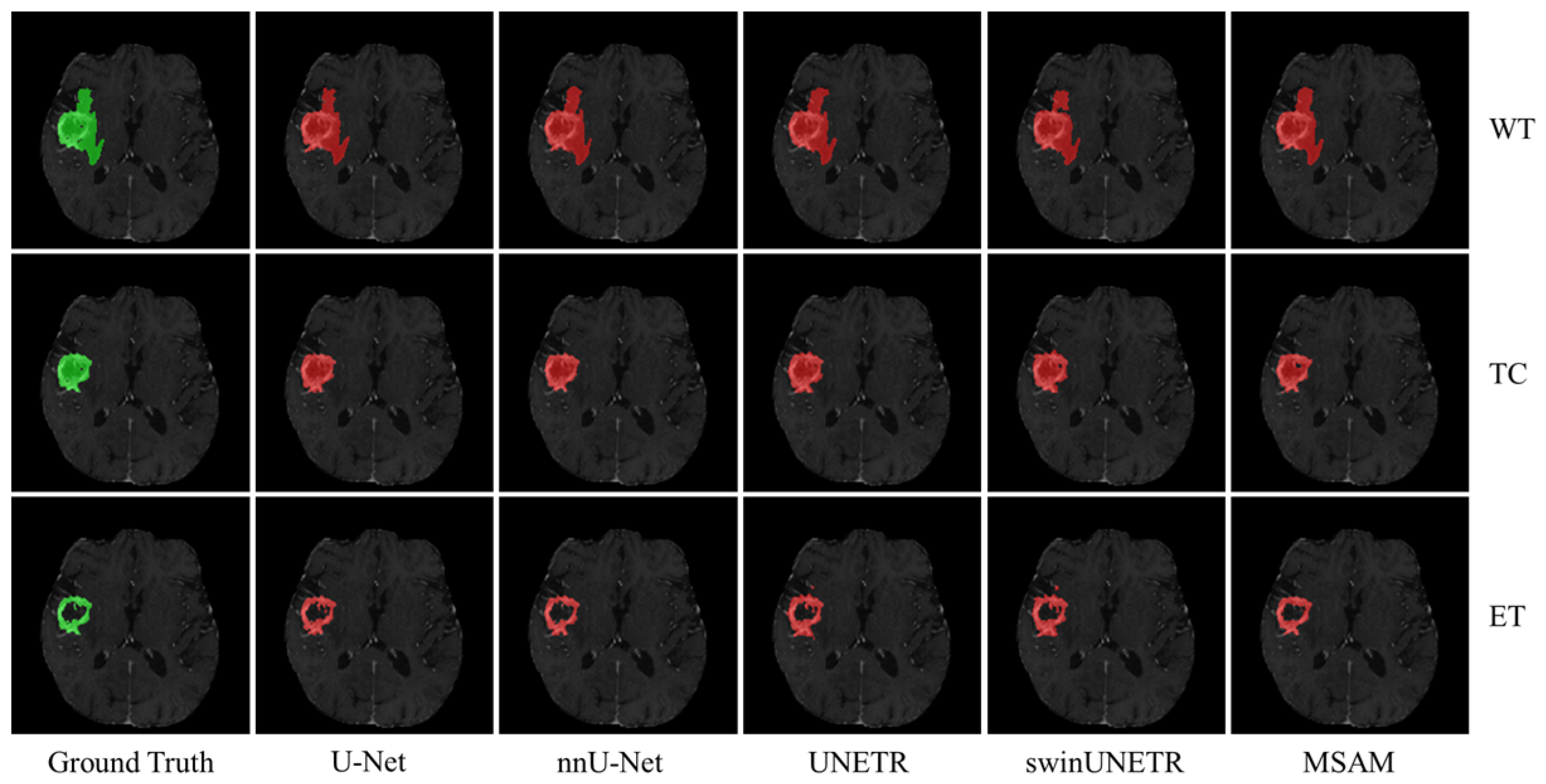

Figure 4 presents qualitative results for the three segmentation tasks using structural modality data as input. The green regions represent the ground truth, while the red areas indicate the corresponding segmentation masks. As shown, MSAM-S’s segmentation results are much closer to the ground truth, particularly in capturing finer details, compared to U-Net-S and UNETR-S, that demonstrate less precise delineation of tumor boundaries. This finding is consistent with the quantitative results summarized in

Table 1.

4.2. Evaluation of FFB

We concurrently evaluated the utility of the FFB framework by performing tumor sub-region segmentation using single-modality data. As shown in

Table 2, the comparative results of different fusion strategies are presented in terms of Dice Similarity Coefficient (DSC) and 95th percentile Hausdorff Distance (HD95).

Using the multimodal fusion model MSAM-S as the baseline, systematic comparisons with eight single-modality models (e.g., SAM-FLAIR using only FLAIR sequences, SAM-T1gd using only T1gd sequences) and two degraded fusion variants (MSAM-SD/MSAM-D) demonstrated the significant advantages of multimodal fusion. The experiments revealed comprehensive performance improvements across all tasks for the multimodal model: Compared to single-modality approaches, MSAM-S achieved a maximum DSC increase of 1.1% (81.23% vs. SAM-T1gd’s 80.13%) in ET segmentation while maintaining a mean HD95 of 2.00 mm, representing a 51.7% improvement in boundary precision over the best single-modality method (SAM-T1gd: 4.14 mm).

Notably, substantial performance variations were observed among single-modality models across different tasks. For instance, while SAM-T1gd demonstrated optimal ET segmentation (DSC = 80.13%), its TC segmentation performance significantly deteriorated (DSC = 85.70% vs. the multimodal baseline of 87.05%). Similarly, SAM-FLAIR achieved 88.88% DSC in WT segmentation but failed to effectively delineate ET regions (DSC = 58.57%). These task-specific sensitivity disparities underscore the critical importance of multimodal complementarity: through feature fusion mechanisms, MSAM-S successfully integrates anatomical information from different imaging sequences, achieving optimal performance balance across all three segmentation tasks.

Further analysis revealed that the degraded model MSAM-D (with spatial alignment module removed) exhibited catastrophic performance degradation in TC segmentation, with DSC plummeting by 17.1 percentage points to 69.95% and HD95 error expanding to 22.05 mm (a 558% increase from baseline). The single-modality model SAM-ad completely failed in complex region segmentation (ET: DSC = 48.85%, HD95 = 36.36 mm), providing counterfactual validation of multimodal necessity. The experiments also exposed inherent limitations of single-modality methods: SAM-T2 produced 11.20 mm HD95 errors in TC segmentation (3.35× the baseline), while SAM-rd showed outlier HD95 values of 25.82 mm in ET segmentation. In contrast, the multimodal approach reduced these errors to clinically acceptable ranges through cross-modal feature calibration.

4.3. Evaluation of Missing Modality Training

We also explored the effect of missing modalities on segmentation performance by training MSAM under conditions where specific modalities were absent. To further enhance model performance under missing modality conditions, a Missing Modality Training (MT) mode was adopted.

Table 3 reports the Dice Score results for various prediction modes under different missing modality conditions. The modes include Full Modality Training and Full Modality Prediction (FTFP), Missing Modality Training and Full Modality Prediction (MTFP), Full Modality Training and Missing Modality Prediction (FTMP) and Missing Modality Training and Missing Modality Prediction (MTMP).

From

Table 3, it is evident that within the same training mode, whether Full Modality Training (FT) or Missing Modality Training (MT), Full Modality Prediction (FI) generally surpasses Missing Modality Prediction (MP) in segmentation performance. Under the FT mode, the FP segmentation results consistently exceed those of MP; in the MT mode, the three FP results (MTFP-T1 for TC, MTFP-FLAIR and MTFP-T1 for ET) are slightly lower than those of MP, but the differences are minimal and not statistically significant after significance testing. These findings indicate that full modality input significantly enhances brain tumor segmentation performance, with each modality playing a crucial role in the segmentation task.

Under Full Modality Prediction (FP), FTFP surpasses MTFP only in the WT segmentation task, with a Dice Score of 89.27%, while MTFP-T2 and MTFP-FLAIR achieve the highest Dice Scores in the TC and ET segmentation tasks, at 87.36% and 81.28%, respectively.

Under Missing Modality Prediction (MP), MTMP outperforms FTMP in the vast majority of cases (11/12), with the sole exception being the WT segmentation task where MTMP-T1’s result is slightly lower than that of FTFI-T1. Different modalities hold varying levels of importance for different tumor regions, with the FLAIR modality being particularly critical for WT segmentation, while the T1gd modality is essential for TC and ET segmentation. The absence of these key modalities significantly degrades the corresponding segmentation results. However, the findings reveal that the Missing Modality Training (MT) mode effectively mitigates the adverse effects of missing key modalities, substantially enhancing Dice Scores by 6.05%, 6.79%, and 18.02%, respectively.

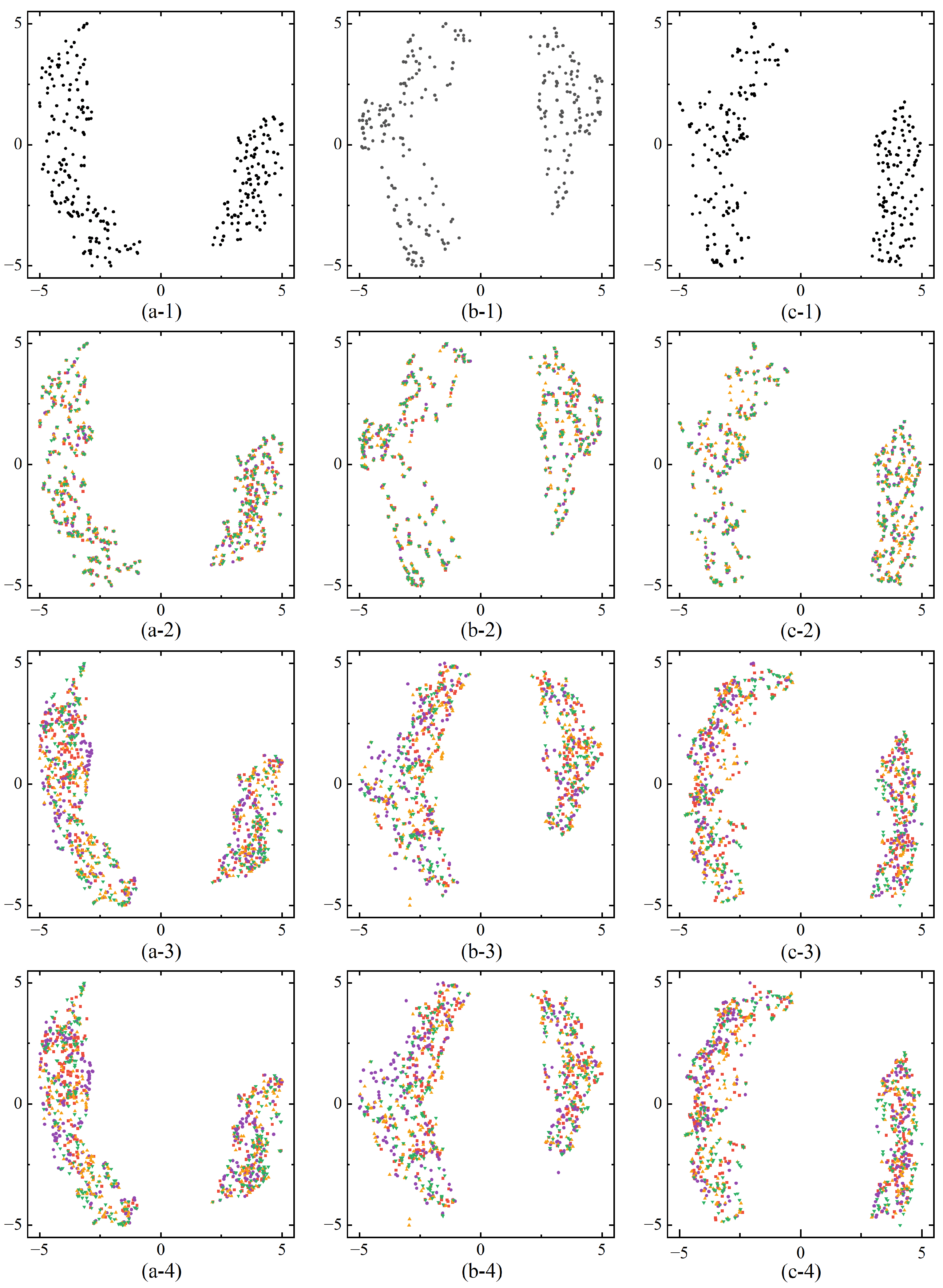

We further validate the effectiveness of the proposed modality fusion module by performing feature visualizations, allowing an intuitive analysis of the differences in feature representation across various training modes and input modalities. To achieve this, we employed Uniform Manifold Approximation and Projection (UMAP), a nonlinear dimensionality reduction technique that maps high-dimensional data into a lower-dimensional space while preserving both local and global structures. UMAP is particularly effective in data visualization and feature extraction, offering high efficiency for large datasets and strong preservation of global data relationships.

Figure 5 presents feature visualizations after modality fusion under different training modes and input modalities. In the three segmentation tasks (Whole Tumor, Tumor Core, Enhancing Tumor), the features distinctly separate into foreground and background domains, visually demonstrating that the MSAM model can effectively distinguish between tumor and non-tumor regions. Under the same training mode, the feature distributions after fusion exhibit a similar pattern regardless of whether full or missing modality input is used. However, in the Full Modality Training (FT) mode, when certain modalities are absent during prediction, the feature distributions across modalities overlap substantially. This overlap occurs because the model optimizes for full-modality input during training, meaning that the absence of specific modalities during prediction does not significantly affect the feature distributions of the remaining modalities. This suggests that if the missing modality is not crucial to the segmentation task, the impact on performance is minimal. However, if a key modality is absent, segmentation accuracy is notably reduced. Therefore, the FT training mode is somewhat rigid in addressing missing modalities, as it lacks the flexibility to compensate for missing information and struggles to leverage the available modalities to accurately segment tumor regions.

In contrast, the feature distributions under the Missing Modality Training (MT) mode exhibit a lower degree of overlap and are more distinct compared to the FT mode. This indicates that the MT mode can adapt more effectively to missing modality conditions by making specific adjustments, thereby compensating for the absence of certain modalities. As a result, segmentation performance improves significantly when key modalities are missing, as demonstrated by the results in

Table 3.

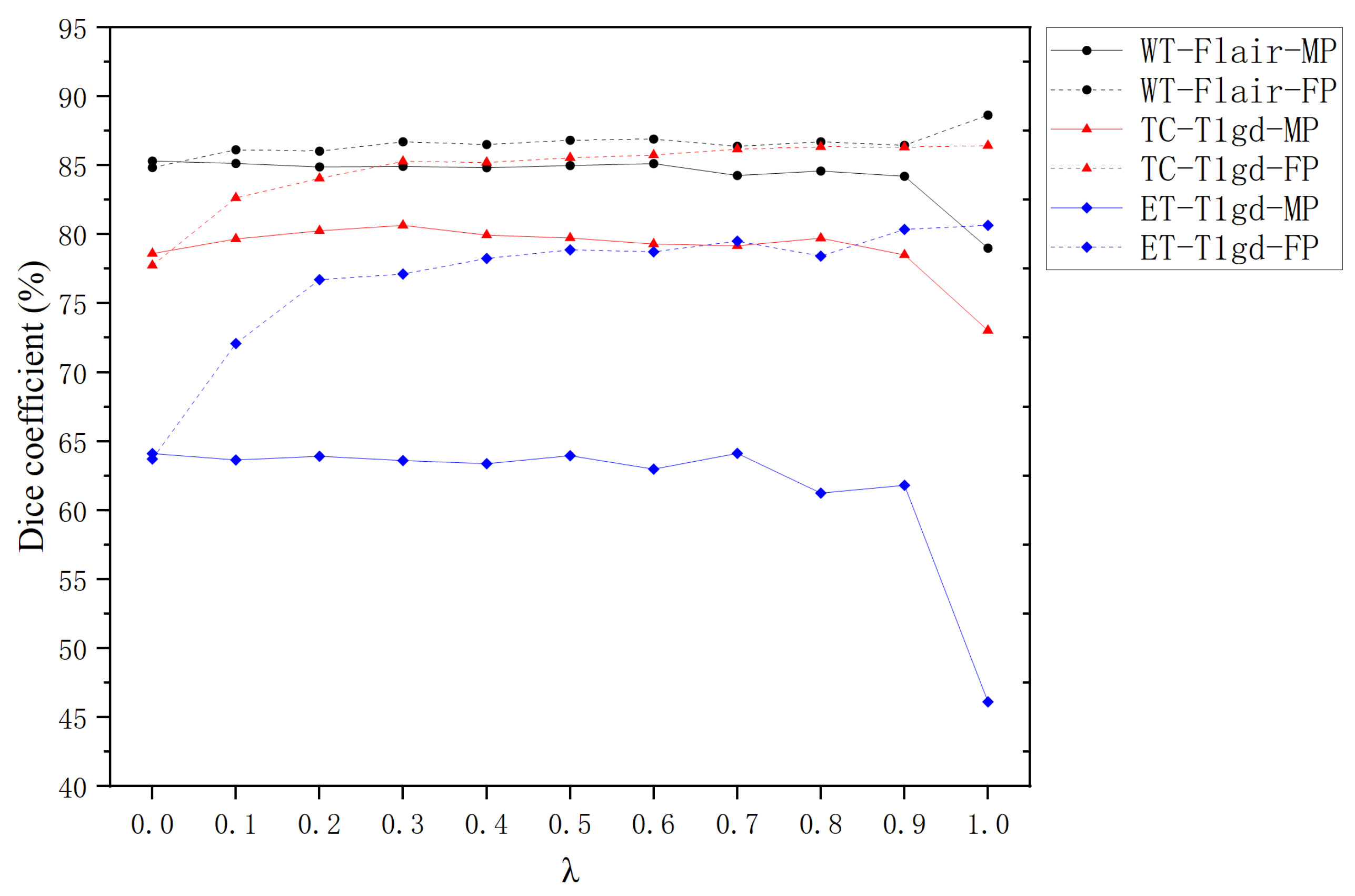

4.4. Impact of Hyperparameter in Missing Modality Training

The hyperparameter

(ranging from 0 to 1) plays a pivotal role in governing the behavior of Missing Modality Training (MT). Building on the findings from

Section 4.3 and prior studies [

33], which highlight the modality-specific importance of FLAIR sequences for Whole Tumor segmentation and T1gd for Tumor Core and Enhancing Tumor delineation, we systematically evaluate the influence of

under targeted modality absence conditions.

Figure 6 illustrates the Dice coefficients achieved by Full Modality Prediction (FP) and Missing Modality Prediction (MP) strategies across different

values for individual segmentation tasks.

For all segmentation tasks (WT, TC, ET), FP consistently demonstrates superior performance to MP when

is within the range of

. This aligns with the expectation that FP benefits from richer feature representations, enabling more precise segmentation. The magnitude of improvement follows the hierarchy:

where

represents the performance gain relative to MP baseline.

At , MP shows marginal superiority over FP. This counterintuitive result stems from complete modality replacement with random noise during training, creating inference interference when actual modalities are reintroduced. When , the system converges to Full Training (FT) mode, where the mean Dice Score of FP significantly outperforms MP’s.

Within the practical range of , MP mode shows limited sensitivity to variations. For WT-FLAIR-MP and ET-T1gd-MP, a gradual decline in Dice Scores is observed as increases, suggesting that higher values may amplify interference from incomplete modality information. In contrast, TC-T1gd-MP displays a non-monotonic trend, with peak performance occurring at . For FP mode, all tasks exhibit a consistent upward trend in Dice Scores with increasing , underscoring the robustness of MT in mitigating modality absence during inference.

The experimental results validate that MT effectively enhances model robustness against missing modalities during inference. The observed patterns support the selection of as an optimal compromise, balancing feature preservation and noise suppression across all tasks. This configuration achieves a mean Dice Coefficient of 0.84 ± 0.03, demonstrating balanced performance without significant task-specific bias. The findings emphasize the importance of task-aware parameter tuning in multimodal segmentation frameworks.

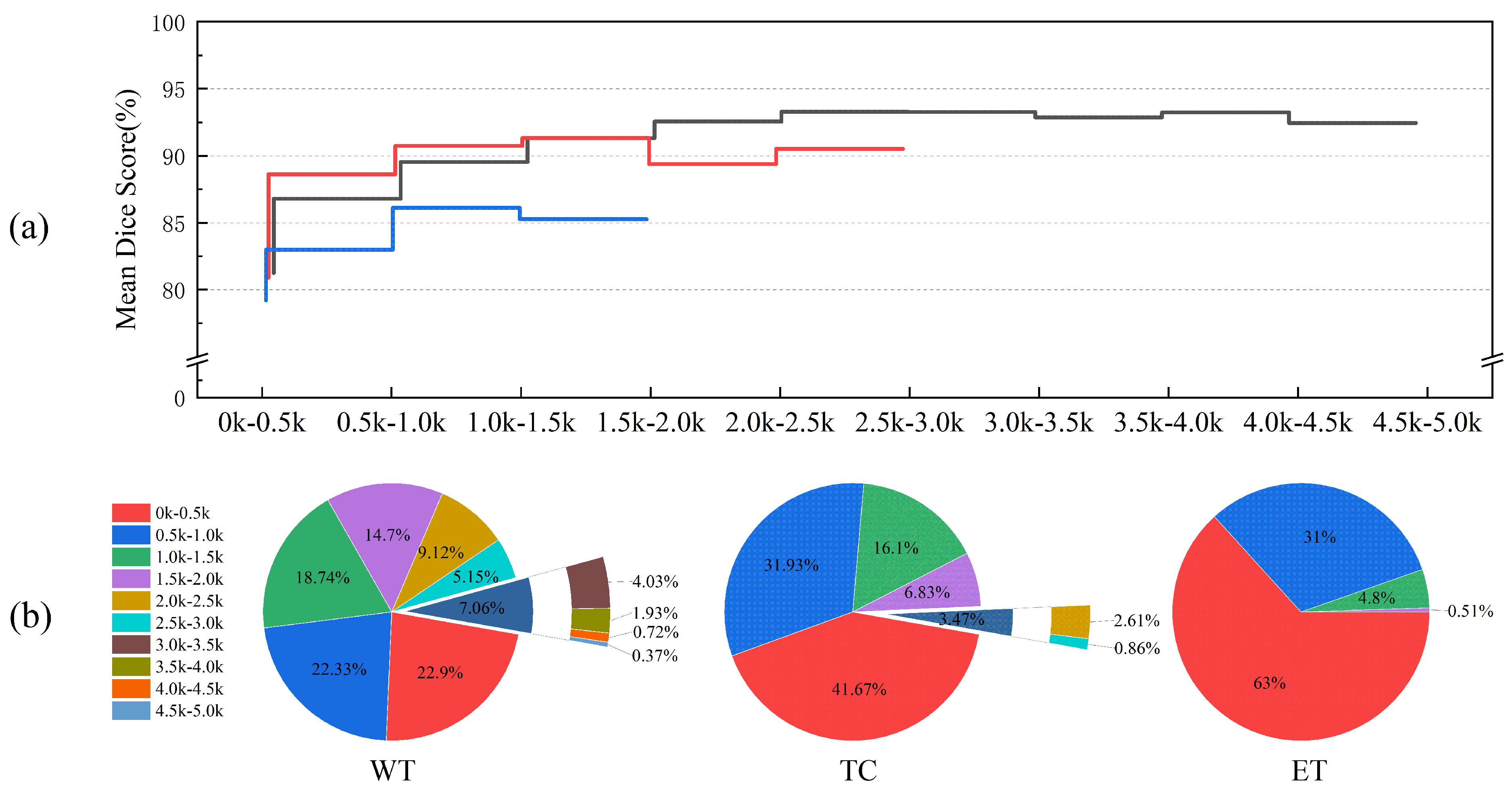

4.5. Statistical Analysis of Tumor Regions

We also analyzed the impact of tumor region size on segmentation performance and present the results for tumor regions of various sizes in

Figure 7. As the tumor region size increases, the Dice Score initially increases, reaching a peak before slightly declining. This trend suggests that segmentation performance is strongly influenced by the size of the tumor regions, with smaller regions resulting in lower segmentation accuracy. Specifically, for the Whole Tumor (WT), Tumor Core (TC), and Enhancing Tumor (ET) tasks, the Dice Scores for tumor regions in the 0–0.5 k voxel size range are 81.24%, 80.89%, and 79.21%, respectively, representing the lowest values.

The size distribution of the target regions varies across the segmentation tasks, as shown in

Figure 7b. The WT size range spans 0–5.0 k voxels, with TC, being a sub-region of WT, ranging from 0 to 3.0 k voxels, and the ET region falling within the 0–2.0 k voxel range. In the 0–2.0 k size range, segmentation performance for the ET region is generally lower than that for the WT and TC regions. Furthermore, TC performance in the 0–0.5 k size range is slightly inferior to that of WT but surpasses WT in other intervals. Given the target region size distribution for each task, we conclude that the ET region, being smaller and more challenging to segment, leads to poorer segmentation performance compared to the WT and TC regions.

5. Discussion

In this study, we comprehensively evaluated the MSAM model for multimodal brain tumor segmentation under various challenging conditions, including missing imaging modalities and varying tumor region sizes. The results consistently demonstrate MSAM’s robustness, its ability to adapt to incomplete input data, and its superior performance across multiple segmentation tasks. In this section, we interpret the major findings, contrast them with related work, and discuss their implications.

Our findings underscore the critical role of multimodal fusion in achieving accurate and robust brain tumor segmentation. MSAM-S, which integrates structural MRI modalities, achieved the good performance, particularly in segmenting the Enhancing Tumor (ET), a region characterized by irregular morphology and unclear boundaries. Structural modalities such as T1, T1gd, T2, and FLAIR were particularly effective for segmenting larger tumor regions like Whole Tumor (WT) and Tumor Core (TC), as evidenced by the strong performance of MSAM-S. These results highlight that different modalities contribute complementary information for accurate tumor region segmentation. The fusion of these heterogeneous features enhances the SAM’s ability to delineate both large and small tumor regions. This indicates that combining different imaging sequences is very important for improving the segmentation performance of complex tumor subregions. Future research will explore advanced fusion methodologies—notably hybrid attention architectures and hierarchical multi-scale integration—to model complex inter-modal dependencies. Such approaches may enhance information synthesis across extended modality sets, potentially elevating performance in clinically heterogeneous brain tumor segmentation tasks.

Segmentation results under different modalities missing further emphasize the importance of key modalities. When all modalities were available, MSAM achieved optimal results, particularly for WT and TC. However, the absence of critical modalities—especially T1gd and T2—led to significant performance degradation in ET segmentation, suggesting that these modalities are essential for capturing the complex contrast and boundary information associated with the ET region. This is consistent with prior reports on the relevance of contrast-enhanced and fluid-sensitive sequences in tumor delineation [

23,

33].

A key contribution of this study is the introduction of a Missing Modality Training (MT) strategy. Instead of attempting to reconstruct missing inputs, MT allows the model to learn robust representations using only the available modalities during training. This is achieved by substituting missing modalities with noise drawn from a uniform distribution to serve as placeholders, preserving the structural integrity of the network. Our empirical observations confirm that MSAM trained with MT can dynamically adjust feature distributions in the latent space according to modality availability, thus achieving stable and reliable segmentation performance even under missing data conditions, while more complex methods such as generative reconstruction could potentially provide more accurate modality estimation [

25], our goal was to evaluate the MSAM architecture’s intrinsic ability to generalize under incomplete inputs. Future work may explore the integration of generative or modality-aware imputation techniques as complementary modules.

Despite the overall effectiveness of our approach, we observed a marked drop in segmentation accuracy for small tumor regions, particularly in the ET task. Quantitative analysis revealed that tumors with fewer voxels (e.g., <0.5 k) exhibited significantly lower Dice Scores. This phenomenon likely stems from limited spatial context, low inter-region contrast, ambiguous boundaries, and an imbalanced representation of small lesions in the training set. Such limitations are common in medical image segmentation and highlight the need for targeted solutions.

To address this challenge, future research could incorporate region-aware loss functions that emphasize underrepresented tumor sizes, utilize resolution-preserving or multi-scale architectures to capture fine-grained features, and apply attention-based mechanisms that can selectively focus on ambiguous or subtle tumor regions. These enhancements may improve segmentation performance for small, complex lesions and further increase the clinical utility of the MSAM model.