Evaluating 3D Hand Scanning Accuracy Across Trained and Untrained Students

Abstract

1. Introduction

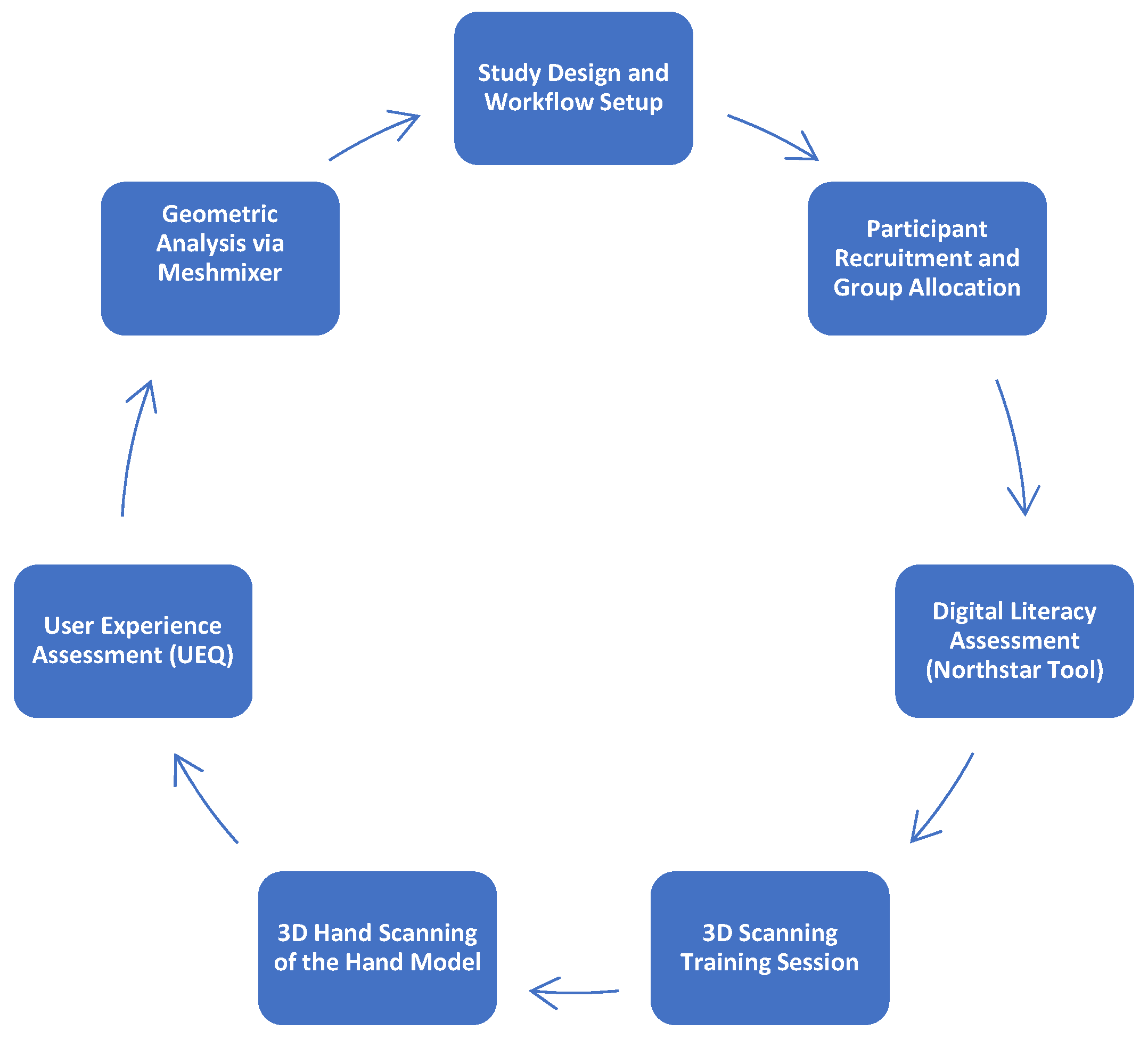

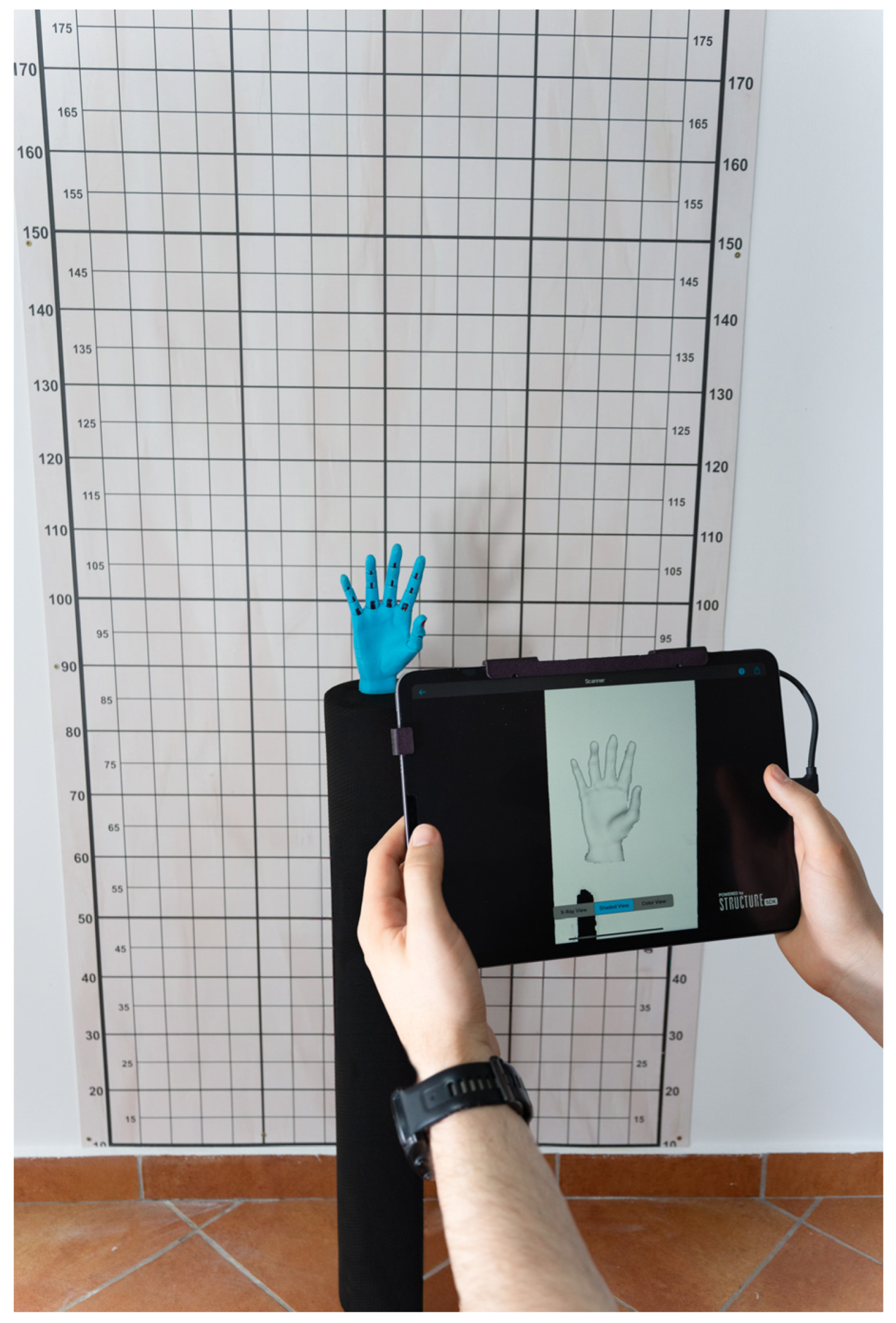

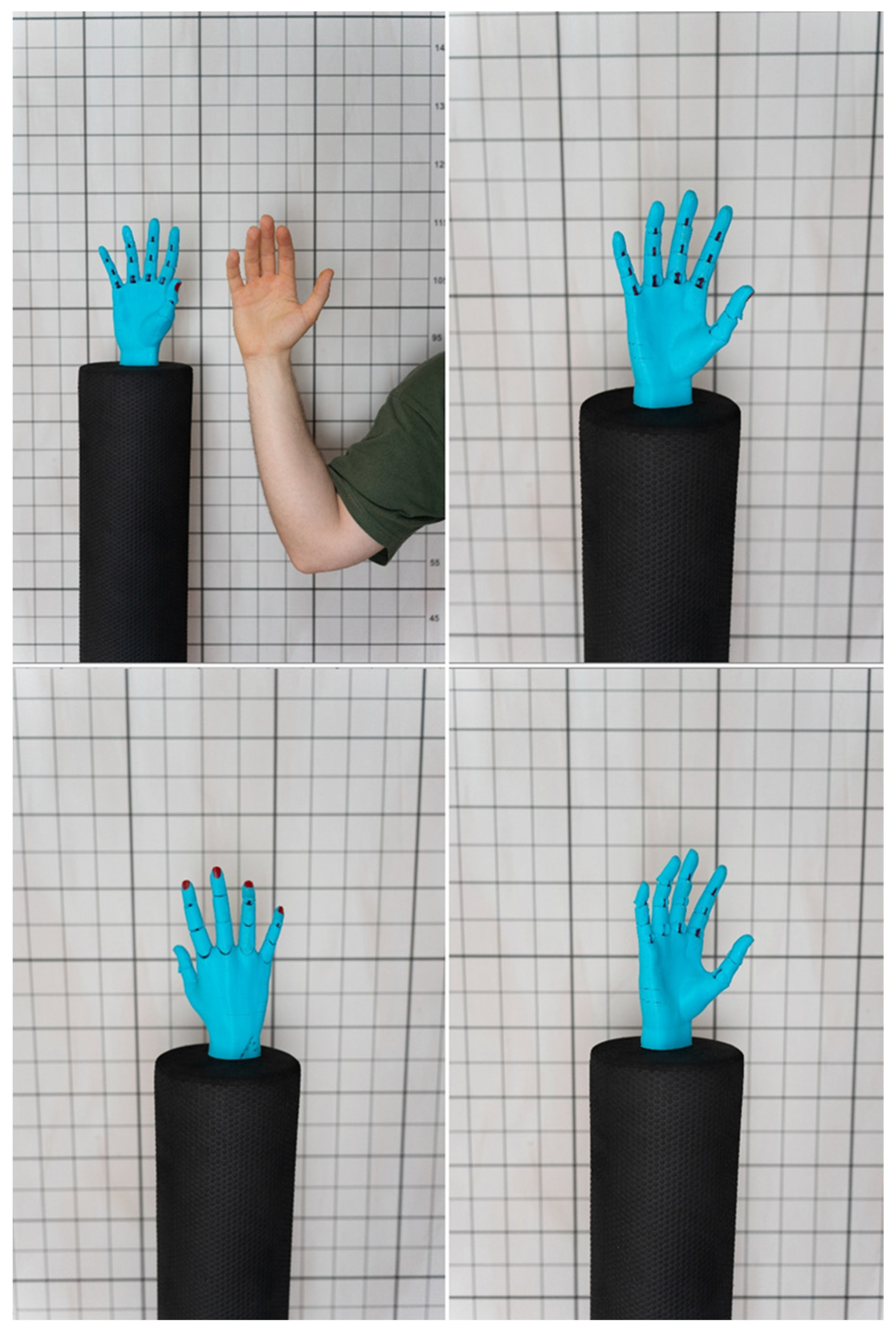

2. Materials and Methods

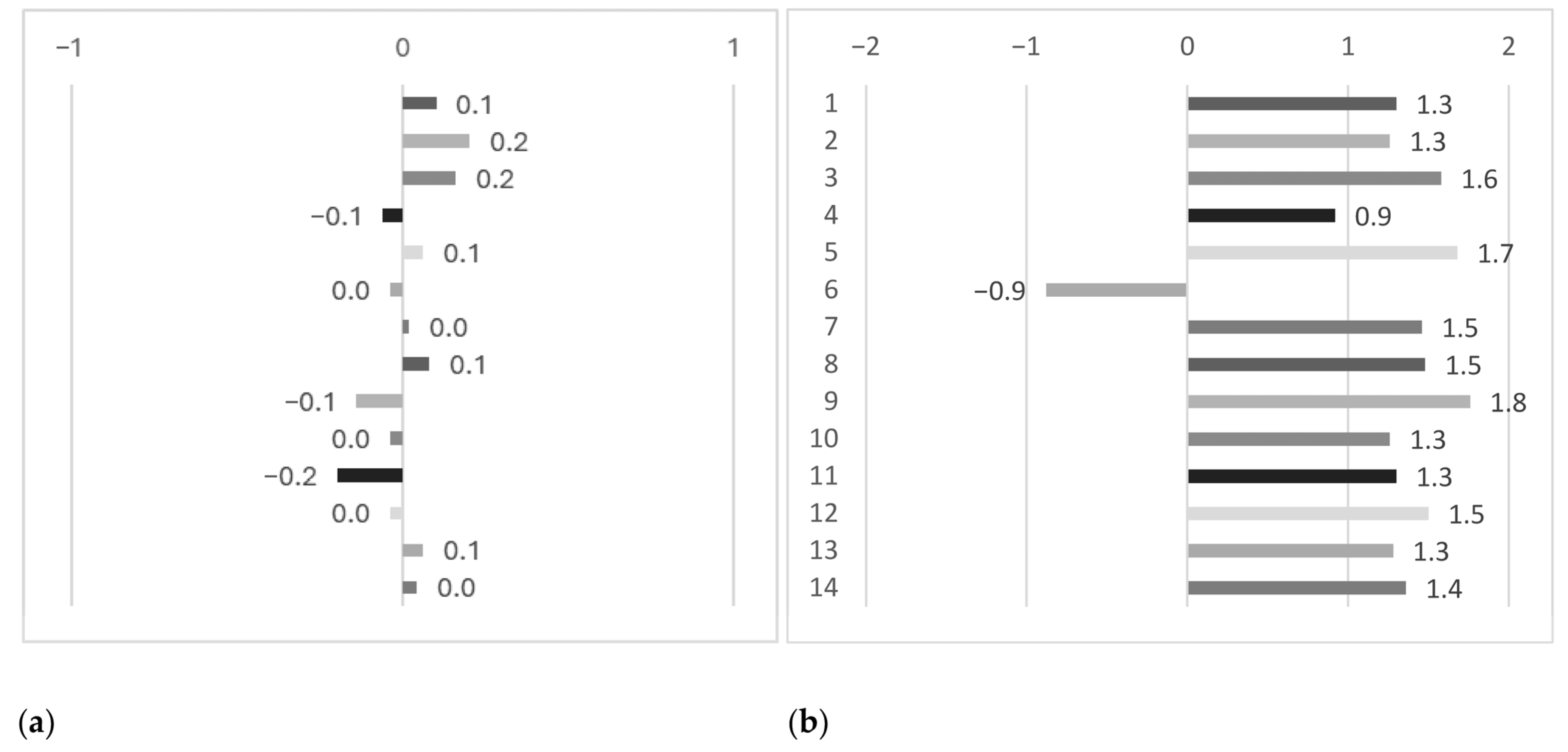

3. Results

4. Discussion

4.1. 3D Scanning of the Hand

4.2. Non-Professionals in 3D Scanning

4.3. Structure Sensor Pro and Its Uses in Medical and Research Fields

4.4. Comparative Studies of 3D Scanning

4.5. 3D Scanning Training

4.6. 3D Scanning and AI

4.7. Implications and Context

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Training Overview

- Introduction (1 min)

- Step 1: Hardware Familiarization (2 min)

- Step 2: Equipment Check (1 min)

- Step 3: Sensor Mounting and Calibration (2 min)

- Step 4: Application Setup and Mode Selection (2 min)

- Step 5: Positioning and Environment Setup (2 min)

- Step 6: Live Scanning Demonstration (2 min)

- Step 7: Review and Saving (1 min)

- Step 8: Post-Processing Overview (optional, 1 min)

- Q&A and Conclusion (2 min)
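
The outline above can also be kept as a small data structure, which makes the total session length easy to check. The following is only an illustrative sketch: the `TrainingStep` class and the `total_minutes` helper are hypothetical names introduced here, and the durations are copied from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingStep:
    """One item of the training outline (hypothetical helper type)."""
    title: str
    minutes: int
    optional: bool = False


# Durations copied from the Training Overview list in Appendix A.
TRAINING_STEPS = [
    TrainingStep("Introduction", 1),
    TrainingStep("Step 1: Hardware Familiarization", 2),
    TrainingStep("Step 2: Equipment Check", 1),
    TrainingStep("Step 3: Sensor Mounting and Calibration", 2),
    TrainingStep("Step 4: Application Setup and Mode Selection", 2),
    TrainingStep("Step 5: Positioning and Environment Setup", 2),
    TrainingStep("Step 6: Live Scanning Demonstration", 2),
    TrainingStep("Step 7: Review and Saving", 1),
    TrainingStep("Step 8: Post-Processing Overview", 1, optional=True),
    TrainingStep("Q&A and Conclusion", 2),
]


def total_minutes(steps, include_optional=True):
    """Sum the planned minutes, optionally skipping optional steps."""
    return sum(s.minutes for s in steps if include_optional or not s.optional)


if __name__ == "__main__":
    print("With optional step:", total_minutes(TRAINING_STEPS), "min")
    print("Without optional step:",
          total_minutes(TRAINING_STEPS, include_optional=False), "min")
```

Summing the listed durations gives 16 minutes for the full session, or 15 minutes if the optional post-processing overview is skipped.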


| | Control Group (n = 42) | Experimental Group (n = 45) | p-Value (t-Test) |
|---|---|---|---|
| Age (mean ± SD) | 19.9 ± 1.1 | 19.8 ± 1.07 | p = 0.72 |
| Gender f/m (n; %) | 14/28; 34/66 | 18/27; 41/59 | p = 0.52 |
| Digital competence (%) | 87.5% ± 36.27 | 88.3% ± 29.04 | p = 0.22 |

| Parameters | Control Group (mean ± SD) | Experimental Group (mean ± SD) | p-Value | Effect Size |
|---|---|---|---|---|
| Surface area (m²) | 0.09 ± 0.001 | 0.096 ± 0.004 | p < 0.0001 | d = 1.6 |
| Volume (m³) | 0.002 ± 0.0004 | 0.003 ± 0.0002 | p < 0.0001 | r = 0.74 |
| Vertices (no.) | 13,406 ± 468.6 | 14,863 ± 627.1 | p < 0.0001 | d = 2.64 |
| Triangles (no.) | 26,955 ± 838.2 | 28,839 ± 1194 | p < 0.0001 | d = 1.84 |
| Gaps detected (no.) | 4.09 ± 1.62 | 3.6 ± 1.62 | p < 0.002 | r = 0.19 |
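
For readers who want to sanity-check the reported d values, the sketch below recomputes Cohen's d from the summary statistics in the table. It rests on assumptions that this excerpt does not confirm: that d was computed with the pooled standard deviation, and that the group sizes n = 42 (control) and n = 45 (experimental) from the participant table apply here. The function name `cohens_d` is introduced only for illustration.

```python
import math


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d for two independent groups using the pooled SD.

    Assumption: the pooled-SD variant is used; the source table does
    not state which formula the authors applied.
    """
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_b - mean_a) / math.sqrt(pooled_var)


# Summary statistics from the table (control first, experimental second).
# Group sizes are assumed to match the participant table (42 and 45).
d_vertices = cohens_d(13406, 468.6, 42, 14863, 627.1, 45)
d_triangles = cohens_d(26955, 838.2, 42, 28839, 1194, 45)

if __name__ == "__main__":
    print(f"Vertices:  d = {d_vertices:.2f}")
    print(f"Triangles: d = {d_triangles:.2f}")
```

Under these assumptions the results come out at roughly 2.6 for vertices and 1.8 for triangles, close to but not identical with the reported d = 2.64 and d = 1.84. The small gap is consistent with rounding in the published means and SDs or with a slightly different d formula. The r values in the table cannot be recomputed this way, since they depend on test statistics not shown here.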

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Glazer, C.; Oravitan, M.; Pantea, C.; Almajan-Guta, B.; Jurjiu, N.-A.; Marghitas, M.P.; Avram, C.; Stanila, A.M. Evaluating 3D Hand Scanning Accuracy Across Trained and Untrained Students. Bioengineering 2025, 12, 777. https://doi.org/10.3390/bioengineering12070777
