1. Introduction

As technology continues to advance rapidly, artificial intelligence (AI) is becoming deeply embedded in numerous industries, with healthcare standing out as a key area of impact. AI is making significant strides in areas such as heart disease [1], cancer [2,3], and diabetes [4]. Its applications range from aiding diagnosis to planning treatments and developing personalized medical plans, underscoring its potential to improve the efficiency and accuracy of medical services. In dental diagnostics, AI has already demonstrated its transformative potential. Using machine learning algorithms, AI can process large volumes of medical images, including X-rays [5], CT [6], and MRI scans [7], enabling clinicians to diagnose diseases more accurately.

Caries are a prevalent issue in dental healthcare, affecting nearly all adults and 60–90% of children, posing a significant public health challenge, especially with dental braces or dental restorations [

8,

9]. Traditional dental examinations relying on visual inspection or radiographic images [

10,

11] can be subjective and time-consuming. Related studies have utilized auxiliary software for oral examinations, such as methods of geometric alignment to compare noise levels in subtraction images [

12], jawbone regeneration [

13], and corticalization measurement [

14]. With the rise of AI, automated caries detection using image processing and deep learning technologies has gained increasing attention [

15]. Deep learning techniques such as convolutional neural networks (CNNs) have shown significant performance in medical image classification by leveraging large-scale annotated datasets [

16,

17]. In dentistry, CNNs have been applied to detect apical lesions, offering objective interpretation and reducing diagnostic time [

18]. Bitewing radiographs (BWs) are commonly used to identify caries and periodontal conditions. Tooth region extraction from BWs can be performed using filtering, binarization, and projection methods [

19,

20,

21,

22]. The YOLO object detection algorithm enables real-time localization with high accuracy and speed [

23,

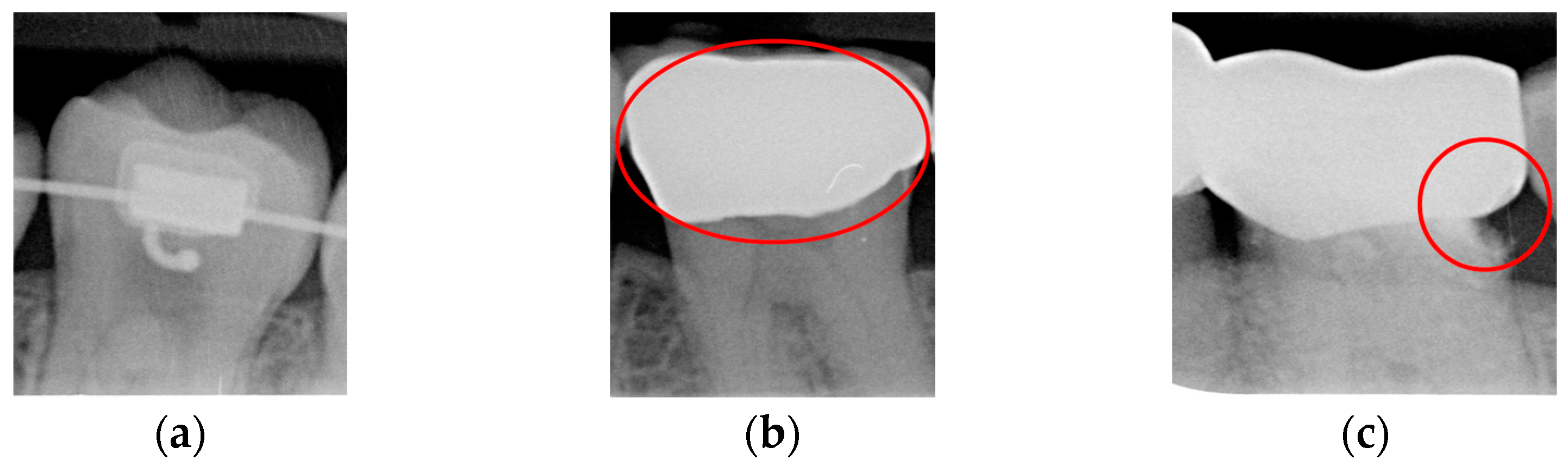

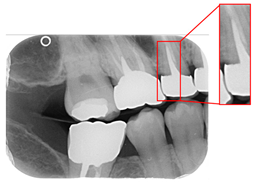

24]. This study uses YOLO to detect caries under restorations and dental braces, as illustrated in

Figure 1.

Three primary imaging methods are commonly used to diagnose dental caries: digital radiography, analog (film-based) radiography, and 3D imaging techniques such as cone-beam computed tomography (CBCT). For example, Baffi et al. [10] reviewed 77 studies involving 15,518 tooth surfaces, 63% of which showed enamel caries. Lee et al. [25] applied a U-Net-based CNN for early caries detection, achieving an accuracy of 63.29% and a recall of 65.02%. Dashti et al. [26] applied deep learning to 2D radiographs and achieved an average precision of 85.9%. In addition to CNN-based methods, image enhancement techniques such as noise reduction, contrast adjustment [27], intensity value mapping [28], and histogram equalization [29] have been widely adopted to improve lesion visibility and classification performance. Well-known CNN models such as AlexNet, GoogLeNet, and MobileNet have also been trained and evaluated on datasets containing secondary caries and healthy teeth, allowing their performance and accuracy to be compared.

Despite numerous studies employing AI-assisted methods for detecting dental caries, two key limitations remain. First, the accuracy of most existing models typically ranges between 88% and 93%, indicating a persistent risk of misclassification. Second, these studies often exclude cases involving caries under dental restorations and orthodontic braces, which limits their applicability in more complex clinical scenarios. To address these limitations, we employ a rotation-aware segmentation method that compensates for the varying tilt angles of BWs, selecting the most suitable segmentation angle for each BW so that detection accuracy remains high despite variations in imaging angle. Moreover, an ablation experiment was conducted to analyze the impact of various image enhancement techniques on model performance, and the proposed system was benchmarked against recent state-of-the-art studies to evaluate its precision in detecting caries under dental braces and dental restorations. This study focuses specifically on detecting caries under dental braces and dental restorations. By leveraging deep learning and image processing techniques, the proposed system is designed to assist clinicians in interpreting BW images and identifying such caries. The goal is to develop an AI-assisted diagnostic tool that reduces the diagnostic burden on dental professionals, improves early detection accuracy, and increases clinical efficiency in real-world dental practice.

2. Materials and Methods

This study aims to develop an automated system that helps dentists quickly detect caries under dental restorations and dental braces. However, the diverse shapes and orientations of teeth in BWs make accurate assessment of individual teeth challenging. We therefore first locate and segment each tooth in the BW using YOLO. In parallel, we applied the proposed rotation-aware segmentation to the BWs and compared its performance with YOLO-based detection. We then applied image processing algorithms and conducted an ablation experiment to optimize caries detection under dental restorations and orthodontic braces. These experiments enabled the model to achieve its highest detection precision by isolating the target regions and minimizing background interference during CNN training. The overall flowchart is shown in Figure 2.

2.1. BW Image Dataset Collection

The dataset used in this study was provided by Chang Gung Memorial Hospital, Taoyuan, Taiwan, and its use was approved by the Institutional Review Board (IRB) of Chang Gung Medical Foundation (IRB number: 02002030B0). The BW images and the corresponding ground-truth annotations were collected by three oral specialists, each with over five years of clinical experience. Each expert independently annotated the presence of caries under restorations and dental braces on the BW images using LabelImg version 1.7.0, without mutual influence among annotators. The final label for each BW image was determined by majority voting to ensure annotation reliability. Patients with a history of human immunodeficiency virus (HIV) infection were excluded from the dataset. All eligible BW images collected during the study period were included to maximize the sample size and support the generalizability of the clinical diagnosis.
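Majority voting over three independent annotators can be sketched as below. This is a minimal illustration that assumes each annotator's judgment for an image has already been reduced to a single label (1 = caries present, 0 = absent); how the LabelImg bounding boxes themselves were reconciled is not described, and the helper `majority_label` and the image names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_label(labels: Sequence[Hashable]) -> Hashable:
    """Return the label chosen by a strict majority of annotators.

    Hypothetical helper: with three annotators and a binary label,
    at least two always agree, so a majority always exists.
    """
    if not labels:
        raise ValueError("at least one annotation is required")
    label, votes = Counter(labels).most_common(1)[0]
    if votes * 2 <= len(labels):
        # No label was chosen by more than half of the annotators.
        raise ValueError(f"no strict majority among {list(labels)}")
    return label


# Three independent annotations per image (1 = caries present, 0 = absent).
annotations = {"bw_001": [1, 1, 0], "bw_002": [0, 0, 1], "bw_003": [1, 1, 1]}
final = {name: majority_label(votes) for name, votes in annotations.items()}
print(final)  # {'bw_001': 1, 'bw_002': 0, 'bw_003': 1}
```

With an odd number of annotators and two classes, ties cannot occur; the strict-majority check only matters if more label categories are introduced.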

Model training, testing, and validation were supervised by senior researchers with extensive experience. A blinded protocol was implemented during the validation and testing stages to eliminate operator bias. Specifically, the operator conducting model evaluation was unaware of whether the BW images contained teeth affected by caries under restorations or dental braces, ensuring objective assessment. The BW dataset contained 505 images, and the single-tooth dataset included 440 images. For the tooth localization task using YOLO, 84 BW images were reserved as a validation set, while the remaining images were split into training and test sets in an 8:2 ratio. For the CNN-based classification task detecting the presence of caries under restorations and dental braces, 40 single-tooth images were reserved for validation, and the remaining images were divided into training and test sets using a 7:3 ratio.
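The split sizes implied by these ratios can be checked with a short sketch. The text does not state the random seed, whether the split was stratified or patient-wise, or how fractional counts were rounded (8:2 of the 421 remaining BWs is 336.8 images). The sketch assumes a simple random, non-stratified split that rounds with Python's `round()`; `split_dataset` and the image identifiers are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], n_val: int, train_ratio: float,
                  seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Reserve n_val items for validation, then split the rest into train/test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)      # reproducible shuffle
    val, rest = shuffled[:n_val], shuffled[n_val:]
    n_train = round(len(rest) * train_ratio)   # rounding rule is an assumption
    return rest[:n_train], rest[n_train:], val


# Tooth localization (YOLO): 505 BWs, 84 reserved for validation, 8:2 train/test.
bw_ids = [f"bw_{i:03d}" for i in range(505)]
train_ids, test_ids, val_ids = split_dataset(bw_ids, n_val=84, train_ratio=0.8)
print(len(train_ids), len(test_ids), len(val_ids))  # 337 84 84

# Caries classification (CNN): 440 single-tooth images, 40 for validation, 7:3.
tooth_ids = [f"tooth_{i:03d}" for i in range(440)]
train_ids, test_ids, val_ids = split_dataset(tooth_ids, n_val=40, train_ratio=0.7)
print(len(train_ids), len(test_ids), len(val_ids))  # 280 120 40
```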

2.2. BW Image Segmentation

This subsection describes the two image segmentation methods used in this study. The first, rotation-aware segmentation, extracts single teeth by finding the optimal rotation angle of the BW and then segmenting it along horizontal and vertical (plumb) lines. The second uses the YOLO deep learning detector to determine tooth coordinates and segment the teeth accordingly. Both methods prepare single-tooth images for the subsequent image enhancement and CNN training, improving the model's ability to localize and classify caries under complex conditions.

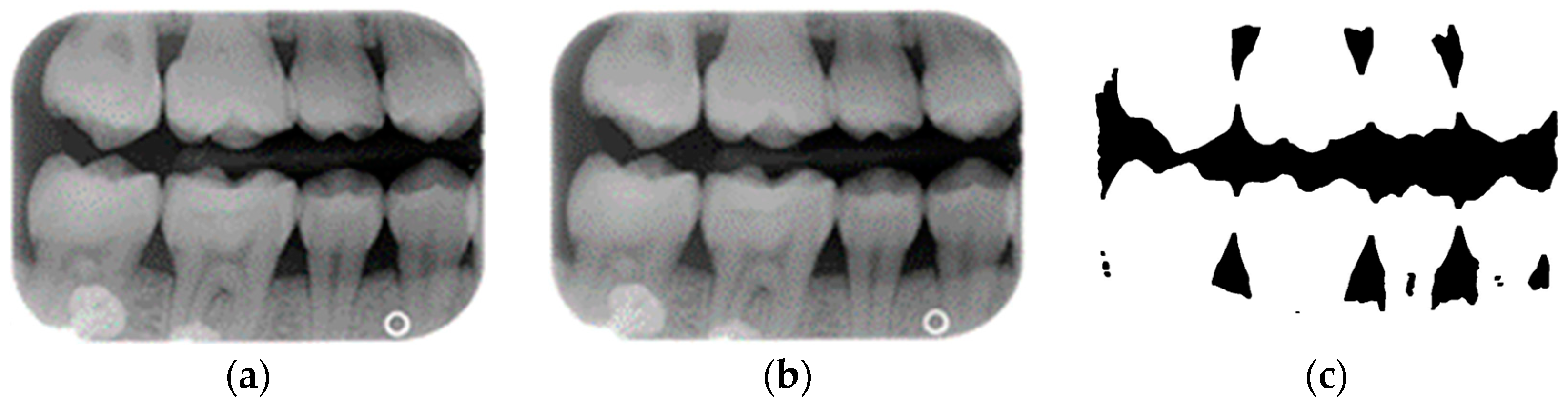

A complete BW varies due to factors such as angle, exposure size, the number of teeth, and interproximal spacing. Using fixed parameters and thresholds can lead to misjudgments and low segmentation efficiency. To enhance flexibility and operability, the algorithm uses adaptive thresholds tailored to each BW based on brightness, size, and the number of teeth. Each BW is pre-processed before segmentation due to variations in mouth shape, tooth shape, and imaging angle. This study first applies to a gaussian high-pass filter to eliminate noise, reducing segmentation errors. Next, the images undergo binarization and erosion techniques to clarify background contours, making them easier to distinguish, as illustrated in

Figure 3.
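The pre-processing chain can be sketched in NumPy as follows. The text does not give the filter size, how the high-pass output feeds the binarization, the thresholding rule, or the structuring element. This sketch therefore assumes a Gaussian σ of 2 pixels, adds the Gaussian high-pass component back to the image (high-boost filtering, one possible reading of the step), binarizes with Otsu's method, and erodes with a 3 × 3 square. All function names are illustrative.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian low-pass filter with edge padding (output has img's shape)."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(float), r, mode="edge")

    def conv(v: np.ndarray) -> np.ndarray:
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)   # shape (h + 2r, w)
    return np.apply_along_axis(conv, 0, rows)     # shape (h, w)


def otsu_threshold(img: np.ndarray) -> int:
    """Otsu's threshold for a uint8 image (maximizes between-class variance)."""
    p = np.bincount(img.ravel(), minlength=256).astype(float)
    p /= p.sum()
    omega = np.cumsum(p)                  # weight of the class with values <= t
    mu = np.cumsum(p * np.arange(256))    # cumulative mean up to t
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.argmax(np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)))


def erode(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Binary erosion with a 3x3 square; pixels outside the image count as background."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        acc = np.ones((h, w), dtype=bool)
        for dy in range(3):
            for dx in range(3):
                acc &= p[dy:dy + h, dx:dx + w]
        out = acc
    return out


def preprocess(img: np.ndarray, sigma: float = 2.0, boost: float = 1.0,
               erode_iters: int = 1) -> np.ndarray:
    """High-boost with a Gaussian high-pass, Otsu binarization, then erosion."""
    img_f = img.astype(float)
    highpass = img_f - gaussian_blur(img_f, sigma)   # Gaussian high-pass component
    enhanced = np.clip(img_f + boost * highpass, 0, 255).astype(np.uint8)
    mask = enhanced > otsu_threshold(enhanced)
    return erode(mask, erode_iters)


# Synthetic example: a bright 20x20 "tooth" on a dark background.
img = np.full((40, 40), 20, dtype=np.uint8)
img[10:30, 10:30] = 220
mask = preprocess(img)
print(mask.sum())  # 324: the 20x20 square shrunk by one pixel on each side
```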

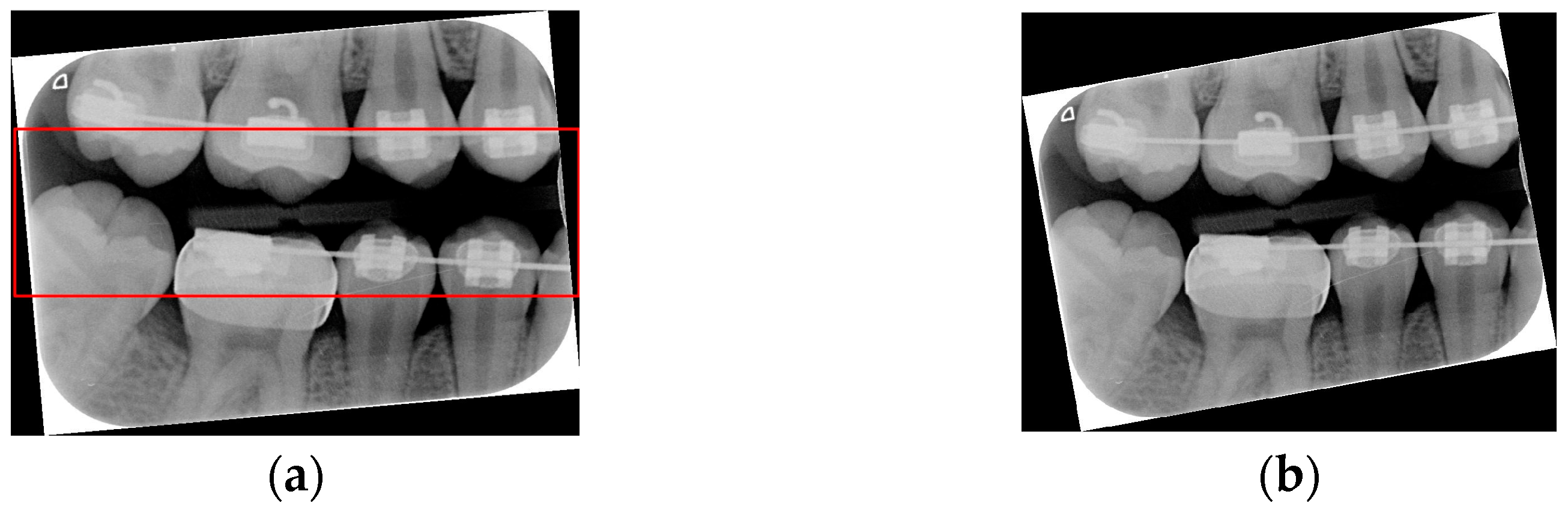

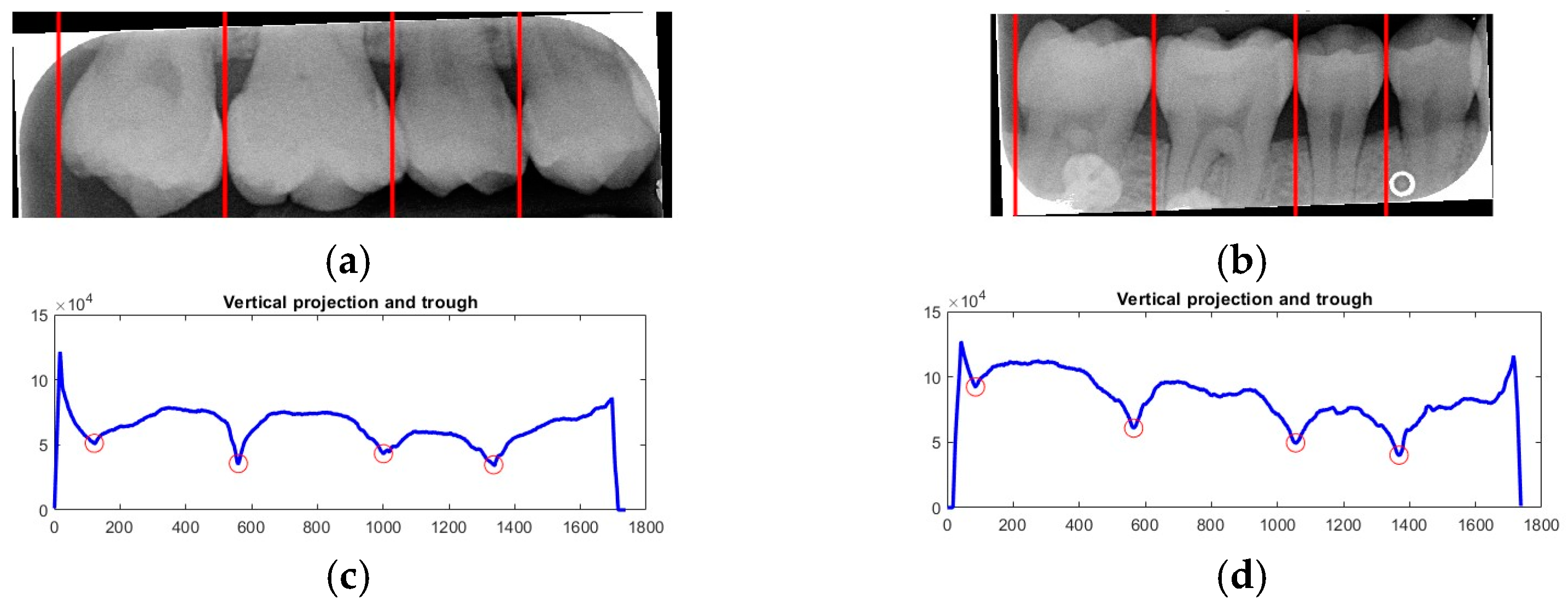

Because BWs are often acquired at an angle, purely horizontal and vertical lines may not fully separate the teeth. This study addresses this by rotating and binarizing each image multiple times to enhance the contrast between teeth and the gaps between them. In such high-contrast images, tooth gaps can be identified accurately from the horizontal pixel projection (the sum of pixel intensities along each row), as shown in Figure 4a. The image is divided horizontally into three parts, and the upper and lower parts are masked so that only the middle part is analyzed; in Figure 4b, the regions above and below the red box are masked. The valley of the projection curve in this region is taken as the x-minimum (trough) value, and the y-coordinate of that valley gives the height of the line separating the upper and lower rows of teeth after rotation. A projection is computed at each rotation angle, and the trough values (x-minimum) obtained at the different angles are compared to determine the optimal rotation angle for horizontal segmentation. The image is first rotated within a range of ±15 degrees in increments of 5 degrees, and the angle with the lowest trough value is identified as the most suitable for horizontal cutting, as shown in Figure 4b.

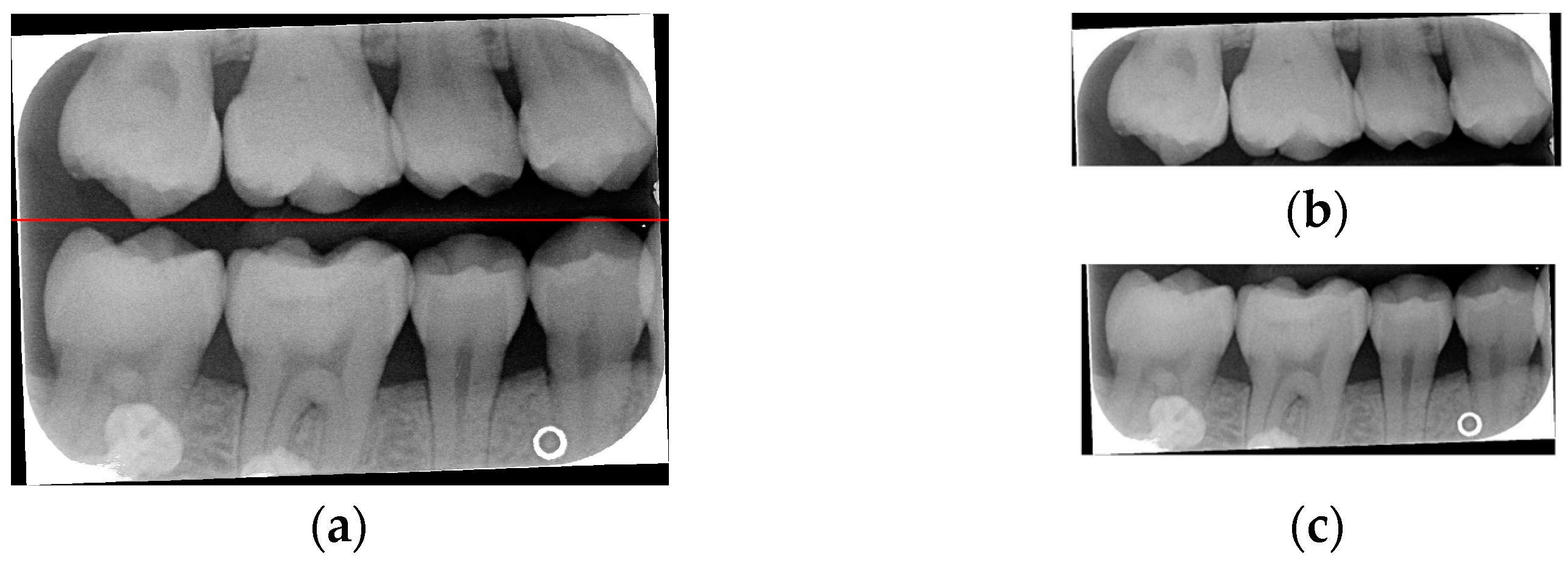

As listed in Table 1, after small-angle (1-degree) rotations were performed and the trough values at each angle compared, the lowest trough value (x = 36) occurs at a rotation of 11 degrees, which is lower than the trough value (x = 40) obtained at the initial coarse rotation of 10 degrees. Therefore, a rotation of +11 degrees is the most suitable angle for this BW and is more favorable for the subsequent horizontal segmentation. Searching with a small step from the outset would require many more rotations over the same range (31 rotations for ±15 degrees in 1-degree steps). By rotating the image in two stages, first with a large step (5 degrees) and then with a small step (1 degree), we reach the same result with fewer calculations. After each BW is rotated to its most suitable angle, the height (y-value) of the trough is found, and a horizontal line separating the upper and lower jaws is drawn at that height. This divides the entire BW into upper and lower rows of teeth; the segmentation result is shown in Figure 5.
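The two-stage (coarse-to-fine) angle search can be sketched in NumPy as below. Assumptions not stated in the text: gaps are dark in the input array, rotation uses nearest-neighbor resampling about the image center with uncovered pixels set to 0, the fine search covers ±4 degrees around the best coarse angle, and ties in trough value are broken in favor of the smaller absolute angle. The rotation sign convention may differ from the one used in Table 1, and all function names are illustrative.

```python
import numpy as np


def rotate_nn(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate about the image center (nearest neighbor); uncovered pixels become 0."""
    h, w = img.shape
    t = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, find its source pixel.
    src_x = np.rint(np.cos(t) * (xs - cx) + np.sin(t) * (ys - cy) + cx).astype(int)
    src_y = np.rint(-np.sin(t) * (xs - cx) + np.cos(t) * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def middle_trough(img: np.ndarray) -> tuple:
    """Horizontal projection of the middle third; return (minimum value, row index)."""
    h = img.shape[0]
    top, bottom = h // 3, 2 * h // 3          # mask the upper and lower thirds
    profile = img[top:bottom].astype(float).sum(axis=1)
    i = int(np.argmin(profile))
    return float(profile[i]), top + i


def best_rotation(img: np.ndarray, coarse_step: int = 5, limit: int = 15,
                  fine_radius: int = 4) -> tuple:
    """Coarse-to-fine search for the angle with the lowest middle trough."""
    def score(angle: int) -> tuple:
        value, row = middle_trough(rotate_nn(img, angle))
        return value, abs(angle), angle, row   # ties -> smaller |angle|

    a0 = min(score(a) for a in range(-limit, limit + 1, coarse_step))[2]
    fine = min(score(a) for a in range(a0 - fine_radius, a0 + fine_radius + 1))
    value, _, angle, row = fine
    return angle, row, value


# Synthetic BW-like image: bright teeth with a dark horizontal gap at rows 28-33.
img = np.full((60, 120), 200, dtype=np.uint8)
img[28:34, :] = 0
angle, row, value = best_rotation(img)
print(angle, row, value)  # 0 28 0.0
```

The returned row plays the role of the trough height at which the horizontal line separating the upper and lower jaws would be drawn.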

After dividing the BW into upper and lower rows of teeth, each tooth is segmented individually. Vertical projection and vertical erosion are used to find the troughs (y-minimum) of the adjacent waveforms, identifying the gaps between teeth to separate each one. The number of vertical lines required varies with the number of teeth in each row. If the number of teeth is

n, then

n − 1 vertical lines are needed for complete segmentation. These

n − 1 lines correspond to the number of troughs found in the vertical projection of the waveform. The x-coordinates of these troughs are returned to the original image, where vertical lines are drawn to isolate the teeth. The peaks and valleys are marked with red circles in

Figure 6a,b, which is shown in

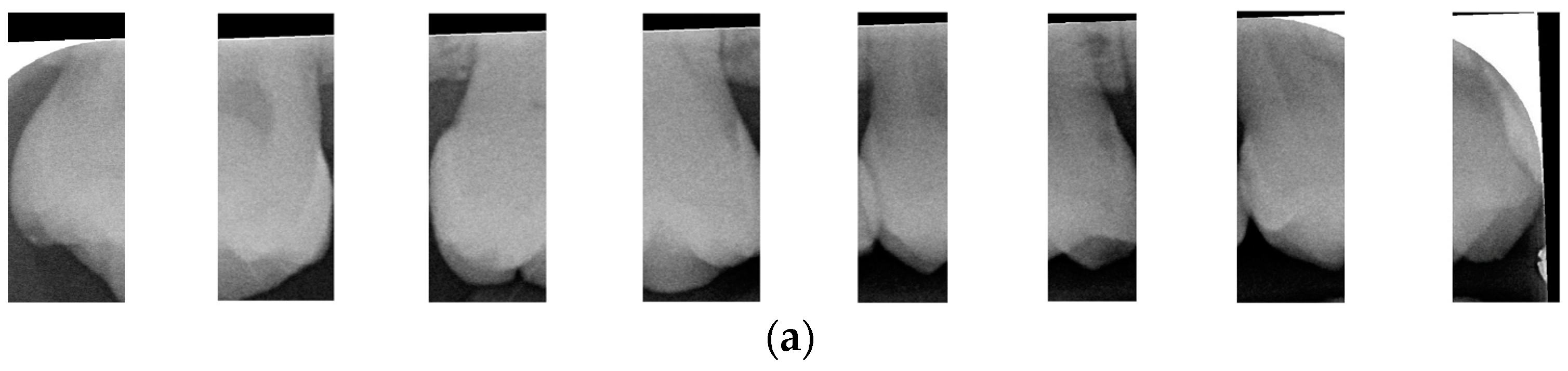

Figure 6c,d. Because secondary caries mainly occurs on both sides of the teeth, and since each complete tooth has both a left and right half, this increases the complexity during training and judgment, resulting in poor training outcomes. Therefore, each tooth image is further divided into left and right halves, as shown in

Figure 7. This approach reduced the complexity of the data and doubled the training dataset, providing more data for training.
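Given a row of teeth and its tooth count n, the n − 1 cut positions can be sketched as the n − 1 deepest, mutually separated valleys of the vertical projection, followed by the left/right halving. The text does not specify how valleys are selected or how n is estimated; this sketch assumes n is known, enforces an assumed minimum spacing of w / (2n) columns between cuts, and cuts at the center of flat valleys. Function names are illustrative.

```python
import numpy as np


def find_cuts(row_img: np.ndarray, n_teeth: int) -> list:
    """Return n_teeth - 1 column indices at the deepest, well-separated valleys."""
    profile = row_img.astype(float).sum(axis=0)            # vertical projection
    w = profile.size
    min_dist = max(1, w // (2 * n_teeth))                  # assumed minimum spacing
    order = np.argsort(profile[1:-1], kind="stable") + 1   # interior columns, lowest first
    cuts = []
    for i in order:
        if len(cuts) == n_teeth - 1:
            break
        if any(abs(int(i) - c) < min_dist for c in cuts):
            continue
        lo = hi = int(i)
        # Cut at the center of a flat valley rather than at its left edge.
        while lo > 0 and profile[lo - 1] == profile[i]:
            lo -= 1
        while hi < w - 1 and profile[hi + 1] == profile[i]:
            hi += 1
        cuts.append((lo + hi) // 2)
    return sorted(cuts)


def split_teeth(row_img: np.ndarray, n_teeth: int) -> list:
    """Split a row into single teeth, then each tooth into left and right halves."""
    bounds = [0] + find_cuts(row_img, n_teeth) + [row_img.shape[1]]
    halves = []
    for a, b in zip(bounds[:-1], bounds[1:]):
        tooth = row_img[:, a:b]
        mid = tooth.shape[1] // 2
        halves.extend([tooth[:, :mid], tooth[:, mid:]])
    return halves


# Synthetic row of three 30-pixel-wide teeth separated by dark gaps.
row = np.full((20, 90), 200, dtype=np.uint8)
row[:, 29:32] = 0
row[:, 59:62] = 0
print(find_cuts(row, 3))          # [30, 60]
print(len(split_teeth(row, 3)))   # 6 half-tooth images
```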

B. YOLO Deep Learning Method

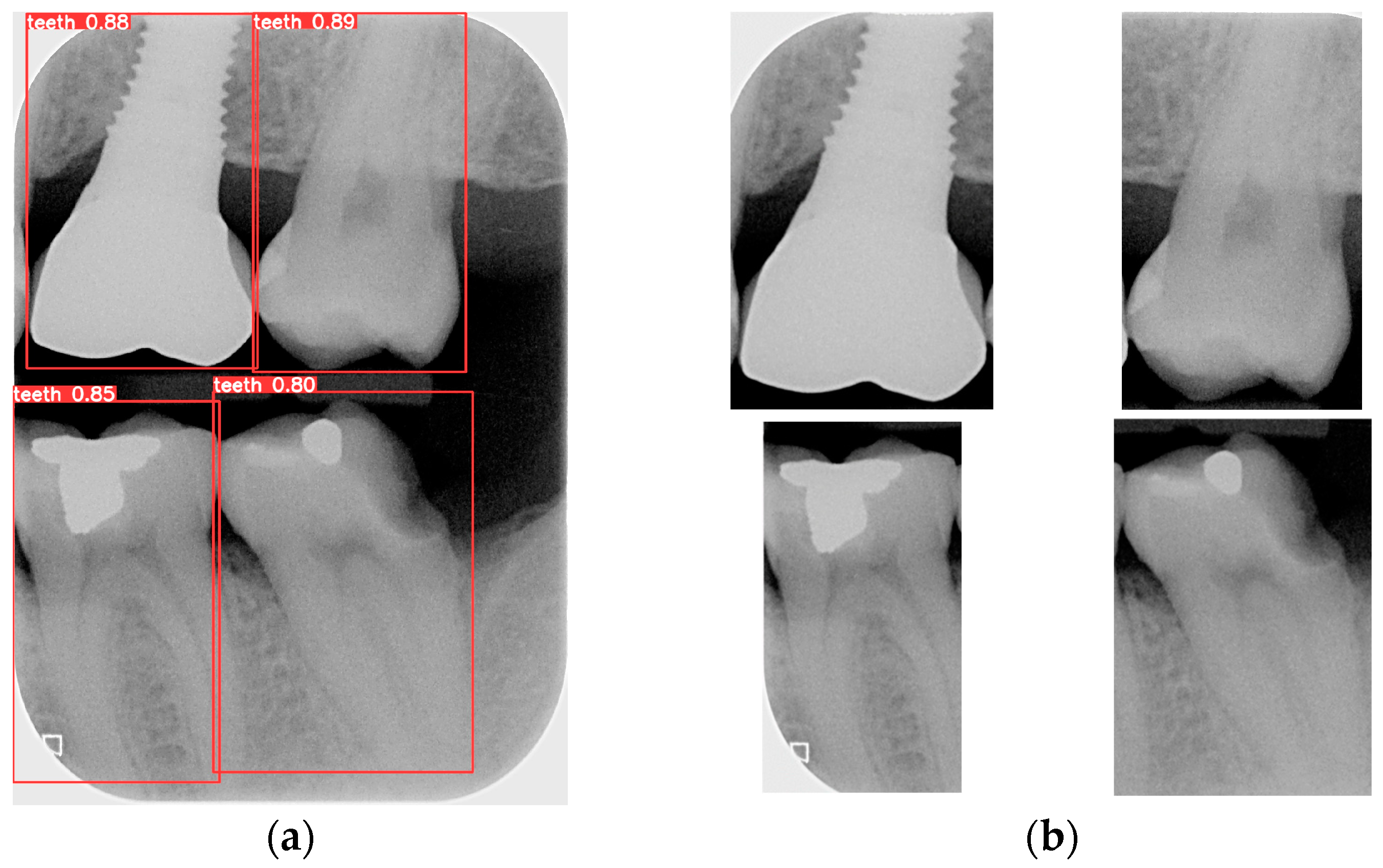

Object detection has long been a challenging task in computer vision. Traditional methods often require multiple steps, including region extraction, feature computation, and classification, which leads to slow processing and high complexity. Recent advances in deep learning have brought significant progress in object detection. YOLO achieves excellent accuracy while processing images much faster than traditional methods, because it detects and localizes all objects in the entire image in a single pass rather than through multiple stages. In this study, YOLO is used to locate the teeth by finding the coordinates of each tooth in the BW. Training the YOLO model requires a large amount of annotated data for training and validation, with each target tooth labeled in the images. The trained model is then applied to the entire BW database to identify and label the position of each tooth. Once the coordinates of each tooth are determined, the length and width of the four teeth detected in the BW are used to crop each tooth into an individual image, as shown in Figure 8.
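The coordinate-to-crop step can be sketched as follows, assuming detections are given in the normalized YOLO label format (x-center, y-center, width, height, each in [0, 1]). How the study exported its YOLOv8 predictions is not stated, and `yolo_to_pixels` and `boxes_to_crops` are hypothetical helpers. Boxes are clipped to the image, and crops are returned from left to right.

```python
import numpy as np


def yolo_to_pixels(box, img_w: int, img_h: int) -> tuple:
    """Convert a normalized (cx, cy, w, h) box to clipped pixel corners."""
    cx, cy, bw, bh = box
    x1 = max(0, int(round((cx - bw / 2) * img_w)))
    y1 = max(0, int(round((cy - bh / 2) * img_h)))
    x2 = min(img_w, int(round((cx + bw / 2) * img_w)))
    y2 = min(img_h, int(round((cy + bh / 2) * img_h)))
    return x1, y1, x2, y2


def boxes_to_crops(img: np.ndarray, boxes) -> list:
    """Crop one image per detected tooth, ordered from left to right."""
    h, w = img.shape[:2]
    corners = sorted(yolo_to_pixels(b, w, h) for b in boxes)   # sorts by x1 first
    return [img[y1:y2, x1:x2] for x1, y1, x2, y2 in corners if x2 > x1 and y2 > y1]


bw = np.zeros((100, 200), dtype=np.uint8)   # placeholder BW image
detections = [(0.5, 0.5, 0.5, 0.5), (0.1, 0.5, 0.4, 1.0)]
for crop in boxes_to_crops(bw, detections):
    print(crop.shape)   # (100, 60) then (50, 100)
```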

2.3. Image Enhancement

The image enhancement described in this subsection aims to make lesions more apparent, so that the images are better suited to CNN training and analysis. In a BW, tooth decay appears as dark gaps, teeth appear grayish-white, and dental restorations appear bright white. The enhancement therefore focuses on increasing the contrast among black, gray, and white, particularly at the junctions between dental restorations, teeth, and cavities (black background), where irregular, non-smooth boundaries indicate the presence of caries. Segmented images may lack sufficient contrast or show only subtle signs of caries, which can hinder the CNN model's ability to learn discriminative features. To address this, image enhancement techniques are used to increase contrast and improve lesion visibility. Histogram equalization (HISTEQ) is used to enhance dark and bright areas and increase overall contrast, highlighting lesion locations before CNN training. In addition, intensity value mapping (IAM) and adaptive histogram equalization (AHE) are applied to further improve image quality, as illustrated in Figure 9.
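Two of these enhancements can be sketched in NumPy for 8-bit grayscale images. `histeq` is standard global histogram equalization through the cumulative histogram. For intensity value mapping the text gives no parameters, so `adjust_intensity` assumes a linear stretch that saturates the darkest and brightest 1% of pixels, similar in spirit to the defaults of MATLAB's `imadjust`. Both function names are illustrative.

```python
import numpy as np


def histeq(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of a uint8 image via a lookup table."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]            # count at the darkest occupied level
    if cdf[-1] == cdf_min:               # constant image: nothing to equalize
        return img.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[img]


def adjust_intensity(img: np.ndarray, low_pct: float = 1.0,
                     high_pct: float = 99.0) -> np.ndarray:
    """Linearly map the [low, high] percentile range to [0, 255], saturating the tails."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:
        return img.copy()
    scaled = (img.astype(float) - lo) / (hi - lo)
    return np.round(np.clip(scaled, 0.0, 1.0) * 255).astype(np.uint8)


img = np.array([[0, 0], [100, 200]], dtype=np.uint8)
print(histeq(img).tolist())   # [[0, 0], [128, 255]]
ramp = np.arange(50, 151, dtype=np.uint8).reshape(1, -1)
out = adjust_intensity(ramp)
print(out.min(), out.max())   # 0 255
```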

When each of these three enhancements was applied on its own, the lesions were still not particularly noticeable: the white of the dental restoration, the off-white of the teeth and gingiva, and the black of the background did not contrast sharply, and the edges between these regions remained blurred, which may make it difficult for the CNN model to recognize lesions. Therefore, this study combines the three enhancements (interaction enhancement) to improve training accuracy. For example, combining HISTEQ and AHE increases the contrast in the image and makes secondary caries easier to detect. The result of the interaction enhancement is shown in Figure 10.
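A combined ("interaction") enhancement can be sketched as a composition of functions; the example below chains a simplified AHE with a final global HISTEQ. Assumptions: AHE is approximated by equalizing each tile of a fixed grid independently, without the clip limit and inter-tile interpolation used by CLAHE implementations such as MATLAB's `adapthisteq`, so tile seams can appear; the 4 × 4 grid and the order of operations are illustrative, since the text does not specify them.

```python
import numpy as np


def equalize(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of a uint8 array (HISTEQ)."""
    if img.size == 0:
        return img.copy()
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:               # constant region: leave unchanged
        return img.copy()
    lut = np.clip(np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255), 0, 255)
    return lut.astype(np.uint8)[img]


def tiled_ahe(img: np.ndarray, grid=(4, 4)) -> np.ndarray:
    """Simplified AHE: equalize each tile independently (no clip limit, no interpolation)."""
    out = np.empty_like(img)
    ys = np.linspace(0, img.shape[0], grid[0] + 1).astype(int)
    xs = np.linspace(0, img.shape[1], grid[1] + 1).astype(int)
    for y0, y1 in zip(ys[:-1], ys[1:]):
        for x0, x1 in zip(xs[:-1], xs[1:]):
            out[y0:y1, x0:x1] = equalize(img[y0:y1, x0:x1])
    return out


def interaction_enhance(img: np.ndarray, steps=None) -> np.ndarray:
    """Apply several enhancements in sequence (default: AHE, then HISTEQ)."""
    for step in steps or (tiled_ahe, equalize):
        img = step(img)
    return img


# Low-contrast stripes with gray levels 10 and 20.
img = np.zeros((8, 8), dtype=np.uint8)
img[:, ::2], img[:, 1::2] = 10, 20
print(np.unique(interaction_enhance(img)).tolist())   # [0, 255]
```

Passing a different `steps` tuple (for example, an intensity-mapping function followed by `equalize`) is one way to run the kind of enhancement-combination ablation described here.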

2.4. CNN Training and Validation

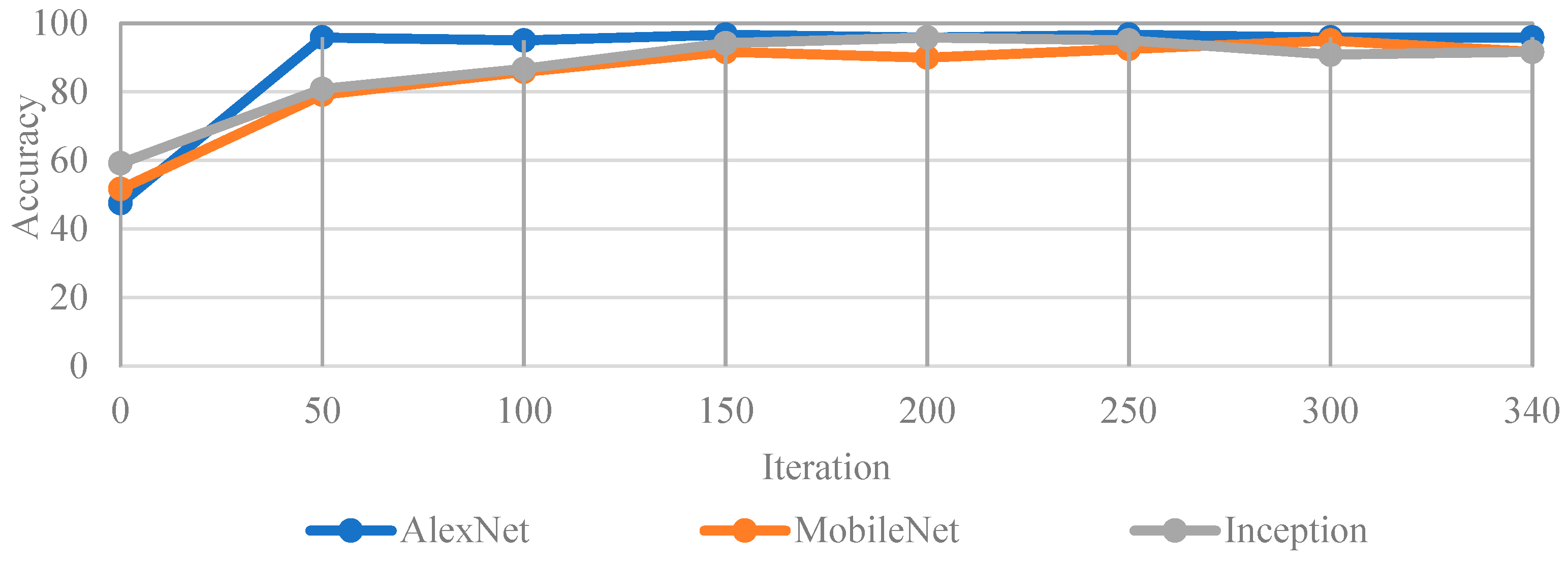

Various CNN models were employed for image classification. Using AlexNet as a representative example, Table 2 lists the layer-by-layer architecture of the AlexNet model. During training, each image in the validation set was individually verified to calculate the average validation accuracy. The CNN models were trained on these classified datasets with the input image size set to 227 × 227 × 3, providing a consistent, standardized input size. The last three layers of the model (the fully connected, softmax, and classification layers) were replaced with new layers configured to classify images into two categories, corresponding to the two classes being analyzed. After training, images from the test set were fed into the model in random order, and the model classified them based on the features learned during training. A confusion matrix was then generated to analyze the classification results, giving a detailed breakdown of the model's accuracy and showing how well the model distinguished between the classes, including areas of strength and potential improvement. This systematic procedure provided a comprehensive assessment of the CNN's performance in classifying BW images.
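The accuracy, precision, and recall used to compare models follow directly from the binary confusion matrix. The minimal sketch below assumes label 1 means "caries present"; the function name is illustrative and is not the MATLAB routine used in the study.

```python
def confusion_metrics(y_true, y_pred, positive=1) -> dict:
    """Binary confusion matrix counts and derived metrics (positive = caries present)."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1      # lesion predicted and present
            else:
                fp += 1      # lesion predicted but absent
        elif t == positive:
            fn += 1          # lesion missed
        else:
            tn += 1          # healthy correctly rejected
    total = tp + fp + tn + fn
    return {
        "tp": tp, "fp": fp, "tn": tn, "fn": fn,
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


m = confusion_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(m["tp"], m["fp"], m["tn"], m["fn"], m["accuracy"])  # 2 1 1 1 0.6
```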

Hyperparameter Adjustment

Training a CNN involves many hyperparameters, such as the number of layers in the network, the loss function, the convolution kernel size, and the learning rate. This study adjusted three of them: the initial learning rate, the maximum number of epochs, and the mini-batch size. The hyperparameter values are listed in Table 3. The experiments were conducted on a platform equipped with an Apple M1 processor (8-core CPU and 8-core GPU) operating at 3.2 GHz and 16 GB of DRAM. The software environment included MATLAB R2023a and Deep Network Designer version 14.6.

4. Discussion

This study uses deep learning techniques to detect whether individual teeth in a BW that are affected by dental restorations and dental braces show signs of caries. To enhance model performance, image processing and enhancement techniques are incorporated to improve the training outcomes of the deep learning models. The system is primarily designed to support dental professionals in clinical diagnosis and to serve as a diagnostic aid, especially for junior dentists learning to identify carious lesions. Moreover, this study addresses a significant gap in current research, in which caries under dental restorations and dental braces have often been excluded from diagnostic models. Compared with previous studies, our experimental results show better detection of caries beneath restorations and around braces. For instance, Ayhan et al. [34] developed a U-Net-based CNN model for caries detection on bitewing radiographs, achieving a precision of 65.1% and a recall of 72.7%. In contrast, our model achieved higher precision and recall, indicating improved diagnostic accuracy in cases with complex restorations and orthodontic appliances. Furthermore, Pérez de Frutos et al. [35] used deep learning methods to detect proximal caries lesions in BW images, emphasizing the potential of AI to enhance diagnostic capabilities. Including images with restorations and orthodontic appliances in our dataset addresses a limitation of earlier research, in which such cases were often excluded. Additionally, our model's performance metrics surpass those reported in prior studies that used similar deep learning architectures for caries detection, indicating a significant advance in diagnostic accuracy. This is particularly evident in comparison with the work of Ayhan et al. [36], whose deep learning approach for caries detection and segmentation on bitewing radiographs achieved a precision of 93.4% and a recall of 83.4%, whereas our YOLOv8-based detection reached a precision of 99.4% and a recall of 98.5%, outperforming these state-of-the-art results. Overall, the contributions and innovations of this study are as follows:

We evaluated two segmentation techniques for BWs: the state-of-the-art YOLOv8 model and our rotation-aware single-tooth segmentation algorithm, which compensates for errors caused by angular variations in BWs. Both methods achieved comparable segmentation accuracy (96–98%), but the proposed algorithm's inference was at least twice as fast as that of YOLOv8.

We compared our deep learning model with recent BW-based single-tooth detection studies [30,31] and observed improvements, with precision increasing by up to 13.25% and recall by 12.55%. The proposed method achieved a maximum precision of 99.40% and a recall of 98.50% in detecting the targeted lesions.

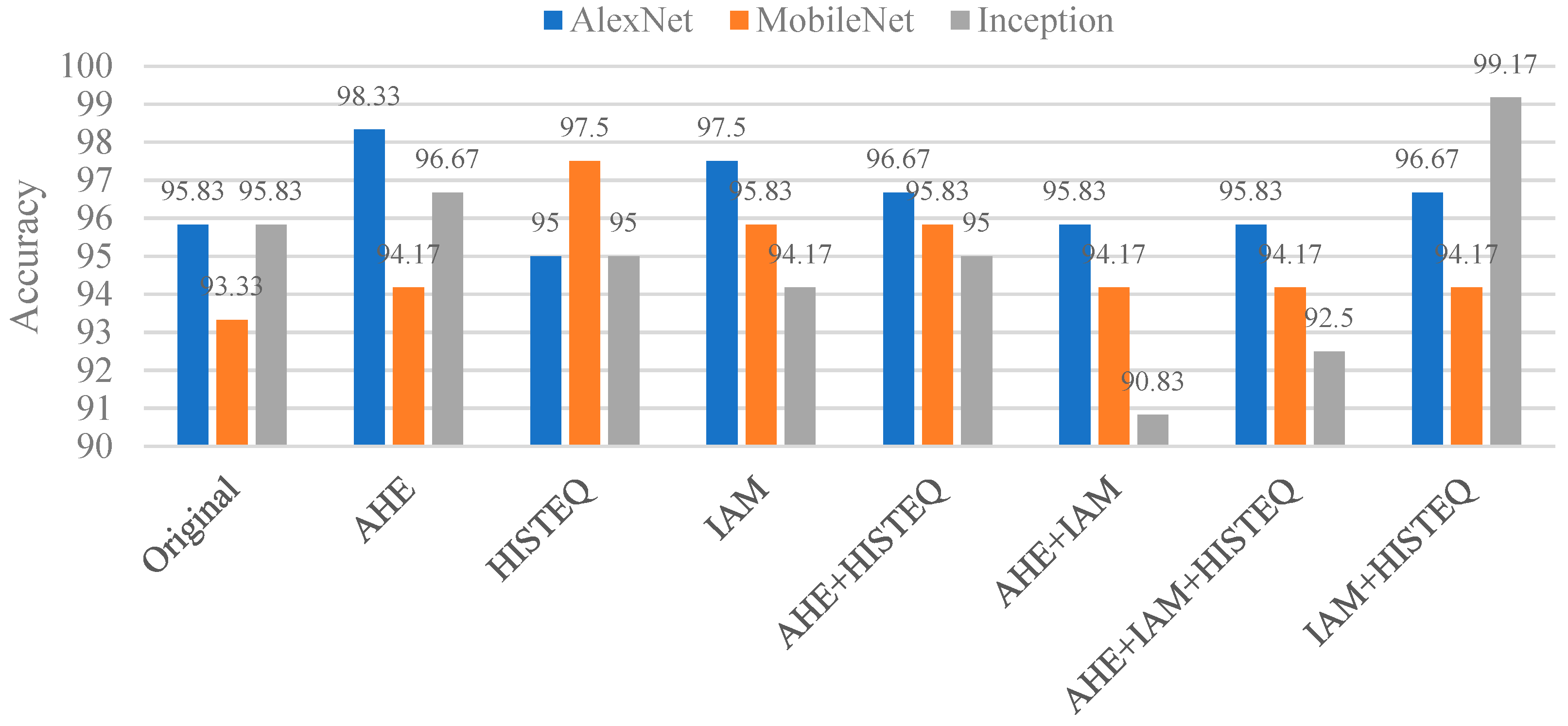

To detect caries under restorations and dental braces, we applied various image enhancement techniques and conducted ablation studies to verify their effectiveness. The best-performing model was Inception-v3, which achieved an accuracy of 99.17%, a 3.34% improvement over the baseline without enhancement. Compared with a recent method [32], our system showed a 26.36% improvement in lesion detection.

To evaluate clinical applicability, we tested the system on external datasets not used for training or validation. The system achieved over 90% accuracy in identifying caries under dental restorations and dental braces, demonstrating its robustness and stability in practical diagnostic scenarios.

Despite the significant technical progress made in this study, several limitations remain. First, the limited size of the original dataset may affect the model's generalization capability. Although data augmentation techniques were employed to alleviate the shortage of samples, further validation on large-scale and diverse datasets is necessary. To address this concern, we evaluated the model's robustness on an external open-source dataset [33] that was entirely separate from the training and validation data. In addition, we plan to conduct prospective real-world validation in collaboration with multiple medical institutions to expand our radiographic database and improve the clinical applicability and stability of the proposed system. Second, although classic CNN architectures such as AlexNet and Inception-v3 achieved high accuracy in this study, they were chosen mainly for their stability on moderately sized datasets and their relatively low computational requirements, which make them suitable for initial validation. However, recent studies have shown that Vision Transformer (ViT) models exhibit superior performance in medical image analysis, particularly in capturing long-range dependencies and global features, which are essential for identifying complex structures. ViT models typically require large-scale datasets to achieve high accuracy; Azad et al. [37] showed that the effectiveness of ViT architectures in medical imaging tasks depends strongly on the availability of extensive training data. In future work, we will collect more BW data, incorporate ViT models, and assess their potential to improve model performance and generalization.

Third, although effective, the current image enhancement strategy retains substantial background information during lesion detection; especially when implants are present, non-lesion areas may remain overly prominent. Future work will explore alternative enhancement and preprocessing techniques that better suppress irrelevant background and emphasize pathological regions. Fourth, this study did not consider cervical burnout, which can mimic carious lesions on BW images and potentially lead to false-positive detections. In future work, we will explore artifact-reduction techniques and model adaptations to distinguish true lesions from cervical burnout. Fifth, this study did not specifically address radiolucent restorative materials, such as certain composite resins, which can appear radiolucent on radiographs and be mistaken for caries, posing a risk of false-positive diagnoses. We plan to investigate methods to differentiate true caries from such radiolucent materials. Finally, integrating the system into clinical practice requires ensuring compatibility with existing dental software and meeting regulatory standards; we will work closely with practitioners to optimize usability and ensure compliance with clinical guidelines.