From Trust in Automation to Trust in AI in Healthcare: A 30-Year Longitudinal Review and an Interdisciplinary Framework

Abstract

1. Introduction

- From automation trust to AI trust, how has human–machine trust evolved over the past thirty years? This comprises two sub-questions: A. What is the historical background of interpersonal trust, and what early progress was made on trust in automation? B. How has human–AI trust evolved since the advent of AI systems?

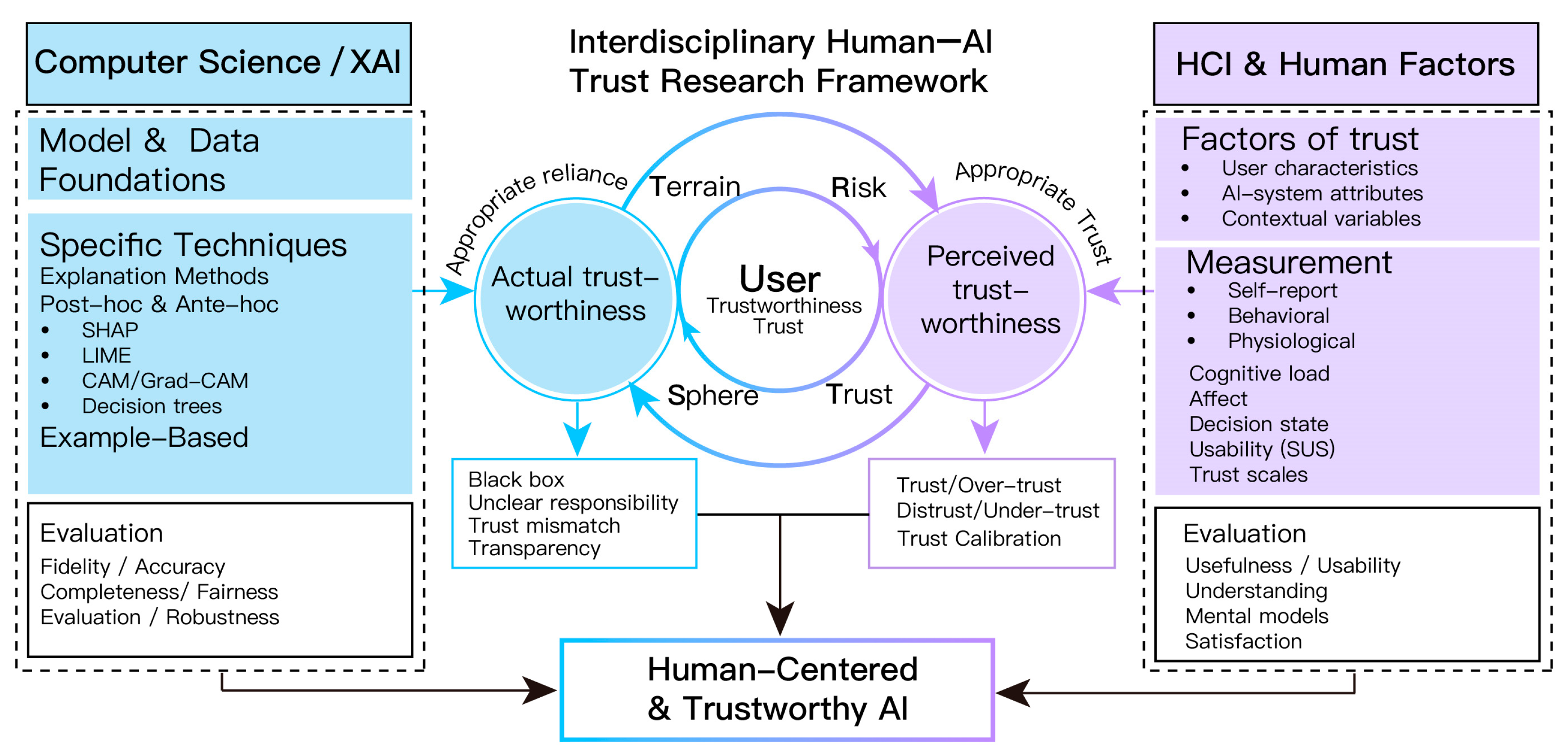

- Through which key mechanisms do XAI and HCI, respectively, influence actual trustworthiness and perceived trustworthiness, and how can these lines of work be integrated into a unified framework that bridges the two?

- What research gaps, major challenges, and future opportunities define the current landscape of AI trust?

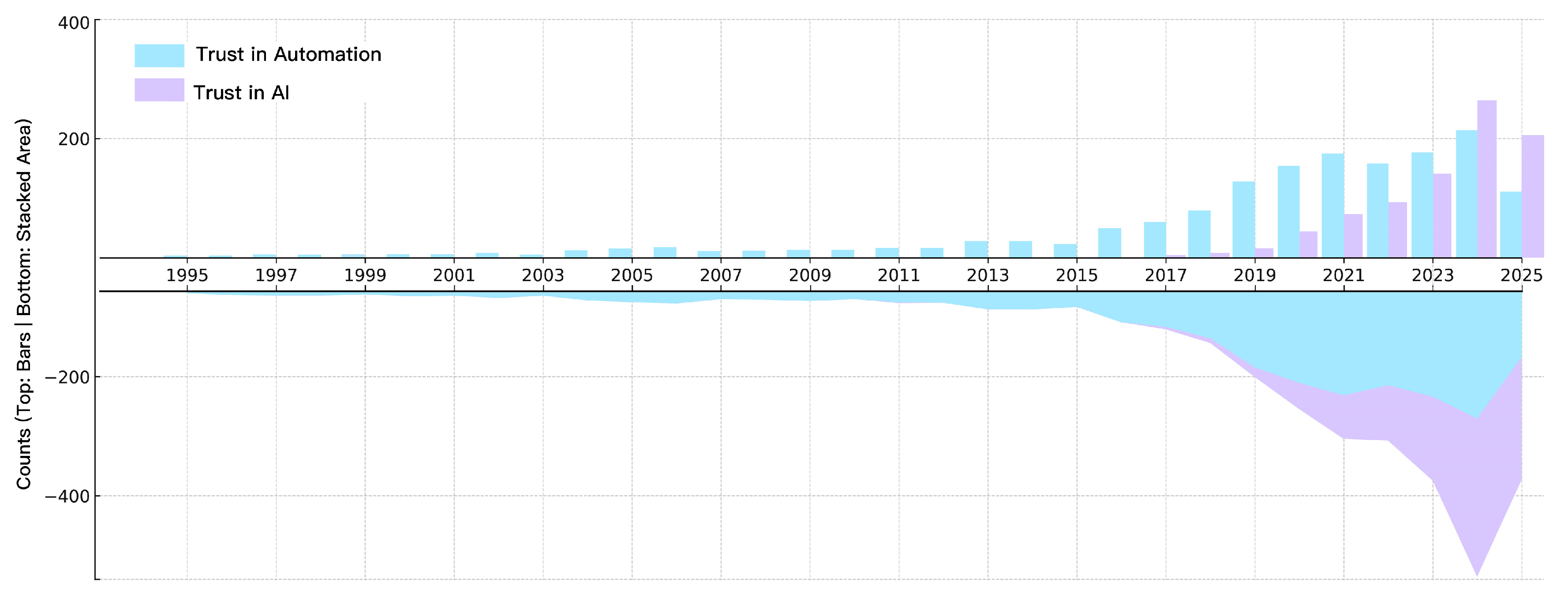

- We synthesize the thirty-year evolution of human–machine trust research from “automation” to “AI”, summarizing the technical context, trust mechanisms, theoretical developments, and practical foci of each phase.

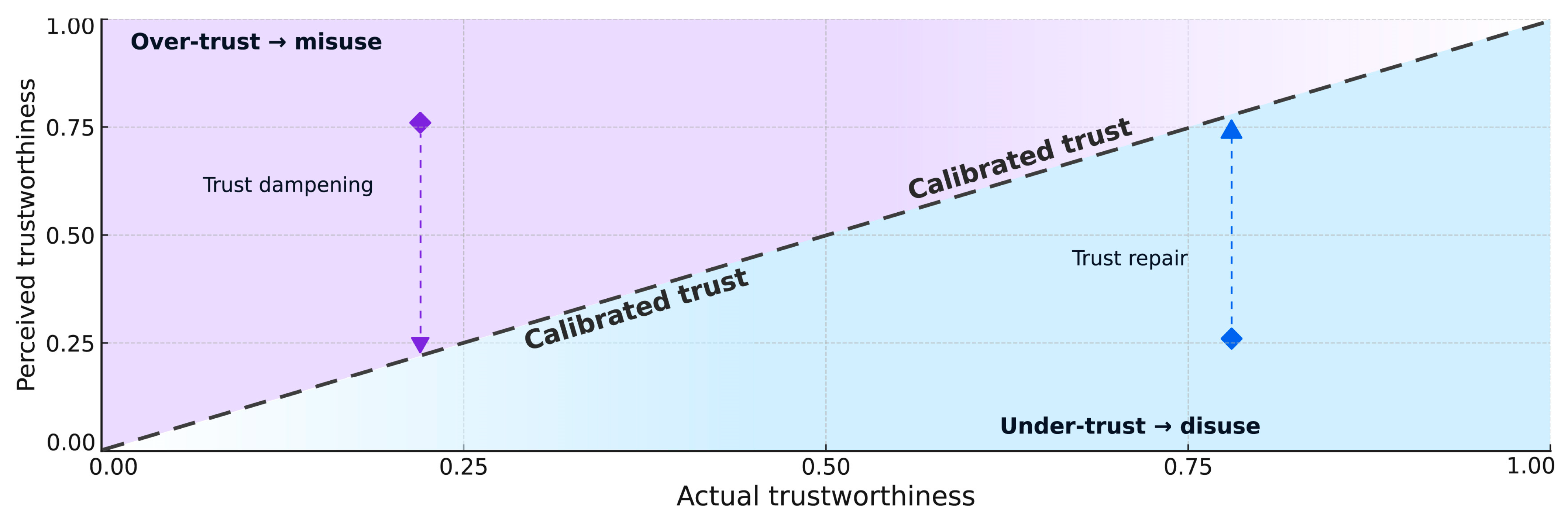

- We compare trust research in the XAI and HCI communities and develop an Interdisciplinary Human-AI Trust Research (I-HATR) framework that places actual and perceived trustworthiness within the same coordinate system, offering a multidimensional understanding of human–AI trust and helping to bridge the gap between the two.

- We outline future research opportunities, major challenges, and actionable directions based on current trends.
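The core move of the I-HATR framework, placing actual and perceived trustworthiness in one coordinate system, can be illustrated with a minimal sketch. It assumes that both quantities have already been normalized to [0, 1] (for example, validated accuracy on one axis and a rescaled questionnaire score on the other); the names `TrustPoint` and `calibration_state` and the 0.1 tolerance band are hypothetical choices for illustration, not part of the framework as defined here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustPoint:
    """A point in the (actual, perceived) trustworthiness plane (hypothetical)."""
    actual: float     # system-side trustworthiness in [0, 1], e.g. validated accuracy
    perceived: float  # user-side perceived trustworthiness in [0, 1], e.g. rescaled survey score


def calibration_state(p: TrustPoint, tolerance: float = 0.1) -> str:
    """Classify a point relative to the diagonal where perceived == actual.

    'calibrated' if the two coordinates differ by at most `tolerance`,
    'overtrust' if perception exceeds actual trustworthiness,
    'undertrust' otherwise. The tolerance value is an assumption.
    """
    for value in (p.actual, p.perceived):
        if not 0.0 <= value <= 1.0:
            raise ValueError("coordinates must lie in [0, 1]")
    gap = p.perceived - p.actual  # signed gap keeps the direction of miscalibration
    if abs(gap) <= tolerance:
        return "calibrated"
    return "overtrust" if gap > 0 else "undertrust"
```

Points near the diagonal correspond to calibrated trust; distance above or below it indicates overtrust or undertrust. Keeping the gap signed preserves the direction of miscalibration, which matters because overtrust tends toward misuse of a system and undertrust toward disuse.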

2. Methods

2.1. Scope and Positioning

2.2. Search and Selection

3. Longitudinal Evolution: From Trust in Automation to Trust in AI

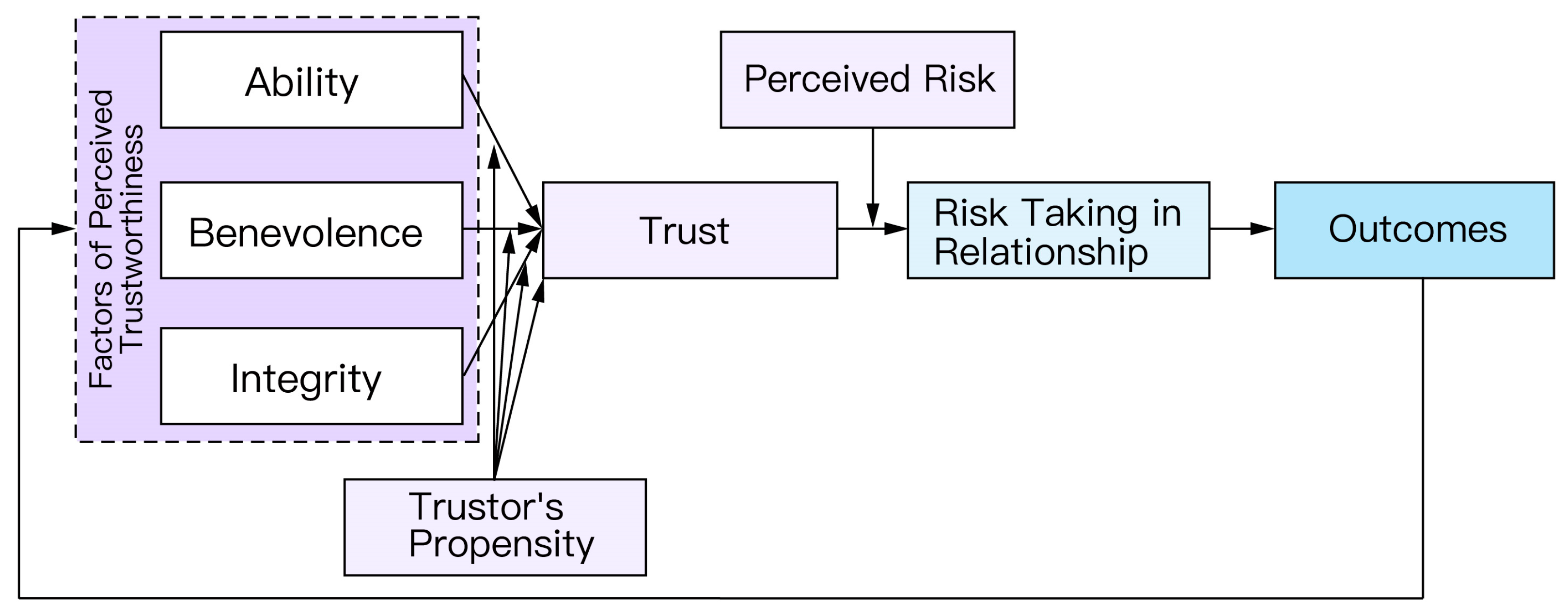

3.1. Foundations of Trust in Automation

3.2. Trust in Automation

3.2.1. Technological Trajectory

3.2.2. Theory and Paradigm Shifts

3.2.3. Applications and Extensions

3.3. Trust in AI

3.3.1. Technological Developments

3.3.2. Theory and Paradigm Shifts

3.3.3. Application Extensions

4. An Interdisciplinary XAI–HCI Framework for Human–AI Trust

4.1. XAI Pathway (Left Wing of the I-HATR)

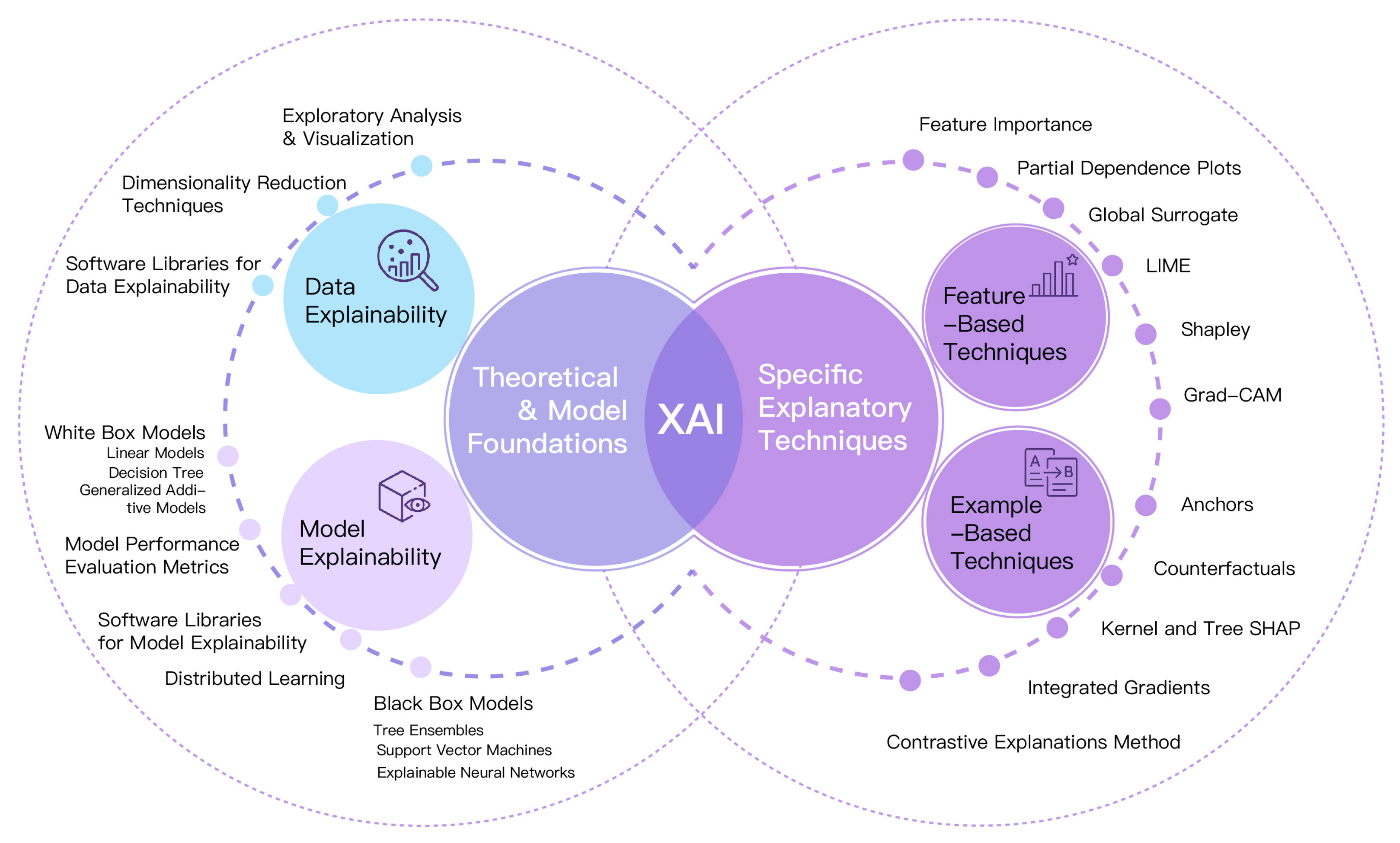

4.1.1. From Opacity to Explainability: A Task-Oriented Taxonomy for Data and Models

4.1.2. Ante-Hoc (Intrinsic) and Post-Hoc Approaches
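The contrast between the two families can be made concrete with a small, dependency-free sketch. The ante hoc side is a transparent linear score whose per-feature contributions are themselves the explanation; the post hoc side applies permutation feature importance, one model-agnostic technique chosen here for brevity, to the same model treated as a black box. The weights, feature count, and function names are hypothetical.

```python
import random
from typing import Callable, List, Sequence

# --- Ante hoc (intrinsic): the model's structure is the explanation ---
WEIGHTS = [0.8, -0.5, 0.0]  # hypothetical weights for three illustrative features
BIAS = 0.1


def linear_score(x: Sequence[float]) -> float:
    """Transparent linear risk score."""
    return BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))


def intrinsic_contributions(x: Sequence[float]) -> List[float]:
    """Exact per-feature contributions: linear_score(x) == BIAS + sum(result)."""
    return [w * xi for w, xi in zip(WEIGHTS, x)]


# --- Post hoc: explain any model from its inputs and outputs only ---
def permutation_importance(
    model: Callable[[Sequence[float]], float],
    X: List[List[float]],
    y: Sequence[float],
    n_repeats: int = 20,
    seed: int = 0,
) -> List[float]:
    """Mean increase in squared error when one feature column is shuffled."""
    rng = random.Random(seed)

    def mse(rows: List[List[float]]) -> float:
        return sum((model(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total += mse(permuted) - baseline
        importances.append(total / n_repeats)
    return importances
```

A quick sanity check is to generate targets from `linear_score` itself: the baseline error is then zero and the zero-weight feature receives zero permutation importance. With real clinical models, post hoc scores describe the model's behavior on the evaluation data, not necessarily the underlying clinical mechanism.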

4.2. HCI Pathway (Right Wing of the I-HATR)

4.2.1. Determinants of Human–AI Trust

- User-related factors

- AI-system factors

- Contextual factors

4.2.2. Evaluation and Measurement of Human–AI Trust

- Self-report measures

- Behavioral measures

- Psycho-/neurophysiological measures
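Behavioral measures are usually derived from logged decisions rather than from questionnaires. The sketch below assumes a two-stage protocol in which each participant records an initial decision, sees the AI recommendation, and then commits to a final decision. Agreement fraction and switch fraction are commonly used behavioral indicators of reliance in AI-assisted decision-making studies; the `Trial` record, the function names, and the over-/under-reliance split are illustrative choices, not measures defined in this review.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Trial:
    """One logged decision in a hypothetical two-stage AI-assisted task."""
    initial: str      # user's decision before seeing the AI advice
    advice: str       # the AI recommendation shown to the user
    final: str        # user's decision after seeing the advice
    ai_correct: bool  # whether the advice matched the ground-truth label


def agreement_fraction(trials: List[Trial]) -> float:
    """Share of all trials in which the final decision equals the AI advice."""
    return sum(t.final == t.advice for t in trials) / len(trials)


def switch_fraction(trials: List[Trial]) -> Optional[float]:
    """Among trials with initial disagreement, share in which the user switched to the AI."""
    disagreed = [t for t in trials if t.initial != t.advice]
    if not disagreed:
        return None
    return sum(t.final == t.advice for t in disagreed) / len(disagreed)


def reliance_errors(trials: List[Trial]) -> Tuple[Optional[float], Optional[float]]:
    """Return (over-reliance, under-reliance) rates.

    Over-reliance: share of incorrect advice that was followed.
    Under-reliance: share of correct advice that was rejected.
    """
    wrong = [t for t in trials if not t.ai_correct]
    right = [t for t in trials if t.ai_correct]
    over = sum(t.final == t.advice for t in wrong) / len(wrong) if wrong else None
    under = sum(t.final != t.advice for t in right) / len(right) if right else None
    return over, under
```

Splitting reliance by advice correctness matters for calibration: a high agreement fraction is desirable only when the advice is usually correct, so over- and under-reliance rates say more about appropriate trust than agreement alone.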

5. Discussion

5.1. Paradigm Shifts and the Evolution of Measurement

5.2. From Parallel Tracks to Resonance: Aligning XAI and HCI

5.3. Operationalizing Complexity

5.4. Compliance and Societal Implications

6. Limitations, Research Gaps, and Future Directions

6.1. Limitations

6.2. Research Gaps and Future Directions

- (1) Bridge the split between system metrics and human measures.

- (2) Close the “performance–deployability” gap in healthcare.

- (3) Ethics, culture, and time horizons:

  - Conduct longitudinal studies with real users over 12–24 months, including scheduled interim assessments; extend beyond this window only when interim findings justify it;

  - Examine generational differences (e.g., Gen Z vs. Millennials) in AI trust;

  - Develop models that assess trust and system capability synchronously, so that asynchrony between the two can be detected in real time;

  - Apply complex-systems approaches (e.g., differential-equation models) to capture nonlinear trust dynamics and “butterfly effects,” in which small early events produce disproportionate later shifts in trust.

- (4) Rebalance underexplored areas.

- (5) From one-way trust to reciprocal trust.

- (6) Regulatory adaptability.
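Two of the directions under (3), synchronous trust–capability assessment and differential-equation models of trust dynamics, can be combined in a toy sketch. It assumes a single first-order equation, dT/dt = k (C(t) − T), in which trust T relaxes toward a time-varying capability C(t) at a hypothetical rate k that is larger when trust falls than when it rises, and it flags asynchrony whenever |T − C| exceeds a hypothetical threshold. The rates, threshold, and function names are illustrative assumptions, not estimates from any study.

```python
from typing import Callable, List, Tuple

Sample = Tuple[float, float, float]  # (time, trust, capability)


def simulate_trust(
    capability: Callable[[float], float],
    trust0: float = 0.5,
    k_gain: float = 0.3,  # hypothetical rate when trust rises toward capability
    k_loss: float = 1.0,  # hypothetical rate when trust falls (k_loss > k_gain)
    dt: float = 0.1,
    steps: int = 200,
) -> List[Sample]:
    """Forward-Euler integration of dT/dt = k * (C(t) - T).

    k is k_gain when C(t) > T and k_loss otherwise, encoding the assumption
    that trust is lost faster than it is rebuilt. With dt * k <= 1 each update
    lands between the current trust and C(t), so it never overshoots.
    """
    if dt * max(k_gain, k_loss) > 1.0:
        raise ValueError("step size too large for a stable Euler update")
    trust = trust0
    history: List[Sample] = []
    for i in range(steps + 1):
        t = i * dt
        c = capability(t)
        history.append((t, trust, c))
        k = k_gain if c > trust else k_loss
        trust += dt * k * (c - trust)
        trust = min(1.0, max(0.0, trust))  # keep trust on the [0, 1] scale
    return history


def asynchrony_times(history: List[Sample], threshold: float = 0.2) -> List[float]:
    """Times at which |trust - capability| exceeds the threshold."""
    return [t for t, trust, c in history if abs(trust - c) > threshold]
```

With a capability that drops from 0.9 to 0.5 at t = 10 and recovers at t = 15 (starting from calibrated trust of 0.9), the flagged overtrust episode after the drop is short, while the undertrust episode after recovery lasts several times longer, reflecting the assumed asymmetry. A single first-order equation like this cannot produce chaotic sensitivity to initial conditions; modeling genuine “butterfly effects” would require coupled or higher-dimensional dynamics.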

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gao, J.; Wang, D. Quantifying the use and potential benefits of artificial intelligence in scientific research. Nat. Hum. Behav. 2024, 8, 2281–2292. [Google Scholar] [CrossRef] [PubMed]

- Bughin, J.; Hazan, E.; Lund, S.; Dahlström, P.; Wiesinger, A.; Subramaniam, A. Skill shift: Automation and the future of the workforce. McKinsey Glob. Inst. 2018, 1, 3–84. [Google Scholar]

- Sadybekov, A.V.; Katritch, V. Computational approaches streamlining drug discovery. Nature 2023, 616, 673–685. [Google Scholar] [CrossRef]

- Thangavel, K.; Sabatini, R.; Gardi, A.; Ranasinghe, K.; Hilton, S.; Servidia, P.; Spiller, D. Artificial intelligence for trusted autonomous satellite operations. Prog. Aerosp. Sci. 2024, 144, 100960. [Google Scholar] [CrossRef]

- King, A. Digital targeting: Artificial intelligence, data, and military intelligence. J. Glob. Secur. Stud. 2024, 9, ogae009. [Google Scholar] [CrossRef]

- Sarpatwar, K.; Sitaramagiridharganesh Ganapavarapu, V.; Shanmugam, K.; Rahman, A.; Vaculin, R. Blockchain enabled AI marketplace: The price you pay for trust. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019. [Google Scholar]

- Mugari, I.; Obioha, E.E. Predictive policing and crime control in the United States of America and Europe: Trends in a decade of research and the future of predictive policing. Soc. Sci. 2021, 10, 234. [Google Scholar] [CrossRef]

- Anshari, M.; Hamdan, M.; Ahmad, N.; Ali, E. Public service delivery, artificial intelligence and the sustainable development goals: Trends, evidence and complexities. J. Sci. Technol. Policy Manag. 2025, 16, 163–181. [Google Scholar] [CrossRef]

- Jiang, P.; Sinha, S.; Aldape, K.; Hannenhalli, S.; Sahinalp, C.; Ruppin, E. Big data in basic and translational cancer research. Nat. Rev. Cancer 2022, 22, 625–639. [Google Scholar] [CrossRef]

- Elemento, O.; Leslie, C.; Lundin, J.; Tourassi, G. Artificial intelligence in cancer research, diagnosis and therapy. Nat. Rev. Cancer 2021, 21, 747–752. [Google Scholar] [CrossRef]

- EI Naqa, I.; Karolak, A.; Luo, Y.; Folio, L.; Tarhini, A.A.; Rollison, D.; Parodi, K. Translation of AI into oncology clinical practice. Oncogene 2023, 42, 3089–3097. [Google Scholar] [CrossRef] [PubMed]

- Kumar, P.; Chauhan, S.; Awasthi, L.K. Artificial intelligence in healthcare: Review, ethics, trust challenges & future research directions. Eng. Appl. Artif. Intell. 2023, 120, 105894. [Google Scholar] [CrossRef]

- Chanda, T.; Haggenmueller, S.; Bucher, T.C.; Holland-Letz, T.; Kittler, H.; Tschandl, P.; Heppt, M.V.; Berking, C.; Utikal, J.S.; Schilling, B.; et al. Dermatologist-like explainable AI enhances melanoma diagnosis accuracy: Eye-tracking study. Nat. Commun. 2025, 16, 4739. [Google Scholar] [CrossRef]

- Waldrop, M.M. What are the limits of deep learning? Proc. Natl. Acad. Sci. USA 2019, 116, 1074–1077. [Google Scholar] [CrossRef]

- Rai, A. Explainable AI: From black box to glass box. J. Acad. Mark. Sci. 2020, 48, 137–141. [Google Scholar] [CrossRef]

- Parasuraman, R.; Riley, V. Humans and automation: Use, misuse, disuse, abuse. Hum. Factors 1997, 39, 230–253. [Google Scholar] [CrossRef]

- Mehrotra, S.; Degachi, C.; Vereschak, O.; Jonker, C.M.; Tielman, M.L. A systematic review on fostering appropriate trust in Human-AI interaction: Trends, opportunities and challenges. ACM J. Responsible Comput. 2024, 1, 1–45. [Google Scholar] [CrossRef]

- Castelvecchi, D. Can we open the black box of AI? Nat. News 2016, 538, 20. [Google Scholar] [CrossRef] [PubMed]

- Retzlaff, C.O.; Angerschmid, A.; Saranti, A.; Schneeberger, D.; Roettger, R.; Mueller, H.; Holzinger, A. Post-hoc vs ante-hoc explanations: xAI design guidelines for data scientists. Cogn. Syst. Res. 2024, 86, 101243. [Google Scholar] [CrossRef]

- Krueger, F.; Riedl, R.; Bartz, J.A.; Cook, K.S.; Gefen, D.; Hancock, P.A.; Lee, M.R.; Mayer, R.C.; Mislin, A.; Müller-Putz, G.R.; et al. A call for transdisciplinary trust research in the artificial intelligence era. Humanit. Soc. Sci. Commun. 2025, 12, 1124. [Google Scholar] [CrossRef]

- Nussberger, A.M.; Luo, L.; Celis, L.E.; Crockett, M.J. Public attitudes value interpretability but prioritize accuracy in Artificial Intelligence. Nat. Commun. 2022, 13, 5821. [Google Scholar] [CrossRef]

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215. [Google Scholar] [CrossRef]

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94–98. [Google Scholar] [CrossRef] [PubMed]

- Liang, W.; Tadesse, G.A.; Ho, D.; Fei-Fei, L.; Zaharia, M.; Zhang, C.; Zou, J. Advances, challenges and opportunities in creating data for trustworthy AI. Nat. Mach. Intell. 2022, 4, 669–677. [Google Scholar] [CrossRef]

- Bengio, Y.; Hinton, G.; Yao, A.; Song, D.; Abbeel, P.; Darrell, T.; Harari, Y.N.; Zhang, Y.Q.; Xue, L.; Shalev-Shwartz, S.; et al. Managing extreme AI risks amid rapid progress. Science 2024, 384, 842–845. [Google Scholar] [CrossRef]

- Sanneman, L.; Shah, J.A. The situation awareness framework for explainable AI (SAFE-AI) and human factors considerations for XAI systems. Int. J. Hum.–Comput. Interact. 2022, 38, 1772–1788. [Google Scholar] [CrossRef]

- Miller, T. Explanation in artificial intelligence: Insights from the social sciences. Artif. Intell. 2019, 267, 1–38. [Google Scholar] [CrossRef]

- Abdul, A.; Vermeulen, J.; Wang, D.; Lim, B.Y.; Kankanhalli, M. Trends and trajectories for explainable, accountable and intelligible systems: An HCI research agenda. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–18. [Google Scholar]

- Ryan, M. In AI we trust: Ethics, artificial intelligence, and reliability. Sci. Eng. Ethics 2020, 26, 2749–2767. [Google Scholar] [CrossRef] [PubMed]

- Vereschak, O.; Bailly, G.; Caramiaux, B. How to evaluate trust in AI-assisted decision making? A survey of empirical methodologies. Proc. ACM Hum.-Comput. Interact. 2021, 5, 1–39. [Google Scholar] [CrossRef]

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80. [Google Scholar] [CrossRef]

- Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434. [Google Scholar] [CrossRef]

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; de Visser, E.; Parasuraman, R. A meta-analysis of factors affecting trust in human–robot interaction. Hum. Factors 2011, 53, 517–527. [Google Scholar] [CrossRef]

- Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660. [Google Scholar] [CrossRef]

- Kaplan, A.D.; Kessler, T.T.; Brill, J.C.; Hancock, P.A. Trust in artificial intelligence: Meta-analytic findings. Hum. Factors 2023, 65, 337–359. [Google Scholar] [CrossRef] [PubMed]

- Asan, O.; Bayrak, A.E.; Choudhury, A. Artificial intelligence and human trust in healthcare: Focus on clinicians. J. Med. Internet Res. 2020, 22, e15154. [Google Scholar] [CrossRef]

- Tucci, F.; Galimberti, S.; Naldini, L.; Valsecchi, M.G.; Aiuti, A. A systematic review and meta-analysis of gene therapy with hematopoietic stem and progenitor cells for monogenic disorders. Nat. Commun. 2022, 13, 1315. [Google Scholar] [CrossRef]

- Catapan, S.D.C.; Sazon, H.; Zheng, S.; Gallegos-Rejas, V.; Mendis, R.; Santiago, P.H.; Kelly, J.T. A systematic review of consumers’ and healthcare professionals’ trust in digital healthcare. NPJ Digit. Med. 2025, 8, 115. [Google Scholar] [CrossRef]

- Rotter, J.B. A new scale for the measurement of interpersonal trust. J. Personal. 1967, 35, 651–665. [Google Scholar] [CrossRef]

- Muir, B.M. Trust between humans and machines, and the design of decision aids. Int. J. Man-Mach. Stud. 1987, 27, 527–539. [Google Scholar] [CrossRef]

- Hwang, P.; Burgers, W.P. Properties of trust: An analytical view. Organ. Behav. Hum. Decis. Process. 1997, 69, 67–73. [Google Scholar] [CrossRef]

- Giffin, K. The contribution of studies of source credibility to a theory of interpersonal trust in the communication process. Psychol. Bull. 1967, 68, 104. [Google Scholar] [CrossRef]

- Bainbridge, L. Ironies of automation. In Analysis, Design and Evaluation of Man–Machine Systems; Pergamon: Oxford, UK, 1983; pp. 129–135. [Google Scholar]

- Parasuraman, R.; Manzey, D.H. Complacency and bias in human use of automation: An attentional integration. Hum. Factors 2010, 52, 381–410. [Google Scholar] [CrossRef] [PubMed]

- Sutton, R.T.; Pincock, D.; Baumgart, D.C.; Sadowski, D.C.; Fedorak, R.N.; Kroeker, K.I. An overview of clinical decision support systems: Benefits, risks, and strategies for success. NPJ Digit. Med. 2020, 3, 17. [Google Scholar] [CrossRef] [PubMed]

- Tonekaboni, S.; Joshi, S.; McCradden, M.D.; Goldenberg, A. What clinicians want: Contextualizing explainable machine learning for clinical decision support. NPJ Digit. Med. 2019, 2, 97. [Google Scholar]

- Wischnewski, M.; Krämer, N.; Müller, E. Measuring and understanding trust calibrations for automated systems: A survey of the state-of-the-art and future directions. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16. [Google Scholar]

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Artificial intelligence explainability in clinical decision support systems: A review of arguments for and against explainability. JMIR Med. Inform. 2022, 10, e28432. [Google Scholar]

- van der Sijs, H.; Aarts, J.; Vulto, A.; Berg, M. Overriding of drug safety alerts in computerized physician order entry. J. Am. Med. Inform. Assoc. 2006, 13, 138–147. [Google Scholar] [CrossRef] [PubMed]

- Wright, A.; Ai, A.; Ash, J.; Wiesen, J.F.; Hickman, T.-T.T.; Aaron, S.; McEvoy, D.; Borkowsky, S.; Dissanayake, P.I.; Embi, P.; et al. Clinical decision support alert malfunctions: Analysis and empirically derived taxonomy. JAMIA 2018, 25, 555–561. [Google Scholar] [CrossRef]

- Fenton, J.J.; Taplin, S.H.; Carney, P.A.; Abraham, L.; Sickles, E.A.; D'Orsi, C.; Berns, E.A.; Cutter, G.; Hendrick, R.E.; Barlow, W.E.; et al. Influence of computer-aided detection on performance of screening mammography. N. Engl. J. Med. 2007, 356, 1399–1409. [Google Scholar] [CrossRef] [PubMed]

- Leveson, N.G.; Turner, C.S. An investigation of the Therac-25 accidents. Computer 1993, 26, 18–41. [Google Scholar] [CrossRef]

- Kok, B.C.; Soh, H. Trust in robots: Challenges and opportunities. Curr. Robot. Rep. 2020, 1, 297–309. [Google Scholar] [CrossRef]

- Castaldo, S. Trust in Market Relationships; Edward Elgar: Worcestershire, UK, 2007. [Google Scholar]

- Teacy, W.L.; Patel, J.; Jennings, N.R.; Luck, M. Travos: Trust and reputation in the context of inaccurate information sources. Auton. Agents Multi-Agent Syst. 2006, 12, 183–198. [Google Scholar] [CrossRef]

- Mayer, R.C.; Davis, J.H.; Schoorman, F.D. An integrative model of organizational trust. Acad. Manag. Rev. 1995, 20, 709–734. [Google Scholar] [CrossRef]

- Coeckelbergh, M. Can we trust robots? Ethics Inf. Technol. 2012, 14, 53–60. [Google Scholar] [CrossRef]

- Azevedo, C.R.; Raizer, K.; Souza, R. A vision for human-machine mutual understanding, trust establishment, and collaboration. In Proceedings of the 2017 IEEE Conference on Cognitive and Computational Aspects of Situation Management (CogSIMA), Savannah, GA, USA, 27–31 March 2017; pp. 1–3. [Google Scholar]

- Okamura, K.; Yamada, S. Adaptive trust calibration for human-AI collaboration. PLoS ONE 2020, 15, e0229132. [Google Scholar] [CrossRef]

- Körber, M. Theoretical considerations and development of a questionnaire to measure trust in automation. In Congress of the International Ergonomics Association; Springer International Publishing: Cham, Switzerland, 2018; pp. 13–30. [Google Scholar]

- Lewis, P.R.; Marsh, S. What is it like to trust a rock? A functionalist perspective on trust and trustworthiness in artificial intelligence. Cogn. Syst. Res. 2022, 72, 33–49. [Google Scholar] [CrossRef]

- Jian, J.-Y.; Bisantz, A.M.; Drury, C.G. Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 2000, 4, 53–71. [Google Scholar] [CrossRef]

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern.-Part A 2000, 30, 286–297. [Google Scholar] [CrossRef] [PubMed]

- McNamara, S.L.; Lin, S.; Mello, M.M.; Diaz, G.; Saria, S.; Sendak, M.P. Intended use and explainability in FDA-cleared AI devices. npj Digit. Med. 2024; advance online publication. [Google Scholar]

- Sheridan, J.E. Organizational culture and employee retention. Acad. Manag. J. 1992, 35, 1036–1056. [Google Scholar] [CrossRef]

- Bussone, A.; Stumpf, S.; O’Sullivan, D. The role of explanations on trust and reliance in clinical decision support systems. In Proceedings of the 2015 IEEE International Conference on Healthcare Informatics (ICHI), Dallas, TX, USA, 21–23 October 2015; pp. 160–169. [Google Scholar]

- Naiseh, M.; Al-Thani, D.; Jiang, N.; Ali, R. How the different explanation classes impact trust calibration: The case of clinical decision support systems. Int. J. Hum.-Comput. Stud. 2023, 169, 102941. [Google Scholar] [CrossRef]

- Raj, M.; Seamans, R. Primer on artificial intelligence and robotics. J. Organ. Des. 2019, 8, 11. [Google Scholar] [CrossRef]

- Erengin, T.; Briker, R.; de Jong, S.B. You, Me, and the AI: The role of third-party human teammates for trust formation toward AI teammates. J. Organ. Behav. 2024. [Google Scholar] [CrossRef]

- Saßmannshausen, T.; Burggräf, P.; Wagner, J.; Hassenzahl, M.; Heupel, T.; Steinberg, F. Trust in artificial intelligence within production management–an exploration of antecedents. Ergonomics 2021, 64, 1333–1350. [Google Scholar] [CrossRef]

- Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Comput. Surv. 2023, 55, 1–33. [Google Scholar] [CrossRef]

- Mosqueira-Rey, E.; Hernández-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascarán, J.; Fernández-Leal, Á. Human-in-the-loop machine learning: A state of the art. Artif. Intell. Rev. 2023, 56, 3005–3054. [Google Scholar] [CrossRef]

- Gille, F.; Jobin, A.; Ienca, M. What we talk about when we talk about trust: Theory of trust for AI in healthcare. Intell.-Based Med. 2020, 1, 100001. [Google Scholar] [CrossRef]

- Afroogh, S.; Akbari, A.; Malone, E.; Kargar, M.; Alambeigi, H. Trust in AI: Progress, challenges, and future directions. Humanit. Soc. Sci. Commun. 2024, 11, 1568. [Google Scholar] [CrossRef]

- Russell, S.; Norvig, P.; Popineau, F.; Miclet, L.; Cadet, C. Intelligence Artificielle: Une Approche Modern, 4th ed.; Pearson France: Paris, France, 2021. [Google Scholar]

- Wingert, K.M.; Mayer, R.C. Trust in autonomous technology: The machine or its maker? In A Research Agenda for Trust; Edward Elgar Publishing: Cheltenham, UK, 2024; pp. 51–62. [Google Scholar]

- Begoli, E.; Bhattacharya, T.; Kusnezov, D. The need for uncertainty quantification in machine-assisted medical decision making. Nat. Mach. Intell. 2019, 1, 20–23. [Google Scholar] [CrossRef]

- Sendak, M.P.; Gao, M.; Brajer, N.; Balu, S. Presenting machine learning model information to clinical end users with model facts labels. npj Digit. Med. 2020, 3, 41. [Google Scholar] [CrossRef]

- Yan, A.; Xu, D. AI for depression treatment: Addressing the paradox of privacy and trust with empathy, accountability, and explainability. In Proceedings of the 42nd International Conference on Information Systems (ICIS 2021): Building Sustainability and Resilience with IS: A Call for Action, Austin, TX, USA, 12–15 December 2021; Association for Information Systems: Atlanta, GA, USA, 2021. [Google Scholar]

- Topol, E. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56. [Google Scholar] [CrossRef]

- Dwivedi, Y.K.; Hughes, L.; Ismagilova, E.; Aarts, G.; Coombs, C.; Crick, T.; Duan, Y.; Dwivedi, R.; Edwards, J.; Eirug, A.; et al. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int. J. Inf. Manag. 2021, 57, 101994. [Google Scholar] [CrossRef]

- Ajenaghughrure, I.B.; da Costa Sousa, S.C.; Lamas, D. Risk and Trust in artificial intelligence technologies: A case study of Autonomous Vehicles. In Proceedings of the 2020 13th International Conference on Human System Interaction (HSI), Tokyo, Japan, 6–8 June 2020; IEEE: New York, NY, USA, 2020; pp. 118–123. [Google Scholar]

- Wu, D.; Huang, Y. Why do you trust siri?: The factors affecting trustworthiness of intelligent personal assistant. Proc. Assoc. Inf. Sci. Technol. 2021, 58, 366–379. [Google Scholar] [CrossRef]

- Zierau, N.; Flock, K.; Janson, A.; Söllner, M.; Leimeister, J.M. The influence of AI-based chatbots and their design on users’ trust and information sharing in online loan applications. In Proceedings of the Hawaii International Conference on System Sciences (HICSS), Kauai, HI, USA, 5–8 January 2021. [Google Scholar]

- Maier, T.; Menold, J.; McComb, C. The relationship between performance and trust in AI in E-Finance. Front. Artif. Intell. 2022, 5, 891529. [Google Scholar] [CrossRef]

- Kästner, L.; Langer, M.; Lazar, V.; Schomäcker, A.; Speith, T.; Sterz, S. On the relation of trust and explainability: Why to engineer for trustworthiness. In Proceedings of the 2021 IEEE 29th International Requirements Engineering Conference Workshops (REW), Notre Dame, IN, USA, 20–24 September 2021; IEEE: New York, NY, USA, 2021; pp. 169–175. [Google Scholar]

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Molnar, C. Interpretable Machine Learning; Lulu. Com: Durham, NC, USA, 2020. [Google Scholar]

- Lopes, P.; Silva, E.; Braga, C.; Oliveira, T.; Rosado, L. XAI systems evaluation: A review of human and computer-centred methods. Appl. Sci. 2022, 12, 9423. [Google Scholar] [CrossRef]

- Mohseni, S.; Zarei, N.; Ragan, E.D. A multidisciplinary survey and framework for design and evaluation of explainable AI systems. ACM Trans. Interact. Intell. Syst. (TiiS) 2021, 11, 1–45. [Google Scholar] [CrossRef]

- Adadi, A.; Berrada, M. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 2018, 6, 52138–52160. [Google Scholar] [CrossRef]

- Visser, R.; Peters, T.M.; Scharlau, I.; Hammer, B. Trust, distrust, and appropriate reliance in (X)AI: A conceptual clarification of user trust and survey of its empirical evaluation. Cogn. Syst. Res. 2025, 91, 101357. [Google Scholar] [CrossRef]

- Chen, M.; Nikolaidis, S.; Soh, H.; Hsu, D.; Srinivasa, S. Trust-aware decision making for human-robot collaboration: Model learning and planning. ACM Trans. Hum.-Robot Interact. 2020, 9, 1–23. [Google Scholar] [CrossRef]

- Jahn, T.; Bergmann, M.; Keil, F. Transdisciplinarity: Between mainstreaming and marginalization. Ecol. Econ. 2012, 79, 1–10. [Google Scholar] [CrossRef]

- Meske, C.; Bunde, E. Transparency and trust in human-AI-interaction: The role of model-agnostic explanations in computer vision-based decision support. In Proceedings of the International Conference on Human-Computer Interaction, Virtual, 19–24 July 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 54–69. [Google Scholar]

- Von Eschenbach, W.J. Transparency and the black box problem: Why we do not trust AI. Philos. Technol. 2021, 34, 1607–1622. [Google Scholar] [CrossRef]

- Saranya, A.; Subhashini, R. A systematic review of Explainable Artificial Intelligence models and applications: Recent developments and future trends. Decis. Anal. J. 2023, 7, 100230. [Google Scholar] [CrossRef]

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting black-box models: A review on explainable artificial intelligence. Cogn. Comput. 2024, 16, 45–74. [Google Scholar] [CrossRef]

- Shaban-Nejad, A.; Michalowski, M.; Brownstein, J.S.; Buckeridge, D.L. Guest editorial explainable AI: Towards fairness, accountability, transparency and trust in healthcare. IEEE J. Biomed. Health Inform. 2021, 25, 2374–2375. [Google Scholar]

- Zolanvari, M.; Yang, Z.; Khan, K.; Jain, R.; Meskin, N. TRUST XAI: Model-agnostic explanations for AI with a case study on IIoT security. IEEE Internet Things J. 2021, 10, 2967–2978. [Google Scholar]

- Kamath, U.; Liu, J. Explainable Artificial Intelligence: An Introduction to Interpretable Machine Learning; Springer: Cham, Switzerland, 2021. [Google Scholar]

- Guidotti, R.; Monreale, A.; Giannotti, F.; Pedreschi, D.; Ruggieri, S.; Turini, F. Factual and counterfactual explanations for black box decision making. IEEE Intell. Syst. 2019, 34, 14–23. [Google Scholar] [CrossRef]

- Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the KDD 2016, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar]

- Seah, J.C.; Tang, C.H.; Buchlak, Q.D.; Holt, X.G.; Wardman, J.B.; Aimoldin, A.; Esmaili, N.; Ahmad, H.; Pham, H.; Lambert, J.F.; et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: A retrospective, multireader multicase study. Lancet Digit. Health 2021, 3, e496–e506. [Google Scholar] [CrossRef]

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F. Local rule-based explanations of black box decision systems. arXiv 2018, arXiv:1805.10820. [Google Scholar] [CrossRef]

- Breiman, L.; Friedman, J.; Olshen, R.; Stone, C. Classification and Regression Trees; Chapman and Hall/CRC: Boca Raton, FL, USA, 1984. [Google Scholar]

- Hosmer, D.W.; Lemeshow, S.; Sturdivant, R.X. Applied Logistic Regression, 3rd ed.; Wiley: Hoboken, NJ, USA, 2013. [Google Scholar]

- Lakkaraju, H.; Bach, S.H.; Leskovec, J. Interpretable decision sets: A joint framework for description and prediction. In Proceedings of the KDD 2016, San Francisco, CA, USA, 13–17 August 2016; pp. 1675–1684. [Google Scholar]

- Hastie, T.; Tibshirani, R. Generalized Additive Models; Chapman & Hall: Boca Raton, FL, USA, 1990. [Google Scholar]

- Choi, E.; Bahadori, M.T.; Sun, J.; Kulas, J.; Schuetz, A.; Stewart, W. RETAIN: An interpretable predictive model for healthcare using reverse time attention mechanism. In Proceedings of the NeurIPS 2016, Barcelona, Spain, 5–10 December 2016. [Google Scholar]

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. 2018, 51, 93. [Google Scholar] [CrossRef]

- Lipton, Z.C. The mythos of model interpretability. Commun. ACM 2018, 61, 36–43. [Google Scholar] [CrossRef]

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Interpretable machine learning: Definitions, methods, and applications. Proc. Natl. Acad. Sci. USA 2019, 116, 22071–22080. [Google Scholar] [CrossRef]

- Dombrowski, A.K.; Alber, M.; Anders, C.; Ackermann, M.; Müller, K.R.; Kessel, P. Explanations can be manipulated and geometry is to blame. Adv. Neural Inf. Process. Syst. 2019, 32, 13589–13600. [Google Scholar]

- Kompa, B.; Snoek, J.; Beam, A.L. Second opinion needed: Communicating uncertainty in medical machine learning. NPJ Digit. Med. 2021, 4, 4. [Google Scholar] [CrossRef]

- Metta, C.; Beretta, A.; Pellungrini, R.; Rinzivillo, S.; Giannotti, F. Towards transparent healthcare: Advancing local explanation methods in explainable artificial intelligence. Bioengineering 2024, 11, 369. [Google Scholar] [CrossRef]

- Jiang, F.; Zhou, L.; Zhang, C.; Jiang, H.; Xu, Z. Malondialdehyde levels in diabetic retinopathy patients: A systematic review and meta-analysis. Chin. Med. J. 2023, 136, 1311–1321. [Google Scholar] [CrossRef]

- Saporta, A.; Gui, X.; Agrawal, A.; Pareek, A.; Truong, S.Q.H.; Nguyen, C.D.T.; Ngo, V.-D.; Seekins, J.; Blankenberg, F.G.; Ng, A.Y.; et al. Benchmarking saliency methods for chest X-ray interpretation. Nat. Mach. Intell. 2022, 4, 867–878. [Google Scholar] [CrossRef]

- Ghassemi, M.; Oakden-Rayner, L.; Beam, A.L. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit. Health 2021, 3, e745–e750. [Google Scholar] [CrossRef]

- Singh, Y.; Hathaway, Q.A.; Keishing, V.; Salehi, S.; Wei, Y.; Horvat, N.; Vera-Garcia, D.V.; Choudhary, A.; Mula Kh, A.; Quaia, E.; et al. Beyond Post hoc Explanations: A Comprehensive Framework for Accountable AI in Medical Imaging Through Transparency, Interpretability, and Explainability. Bioengineering 2025, 12, 879. [Google Scholar] [CrossRef]

- Fok, R.; Weld, D.S. In Search of Verifiability: Explanations Rarely Enable Complementary Performance in AI-Advised Decision Making. AI Mag. 2024, 45, 317–332. [Google Scholar] [CrossRef]

- Riley, R.D.; Ensor, J.; Collins, G.S. Uncertainty of risk estimates from clinical prediction models. BMJ 2025, 388, e080749. [Google Scholar] [CrossRef] [PubMed]

- Bhatt, U.; Antorán, J.; Zhang, Y.; Liao, Q.V.; Sattigeri, P.; Fogliato, R.; Melançon, G.; Krishnan, R.; Stanley, J.; Tickoo, O.; et al. Uncertainty as a form of transparency: Measuring, communicating, and using uncertainty. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtual, 19–21 May 2021; pp. 401–413. [Google Scholar]

- Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del-Ser-Lorente, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805. [Google Scholar] [CrossRef]

- Samek, W.; Montavon, G.; Lapuschkin, S.; Anders, C.J.; Müller, K.R. Explaining deep neural networks and beyond: A review of methods and applications. Proc. IEEE 2021, 109, 247–278. [Google Scholar] [CrossRef]

- Albahri, A.S.; Duhaim, A.M.; Fadhel, M.A.; Alnoor, A.; Baqer, N.S.; Alzubaidi, L.; Albahri, O.S.; Alamoodi, A.H.; Bai, J.; Salhi, A.; et al. A systematic review of trustworthy and explainable artificial intelligence in healthcare: Assessment of quality, bias risk, and data fusion. Inf. Fusion 2023, 96, 156–191. [Google Scholar] [CrossRef]

- Mehrotra, S.; Jorge, C.C.; Jonker, C.M.; Tielman, M.L. Integrity-based explanations for fostering appropriate trust in AI agents. ACM Trans. Interact. Intell. Syst. 2024, 14, 1–36. [Google Scholar] [CrossRef]

- Kessler, T.; Stowers, K.; Brill, J.C.; Hancock, P.A. Comparisons of human-human trust with other forms of human-technology trust. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Los Angeles, CA, USA, 9–13 October 2017; SAGE Publications: Thousand Oaks, CA, USA, 2017; Volume 61, No. 1. pp. 1303–1307. [Google Scholar]

- Cai, C.J.; Reif, E.; Hegde, N.; Hipp, J.; Kim, B.; Smilkov, D.; Wattenberg, M.; Viegas, F.; Corrado, G.S.; Stumpe, M.C.; et al. Human-Centered Tools for Coping with Imperfect Algorithms During Medical Decision-Making. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019.

- Miller, L.; Kraus, J.; Babel, F.; Baumann, M. More Than a Feeling—Interrelation of Trust Layers in Human-Robot Interaction and the Role of User Dispositions and State Anxiety. Front. Psychol. 2021, 12, 592711.

- Omrani, N.; Rivieccio, G.; Fiore, U.; Schiavone, F.; Agreda, S.G. To trust or not to trust? An assessment of trust in AI-based systems: Concerns, ethics and contexts. Technol. Forecast. Soc. Chang. 2022, 181, 121763.

- Yang, R.; Wibowo, S. User trust in artificial intelligence: A comprehensive conceptual framework. Electron. Mark. 2022, 32, 2053–2077.

- Hancock, P.A. Are humans still necessary? Ergonomics 2023, 66, 1711–1718.

- Riedl, R. Is trust in artificial intelligence systems related to user personality? Review of empirical evidence and future research directions. Electron. Mark. 2022, 32, 2021–2051.

- Gillespie, N.; Daly, N. Repairing trust in public sector agencies. In Handbook on Trust in Public Governance; Edward Elgar Publishing: Cheltenham, UK, 2025; pp. 98–115.

- Siau, K.; Wang, W. Building trust in artificial intelligence, machine learning, and robotics. Cut. Bus. Technol. J. 2018, 31, 47–53.

- De Visser, E.; Parasuraman, R. Adaptive aiding of human-robot teaming: Effects of imperfect automation on performance, trust, and workload. J. Cogn. Eng. Decis. Mak. 2011, 5, 209–231.

- Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 2015, 144, 114–126.

- Kätsyri, J.; Förger, K.; Mäkäräinen, M.; Takala, T. A review of empirical evidence on different uncanny valley hypotheses: Support for perceptual mismatch as one road to the valley of eeriness. Front. Psychol. 2015, 6, 390.

- Hancock, P.A.; Kessler, T.T.; Kaplan, A.D.; Stowers, K.; Brill, J.C.; Billings, D.R.; Schaefer, K.E.; Szalma, J.L. How and why humans trust: A meta-analysis and elaborated model. Front. Psychol. 2023, 14, 1081086.

- Gaudiello, I.; Zibetti, E.; Lefort, S.; Chetouani, M.; Ivaldi, S. Trust as indicator of robot functional and social acceptance: An experimental study on user conformation to the iCub’s answers. Comput. Hum. Behav. 2016, 61, 633–655.

- Yang, X.J.; Lau, H.Y.K.; Neff, B.; Shah, J.A. Toward quantifying trust dynamics: How people adjust their trust after moment-to-moment interaction with automation. Hum. Factors 2021, 63, 1343–1360.

- Rittenberg, B.S.P.; Holland, C.W.; Barnhart, G.E.; Gaudreau, S.M.; Neyedli, H.F. Trust with increasing and decreasing reliability. Hum. Factors 2024, 66, 2569–2589.

- Lyons, J.B.; Hamdan, I.a.; Vo, T.Q. Explanations and trust: What happens to trust when a robot teammate behaves unexpectedly? Comput. Hum. Behav. 2023, 139, 107497.

- Rojas, E.; Li, M. Trust is contagious: Social influences in human–human–AI teams. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Phoenix, AZ, USA, 9–13 September 2024.

- Kohn, S.C.; De Visser, E.J.; Wiese, E.; Lee, Y.C.; Shaw, T.H. Measurement of trust in automation: A narrative review and reference guide. Front. Psychol. 2021, 12, 604977.

- Madsen, M.; Gregor, S. Measuring human-computer trust. In Proceedings of the 11th Australasian Conference on Information Systems, Brisbane, Australia, 6–8 December 2000; Volume 53, pp. 6–8.

- Miller, D.; Johns, M.; Mok, B.; Gowda, N.; Sirkin, D.; Lee, K.; Ju, W. Behavioral measurement of trust in automation: The trust-fall. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 1849–1853.

- Liu, C.; Chen, B.; Shao, W.; Zhang, C.; Wong, K.K.; Zhang, Y. Unraveling attacks to Machine-Learning-Based IoT Systems: A survey and the open libraries behind them. IEEE Internet Things J. 2024, 11, 19232–19255.

- Wong, K.K. Cybernetical Intelligence: Engineering Cybernetics with Machine Intelligence; John Wiley & Sons: Hoboken, NJ, USA, 2023.

- Dzindolet, M.T.; Peterson, S.A.; Pomranky, R.A.; Pierce, L.G.; Beck, H.P. The role of trust in automation reliance. Int. J. Hum.-Comput. Stud. 2003, 58, 697–718.

- Lohani, M.; Payne, B.R.; Strayer, D.L. A review of psychophysiological measures to assess cognitive states in real-world driving. Front. Hum. Neurosci. 2019, 13, 57.

- Ajenaghughrure, I.B.; Sousa, S.D.C.; Lamas, D. Measuring trust with psychophysiological signals: A systematic mapping study of approaches used. Multimodal Technol. Interact. 2020, 4, 63.

- Hopko, S.K.; Binion, C.; Walenski, M. Neural correlates of trust in automation: Considerations and generalizability between technology domains. Front. Neuroergonomics 2021, 2, 731327.

- Perelló-March, J.R.; Burns, C.G.; Woodman, R.; Elliott, M.T.; Birrell, S.A. Using fNIRS to verify trust in highly automated driving. IEEE Trans. Intell. Transp. Syst. 2023, 24, 739–751.

- Xu, T.; Dragomir, A.; Liu, X.; Yin, H.; Wan, F.; Bezerianos, A.; Wang, H. An EEG study of human trust in autonomous vehicles based on graphic theoretical analysis. Front. Neuroinformatics 2022, 16, 907942.

- Chita-Tegmark, M.; Law, T.; Rabb, N.; Scheutz, M. Can you trust your trust measure? In Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 8–11 March 2021; pp. 92–100.

- Tun, H.M.; Rahman, H.A.; Naing, L.; Malik, O.A. Trust in Artificial Intelligence–Based Clinical Decision Support Systems Among Health Care Workers: Systematic Review. J. Med. Internet Res. 2025, 27, e69678.

- Mainz, J.T. Medical AI: Is trust really the issue? J. Med. Ethics 2024, 50, 349–350.

- Bach, T.A.; Khan, A.; Hallock, H.; Beltrão, G.; Sousa, S. A systematic literature review of user trust in AI-enabled systems: An HCI perspective. Int. J. Hum.–Comput. Interact. 2024, 40, 1251–1266.

| Dimension | Post Hoc Explanation [102] | Ante-Hoc Explanation [19] |
|---|---|---|
| Definition | Explains a trained model’s decisions after training and prediction via external tools [103]. | Builds explainability into the model during design/training so predictions and explanations co-emerge; the decision process is inherently understandable [22]. |
| Representative models/methods | SHAP [22]; LIME [104]; CAM/Grad-CAM [105]; LORE [106]. | Decision trees [107]; linear/logistic regression [108]; rule-based models [109]; GAM [110]; interpretable neural networks [111]. |
| Strengths | Model-agnostic and flexible; can explain complex black-box models [112]. | Explanations are faithful to model behavior without extra approximations; typically more reliable [22]. |
| Limitations | Explanations are often approximations that may diverge from the true decision logic [113]. | Expressive power can be limited; may trade off some predictive performance [114]. |
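To make the post hoc versus ante-hoc contrast in the table concrete, the following is a minimal Python sketch (standard library only). Everything in it is a hypothetical illustration rather than a method from the reviewed studies: the three standardized input features, the weights, the hidden interaction term in `black_box`, and the all-zero reference input are assumptions. The ante-hoc side reads attributions directly off an additive linear score. The post hoc side treats `black_box` as opaque and attributes its output with Shapley values computed against a single reference input, in the spirit of SHAP [22]: first exactly, by enumerating feature coalitions, and then by permutation sampling, which stands in for the approximations practical explainers rely on. It does not use or reproduce the `shap` or `lime` library APIs.

```python
import random
from itertools import combinations
from math import factorial

# Hypothetical weights for three standardized features (illustrative only).
WEIGHTS = (0.5, 0.3, 0.2)


def glass_box(x):
    """Ante-hoc: an additive linear score whose terms ARE the explanation."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def glass_box_contributions(x, baseline):
    """Exact per-feature terms w_i * (x_i - b_i); they sum to f(x) - f(baseline)."""
    return [w * (xi - bi) for w, xi, bi in zip(WEIGHTS, x, baseline)]


def black_box(x):
    """Hypothetical opaque model: the same linear terms plus a hidden x0*x2 interaction."""
    return glass_box(x) + 0.4 * x[0] * x[2]


def coalition_value(model, x, baseline, subset):
    """Evaluate the model with features in `subset` taken from x, the rest from baseline."""
    z = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(z)


def exact_shapley(model, x, baseline):
    """Post hoc: exact Shapley attributions by enumerating every feature coalition."""
    n = len(x)
    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                with_i = coalition_value(model, x, baseline, set(s) | {i})
                without_i = coalition_value(model, x, baseline, set(s))
                total += weight * (with_i - without_i)
        phi.append(total)
    return phi


def sampled_shapley(model, x, baseline, n_perm=200, seed=0):
    """Post hoc: Monte Carlo estimate over random feature orderings (an approximation)."""
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    for _ in range(n_perm):
        order = list(range(n))
        rng.shuffle(order)
        z = list(baseline)
        prev = model(z)
        for i in order:
            z[i] = x[i]  # add feature i to the growing coalition
            cur = model(z)
            phi[i] += cur - prev  # marginal contribution of feature i in this ordering
            prev = cur
    return [p / n_perm for p in phi]


if __name__ == "__main__":
    x, baseline = [2.0, 1.0, 1.5], [0.0, 0.0, 0.0]
    print("ante-hoc terms: ", glass_box_contributions(x, baseline))
    print("exact Shapley:  ", exact_shapley(black_box, x, baseline))
    print("sampled Shapley:", sampled_shapley(black_box, x, baseline))
```

With these inputs, the ante-hoc terms are approximately [1.0, 0.3, 0.3], while the exact post hoc attributions for `black_box` are approximately [1.6, 0.3, 0.9]. Both sets sum to the change in output relative to the reference, but the post hoc attributions split the 1.2 produced by the interaction between features 0 and 2 evenly across those two features, so nothing in the explanation reveals that an interaction drives the prediction. The sampled estimates add further error that tends to shrink as `n_perm` grows. This mirrors the table's limitation row: a post hoc explanation can be internally consistent yet still diverge from the model's actual decision logic.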

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wong, K.K.L.; Han, Y.; Cai, Y.; Ouyang, W.; Du, H.; Liu, C. From Trust in Automation to Trust in AI in Healthcare: A 30-Year Longitudinal Review and an Interdisciplinary Framework. Bioengineering 2025, 12, 1070. https://doi.org/10.3390/bioengineering12101070
