Assessing the Value of Imaging Data in Machine Learning Models to Predict Patient-Reported Outcome Measures in Knee Osteoarthritis Patients

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics Considerations

2.2. Data Source

2.3. Outcome Measure

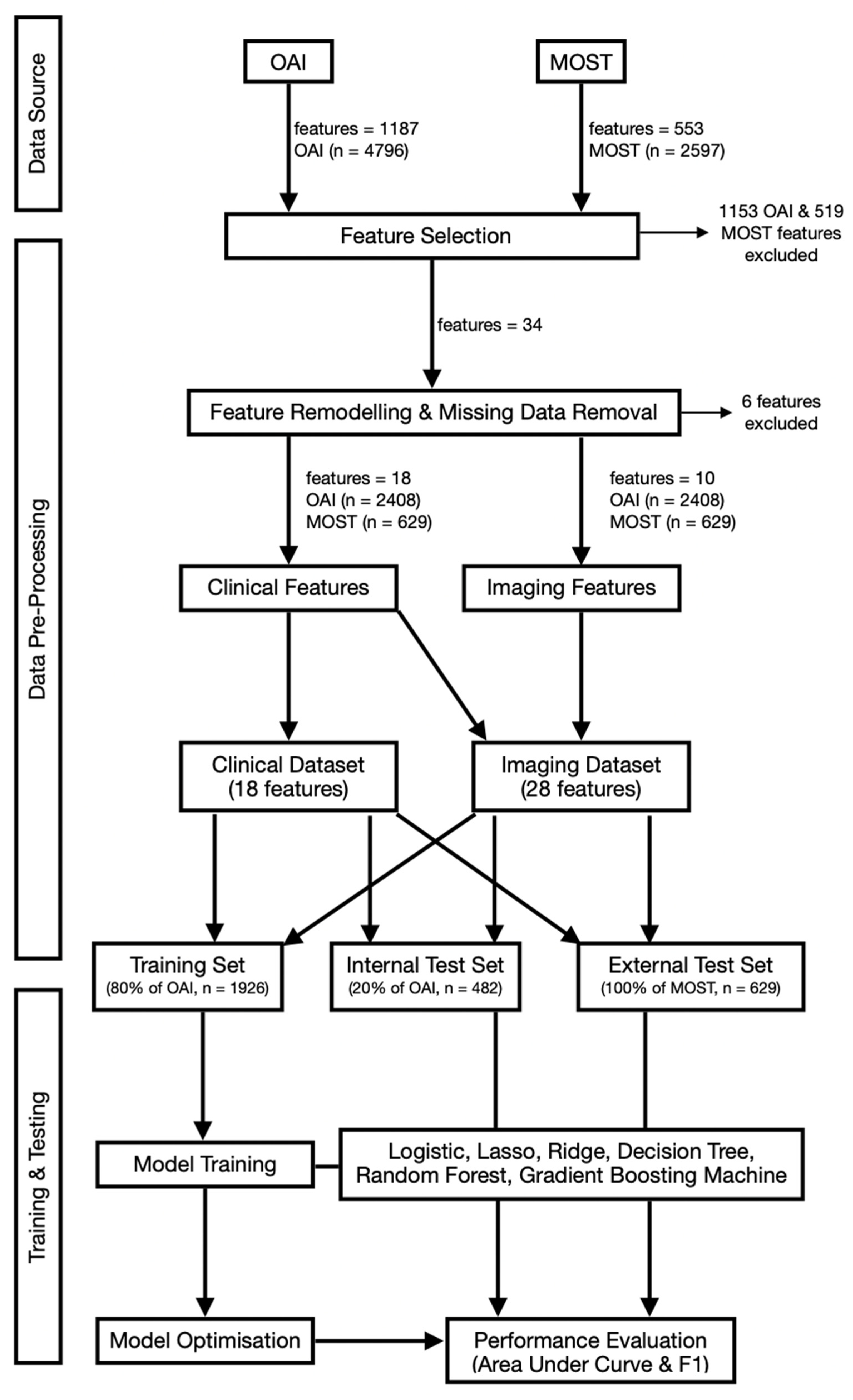

2.4. Feature Selection and Data Pre-Processing

2.5. Model Development, Training and Validation

2.6. Statistical Analysis and Feature Importance

3. Results

3.1. Data Distribution

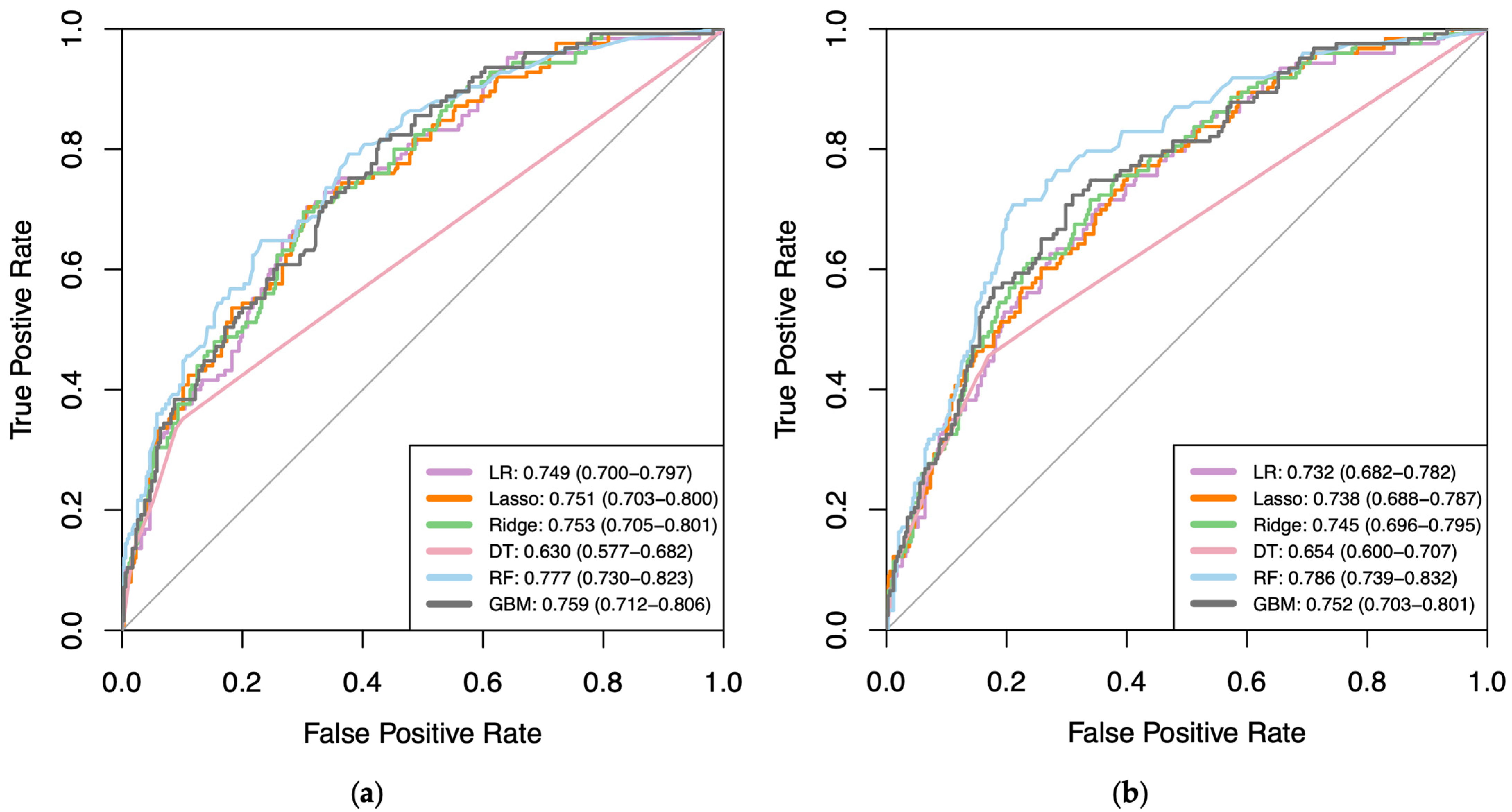

3.2. Model Performance

3.3. Feature Importance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Patient Feature | Subgroups within Each Feature |
|---|---|
| Age | Age ≤ 50; 50 < Age < 60; 60 ≤ Age < 70; Age ≥ 70 |
| Sex | Male; Female |
| Ethnicity | White/Caucasian; Black/African American/Asian & other Non-White |
| Living Status | Lives alone; Lives with someone else |
| Education Status | Less than high school graduate; High school graduate; Some college; College graduate; Some graduate school; Graduate degree |
| Employment Status | Yes; No |
| Body Mass Index (BMI) | Underweight (BMI < 18.5); Healthy (18.5–24.9); Overweight (25.0–29.9); Obese (30.0–39.9); Morbidly obese (BMI > 40) |
| Comorbidities (Charlson Comorbidity Index) | None; Mild (CCI = 1–2); Moderate (CCI = 3–4); Severe (CCI > 5) |
| Inflammatory Arthritis | None; OA/degenerative only; Gout/other only; OA/degenerative and gout/other |
| Injury to knee | Yes; No |
| Knee Surgery | No; Left or Right; Left and Right |
| Osteoarthritis medication | None; Corticosteroids; Supplements (methylsulfonylmethane, fluorides, glucosamine); Combination of above |
| Osteoporosis medication | None; Vitamin D/Calcium; Bisphosphonate; Oestrogen/Raloxifene; Calcitonin/Teriparatide; Combination of above |
| Analgesic medication | None; WHO Pain Ladder 1 (mild); WHO Pain Ladder 2 and above (moderate to severe) |
| Hypertension | Normal (SBP < 140 & DBP < 90); Stage 1 (SBP ≥ 140/DBP ≥ 90); Stage 2 (SBP ≥ 160/DBP ≥ 100); Severe (SBP > 180 or DBP > 110) |
| 20 m walk assessment | No risk; Risk of disability (based on cut-off point of ≥10 s) |
| Short Form-12 (SF-12) Mental | Normal; Low mental health score |
| Physical Activity Scale for Elderly (PASE) score | Normal physical activity (≥120); Low physical activity (<120) |
| Joint Space Narrowing (JSN)—Medial | Osteoarthritis Research Society International (OARSI) Grade 0–3 |
| Joint Space Narrowing (JSN)—Lateral | Osteoarthritis Research Society International (OARSI) Grade 0–3 |
| Kellgren–Lawrence Grade | Normal (0); Doubtful (1); Mild (2); Moderate (3); Severe (4) |
| Cartilage morphology (medial femorotibial joint) | None; Thickness loss in one subregion; Thickness loss in more than one subregion |
| Cartilage morphology (lateral femorotibial joint) | None; Thickness loss in one subregion; Thickness loss in more than one subregion |
| Cartilage morphology (patellofemoral joint) | None; Thickness loss in one subregion; Thickness loss in more than one subregion |
| Bone marrow lesions (medial femorotibial joint) | None; In one subregion; In more than one subregion |
| Bone marrow lesions (lateral femorotibial joint) | None; In one subregion; In more than one subregion |
| Bone marrow lesions (patellofemoral joint) | None; In one subregion; In more than one subregion |
| Meniscal tear | None; In one subregion; In more than one subregion |
| WOMAC | WOMAC < 24; WOMAC ≥ 24 |
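
The subgroup cut-offs in the table above can be read as simple binning rules. The following is a minimal sketch in Python, not the authors' code (the study reports using R packages); the function names and label strings are hypothetical. It covers only the three features whose cut-offs in the table leave no gaps: age, WOMAC and PASE.

```python
def age_group(age: float) -> str:
    """Map an age in years to one of the four age bands in the table."""
    if age <= 50:
        return "Age <= 50"
    if age < 60:
        return "50 < Age < 60"
    if age < 70:
        return "60 <= Age < 70"
    return "Age >= 70"


def womac_group(womac: float) -> str:
    """Dichotomise a WOMAC score at the cut-off of 24 given in the table."""
    return "WOMAC >= 24" if womac >= 24 else "WOMAC < 24"


def pase_group(pase: float) -> str:
    """Dichotomise a PASE score at the cut-off of 120 given in the table."""
    return "Normal physical activity" if pase >= 120 else "Low physical activity"


if __name__ == "__main__":
    # Hypothetical patient values, for illustration only.
    print(age_group(63), "|", womac_group(30), "|", pase_group(95))
```

Features such as BMI and the Charlson Comorbidity Index are left out on purpose: the table does not say which subgroup a BMI of exactly 40 or a CCI of exactly 5 falls into, so any rule for those values would be a guess.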

| Data Interpretation Tasks | RStudio Software Package |
|---|---|
| Data Visualisation | Amelia (version 1.8.0) |
| Collinearity Visualisation | corrplot (version 0.92) |
| Data Pre-Processing—setting seed; sample split | simEd (version 2.0.0); caTools (version 1.17.1) |
| Area Under Curve Score; Receiver Operating Characteristic Curves | ROCR (version 1.0-11); pROC (version 1.18.0) |
| F1 Score—confusionMatrix | caret (version 3.45) |
| Generalised Linear Models (Logistic Regression) | glm (version 3.6.2) |
| Regularised General Linear Models (Lasso Regression) | glmnet (version 4.1-4) |
| Regularised General Linear Models (Ridge Regression) | glmnet (version 4.1-4) |
| Recursive Partitioning and Regression Trees (Decision Tree) | rpart (version 4.1.16) |
| Breiman and Cutler’s Random Forest Models | randomForest (version 4.7-1.1) |
| Generalised Boosted Regression Models | gbm (version 2.1.8) |

Appendix B

| ML Algorithm | Internal Test: Change in AUC * | Internal Test: Change in F1 * | External Test: Change in AUC * | External Test: Change in F1 * |
|---|---|---|---|---|
| Logistic | −0.017 | 0.024 | 0.02 | −0.035 |
| Lasso | −0.013 | 0.017 | 0.014 | −0.011 |
| Ridge | −0.008 | 0.007 | 0.02 | −0.019 |
| Decision Tree | 0.024 | −0.042 | −0.087 | 0.158 |
| Random Forest | 0.009 | 0.051 | 0.017 | 0.007 |
| GBM | −0.007 | 0.019 | 0.018 | 0.023 |
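
The footnote for the asterisk is not reproduced here, but the internal-test AUC changes and both F1 changes match the imaging-dataset value minus the clinical-dataset value from the AUC and F1 tables later in this document. The Python sketch below recomputes those three columns under that reading; the variable and function names are hypothetical, and the external-test AUC column is omitted because its source values do not appear in this document.

```python
# (clinical, imaging) pairs copied from the AUC and F1 tables in this document.
INTERNAL_AUC = {
    "Logistic": (0.749, 0.732), "Lasso": (0.751, 0.738), "Ridge": (0.753, 0.745),
    "Decision Tree": (0.630, 0.654), "Random Forest": (0.777, 0.786), "GBM": (0.759, 0.752),
}
INTERNAL_F1 = {
    "Logistic": (0.526, 0.550), "Lasso": (0.528, 0.545), "Ridge": (0.536, 0.543),
    "Decision Tree": (0.473, 0.431), "Random Forest": (0.566, 0.617), "GBM": (0.539, 0.558),
}
EXTERNAL_F1 = {
    "Logistic": (0.547, 0.512), "Lasso": (0.534, 0.523), "Ridge": (0.541, 0.522),
    "Decision Tree": (0.286, 0.444), "Random Forest": (0.529, 0.536), "GBM": (0.525, 0.548),
}


def change(pairs: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return imaging minus clinical for each algorithm, rounded to 3 decimals."""
    return {name: round(imaging - clinical, 3) for name, (clinical, imaging) in pairs.items()}


if __name__ == "__main__":
    for label, pairs in [("Internal AUC", INTERNAL_AUC),
                         ("Internal F1", INTERNAL_F1),
                         ("External F1", EXTERNAL_F1)]:
        print(label, change(pairs))
```

A positive value means the model trained with imaging features scored higher than the clinical-only model on that metric.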

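| Model | Category | Feature |
|---|---|---|
| Clinical and Imaging Datasets | Patient Demographics | Age |
| Clinical and Imaging Datasets | Patient Demographics | Sex |
| Clinical and Imaging Datasets | Patient Demographics | Ethnicity |
| Clinical and Imaging Datasets | Patient Demographics | Living Status |
| Clinical and Imaging Datasets | Patient Demographics | Education Status |
| Clinical and Imaging Datasets | Patient Demographics | Employment Status |
| Clinical and Imaging Datasets | Patient Demographics | Body Mass Index (BMI) |
| Clinical and Imaging Datasets | Past Medical/Surgical History | Comorbidities (Charlson Comorbidity Index) |
| Clinical and Imaging Datasets | Past Medical/Surgical History | Inflammatory Arthritis |
| Clinical and Imaging Datasets | Past Medical/Surgical History | Injury to knee |
| Clinical and Imaging Datasets | Past Medical/Surgical History | Knee Surgery |
| Clinical and Imaging Datasets | Drug History | Osteoarthritis medication |
| Clinical and Imaging Datasets | Drug History | Osteoporosis medication |
| Clinical and Imaging Datasets | Drug History | Analgesic medication |
| Clinical and Imaging Datasets | Baseline Examination | Hypertension |
| Clinical and Imaging Datasets | Baseline Examination | 20 m walk assessment |
| Clinical and Imaging Datasets | Baseline Questionnaire | Short Form-12 (SF-12) Mental Component |
| Clinical and Imaging Datasets | Baseline Questionnaire | Physical Activity Scale for Elderly (PASE) score |
| Imaging Dataset | Radiograph | Joint Space Narrowing (JSN)—Medial |
| Imaging Dataset | Radiograph | Joint Space Narrowing (JSN)—Lateral |
| Imaging Dataset | Radiograph | Kellgren–Lawrence (KL) Grade |
| Imaging Dataset | Magnetic Resonance Imaging | Cartilage morphology (medial femorotibial joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Cartilage morphology (lateral femorotibial joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Cartilage morphology (patellofemoral joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Bone marrow lesions (medial femorotibial joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Bone marrow lesions (lateral femorotibial joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Bone marrow lesions (patellofemoral joint) |
| Imaging Dataset | Magnetic Resonance Imaging | Meniscal tear |
| Outcome | | 2-year WOMAC score |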

| Feature | Most Common Subgroup | OAI, N (%) (n = 2408) | MOST, N (%) (n = 629) |
|---|---|---|---|
| Age | 60–70 years | 827 (34.3) | 238 (37.8) |
| Sex | Female | 1531 (63.6) | 369 (58.7) |
| Ethnicity | White/Caucasian | 2031 (84.3) | 563 (89.5) |
| Living Status | Lives with someone | 1932 (80.2) | 525 (83.5) |
| Education Status | Graduate degree | 757 (31.4) | 147 (23.4) |
| Employment Status | Paid work | 1430 (59.4) | 420 (66.8) |
| Body Mass Index (BMI) | Overweight (25.0–29.9) | 982 (40.8) | 258 (41.0) |
| Comorbidities (Charlson Comorbidity Index) | None | 1846 (76.7) | 485 (77.1) |
| Inflammatory Arthritis | None | 2291 (95.1) | 621 (98.7) |
| Injury to knee | None | 1293 (53.7) | 372 (59.1) |
| Knee Surgery | None | 1807 (75.0) | 522 (83.0) |
| Osteoarthritis medication | None | 1480 (61.5) | 434 (69.0) |
| Osteoporosis medication | None | 1095 (45.5) | 316 (50.2) |
| Analgesic medication | None | 1453 (60.3) | 154 (24.5) |
| Hypertension | Normal (SBP < 140 & DBP < 90) | 1919 (79.7) | 512 (81.4) |
| 20 m walk assessment | Normal pace (≥1.22 s) | 1692 (70.3) | 392 (62.3) |
| Short Form-12 (SF-12) Mental Component | Normal mental health status | 1214 (50.4) | 319 (50.7) |
| Physical Activity Scale for Elderly (PASE) | Normal physical activity (≥120) | 1614 (67.0) | 482 (76.6) |
| Joint Space Narrowing (JSN)—Medial | None | 974 (40.4) | 391 (62.2) |
| Joint Space Narrowing (JSN)—Lateral | None | 1905 (79.1) | 509 (80.9) |
| Kellgren–Lawrence (KL) Grade | Moderate (KL = 3) | 739 (30.7) | 79 (12.6) |
| Cartilage morphology (medial femorotibial joint) | No thickness loss | 937 (38.9) | 271 (43.1) |
| Cartilage morphology (lateral femorotibial joint) | No thickness loss | 1144 (47.5) | 345 (54.8) |
| Cartilage morphology (patellofemoral joint) | Thickness loss in one or more subregions | 1463 (60.8) | 145 (23.1) |
| Bone marrow lesions (medial femorotibial joint) | None | 1532 (63.6) | 474 (75.4) |
| Bone marrow lesions (lateral femorotibial joint) | None | 1899 (78.9) | 542 (86.2) |
| Bone marrow lesions (patellofemoral joint) | None | 940 (39.0) | 283 (45.0) |
| Meniscal tear | None | 1151 (47.8) | 415 (66.0) |
| WOMAC | Normal (<24) | 1775 (73.7) | 460 (73.1) |

| ML Algorithm | Clinical Dataset: Training AUC (95% CI) | Clinical Dataset: Internal Test AUC (95% CI) | Imaging Dataset: Training AUC (95% CI) | Imaging Dataset: Internal Test AUC (95% CI) |
|---|---|---|---|---|
| Logistic | 0.745 (0.721–0.770) | 0.749 (0.700–0.797) | 0.791 (0.768–0.814) | 0.732 (0.682–0.782) |
| Lasso | 0.734 (0.709–0.759) | 0.751 (0.703–0.800) | 0.779 (0.755–0.803) | 0.738 (0.688–0.787) |
| Ridge | 0.730 (0.705–0.756) | 0.753 (0.705–0.801) | 0.777 (0.753–0.801) | 0.745 (0.696–0.795) |
| Decision Tree | 0.628 (0.602–0.655) | 0.630 (0.577–0.682) | 0.667 (0.639–0.694) | 0.654 (0.600–0.707) |
| Random Forest | 0.784 (0.761–0.808) | 0.777 (0.730–0.823) | 0.820 (0.799–0.842) | 0.786 (0.739–0.832) |
| GBM | 0.736 (0.711–0.761) | 0.759 (0.712–0.806) | 0.783 (0.760–0.807) | 0.752 (0.703–0.801) |
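
For readers less familiar with the metric reported above: AUC is the probability that a randomly chosen positive case receives a higher predicted score than a randomly chosen negative case, with ties counted as one half. The Python sketch below computes it by direct pairwise comparison. It is illustrative only and is not the study's pipeline, which lists the ROCR and pROC packages for this purpose; the function name and the toy data are hypothetical.

```python
from typing import Sequence


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve by pairwise comparison of positive and negative scores."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    # A positive scored above a negative counts 1, a tie counts 0.5, otherwise 0.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical labels (1 = WOMAC >= 24) and predicted probabilities.
    toy_labels = [0, 0, 1, 1]
    toy_scores = [0.1, 0.4, 0.35, 0.8]
    print(auc(toy_labels, toy_scores))
```

The pairwise form is quadratic in the number of cases, which is fine for illustration; rank-based implementations give the same value more efficiently.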

| ML Algorithm | Clinical Dataset: Internal Test F1 | Clinical Dataset: External Test F1 | Imaging Dataset: Internal Test F1 | Imaging Dataset: External Test F1 |
|---|---|---|---|---|
| Logistic | 0.526 | 0.547 | 0.550 | 0.512 |
| Lasso | 0.528 | 0.534 | 0.545 | 0.523 |
| Ridge | 0.536 | 0.541 | 0.543 | 0.522 |
| Decision Tree | 0.473 | 0.286 | 0.431 | 0.444 |
| Random Forest | 0.566 | 0.529 | 0.617 | 0.536 |
| GBM | 0.539 | 0.525 | 0.558 | 0.548 |
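
The F1 score reported above is the harmonic mean of precision and recall for the positive class. The Python sketch below shows the calculation from confusion-matrix counts. The counts in the usage example are hypothetical and are not taken from the study; returning 0.0 when there are no true positives is a convention assumed here.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from true-positive, false-positive and false-negative counts."""
    if tp == 0:
        # Assumed convention: with no true positives, F1 is reported as 0.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    print(round(f1_score(tp=40, fp=30, fn=35), 3))
```

Because F1 ignores true negatives, it can move in a different direction from AUC when the classes are imbalanced, which is worth bearing in mind when comparing the two tables.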

| Clinical Dataset | Influence Factor | Imaging Dataset | Influence Factor |
|---|---|---|---|
| Education Background | 21.99 | KL Grade | 9.60 |
| Arthritis History | 10.56 | Education Background | 7.66 |
| Comorbidities | 9.73 | 20 m walk test | 7.62 |
| Osteoporosis medication | 8.59 | JSN—Medial | 7.46 |
| Past Knee Surgery | 6.70 | Pain medication | 5.85 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nair, A.; Alagha, M.A.; Cobb, J.; Jones, G. Assessing the Value of Imaging Data in Machine Learning Models to Predict Patient-Reported Outcome Measures in Knee Osteoarthritis Patients. Bioengineering 2024, 11, 824. https://doi.org/10.3390/bioengineering11080824