Abstract

Recent advances in deep learning have shown significant potential for accurate cell detection via density map regression using point annotations. However, existing deep learning models often struggle with multi-scale feature extraction and integration in complex histopathological images. Moreover, in multi-class cell detection scenarios, current density map regression methods typically predict each cell type independently, failing to consider the spatial distribution priors of different cell types. To address these challenges, we propose CellRegNet, a novel deep learning model for cell detection using point annotations. CellRegNet integrates a hybrid CNN/Transformer architecture with innovative feature refinement and selection mechanisms, addressing the need for effective multi-scale feature extraction and integration. Additionally, we introduce a contrastive regularization loss that models the mutual exclusiveness prior in multi-class cell detection cases. Extensive experiments on three histopathological image datasets demonstrate that CellRegNet outperforms existing state-of-the-art methods for cell detection using point annotations, with F1-scores of 86.38% on BCData (breast cancer), 85.56% on EndoNuke (endometrial tissue) and 93.90% on MBM (bone marrow cells), respectively. These results highlight CellRegNet’s potential to enhance the accuracy and reliability of cell detection in digital pathology.

1. Introduction

Histopathology is the gold standard for diagnosing a wide range of diseases [1]. Traditionally, pathologists analyze tissue slides under a microscope to make critical decisions. However, this diagnostic process is time-consuming and susceptible to both intra- and inter-observer variability [2]. The advent of digital pathology has enabled the creation of computer-aided diagnosis systems that are fast, accurate, and consistent [3]. These systems often leverage advanced machine learning techniques, particularly deep learning, to analyze complex histopathological images.

Deep learning-based methods have shown remarkable success across various tasks in digital pathology. Studies have demonstrated their effectiveness in tumor metastasis diagnosis [4], tumor subtyping [5], mutation prediction [5], and analyzing gigapixel pathology slides [6]. Among these applications, cell detection plays a crucial role in assisting pathologists to quantify cell populations [7] and identify abnormal cells [8]. As a fundamental step in the quantitative analysis of histopathological images [9], accurate cell detection is essential for numerous subsequent analytical processes and plays a pivotal role in enhancing the overall efficiency and accuracy of pathological diagnoses.

While deep learning methods have emerged as promising solutions for accurate cell detection [10,11,12,13], they are heavily reliant on large amounts of annotated data for training. The process of annotating cells requires the expertise of seasoned pathologists and presents significant challenges due to the small size and dense packing of cells in histopathological images. Creating detailed annotations, such as bounding boxes or segmentation masks, is particularly time-consuming and labor-intensive. Consequently, there is a pressing need for efficient and accurate cell detection methods that can alleviate this annotation burden while maintaining high performance.

To address the challenges associated with detailed cell annotations, an alternative approach that has gained attention for cell detection involves a simplified center-point annotation strategy [8,14,15]. While point annotations do not provide detailed information about cell size, shape, or precise boundaries, they offer significant advantages in the context of large-scale histopathological analysis. First, they significantly reduce the time and effort required for annotation, allowing for rapid labeling of large datasets. Second, they provide cell location and type information, which is sufficient for tasks such as immunohistochemistry (IHC) scoring [7].

In this approach, each cell is marked by a single point at its center, rather than requiring a bounding box or segmentation mask. These point annotations are then converted into density maps, where each annotated cell location is represented by a Gaussian kernel [8,16], and the collective point annotations form the overall density map. During training, the model learns to predict this density map. At inference time, cell locations are determined by thresholding and local maxima searches on the predicted density maps.

While existing point annotation-based approaches have made commendable progress in improving annotation efficiency by significantly reducing manual effort, they remain insufficient for adapting to the unique characteristics of histopathological images. First, histopathological images exhibit a high degree of visual complexity, with cells varying in size, density, and morphological characteristics [17]. This complexity necessitates the analysis of features at different receptive fields and the integration of contextual information from multiple scales. However, current point annotation-based methods often lack effective mechanisms for capturing these intricate details and variations present in histopathological data. Second, when predicting density maps for multiple cell types, these methods typically extend the density map to multiple channels, with each channel corresponding to a specific cell type [8]. However, this approach treats the density maps of different cell types as conditionally independent, neglecting to incorporate prior knowledge regarding the spatial distribution of cells, which could provide valuable contextual information to enhance detection accuracy. Since individual cells have spatial extents and cannot occupy the same spatial location simultaneously, different cell types exhibit distinct spatial distribution patterns in histopathological images. Incorporating such spatial distribution priors can help improve the contrastiveness of predicted density maps across different cell types, reducing false positives and enhancing overall detection accuracy.

Motivated by the above discussions, we propose CellRegNet, a novel approach that leverages a hybrid CNN/Transformer architecture to effectively capture multi-scale visual cues and incorporates spatial distribution priors to guide the model’s prediction of density maps for cell detection. Specifically, CellRegNet employs a hybrid CNN/Transformer encoder to extract both local and global features, feature bridges to refine multi-scale features, and global context-guided feature selection (GCFS) blocks to select the most informative local features guided by global context. Additionally, we introduce a contrastive regularization loss that encourages the predicted density maps of different cell types to exhibit high contrastiveness, thereby reducing false positives and improving detection accuracy. Through extensive experiments on three public histopathological datasets, we demonstrate that CellRegNet outperforms existing state-of-the-art methods for cell detection using point annotations by achieving higher F1-scores. Our proposed approach effectively leverages multi-scale visual cues and spatial distribution priors, leading to more robust and accurate cell detection in challenging histopathological images.

The main contributions of this work include the following: proposing CellRegNet, a novel hybrid CNN/Transformer model for cell detection in histopathological images; incorporating feature bridges and global context-guided feature selection (GCFS) blocks to enhance multi-scale feature integration; and introducing a contrastive regularization loss to improve multi-class cell detection accuracy. These innovations effectively address the challenges of cellular complexity and spatial distribution in histopathological images, leading to significant improvements in cell detection performance.

The rest of the article is organized as follows: Section 2 provides an overview of related works in the field of point annotation-based cell detection. Section 3 details the proposed CellRegNet approach, including the hybrid CNN/Transformer encoder, feature bridges, global context-guided feature selection (GCFS) blocks, contrastive regularization loss, and the inference procedure. Section 4 outlines the experimental setup, including the datasets used for evaluation, implementation details, and evaluation metrics. Section 5 presents and discusses the results, comparing the performance of CellRegNet against state-of-the-art methods and analyzing the effects of different components and design choices. Finally, Section 6 concludes the article by summarizing the key findings and contributions and outlining potential future research directions.

2. Related Works

Cell detection in histopathological images is a crucial task in digital pathology, with applications ranging from cancer diagnosis to treatment planning [1,9]. This section provides an overview of the key developments in cell detection methods. These methods address various challenges inherent in cell detection, such as dealing with densely packed cells, varying cell sizes, and complex tissue structures. We first discuss the progression of regression-based cell detection techniques and then the evolution of network architectures designed for this task.

2.1. Regression-Based Cell Detection

The detection of cell instances in histopathological images presents distinct challenges: cells are characteristically small in size, densely packed, and unevenly distributed across the image, while typically being annotated only with their center point coordinates to simplify the labeling process [8,17]. Under such circumstances, conventional anchor-based object detection methods face limitations [18]. Kainz et al. advocated for map-based regression approaches for detection [14], proposing to model each cell with a unimodal distribution, where pixel values represent the proximity to the nearest cell center. They argued that an ideal representation for modeling individual cells should maintain a flat background in non-cell regions while exhibiting distinct, localized peaks at cell centers. This representation enables straightforward detection through post-processing steps such as thresholding and local-maxima search.

A variety of regression targets have been explored for cell detection tasks. Sirinukunwattana et al. [19] introduced a spatially constrained CNN to regress cell probability maps, while Xie et al. [20] employed a fully residual network to regress proximity maps. Qu et al. [21] developed a regression model for Gaussian distance maps, utilizing a U-shaped architecture. To circumvent label adhesion, Liang et al. [22] introduced focal inverse distance transform maps as regression targets, along with the adoption of HRNet as a high-resolution prediction network. Li et al. [23] proposed exponential distance transform maps as regression targets. However, these approaches require sophisticated hyper-parameter tuning, which can be time-consuming and error-prone.

In contrast to these approaches, density map regression [8,15,16,18] offers several advantages. Density maps are generated using Gaussian kernels to approximate the Dirac distribution, making the values directly represent the density of cell distribution and thereby enhancing their interpretability. Additionally, the Gaussian kernel requires only one hyper-parameter, the standard deviation $\sigma$, simplifying the tuning process. Density map regression was initially introduced by Zhang et al. [18] for the crowd counting task, which utilizes point annotations to localize individuals in public scenes and sports events. They proposed a multi-column CNN that leverages multiple convolutional columns with different receptive field sizes to capture multi-scale features for accurate crowd density estimation. Guo et al. [15] focused on density map regression for one-class cell detection using SAU-Net, which is based on U-Net with a self-attention module. Li et al. [16] highlighted the importance of high-resolution feature maps and large receptive fields for accurate density map prediction and proposed CSRNet. Huang et al. [8] extended the density map regression approach from single-class to multi-class settings and proposed U-CSRNet for cell detection. Additionally, they released the large-scale BCData dataset [8], comprising immunohistochemically stained breast cancer histopathological images, to facilitate public research in this domain. However, these approaches assume that density maps of different cell types are conditionally independent, ignoring prior knowledge of their spatial distribution.

2.2. Network Architectures for Density Map Regression

The design of network architectures for cell detection via density map regression has evolved significantly over the years, addressing the challenges of accurately capturing and representing the spatial distribution of cells. Key design principles include multi-scale feature extraction, large receptive fields, and high-resolution feature maps.

Multi-column convolutional neural networks [18] employ multiple convolutional branches with different receptive field sizes to capture multi-scale features. However, the reliance on a multi-branch architecture emphasizes the model’s width, limiting its capacity to extract deep features [16]. To simultaneously accommodate high-resolution feature maps and large receptive fields, CSRNet [16] integrates the first 10 layers of VGG-16 [24] with dilated convolution blocks [25]. These dilated convolution blocks allow CSRNet to expand the receptive fields and extract deeper features without sacrificing resolution. U-CSRNet [8] improves upon CSRNet by employing residual convolution blocks [26] instead of VGG blocks and introducing transposed convolutions to produce accurate and detailed density maps. CSRNet and U-CSRNet both utilize a series of stacked dilated convolution layers to capture fine details while maintaining a broader context. While effective, these approaches have their limitations. Stacking dilated convolutions may introduce gridding artifacts [27] and result in the loss of neighboring information.

Other networks designed for dense predictions can also be used for density map regression. HRNet [28], which maintains high-resolution representations throughout the network, is adopted by Liang et al. [22] to ensure that fine details are preserved during feature extraction. Inspired by HRNet, Zhang et al. [29] proposed DCLNet, which further integrates difference convolutions and deformable convolutions to localize cells. However, the use of deformable convolution introduces unsymmetrical offsets for each position in the sampling grid, which could be a drawback in applications requiring precise spatial alignment [30].

The U-shaped networks [31] have been widely adopted for cell detection and density map regression due to their effective encoder-decoder structure with skip connections. This architecture allows for the extraction of multi-scale features, combining deep semantic features with large receptive fields and high-resolution low-level details, which is beneficial for representing cellular characteristics and spatial distributions. SAU-Net [15] integrated an attention module at the encoder’s bottleneck to enhance feature representations. Li et al. [32] added gradient control and noise reduction modules to SAU-Net and proposed PGC-Net for cell detection. Qu et al. [21] combined a pre-trained ResNet [26] encoder with a U-shaped decoder to improve feature extraction capabilities. In recent years, U-shaped networks [31] have been combined with Transformers [33] to enlarge receptive fields by leveraging multi-head self-attention mechanisms [34,35,36]. These models are also applicable to density map regression tasks. While these models are effective for semantic segmentation tasks that demand precise delineation of object boundaries, in the context of density map regression, their extensive use of skip connections to propagate low-level features may introduce extraneous information, potentially hindering performance in density estimation objectives.

3. Method

This section presents our proposed CellRegNet for accurate cell detection in histopathological images using density map regression. The model leverages a hybrid architecture combining Swin Transformers and Convolutional Neural Networks (CNNs) to effectively capture both local and global features. We detail the model’s architecture, including the hybrid CNN/Transformer encoder, feature bridges to refine multi-scale features, and global context-guided feature selection (GCFS) blocks to select the most informative local features for the convolutional decoder responsible for density map generation. Additionally, we introduce the contrastive regularization loss, the ground truth generation process, and the inference procedure.

3.1. Model Architecture Overview

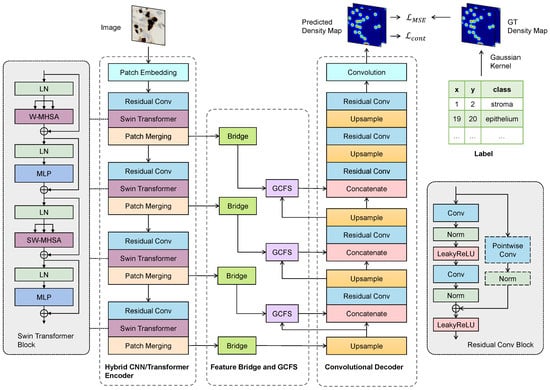

The proposed CellRegNet architecture, illustrated in Figure 1, is designed to efficiently detect cells in histopathological images through density map regression. The architecture employs a U-shaped design and is composed of several interconnected modules, each contributing to the overall performance of the model.

Figure 1.

The architecture of our proposed CellRegNet for cell detection via density map regression. The model comprises a hybrid CNN/Transformer encoder for multi-scale feature extraction, feature bridges for refinement, global context-guided feature selection (GCFS) blocks for informative feature selection, and a convolutional decoder for high-resolution density map generation. Ground truth is generated from point annotations by Gaussian kernel convolutions.

At the core of CellRegNet is a hybrid encoder that combines Swin Transformers and convolutional neural networks (CNNs). This hybrid encoder is responsible for extracting both local and global features from the input images. The Swin Transformer captures global context and long-range dependencies, while the CNN layers focus on local feature extraction and refinement.

Following the hybrid encoder, two key components significantly enhance the model’s ability to extract and select informative features. First, feature bridges are incorporated as horizontal skip connections. These bridges enlarge the receptive field and recalibrate feature maps. Second, and notably, the global context-guided feature selection (GCFS) blocks leverage cross-attention mechanisms to select the most informative local features guided by global context.

Each of these components plays a role in the overall performance of CellRegNet and will be discussed in detail in the following sections.

3.2. Hybrid CNN/Transformer Encoder

This section details our hybrid CNN/Transformer encoder, which efficiently extracts multi-scale features by combining Swin Transformer modules [37] with residual convolution blocks [26].

The hybrid design aims to leverage the strengths of both architectures: CNNs excel at capturing local spatial information [26,38] and encoding positional information [39], while Transformers are adept at modeling long-range dependencies [33]. In the context of cell detection in histopathological images, this combination allows for the simultaneous consideration of local cellular details and broader tissue context.

The encoder begins with a patch embedding layer, which transforms the input image into embedded non-overlapping patches. Following the patch embedding layer, the model employs four sequential Swin Transformer blocks to hierarchically extract features and progressively model long-range relations [37]. Each Swin Transformer block consists of two sub-blocks, both of which include layer normalization, window-based multi-head self-attention (W-MHSA), and a multi-layer perceptron (MLP). To produce a hierarchical representation [37], a patch merging layer is placed after each Swin Transformer block. Patch merging reduces the spatial dimensions of the feature maps from $H \times W$ to $\frac{H}{2} \times \frac{W}{2}$. The number of attention heads starts from three and doubles at each stage, corresponding to the increasing embedding dimensions.
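As a rough illustration of how patch merging halves the spatial resolution, the sketch below follows the common Swin-style recipe of gathering each 2×2 neighbourhood into the channel dimension and then applying a linear projection. The function name `patch_merge`, the random projection matrix, and the choice of projecting 4C channels down to 2C are assumptions made for illustration; the normalization step is omitted, and none of these details are confirmed by this section.

```python
import numpy as np

def patch_merge(x, weight):
    """Merge each 2x2 neighbourhood of an (H, W, C) map into one token.

    x:      feature map of shape (H, W, C), with H and W even.
    weight: hypothetical projection matrix of shape (4 * C, C_out).
    Returns an array of shape (H / 2, W / 2, C_out).
    """
    h, w, _ = x.shape
    assert h % 2 == 0 and w % 2 == 0, "H and W must be even"
    # Stack the four pixels of every 2x2 block along the channel axis.
    merged = np.concatenate(
        [x[0::2, 0::2], x[1::2, 0::2], x[0::2, 1::2], x[1::2, 1::2]], axis=-1
    )  # shape (H / 2, W / 2, 4 * C)
    # A linear projection then sets the output channel count.
    return merged @ weight

rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 8, 6))        # toy feature map, C = 6
proj = rng.normal(size=(4 * 6, 2 * 6))   # assumed 4C -> 2C projection
out = patch_merge(feat, proj)
print(out.shape)  # (4, 4, 12)
```

Each merge halves both spatial dimensions, so the token count drops by a factor of four per stage.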

Vision Transformers excel at modeling long-range relationships, but they may converge slowly on small-scale datasets [40]. Introducing convolutional priors is a common workaround to mitigate this issue and enhance feature learning [36,41,42]. Inspired by these approaches, we prepend a residual convolution block before each Swin Transformer block. Each residual block comprises two convolution layers, instance normalization, and ReLU activation, maintaining the feature map dimensions through an identity shortcut.

The hybrid CNN/Transformer encoder effectively integrates the capabilities of convolutional and Transformer architectures. By combining convolutions with Swin Transformers, our model utilizes both local and global feature extraction mechanisms. This approach aims to enhance overall feature learning, particularly for cell detection tasks where cells can vary significantly in size, density, and morphology, and where the global tissue context provides important cues for accurate detection. The extracted features are further enhanced and recalibrated in the feature bridge, as detailed in the next section.

3.3. Feature Bridge

This section introduces the feature bridge, a component designed to refine and recalibrate features extracted by the encoder, with specific considerations for histopathological image analysis.

Feature bridges are lateral connections that facilitate the transfer of information from the intermediate stages of the encoder to the decoder. This mechanism promotes better gradient flow and aids the decoder in recovering details that might be lost during the downsampling process. As illustrated in Figure 1, in CellRegNet, feature bridges are employed as skip connections at the three deepest levels of the encoder, excluding the essential connection between the encoder’s final stage and the decoder’s initial stage. This design balances the preservation of informative features with computational efficiency.

In the analysis of histopathological images, accurately determining cell presence and identifying cell types requires the consideration of both fine-grained cellular details and broader tissue contexts. Motivated by recent advancements in convolutional neural networks [43,44], we incorporate large kernel depthwise convolutions into the feature bridge. This design choice aims to expand the effective receptive fields of the model [41], thereby refining the extracted features while maintaining computational efficiency. The increased receptive field allows the model to simultaneously capture local cellular characteristics and their surrounding tissue structures.

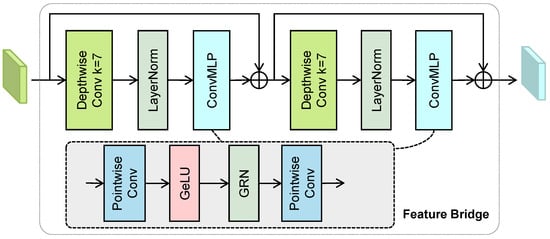

As illustrated in Figure 2, each feature bridge consists of two stacked ConvNeXt-V2 blocks [44]. Each block incorporates a depthwise convolution, layer normalization, and a lightweight inverted bottleneck convolutional MLP implemented by pointwise convolutions and global response normalizations (GRNs). The input features first pass through a depthwise convolution to expand the receptive field. Subsequently, a lightweight convolutional MLP, composed of two pointwise convolutions, facilitates information exchange along the channel dimension.

Figure 2.

The structure of the feature bridge, which incorporates depthwise convolutions and lightweight inverted bottleneck MLPs.

The GRN module, used in the convolutional MLP, recalibrates the feature maps [44]. Let $X \in \mathbb{R}^{H \times W \times C}$ be the input feature map, where $C$ is the number of channels and $X_i \in \mathbb{R}^{H \times W}$ denotes the feature map of the $i$-th channel. GRN first computes the L2 norm of $X_i$ for each channel: $G(X) = \{g_1, g_2, \ldots, g_C\}$, where $g_i = \lVert X_i \rVert_2$. The norms are then normalized across channels to compute the per-channel relative importance weights:

$$n_i = \frac{g_i}{\sum_{j=1}^{C} g_j}$$

The features are then reweighted using $n_i$, and adjusted using a residual connection and learnable parameters $\gamma$ and $\beta$:

$$X_i' = \gamma \odot (X_i \odot n_i) + \beta + X_i$$

where operands are broadcasted if needed and ⊙ denotes element-wise multiplication. The feature maps refined by the bridge are then passed through a global context-guided feature selection module before being integrated into the decoder. This process will be discussed in detail in the following section.
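The recalibration steps described above (per-channel L2 norm, normalization across channels, reweighting with a learnable scale and bias, and a residual connection) can be sketched in NumPy as follows. This is a minimal sketch rather than the authors' implementation: the function name `global_response_norm`, the channel-last layout, the small `eps` term, and the choice of dividing by the sum of the norms are assumptions.

```python
import numpy as np

def global_response_norm(x, gamma, beta, eps=1e-6):
    """Recalibrate an (H, W, C) feature map channel by channel.

    gamma, beta: learnable per-channel scale and bias, shape (C,).
    eps:         assumed small constant to avoid division by zero.
    """
    # Per-channel L2 norm over the spatial dimensions, shape (C,).
    g = np.sqrt((x ** 2).sum(axis=(0, 1)))
    # Relative importance of each channel (assumed: divide by the sum).
    n = g / (g.sum() + eps)
    # Reweight, scale, shift, and add the residual connection.
    return gamma * (x * n) + beta + x

x = np.zeros((2, 2, 2))
x[:, :, 0] = 1.0   # weak channel
x[:, :, 1] = 3.0   # strong channel
out = global_response_norm(x, gamma=np.ones(2), beta=np.zeros(2))
print(out[0, 0])   # close to [1.25, 5.25]
```

With `gamma` and `beta` set to zero the function reduces to the identity, so the residual path dominates until the scale is learned.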

3.4. Global Context-Guided Feature Selection

The decoder of CellRegNet progressively integrates intermediate features from the encoder provided through feature bridges at each stage. While deep features encapsulate comprehensive global information, intermediate layer features provide essential local information to produce high-quality density map predictions. Therefore, a critical challenge is how to effectively integrate these features.

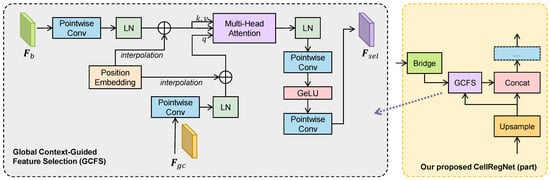

An intuitive approach is to train the model to learn to select relevant local detail features informed by the global context. Inspired by Transformers in neural machine translation tasks [33], we model this problem as a sequence-to-sequence cross-attention task, where the global-level context serves as queries, and intermediate-level features as keys and values.

As illustrated in Figure 3, GCFS modules are situated between the feature bridges and the decoder stages. GCFS takes two input feature maps: $F_l$, the intermediate-level feature map from the lateral feature bridge, and $F_g$, the global context information from the previous decoder stage. The input feature maps are initially processed through a pointwise convolution layer to adjust the number of channels to the embedding dimension $d$. Subsequently, the feature maps are flattened along the spatial dimension into token sequences and normalized using layer normalization. To preserve spatial relationships, learnable positional encodings are added to the flattened tokens. If the spatial size of the positional encodings does not align with the input size, bicubic interpolation is applied to adjust them accordingly.

Figure 3.

Structure and location of our proposed global context-guided feature selection (GCFS) module. Left: details of the GCFS module. Right: Part of our proposed CellRegNet, highlighting the location of GCFS. GCFS utilizes multi-head cross-attention between the global context features and the lower-level features bridged from the encoder output, with the global context features serving as the query in the attention mechanism.

After these preparations, the global context is transformed into a token sequence $T_g \in \mathbb{R}^{N_g \times d}$, and the local features from the bridge are transformed into $T_l \in \mathbb{R}^{N_l \times d}$, where $N_g$ and $N_l$ are the numbers of tokens and $d$ is the embedding dimension. These two sequences are then fed into a multi-head cross attention module.

Specifically, for each attention head, we construct the query $Q$, key $K$, and value $V$ through linear projection:

$$Q = T_g W^Q, \quad K = T_l W^K, \quad V = T_l W^V$$

The attention scores are calculated by:

$$A = \mathrm{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right)$$

where $d_k = d/h$ and $h$ is the number of attention heads. By applying the attention scores to $V$ according to the standard Transformer mechanism [33], we obtain the attention-reweighted local features. These reweighted local features are then normalized using layer normalization, reshaped back to their original spatial dimensions, and passed through a convolutional MLP to generate the selected local features guided by the global context.
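The cross-attention step can be illustrated with a small NumPy sketch in which the global tokens act as queries and the local tokens act as keys and values. The function names, the random projection matrices, and the token counts are assumptions for illustration. The output projection, layer normalization, positional encodings, and convolutional MLP described in the text are left out.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(t_g, t_l, w_q, w_k, w_v, num_heads):
    """Multi-head cross-attention without a residual path.

    t_g: (N_g, d) global-context tokens, used as queries.
    t_l: (N_l, d) local tokens from the bridge, used as keys and values.
    w_q, w_k, w_v: hypothetical (d, d) projection matrices.
    """
    n_g, d = t_g.shape
    n_l = t_l.shape[0]
    d_k = d // num_heads
    # Project, then split the channel axis into heads: (h, N, d_k).
    q = (t_g @ w_q).reshape(n_g, num_heads, d_k).transpose(1, 0, 2)
    k = (t_l @ w_k).reshape(n_l, num_heads, d_k).transpose(1, 0, 2)
    v = (t_l @ w_v).reshape(n_l, num_heads, d_k).transpose(1, 0, 2)
    # Scaled dot-product attention scores: (h, N_g, N_l).
    scores = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_k))
    # Local features reweighted by the scores, heads merged: (N_g, d).
    selected = (scores @ v).transpose(1, 0, 2).reshape(n_g, d)
    return selected, scores

rng = np.random.default_rng(0)
d, heads = 8, 2
t_g = rng.normal(size=(4, d))     # 4 global tokens
t_l = rng.normal(size=(16, d))    # 16 local tokens
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
selected, scores = cross_attention(t_g, t_l, w_q, w_k, w_v, heads)
print(selected.shape, scores.shape)  # (4, 8) (2, 4, 16)
```

Because the queries come from the global context, the output has one token per global position, and each of those tokens is a convex combination of projected local features.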

It is worth noting that, unlike standard Transformers [33] or Vision Transformers [40], the GCFS module does not employ residual connections to bypass the multi-head attention or MLP components. This intentional design choice introduces a feature bottleneck effect, compelling the model to rigorously select and focus on essential features.

By guiding local feature selection through global context, GCFS ensures that the most relevant local features are emphasized. This approach enhances the decoder’s ability to synthesize high-quality density maps, thereby improving the model’s performance and robustness in cell detection tasks.

3.5. Convolutional Decoder

As illustrated in Figure 1, the decoder of CellRegNet iteratively upsamples the global-level feature map and progressively integrates the selected local-level feature maps to generate density map predictions. We employ transposed convolutional layers to upsample the feature map by a factor of 2 at each stage.

Feature integration is implemented by concatenating the feature maps along the channel dimension, followed by a residual convolution block. This approach facilitates the fusion of information across channels, smooths spatial features, and preserves fine-grained details through residual connections. The final layer of the decoder consists of a pointwise convolution with K output channels, where K represents the number of classes in the desired density map. Since we are performing linear regression in this layer, no activation function is applied.

The resulting output density map can be utilized for calculating training loss or performing inference, as will be discussed in subsequent sections.

3.6. Training Objective

In this section, we first introduce the details of generating ground truth density maps from sparsely annotated points. Then, we describe the loss function used for training.

3.6.1. Ground Truth Generation

For each image $I$ within the dataset $\mathcal{D}$, we define its annotated points as a set $P$. Each element in $P$ is a triplet $(x, y, k)$, where $x$ and $y$ represent the coordinates of the cell center, and $k \in \{1, \ldots, K\}$ denotes the cell type or class. Formally, if an image has a total of $N$ annotated cells, we have:

$$P = \{(x_n, y_n, k_n)\}_{n=1}^{N}$$

The ground truth density map for training is denoted as $Y \in \mathbb{R}^{H \times W \times K}$, which has the same spatial size as the image and a number of channels equal to the total number of classes $K$.

Following the methods described in the literature [8,16], we use Gaussian convolution to create the ground truth $Y$. First, we create an empty density map initialized to zeros. Then, for each annotated point in $P$, characterized by its coordinates $(x, y)$ and class $k$, we set the value at $(x, y)$ to 1 on the $k$-th channel of $Y$. Finally, we apply a Gaussian convolution kernel to each channel of the density map. The Gaussian kernel approximates a Dirac distribution, resulting in a meaningful representation of object density that captures both the location and the spread of objects in the image.
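The three steps above (zero map, unit impulses at annotated centers, Gaussian convolution per channel) can be sketched with NumPy alone. Several details here are assumptions for illustration: the helper names, zero-based class indices, treating x as the column and y as the row, the value `sigma=2.0`, truncating the kernel at three standard deviations, and zero padding at the image border.

```python
import numpy as np

def gaussian_kernel_1d(sigma, truncate=3.0):
    """Normalized 1-D Gaussian kernel truncated at `truncate` std devs."""
    radius = int(truncate * sigma + 0.5)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    return kernel / kernel.sum()

def make_density_map(points, height, width, num_classes, sigma=2.0):
    """Build an (H, W, K) density map from (x, y, class) point annotations."""
    density = np.zeros((height, width, num_classes))
    for x, y, k in points:
        density[y, x, k] = 1.0  # unit impulse at the annotated center
    kernel = gaussian_kernel_1d(sigma)
    for k in range(num_classes):
        channel = density[:, :, k]
        # Separable Gaussian: convolve along rows, then along columns.
        channel = np.apply_along_axis(np.convolve, 1, channel, kernel, mode="same")
        channel = np.apply_along_axis(np.convolve, 0, channel, kernel, mode="same")
        density[:, :, k] = channel
    return density

# Three annotated cells, two of class 0 and one of class 1.
points = [(8, 10, 0), (22, 22, 0), (20, 12, 1)]
density = make_density_map(points, height=32, width=32, num_classes=2)
print(density.shape)               # (32, 32, 2)
print(density.sum(axis=(0, 1)))    # close to [2. 1.], the per-class counts
```

Since the kernel is normalized, each channel integrates to the number of annotated cells of that class, provided the cells lie far enough from the border that no kernel mass is cut off.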

3.6.2. Loss Function

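In this study, we employ the mean squared error (MSE) loss as the primary loss function for density map regression. Formally, let $Y \in \mathbb{R}^{H \times W \times K}$ denote the ground truth density maps and $\hat{Y} \in \mathbb{R}^{H \times W \times K}$ denote the predicted density maps. The MSE loss function is defined as follows:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{HWK} \sum_{k=1}^{K} \sum_{i=1}^{H} \sum_{j=1}^{W} \left( \hat{Y}_{i,j,k} - Y_{i,j,k} \right)^2$$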

While MSE is a standard loss function for density map regression tasks [8,16], it can lead to blurry density maps [22,45], which pose a significant challenge in the post-processing stage where thresholding and local maxima detection are performed to identify cell locations [14]. A blurred density map can result in higher false positive rates, as it becomes difficult to distinguish between true cell centers and noise.

Moreover, predicting density maps for different classes in separate channels and calculating MSE loss element-wise implicitly assumes that the density maps of different cell types are conditionally independent. However, in histopathological images, the density maps of different cell types exhibit inherent differences due to the fact that different types of cells cannot occupy the same spatial location.

As discussed in Section 3.6.1, cells are annotated by center points. The values on the ground truth density map are modeled by a Gaussian distribution centered at these points, which rapidly decays away from the center. We aim for the model’s output density maps to maintain similar characteristics of high central peaks and rapid decay away from the center. Therefore, if a particular location exhibits a high response in the density map of one cell type, it indicates proximity to the center of a cell of that type, and it is unlikely for the same location to show a significant response in the density map of another cell type. This distinction guides the design of our loss function, incorporating a prior that the density map should exhibit high contrastiveness across different channels.

Take a two-class cell density map regression task as an example. The predicted density map is first separated into two single-channel density maps, one per class. These maps are then passed through a ReLU function to ensure non-negativity and flattened into two vectors, each corresponding to the density map of one class. To evaluate the contrastiveness between the two density vectors, we use their inner product, which is zero when the two maps respond at disjoint locations and grows as their responses overlap.

Maximizing the contrastiveness across different channels can then be achieved by minimizing this inner product. Therefore, for a task with K classes (K ≥ 2), the contrastive regularization loss is defined on the inner products between pairs of distinct channels of the predicted density map, where each channel is passed through the ReLU function and flattened before the inner product is taken.

Our proposed contrastive loss function regularizes the predicted density map by penalizing overlaps between different channels, thereby encouraging the model to produce more distinct and accurate predictions for each cell type.

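The total loss function is then given by:

$$\mathcal{L}_{\mathrm{total}} = \mathcal{L}_{\mathrm{MSE}} + \lambda \, \mathcal{L}_{\mathrm{CR}}$$

where $\mathcal{L}_{\mathrm{MSE}}$ is the MSE loss, $\mathcal{L}_{\mathrm{CR}}$ is the contrastive regularization loss, and $\lambda$ is a hyperparameter to control the regularization.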

This combined loss function leverages the strengths of both MSE for overall density prediction accuracy and the contrastive loss for enhancing inter-class distinctions. By doing so, it addresses the limitations of using MSE alone and potentially improves the model’s ability to accurately locate and classify different cell types in histopathological images.
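The combined loss can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions, not the training code: the function names are hypothetical, the MSE is averaged over all channels and pixels, the contrastive term counts each unordered pair of distinct channels once without normalization, and the default weight `lam` is an arbitrary placeholder.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error over all channels and pixels."""
    return float(np.mean((pred - target) ** 2))

def contrastive_regularization(pred):
    """Sum of inner products between ReLU-rectified, flattened channel pairs.

    Assumption: each unordered pair of distinct channels (i < j) is counted
    once and no normalization is applied.
    """
    num_classes = pred.shape[0]
    # ReLU keeps the vectors non-negative, so every inner product is >= 0.
    vectors = np.maximum(pred, 0.0).reshape(num_classes, -1)
    loss = 0.0
    for i in range(num_classes):
        for j in range(i + 1, num_classes):
            loss += float(vectors[i] @ vectors[j])
    return loss

def total_loss(pred, target, lam=0.1):
    """MSE plus weighted contrastive regularization (lam is hypothetical)."""
    return mse_loss(pred, target) + lam * contrastive_regularization(pred)
```

Because the rectified vectors are non-negative, the regularization term is zero exactly when no location has a positive response in more than one channel, which is the mutual exclusiveness prior described above.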

3.7. Inference

During the inference phase, the model’s output density maps undergo a series of standard post-processing steps to accurately identify cell locations. Initially, a ReLU function ensures non-negative density values. Subsequently, thresholding filters out low-intensity predictions, reducing noise and focusing on relevant regions. Finally, for each class, local maxima detection, analogous to non-maximum suppression (NMS) in object detection, pinpoints cell centers within high-intensity areas. These local maxima are considered the predicted cell centers, with their values representing confidence scores. This post-processing pipeline efficiently transforms continuous density map predictions into discrete cell location predictions, facilitating subsequent analysis and evaluation.
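The post-processing steps above can be sketched as follows. The threshold value and the size of the neighborhood used for local maxima detection are hypothetical, and the function name is chosen for illustration only; this sketch also keeps every pixel of a flat plateau as a peak, which a production pipeline would likely handle differently.

```python
import numpy as np

def detect_cells(density, threshold=0.1, window=3):
    """Return {class_index: [(x, y, score), ...]} from a (K, H, W) density map.

    threshold and window are hypothetical values chosen for illustration.
    """
    density = np.maximum(density, 0.0)  # ReLU: non-negative density values
    num_classes, height, width = density.shape
    pad = window // 2
    detections = {}
    for k in range(num_classes):
        channel = density[k]
        padded = np.pad(channel, pad, mode="constant", constant_values=-np.inf)
        # Maximum over the window x window neighborhood of every pixel.
        neighborhood_max = np.full_like(channel, -np.inf)
        for dy in range(window):
            for dx in range(window):
                shifted = padded[dy:dy + height, dx:dx + width]
                neighborhood_max = np.maximum(neighborhood_max, shifted)
        # A peak equals its neighborhood maximum and exceeds the threshold.
        is_peak = (channel >= neighborhood_max) & (channel > threshold)
        ys, xs = np.nonzero(is_peak)
        detections[k] = [(int(x), int(y), float(channel[y, x]))
                         for y, x in zip(ys, xs)]
    return detections
```

The returned score of each detection is the density value at the peak, which serves as the confidence score used when sorting predictions during evaluation.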

4. Experiments

In this section, we first introduce the datasets used in this study. Subsequently, we describe the evaluation metrics and the experimental setup.

4.1. Datasets

We evaluate the proposed CellRegNet on three publicly available datasets: the BCData dataset [8], the EndoNuke dataset [17], and the MBM dataset [46].

4.1.1. BCData Dataset

The BCData dataset [8] comprises 1338 immunohistochemical (IHC) stained breast cancer histopathological images, with manually annotated cell centers for two different cell types: Ki-67 positive and Ki-67 negative tumor cells. Ki-67 is a biomarker that is associated with cell proliferation. Accurately detecting and identifying different types of tumor cells can aid pathologists in determining the Ki-67 expression level and guide treatment decisions. The dataset comes with a pre-defined split into 803 training, 133 validation, and 402 test images; all images have a resolution of 640 × 640 pixels and contain various cell densities. The dataset contains a total of 62,623 positive and 118,451 negative tumor cells.

4.1.2. EndoNuke Dataset

The EndoNuke dataset [17] is a publicly available dataset comprising image tiles extracted from immunohistochemically (IHC) stained slides of endometrium tissue specimens. The dataset was created with the objective of facilitating the development of automated cell detection models to aid in the scoring process for endometrium IHC slides. Automating this scoring process could significantly enhance the diagnosis of infertility and other diseases [47].

The EndoNuke dataset comprises two components: the first consists of 40 image tiles used for an expert agreement study [17], while the second, which was employed in this study, contains 1740 image tiles. This latter subset includes 170,996 stromal cells and 37,722 epithelial cells, annotated as points by seven human experts. The subset was further partitioned into 1215 tiles for training, 175 for validation, and 350 for testing. Each image tile was rescaled from its original physical size to a fixed pixel size to facilitate computational experiments.

4.1.3. MBM Dataset

The modified bone marrow (MBM) dataset [46] is derived from the original bone marrow (BM) dataset introduced by Kainz et al. [14]. The original BM dataset comprises images of bone marrow tissues, providing a valuable resource for cell detection tasks in hematological contexts.

The MBM dataset consists of 44 non-overlapping images, each with a resolution of 600 × 600 pixels. Each image contains an average of 126 ± 33 cells. For our computational experiments, these images were rescaled to 640 × 640 pixels.

The annotations of MBM were calibrated through visual inspection with the assistance of domain experts [46], resulting in the inclusion of previously unlabeled cells. This enhancement makes it a reliable benchmark for evaluating cell detection algorithms.

4.2. Evaluation Metrics

To comprehensively evaluate the performance of our model, this study follows a structured process that includes matching model predictions with ground truth annotations, calculating per-class metrics, and summarizing these metrics for overall performance [8,19]. This section provides the detailed steps and methodology.

4.2.1. Matching Predictions with Ground Truth

The first step in our evaluation process involves matching the model’s predictions with the corresponding ground truth annotation points. As described in Section 3.7, for each image and each class, the model’s prediction is a set of cell centers with corresponding confidence scores. We start by sorting the predicted cell centers in descending order of their confidence scores. Subsequently, we iterate through each predicted cell center and attempt to match it with the nearest ground truth (GT) point within a specified radius r. The details are described in Algorithm 1, following four key principles:

- Each predicted cell center can be matched to at most one GT point.

- Each GT point can be matched to at most one predicted cell center.

- Predicted cell centers that do not match any GT points are considered false positives.

- Remaining unmatched GT points are considered false negatives.

By aggregating the true positives (TP), false positives (FP), and false negatives (FN) across the entire dataset, we obtain the per-class TP, FP, and FN counters for computing the per-class metrics discussed in the next section.

Algorithm 1.

Matching predicted cell centers to ground truth points.
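The matching procedure and its four principles can be sketched in Python as follows. This is a minimal greedy sketch for a single image and a single class, written from the description in the text; the function name is hypothetical, and two details are assumptions: the nearest point is searched only among ground truth points that are still unmatched, and a distance exactly equal to r counts as a match.

```python
import math

def match_predictions(predictions, gt_points, radius=10.0):
    """Greedily match predicted centers to ground truth points of one class.

    predictions: list of (x, y, score); gt_points: list of (x, y).
    Returns the counts (tp, fp, fn).
    """
    matched_gt = set()
    tp = fp = 0
    # Visit predictions from most to least confident.
    for px, py, _score in sorted(predictions, key=lambda p: p[2], reverse=True):
        best_index, best_dist = None, radius
        for index, (gx, gy) in enumerate(gt_points):
            if index in matched_gt:
                continue  # each GT point can be matched at most once
            dist = math.hypot(px - gx, py - gy)
            if dist <= best_dist:
                best_index, best_dist = index, dist
        if best_index is None:
            fp += 1  # no unmatched GT point within the radius
        else:
            matched_gt.add(best_index)
            tp += 1
    fn = len(gt_points) - len(matched_gt)  # remaining unmatched GT points
    return tp, fp, fn
```

Summing the returned counts over all images of the dataset, separately for each class, yields the per-class TP, FP, and FN counters.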

4.2.2. Computing Per-Class Metrics

Based on the true positives (TP), false positives (FP), and false negatives (FN) obtained from the previous section, we compute the following per-class evaluation metrics for each cell type:

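Precision measures the proportion of true positive predictions among all positive predictions made by the model. It is calculated as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

Recall quantifies the proportion of actual positive instances that were correctly identified by the model. It is computed as:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

The F1-score provides a balanced measure that combines both precision and recall. It is defined as:

$$\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$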

4.2.3. Summarizing Metrics for Overall Performance

To assess the overall performance of the model across all cell types, we report the mean precision, mean recall, and mean F1-score, each computed by averaging the corresponding per-class metric over all cell types. These mean metrics reflect the overall performance and robustness of the model in a multi-class setting.
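The per-class and mean metrics can be computed from the TP, FP, and FN counters as sketched below. The function names are hypothetical, returning zero when a denominator is zero is an assumption for robustness, and the mean is taken as an unweighted average of the per-class values.

```python
def per_class_metrics(tp, fp, fn):
    """Return (precision, recall, f1) for one class from its counters."""
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1

def mean_metrics(counts):
    """Average per-class metrics; counts maps class name -> (tp, fp, fn)."""
    per_class = [per_class_metrics(*c) for c in counts.values()]
    n = len(per_class)
    return tuple(sum(m[i] for m in per_class) / n for i in range(3))
```

Note that the mean F1-score here is the average of the per-class F1-scores, not the F1-score of the mean precision and mean recall.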

4.3. Experimental Setup

In this study, experiments were conducted under a consistent configuration. The hardware platform comprised a server equipped with two Intel Xeon E5-2695 v4 CPUs, 128 GB of RAM, and four NVIDIA Tesla V100-PCIE GPUs. The software implementation was based on the PyTorch framework (version 2.3) [48].

During training, the batch size was set to 8, and the AdamW optimizer was used with a weight decay of 0.01. The initial learning rate was 0.0005 and it decayed to 0.00001 using a cosine annealing scheduler. The number of epochs was set to 500, and early stopping was applied to prevent overfitting by monitoring the performance on the validation set. For the evaluation process described in Algorithm 1, we used a radius r of 10 pixels for all datasets, following the approach in [8].
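For reference, the learning rate schedule described above can be written in closed form using the standard cosine annealing formula. The sketch below assumes that the schedule spans all 500 epochs and is updated once per epoch; neither detail is stated in the text, and the function name is hypothetical.

```python
import math

def cosine_annealing_lr(epoch, total_epochs=500, lr_max=5e-4, lr_min=1e-5):
    """Learning rate at a given epoch under cosine annealing.

    Assumes the schedule spans total_epochs and is stepped once per epoch.
    """
    # Fraction of the schedule completed, clamped to [0, 1].
    progress = min(max(epoch, 0), total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))
```

Under these assumptions the learning rate starts at 0.0005, passes through the midpoint of the two values halfway through training, and reaches 0.00001 at the final epoch.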

To enhance the diversity of the dataset and speed up training, random cropping was employed. Additionally, other data augmentation techniques were applied to improve the generalization capability of the model. Table 1 summarizes the data augmentation techniques used.

Table 1.

Data augmentation techniques used in the experiments.

5. Results and Discussion

In this section, we first compare our proposed CellRegNet with other state-of-the-art models on three datasets introduced in Section 4.1. Following that, we present ablation studies to evaluate the effectiveness of its components. Furthermore, we discuss the impact of the proposed loss function. Finally, we provide qualitative results to visually illustrate the model’s performance.

5.1. Performance Comparison

To comprehensively evaluate the effectiveness of our proposed CellRegNet, we conducted experiments on three public datasets: BCData [8], EndoNuke [17] and MBM [46]. We compared our model against nine representative models widely recognized in the field: U-Net [31], UNETR [34], SAU-Net [15], U-CSRNet [8], HRNet [28], DCLNet [29], Swin UNETR [35], Swin UNETR V2 [36], and PGC-Net [32]. This selection encompasses both convolutional neural networks (CNNs) and Transformer-based architectures, representing a spectrum of approaches from established benchmarks to recent state-of-the-art methods. These models were chosen based on their demonstrated strong performance in cell detection and related dense prediction tasks in microscopy image analysis.

The experimental results for the BCData, EndoNuke, and MBM datasets are presented in Table 2, Table 3, and Table 4, respectively. For the BCData and EndoNuke datasets, we report the per-class and mean precision, recall, and F1-score metrics. To facilitate comparison and highlight the overall performance, the best F1-scores are emphasized in bold for each category and for the mean performance, as the F1-score provides a balanced measure of precision and recall. For the MBM dataset, which contains only one class, we report the precision, recall, and F1-score for bone marrow cells. The number of parameters and computations of the different methods are presented in Table 5.

Table 2.

Comparing our proposed method with representative studies on the BCData dataset. All values are in percentage, higher is better, and the best F1-scores are highlighted in bold.

Table 3.

Comparing our proposed method with representative studies on the EndoNuke dataset. All values are in percentage, higher is better, and the best F1-scores are highlighted in bold.

Table 4.

Comparing our proposed method with representative studies on the MBM dataset. All values are in percentage, higher is better, and the best F1-score is highlighted in bold.

The results demonstrate that CellRegNet consistently outperforms existing state-of-the-art models across all three datasets in terms of overall F1-score. On the BCData dataset, CellRegNet achieves the highest mean F1-score of 86.38%, surpassing the next best model (Swin UNETR V2) by 0.52 percentage points (Table 2). Similarly, on the EndoNuke dataset, CellRegNet attains the highest mean F1-score of 85.56%, outperforming the next best model (DCLNet) by 0.34 percentage points (Table 3). For the MBM dataset (Table 4), CellRegNet achieves the highest F1-score of 93.90%, a small improvement of 0.21 percentage points over the next best model (U-CSRNet), which achieved 93.69%. This demonstrates CellRegNet’s effectiveness even in single-class scenarios.

As shown in Table 5, CellRegNet strikes a favorable balance between model size and computational efficiency. With 8.87 million parameters and 4.20 billion MACs, it offers a state-of-the-art F1-score while maintaining a relatively modest computational footprint compared to larger models like UNETR or HRNet.

Table 5.

Comparison of the number of parameters and multiply-accumulate computations (MACs) across models. The MACs are measured with a single RGB input image (batch size of 1).

A notable aspect of CellRegNet’s performance is its ability to achieve high precision while maintaining competitive recall, particularly in multi-class scenarios. This characteristic is attributed to the contrastive regularization mechanism employed in CellRegNet, which is designed to reduce false positives in complex, multi-class cell detection tasks. The effectiveness of this approach is evident in the results for the BCData and EndoNuke datasets. We provide a detailed study of the loss functions in Section 5.3.

The MBM dataset presents an interesting contrast as it is a single-class scenario. As shown in Table 4, while CellRegNet does not achieve the highest precision (93.19% compared to U-CSRNet’s 93.24%), it maintains a better balance between precision and recall, resulting in the highest overall F1-score of 93.90%.

The consistent balanced performance of CellRegNet across different cell types, datasets, and detection scenarios (both multi-class and single-class) demonstrates its versatility and robustness. This can be attributed to its carefully designed architectural components, including the combination of CNNs and Transformers to capture both local and global features crucial for accurate cell detection. The addition of contrastive regularization further enhances the model’s ability to distinguish between different cell types in multi-class scenarios, resulting in fewer false positive identifications.

5.2. Ablation Study

To analyze the contributions of different components in CellRegNet, we conducted ablation studies on the BCData dataset. We evaluated three configurations:

- Base Model: Includes the hybrid CNN/Transformer encoder and convolutional decoder described in Section 3.2 and Section 3.5, using identity shortcuts as horizontal skip connections at the three deepest levels of the encoder.

- Feature Bridge Model: Enhances the base model with feature bridges as horizontal skip connections, as described in Section 3.3.

- CellRegNet Model: Incorporates the global context-guided feature selection (GCFS) module described in Section 3.4 to select the most pertinent local features based on global information.

The performance of each configuration was evaluated using precision, recall, and F1-scores. Detailed results are presented in Table 6.

Table 6.

Ablation study of our proposed CellRegNet. The best F1-scores are highlighted in bold.

The ablation results demonstrate the effectiveness of each component in CellRegNet. The base model, which utilizes the hybrid CNN/Transformer encoder and convolutional decoder, achieves a solid mean F1-score of 85.79%. This performance underscores the strength of combining Swin Transformer [37] with convolutional priors [36,41,42] for cell detection tasks.

Adding the feature bridges improves the model’s performance, increasing the mean F1-score from 85.79% to 86.00%. This improvement can be attributed to the enhanced information flow between the encoder and decoder, facilitated by the feature bridges. The large kernel depthwise convolutions and lightweight inverted bottleneck MLPs in the feature bridges effectively refine the extracted features by enlarging the effective receptive fields. This is particularly beneficial for histopathological image analysis, where capturing both local details and broader contextual information is crucial.

The full CellRegNet model, incorporating the GCFS module, achieves the best performance with a mean F1-score of 86.38%. The GCFS module’s ability to select the most relevant local features guided by global context proves to be highly effective in improving cell detection accuracy. Notably, the GCFS module contributes to balanced improvements on both positive and negative cell classes. For positive cells, it increases the F1-score from 86.43% to 86.74%, while for negative cells, the improvement is from 85.57% to 86.03%. This performance gain demonstrates the GCFS module’s effectiveness in selecting pertinent features for different cell types, addressing the challenge of integrating global and local information in histopathological image analysis.

5.3. Comparison of Loss Functions

To assess the efficacy of our proposed contrastive regularization in conjunction with the commonly used mean squared error (MSE) loss, we conducted experiments using CellRegNet on both BCData and EndoNuke datasets. Table 7 and Table 8 present the detailed results.

Table 7.

Evaluation of the proposed contrastive regularization loss on BCData dataset. The best F1-scores are highlighted in bold.

Table 8.

Evaluation of the proposed contrastive regularization loss on EndoNuke dataset. The best F1-scores are highlighted in bold.

The results demonstrate that incorporating contrastive regularization alongside the MSE loss yields consistent improvements on both datasets. On the BCData dataset (Table 7), the addition of the contrastive regularization term enhances the mean F1-score from 86.14% to 86.38%, with notable improvements in precision for both positive and negative tumor cells. Similarly, for the EndoNuke dataset (Table 8), the combined loss function improves the mean F1-score from 85.29% to 85.56%, with substantial gains in precision for both stroma and epithelium classes.

These findings align with the intended purpose of the contrastive regularization term. By incorporating the domain prior of the spatial distribution of cells and penalizing overlaps between different channels in the predicted density maps, the contrastive regularization term encourages the model to produce more distinct and sharper density maps for different cell types. This is reflected in the consistent improvement in precision and F1-score across all cell types and datasets, indicating that the model becomes more discriminative in identifying true cell locations.

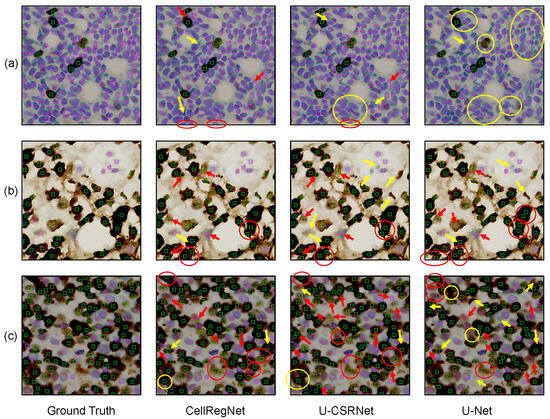

5.4. Qualitative Results

To visually illustrate the performance of CellRegNet, we present exemplary cell detection results on the BCData dataset. Figure 4 showcases a comparative analysis across three representative cases. Each row represents a case, with the columns displaying the ground truth (GT), CellRegNet detection results, U-CSRNet results, and U-Net results, respectively. U-CSRNet [8] and U-Net [31] serve as benchmarks for comparison. To facilitate comprehension, we have highlighted representative mispredictions in the images: red markers indicate false positives, and yellow markers indicate false negatives. It is worth noting that when a cell of class A is misclassified as class B, it is counted as both a false negative for class A and a false positive for class B in our quantitative evaluation metrics.

Figure 4.

Qualitative comparison of cell detection results. Rows (a–c) visualize three representative cases. For each case, ground truth, CellRegNet predictions, U-CSRNet predictions, and U-Net predictions are illustrated. Green boxes indicate positive tumor cells, magenta boxes indicate negative tumor cells. Red markers indicate false positives and yellow markers indicate false negatives.

As evidenced by Figure 4, CellRegNet demonstrates a robust capacity for accurate and comprehensive cell detection in Ki-67-stained breast cancer images. The comparative analysis reveals several observations across the different models. U-Net shows relatively lower performance in scenarios with densely packed cells, as highlighted by the yellow circle in the upper right corner of Figure 4a. This indicates the challenges faced by simpler architectures in complex, crowded cellular environments. U-CSRNet demonstrates improved ability compared to U-Net. However, it shows increased false negatives in certain scenarios, particularly in areas with blurred color due to staining or scanning artifacts, and for cells with irregular morphologies (yellow arrows in Figure 4b).

The correct detection and classification of individual cells in histological images remains a challenging problem, especially in scenarios where cells are densely packed or where multi-layered cellular structures are projected onto a 2D plane. This challenge is evident in Figure 4b, where all three models exhibit some false positives in areas where cells appear to be overlapping or in close proximity. These overlapping appearances could be due to actual cell adhesion in the tissue or the result of multiple cell layers being captured in the 2D projection of the histological section, leading to visual ambiguities in the image. In scenarios with crowded and dense cell populations (Figure 4c), correct identification and classification of individual cells pose significant challenges. While CellRegNet shows relatively better performance in these complex scenarios, there is still room for improvement in handling difficult samples caused by cell crowding, tissue architecture complexity, and staining variability.

5.5. Discussion

The experimental results demonstrate that CellRegNet consistently outperforms existing state-of-the-art models in cell detection tasks across three distinct histopathological datasets. On the BCData dataset, CellRegNet achieves a mean F1-score of 86.38%, surpassing the next best model by 0.52 percentage points. Similarly, on the EndoNuke dataset, it attains a mean F1-score of 85.56%, outperforming the closest competitor by 0.34 percentage points. For the MBM dataset, CellRegNet achieves the highest F1-score of 93.90%, showing an improvement over the next best model by 0.21 percentage points. These improvements are consistent across different cell types, indicating the model’s robustness and versatility in handling diverse histopathological images.

The superior performance of CellRegNet can be attributed to several key factors. First, the hybrid CNN/Transformer architecture enables effective capture of both local details and global context, which is crucial for accurate cell detection in complex histopathological images. The combination of convolutional layers and Swin Transformers addresses the need for multi-scale feature extraction, a challenge often encountered in previous methods. Second, the feature bridges contribute to more accurate density map predictions by enlarging the receptive field and recalibrating multi-scale features, enhancing information flow between the encoder and decoder. Third, the global context-guided feature selection (GCFS) module effectively selects the most informative local features guided by global context, addressing the challenge of integrating multi-scale information in histopathological image analysis.

Furthermore, the contrastive regularization loss introduces a valuable mechanism for modeling the mutual exclusiveness prior of different cell types within the learning process. By encouraging distinctiveness between density maps of different cell types, this loss function enhances the model’s ability to capture the spatial relationships and relative occurrences of different cell types. This approach improves the model’s discriminative power and contributes to a more accurate representation of the cellular landscape in histopathological images.

The results indicate that CellRegNet demonstrates improved accuracy in cell detection for histopathological image analysis. This approach has the potential to assist pathologists in their diagnostic work by enhancing biomarker quantification and automating cell counting tasks, which may contribute to more consistent results and reduce manual workload. Future research may focus on optimizing the model architecture to balance performance and computational efficiency, as well as validating its capabilities and limitations across various clinical contexts.

6. Conclusions

In this study, we introduced CellRegNet, a novel deep learning model for cell detection in histopathological images using point annotations. The key contributions of this work are as follows:

- We propose CellRegNet, a novel hybrid CNN/Transformer model for accurate cell detection in histopathological images using point annotations. CellRegNet effectively captures and integrates multi-scale visual cues, addressing the complexity of cellular structures in histopathological tissues.

- We introduce feature bridges as horizontal skip connections, which enlarge the receptive field and recalibrate feature maps. This innovation enhances the model’s ability to capture and leverage information across various scales.

- We design global context-guided feature selection (GCFS) blocks that leverage cross-attention mechanisms. These blocks enable the model to select the most informative local features guided by global context, significantly improving cell detection accuracy.

- We propose a contrastive regularization loss that incorporates spatial distribution priors of cells, enhancing the distinction between predicted density maps of different cell types and reducing false positives in multi-class cell detection.

CellRegNet demonstrates state-of-the-art performance on three public histopathological datasets, showcasing its effectiveness in accurately detecting and classifying cells in challenging scenarios. The model’s robust performance underscores its potential for real-world applications in digital pathology, potentially aiding pathologists in more efficient and accurate diagnoses.

Future research directions may include optimizing computational efficiency to facilitate real-time analysis, exploring the model’s adaptability to a broader range of histopathological image types, and investigating the integration of CellRegNet into comprehensive digital pathology workflows.

Author Contributions

Conceptualization, X.J.; methodology, X.J. and M.C.; software: X.J.; writing—original draft: X.J.; writing—review and editing: M.C. and H.A.; resources: H.A.; funding acquisition: H.A.; project administration: H.A.; supervision: H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the central universities of China under grant YD2150002001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We sincerely thank the editors and the reviewers for their careful reading and thoughtful comments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hosseini, M.S.; Bejnordi, B.E.; Trinh, V.Q.H.; Chan, L.; Hasan, D.; Li, X.; Yang, S.; Kim, T.; Zhang, H.; Wu, T.; et al. Computational pathology: A survey review and the way forward. J. Pathol. Inform. 2024, 15, 100357. [Google Scholar] [CrossRef] [PubMed]

- Van der Laak, J.; Litjens, G.; Ciompi, F. Deep learning in histopathology: The path to the clinic. Nat. Med. 2021, 27, 775–784. [Google Scholar] [CrossRef] [PubMed]

- Pantanowitz, L.; Sharma, A.; Carter, A.B.; Kurc, T.; Sussman, A.; Saltz, J. Twenty years of digital pathology: An overview of the road travelled, what is on the horizon, and the emergence of vendor-neutral archives. J. Pathol. Inform. 2018, 9, 40. [Google Scholar] [CrossRef] [PubMed]

- Wang, D.; Khosla, A.; Gargeya, R.; Irshad, H.; Beck, A.H. Deep learning for identifying metastatic breast cancer. arXiv 2016, arXiv:1606.05718. [Google Scholar]

- Xu, H.; Usuyama, N.; Bagga, J.; Zhang, S.; Rao, R.; Naumann, T.; Wong, C.; Gero, Z.; González, J.; Gu, Y.; et al. A whole-slide foundation model for digital pathology from real-world data. Nature 2024, 630, 181–188. [Google Scholar] [CrossRef] [PubMed]

- Chen, R.J.; Ding, T.; Lu, M.Y.; Williamson, D.F.; Jaume, G.; Song, A.H.; Chen, B.; Zhang, A.; Shao, D.; Shaban, M.; et al. Towards a general-purpose foundation model for computational pathology. Nat. Med. 2024, 30, 850–862. [Google Scholar] [CrossRef] [PubMed]

- Ushakov, E.; Naumov, A.; Fomberg, V.; Vishnyakova, P.; Asaturova, A.; Badlaeva, A.; Tregubova, A.; Karpulevich, E.; Sukhikh, G.; Fatkhudinov, T. EndoNet: A Model for the Automatic Calculation of H-Score on Histological Slides. Informatics 2023, 10, 90. [Google Scholar] [CrossRef]

- Huang, Z.; Ding, Y.; Song, G.; Wang, L.; Geng, R.; He, H.; Du, S.; Liu, X.; Tian, Y.; Liang, Y.; et al. Bcdata: A large-scale dataset and benchmark for cell detection and counting. In Proceedings, Part V 23, Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, 4–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 289–298. [Google Scholar]

- Srinidhi, C.L.; Ciga, O.; Martel, A.L. Deep neural network models for computational histopathology: A survey. Med. Image Anal. 2021, 67, 101813. [Google Scholar] [CrossRef] [PubMed]

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Mitosis detection in breast cancer histology images with deep neural networks. In Proceedings, Part II 16, Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2013: 16th International Conference, Nagoya, Japan, 22–26 September 2013; Springer: Cham, Switzerland, 2013; pp. 411–418. [Google Scholar]

- Chen, H.; Dou, Q.; Wang, X.; Qin, J.; Heng, P. Mitosis detection in breast cancer histology images via deep cascaded networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar]

- Rao, S. Mitos-rcnn: A novel approach to mitotic figure detection in breast cancer histopathology images using region based convolutional neural networks. arXiv 2018, arXiv:1807.01788. [Google Scholar]

- Lv, G.; Wen, K.; Wu, Z.; Jin, X.; An, H.; He, J. Nuclei R-CNN: Improve mask R-CNN for nuclei segmentation. In Proceedings of the 2019 IEEE 2nd International Conference on Information Communication and Signal Processing (ICICSP), Weihai, China, 28–30 September 2019; pp. 357–362. [Google Scholar]

- Kainz, P.; Urschler, M.; Schulter, S.; Wohlhart, P.; Lepetit, V. You should use regression to detect cells. In Proceedings, Part III 18, Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 276–283. [Google Scholar]

- Guo, Y.; Stein, J.; Wu, G.; Krishnamurthy, A. Sau-net: A universal deep network for cell counting. In Proceedings of the 10th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Niagara Falls, NY, USA, 7–10 September 2019; pp. 299–306. [Google Scholar]

- Li, Y.; Zhang, X.; Chen, D. Csrnet: Dilated convolutional neural networks for understanding the highly congested scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1091–1100. [Google Scholar]

- Naumov, A.; Ushakov, E.; Ivanov, A.; Midiber, K.; Khovanskaya, T.; Konyukova, A.; Vishnyakova, P.; Nora, S.; Mikhaleva, L.; Fatkhudinov, T.; et al. EndoNuke: Nuclei detection dataset for estrogen and progesterone stained IHC endometrium scans. Data 2022, 7, 75. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597. [Google Scholar]

- Sirinukunwattana, K.; Raza, S.E.A.; Tsang, Y.W.; Snead, D.R.; Cree, I.A.; Rajpoot, N.M. Locality sensitive deep learning for detection and classification of nuclei in routine colon cancer histology images. IEEE Trans. Med. Imaging 2016, 35, 1196–1206. [Google Scholar] [CrossRef] [PubMed]

- Xie, Y.; Xing, F.; Shi, X.; Kong, X.; Su, H.; Yang, L. Efficient and robust cell detection: A structured regression approach. Med. Image Anal. 2018, 44, 245–254.

- Qu, H.; Wu, P.; Huang, Q.; Yi, J.; Yan, Z.; Li, K.; Riedlinger, G.M.; De, S.; Zhang, S.; Metaxas, D.N. Weakly supervised deep nuclei segmentation using partial points annotation in histopathology images. IEEE Trans. Med. Imaging 2020, 39, 3655–3666.

- Liang, D.; Xu, W.; Zhu, Y.; Zhou, Y. Focal inverse distance transform maps for crowd localization. IEEE Trans. Multimed. 2022, 25, 6040–6052.

- Li, B.; Chen, J.; Yi, H.; Feng, M.; Yang, Y.; Zhu, Q.; Bu, H. Exponential distance transform maps for cell localization. Eng. Appl. Artif. Intell. 2024, 132, 107948.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

- Zhang, C.; Chen, J.; Li, B.; Feng, M.; Yang, Y.; Zhu, Q.; Bu, H. Difference-deformable convolution with pseudo scale instance map for cell localization. IEEE J. Biomed. Health Inform. 2024, 28, 355–366.

- Bai, S.; He, Z.; Qiao, Y.; Hu, H.; Wu, W.; Yan, J. Adaptive dilated network with self-correction supervision for counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4594–4603.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Li, J.; Wu, J.; Qi, J.; Zhang, M.; Cui, Z. PGC-Net: A Novel Encoder-Decoder Network with Path Gradient Flow Control for Cell Counting. IEEE Access 2024, 12, 68847–68856.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. UNETR: Transformers for 3D medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.R.; Xu, D. Swin UNETR: Swin transformers for semantic segmentation of brain tumors in MRI images. In Proceedings of the International MICCAI Brainlesion Workshop; Springer: Cham, Switzerland, 2021; pp. 272–284.

- He, Y.; Nath, V.; Yang, D.; Tang, Y.; Myronenko, A.; Xu, D. SwinUNETR-V2: Stronger Swin transformers with stagewise convolutions for 3D medical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2023; pp. 416–426.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1106–1114.

- Islam, M.A.; Jia, S.; Bruce, N.D. How much position information do convolutional neural networks encode? arXiv 2020, arXiv:2001.08248.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021.

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling up your kernels to 31x31: Revisiting large kernel design in CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11963–11975.

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. CvT: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 22–31.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. ConvNeXt V2: Co-designing and scaling ConvNets with masked autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16133–16142.

- Cao, X.; Wang, Z.; Zhao, Y.; Su, F. Scale aggregation network for accurate and efficient crowd counting. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Cohen, J.P.; Boucher, G.; Glastonbury, C.A.; Lo, H.Z.; Bengio, Y. Count-ception: Counting by fully convolutional redundant counting. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 18–26.

- Vetvicka, V.; Fiala, L.; Garzon, S.; Buzzaccarini, G.; Terzic, M.; Laganà, A.S. Endometriosis and gynaecological cancers: Molecular insights behind a complex machinery. Menopause Rev. Menopauzalny 2021, 20, 201–206.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8024–8035.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).