Real-World Data and Real-World Evidence in Healthcare in the United States and Europe Union

Abstract

1. Introduction

“Real-world data (RWD) are data relating to patient health status and/or the delivery of health care routinely collected from a variety of sources. Examples of RWD include data derived from electronic health records, medical claims data, data from product or disease registries, and data gathered from other sources (such as digital health technologies) that can provide information regarding patient health status.”

United States (US) Food and Drug Administration (FDA) [5]

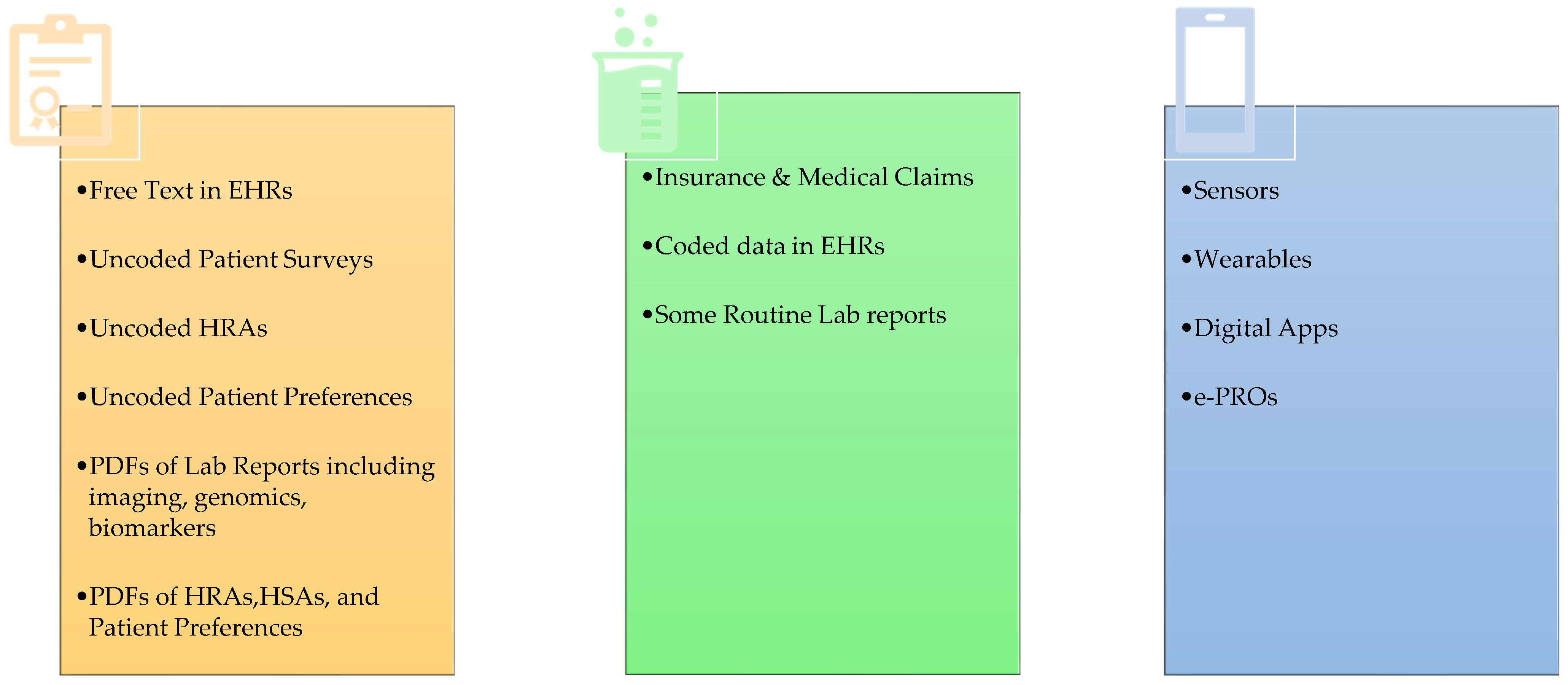

2. RWD Types and Use

3. Acceptability of RWD for Regulatory Decision-Making

| Dimensions | Concepts |
|---|---|
| Authenticity | |
| Transparency | |
| Relevancy | |
| Accuracy | |
| Track Record | |

| FDA Dimension | FDA Concepts | EMA Dimension | EMA Concepts | ATRAcTR Dimension |
|---|---|---|---|---|
| Data Reliability | Accuracy, Completeness, Provenance, Traceability | Data Reliability | Precision, Accuracy, Plausibility | Data Authenticity, Data Transparency, Data Accuracy |
| | | Data Extensiveness | Completeness, Coverage | |
| | | Data Coherence | Format, Structural, Semantic, Uniqueness, Conformance, Validity | |
| | | Data Timeliness | | |
| Data Relevance | Exposure, Outcomes, Adequate sample size | Data Relevance | | Data Relevance |
| Study Design | Employ causal inference framework | | | |
| | | | | Data Track Record |
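The frameworks compared above describe these concepts qualitatively. As a rough illustration of how a few of them might be turned into simple, reproducible checks on a single data extract, the Python sketch below computes proportions for completeness, uniqueness, conformance, and plausibility, plus a median event-to-extract lag as a timeliness proxy. Everything here is a hypothetical assumption made for illustration: the `Record` fields, the allowed sex codes, the 1900 lower bound for birth year, and the metric definitions themselves. None of these formulas is prescribed by the FDA, the EMA Data Quality Framework, or ATRAcTR.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional

# Hypothetical value set used only for the conformance check below.
ALLOWED_SEX_CODES = {"F", "M", "U"}


@dataclass
class Record:
    """Hypothetical, simplified RWD record (e.g., one row of an EHR extract)."""
    patient_id: str
    birth_year: Optional[int]
    sex: Optional[str]
    event_date: Optional[date]
    extract_date: date


def completeness(records: list[Record], field: str) -> float:
    """Share of records in which `field` is populated (not None)."""
    if not records:
        return 0.0
    return sum(getattr(r, field) is not None for r in records) / len(records)


def uniqueness(records: list[Record]) -> float:
    """Distinct patient IDs divided by record count (1.0 means no duplicates)."""
    if not records:
        return 0.0
    return len({r.patient_id for r in records}) / len(records)


def conformance(records: list[Record]) -> float:
    """Share of populated `sex` values that belong to the allowed value set."""
    values = [r.sex for r in records if r.sex is not None]
    if not values:
        return 0.0
    return sum(v in ALLOWED_SEX_CODES for v in values) / len(values)


def plausibility(records: list[Record], earliest_year: int = 1900) -> float:
    """Share of populated birth years between `earliest_year` and the extract year."""
    pairs = [(r.birth_year, r.extract_date.year) for r in records if r.birth_year is not None]
    if not pairs:
        return 0.0
    return sum(earliest_year <= born <= extracted for born, extracted in pairs) / len(pairs)


def median_lag_days(records: list[Record]) -> Optional[float]:
    """Median days from clinical event to data extract (a crude timeliness proxy)."""
    lags = [(r.extract_date - r.event_date).days for r in records if r.event_date is not None]
    return median(lags) if lags else None


def profile(records: list[Record]) -> dict[str, Optional[float]]:
    """Collect the illustrative checks into one summary."""
    return {
        "completeness_birth_year": completeness(records, "birth_year"),
        "uniqueness_patient_id": uniqueness(records),
        "conformance_sex": conformance(records),
        "plausibility_birth_year": plausibility(records),
        "median_lag_days": median_lag_days(records),
    }


if __name__ == "__main__":
    extract = date(2024, 7, 10)
    sample = [
        Record("p1", 1960, "F", date(2024, 6, 30), extract),
        Record("p2", None, "M", date(2024, 7, 1), extract),
        Record("p2", 2090, "X", None, extract),  # duplicate ID, implausible year, nonconforming code
        Record("p3", 1985, None, date(2024, 5, 31), extract),
    ]
    for name, value in profile(sample).items():
        print(f"{name}: {value}")
```

On the four sample records, birth-year completeness and patient-ID uniqueness both come out at 0.75 and the median lag is 10 days. Which checks matter, and what values count as acceptable, would depend on the data source and the question the RWE is meant to answer.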

4. System Interoperability and Data Privacy

5. Discussion

6. Conclusions and Future Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zou, K.H.; Li, J.Z.; Imperato, J.; Potkar, C.N.; Sethi, J.; Edward, J.; Ray, A. Harnessing real-world data for regulatory use and Applying Innovative Applications. J. Multidiscip. Healthc. 2020, 13, 671–679.

- Zou, K.H.; Li, J.Z.; Salem, L.A.; Imperato, J.; Edwards, J.; Ray, A. (Eds.) Harnessing real-world evidence to reduce the burden of noncommunicable disease: Health information technology and innovation to generate insights. Health Serv. Outcomes Res. Methodol. 2021, 21, 8–20.

- Zou, K.H.; Salem, L.A.; Ray, A. (Eds.) Real World Evidence in a Patient Centric Digital Era; Taylor & Francis: Boca Raton, FL, USA, 2021.

- Berger, M.L.; Ganz, P.A.; Zou, K.H.; Greenfield, S. When Will Real-World Data Fulfill Its Promise to Provide Timely Insights in Oncology? JCO Clin. Cancer Inform. 2024, 8, e2400039.

- U.S. Food & Drug Administration. Real-World Evidence. 2023. Available online: https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence (accessed on 10 July 2024).

- Concato, J.; Corrigan-Curay, J. Real-World Evidence—Where Are We Now? N. Engl. J. Med. 2022, 386, 1680–1682.

- U.S. Congress. H.R.34—21st Century Cures Act. 2016. Available online: https://www.congress.gov/bill/114th-congress/house-bill/34 (accessed on 10 July 2024).

- FAIR. FAIR Principles. 2024. Available online: https://www.go-fair.org/fair-principles (accessed on 10 July 2024).

- Zou, K.H.; Vigna, C.; Talwai, A.; Jain, R.; Galaznik, A.; Berger, M.L.; Li, J.Z. The Next Horizon of Drug Development: External Control Arms and Innovative Tools to Enrich Clinical Trial Data. Ther. Innov. Regul. Sci. 2024, 58, 443–455.

- Shah, K.; Patt, D.; Mullangi, S. Use of Tokens to Unlock Greater Data Sharing in Health Care. JAMA 2023, 330, 2333–2334.

- U.S. Food & Drug Administration. Use of Real-World Evidence to Support Regulatory Decision-Making for Medical Devices. 2017. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/use-real-world-evidence-support-regulatory-decision-making-medical-devices (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Use of Electronic Health Record Data in Clinical Investigations Guidance for Industry. 2018. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/use-electronic-health-record-data-clinical-investigations-guidance-industry (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Framework for FDA’s Real-World Evidence Program. 2018. Available online: https://www.fda.gov/media/120060/download?attachment (accessed on 10 July 2024).

- U.S. Food & Drug Administration. CVM GFI #266 Use of Real-World Data and Real-World Evidence to Support Effectiveness of New Animal Drugs. 2021. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/cvm-gfi-266-use-real-world-data-and-real-world-evidence-support-effectiveness-new-animal-drugs (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Real-World Data: Assessing Electronic Health Records and Medical Claims Data to Support Regulatory Decision-Making for Drug and Biological Products. 2021. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/real-world-data-assessing-electronic-health-records-and-medical-claims-data-support-regulatory (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Considerations for the Design and Conduct of Externally Controlled Trials for Drug and Biological Products. 2023. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/considerations-design-and-conduct-externally-controlled-trials-drug-and-biological-products (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Real-World Data: Assessing Registries to Support Regulatory Decision-Making for Drug and Biological Products. 2023. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/real-world-data-assessing-registries-support-regulatory-decision-making-drug-and-biological-products (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Data Standards for Drug and Biological Product Submissions Containing Real-World Data. 2023. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/data-standards-drug-and-biological-product-submissions-containing-real-world-data (accessed on 10 July 2024).

- U.S. Food & Drug Administration. Advancing Real-World Evidence Program. 2024. Available online: https://www.fda.gov/drugs/development-resources/advancing-real-world-evidence-program (accessed on 10 July 2024).

- European Commission. European Health Data Space. 2024. Available online: https://health.ec.europa.eu/ehealth-digital-health-and-care/european-health-data-space_en (accessed on 10 July 2024).

- European Medicines Agency. Reflection Paper on Use of Real-World Data in Non-Interventional Studies to Generate Real-World Evidence—Scientific Guideline. 2024. Available online: https://www.ema.europa.eu/en/reflection-paper-use-real-world-data-non-interventional-studies-generate-real-world-evidence-scientific-guideline (accessed on 10 July 2024).

- Kahn, M.G.; Callahan, T.J.; Barnard, J.; Bauck, A.E.; Brown, J.; Davidson, B.N.; Estiri, H.; Goerg, C.; Holve, E.; Johnson, S.G.; et al. A Harmonized Data Quality Assessment Terminology and Framework for the Secondary Use of Electronic Health Record Data. EGEMS 2016, 4, 1244.

- European Medicines Agency and Heads of Medicines Agencies. Data Quality Framework for EU Medicines Regulation. 2023. Available online: https://www.ema.europa.eu/en/documents/regulatory-procedural-guideline/data-quality-framework-eu-medicines-regulation_en.pdf (accessed on 10 July 2024).

- Mahendraratnam, N.; Silcox, C.; Mercon, K.; Kroetsch, A.; Romine, M.; Aten, A.; Sherman, R.; Daniel, G.; McClellan, M. Determining Real-World Data’s Fitness for Use and the Role of Reliability. Duke-Margolis Center for Health Policy. 2019. Available online: https://healthpolicy.duke.edu/sites/default/files/2019-11/rwd_reliability.pdf (accessed on 10 July 2024).

- Berger, M.L.; Crown, W.H.; Li, J.Z.; Zou, K.H. ATRAcTR (Authentic Transparent Relevant Accurate Track-Record): A screening tool to assess the potential for real-world data sources to support creation of credible real-world evidence for regulatory decision-making. Health Serv. Outcomes Res. Methodol. 2023.

- Wang, S.V.; Schneeweiss, S.; Franklin, J.M.; Desai, R.J.; Feldman, W.; Garry, E.M.; Glynn, R.J.; Lin, K.J.; Paik, J.; Patorno, E.; et al. Emulation of randomized clinical trials with nonrandomized database analyses: Results of 32 clinical trials. JAMA 2023, 329, 1376–1385, Erratum in JAMA 2024, 331, 1236.

- Wang, S.V.; Schneeweiss, S. Understanding the facets of emulating randomized clinical trials-reply. JAMA 2023, 330, 770–771.

- Schneeweiss, S.; Wang, S.V. Hypothetical assessments of trial emulations. JAMA Intern. Med. 2024, 184, 446.

- Desai, R.J.; Wang, S.V.; Sreedhara, S.K.; Zabotka, L.; Khosrow-Khavar, F.; Nelson, J.C.; Shi, X.; Toh, S.; Wyss, R.; Patorno, E.; et al. Process guide for inferential studies using healthcare data from routine clinical practice to evaluate causal effects of drugs (PRINCIPLED): Considerations from the FDA Sentinel Innovation Center. BMJ 2024, 384, e076460.

- Park, J.E.; Campbell, H.; Towle, K.; Yuan, Y.; Jansen, J.P.; Phillippo, D.; Cope, S. Unanchored population-adjusted indirect comparison methods for time-to-event outcomes using inverse odds weighting, regression adjustment, and doubly robust methods with either individual patient or aggregate data. Value Health 2024, 27, 278–286.

- Loh, W.W. Estimating Curvilinear Time-Varying Treatment Effects: Combining g-Estimation of Structural Nested Mean Models with Time-Varying Effect Models for Longitudinal Causal Inference. Psychol. Methods 2024. Advance online publication. Available online: https://psycnet.apa.org/record/2024-54079-001?doi=1 (accessed on 10 July 2024).

- HealthIT.gov. Building Data Infrastructure to Support Patient Centered Outcomes Research (PCOR): Common Data Model Harmonization. 2024. Available online: https://www.healthit.gov/topic/scientific-initiatives/pcor/common-data-model-harmonization-cdm (accessed on 10 July 2024).

- Observational Health Data Science and Informatics (OHDSI). Standardized Data: The OMOP Common Data Model. 2024. Available online: https://www.ohdsi.org/data-standardization (accessed on 10 July 2024).

- HL7 FHIR. HL7 FHIR Foundation Enabling Health Interoperability through FHIR. 2024. Available online: https://fhir.org (accessed on 10 July 2024).

- Integrating the Healthcare Enterprise (IHE) International. Making Healthcare Interoperable. 2024. Available online: https://www.ihe.net (accessed on 10 July 2024).

- U.S. Department of Health and Human Services. Health Information Privacy. 2024. Available online: https://www.hhs.gov/hipaa/index.html (accessed on 10 July 2024).

- GDPR.EU. What Is GDPR, the EU’s New Data Protection Law? 2024. Available online: https://gdpr.eu/what-is-gdpr (accessed on 10 July 2024).

- Rob Bonta, Attorney General. California Consumer Privacy Act (CCPA). 2024. Available online: https://oag.ca.gov/privacy/ccpa (accessed on 10 July 2024).

- California Privacy Protection Agency. The California Consumer Privacy Act. 2024. Available online: https://cppa.ca.gov/regulations (accessed on 10 July 2024).

- Casey, J.D.; Courtright, K.R.; Rice, T.W.; Semler, M.W. What can a learning healthcare system teach us about improving outcomes? Curr. Opin. Crit. Care 2021, 27, 527–536.

- Rajpurkar, P.; Chen, E.; Banerjee, O.; Topol, E.J. AI in health and medicine. Nat. Med. 2022, 28, 31–38.

- How to support the transition to AI-powered healthcare. Nat. Med. 2024, 30, 609–610.

- Silcox, C.; Zimlichmann, E.; Huber, K.; Rowen, N.; Saunders, R.; McClellan, M.; Kahn, C.N., 3rd; Salzberg, C.A.; Bates, D.W. The potential for artificial intelligence to transform healthcare: Perspectives from international health leaders. NPJ Digit. Med. 2024, 7, 88.

- Berger, M.L.; Sox, H.; Willke, R.J.; Brixner, D.L.; Eichler, H.G.; Goettsch, W.; Madigan, D.; Makady, A.; Schneeweiss, S.; Tarricone, R.; et al. Good Practices for Real-World Data Studies of Treatment and/or Comparative Effectiveness: Recommendations from the Joint ISPOR-ISPE Special Task Force on Real-World Evidence in Health Care Decision Making. Value Health 2017, 20, 1003–1008.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zou, K.H.; Berger, M.L. Real-World Data and Real-World Evidence in Healthcare in the United States and Europe Union. Bioengineering 2024, 11, 784. https://doi.org/10.3390/bioengineering11080784
