Abstract

Early detection of breast lesions and distinguishing between malignant and benign lesions are critical for breast cancer (BC) prognosis. Breast ultrasonography (BU) is an important radiological imaging modality for the diagnosis of BC. This study proposes a BU image-based framework for the diagnosis of BC in women. Various pre-trained networks are used to extract the deep features of the BU images. Ten wrapper-based optimization algorithms, namely the marine predators algorithm, generalized normal distribution optimization, slime mold algorithm, equilibrium optimizer (EO), manta-ray foraging optimization, atom search optimization, Harris hawks optimization, Henry gas solubility optimization, pathfinder algorithm, and poor and rich optimization, were employed to compute the optimal subset of deep features using a support vector machine classifier. Furthermore, a network selection algorithm was employed to determine the best pre-trained network. An online BU dataset was used to test the proposed framework. After comprehensive testing and analysis, the EO algorithm was found to produce the highest classification rate for each pre-trained model. It achieved the highest classification accuracy of 96.79% using a deep feature vector of only 562 features from the ResNet-50 model. The Inception-ResNet-v2 model achieved the second highest classification accuracy of 96.15% with the EO algorithm. Moreover, the results of the proposed framework are compared with those reported in the literature.

1. Introduction

Breast cancer (BC) is the most prevalent malignancy among women worldwide. According to the 2020 global cancer statistics published in 2021 [], approximately 2.3 million new cases of BC were reported, accounting for 11.7% of all new cancer cases. With 685,000 deaths, it was the fifth leading cause of cancer-related mortality worldwide. A good screening program can identify BC early, lower the risk of local and long-term recurrence, and improve the five-year survival rate []. In accordance with national and international standards, women aged 40–74 years should receive a mammogram every year []. However, in women with dense breasts, mammography has relatively high false-positive and false-negative rates, which increases the likelihood of missed diagnoses. Breast ultrasonography (BU) is a complementary diagnostic technique that is not limited by the density of glandular breast tissue []. It is particularly suitable for Asian women with dense breasts, as it increases the detection rate of BC by 17% and reduces unnecessary biopsies by 40% []. Along with mammography, clinical examination, and needle biopsy, BU is crucial for the assessment of breast diseases. Compared with other methods, BU has the advantages of being noninvasive, radiation-free, and cost-effective []. However, BU diagnosis depends heavily on the sonographer’s experience [,]. BU images frequently contain complex structures, including normal tissue, cysts, benign lesions, and malignant tumors. Distinguishing between these structures and precisely detecting probable cancers is difficult and requires expert knowledge and training.

Artificial intelligence (AI) and computer-aided methods have grown rapidly in recent years. Individualized analyses using AI can help doctors make better clinical decisions [,,,,]. Deep learning is a subfield of AI that enables high-throughput correlations between images and clinical data by automatically identifying image patterns [,,,]. Therefore, efficient computer-assisted techniques are necessary for the highly accurate automated identification of BC [,].

Rezaei [] recently reviewed various automatic and semiautomatic approaches to BC detection, segmentation, and classification. Kwon et al. [] analyzed the effectiveness of two- and three-view scan approaches and concluded that the two-view approach outperformed the three-view approach. In another study [], deep-learning networks were designed to distinguish between benign and malignant BU images; the developed model achieved an area under the curve (AUC) of 0.95. Zhuang et al. [] proposed an image fusion method to train pre-trained models to differentiate between benign and malignant BU images and reported a classification rate of 95.48%. Similarly, Zhang et al. [] utilized pre-trained deep-learning models to detect malignancies, fine-tuning the parameters with an optimization algorithm, and achieved a classification accuracy of 92.86%. Various pre-trained networks have also been used to classify BU images with high accuracy []. However, the primary drawback of these models is their long training time. In addition, these models were designed only for two-class problems (benign and malignant), whereas a practical model must distinguish among three classes of BU images (benign, malignant, and normal). To address this issue, the authors of one study designed a 3D deep-learning model and achieved a classification rate of 97.7%, albeit with a prolonged training time []. To reduce training time, conventional classifiers have also been trained on morphological features of BU images []. In a recent study [], deep features computed with the ResNet-101 architecture were used to train a support vector machine (SVM) model, with the AUC as the evaluation metric. However, that model could only categorize BU images into two classes.
In another study [], the filter-based ReliefF algorithm was used to remove redundant deep features extracted from DenseNet-201 and MobileNet-v2 before training an SVM model for the three-class problem. The authors reported accuracies of 94.57% and 90.39% for the augmented and original datasets, respectively. However, filter approaches select features based on their statistical relevance to the dependent variable rather than on classifier performance. Because the selected features are not linked to model performance, a threshold must often be chosen to exclude irrelevant features. Therefore, further research is required to design a robust and highly accurate model with short training times for classifying BU images.

This study proposes a BU image-based framework for the diagnosis of BC in women. The highlights of the proposed methodology are as follows.

- Various pre-trained deep-learning models were utilized to compute the deep features of the BU images.

- Ten wrapper-based optimization algorithms were employed to compute the optimal subset of deep features: the marine predators algorithm (MPA), generalized normal distribution optimization (GNDO), slime mold algorithm (SMA), equilibrium optimizer (EO), manta-ray foraging optimization (MRFO), atom search optimization (ASO), Harris hawks optimization (HHO), Henry gas solubility optimization (HGSO), pathfinder algorithm (PFA), and poor and rich optimization (PRO).

- An SVM-based cost function was used to evaluate the candidate feature subsets, and the SVM classified the BU images into three classes (benign, malignant, and normal).

- Furthermore, a network selection algorithm was employed to determine the best deep-learning network.

- An online BU dataset was used to test the proposed methodology. Moreover, the findings of the proposed methodology are compared with those in the literature.

2. Methods and Materials

2.1. Breast Ultrasonography (BU) Dataset

This study used an online collection of BU images as the dataset []. The collection comprised 780 BU images from 600 women aged 25–75 years. Table 1 presents further information regarding the dataset.

Table 1.

Information on the online BU dataset [].

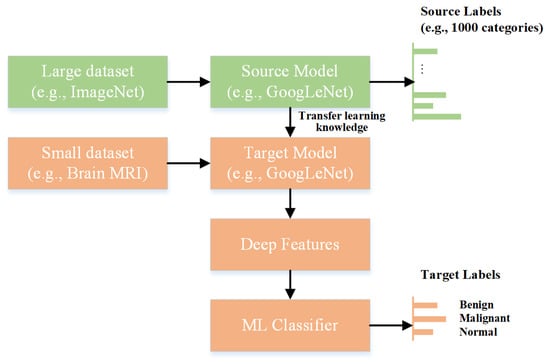

2.2. Deep Feature Extraction Using a Pre-Trained Convolutional Neural Network

The term “features” refers to the various characteristics that distinguish between different image classes. Selecting the attributes that differ most between classes can considerably improve classification accuracy. Extracting useful characteristics from images is a key operation that can be performed manually or with a convolutional neural network (CNN). Manual feature extraction is time-consuming, and its accuracy depends on image diversity. By contrast, a CNN is a type of deep neural network built from convolutional, pooling, and fully connected layers that learns features automatically. CNNs achieve outstanding accuracy when trained on large datasets; however, when training data are limited, pre-trained networks can be used as feature extractors instead. Pre-trained models have been utilized for image classification in various medical imaging applications [,]. Figure 1 shows the concept of deep feature extraction using a pre-trained model (GoogLeNet).

Figure 1.

Extraction of deep features using a pre-trained deep-learning model.

Deep features are obtained by passing an image through a pre-trained network and reading out the activations of a chosen layer. Classical machine-learning models can then classify images using these extracted deep features.
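As a rough illustration of the idea, the sketch below uses a tiny, hypothetical two-layer network with fixed weights as a stand-in for a pre-trained CNN. The activations of its hidden (penultimate) layer play the role of deep features and are fed to a classical classifier. A nearest-centroid classifier replaces the SVM only to keep the example dependency-free; the weights, layer sizes, data, and function names are illustrative assumptions, not part of the study.

```python
import math

# Hypothetical frozen weights standing in for a pre-trained network:
# layer 1 maps a 3-value "image" to 4 hidden units, layer 2 maps those to 2 class scores.
W1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.6, 0.1, 0.9], [0.2, 0.2, 0.2]]
W2 = [[1.0, -1.0, 0.5, 0.0], [-0.5, 1.0, 0.0, 0.5]]


def matvec(w, x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def deep_features(x):
    """Penultimate-layer (ReLU) activations: the 'deep features' of input x."""
    return [max(0.0, v) for v in matvec(W1, x)]


def class_probabilities(x):
    """Full forward pass with softmax, shown only to mark where features are tapped."""
    z = matvec(W2, deep_features(x))
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def fit_centroids(xs, labels):
    """Nearest-centroid classifier trained on deep features (a stand-in for the SVM)."""
    sums, counts = {}, {}
    for x, label in zip(xs, labels):
        f = deep_features(x)
        acc = sums.setdefault(label, [0.0] * len(f))
        for k, v in enumerate(f):
            acc[k] += v
        counts[label] = counts.get(label, 0) + 1
    return {c: [v / counts[c] for v in s] for c, s in sums.items()}


def predict(centroids, x):
    f = deep_features(x)
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(f, centroids[c])))


xs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0], [0.1, 0.0, 0.9]]
labels = ["benign", "benign", "malignant", "malignant"]
model = fit_centroids(xs, labels)
print(predict(model, [1.0, 0.0, 0.0]))
```

The classifier never sees the softmax output; it works only on the tapped activations, which is what allows any classical model to be swapped in.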

The following pre-trained networks were used in this study: DarkNet-19, DarkNet-53, DenseNet-201, EfficientNet-b0, GoogLeNet365, GoogLeNet, Inception-ResNet-v2, Inception-v3, MobileNet-v2, NASNet-Mobile, ResNet-101, ResNet-50, ResNet-18, ShuffleNet, SqueezeNet, and Xception. These models have distinctive qualities and capture various image characteristics, including local and global patterns. These models are useful for a variety of computer vision tasks, such as object detection, image segmentation, and image retrieval.

2.3. Optimal Feature Selection Using a Wrapper-Based Approach

Feature selection is crucial in many machine-learning applications because it directly affects model accuracy. Recognizing and using appropriate features that effectively define and categorize objects is critical. By contrast, incorporating irrelevant characteristics may diminish the accuracy. Consequently, determining the most valuable attributes and features is essential for improving the classification accuracy of the model.

The feature selection approach chooses an optimal subset of features from a larger collection to build the learning model; the quality of each candidate subset is then evaluated using a specified criterion []. Feature selection methods are commonly grouped into filter-based, wrapper-based, and embedded approaches []. These techniques not only improve classification accuracy but also reduce model complexity, leading to faster processing.

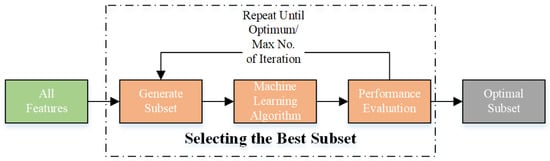

In this study, wrapper-based techniques were used to select the optimal feature subset for training the model. Wrapper methods evaluate a feature subset by the performance it yields with a particular learning model, around which the search is “wrapped” []. The algorithm evaluates the effect of the selected feature subset on model accuracy and, based on the result, either retains the current subset or searches for a better one. This process is repeated until a stopping criterion, such as a maximum number of iterations, is reached. Figure 2 illustrates the operation of the wrapper-based method. In this study, metaheuristic algorithms served as the search strategy within the wrapper.
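The loop in Figure 2 can be sketched generically as follows. This is a minimal bit-flip hill climber, not any of the ten metaheuristics used in this study; `evaluate` is a hypothetical callback that would train and validate the classifier on the masked features and return a cost (lower is better).

```python
import random


def wrapper_select(n_features, evaluate, max_iter=100, seed=0):
    """Generic wrapper loop: propose a feature subset, score it with the model, keep improvements.

    `evaluate(mask)` is a hypothetical callback that trains and validates a classifier on the
    features where mask[i] is True and returns a cost (lower is better).
    """
    rng = random.Random(seed)
    best = [rng.random() < 0.5 for _ in range(n_features)]
    if not any(best):                              # never start from an empty subset
        best[rng.randrange(n_features)] = True
    best_cost = evaluate(best)
    for _ in range(max_iter):
        candidate = best[:]
        j = rng.randrange(n_features)
        candidate[j] = not candidate[j]            # add or drop one feature
        if not any(candidate):
            continue                               # an empty subset cannot train a model
        cost = evaluate(candidate)
        if cost < best_cost:                       # keep the subset only if the model does better
            best, best_cost = candidate, cost
    return best, best_cost


# Toy usage: pretend features 0 and 2 are the informative ones.
target = [True, False, True, False, False, False]
mask, cost = wrapper_select(6, lambda m: sum(a != b for a, b in zip(m, target)), max_iter=300)
print(mask, cost)
```

A metaheuristic replaces the single bit flip with its own population-based move, but the evaluate-and-keep structure stays the same.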

Figure 2.

Workflow for the wrapper-based approach.

Metaheuristic methods seek approximate solutions to complex optimization problems. They are referred to as “meta” because they coordinate several low-level heuristics to handle high-level optimization tasks []. The MPA, GNDO, SMA, EO, MRFO, ASO, HHO, HGSO, PFA, and PRO are examples of metaheuristic algorithms. In the wrapper framework, a learning algorithm assesses the performance of each generated feature subset, and the metaheuristic serves as the search algorithm that proposes new candidate subsets []. Equation (1) specifies the cost function used by all the optimization algorithms.

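$$\mathrm{Cost} = \alpha \cdot ER + \beta \cdot \frac{|S|}{|D|} \tag{1}$$

where ER is the classification error rate of the SVM on the selected features, |S| is the number of selected features, |D| is the total number of features, and α and β are the coefficients of each criterion [].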
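Assuming Equation (1) takes the weighted form common in wrapper feature selection (classification error plus a penalty on the fraction of selected features), with the weights 0.99 and 0.01 given in Section 4, the cost can be computed as below. The error rate would come from the SVM; the numbers in the usage lines are hypothetical.

```python
def wrapper_cost(error_rate, n_selected, n_total, alpha=0.99, beta=0.01):
    """Assumed form of Equation (1): weighted error plus weighted fraction of selected features."""
    if not 0 < n_selected <= n_total:
        raise ValueError("n_selected must be between 1 and n_total")
    return alpha * error_rate + beta * n_selected / n_total


# Hypothetical numbers: 5% error with 100 of 1000 features versus 500 of 1000 features.
print(wrapper_cost(0.05, 100, 1000))
print(wrapper_cost(0.05, 500, 1000))
```

With equal error, the smaller subset receives the lower cost, so the small second weight mainly steers the search toward compact feature vectors when error rates are similar.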

2.3.1. Marine Predators Algorithm (MPA)

MPA is a nature-inspired metaheuristic based on the optimal foraging strategies and encounter rates between predators and prey in marine ecosystems [,]. Its search alternates between the Lévy and Brownian movements that marine predators are observed to use when foraging. The optimization process is divided into three phases according to the velocity ratio between predator and prey. In the first phase (high velocity ratio), the prey moves faster than the predator and Brownian movement drives exploration. In the second phase (unit velocity ratio), half of the population explores with Brownian movement while the other half exploits with Lévy movement. In the final phase (low velocity ratio), the predator moves faster and Lévy movement drives exploitation. Environmental effects, such as eddy formation and fish aggregating devices, are also modeled to help the search escape local optima.
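The iteration schedule that separates these phases can be sketched as below, following our reading of the original MPA formulation: the first third of the iterations uses Brownian moves, the middle third mixes Brownian and Lévy moves, and the final third uses Lévy moves, while an adaptive factor CF shrinks the step size. The Lévy step uses Mantegna's algorithm with β = 1.5, a common choice that we assume here; function names are illustrative.

```python
import math
import random


def mpa_phase(it, max_iter):
    """Movement regime at iteration `it` (0-based) in the three-phase MPA schedule."""
    if it < max_iter / 3:
        return "brownian"   # high velocity ratio: exploration
    if it < 2 * max_iter / 3:
        return "mixed"      # unit velocity ratio: half the population Levy, half Brownian
    return "levy"           # low velocity ratio: exploitation


def adaptive_cf(it, max_iter):
    """Adaptive step-size factor CF = (1 - it/max_iter) ** (2 * it/max_iter)."""
    t = it / max_iter
    return (1 - t) ** (2 * t)


def levy_step(rng, beta=1.5):
    """One Levy-distributed step generated with Mantegna's algorithm."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.gauss(0, sigma)
    v = rng.gauss(0, 1)
    return u / abs(v) ** (1 / beta)


rng = random.Random(1)
print([mpa_phase(i, 9) for i in range(9)], adaptive_cf(50, 100), levy_step(rng))
```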

2.3.2. Generalized Normal Distribution Optimization (GNDO)

GNDO is a population-based metaheuristic inspired by the generalized normal distribution model []. Each individual's position is treated as a random variable drawn from a normal distribution whose mean and standard deviation are computed from the individual's current position, the best position found so far, and the mean position of the population. As the search proceeds, the mean moves toward the best solution and the standard deviation shrinks, so the population gradually concentrates around promising regions []. GNDO combines this local exploitation strategy with a global exploration strategy, in which each individual moves along directions defined by randomly selected members of the population.
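A minimal sketch of the GNDO local-exploitation move, as we understand it from the original formulation, is shown below: the mean μ is the average of the individual, the best solution, and the population mean, and δ is the spread of those three points around μ. Drawing the random terms once per individual is a simplification, and the function name is hypothetical. A full implementation would also include the global-exploration move and keep the new position only if it lowers the cost.

```python
import math
import random


def gndo_local_step(x_i, x_best, population, rng):
    """GNDO local-exploitation move for one individual: mu + delta * eta per dimension."""
    dim = len(x_i)
    mean_pos = [sum(p[d] for p in population) / len(population) for d in range(dim)]
    a, b = rng.random(), rng.random()
    lam1 = 1.0 - rng.random()            # in (0, 1], so log(lam1) is defined
    lam2 = rng.random()
    shift = 0.0 if a <= b else math.pi
    eta = math.sqrt(-math.log(lam1)) * math.cos(2 * math.pi * lam2 + shift)
    new = []
    for d in range(dim):
        mu = (x_i[d] + x_best[d] + mean_pos[d]) / 3
        delta = math.sqrt(((x_i[d] - mu) ** 2 + (x_best[d] - mu) ** 2
                           + (mean_pos[d] - mu) ** 2) / 3)
        new.append(mu + delta * eta)
    return new


pop = [[0.2, 0.8], [0.6, 0.4], [0.9, 0.1]]
print(gndo_local_step(pop[0], pop[2], pop, random.Random(0)))
```

When the individual, the best solution, and the population mean coincide, δ is zero and the move leaves the position unchanged, which reflects the converged state.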

2.3.3. Slime Mold Algorithm (SMA)

SMA is a computational optimization approach inspired by the oscillatory foraging behavior of slime molds []. Slime molds are single-celled organisms capable of self-organization and emergent behavior, which allows them to solve challenging problems, such as finding the shortest route between food sources []. The SMA models three behaviors: approaching food, wrapping food, and grasping food through oscillation []. Each individual's position is updated relative to the best solution found so far, with a step scaled by a fitness-based weight that mimics the positive and negative feedback between the slime mold's vein width and the food concentration it senses. Oscillation parameters that shrink over the iterations shift the search from exploration to exploitation, and a small probability of random relocation helps the population escape local optima. The SMA has several reported benefits, including versatility in solving continuous and discrete optimization problems, parallelizability, and robustness in challenging and dynamic problem domains.
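The two core SMA computations, the fitness-based weight W and the position update, can be sketched as below. This is a simplified reading of the original algorithm: W is drawn once per individual rather than per dimension, z = 0.03 is an assumed relocation probability, and all function names are illustrative.

```python
import math
import random


def sma_weights(fitness, rng):
    """Fitness-based weights W (minimization): better half >= 1, worse half <= 1."""
    order = sorted(range(len(fitness)), key=lambda i: fitness[i])   # best first
    best, worst = fitness[order[0]], fitness[order[-1]]
    span = (best - worst) or -1e-12          # negative; guards against all-equal fitness
    w = [0.0] * len(fitness)
    for rank, i in enumerate(order):
        term = rng.random() * math.log((best - fitness[i]) / span + 1)
        w[i] = 1 + term if rank < len(fitness) / 2 else 1 - term
    return w


def sma_update(x, x_best, x_a, x_b, w_i, fit_i, best_ever, t, max_t, lb, ub, rng, z=0.03):
    """Position update for one slime mold; x_a and x_b are two randomly chosen individuals."""
    if rng.random() < z:                                  # random relocation
        return [rng.uniform(lb, ub) for _ in x]
    a = math.atanh(1 - t / max_t)                         # requires 1 <= t <= max_t
    b = 1 - t / max_t
    p = math.tanh(abs(fit_i - best_ever))
    new = []
    for d in range(len(x)):
        if rng.random() < p:                              # approach food guided by the best
            vb = rng.uniform(-a, a)
            new.append(x_best[d] + vb * (w_i * x_a[d] - x_b[d]))
        else:                                             # oscillate around the current position
            vc = rng.uniform(-b, b)
            new.append(vc * x[d])
    return new


rng = random.Random(0)
w = sma_weights([3.0, 1.0, 2.0, 4.0], rng)
print(w, sma_update([0.2, 0.4, 0.6], [0.5, 0.5, 0.5], [0.1, 0.2, 0.3], [0.3, 0.2, 0.1],
                    w[0], 3.0, 1.0, 1, 10, 0.0, 1.0, rng))
```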

2.3.4. Equilibrium Optimizer (EO)

EO is a physics-based metaheuristic proposed by Faramarzi et al. [,], inspired by control-volume mass-balance models used to estimate dynamic and equilibrium states. The optimization problem is represented as a population of particles, each corresponding to a candidate solution whose position is treated as a concentration. EO proceeds through initialization, equilibrium-pool construction, and concentration (position) update. The initial particles are randomly generated in the search space. The equilibrium pool is then formed from the four best particles found so far and their average, which serve as equilibrium candidates. During the concentration update, each particle moves relative to a candidate chosen at random from the pool; an exponential term balances exploration and exploitation, and a generation-rate term further refines the solution. Repeating these steps drives the population toward an equilibrium state that corresponds to the best solution found.
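The EO concentration update can be sketched as below, assuming the commonly reported settings a1 = 2, a2 = 1, GP = 0.5, and V = 1; these may differ from the settings actually used in this study (Table 2). The `to_mask` helper shows one assumed way of turning a continuous position into a feature subset (thresholding at 0.5); the text does not state the rule used, and all names are hypothetical.

```python
import math
import random


def equilibrium_pool(population, fitness):
    """Four best particles (minimization) plus their average form the equilibrium pool."""
    best4 = [population[i] for i in sorted(range(len(population)), key=lambda i: fitness[i])[:4]]
    average = [sum(col) / len(best4) for col in zip(*best4)]
    return best4 + [average]


def eo_update(c, pool, it, max_it, rng, a1=2.0, a2=1.0, gp=0.5, v=1.0):
    """One EO concentration update for particle c."""
    c_eq = rng.choice(pool)                        # equilibrium candidate for this particle
    t = (1 - it / max_it) ** (a2 * it / max_it)    # time term shrinks over the iterations
    r1, r2 = rng.random(), rng.random()
    gcp = 0.5 * r1 if r2 >= gp else 0.0            # generation-rate control parameter
    new = []
    for d in range(len(c)):
        lam = 1.0 - rng.random()                   # turnover rate in (0, 1], avoids division by zero
        f = a1 * math.copysign(1.0, rng.random() - 0.5) * (math.exp(-lam * t) - 1)
        g = gcp * (c_eq[d] - lam * c[d]) * f       # generation rate
        new.append(c_eq[d] + (c[d] - c_eq[d]) * f + g / (lam * v) * (1 - f))
    return new


def to_mask(position, threshold=0.5):
    """Assumed rule for turning a continuous position into a feature subset."""
    return [p > threshold for p in position]


pop = [[0.1, 0.9], [0.4, 0.6], [0.8, 0.2], [0.3, 0.3], [0.5, 0.5]]
fit = [0.5, 0.2, 0.9, 0.1, 0.4]
pool = equilibrium_pool(pop, fit)
print(to_mask(eo_update(pop[0], pool, 10, 100, random.Random(0))))
```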

2.3.5. Manta-Ray Foraging Optimization (MRFO)

MRFO is a nature-inspired metaheuristic based on the foraging behavior of manta rays, which are known for their effective foraging strategies []. Candidate solutions are represented as a population of manta rays, each corresponding to one candidate solution []. First, an initial population of rays is randomly generated within the search space. The rays then update their positions using three foraging strategies: chain foraging, in which each ray follows the ray in front of it and the best solution found so far; cyclone foraging, in which the rays spiral toward the food source (or, for exploration, toward a random position); and somersault foraging, in which each ray flips to a new position mirrored around the best solution. A fitness evaluation determines the quality of each ray’s position and guides the balance between exploration and exploitation.
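Two of the three MRFO moves, chain foraging and somersault foraging, are sketched below (cyclone foraging is omitted for brevity). The formulas follow our reading of the original MRFO paper, with a somersault factor of 2; treat the names and details as illustrative rather than as the implementation used in this study.

```python
import math
import random


def mrfo_chain(pop, best, rng):
    """Chain foraging: each ray follows the ray ahead of it and the best solution."""
    new = []
    for i, x in enumerate(pop):
        ahead = best if i == 0 else pop[i - 1]
        row = []
        for d in range(len(x)):
            r = 1.0 - rng.random()                        # in (0, 1], avoids log(0)
            alpha = 2 * r * math.sqrt(abs(math.log(r)))
            row.append(x[d] + r * (ahead[d] - x[d]) + alpha * (best[d] - x[d]))
        new.append(row)
    return new


def mrfo_somersault(pop, best, rng, s=2.0):
    """Somersault foraging: each ray flips to a point mirrored around the best solution."""
    return [[x[d] + s * (rng.random() * best[d] - rng.random() * x[d]) for d in range(len(x))]
            for x in pop]


rng = random.Random(0)
pop = [[0.2, 0.8], [0.6, 0.4], [0.9, 0.1]]
print(mrfo_chain(pop, [0.5, 0.5], rng), mrfo_somersault(pop, [0.5, 0.5], rng))
```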

2.3.6. Atom Search Optimization (ASO)

ASO is a recently developed physics-inspired metaheuristic designed to address a wide range of optimization problems []. It is motivated by basic molecular dynamics: ASO mathematically models the motion of atoms in nature, in which atoms interact through interaction forces derived from the Lennard–Jones potential and through constraint forces arising from the bond-length potential. The ASO is simple to implement. Further details on the algorithm can be found in [,].
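For reference, the physical Lennard–Jones potential that motivates ASO, U(r) = 4ε[(σ/r)^12 − (σ/r)^6], and its radial force are computed below. ASO uses a modified, scaled form of this interaction inside the search, so this sketch only illustrates the underlying physics: atoms repel at short range and attract at longer range, with zero force at r = 2^(1/6)σ.

```python
def lj_potential(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones potential U(r) = 4*eps*((sigma/r)**12 - (sigma/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 4 * epsilon * (sr6 * sr6 - sr6)


def lj_force(r, epsilon=1.0, sigma=1.0):
    """Radial force -dU/dr: positive means repulsion, negative means attraction."""
    sr6 = (sigma / r) ** 6
    return 24 * epsilon / r * (2 * sr6 * sr6 - sr6)


r_min = 2 ** (1 / 6)          # equilibrium distance for sigma = 1
print(lj_potential(r_min), lj_force(0.9), lj_force(1.5))
```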

2.3.7. Harris Hawks Optimization (HHO)

Heidari et al. [] proposed the HHO algorithm in 2019. It is a nature-inspired, population-based optimization algorithm that models the cooperative hunting behavior of the Harris’s hawk, a raptor species. In the wild, several Harris’s hawks cooperatively attack a prey (typically a rabbit) from different directions, a tactic known as the surprise pounce, and adapt their chasing patterns to the prey’s escape behavior. HHO represents the hawks as candidate solutions and the prey as the best solution found so far. The transition between exploration and exploitation is controlled by the prey’s escaping energy, which decreases over the iterations. When the energy is high, the hawks explore the search space by perching at random locations; when it is low, they exploit the neighborhood of the prey using four besiege strategies: soft besiege, hard besiege, and soft and hard besiege with progressive rapid dives, the latter based on Lévy flights. Numerous optimization problems, such as numerical optimization, engineering design, and feature selection, have been addressed using HHO [].
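The energy-based switching described above can be sketched as follows. The escaping energy E = 2E0(1 − t/T), with E0 drawn from [−1, 1], and the |E| and r thresholds follow the original HHO formulation as we understand it; the position updates for each strategy are omitted, and the function names are illustrative.

```python
def escaping_energy(e0, t, max_t):
    """Prey escaping energy E = 2 * E0 * (1 - t / T), with E0 drawn from [-1, 1]."""
    return 2 * e0 * (1 - t / max_t)


def hho_strategy(energy, r):
    """Pick the HHO move from |E| and the escape-chance draw r in [0, 1)."""
    if abs(energy) >= 1:
        return "exploration"
    soft = abs(energy) >= 0.5
    if r >= 0.5:
        return "soft besiege" if soft else "hard besiege"
    return "soft besiege with rapid dives" if soft else "hard besiege with rapid dives"


for t in (0, 50, 90):
    print(t, hho_strategy(escaping_energy(0.8, t, 100), 0.7))
```

Because |E| shrinks linearly, late iterations can no longer trigger exploration, which is how HHO shifts from global search to local refinement.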

2.3.8. Henry Gas Solubility Optimization (HGSO)

HGSO is a novel metaheuristic algorithm that solves difficult optimization problems by mimicking the behavior governed by Henry’s law []. Henry’s law is a fundamental gas law stating that, at a constant temperature, the amount of a gas dissolved in a given type and volume of liquid is proportional to the partial pressure of that gas above the liquid. To balance exploitation and exploration in the search space and avoid local optima, the HGSO algorithm mimics the huddling behavior of gas. Further details regarding HGSO can be found in [].
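Henry’s law and HGSO’s temperature-dependent update of the Henry coefficient can be sketched as below. The update H(t+1) = H(t)·exp(−C·(1/T(t) − 1/Tθ)) with T(t) = exp(−t/iter) and Tθ = 298.15 K reflects our reading of the original HGSO paper and should be checked against it; cluster handling and the position update are omitted, and the names are illustrative.

```python
import math

T_THETA = 298.15  # reference temperature (K), as we read the HGSO paper


def henry_update(h, c, t, max_iter):
    """Update a cluster's Henry coefficient as the 'temperature' T(t) = exp(-t/max_iter) falls."""
    temperature = math.exp(-t / max_iter)
    return h * math.exp(-c * (1 / temperature - 1 / T_THETA))


def solubility(h, partial_pressure, k=1.0):
    """Henry's law: dissolved amount proportional to partial pressure, S = K * H * P."""
    return k * h * partial_pressure


h = 1.0
for t in range(1, 4):
    h = henry_update(h, 0.01, t, 100)
print(h, solubility(h, 0.5))
```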

2.3.9. Pathfinder Algorithm (PFA)

PFA is a metaheuristic optimization technique developed to address various optimization problems. It is inspired by the collective movement of animal groups and mimics the leadership hierarchy of a swarm searching for the best food area or prey []. The best individual acts as the pathfinder, which leads the group, whereas the remaining individuals act as followers. The pathfinder updates its position using its previous movement direction and a vibration term, and each follower moves under the combined influence of a neighboring member and the pathfinder, plus a random vibration that decreases over the iterations. This leader-follower structure balances exploration and exploitation. Further details regarding PFA can be found in [].
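The two PFA moves can be sketched as below: the pathfinder combines its previous step with a decaying vibration A, and each follower is pulled toward a neighbor and toward the pathfinder, plus a shrinking random vibration ε. The coefficient ranges (α and β in [1, 2]) follow our reading of the original PFA paper; drawing scalar rather than per-dimension random terms is a simplification, and the names are illustrative.

```python
import math
import random


def move_pathfinder(xp, xp_prev, k, k_max, rng):
    """Pathfinder move: previous direction plus a vibration A that decays with k."""
    a = rng.uniform(-1, 1) * math.exp(-2 * k / k_max)
    r3 = rng.random()
    return [p + 2 * r3 * (p - q) + a for p, q in zip(xp, xp_prev)]


def move_follower(xi, xj, xp, k, k_max, rng):
    """Follower move: attraction to neighbor xj and pathfinder xp plus a shrinking vibration."""
    alpha, beta = rng.uniform(1, 2), rng.uniform(1, 2)   # interaction / attraction coefficients
    r1, r2 = rng.random(), rng.random()
    eps = (1 - k / k_max) * rng.uniform(-1, 1) * math.dist(xi, xj)
    return [x + alpha * r1 * (y - x) + beta * r2 * (p - x) + eps for x, y, p in zip(xi, xj, xp)]


rng = random.Random(0)
print(move_pathfinder([0.5, 0.5], [0.4, 0.6], 1, 10, rng),
      move_follower([0.1, 0.9], [0.3, 0.7], [0.5, 0.5], 1, 10, rng))
```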

2.3.10. Poor and Rich Optimization (PRO)

The PRO algorithm is based on the interactions between the rich and the poor as they try to increase their wealth and improve their economic standing []. The rich always try to widen the wealth gap by gaining more wealth through various means, whereas the poor try to increase their wealth and narrow the gap by imitating the rich. This competition is continuous, and individuals can move between the rich and poor groups. In the algorithm, the population is sorted by fitness and divided into a rich half and a poor half. Each rich individual moves away from the best poor individual, and each poor individual moves toward a pattern derived from the best, average, and worst rich individuals; a mutation step adds further diversity. The population is then re-sorted so that individuals can change groups. Further details regarding PRO can be found in [].
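One PRO move, without the mutation step, can be sketched as below: the population is sorted and split in half, rich individuals move away from the best poor individual, and poor individuals move toward a pattern averaging the best, mean, and worst rich positions. The update rules reflect our reading of the original PRO paper; function and variable names are illustrative.

```python
import random


def pro_step(pop, fitness, rng):
    """One Poor-and-Rich Optimization move for a minimization problem (mutation omitted)."""
    order = sorted(range(len(pop)), key=lambda i: fitness[i])   # best first
    half = len(pop) // 2
    rich = [pop[i] for i in order[:half]]
    poor = [pop[i] for i in order[half:]]
    dim = len(pop[0])
    rich_mean = [sum(x[d] for x in rich) / len(rich) for d in range(dim)]
    pattern = [(rich[0][d] + rich_mean[d] + rich[-1][d]) / 3 for d in range(dim)]
    best_poor = poor[0]
    # Rich individuals widen the gap; poor individuals imitate the rich pattern.
    new_rich = [[x[d] + rng.random() * (x[d] - best_poor[d]) for d in range(dim)] for x in rich]
    new_poor = [[x[d] + rng.random() * (pattern[d] - x[d]) for d in range(dim)] for x in poor]
    return new_rich, new_poor


print(pro_step([[0.0], [1.0], [2.0], [3.0]], [0.0, 1.0, 2.0, 3.0], random.Random(0)))
```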

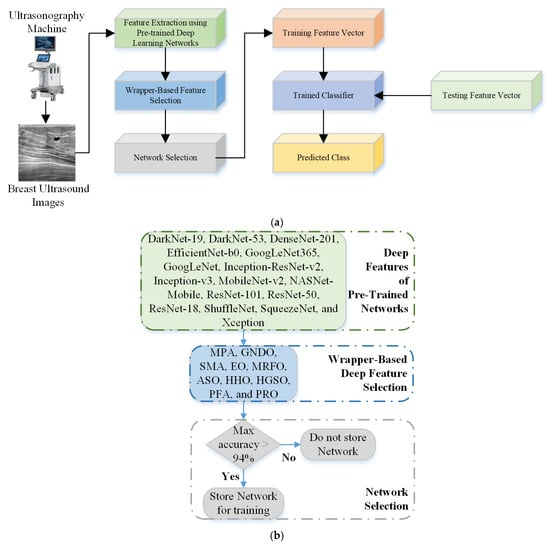

3. Proposed Framework for Breast Cancer Detection

BU is a reliable tool that is often used for diagnosis following an abnormal mammogram or clinical breast examination. It provides additional information to guide subsequent investigations such as fine-needle aspiration, core biopsy, or surgical excision. This study aims to develop an intelligent machine-learning model capable of detecting BC and classifying BU images into three classes (benign, malignant, and normal). After the images were acquired, pre-trained models were used to extract the deep features, as discussed in Section 2.2. Next, ten wrapper-based optimization algorithms (MPA, GNDO, SMA, EO, MRFO, ASO, HHO, HGSO, PFA, and PRO) were used to retrieve the most informative features, as discussed in Section 2.3. Finally, a network selection algorithm retained the networks whose optimized deep features achieved a classification accuracy above 94% and concatenated their selected features. A complete flowchart of the proposed framework is presented in Figure 3.

Figure 3.

(a) Proposed flowchart for the diagnosis of BC; (b) Network selection approach.

During the network selection phase, a deep-learning network was selected if an optimization algorithm achieved an accuracy above 94% using the deep features of that network alone. The classical machine-learning SVM model was then used for BC classification [,].
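The network-selection step can be sketched as follows: keep every network whose optimized deep features exceed the 94% accuracy threshold, then join the selected features of the kept networks sample by sample. The network names, accuracies, and feature values in the usage lines are hypothetical.

```python
def select_networks(results, threshold=94.0):
    """Keep networks whose optimized deep features exceed the accuracy threshold (%)."""
    return [name for name, acc in results.items() if acc > threshold]


def concatenate_features(feature_sets, networks):
    """Join, sample by sample, the selected deep features of the kept networks."""
    n = len(feature_sets[networks[0]])
    return [sum((feature_sets[net][i] for net in networks), []) for i in range(n)]


# Hypothetical accuracies and (already selected) features for two samples.
results = {"net_a": 96.0, "net_b": 93.5, "net_c": 94.5}
features = {"net_a": [[1, 2], [3, 4]], "net_b": [[9], [9]], "net_c": [[5], [6]]}
kept = select_networks(results)
print(kept, concatenate_features(features, kept))
```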

4. Results and Discussion

In this study, the proposed wrapper-based deep network selection method was employed to improve the classification of BU images for detecting and distinguishing BC types. An online BC dataset, described in Section 2.1, was used to test the proposed framework []. As previously discussed, the BU images of the BC subtypes were discriminated using the deep features of multiple pre-trained models, and the wrapper-based algorithms outlined above (MPA, GNDO, SMA, EO, MRFO, ASO, HHO, HGSO, PFA, and PRO) were used to retrieve the most important features. All processing and analyses were performed in MATLAB 2023a on a computer with an 11th Generation Intel(R) Core(TM) i7-10700 processor, 32 GB of RAM, a 1 TB SSD, and 64-bit Windows 11 Pro. A holdout validation approach with a ratio of 0.2 was used to divide the dataset into training and testing sets; the 20% test data were not used in training, so that performance was assessed on unseen images. The population size of each algorithm was set to 10, and the maximum number of iterations was set to 100. The weighting coefficients of the two criteria in Equation (1) were set to 0.99 and 0.01, respectively []. All other parameters of each optimization algorithm are listed in Table 2.

Table 2.

MPA, GNDO, SMA, EO, MRFO, ASO, HHO, HGSO, PFA, and PRO parameters.

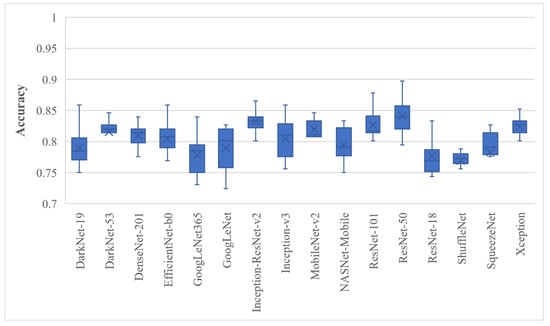

First, the deep features of all 16 models were extracted from the layer preceding the softmax layer and used to train the SVM model; the results are presented in Figure 4.

Figure 4.

BU image classification that used the full deep features of various pre-trained models trained with SVM; whiskers represent the range.

Figure 4 shows box plots of the classification accuracy obtained with the full feature sets of the pre-trained models. The BU dataset was randomly divided ten times in an 80:20 ratio, and the 20% test portion was not used for model training. As shown in Figure 4, the SVMs trained on ResNet-50 and Inception-ResNet-v2 features achieved the highest average classification accuracies of 84.17 ± 3.08% and 83.33 ± 2.79%, respectively. The proposed approach was then applied, and the results are shown in Figure 5.
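The repeated 80:20 holdout protocol and the mean ± SD summary can be sketched with the standard library as below. The `evaluate` callback (train on one index list, return test accuracy in percent) is hypothetical, and using the sample standard deviation is an assumption, since the text does not specify which form was used.

```python
import random
import statistics


def repeated_holdout(n_samples, evaluate, runs=10, test_fraction=0.2, seed=0):
    """Repeat a random train/test split; return mean and sample SD of the test scores."""
    rng = random.Random(seed)
    n_test = round(n_samples * test_fraction)
    scores = []
    for _ in range(runs):
        idx = list(range(n_samples))
        rng.shuffle(idx)
        test, train = idx[:n_test], idx[n_test:]   # the test indices never reach training
        scores.append(evaluate(train, test))
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical evaluator that just reports a fixed accuracy.
print(repeated_holdout(780, lambda train, test: 90.0))
```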

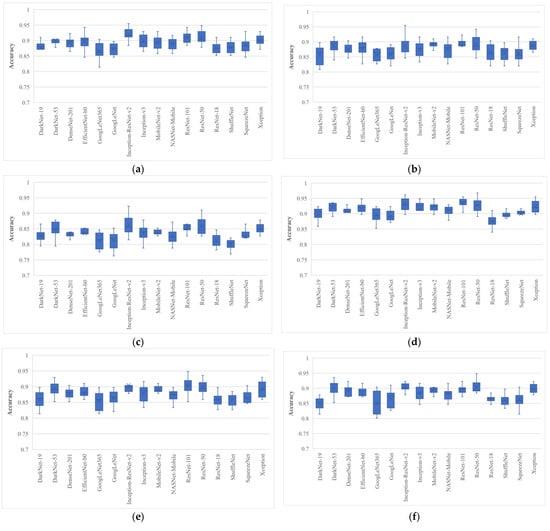

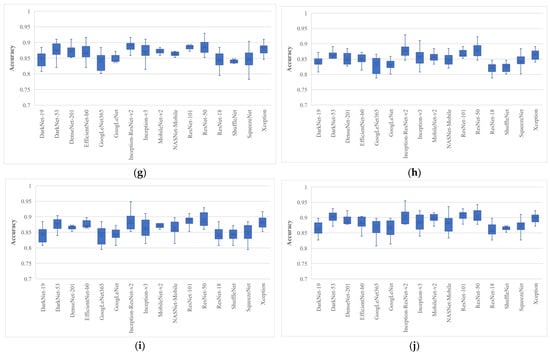

Figure 5.

Classification accuracy of various wrapper-based optimization approaches for BU images: (a) MPA; (b) GNDO; (c) SMA; (d) EO; (e) MRFO; (f) ASO; (g) HHO; (h) HGSO; (i) PFA; (j) PRO; whiskers represent the range.

The EO algorithm produced the highest classification accuracy, 96.79%, using only 562 ResNet-50 deep features; its lowest accuracy across the ten runs with ResNet-50 deep features was 89.1%, obtained with a 570-feature vector. Similarly, Inception-ResNet-v2 achieved the second highest single-run classification accuracy of 96.15%. Figure 5 also shows that all the pre-trained models achieved their best accuracies with the EO algorithm. The average classification accuracy and average number of features of each pre-trained model for each optimization algorithm are listed in Table 3 and Table 4, respectively.

Table 3.

Classification accuracy of various wrapper-based optimization algorithms with deep features of various pre-trained deep-learning models (data represented as the mean ± the standard deviation of ten runs).

Table 4.

Feature vector sizes of various wrapper-based optimization algorithms for deep features of various pre-trained deep-learning models (data represented as the mean ± the standard deviation of ten runs).

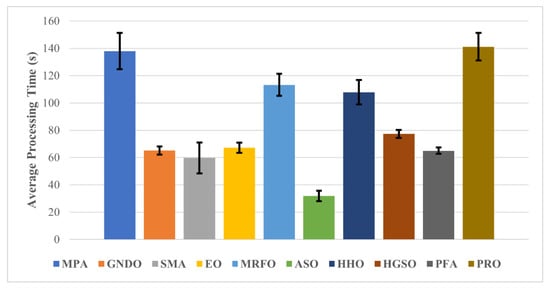

The average processing times of all algorithms for the pre-trained deep-learning model feature optimization are shown in Figure 6.

Figure 6.

Average processing time of each wrapper-based method.

Figure 6 shows that the ASO required the least computation time, only 31.79 ± 3.78 s, while maintaining a reasonable average classification accuracy of more than 88%. By contrast, the best optimization algorithm (EO) required less than 70 s to process the ResNet-50 deep features, with an average accuracy of 94.69 ± 2.48%. A comparison of the proposed approach with other BC detection approaches is presented in Table 5.

Table 5.

Comparison of the proposed approach with other BC detection approaches.

BU imaging is critical for evaluating breast lesions in patients with palpable lumps or breast discomfort. Mammography is widely considered the primary method for the early diagnosis of BC; however, its usefulness in young women with dense breast tissue is limited. Furthermore, because BU involves no ionizing radiation, it avoids the radiation exposure associated with mammography [].

BU imaging also offers comprehensive information on solid lesions. Cysts are the most common type of benign breast lesions in women and appear on BU images as anechoic, thin-walled, well-circumscribed lesions. In addition to being noninvasive, radiation-free, and cost-effective, BU imaging is well tolerated by patients. Deep-learning approaches have advanced to the point where they can assist in BC diagnosis [,,]. In one study [], the authors used the deep features of a pre-trained model and selected the optimal feature subset with the filter-based minimum-redundancy maximum-relevance method; the model achieved an accuracy of 95.6% on an augmented dataset. Similarly, Alduraibi [] applied the filter-based ReliefF method to determine the relevant features of a pre-trained model and achieved accuracies of 94.57% and 90.39% for the augmented and original datasets, respectively. In filter-based approaches, the optimal subset is selected based on its relevance to the dependent variable, and the machine-learning algorithm is not involved in feature selection; therefore, a favorable classification outcome cannot be guaranteed. By contrast, wrapper-based methods evaluate candidate features directly with a machine-learning model, which ties the selection to classification performance. A comparison with previously published works showed that the proposed approach achieved a higher classification rate (an increase of 1.05% over the study with the highest classification accuracy []). Therefore, the proposed BU framework may help practitioners detect BC quickly and effectively.

5. Conclusions

In this study, a wrapper-based BU image classification methodology was designed to improve BC detection in women. The deep features of 16 pre-trained models were extracted, and ten optimization algorithms (MPA, GNDO, SMA, EO, MRFO, ASO, HHO, HGSO, PFA, and PRO) were used with the SVM classifier to retrieve the optimal features. The optimization algorithms significantly improved the classification performance of each pre-trained model while reducing the size of the feature vector. A network selection strategy was used to determine the best features of the pre-trained networks. After comprehensive testing and analysis, the EO algorithm produced the highest classification rate for each pre-trained model. It achieved the highest classification accuracy of 96.79% using a deep feature vector of only 562 features from the ResNet-50 model. Similarly, Inception-ResNet-v2 achieved the second highest single-run classification accuracy of 96.15% using the EO algorithm. Therefore, the proposed BU framework may be helpful for automatic BC identification.

Author Contributions

Conceptualization, A.Z. and M.U.A.; Methodology, A.Z.; Software, A.Z.; Validation, J.T.; Formal analysis, J.T. and M.U.A.; Writing – original draft, A.Z. and J.T.; Writing – review & editing, M.U.A. and S.W.L.; Supervision, S.W.L.; Project administration, S.W.L.; Funding acquisition, S.W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MOE) (NRF2021R1I1A2059735) (S.W.L).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are included in the article.

Acknowledgments

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MOE) (NRF2021R1I1A2059735) (S.W.L).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA A Cancer J. Clin. 2021, 71, 209–249.

- Cedolini, C.; Bertozzi, S.; Londero, A.P.; Bernardi, S.; Seriau, L.; Concina, S.; Cattin, F.; Risaliti, A. Type of Breast Cancer Diagnosis, Screening, and Survival. Clin. Breast Cancer 2014, 14, 235–240.

- Ren, W.; Chen, M.; Qiao, Y.; Zhao, F. Global guidelines for breast cancer screening: A systematic review. Breast 2022, 64, 85–99.

- Osako, T.; Takahashi, K.; Iwase, T.; Iijima, K.; Miyagi, Y.; Nishimura, S.; Tada, K.; Makita, M.; Akiyama, F.; Sakamoto, G.; et al. diagnostic ultrasonography and mammography for invasive and noninvasive breast cancer in women aged 30 to 39 years. Breast Cancer 2007, 14, 229–233. [Google Scholar] [CrossRef] [PubMed]

- Niell, B.L.; Freer, P.E.; Weinfurtner, R.J.; Arleo, E.K.; Drukteinis, J.S. Screening for Breast Cancer. Radiol. Clin. N. Am. 2017, 55, 1145–1162. [Google Scholar] [CrossRef]

- Lee, J.M.; Arao, R.F.; Sprague, B.L.; Kerlikowske, K.; Lehman, C.D.; Smith, R.A.; Henderson, L.M.; Rauscher, G.H.; Miglioretti, D.L. Performance of Screening Ultrasonography as an Adjunct to Screening Mammography in Women Across the Spectrum of Breast Cancer Risk. JAMA Intern. Med. 2019, 179, 658–667. [Google Scholar] [CrossRef] [PubMed]

- Yap, M.H.; Pons, G.; Marti, J.; Ganau, S.; Sentis, M.; Zwiggelaar, R.; Davison, A.K.; Marti, R.; Moi Hoon, Y.; Pons, G.; et al. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018, 22, 1218–1226. [Google Scholar] [CrossRef]

- Berg, W.A.; Gutierrez, L.; NessAiver, M.S.; Carter, W.B.; Bhargavan, M.; Lewis, R.S.; Ioffe, O.B. Diagnostic Accuracy of Mammography, Clinical Examination, US, and MR Imaging in Preoperative Assessment of Breast Cancer. Radiology 2004, 233, 830–849. [Google Scholar] [CrossRef]

- Zafar, A.; Dad Kallu, K.; Atif Yaqub, M.; Ali, M.U.; Hyuk Byun, J.; Yoon, M.; Su Kim, K. A Hybrid GCN and Filter-Based Framework for Channel and Feature Selection: An fNIRS-BCI Study. Int. J. Intell. Syst. 2023, 2023, 8812844. [Google Scholar] [CrossRef]

- Ali, M.U.; Kallu, K.D.; Masood, H.; Tahir, U.; Gopi, C.V.V.M.; Zafar, A.; Lee, S.W. A CNN-Based Chest Infection Diagnostic Model: A Multistage Multiclass Isolated and Developed Transfer Learning Framework. Int. J. Intell. Syst. 2023, 2023, 6850772. [Google Scholar] [CrossRef]

- Ali, M.U.; Hussain, S.J.; Zafar, A.; Bhutta, M.R.; Lee, S.W. WBM-DLNets: Wrapper-Based Metaheuristic Deep Learning Networks Feature Optimization for Enhancing Brain Tumor Detection. Bioengineering 2023, 10, 475. [Google Scholar] [CrossRef] [PubMed]

- Zafar, A.; Hussain, S.J.; Ali, M.U.; Lee, S.W. Metaheuristic Optimization-Based Feature Selection for Imagery and Arithmetic Tasks: An fNIRS Study. Sensors 2023, 23, 3714. [Google Scholar] [CrossRef] [PubMed]

- Alanazi, M.F.; Ali, M.U.; Hussain, S.J.; Zafar, A.; Mohatram, M.; Irfan, M.; AlRuwaili, R.; Alruwaili, M.; Ali, N.H.; Albarrak, A.M. Brain Tumor/Mass Classification Framework Using Magnetic-Resonance-Imaging-Based Isolated and Developed Transfer Deep-Learning Model. Sensors 2022, 22, 372. [Google Scholar] [CrossRef] [PubMed]

- Almalki, Y.E.; Ali, M.U.; Ahmed, W.; Kallu, K.D.; Zafar, A.; Alduraibi, S.K.; Irfan, M.; Basha, M.A.A.; Alshamrani, H.A.; Alduraibi, A.K. Robust Gaussian and Nonlinear Hybrid Invariant Clustered Features Aided Approach for Speeded Brain Tumor Diagnosis. Life 2022, 12, 1084. [Google Scholar] [CrossRef]

- Almalki, Y.E.; Ali, M.U.; Kallu, K.D.; Masud, M.; Zafar, A.; Alduraibi, S.K.; Irfan, M.; Basha, M.A.A.; Alshamrani, H.A.; Alduraibi, A.K.; et al. Isolated Convolutional-Neural-Network-Based Deep-Feature Extraction for Brain Tumor Classification Using Shallow Classifier. Diagnostics 2022, 12, 1793. [Google Scholar] [CrossRef]

- Zahid, U.; Ashraf, I.; Khan, M.A.; Alhaisoni, M.; Yahya, K.M.; Hussein, H.S.; Alshazly, H. BrainNet: Optimal Deep Learning Feature Fusion for Brain Tumor Classification. Comput. Intell. Neurosci. 2022, 2022, 1465173. [Google Scholar] [CrossRef]

- Zhang, H.; Gao, Z.; Zhang, D.; Hau, W.K.; Zhang, H. Progressive Perception Learning for Main Coronary Segmentation in X-Ray Angiography. IEEE Trans. Med. Imaging 2023, 42, 864–879. [Google Scholar] [CrossRef]

- Xiao, T.; Liu, L.; Li, K.; Qin, W.; Yu, S.; Li, Z. Comparison of Transferred Deep Neural Networks in Ultrasonic Breast Masses Discrimination. BioMed Res. Int. 2018, 2018, 4605191.

- Radak, M.; Lafta, H.Y.; Fallahi, H. Machine learning and deep learning techniques for breast cancer diagnosis and classification: A comprehensive review of medical imaging studies. J. Cancer Res. Clin. Oncol. 2023.

- Rezaei, Z. A review on image-based approaches for breast cancer detection, segmentation, and classification. Expert. Syst. Appl. 2021, 182, 115204.

- Kwon, B.R.; Chang, J.M.; Kim, S.Y.; Lee, S.H.; Kim, S.-Y.; Lee, S.M.; Cho, N.; Moon, W.K. Automated Breast Ultrasound System for Breast Cancer Evaluation: Diagnostic Performance of the Two-View Scan Technique in Women with Small Breasts. Korean J. Radiol. 2020, 21, 25–32.

- Sun, Q.; Lin, X.; Zhao, Y.; Li, L.; Yan, K.; Liang, D.; Sun, D.; Li, Z.-C. Deep Learning vs. Radiomics for Predicting Axillary Lymph Node Metastasis of Breast Cancer Using Ultrasound Images: Don’t Forget the Peritumoral Region. Front. Oncol. 2020, 10.

- Zhuang, Z.; Yang, Z.; Raj, A.N.J.; Wei, C.; Jin, P.; Zhuang, S. Breast ultrasound tumor image classification using image decomposition and fusion based on adaptive multi-model spatial feature fusion. Comput. Methods Programs Biomed. 2021, 208, 106221.

- Zhang, X.; Li, H.; Wang, C.; Cheng, W.; Zhu, Y.; Li, D.; Jing, H.; Li, S.; Hou, J.; Li, J. Evaluating the accuracy of breast cancer and molecular subtype diagnosis by ultrasound image deep learning model. Front. Oncol. 2021, 11, 623506.

- Zhang, H.; Han, L.; Chen, K.; Peng, Y.; Lin, J. Diagnostic Efficiency of the Breast Ultrasound Computer-Aided Prediction Model Based on Convolutional Neural Network in Breast Cancer. J. Digit. Imaging 2020, 33, 1218–1223.

- Moon, W.K.; Huang, Y.-S.; Hsu, C.-H.; Chang Chien, T.-Y.; Chang, J.M.; Lee, S.H.; Huang, C.-S.; Chang, R.-F. Computer-aided tumor detection in automated breast ultrasound using a 3-D convolutional neural network. Comput. Methods Programs Biomed. 2020, 190, 105360.

- Nascimento, C.D.L.; Silva, S.D.d.S.; Silva, T.A.d.; Pereira, W.C.d.A.; Costa, M.G.F.; Costa, C.F.F. Breast tumor classification in ultrasound images using support vector machines and neural networks. Res. Biomed. Eng. 2016, 32, 283–292.

- Shia, W.-C.; Chen, D.-R. Classification of malignant tumors in breast ultrasound using a pretrained deep residual network model and support vector machine. Comput. Med. Imaging Graph. 2021, 87, 101829.

- Alduraibi, S.-K. A Novel Convolutional Neural Networks-Fused Shallow Classifier for Breast Cancer Detection. Intell. Autom. Soft Comput. 2022, 33, 1321–1334.

- Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief. 2020, 28, 104863.

- Baltruschat, I.M.; Nickisch, H.; Grass, M.; Knopp, T.; Saalbach, A. Comparison of Deep Learning Approaches for Multi-Label Chest X-ray Classification. Sci. Rep. 2019, 9, 6381.

- Kang, J.; Gwak, J. Ensemble of Instance Segmentation Models for Polyp Segmentation in Colonoscopy Images. IEEE Access 2019, 7, 26440–26447.

- Dash, M.; Liu, H. Feature selection for classification. Intell. Data Anal. 1997, 1, 131–156.

- Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- Kohavi, R.; John, G.H. Wrappers for feature subset selection. Artif. Intell. 1997, 97, 273–324.

- Abdel-Basset, M.; Abdel-Fatah, L.; Sangaiah, A.K. Metaheuristic algorithms: A comprehensive review. Comput. Intell. Multimed. Big Data Cloud Eng. Appl. 2018, 185–231.

- Liu, W.; Wang, J. A Brief Survey on Nature-Inspired Metaheuristics for Feature Selection in Classification in this Decade. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, ALB, Canada, 9–11 May 2019; pp. 424–429.

- Agrawal, P.; Abutarboush, H.F.; Ganesh, T.; Mohamed, A.W. Metaheuristic Algorithms on Feature Selection: A Survey of One Decade of Research (2009–2019). IEEE Access 2021, 9, 26766–26791.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert. Syst. Appl. 2020, 152, 113377.

- Rai, R.; Dhal, K.G.; Das, A.; Ray, S. An Inclusive Survey on Marine Predators Algorithm: Variants and Applications. Arch. Comput. Methods Eng. 2023, 30, 3133–3172.

- Zhang, Y.; Jin, Z.; Mirjalili, S. Generalized normal distribution optimization and its applications in parameter extraction of photovoltaic models. Energy Convers. Manag. 2020, 224, 113301.

- Vega-Forero, J.A.; Ramos-Castellanos, J.S.; Montoya, O.D. Application of the Generalized Normal Distribution Optimization Algorithm to the Optimal Selection of Conductors in Three-Phase Asymmetric Distribution Networks. Energies 2023, 16, 1311.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Tang, A.-D.; Tang, S.-Q.; Han, T.; Zhou, H.; Xie, L. A Modified Slime Mould Algorithm for Global Optimization. Comput. Intell. Neurosci. 2021, 2021, 2298215.

- Chakraborty, P.; Nama, S.; Saha, A.K. A hybrid slime mould algorithm for global optimization. Multimed. Tools Appl. 2022, 82, 22441–22467.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Faramarzi, A.; Mirjalili, S.; Heidarinejad, M. Binary equilibrium optimizer: Theory and application in building optimal control problems. Energy Build. 2022, 277, 112503.

- Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300.

- Hassan, I.H.; Abdullahi, M.; Aliyu, M.M.; Yusuf, S.A.; Abdulrahim, A. An improved binary manta ray foraging optimization algorithm based feature selection and random forest classifier for network intrusion detection. Intell. Syst. Appl. 2022, 16, 200114.

- Zhao, W.; Wang, L.; Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl.-Based Syst. 2019, 163, 283–304.

- Wang, P.; He, W.; Guo, F.; He, X.; Huang, J. An improved atomic search algorithm for optimization and application in ML DOA estimation of vector hydrophone array. AIMS Math. 2022, 7, 5563–5593.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Bairathi, D.; Gopalani, D. A novel swarm intelligence based optimization method: Harris’ hawk optimization. In Proceedings of the Intelligent Systems Design and Applications: 18th International Conference on Intelligent Systems Design and Applications (ISDA 2018), Vellore, India, 6–8 December 2018; Volume 2, pp. 832–842.

- Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 2019, 101, 646–667.

- Yapici, H.; Cetinkaya, N. A new meta-heuristic optimizer: Pathfinder algorithm. Appl. Soft Comput. 2019, 78, 545–568.

- Samareh Moosavi, S.H.; Bardsiri, V.K. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 2019, 86, 165–181.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Stat. Comput. 2004, 14, 199–222.

- Liao, W.X.; He, P.; Hao, J.; Wang, X.Y.; Yang, R.L.; An, D.; Cui, L.G. Automatic Identification of Breast Ultrasound Image Based on Supervised Block-Based Region Segmentation Algorithm and Features Combination Migration Deep Learning Model. IEEE J. Biomed. Health Inform. 2020, 24, 984–993.

- Huang, Q.; Chen, Y.; Liu, L.; Tao, D.; Li, X. On Combining Biclustering Mining and AdaBoost for Breast Tumor Classification. IEEE Trans. Knowl. Data Eng. 2020, 32, 728–738.

- Yu, X.; Kang, C.; Guttery, D.S.; Kadry, S.; Chen, Y.; Zhang, Y.D. ResNet-SCDA-50 for Breast Abnormality Classification. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 94–102.

- Moon, W.K.; Lee, Y.-W.; Ke, H.-H.; Lee, S.H.; Huang, C.-S.; Chang, R.-F. Computer-aided diagnosis of breast ultrasound images using ensemble learning from convolutional neural networks. Comput. Methods Programs Biomed. 2020, 190, 105361.

- Eroğlu, Y.; Yildirim, M.; Çinar, A. Convolutional Neural Networks based classification of breast ultrasonography images by hybrid method with respect to benign, malignant, and normal using mRMR. Comput. Biol. Med. 2021, 133, 104407.

- Kim, E.S.; Cho, N.; Kim, S.-Y.; Kwon, B.R.; Yi, A.; Ha, S.M.; Lee, S.H.; Chang, J.M.; Moon, W.K. Comparison of Abbreviated MRI and Full Diagnostic MRI in Distinguishing between Benign and Malignant Lesions Detected by Breast MRI: A Multireader Study. Korean J. Radiol. 2021, 22, 297–307.

- Sheth, D.; Giger, M.L. Artificial intelligence in the interpretation of breast cancer on MRI. J. Magn. Reson. Imaging 2020, 51, 1310–1324.

- Geras, K.J.; Mann, R.M.; Moy, L. Artificial Intelligence for Mammography and Digital Breast Tomosynthesis: Current Concepts and Future Perspectives. Radiology 2019, 293, 246–259.

- Le, E.P.V.; Wang, Y.; Huang, Y.; Hickman, S.; Gilbert, F.J. Artificial intelligence in breast imaging. Clin. Radiol. 2019, 74, 357–366.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).