Abstract

Artificial intelligence and emerging data science techniques are being leveraged to interpret medical image scans. Traditional image analysis relies on visual interpretation by a trained radiologist, which is time-consuming and can, to some degree, be subjective. The development of reliable, automated diagnostic tools is a key goal of radiomics, a fast-growing research field which combines medical imaging with personalized medicine. Radiomic studies have demonstrated potential for accurate lung cancer diagnoses and prognostications. The practice of delineating the tumor region of interest, known as segmentation, is a key bottleneck in the development of generalized classification models. In this study, the incremental multiple resolution residual network (iMRRN), a publicly available and trained deep learning segmentation model, was applied to automatically segment CT images collected from 355 lung cancer patients included in the dataset “Lung-PET-CT-Dx”, obtained from The Cancer Imaging Archive (TCIA), an open-access source for radiological images. We report a failure rate of 4.35% when using the iMRRN to segment tumor lesions within plain CT images in the lung cancer CT dataset. Seven classification algorithms were trained on the extracted radiomic features and tested for their ability to classify different lung cancer subtypes. Over-sampling was used to handle unbalanced data. Chi-square tests revealed the higher order texture features to be the most predictive when classifying lung cancers by subtype. The support vector machine showed the highest accuracy, 92.7% (0.97 AUC), when classifying three histological subtypes of lung cancer: adenocarcinoma, small cell carcinoma, and squamous cell carcinoma. The results demonstrate the potential of AI-based computer-aided diagnostic tools to automatically diagnose subtypes of lung cancer by coupling deep learning image segmentation with supervised classification. 
Our study demonstrated the integrated application of existing AI techniques in the non-invasive and effective diagnosis of lung cancer subtypes, and also shed light on several practical issues concerning the application of AI in biomedicine.

1. Introduction

Lung cancer is the leading cause of cancer-related death in the United States [1,2]. Computed tomography (CT) imaging remains one of the standard-of-care diagnostic tools for staging lung cancers. However, the conventional interpretation of radiological images can be, to some degree, affected by radiologists’ training and experience, and is therefore somewhat subjective and mostly qualitative by nature. While radiological images provide key information on the dimensions and extent of a tumor, they are unsuitable for assessing clinical–pathological information (e.g., histological features, levels of differentiation, or molecular characteristics) that is critical for the treatment selection process. Thus, the process of diagnosing cancer patients often requires invasive and sometimes risky medical procedures, such as the collection of tissue biopsies. Finding new solutions for collecting critical microscopic and molecular features with non-invasive and operator-independent approaches remains a high priority in oncology. The development of reliable, non-invasive computer-aided diagnostic (CAD) tools may provide novel means to address these problems.

Image digitalization coupled with artificial intelligence is emerging as a powerful tool for generating large-scale quantitative data from high-resolution medical images and for identifying patterns that can predict biological processes and pathophysiological changes [3]. Preliminary studies have suggested that objective and quantitative structures that go beyond conventional image examination can predict the histopathological and molecular characteristics of a tumor in a non-invasive way [4]. Several new tools are now available for image analysis, and the machine learning processing pipeline enables automatic segmentation, feature extraction, and model building (Figure 1).

Figure 1.

Diagram describing the process of the radiomic machine learning workflow. First, CT image scans are acquired. Tumor regions are delineated during segmentation. Mathematical features are extracted from the tumor segmentations using software tools. These features are used to train machine learning models to make predictions using new data.

Segmentation is a critical part of the radiomic process, but it is also known to be challenging. Manual segmentation is labor-intensive and can be subject to inter-reader variability [5,6]. To improve segmentation efficiency and accuracy, the development of automated or semi-automated segmentation methods has become an active area of research [7]. Several deep learning models have been used to segment lung tumors from CT scans. However, validation of the reproducibility of these proposed methods using large datasets is still limited [8,9,10,11], and this has hindered their application in clinical settings. Deep learning tools such as U-Net and E-Net have been previously used to automatically segment non-small cell lung tumors and nodules in CT images, but these models were not specifically trained using lung cancer patient data [8].

The incremental multiple resolution residual network (iMRRN) is one of the best performing deep learning methods to have been developed for volumetric lung tumor segmentation from CT images [8,9,10,12]. The iMRRN extends the full resolution residual neural network by combining features at multiple image resolutions and feature levels. It is composed of multiple blocks of sequentially connected residual connection units (RCU), which in turn are convolutional filters used at each network layer. Due to its enhanced capability in recovering input image resolution, the iMRRN has been shown to outperform other neural networks commonly used for segmentation, such as SegNet and U-Net, in terms of segmentation accuracy, regardless of tumor size and localization [7,8,13,14]. The iMRRN has also been shown to produce accurate segmentations and three-dimensional tumor volumes when compared with manual tracing by trained radiologists [8]. Its strong segmentation performance and its public availability make the iMRRN an excellent candidate for other researchers to use.

In this study, we assessed the extent to which the iMRRN coupled with supervised classification can predict lung tumor subtypes based on CT images acquired from lung cancer patients. Segmentation performance was used to inform improvements to the tumor delineation process when deep learning models were used. The automated segmentation of lung tumors yielded quantifiable radiomic features to train classification algorithms. Seven machine learning classifiers were compared for their accuracy in differentiating three histological subtypes of lung cancer using CT image features. The most discriminating features and most accurate classification learners were ranked.

Our study was prompted by the disconnections that exist between AI research and clinical applications. These gaps are not due to a lack of advanced and sophisticated AI models, but to insufficient validation of the integration of existing methods using new datasets and new clinical questions. Our study validated a framework to close one such gap. Our key contributions are summarized as follows. First, we demonstrated the feasibility of directly applying a trained DL model that is publicly available to a completely new CT dataset collected for other purposes with minimal inputs from radiologists. We recognized the limitations of applying trained DL models to new datasets and proposed the incorporation of radiologists’ input on approximate tumor locations for reliable and targeted segmentation. Second, we systematically examined the accuracy of this integrated approach through lung cancer subtype prediction using the segmentation results from the DL model. Third, we discerned the practical issue of unbalanced data and demonstrated that an over-sampling approach such as SMOTE (synthetic minority oversampling technique) can effectively improve the accuracy with which real clinical data are classified. Fourth, for the first time, we demonstrated that radiomic analysis is able to classify three subtypes of lung cancers with accuracy comparable to that of two-subtype classification.

We believe that our study outcomes are of more use to researchers in the applied AI and/or biomedicine communities than to those whose expertise is novel AI methodology development. This is because our objective is not to build new deep learning or machine learning approaches. Instead, we identified important, practical limitations of existing AI methods when applied to new clinical data. Clinical data need to be carefully processed to be more specific and balanced so that the performance of AI methods can be maximized.

2. Materials and Methods

2.1. Data Description

We used the previously collected and publicly available CT images in the dataset named “A Large-Scale CT and PET/CT Dataset for Lung Cancer Diagnosis (Lung-PET-CT-Dx)”, obtained from The Cancer Imaging Archive (TCIA) [15]. TCIA is an open-access information platform created to support cancer research initiatives where open access cancer-related images are made available to the scientific community (https://www.cancerimagingarchive.net/, accessed on 30 May 2021) [15]. The Lung-PET-CT-Dx dataset contains 251,135 de-identified CT and PET-CT images from lung cancer patients [16]. These data were collected by the Second Affiliated Hospital of Harbin Medical University in Harbin, Heilongjiang Province, China. Lung cancer images were acquired from patients diagnosed with one of the four major lung cancer histopathological subtypes using biopsy. Radiologist annotations on the tumor locations were also provided for each CT/PET-CT image. Each image was manually annotated with a rectangular bounding box of similar length and width to the tumor lesion using the LabelImg tool [17]. Five academic thoracic radiologists completed the annotations: the bounding box was drawn by one radiologist and then verified by the other four.

For our analysis, we only processed CT images with a slice thickness of 1 mm. CT scans with other slice thicknesses were excluded from the analysis. We made this choice because CT images acquired at different slice intervals may introduce variability in the radiomic features that complicates the interpretation of the results. A thickness of 1 mm is the most commonly acquired slice resolution in clinics [18], and such CT images were indeed the most well represented in our dataset. Therefore, focusing on 1 mm thick CT images was the most relevant choice for future clinical utilization. In some cases, a patient had more than one chest CT scan. The anatomical scan taken at the earliest time point for a given patient was included in the analysis. This earliest time point CT scan is referred to as the patient’s primary CT scan. We decided to exclude non-primary scans for the following reasons. First, non-primary scans, such as contrast-enhanced or respiratory-gated scans, do not provide radiomic features comparable to those of plain CT scans, and thus are not appropriate for inclusion in our analysis. Second, the non-primary CT scans might have been acquired after treatment had begun, at which point potential tumor necrosis and cell death may affect the radiomic features within the CT image. In such cases, the non-primary CT images would not truly represent the radiomic properties of the tumors, which would have changed in response to treatment. A summary of patient demographic information and tumor TNM stages is provided in Table 1 [19].

Table 1.

Summary of the demographic and clinical information of the 355 patients with CT images in the TCIA dataset.

2.2. Semi-Automated Segmentation and Manual Inspection

To perform machine learning-based radiomic analysis, the Computational Environment for Radiologic Research (CERR, Memorial Sloan Kettering Cancer Center, New York, NY, USA) software platform was used to apply the trained iMRRN to automated segmentation [20]. CERR is an open-source, MATLAB-based (Mathworks Inc., Natick, MA, USA) tool with methods optimized for radiomic analysis. Using CERR, CT images in the DICOM format were converted to planC format in preparation for segmentation. Deployment of the iMRRN to the planC object enabled the segmentation of tumor ROIs and the production of a morphological mask over the tumor lesion. The Linux distribution Xubuntu 20.04 (Canonical Ltd., London, UK) was chosen for segmentation to execute the iMRRN Singularity (Sylabs, Reno, NV, USA) container.

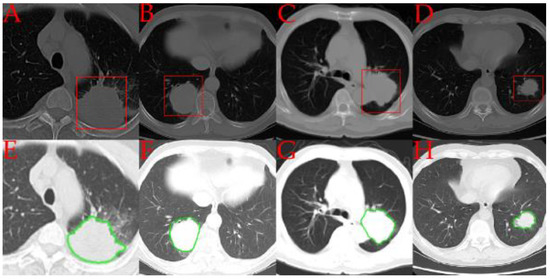

A visual comparison of the iMRRN segmentations and the radiologist annotations is given in Figure 2. One image from each of the four pathologic tumor subtypes is presented. In comparison with the rectangular delineations made by the radiologist, the automated segmentations followed the contours of the tumor lesions more precisely. In these examples, the iMRRN was able to adequately segment the tumors.

Figure 2.

Comparisons between representative examples of tumor regions of interest annotated by radiologists (in red) (A–D) and automatically segmented by the iMRRN (in green) (E–H). Panels A and E represent a patient with adenocarcinoma (group A); panels B and F represent a patient with small cell carcinoma (group B), panels C and G represent a patient with large cell carcinoma (group E); panels D and H represent a patient with squamous cell carcinoma (group C).

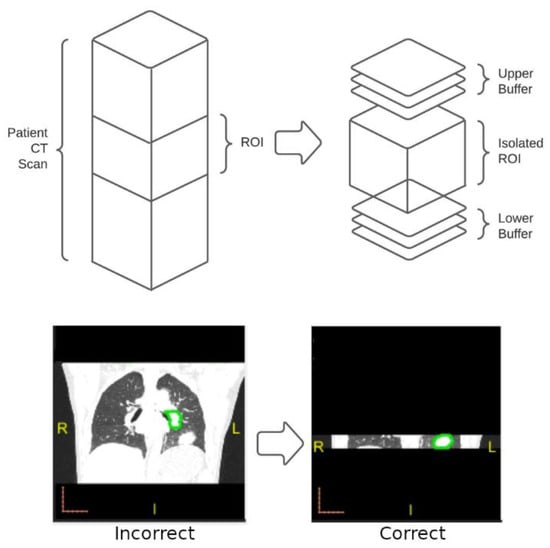

After the direct application of the iMRRN segmentation tool, the images were visually examined and compared with the manual box annotations. We found that in some patient CT scans, non-tumor structures, such as the heart, vertebrae, or sections of the patient’s couch, were mistakenly segmented as tumor nodules. Many of these structures were quite distant from where the tumors were located. To deal with such mistakes, we decided to supply the iMRRN only with CT images near the tumor locations. When performing segmentation within these focused tumor regions of interest (ROI), the iMRRN no longer erroneously segmented unrelated structures. Using a MATLAB program developed in house, original CT scans were trimmed by discarding the parts of the images outside the annotation boxes known not to contain the tumor lesion (Figure 3). Since there were cases in which the radiologist annotations did not cover the entire tumor, which may have led to incomplete segmentation, buffer images above and below the annotated range were added to enlarge the segmentation ROI (Figure 3, bottom panel).
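The trimming step described above can be illustrated with a minimal Python sketch. It is not the in-house MATLAB program; the function names and the default buffer of two slices are assumptions made for illustration, since the buffer size is not stated here.

```python
def roi_slice_range(first_annotated, last_annotated, n_slices, buffer=2):
    """Return the inclusive (start, stop) slice indices to keep.

    The annotated range is widened by `buffer` slices on each side and
    clamped to the bounds of the stack. `buffer=2` is a hypothetical default.
    """
    if first_annotated > last_annotated:
        raise ValueError("first_annotated must not exceed last_annotated")
    start = max(0, first_annotated - buffer)
    stop = min(n_slices - 1, last_annotated + buffer)
    return start, stop


def trim_stack(stack, first_annotated, last_annotated, buffer=2):
    """Keep only the annotated slices plus the buffer slices around them."""
    start, stop = roi_slice_range(first_annotated, last_annotated, len(stack), buffer)
    return stack[start:stop + 1]
```

Clamping to the stack bounds keeps the buffer from running past the first or last slice when a lesion sits near the edge of the scanned volume.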

Figure 3.

Overview of the process used to increase segmentation accuracy for images that failed initial screening. Top: In images for which unrestricted segmentation failed, the radiologist-defined ROI was isolated from the entire image stack (left) along with buffer images above and below the ROI. Automated segmentation using the iMRRN was then restricted to the target area. Bottom: example of incorrect segmentation of a non-tumor anatomical structure in which the iMRRN tool was used without restriction (left) compared with correct lesion segmentation after the field of analysis was restricted to the ROI and buffer images (right).

2.3. Radiomic Feature Extraction

Using CERR methods, histogram intensity features and tumor morphology features were extracted from the segmented ROIs. These features included 17 shape features, 22 first-order features, and 80 texture features from the tumor regions in each CT scan. Texture features were further broken down into the following 5 subgroups: gray level co-occurrence matrix (26), gray level run length matrix (16), gray level size zone matrix (16), neighborhood gray tone difference matrix (5), and neighborhood gray level dependence matrix (26). Each of these features is defined mathematically in the Image Biomarker Standardization Initiative reference manual [21]. A list of extracted radiomic features is given in Supplementary Table S1. All attributes were continuous variables, and each feature was normalized to a range between zero and one so that the scales of the feature values did not affect the results. Observations containing missing values and infinite values were removed.
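As a rough illustration of the normalization and cleaning just described, the following Python sketch rescales each feature column to the range zero to one after dropping observations with missing or infinite values. The function names are hypothetical, and the order of the two steps (cleaning first, then scaling) is an assumption, not something stated above.

```python
import math


def drop_non_finite(rows):
    """Remove observations that contain NaN or infinite feature values."""
    return [row for row in rows if all(math.isfinite(v) for v in row)]


def min_max_normalize(rows):
    """Rescale every feature column to [0, 1]; constant columns map to 0."""
    if not rows:
        return []
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]
```

Cleaning before scaling matters in this sketch because a single infinite value would otherwise distort the column maximum used for rescaling.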

To evaluate the predictive role of 2D and 3D CT scan features in determining tumor subtype, we first restricted the analysis to the central transverse plane of the ROI for the 2D examination. We then expanded the analysis to encompass the entire tumor mask for the 3D examination. To examine how well the center CT slice represents other slices in the same CT volume in terms of radiomic analysis, we trained several classifiers using only center slices from the CT volumes and then tested the classification accuracy on the off-center slices that were 4 mm away from the center slices. No test slices were acquired if there was no tumor lesion 4 mm away from the center.

When the 2D CT images were analyzed, only those shape features applicable in 2D (i.e., major axis, minor axis, least axis, elongation, max2dDiameterAxialPlane, and surfArea) were included. We hypothesized that these shape features would be the most robust against CT scanner variation and, therefore, the most important when identifying lung tumors by histological subtype.

2.4. Radiomic Model Building

To examine the effectiveness of supervised learning methods in classifying lung cancer subtypes using radiomic features extracted from segmented tumor CT data, we trained and tested seven classifiers. The MATLAB Classification Learner App (Statistics and Machine Learning Toolbox version 12.3) was used to perform the classification and evaluation. The training data contained the extracted radiomic features as well as the confirmed lung cancer subtypes. The following classification algorithms were considered and compared: decision tree, discriminant, naïve Bayes, support vector machine, k-nearest neighbors, ensemble, and a narrow neural network. Each of these models was trained in over fifty iterations. Five-fold cross-validation (CV) was used to evaluate the performance of each model. Five-fold CV divides the whole dataset into five subsets of equal size. Each model was trained using four subsets and then tested on the fifth subset; the process was repeated five times, and the averaged results were reported.
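The five-fold procedure described above can be sketched in a few lines of Python. This is not the MATLAB Classification Learner implementation; `k_fold_indices`, `cross_validated_accuracy`, and the `fit_and_score` callback are hypothetical names, and the shuffling seed is an assumption.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Split sample indices into k near-equal folds and return (train, test) pairs."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for held_out in range(k):
        test = folds[held_out]
        train = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
        splits.append((train, test))
    return splits


def cross_validated_accuracy(n_samples, fit_and_score, k=5, seed=0):
    """Average the score returned by fit_and_score(train, test) over the k folds."""
    scores = [fit_and_score(train, test)
              for train, test in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)
```

When the number of observations is not divisible by five, the folds in this sketch differ in size by at most one observation.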

Three lung cancer subtypes, namely adenocarcinomas (group A), small cell carcinomas (group B), and squamous cell carcinoma (group C), were used as response variables for our analysis. Large-cell carcinomas were not included in the analysis as they were poorly represented in the dataset (only five instances). Clinically, large-cell carcinomas account for less than 10% of all lung cancer types, so omitting this particular type did not impact our study objectives.

A principal component analysis (PCA) was used to reduce data complexity [22]. A PCA works by transforming the original dataset into a new set of variables (principal components) that are linear combinations of the original features. This is a widely used technique in machine learning and is especially useful when analyzing data with many features. We used the synthetic minority over-sampling technique (SMOTE) to address the problem of class imbalance in our dataset, in which adenocarcinoma patients (n = 251) greatly outnumbered small cell carcinoma patients (n = 38) and squamous cell carcinoma patients (n = 61). SMOTE synthesized new observations using a k-nearest neighbors approach to balance the number of training observations for each histotype group [23]. The MATLAB implementation of SMOTE we used created a more balanced dataset for radiomic modeling and feature analysis [24].
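To make the k-nearest neighbors idea behind SMOTE concrete, the sketch below generates synthetic minority observations by interpolating between a randomly chosen minority sample and one of its nearest minority neighbors. It is a simplified illustration under stated assumptions, not the MATLAB implementation cited above; the function name, the default `k=5`, and the fixed seed are hypothetical.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic samples from a list of minority-class feature vectors.

    Each synthetic sample lies on the line segment between a minority sample
    and one of its k nearest minority-class neighbors (Euclidean distance).
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by distance to the chosen base sample.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: math.dist(base, p))
        neighbor = rng.choice(others[:k])
        gap = rng.random()  # interpolation weight in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic
```

Because every synthetic point is a convex combination of two real minority observations, features that were normalized to the range zero to one stay within that range after resampling.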

Chi-square tests have been used in machine learning to select features [25]. Although chi-square tests are restricted to categorical data, discretization enables the examination of continuous variables [26]. In our study, we used chi-square tests to obtain a chi-square feature ranking. This ranking describes the degrees of association between each feature and the response variable, which is the class label for classification. Using the feature ranking, we determined which were the most important shape, texture, and first-order histogram intensity features for classifying lung cancer histological subtypes.
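The following Python sketch illustrates one way a chi-square ranking can be obtained for continuous features: each feature is discretized into equal-width bins, and the chi-square statistic of the resulting bin-by-class contingency table is used as the score. The function names and the choice of ten equal-width bins are assumptions for illustration; the software used in this study may bin differently and may report scores on a different scale (for example, one derived from p-values).

```python
def equal_width_bins(values, n_bins=10):
    """Discretize continuous values into n_bins equal-width bins (bin indices)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / n_bins
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def chi_square_statistic(binned, labels):
    """Pearson chi-square statistic of the bin-by-class contingency table."""
    n = len(labels)
    bins = sorted(set(binned))
    classes = sorted(set(labels))
    observed = {(b, c): 0 for b in bins for c in classes}
    for b, c in zip(binned, labels):
        observed[(b, c)] += 1
    bin_totals = {b: sum(observed[(b, c)] for c in classes) for b in bins}
    class_totals = {c: sum(observed[(b, c)] for b in bins) for c in classes}
    stat = 0.0
    for b in bins:
        for c in classes:
            expected = bin_totals[b] * class_totals[c] / n
            stat += (observed[(b, c)] - expected) ** 2 / expected
    return stat


def rank_features(columns, labels, n_bins=10):
    """Return (feature name, score) pairs sorted from most to least associated."""
    scores = {name: chi_square_statistic(equal_width_bins(vals, n_bins), labels)
              for name, vals in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Only bins and classes that actually occur enter the table, so every expected count in this sketch is positive and the statistic is always defined.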

3. Results

3.1. Patient Demographics

We summarized the demographic and clinical information of our lung CT patient cohort (Table 1). Over-representation in the adenocarcinoma group (A) in comparison with all other histotype groups was observed. The number of large cell carcinoma observations (five) was insufficient to proceed with radiomic analysis, so these observations were not considered for the training of the classification models. Sex, age, smoking history, and TNM stage are summarized by histotype. Of note is the relatively large number of T1 observations, which denote tumor lesions less than 3 cm across.

3.2. Segmentation Accuracy

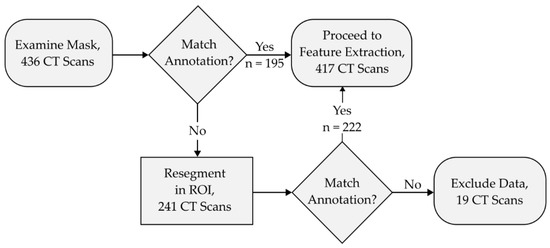

The accuracy of the automated segmentation performed by the iMRRN was first evaluated against the radiologist-defined regions of interest (ROI) in 436 lung cancer images spanning various tumor subtypes and dimensions. Based on visual inspections of the morphological masks, the iMRRN initially produced accurate segmentations for 195 of the 436 (44.7%) plain CT images, and incorrectly placed the tumor region outside the radiologist’s ROI in 241 of the 436 cases (55.3%) (Figure 4). In the 241 scans that were incorrectly segmented, the iMRRN had placed the segmentation mask over a non-tumor anatomical structure outside of the radiologist-delineated ROI bounding box. This demonstrated a need for additional guidance to produce a higher number of accurate lesion contours for radiomic analysis. The 241 failed segmentations were again processed within the radiologist-defined bounds (Figure 4). An additional description of the data segmentation and exclusion process can be found in Supplementary Table S2. Of these 241 CT scans, the iMRRN segmentations matched the radiologist delineations in 222 cases. In the remaining 19 scans, the iMRRN masks did not match the radiologist’s annotations. These 19 scans, which represent different histological subtypes as well as a range of T-stages, were excluded from further radiomic analysis, resulting in an overall segmentation failure rate of 4.35%.
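The bookkeeping in the paragraph above can be retraced with a short Python sketch. The function and key names are hypothetical; the counts are the ones reported in the text.

```python
def segmentation_tally(total, initial_ok, recovered):
    """Summarize a two-pass segmentation run.

    total:      number of scans evaluated
    initial_ok: scans segmented correctly without restriction
    recovered:  initially failed scans that succeeded within the restricted ROI
    """
    initial_fail = total - initial_ok
    final_fail = initial_fail - recovered
    return {
        "initial_ok_pct": 100 * initial_ok / total,
        "initial_fail": initial_fail,
        "initial_fail_pct": 100 * initial_fail / total,
        "usable": initial_ok + recovered,
        "final_fail": final_fail,
        "final_fail_pct": 100 * final_fail / total,
    }


tally = segmentation_tally(total=436, initial_ok=195, recovered=222)
```

With these inputs the sketch gives 241 initial failures, 417 usable segmentations, and 19 final failures, that is, roughly 4.4% of the 436 scans, in line with the failure rate reported above.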

Figure 4.

This flowchart describes the segmentation and data exclusion process. Morphological segmentation masks were manually inspected for spatial accuracy against radiologist annotations following segmentation iterations. Segmentations that matched the annotations proceeded to feature extraction. Segmentation masks that did not adhere to the radiologist annotations were segmented again within the radiologist-defined region of interest (ROI). Masks that did not match the radiologist annotations (n = 19) after this step are excluded from downstream analyses.

Overall, the restriction of the segmentation execution to the ROI produced a higher number of accurate masks compared with the unrestricted analysis. Segmentation accuracy was improved across histological subtypes and lesions of different dimensions (Table 2). We performed two different comparisons, one by histotype and one by T-stage, in which the group size was the number of patients and the successes were the number of segmented CT scans. The percentage increase in each histotype was between 25% and 130%. The segmentation accuracy for all tumor sizes was improved by different degrees in relation to the T-stage, ranging between 10% and 2100%. The earlier the T-stage was, the higher the segmentation improvement that was achieved. As expected, the greatest improvement was seen for lesions smaller than 1 cm in diameter. As is shown in Table 2, only one CT scan containing a tumor smaller than 1 cm was successfully segmented when automatic segmentation was performed on the entire CT volume. After a refined CT volume was supplied to the automatic segmentation model, 22 CT datasets containing tumors smaller than 1 cm were successfully segmented. Without this refinement, the iMRRN either incorrectly segmented a non-tumor anatomical structure or failed to produce a segmentation mask. Taken together, our data suggest that the automatic and unrestricted segmentation of relatively small tumor lesions still requires manual intervention from a trained radiologist. However, a single radiologist annotation in the coronal plane may be sufficient to guide automated software segmentation of the tumor mass even within all the corresponding transverse plane images. This form of semi-automated segmentation may represent the best of both worlds, with trained radiologists supervising precision mathematical models with high accuracy and repeatability and minimal intervention.
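The upper end of the improvement range quoted above follows from the smallest lesions, where the number of successful segmentations rose from 1 to 22. A minimal Python sketch of that arithmetic, with a hypothetical function name, is:

```python
def percent_increase(before, after):
    """Relative increase from `before` to `after`, expressed in percent."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100 * (after - before) / before


# Tumors smaller than 1 cm: 1 successful segmentation before, 22 after restriction.
smallest_lesions = percent_increase(1, 22)
```

Here `smallest_lesions` evaluates to 2100.0, which matches the largest improvement reported for the T-stage comparison.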

Table 2.

Summary of segmentation results by histological group and by T-stage before and after the restriction of the image to the spatial range based on the annotation bounding box.

3.3. Radiomic Model Analysis Using SMOTE

From the 417 successfully segmented patient CT scans, we excluded follow-up studies to produce a training dataset of 324 unique observations. This training data included one representative segmentation mask per patient. We demonstrated how the SMOTE function rebalanced the number of observations in the training data (Table 3). Because the adenocarcinoma group (A) was over-represented in this dataset, we applied SMOTE to the small cell carcinoma (B) and squamous cell carcinoma (C) groups to approximate an equal number of observations for each of the response types. Before applying SMOTE, the total number of training observations was 324, and this increased to 672 after applying SMOTE.

Table 3.

Summary of the number of training observations by histotype class both before and after applying SMOTE.

To assess whether machine learning classification algorithms can be used to predict histological subtypes from lung cancer CT images, we next extracted first- and second-order characteristics from the segmented images. To account for differences in the numbers of cases across histotype groups, we first examined how classification accuracy was affected by inherent variations caused by the application of SMOTE to the training data. We trained models using five separate instances of the SMOTE function and compared the resulting classification accuracy (Table 4).

Table 4.

Summary of the classification results for five instances of models trained with SMOTE-resampled observations.

3.4. Radiomic Analysis: Center and Center-Offset Slices

We next used our pipeline to evaluate the ability of seven machine learning models to accurately distinguish histological subtypes using 103 first- and second-order two-dimensional features extracted from the central slice of the CT stack. We first conducted a two-class comparison (adenocarcinomas and squamous cell carcinomas vs. small cell carcinomas). The two-class comparison analysis represents the distinction between non-small cell lung cancer (NSCLC) and small cell lung cancer (SCLC). The dataset included 30 small cell carcinomas and 171 combined adenocarcinomas and squamous cell carcinomas. The accuracy of the classifiers in distinguishing NSCLC from SCLC ranged between 77% and 85% (Table 5). The results show minimal interference by the SMOTE function in the classification accuracy. Although the SMOTE function did not significantly improve the histological classification performance, the AUC did increase. This is possibly because the two classes of data were severely unbalanced (Table 5).

Table 5.

Summary of the classification results using features extracted from the central axial slice of the tumor volume from the patients’ primary CT scans before and after SMOTE resampling.

We then assessed the ability of our model system to accurately distinguish between three different tumor subtypes, namely adenocarcinomas, small cell carcinomas, and squamous cell carcinomas. The unbalanced three-group comparison yielded lower accuracy levels than the two-group comparison (ranging between 60.7% and 72.1%). However, after the groups were rebalanced using the SMOTE function, the accuracy of the ensemble, SVM, and KNN models increased to 84.3%, 85%, and 87%, respectively (Table 5).

Lastly, we applied a PCA to the 103 features to assess whether reducing the dimensionality of the variables would improve the accuracy of the classifiers. The PCA reduced the number of variables from 103 features to 13 principal components while retaining 95% of the variability. However, the application of the PCA did not impact the performance of the algorithms when the analysis was limited to the central slice of the CT stack. The effect of the PCA on the SMOTE-resampled data is summarized in Supplementary Table S3.
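The rule of keeping enough components to retain 95% of the variability can be sketched as follows. The function name is hypothetical, and the sketch assumes the component variances (eigenvalues of the feature covariance matrix) have already been computed by a PCA routine.

```python
def components_for_variance(eigenvalues, threshold=0.95):
    """Smallest number of leading components whose variance share reaches threshold."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    if total <= 0:
        raise ValueError("eigenvalues must sum to a positive value")
    cumulative = 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative / total >= threshold:
            return count
    return len(ordered)
```

For example, with component variances of 7, 2, 0.6, and 0.4, the first three components carry 96% of the total variance, so the function returns 3.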

3.5. Radiomic Feature Analysis: Whole Tumor

To account for the complex three-dimensional structures of the tumors, we next repeated the analysis using the whole stack of CT images. A total of 129 2D and 3D features were identified and tested in two-class (adenocarcinomas and squamous cell carcinomas vs. small cell carcinomas) and three-class (adenocarcinomas vs. squamous cell carcinomas vs. small cell carcinomas) comparisons (Table 6). The two-class comparison analysis represents the distinction between non-small cell lung cancer (NSCLC) and small cell lung cancer (SCLC). In the two-class comparison, the rebalancing of the groups via the SMOTE function increased the accuracy of the models (ranges of 79.6–92.6% and 82.1–88.3% with and without the SMOTE function, respectively). As with the single image analysis, the addition of the PCA did not significantly affect the overall performance of the algorithms. In the three-class comparison, the discriminatory ability of the algorithms was slightly lower, although in this case rebalancing between the groups also increased the performance of the classifiers (unbalanced comparisons range: 67.6–89.1% versus balanced comparisons range: 73.2–92.7%). When the PCA was applied to the dataset, the SMOTE function appeared to have a greater impact than the reduction in dimensionality. For example, the SVM model had an accuracy of 76.5% and an AUC of 0.86 in the unbalanced comparison, but it had an accuracy of 92.7% and an AUC of 0.97 after the SMOTE function was applied.

Table 6.

Summary of the classification results using features extracted from the whole tumor volume in the patients’ primary CT scans.

3.6. Radiomic Feature Analysis: Three-Class Classification with Whole Tumor Features

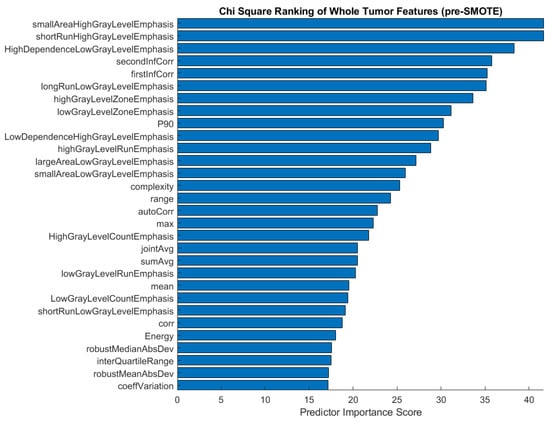

We performed chi-square feature ranking to identify the key features in the three-class classifications using unbalanced responses. The ranking is shown in Figure 5. While texture features, particularly gray level run length matrix (GLRLM) characteristics, scored highest in importance for predicting histological subtype, shape features did not emerge as important predictors, as was previously hypothesized.

Figure 5.

Feature ranking of the top 30 features before applying the SMOTE function to the imbalanced three-class comparison involving adenocarcinomas, small cell carcinomas, and squamous cell carcinomas.

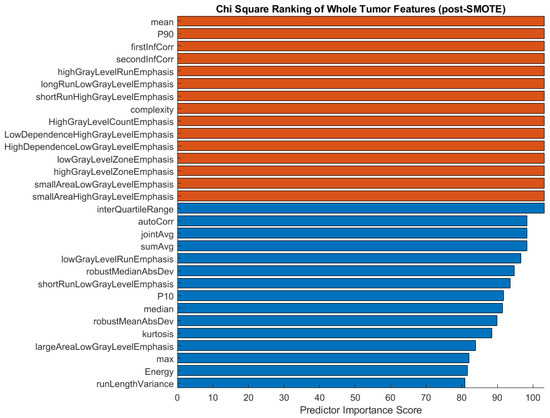

After applying the SMOTE function to the training data for three-class classification, we proceeded to analyze the chi-square feature ranking. The top 30 features were again ranked according to their predictor importance score (Figure 6). In comparison with the pre-SMOTE feature ranking (Figure 5), the post-SMOTE feature ranking places a higher emphasis on texture features, particularly GLRLM and GLCM features, in addition to first-order histogram intensity features. These features have infinite value predictor importance scores, indicating the strongest relationship between these characteristics and histological subtype classification.

Figure 6.

Feature ranking of the top 30 features after applying the SMOTE function to the training data in a three-group comparison. Chi-square scores with infinite values are shown in orange.

4. Discussion

Radiomic analyses provide valuable quantitative descriptions of medical images and have great potential to be used clinically for the improved management of cancer patients [27]. One bottleneck obstructing the clinical application of radiomics in cancer diagnosis and treatment is the need for manual tracing of the tumor by a certified radiologist. In this study, we showed that a pre-trained deep learning segmentation model with minimal input from radiologists on tumor locations can be used to replace the tedious manual segmentation of lung tumors.

We examined three primary types of lung cancer in our analysis. Lung adenocarcinoma, lung squamous cell carcinoma, and small cell lung cancer exhibit distinct physical characteristics. Adenocarcinoma is the most common type and appears as irregular glands or clusters of cells, resembling glandular tissue. It typically develops in the outer regions of the lungs and is more common in non-smokers and in women. In contrast, squamous cell carcinoma is characterized by cancerous cells resembling flat, thin squamous cells arranged in layers. It commonly arises in the central airways, such as the bronchi, and is strongly associated with smoking, particularly in male smokers. Small cell lung cancer is characterized by small, round cancer cells with minimal cytoplasm that grow in clusters [28]. By carefully analyzing the CT images, radiologists can identify specific patterns associated with each type of lung cancer.

To our knowledge, our study is the first that has attempted to classify three histological subtypes of lung cancer using clinical CT/PET images. Li et al. attempted to classify the same three subtypes; however, their analyses were primarily binary in nature, because their results focused solely on comparing classification accuracies between two out of the three subtypes, without testing the accuracy of distinguishing all three subtypes from each other [29]. Every other study has classified only two subtypes (either adenocarcinoma versus squamous cell carcinoma [30,31,32] or small cell lung cancer versus non-small cell lung cancer). Our best performing model was the support vector machine, which achieved a classification accuracy of 92.7% with an AUC of 0.97 when the three lung cancer subtypes were distinguished. The SVM and ensemble models performed the best when two classes (small cell lung cancer versus non-small cell lung cancer) were considered, both achieving an accuracy of 92.6% with an AUC of 0.98. Our models outperformed those used in previous studies [32].

Our analysis provides important insights into how the proposed framework can be contextualized and used for radiomic analysis. First, although automated segmentation algorithms such as the iMRRN are designed to operate without prior information concerning the location of the tumor lesion, we found that segmentation accuracy improved when the general location of the tumor was provided (Table 3). The annotation can be as simple as the index of a slice that contains the tumor. A deep learning (DL) model can then be applied to the tumor-adjacent slices only, removing the need to search through the entire stack for the tumor; our data showed that searching the entire stack led to a higher rate of misidentification of the tumor lesion. As this process only requires labeling a single tumor slice, it demands very limited effort from the radiologist and may thus boost the use of automated DL segmentation and radiomics in oncology. From a clinical perspective, developing radiomics-based tools that can predict tumor histology may spare patients from invasive procedures and help physicians capture histological changes that may emerge in response to targeted treatments [33,34].
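The slice-restriction idea described above can be sketched in a few lines of Python. This is only an illustration of selecting a window of slices around a radiologist-provided index; the function name `adjacent_slice_indices` and the `margin` parameter are hypothetical and do not correspond to a setting reported in this study.

```python
def adjacent_slice_indices(tumor_index, n_slices, margin=5):
    """Return the indices of slices within `margin` of the annotated tumor slice.

    `margin` is a hypothetical window half-width chosen for illustration;
    the window is clipped to the bounds of the CT stack.
    """
    if not 0 <= tumor_index < n_slices:
        raise ValueError("tumor_index must lie inside the CT stack")
    start = max(0, tumor_index - margin)
    stop = min(n_slices, tumor_index + margin + 1)
    return list(range(start, stop))


# Example: a hypothetical 120-slice scan with the tumor annotated on slice 48.
print(adjacent_slice_indices(48, n_slices=120, margin=3))
```

A segmentation model would then be run only on the returned slices instead of the full stack.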

A second important issue that emerged from our analysis is the role of unbalanced datasets, which pose a real challenge in radiomic analysis. When working with retrospective clinical samples, it is common for a dataset to contain unequal numbers of subjects across comparison groups. However, many machine learning methods are known to be sensitive to unbalanced data, as the minority classes may not be learned as well as the dominant classes. One should carefully examine the distribution of data before applying machine learning-based radiomic analysis because unsatisfactory results could partially stem from the underrepresentation of some classes. This is especially true for multi-class classifications, in which samples can be significantly skewed. As Table 6 shows, over-sampling increased the accuracy of the two-class classification by a few percentage points (up to 4%), while more significant improvements in the accuracy of the models were seen in the three-class classification (up to 16.2%). These results are comparable to other lung radiomic studies that have demonstrated increased classification performance after applying re-sampling techniques [35].
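One way to check whether minority classes are being under-learned is to compare overall accuracy with per-class recall. The Python sketch below does this for a hypothetical three-class confusion matrix; the counts are invented for illustration and are not results from this study.

```python
# Hypothetical confusion matrix: rows are true classes, columns are predictions.
# Class 0 is the dominant class; classes 1 and 2 are minority classes.
confusion = [
    [180, 5, 5],
    [20, 10, 0],
    [15, 0, 15],
]


def overall_accuracy(cm):
    """Fraction of all samples that were classified correctly."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


def per_class_recall(cm):
    """Fraction of each true class that was classified correctly."""
    return [cm[i][i] / sum(cm[i]) for i in range(len(cm))]


accuracy = overall_accuracy(confusion)
recalls = per_class_recall(confusion)
balanced_accuracy = sum(recalls) / len(recalls)

print("overall accuracy:", round(accuracy, 3))
print("per-class recall:", [round(r, 3) for r in recalls])
print("balanced accuracy:", round(balanced_accuracy, 3))
```

In this invented example the overall accuracy looks acceptable because the dominant class is classified well, while the recall of the minority classes is much lower, which is the pattern that re-sampling aims to correct.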

Lastly, effective classification methods rely on features that are informative and discriminating across compared groups. Radiomic features are commonly divided into three classes: shape features, first-order (histogram-based) features, and second-order (texture) features. Shape features include geometric and spatial characteristics such as size, sphericity, and the compactness of the tumor. Sphericity and compactness are features known to have strong tumor classification reliability [36]. First-order characteristics are features that describe pixel intensity values and may be expressed as histogram values. Histogram-based features have been shown to have a high degree of reliability in radiomic studies [36]. Second-order features, or texture features, rely on statistical relationships between patterns of gray levels in the image. The gray level run length matrix and gray level zone length matrix features each describe homogeneity between pixels and have been shown to be reliable second-order features [37]. Our study showed that 3D CT data outperform single 2D CT data by up to 5% when their radiomic features are used in classification. Several factors might explain this. First, 3D CT data provide a richer set of radiomic features, such as true shape and size information across all three spatial dimensions. Second, tumor morphometric and texture characteristics are subject to spatial heterogeneity, which can only be captured by 3D features. Two-dimensional texture features may not be sufficient to accurately describe spatial heterogeneity. However, if clinical 3D CT data are unavailable, 2D radiomic analysis can still be used to achieve useful classification with decent accuracy.

Improving the accuracy of such classifications will rely on the selection of discriminating features. This study utilized each of the shape, first-order, and texture features available in CERR, as these have been shown to be robust against differences in image acquisition techniques [38]. Incorporating clinical features such as age, sex, weight, and smoking history is likely to improve classification accuracy, as these have been shown to correlate with risk for lung cancer [39].

5. Conclusions

This study demonstrated a successful application of the deep learning method iMRRN to the segmentation of independent lung CT data and identified a procedure to make automatic segmentation more accurate. The direct use of segmentation with existing deep learning models leads to classification accuracy comparable with that typically achieved in published studies. The necessity of balancing data samples was demonstrated, as was a suitable data balancing method. The feasibility of performing classifications with three classes was shown by systematically comparing various machine learning methods. Overall, we demonstrated that the classification of subtypes of lung cancer can be semi-automated with minimal radiologist intervention in terms of segmentation and radiomic analysis.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bioengineering10060690/s1, Table S1: Extracted Radiomic Features Using CERR; Table S2: Segmentation and Data Exclusion Process; Table S3: Classification Accuracy Before and After Applying PCA to the Training Dataset With SMOTE applied.

Author Contributions

Conceptualization, Q.W. and M.P.; methodology, B.D., Q.W. and M.P.; validation, B.D., Q.W. and M.P.; formal analysis, B.D.; data curation, B.D.; writing—original draft preparation, B.D., M.P. and Q.W.; writing—review and editing, B.D., M.P. and Q.W.; visualization, B.D.; supervision, M.P. and Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available in a publicly accessible repository. The data presented in this study are openly available in The Cancer Imaging Archive at https://doi.org/10.7937/TCIA.2020.NNC2-0461, reference number [16].

Acknowledgments

The authors received technical support from Aditya Apte, the iMRRN development team, and the CERR development team, Department of Medical Physics at Memorial Sloan Kettering Cancer Center in New York, NY, USA.

Conflicts of Interest

The authors declare the following competing interests: M.P. receives royalties from TheraLink Technologies, Inc., for whom she acts as a consultant.

References

- Collins, L.G.; Haines, C.; Perkel, R.; Enck, R.E. Lung Cancer: Diagnosis and Management. Am. Fam. Physician 2007, 75, 56–63.

- Siegel, R.L.; Miller, K.D.; Wagle, N.S.; Jemal, A. Cancer Statistics, 2023. CA Cancer J. Clin. 2023, 73, 17–48.

- Tunali, I.; Gillies, R.J.; Schabath, M.B. Application of Radiomics and Artificial Intelligence for Lung Cancer Precision Medicine. Cold Spring Harb. Perspect. Med. 2021, 11, a039537.

- Rizzo, S.; Botta, F.; Raimondi, S.; Origgi, D.; Fanciullo, C.; Morganti, A.G.; Bellomi, M. Radiomics: The Facts and the Challenges of Image Analysis. Eur. Radiol. Exp. 2018, 2, 36.

- Singh, S.; Pinsky, P.; Fineberg, N.S.; Gierada, D.S.; Garg, K.; Sun, Y.; Nath, P.H. Evaluation of Reader Variability in the Interpretation of Follow-up CT Scans at Lung Cancer Screening. Radiology 2011, 259, 263–270.

- Haarburger, C.; Müller-Franzes, G.; Weninger, L.; Kuhl, C.; Truhn, D.; Merhof, D. Radiomics Feature Reproducibility under Inter-Rater Variability in Segmentations of CT Images. Sci. Rep. 2020, 10, 12688.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

- Jiang, J.; Hu, Y.-C.; Liu, C.-J.; Halpenny, D.; Hellmann, M.D.; Deasy, J.O.; Mageras, G.; Veeraraghavan, H. Multiple Resolution Residually Connected Feature Streams for Automatic Lung Tumor Segmentation From CT Images. IEEE Trans. Med. Imaging 2019, 38, 134–144.

- Jiang, J.; Elguindi, S.; Berry, S.L.; Onochie, I.; Cervino, L.; Deasy, J.O.; Veeraraghavan, H. Nested Block Self-Attention Multiple Resolution Residual Network for Multiorgan Segmentation from CT. Med. Phys. 2022, 49, 5244–5257.

- Primakov, S.P.; Ibrahim, A.; van Timmeren, J.E.; Wu, G.; Keek, S.A.; Beuque, M.; Granzier, R.W.Y.; Lavrova, E.; Scrivener, M.; Sanduleanu, S.; et al. Automated Detection and Segmentation of Non-Small Cell Lung Cancer Computed Tomography Images. Nat. Commun. 2022, 13, 3423.

- Zhang, G.; Yang, Z.; Jiang, S. Automatic Lung Tumor Segmentation from CT Images Using Improved 3D Densely Connected UNet. Med. Biol. Eng. Comput. 2022, 60, 3311–3323.

- Um, H.; Jiang, J.; Thor, M.; Rimner, A.; Luo, L.; Deasy, J.O.; Veeraraghavan, H. Multiple Resolution Residual Network for Automatic Thoracic Organs-at-Risk Segmentation from CT. arXiv 2020, arXiv:2005.13690. Available online: http://arxiv.org/abs/2005.13690 (accessed on 2 February 2023).

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Krithika alias AnbuDevi, M.; Suganthi, K. Review of Semantic Segmentation of Medical Images Using Modified Architectures of UNET. Diagnostics 2022, 12, 3064.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

- Li, P.; Wang, S.; Li, T.; Lu, J.; HuangFu, Y.; Wang, D. A Large-Scale CT and PET/CT Dataset for Lung Cancer Diagnosis. Cancer Imaging Arch. 2020.

- Lin, T. LabelImg: LabelImg Is a Graphical Image Annotation Tool and Label Object Bounding Boxes in Images. Available online: https://github.com/tzutalin/labelImg (accessed on 2 February 2023).

- CT Scans | Cancer Imaging Program (CIP). Available online: https://imaging.cancer.gov/imaging_basics/cancer_imaging/ct_scans.htm (accessed on 16 April 2023).

- Detterbeck, F.C. The Eighth Edition TNM Stage Classification for Lung Cancer: What Does It Mean on Main Street? J. Thorac. Cardiovasc. Surg. 2018, 155, 356–359.

- Apte, A.P.; Iyer, A.; Crispin-Ortuzar, M.; Pandya, R.; van Dijk, L.V.; Spezi, E.; Thor, M.; Um, H.; Veeraraghavan, H.; Oh, J.H.; et al. Technical Note: Extension of CERR for Computational Radiomics: A Comprehensive MATLAB Platform for Reproducible Radiomics Research. Med. Phys. 2018, 45, 3713–3720.

- Zwanenburg, A.; Vallières, M.; Abdalah, M.A.; Aerts, H.J.W.L.; Andrearczyk, V.; Apte, A.; Ashrafinia, S.; Bakas, S.; Beukinga, R.J.; Boellaard, R.; et al. The Image Biomarker Standardization Initiative: Standardized Quantitative Radiomics for High-Throughput Image-Based Phenotyping. Radiology 2020, 295, 328–338.

- Lever, J.; Krzywinski, M.; Altman, N. Principal Component Analysis. Nat. Methods 2017, 14, 641–642.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-Sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Synthetic Minority Over-Sampling Technique (SMOTE). Available online: https://www.mathworks.com/matlabcentral/fileexchange/75401-synthetic-minority-over-sampling-technique-smote (accessed on 25 February 2023).

- Jin, X.; Xu, A.; Bie, R.; Guo, P. Machine Learning Techniques and Chi-Square Feature Selection for Cancer Classification Using SAGE Gene Expression Profiles. In Proceedings of the Data Mining for Biomedical Applications; Li, J., Yang, Q., Tan, A.-H., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 106–115.

- Univariate Feature Ranking for Classification Using Chi-Square Tests-MATLAB Fscchi2. Available online: https://www.mathworks.com/help/stats/fscchi2.html#mw_3a4e15f8-e55d-4b64-b8d0-1253e2734904_head (accessed on 25 February 2023).

- Binczyk, F.; Prazuch, W.; Bozek, P.; Polanska, J. Radiomics and Artificial Intelligence in Lung Cancer Screening. Transl. Lung Cancer Res. 2021, 10, 1186–1199.

- What Is Lung Cancer? | Types of Lung Cancer. Available online: https://www.cancer.org/cancer/types/lung-cancer/about/what-is.html (accessed on 9 May 2023).

- Li, H.; Gao, L.; Ma, H.; Arefan, D.; He, J.; Wang, J.; Liu, H. Radiomics-Based Features for Prediction of Histological Subtypes in Central Lung Cancer. Front. Oncol. 2021, 11, 658887.

- Liu, S.; Liu, S.; Zhang, C.; Yu, H.; Liu, X.; Hu, Y.; Xu, W.; Tang, X.; Fu, Q. Exploratory Study of a CT Radiomics Model for the Classification of Small Cell Lung Cancer and Non-Small-Cell Lung Cancer. Front. Oncol. 2020, 10, 1268.

- Hyun, S.H.; Ahn, M.S.; Koh, Y.W.; Lee, S.J. A Machine-Learning Approach Using PET-Based Radiomics to Predict the Histological Subtypes of Lung Cancer. Clin. Nucl. Med. 2019, 44, 956–960.

- Yang, F.; Chen, W.; Wei, H.; Zhang, X.; Yuan, S.; Qiao, X.; Chen, Y.-W. Machine Learning for Histologic Subtype Classification of Non-Small Cell Lung Cancer: A Retrospective Multicenter Radiomics Study. Front. Oncol. 2021, 10, 608598.

- Rubin, M.A.; Bristow, R.G.; Thienger, P.D.; Dive, C.; Imielinski, M. Impact of Lineage Plasticity to and from a Neuroendocrine Phenotype on Progression and Response in Prostate and Lung Cancers. Mol. Cell 2020, 80, 562–577.

- Quintanal-Villalonga, Á.; Chan, J.M.; Yu, H.A.; Pe’er, D.; Sawyers, C.L.; Sen, T.; Rudin, C.M. Lineage Plasticity in Cancer: A Shared Pathway of Therapeutic Resistance. Nat. Rev. Clin. Oncol. 2020, 17, 360–371.

- Lv, J.; Chen, X.; Liu, X.; Du, D.; Lv, W.; Lu, L.; Wu, H. Imbalanced Data Correction Based PET/CT Radiomics Model for Predicting Lymph Node Metastasis in Clinical Stage T1 Lung Adenocarcinoma. Front. Oncol. 2022, 12, 788968.

- Fornacon-Wood, I.; Mistry, H.; Ackermann, C.J.; Blackhall, F.; McPartlin, A.; Faivre-Finn, C.; Price, G.J.; O’Connor, J.P.B. Reliability and Prognostic Value of Radiomic Features Are Highly Dependent on Choice of Feature Extraction Platform. Eur. Radiol. 2020, 30, 6241–6250.

- Tomori, Y.; Yamashiro, T.; Tomita, H.; Tsubakimoto, M.; Ishigami, K.; Atsumi, E.; Murayama, S. CT Radiomics Analysis of Lung Cancers: Differentiation of Squamous Cell Carcinoma from Adenocarcinoma, a Correlative Study with FDG Uptake. Eur. J. Radiol. 2020, 128, 109032.

- Owens, C.A.; Peterson, C.B.; Tang, C.; Koay, E.J.; Yu, W.; Mackin, D.S.; Li, J.; Salehpour, M.R.; Fuentes, D.T.; Court, L.E.; et al. Lung Tumor Segmentation Methods: Impact on the Uncertainty of Radiomics Features for Non-Small Cell Lung Cancer. PLoS ONE 2018, 13, e0205003.

- Yan, M.; Wang, W. Development of a Radiomics Prediction Model for Histological Type Diagnosis in Solitary Pulmonary Nodules: The Combination of CT and FDG PET. Front. Oncol. 2020, 10, 555514.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).