Abstract

In functional near-infrared spectroscopy (fNIRS), activated channels are typically identified using a desired hemodynamic response function (dHRF) derived from the known trial period. This approach fails when the trial period is unknown. This paper proposes a method that does not require a dHRF. It extracts fluctuating signals from the resting state using the maximal overlap discrete wavelet transform (MODWT), identifies the low-frequency wavelets corresponding to physiological noise, trains long short-term memory (LSTM) networks on them, and predicts and subtracts them during the task session. Prediction is used to preserve the phase of the physiological noise at task onset, which is possible because the signal continues from the resting state into the task session. The resting-state data are decomposed into nine wavelet components, and the fifth to ninth components are used for learning and prediction. The difference in prediction error between signals with and without a dHRF was largest for the eighth wavelet in the 15-s prediction window. Because physiological noise is difficult to remove when its period is close to the activation period, the proposed method offers an alternative when the conventional method is not applicable. It may also help estimate the starting time of brain signals, which is necessary in passive brain-computer interfaces.

1. Introduction

In processing functional near-infrared spectroscopy (fNIRS) signals, a task-related hemodynamic signal cannot be identified if the period of a physiological noise overlaps with the designed task period. This study proposes a novel method that identifies physiological noises from the resting state and removes them during the task period using wavelet techniques and neural-network-based prediction. fNIRS is a brain-imaging technique that uses two or more wavelengths of near-infrared light to measure changes in oxygenated and deoxygenated hemoglobin concentrations in the cerebral cortex [1]. When a person moves, thinks, or receives an external stimulus, nerve cells in the cortical layers become excited. Because these cells require more energy, the oxygenated hemoglobin concentration around them increases and the deoxygenated hemoglobin concentration decreases [1]. Based on this principle, fNIRS can measure brain activity in real time. Because fNIRS is inexpensive, easy to use, and harmless to the human body, it has been used in brain disease diagnosis [2,3], brain-computer interfaces (BCIs) [4], sensory signal decoding [5,6], child development [7], and psychology research [8].

An fNIRS channel consists of one source and one detector. Light emitted from the source passes through several layers, including the scalp, skull, cerebrospinal fluid, capillaries, and cerebral cortex, before reaching the detector. Consequently, the detected light contains various noises that make it difficult to isolate the hemodynamic response. These noises include heartbeat, respiration, and motion artifacts [9]; more problematically, very low-frequency noise around 0.01 Hz has also been reported [10,11,12].

To improve the accuracy of the measured signal, noise removal and reduction techniques are indispensable. Various techniques can remove physiological noises such as heartbeat, respiration, and Mayer waves. For instance, superficial noise from the scalp can be removed using short-separation channels [13] or additional external devices, or by applying denoising techniques such as adaptive filtering [14] and correlation analysis [15]. In addition, because the frequency bands of physiological noise are roughly known, band-pass filtering has become one of the most easily applied noise reduction techniques [16].

The general linear model (GLM) method has been widely used to find the task-related hemodynamic response in the fNIRS signal after preprocessing [17]. The desired hemodynamic response function (dHRF) required by the GLM method is designed according to the experimental paradigm. However, if the fundamental frequency of the dHRF overlaps with a frequency of physiological noise, the conventional GLM method fails and may mistake noise for the hemodynamic response. Therefore, a different denoising technique is needed.

A discrete wavelet transform (DWT) is a mathematical tool used to analyze signals in the time-frequency domain [18]. In fNIRS research, the DWT has been used for denoising [19,20] and connectivity analysis [21,22]. The maximal overlap discrete wavelet transform (MODWT) is a variant of the DWT often used in signal processing and time series analysis [23]. It decomposes a signal into wavelet coefficients at different scales and time locations. Unlike the standard DWT, which uses non-overlapping sub-signal windows for the decomposition, the MODWT uses overlapping windows. This overlapping-window approach improves time-frequency localization and reduces the boundary effects that can occur in DWTs [24]. Owing to this advantage, the MODWT has been applied to a wide range of signals, including audio signals [25], weather information [26,27], and biomedical signals [28]. The MODWT is particularly useful when signals are non-stationary or have complex frequency components.

Deep learning, a subfield of artificial intelligence, is based on artificial neural networks. In recent years, it has been increasingly used in brain research to analyze large, complex data sets, such as those generated by biomedical devices [29]. It has been applied to health data such as magnetic resonance imaging (MRI) [30], electrocardiograms (ECG) [31], and electroencephalograms (EEG) [32,33], and to decode brain signals for BCI control [34,35]. Furthermore, analyzing neuroimaging data and identifying patterns associated with specific diseases can support early diagnosis and personalized treatment.

Long short-term memory (LSTM) is a recurrent neural network (RNN) architecture designed to overcome the limitations of traditional RNNs in handling long-term dependencies in sequential data [36]. It has been widely used for time-series classification and forecasting [37,38,39,40]. Its ability to selectively remember and forget information over time is vital for accurate forecasting.

MODWT-LSTM-based prediction has shown excellent results for periodic data such as water levels [41], ammonia nitrogen [42], and weather [43]. In brain research, the MODWT has been applied as a preprocessing method for EEG-based seizure detection [28,44], Alzheimer’s disease diagnosis [45], and resting-state network analysis of fMRI [46]. Because brain signals are measured as time series, LSTM is also actively used for brain signal classification [47,48]. However, although many noise components in fNIRS signals are periodic, to our knowledge this is the first study to predict them.

In this study, one thousand synthetic datasets are generated, each assuming a 600-s rest followed by a 40-s task block. Each dataset is decomposed into eight levels by the MODWT. Five wavelet components containing the low-frequency content of the 600-s data are used to train an LSTM network. The trained LSTM networks then predict these low-frequency oscillations over the next 30 s, and the predicted signals are subtracted from the task-period data. For validation, the predicted and original signals are compared by calculating mean absolute errors (MAEs) and root mean square errors (RMSEs). Finally, the proposed method is demonstrated on actual fNIRS data from humans.

2. Method Development

This section describes the development of the proposed method in the following five subsections. The first subsection describes how the synthetic fNIRS data are generated. The second and third subsections explain the operation of the MODWT and LSTM, respectively. The fourth subsection describes the validation of the proposed method. The last subsection presents the results of the data analysis.

2.1. Synthetic fNIRS Data Generation

One thousand synthetic datasets were generated following the method of Gemignani et al. [49] with a sampling frequency of 8.138 Hz. For each dataset, 30th-order autoregressive noise was added as baseline noise [50]. The synthetic physiological noises have frequencies of 1 ± 0.1 Hz, 0.25 ± 0.01 Hz, and 0.1 ± 0.01 Hz for the cardiac, respiratory, and Mayer waves, respectively. In addition, a sine wave with a frequency of 0.01 ± 0.001 Hz was generated as the very low-frequency component [11]. The amplitudes of the five signals composing each synthetic fNIRS signal were set randomly in the range of 0.01 to 0.03. The resting period was set to 10 min because the low-frequency noise of interest lies near 0.01 Hz (a period of 100 s), so the resting state spans about six of its cycles.
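The generation step can be sketched as follows. This is a minimal sketch under stated assumptions, not the procedure of [49]: the random phases, the uniform sampling of frequencies and amplitudes within the stated ranges, treating the AR amplitude as its standard deviation, and the hypothetical stable AR(30) coefficients (built from random roots inside the unit circle, because the fitted coefficients are not given here) are all assumptions. The helper names `stable_ar_coeffs`, `ar_noise`, and `synthetic_hbo` are illustrative.

```python
import numpy as np

FS = 8.138  # sampling frequency (Hz) stated in the text


def stable_ar_coeffs(order, rng):
    """Hypothetical stable AR coefficients [1, a1, ..., a_p] from random roots inside the unit circle."""
    half = order // 2
    radii = rng.uniform(0.3, 0.9, half)            # |root| < 1 guarantees stability
    angles = rng.uniform(0.0, np.pi, half)
    roots = radii * np.exp(1j * angles)
    roots = np.concatenate([roots, roots.conj()])  # conjugate pairs give real coefficients
    return np.real(np.poly(roots))


def ar_noise(n, order, rng, std):
    """Simulate x_t = e_t - a1*x_{t-1} - ... - a_p*x_{t-p}, rescaled to the given std."""
    a = stable_ar_coeffs(order, rng)
    e = rng.standard_normal(n + order)
    x = np.zeros(n + order)
    for t in range(order, n + order):
        past = x[t - order:t][::-1]                # [x_{t-1}, ..., x_{t-p}]
        x[t] = e[t] - np.dot(a[1:], past)
    x = x[order:]
    return std * x / np.std(x)


def synthetic_hbo(duration_s=600.0, fs=FS, seed=None):
    """One synthetic resting-state HbO trace: four sinusoids plus AR(30) noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(round(duration_s * fs))) / fs
    # (centre frequency, half-width) in Hz: cardiac, respiratory, Mayer, very low frequency
    bands = [(1.0, 0.1), (0.25, 0.01), (0.1, 0.01), (0.01, 0.001)]
    x = np.zeros_like(t)
    for f0, tol in bands:
        f = rng.uniform(f0 - tol, f0 + tol)
        amp = rng.uniform(0.01, 0.03)
        phase = rng.uniform(0.0, 2.0 * np.pi)      # assumption: random phase
        x += amp * np.sin(2.0 * np.pi * f * t + phase)
    x += ar_noise(t.size, 30, rng, std=rng.uniform(0.01, 0.03))
    return t, x - x[0]                             # start every trace at zero
```

For example, `synthetic_hbo(seed=0)` returns 4883 samples (600 s at 8.138 Hz) starting at zero.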

For only five hundred of the datasets, a dHRF based on a double-gamma function, consisting of 20 s of task followed by 20 s of rest, was added after the 10-min resting state. The amplitude of this signal was randomized between 0.1 and 0.35 before it was added to the previously generated noise. All signals were offset to zero at their starting point before processing. Figure 1 depicts the synthetic signals for the various noise components and the resulting assumed HbO signal.
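The dHRF construction can be sketched as a boxcar (20 s task, 20 s rest, placed after 600 s of rest) convolved with a double-gamma kernel. The kernel parameters below (peak shape 6, undershoot shape 16, undershoot ratio 1/6, 32-s kernel) are the commonly used canonical values and are an assumption, since the text does not list them; `double_gamma_hrf` and `dhrf` are hypothetical names, and the response is normalized to a unit peak before scaling by the random amplitude.

```python
import math

import numpy as np

FS = 8.138  # Hz


def double_gamma_hrf(fs=FS, length_s=32.0, a1=6.0, a2=16.0, c=1 / 6):
    """Canonical-style double-gamma kernel (assumed parameters): peak minus scaled undershoot."""
    t = np.arange(0, length_s, 1 / fs)
    g1 = t ** (a1 - 1) * np.exp(-t) / math.gamma(a1)
    g2 = t ** (a2 - 1) * np.exp(-t) / math.gamma(a2)
    return g1 - c * g2


def dhrf(rest_s=600.0, task_s=20.0, post_s=20.0, amplitude=0.2, fs=FS):
    """Boxcar task regressor convolved with the kernel, scaled so its peak equals amplitude."""
    n_rest = int(round(rest_s * fs))
    n_task = int(round(task_s * fs))
    n_total = int(round((rest_s + task_s + post_s) * fs))
    boxcar = np.zeros(n_total)
    boxcar[n_rest:n_rest + n_task] = 1.0
    resp = np.convolve(boxcar, double_gamma_hrf(fs))[:n_total]  # causal: zero before task onset
    return amplitude * resp / resp.max()
```

With these parameters the block response peaks about 12 s after task onset, which is consistent with the observation in the Discussion that the dHRF takes more than 10 s to reach its maximum.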

Figure 1.

A synthetic HbO signal is made of six components.

2.2. Maximal Overlap Discrete Wavelet Transform

The discrete wavelet transform (DWT) is a signal processing technique that decomposes a signal into different frequency components at multiple levels of resolution. The DWT works by convolving the signal with a set of filters, called wavelet filters, which capture different frequency bands. The signal is decomposed into approximation and detail coefficients [19], which represent low-frequency components and high-frequency components, respectively. This decomposition is applied recursively to the approximation coefficients to obtain a multi-resolution representation. However, the DWT has several drawbacks, including the introduction of boundary artifacts due to the filtering process, the lack of shift invariance in the decomposition, and the potential loss of fine detail at higher decomposition levels.

Zhang et al. (2018) [51] applied the DWT to forecasting vehicle emissions and compared four models: the autoregressive integrated moving average (ARIMA) model, LSTM, DWT-ARIMA, and DWT-LSTM. They reported that adopting the DWT improved performance overall. In pairwise comparisons, LSTM outperformed ARIMA, DWT-ARIMA outperformed ARIMA, DWT-LSTM outperformed LSTM, and DWT-LSTM outperformed DWT-ARIMA, giving the best forecasts of all four models.

The MODWT is a mathematical technique that transforms a signal into multilevel wavelet and scaling coefficients. It has several advantages over the DWT. For example, the MODWT is well defined for signals of arbitrary length, whereas the full DWT requires a signal length that is a power of two.

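For a discrete signal $X = \{X_t : t = 0, 1, \dots, N-1\}$, the $j$th-level wavelet and scaling coefficients of the MODWT are defined as follows:

$$\widetilde{W}_{j,t} = \sum_{n=0}^{N-1} \widetilde{h}^{\circ}_{j,n} X_{(t-n) \bmod N}, \qquad \widetilde{V}_{j,t} = \sum_{n=0}^{N-1} \widetilde{g}^{\circ}_{j,n} X_{(t-n) \bmod N},$$

$$\widetilde{h}_j = \frac{h_j}{2^{j/2}}, \qquad \widetilde{g}_j = \frac{g_j}{2^{j/2}}, \qquad j = 1, \dots, L,$$

where $\widetilde{W}_{j,t}$ is the wavelet coefficient of the $t$th element at the $j$th level of the MODWT; $\widetilde{V}_{j,t}$ is the scaling coefficient of the $t$th element at the $j$th level; $\widetilde{h}^{\circ}_{j}$ and $\widetilde{g}^{\circ}_{j}$ are the $j$th-level high- and low-pass filters (wavelet and scaling filters) of the MODWT obtained by periodizing $\widetilde{h}_j$ and $\widetilde{g}_j$, respectively, to length $N$; $\widetilde{h}_j$ and $\widetilde{g}_j$ are the $j$th-level MODWT high- and low-pass filters; $h_j$ and $g_j$ are the $j$th-level DWT high- and low-pass filters; and $L$ is the maximum decomposition level. As in the DWT, the filters are determined by the mother wavelet [52]. The MODWT-based multiresolution analysis is expressed as follows:

$$X = \sum_{j=1}^{L} \widetilde{D}_j + \widetilde{S}_L,$$

where $\widetilde{S}_L$ is the approximation component and $\widetilde{D}_j$ $(j = 1, \dots, L)$ are the detail components. Figure 2 shows a scheme of the MODWT-based multiresolution analysis.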
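The MODWT coefficients described in this subsection can be computed with the standard pyramid algorithm, which applies the rescaled filters $h/\sqrt{2}$ and $g/\sqrt{2}$ to the previous level's scaling coefficients with a circular shift of $2^{j-1}$ samples per filter tap. The sketch below assumes the Haar filters purely to keep the filter coefficients short; the study itself uses Sym4. The name `modwt` is hypothetical, and the function returns coefficients $\widetilde{W}_j$ and $\widetilde{V}_L$, not the multiresolution components $\widetilde{D}_j$ and $\widetilde{S}_L$ (those would additionally require the inverse transform).

```python
import numpy as np

# Assumption: Haar filters for brevity (the study uses Sym4).
H_DWT = np.array([1.0, -1.0]) / np.sqrt(2.0)  # DWT wavelet (high-pass) filter h
G_DWT = np.array([1.0, 1.0]) / np.sqrt(2.0)   # DWT scaling (low-pass) filter g


def modwt(x, level, h=H_DWT, g=G_DWT):
    """MODWT via the pyramid algorithm with circular (periodic) boundary handling.

    Returns ([W_1, ..., W_level], V_level); every array has the same length as x.
    """
    h_t = h / np.sqrt(2.0)  # MODWT filters are the DWT filters rescaled by 1/sqrt(2)
    g_t = g / np.sqrt(2.0)
    v = np.asarray(x, dtype=float)
    wavelet_coeffs = []
    for j in range(1, level + 1):
        w_j = np.zeros_like(v)
        v_j = np.zeros_like(v)
        for l in range(h_t.size):
            # np.roll(v, s)[t] == v[(t - s) % N]: the filter tap is spread by 2**(j-1)
            shifted = np.roll(v, 2 ** (j - 1) * l)
            w_j += h_t[l] * shifted
            v_j += g_t[l] * shifted
        wavelet_coeffs.append(w_j)
        v = v_j
    return wavelet_coeffs, v
```

Because no downsampling occurs, the transform works for a length such as 488 that is not a power of two, and the total energy of the coefficients equals the energy of the input signal.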

Figure 2.

Schematic of the MODWT decomposition.

In this study, Sym4 was selected as the mother wavelet because its shape resembles the canonical hemodynamic response function. For a signal of N samples, the maximum decomposition level is the largest integer not exceeding log2(N). The shortest resting-state segment considered here is 60 s, giving N = 60 s × 8.138 Hz ≈ 488 samples and log2(488) ≈ 8.93; the decomposition level was therefore set to 8. The eight levels yield nine components (eight detail components and one approximation component), of which only the five low-frequency components are predicted.
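The level selection and the frequency range each component roughly covers can be checked with a few lines of arithmetic. The band edges below are the idealized dyadic ranges (detail level j spans fs/2^(j+1) to fs/2^j, and the final approximation covers everything below the last detail band); real wavelet filters are not ideal band-pass filters, so the effective ranges overlap and differ somewhat. `max_level` and `nominal_bands` are hypothetical helper names.

```python
import math

FS = 8.138  # Hz


def max_level(n_samples):
    """Largest integer J with 2**J <= n_samples, i.e. floor(log2(N))."""
    return int(math.floor(math.log2(n_samples)))


def nominal_bands(level, fs=FS):
    """Idealized (low, high) band in Hz for components 1..level+1.

    Components 1..level are the detail levels; component level+1 is the approximation.
    """
    bands = [(fs / 2 ** (j + 1), fs / 2 ** j) for j in range(1, level + 1)]
    bands.append((0.0, fs / 2 ** (level + 1)))
    return bands


n = round(60 * FS)           # shortest resting-state segment: 488 samples
J = max_level(n)             # 8 decomposition levels, hence 9 components
for k, (lo, hi) in enumerate(nominal_bands(J), start=1):
    print(f"component {k}: {lo:.4f} to {hi:.4f} Hz")
```

Under this idealization, the eighth component spans roughly 0.016 to 0.032 Hz and therefore contains the 0.025-Hz task frequency.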

2.3. Long Short-Term Memory

LSTM is an RNN architecture that addresses the vanishing gradient problem and can capture long-term dependencies in sequential data. Its core component is the memory cell, which stores and updates information over time and can hold it for long periods. Memory cells selectively forget or retain information based on the current input and past states, which allows the network to learn important information while ignoring irrelevant or redundant information. An LSTM network has three gates (input, forget, and output) that control the flow of information into and out of the memory cells: the input gate determines which information is stored in the memory cell, the forget gate determines which information is discarded, and the output gate controls the output of the memory cell (Figure 3) [53].

Figure 3.

A structure of LSTM layers.

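The LSTM model is represented by the following equations:

$$
\begin{aligned}
i_t &= \sigma\left(W_i x_t + R_i h_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_f x_t + R_f h_{t-1} + b_f\right) \\
g_t &= \tanh\left(W_g x_t + R_g h_{t-1} + b_g\right) \\
o_t &= \sigma\left(W_o x_t + R_o h_{t-1} + b_o\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

where $c_{t-1}$ and $c_t$ are the cell states at $t - 1$ and $t$; $i_t$, $f_t$, and $o_t$ are the input, forget, and output gate activations; $g_t$ is the candidate cell state; $x_t$ is the input and $h_t$ is the hidden state (output); and, at each gate, $b$ are the bias vectors, $W$ are the input weight matrices, and $R$ are the recurrent weight matrices. $\sigma$ is the sigmoid function, $\tanh$ is the hyperbolic tangent activation function, and $\odot$ denotes the element-wise (Hadamard) product of two vectors.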
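A single time step of these equations can be written directly in NumPy. This is an illustrative sketch, not the trained network used in the study: the function name `lstm_step` and the dictionary layout of the weights are hypothetical. In a stacked network such as the three-layer [128, 64, 32] model used here, the hidden state sequence of one layer would serve as the input sequence of the next.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, R, b):
    """One LSTM time step.

    W, R, b are dicts keyed by gate ('i', 'f', 'g', 'o') holding input weights
    (H x D), recurrent weights (H x H), and biases (H,).
    """
    pre = {k: W[k] @ x_t + R[k] @ h_prev + b[k] for k in "ifgo"}
    i_t = sigmoid(pre["i"])          # input gate
    f_t = sigmoid(pre["f"])          # forget gate
    g_t = np.tanh(pre["g"])          # candidate cell state
    o_t = sigmoid(pre["o"])          # output gate
    c_t = f_t * c_prev + i_t * g_t   # element-wise (Hadamard) products
    h_t = o_t * np.tanh(c_t)
    return h_t, c_t
```

With all weights and biases set to zero, every gate equals 0.5 and the candidate is zero, so the step simply halves the previous cell state, which is a quick sanity check of the gate wiring.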

In this study, three LSTM layers with [128, 64, 32] hidden units were used, and dropout layers with a probability of 0.2 were placed between the LSTM layers to prevent overfitting (Figure 4). The network was trained using the Adam optimizer with a maximum of 100 epochs and a mini-batch size of 128. All data were normalized before training.

Figure 4.

Diagram of the proposed time-series prediction based on MODWT-LSTM.

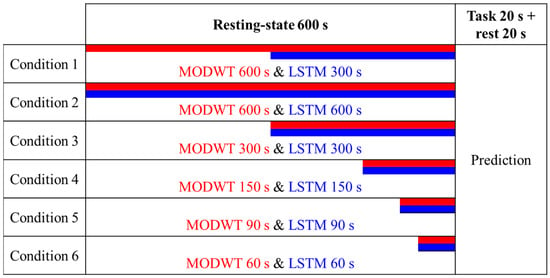

For the synthetic data, nine hundred of the one thousand datasets were randomly selected to train the network, and the remaining one hundred were used for testing. Training was performed under five data-length conditions ([600 s, 300 s, 150 s, 90 s, 60 s] × sampling rate (8.138 Hz) = [4883, 2441, 1221, 732, 488] samples), and the network then predicted the next 244 samples (30 s × 8.138 Hz).
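The text does not state how the 244-sample forecast is produced. One common option is recursive multi-step forecasting, in which each one-step prediction is fed back as input. The sketch below illustrates that loop under this assumption, with a least-squares autoregressive (AR) model deliberately swapped in for the LSTM so that the example stays small and deterministic; `fit_ar` and `forecast` are hypothetical helpers, not part of the study's code.

```python
import numpy as np

FS = 8.138
N_TRAIN = round(600 * FS)   # 4883 samples (600-s condition)
N_PRED = round(30 * FS)     # 244 samples predicted


def fit_ar(x, order):
    """Least-squares AR(order) fit x_t ~ sum_k a_k x_{t-k} (stand-in for the LSTM)."""
    # Column k holds x_{t-k-1} for t = order, ..., len(x) - 1
    X = np.column_stack([x[order - k - 1: len(x) - k - 1] for k in range(order)])
    y = x[order:]
    coeffs, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coeffs


def forecast(history, coeffs, n_steps):
    """Recursive multi-step forecast: every prediction becomes input for the next step."""
    order = coeffs.size
    buf = list(history[-order:])
    preds = []
    for _ in range(n_steps):
        lags = buf[::-1][:order]           # [x_{t-1}, x_{t-2}, ..., x_{t-order}]
        nxt = float(np.dot(coeffs, lags))
        preds.append(nxt)
        buf.append(nxt)
    return np.array(preds)
```

For a pure 0.025-Hz sinusoid, an AR(2) model continues the signal almost exactly over all 244 steps; real wavelet components are not pure sinusoids, which is why a more flexible predictor such as the LSTM is used in the study.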

For the actual fNIRS data, a leave-one-subject-out scheme was used so that data from the same person never appeared in both the training and test sets. For example, to train an LSTM network to predict the 48 channels of one subject, a total of 432 channels (nine subjects × 48 channels) were used. Because each recording was only 600 s long, the first 570 s were used for training, and the trained LSTM network predicted the remaining 30 s.
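The leave-one-subject-out split can be sketched as follows, assuming the recordings are stored as an array of shape (subjects, channels, samples); this layout and the generator name `loso_splits` are hypothetical. The sample counts also confirm that 600 s minus 570 s at 8.138 Hz leaves the same 244-sample prediction horizon as in the synthetic experiments.

```python
import numpy as np

FS = 8.138                      # Hz
N_TOTAL = round(600 * FS)       # 4883 samples per 10-min recording
N_FIT = round(570 * FS)         # 4639 samples used for training
N_PRED = N_TOTAL - N_FIT        # 244 samples (about 30 s) to predict


def loso_splits(data):
    """Leave-one-subject-out splits.

    data: array of shape (n_subjects, n_channels, n_samples).
    Yields (held_out_subject, train, test), where train stacks every channel of
    the remaining subjects into shape ((n_subjects - 1) * n_channels, n_samples).
    """
    n_subjects, _, n_samples = data.shape
    for s in range(n_subjects):
        train = np.delete(data, s, axis=0).reshape(-1, n_samples)
        test = data[s]
        yield s, train, test
```

With ten subjects and 48 channels, each split trains on 432 channels, using their first `N_FIT` samples, and evaluates the predicted last `N_PRED` samples of the held-out subject's 48 channels.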

2.4. Validation

To evaluate the accuracy of the signals predicted by the LSTM, the mean absolute error (MAE) and root mean squared error (RMSE) between the predicted and original signals were calculated separately for the signals with and without dHRF. To find the resting-state length required for optimal prediction accuracy, the data were segmented, decomposed, and predicted as shown in Figure 5. MAE and RMSE are calculated as follows:

$$\mathrm{MAE} = \frac{1}{n}\sum_{t=1}^{n} \left| y_t - \hat{y}_t \right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n} \left( y_t - \hat{y}_t \right)^2},$$

where $y_t$ is the original signal, $\hat{y}_t$ is the predicted signal, $t$ is the time step, and $n$ is the number of data points. The MAEs and RMSEs of the signals with and without dHRF were compared using a two-sample t-test.
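The two error measures and the test statistic can be computed as follows. The equal-variance (pooled) form of the two-sample t statistic is an assumption, since the text does not say which variant was used; `scipy.stats.ttest_ind(a, b)` with its default `equal_var=True` returns the same statistic together with a p-value. The function names are illustrative.

```python
import numpy as np


def mae(y, y_hat):
    """Mean absolute error between original and predicted signals."""
    return float(np.mean(np.abs(np.asarray(y, float) - np.asarray(y_hat, float))))


def rmse(y, y_hat):
    """Root mean squared error between original and predicted signals."""
    return float(np.sqrt(np.mean((np.asarray(y, float) - np.asarray(y_hat, float)) ** 2)))


def pooled_t(a, b):
    """Student's two-sample t statistic with pooled variance (equal-variance assumption)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = a.size, b.size
    sp2 = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return float((a.mean() - b.mean()) / np.sqrt(sp2 * (1 / na + 1 / nb)))
```

In this setting, `a` and `b` would hold the per-dataset MAEs (or RMSEs) of one wavelet for the test signals with and without dHRF.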

Figure 5.

Data segmentation for validation (red: MODWT data length, blue: LSTM data length for training).

2.5. Synthetic Data Analysis

The synthetic data were decomposed into nine components using the MODWT, and the fifth through ninth components were used for prediction. The frequency range of the fifth wavelet was 0.13–0.26 Hz, the sixth 0.067–0.13 Hz, the seventh 0.035–0.067 Hz, and the eighth 0.017–0.035 Hz, while the ninth contained signals below 0.017 Hz. Figure 6 shows the prediction results for the signals with and without dHRF. The signal with dHRF showed pronounced fluctuations during the task period in the low-frequency components of Wavelets 6–9, which the predicted signal did not follow.

Figure 6.

Prediction results from synthetic data: (a) Without dHRF (MODWT 300 s, LSTM 300 s), (b) with dHRF (MODWT 300 s, LSTM 300 s), (c) without dHRF (MODWT 600 s, LSTM 600 s), and (d) with dHRF (MODWT 600 s, LSTM 600 s) (blue line: training data, red line: MODWT results including test time, orange dotted line: predicted result).

Figure 7 and Table 1 show the calculated MAEs and RMSEs. In all conditions, the MAEs and RMSEs of Wavelets 6–9 differed significantly between the signals with and without dHRF. For Wavelet 5, the only statistically significant difference between the signals with and without dHRF was in the RMSE when the MODWT-LSTM analysis was performed with 300 s of data (Figure 7c). To compare the prediction results across conditions, the MAEs and RMSEs for all conditions are shown in Figure 8. The error of the signal with dHRF was largest in Condition 2 (MODWT-LSTM with 600 s of data) and smallest in Condition 6 (MODWT-LSTM with 60 s of data). In particular, the difference in prediction accuracy between the signals with and without dHRF was largest for Wavelet 8 in all conditions.

Figure 7.

MAEs and RMSEs for wavelets 5–9: (a) MODWT 600 s and LSTM training 300 s, (b) MODWT 600 s and LSTM training 600 s, (c) MODWT 300 s and LSTM training 300 s, (d) MODWT 150 s and LSTM training 150 s, (e) MODWT 90 s and LSTM training 90 s, and (f) MODWT 60 s and LSTM training 60 s (* p < 0.05, ** p < 0.01).

Table 1.

Mean and standard deviation of MAEs and RMSEs for synthetic data (* p < 0.05, ** p < 0.01).

Figure 8.

Comparison of MAE and RMSE for all data segmentations: (1) MODWT 600 s and LSTM training 300 s, (2) MODWT 600 s and LSTM training 600 s, (3) MODWT 300 s and LSTM training 300 s, (4) MODWT 150 s and LSTM training 150 s, (5) MODWT 90 s and LSTM training 90 s, and (6) MODWT 60 s and LSTM training 60 s.

For the 600-s data, the MAEs and RMSEs of the predictions were calculated over windows of 1 s, 3 s, 5 s, 10 s, 15 s, and 30 s (Figure 9). In all cases, Wavelets 6–9 showed statistically significant differences between the signals with and without dHRF. For Wavelet 7, the difference was largest at 1 s and decreased thereafter, whereas for Wavelet 8, the difference began to grow at 10 s and was largest at 15 s.
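The window-wise evaluation can be sketched as below, assuming each window is measured from the start of the prediction horizon (so the 15-s window covers the first 15 s of the 30-s forecast); this interpretation and the function name `windowed_errors` are assumptions.

```python
import numpy as np

FS = 8.138
WINDOWS_S = (1, 3, 5, 10, 15, 30)


def windowed_errors(y, y_hat, fs=FS, windows_s=WINDOWS_S):
    """Map each window length w (s) to (MAE, RMSE) over the first w seconds of the forecast."""
    err = np.asarray(y, float) - np.asarray(y_hat, float)
    out = {}
    for w in windows_s:
        n = int(round(w * fs))  # e.g. 15 s -> 122 samples, 30 s -> 244 samples
        e = err[:n]
        out[w] = (float(np.mean(np.abs(e))), float(np.sqrt(np.mean(e ** 2))))
    return out
```

Because the windows are cumulative, an error that appears only after about 10 s leaves the short windows unchanged and shows up from the 15-s window onward, which is the kind of pattern described for Wavelet 8.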

Figure 9.

MAEs and RMSEs of 600 s data MODWT-LSTM for each wavelet: (a) 1 s, (b) 3 s, (c) 5 s, (d) 10 s, (e) 15 s, and (f) 30 s (* p < 0.05, ** p < 0.01).

3. Human Data Application

In this section, actual fNIRS data from human subjects were used to validate the proposed method. The actual fNIRS data were obtained in the authors’ previous study, but only resting state data were used [2]. In the first subsection, the fNIRS data acquisition is briefly described. The second subsection describes the results of the application of the proposed method.

3.1. fNIRS Data Acquisition

Ten-minute resting-state recordings were selected from ten healthy subjects (five males and five females; age: 68 ± 5.95 years). Prior to the experiment, each subject was fully informed about the purpose of the study, and written informed consent was obtained from each subject. The entire experiment was approved by the ethics committee of Pusan National University Yangsan Hospital (Institutional Review Board approval number: PNUYH-03-2018-003).

Hemodynamic responses in the prefrontal cortex (PFC) were measured with a portable fNIRS device (NIRSIT; OBELAB, Seoul, Republic of Korea) equipped with 24 sources (laser diodes) and 32 detectors (a total of 204 channels, including short-separation channels) at a sampling rate of 8.138 Hz. NIRSIT uses two wavelengths of near-infrared light (780 nm and 850 nm) to measure concentration changes of HbO and HbR. Of the 204 channels, only the 48 channels with a 3-cm source-detector distance were used in this study.

3.2. Human Data Analysis

The prediction results for the actual HbO data are shown in Figure 10. Unlike in the synthetic data, the amplitude of the ninth wavelet was much lower than that of the other wavelets. A spike, presumably a motion artifact, appeared in all wavelets at one time point. The MODWT results for the 570-s and 600-s data differed at both ends of the wavelets.

Figure 10.

Prediction results of MODWT-LSTM for actual HbO data (blue line: training data, red line: MODWT results including test time points, orange dotted line: predicted result).

Table 2 shows the mean and standard deviation of the MAEs and RMSEs of the predictions for the real HbO data, and the mean values are plotted in Figure 11 for easier comparison. The ninth wavelet had the smallest error but the largest standard deviation in all cases. The errors of the fifth and sixth wavelets increased up to 3 s and 5 s, respectively, and then decreased. The errors of the seventh and eighth wavelets grew as the time window increased.

Table 2.

Mean and standard deviation of MAEs and RMSEs for real data.

Figure 11.

Averaged MAEs and RMSEs of real HbO data MODWT-LSTM for each wavelet by predicted time windows.

4. Discussion

In fNIRS studies, cognitive tasks are used to evaluate cognitive abilities such as working memory, conflict processing, language processing, emotional processing, and memory encoding and retrieval [54]. For example, the N-back, Stroop, and verbal fluency tasks evaluate working memory, conflict processing, and language processing, respectively. Such cognitive tasks are also often used to detect brain diseases such as schizophrenia, depression, cognitive impairment, and attention-deficit hyperactivity disorder [55].

Cortical activations caused by cognitive tasks are investigated using t-maps, connectivity maps, or features extracted from HbO signals [2,3]. A t-map is reconstructed from the t-values of the GLM method, which indicate the weight of the dHRF at each channel. A connectivity map shows the correlation coefficients between pairs of channels, reflecting how those channels are interrelated. Hemodynamic features such as the mean, slope, and peak value have also been used to diagnose brain diseases. Cognitive task analysis can identify activated and deactivated regions as well as differences between healthy individuals and patients.

The proposed method was validated in two ways: (i) by comparing synthetic data with and without dHRF and (ii) by predicting human resting-state data. In the synthetic data, the prediction errors differed significantly between the signals with and without dHRF. The prediction errors for the human resting-state data were consistent with those for the synthetic data without dHRF. This agreement suggests that the proposed method can also differentiate task-related responses in human data.

Since the hemodynamic signal in this study consisted of 20 s of task and 20 s of rest, giving a frequency of 0.025 Hz, the eighth wavelet was expected to show a significant difference between the signals with and without dHRF. As shown in Figure 6, the wavelet decomposition of the signal with dHRF differed from that of the signal without dHRF in the sixth through ninth wavelets. As expected, a statistically significant difference was found in the eighth wavelet, but the sixth, seventh, and ninth wavelets also showed significant differences. This is likely because the MODWT spreads the dHRF across multiple decomposition levels.
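One reason a 0.025-Hz design reaches several wavelet levels is that a boxcar-convolved dHRF is not a pure sinusoid. The sketch below computes the spectrum of a periodic 20-s on / 20-s off response and shows energy at the 0.025-Hz fundamental and at odd harmonics such as 0.075 Hz, while the even harmonic at 0.05 Hz vanishes. It assumes a repeated block design (the synthetic data contain a single block, which spreads energy even more broadly), assumed canonical double-gamma parameters, and a 10-Hz illustrative sampling rate chosen so that one 40-s cycle is exactly 400 samples and spectral leakage is avoided; all function names are hypothetical.

```python
import math

import numpy as np

FS_DEMO = 10.0  # Hz; illustrative rate so one 40-s cycle is exactly 400 samples


def double_gamma_hrf(fs, length_s=32.0, a1=6.0, a2=16.0, c=1 / 6):
    """Assumed canonical-style double-gamma kernel."""
    t = np.arange(0, length_s, 1 / fs)
    return (t ** (a1 - 1) * np.exp(-t) / math.gamma(a1)
            - c * t ** (a2 - 1) * np.exp(-t) / math.gamma(a2))


def periodic_dhrf_spectrum(task_s=20.0, rest_s=20.0, n_cycles=16, fs=FS_DEMO):
    """Magnitude spectrum of the steady-state response to a repeated block design."""
    period = int(round((task_s + rest_s) * fs))
    n_task = int(round(task_s * fs))
    cycle = np.r_[np.ones(n_task), np.zeros(period - n_task)]
    boxcar = np.tile(cycle, n_cycles)
    kernel = np.zeros(boxcar.size)
    h = double_gamma_hrf(fs)
    kernel[:h.size] = h
    # Multiplying spectra is circular convolution, i.e. the periodic steady state
    spec = np.fft.rfft(boxcar) * np.fft.rfft(kernel)
    freqs = np.fft.rfftfreq(boxcar.size, d=1 / fs)
    return freqs, np.abs(spec)


freqs, mag = periodic_dhrf_spectrum()
for f0 in (0.025, 0.05, 0.075):
    k = int(np.argmin(np.abs(freqs - f0)))
    print(f"{f0:.3f} Hz: relative magnitude {mag[k] / mag[1:].max():.3g}")
```

Under the idealized dyadic bands at 8.138 Hz, the 0.075-Hz harmonic falls in the sixth-level band rather than the eighth, which is consistent with the dHRF affecting more than one wavelet.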

The LSTM results show that the difference between the signals with and without dHRF becomes more pronounced as the number of training data points increases (Figure 7a,b). In addition, the fewer the training data points, the smaller the prediction error for the signal with dHRF and the larger the prediction error for the signal without dHRF. This is not surprising, since sufficient data are required to train an LSTM effectively.

To investigate whether the occurrence of hemodynamic signals can be detected early, MAEs and RMSEs were computed over prediction windows of 1 s, 3 s, 5 s, 10 s, 15 s, and 30 s. The difference in error between the seventh wavelet with and without dHRF was already large in the earliest windows. The difference for the eighth wavelet was most pronounced at 15 s, presumably because the dHRF takes more than 10 s to rise to its maximum.

When the proposed method was applied to real data, the errors were similar to those of the synthetic data without dHRF. The ninth wavelet had the lowest error, which appears to be due to its low signal amplitude. Initially, the higher-frequency wavelets produced relatively larger errors, but the opposite was true as the prediction time increased. This suggests that the MODWT results change with the data length, particularly near both ends of the data.

Methods to estimate the hemodynamic response and remove noise from fNIRS signals include Kalman filtering [56], Bayesian filtering [57], block averaging [58], general linear models [59], and adaptive filtering [14,60,61]. In addition, initial-dip detection has been studied for early detection of hemodynamic responses [62,63]. However, these methods rely heavily on the desired hemodynamic response function as a reference signal (Table 3). The reference hemodynamic response is modeled using gamma functions [64], the balloon model [65], the finite element method [66], the state-space method [67,68], etc. Such models are unsuitable when the expected response is unknown because they depend on the brain region and the task being measured. In contrast, the proposed method requires no reference signal and can be applied without external devices.

Table 3.

Comparison with the existing methods (adaptive filtering and general linear model) and the proposed method.

5. Conclusions

The following three implications are made:

(i) Alleviating the dHRF’s trap: In conventional methods (e.g., the general linear model [59] and the recursive estimation method [60]), brain signals are identified by comparing HbO signals with a dHRF; if the correlation coefficient between the two signals is high, the measured HbO response is attributed to the task. However, the dHRF, computed by convolving a gamma function with the task period, contains multiple frequencies rather than a single one. For example, for a 20-s task followed by a 20-s rest, the dHRF has a fundamental frequency of 0.025 Hz (= 1/40 s), and all other components are treated as noise. The synthetic data analysis also reveals these multiple frequencies: the added 0.025-Hz dHRF affected neighboring frequency bands (Figure 6). Therefore, if brain signals are identified using only the dHRF, neighboring signals, which could be noise, are unintentionally included. The proposed method can alleviate this trap.

(ii) Handling an unknown task period: In neuroscience, fNIRS has been used to identify brain regions associated with specific tasks and to understand how neural networks function. Regular examinations in daily life are essential for the early detection of cognitive decline due to brain disease or aging, and research on classifying cognitive decline and diagnosing brain disease using fNIRS is actively being conducted. However, classification criteria are difficult to establish because hemodynamic signals vary with factors such as age and gender. Moreover, classification requires comparing behavioral data with fNIRS signals, and the duration of the cognitive function tests used in neuropsychological assessment must be designed in advance. Thus, the proposed method can be used when the task period to be observed is unknown or very long.

(iii) Starting-time estimation for passive BCI: Passive BCIs have recently become important in applications such as fault-free automated driving and piloting. In such applications, the starting time of the brain signal has to be identified. A moving-window approach can be adopted for this purpose: if the prediction error becomes large as the window moves, the time of that large error can be taken as the starting time of a passive brain signal, and a BCI command can be generated.
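The moving-window idea in (iii) can be sketched as a trailing-window RMSE of the residual between the measured signal and the predicted noise, with the onset reported at the first window whose error exceeds a threshold. The 2-s window, the fixed threshold, and the names `moving_rmse` and `detect_onset` are hypothetical choices for illustration; in practice the threshold would have to be calibrated, for example from resting-state prediction errors.

```python
import numpy as np

FS = 8.138  # Hz


def moving_rmse(residual, n_win):
    """RMSE over a trailing window of n_win samples ending at each sample.

    Samples before the first full window are set to 0.0.
    """
    sq = np.asarray(residual, dtype=float) ** 2
    csum = np.concatenate([[0.0], np.cumsum(sq)])
    out = np.zeros(sq.size)
    window_sums = np.maximum(csum[n_win:] - csum[:-n_win], 0.0)  # guard against round-off
    out[n_win - 1:] = np.sqrt(window_sums / n_win)
    return out


def detect_onset(measured, predicted, threshold, fs=FS, window_s=2.0):
    """Time (s) at which the windowed prediction error first exceeds threshold, or None."""
    n_win = max(1, int(round(window_s * fs)))
    err = moving_rmse(np.asarray(measured) - np.asarray(predicted), n_win)
    hits = np.flatnonzero(err > threshold)
    return None if hits.size == 0 else hits[0] / fs
```

The reported time marks the end of the first window that exceeds the threshold, so it lags the true onset by a fraction of the window length; shorter windows reduce the lag but make the detector more sensitive to spikes such as motion artifacts.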

By predicting the subsequent signal, the proposed method can overcome resting-state variability, which differs from person to person. The predicted signal is subtracted from the measured signal, and the remaining signal is analyzed for brain activity. Although the proposed method has limitations, such as the large volume of training data and the computation time required to train the model initially, it is expected to play a significant role in improving the temporal resolution of fNIRS in the future.

Author Contributions

Conceptualization, S.-H.Y., K.-S.H.; methodology, S.-H.Y.; software, S.-H.Y.; formal analysis, S.-H.Y., K.-S.H.; resources, G.H.; writing—original draft preparation, S.-H.Y.; writing—review and editing, K.-S.H.; visualization, S.-H.Y.; supervision, K.-S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Foundation of Korea under the Ministry of Science and ICT, Korea (grant no. RS-2023-00207954).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Pusan National University Yangsan Hospital ethics committee (Institutional Review Board approval no: PNUYH-03-2018-003).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data and code that support the findings of this study are openly available in https://github.com/sohyeonyoo/MODWT-LSTM.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ayaz, H.; Baker, W.B.; Blaney, G.; Boas, D.A.; Bortfeld, H.; Brady, K.; Brake, J.; Brigadoi, S.; Buckley, E.M.; Carp, S.A.; et al. Optical imaging and spectroscopy for the study of the human brain: Status report. Neurophotonics 2022, 9, S24001.

- Yoo, S.-H.; Woo, S.-W.; Shin, M.-J.; Yoon, J.A.; Shin, Y.-I.; Hong, K.-S. Diagnosis of mild cognitive impairment using cognitive tasks: A functional near-infrared spectroscopy study. Curr. Alzheimer Res. 2020, 17, 1145–1160.

- Yang, D.L.; Hong, K.-S.; Yoo, S.-H.; Kim, C.-S. Evaluation of neural degeneration biomarkers in the prefrontal cortex for early identification of patients with mild cognitive impairment: An fNIRS study. Front. Hum. Neurosci. 2019, 13, 317.

- Naseer, N.; Hong, K.-S. fNIRS-based brain-computer interfaces: A review. Front. Hum. Neurosci. 2015, 9, 3.

- Yoo, S.-H.; Santosa, H.; Kim, C.-S.; Hong, K.-S. Decoding multiple sound-categories in the auditory cortex by neural networks: An fNIRS study. Front. Hum. Neurosci. 2021, 15, 63619.

- Hong, K.-S.; Bhutta, M.R.; Liu, X.; Shin, Y.-I. Classification of somatosensory cortex activities using fNIRS. Behav. Brain Res. 2017, 333, 225–234.

- Zhang, D.D.; Chen, Y.; Hou, X.L.; Wu, Y.J. Near-infrared spectroscopy reveals neural perception of vocal emotions in human neonates. Hum. Brain Mapp. 2019, 40, 2434–2448.

- Flynn, M.; Effraimidis, D.; Angelopoulou, A.; Kapetanios, E.; Williams, D.; Hemanth, J.; Towell, T. Assessing the effectiveness of automated emotion recognition in adults and children for clinical investigation. Front. Hum. Neurosci. 2020, 14, 70.

- Huang, R.; Qing, K.; Yang, D.; Hong, K.-S. Real-time motion artifact removal using a dual-stage median filter. Biomed. Signal Process. Control 2022, 72, 103301.

- Bicciato, G.; Keller, E.; Wolf, M.; Brandi, G.; Schulthess, S.; Friedl, S.G.; Willms, J.F.; Narula, G. Increase in low-frequency oscillations in fNIRS as cerebral response to auditory stimulation with familiar music. Brain Sci. 2022, 12, 42.

- Rojas, R.F.; Huang, X.; Hernandez-Juarez, J.; Ou, K.L. Physiological fluctuations show frequency-specific networks in fNIRS signals during resting state. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017.

- Vermeij, A.; Meel-van den Abeelen, A.S.S.; Kessels, R.P.C.; van Beek, A.H.E.A.; Claassen, J.A.H.R. Very-low-frequency oscillations of cerebral hemodynamics and blood pressure are affected by aging and cognitive load. Neuroimage 2014, 85, 608–615.

- Noah, J.A.; Zhang, X.; Dravida, S.; DiCocco, C.; Suzuki, T.; Aslin, R.N.; Tachtsidis, I.; Hirsch, J. Comparison of short-channel separation and spatial domain filtering for removal of non-neural components in functional near-infrared spectroscopy signals. Neurophotonics 2021, 8, 015004.

- Nguyen, H.D.; Yoo, S.-H.; Bhutta, M.R.; Hong, K.-S. Adaptive filtering of physiological noises in fNIRS data. Biomed. Eng. Online 2018, 17, 180.

- Sutoko, S.; Chan, Y.L.; Obata, A.; Sato, H.; Maki, A.; Numata, T.; Funane, T.; Atsumori, H.; Kiguchi, M.; Tang, T.B.; et al. Denoising of neuronal signal from mixed systemic low-frequency oscillation using peripheral measurement as noise regressor in near-infrared imaging. Neurophotonics 2019, 6, 015001.

- Hong, K.-S.; Khan, M.J.; Hong, M.J. Feature extraction and classification methods for hybrid fNIRS-EEG brain-computer interfaces. Front. Hum. Neurosci. 2018, 12, 246.

- von Luhmann, A.; Ortega-Martinez, A.; Boas, D.A.; Yucel, M.A. Using the general linear model to improve performance in fNIRS single trial analysis and classification: A perspective. Front. Hum. Neurosci. 2020, 14, 30.

- Shensa, M.J. The discrete wavelet transform—Wedding the a trous and Mallat algorithms. IEEE Trans. Signal Process. 1992, 40, 2464–2482.

- Molavi, B.; Dumont, G.A. Wavelet-based motion artifact removal for functional near-infrared spectroscopy. Physiol. Meas. 2012, 33, 259–270.

- Duan, L.; Zhao, Z.P.; Lin, Y.L.; Wu, X.Y.; Luo, Y.J.; Xu, P.F. Wavelet-based method for removing global physiological noise in functional near-infrared spectroscopy. Biomed. Opt. Express 2018, 9, 330656.

- Dommer, L.; Jager, N.; Scholkmann, F.; Wolf, M.; Holper, L. Between-brain coherence during joint n-back task performance: A two-person functional near-infrared spectroscopy study. Behav. Brain Res. 2012, 234, 212–222.

- Holper, L.; Scholkmann, F.; Wolf, M. Between-brain connectivity during imitation measured by fNIRS. Neuroimage 2012, 63, 212–222.

- Zhu, L.; Wang, Y.X.; Fan, Q.B. MODWT-ARMA model for time series prediction. Appl. Math. Model. 2014, 38, 1859–1865.

- Percival, D.B.; Walden, A.T. Wavelet Methods for Time Series Analysis; Cambridge University Press: Cambridge, UK, 2000.

- Abdelhakim, S.; El Amine, D.S.M.; Fadia, M. Maximal overlap discrete wavelet transform-based abrupt changes detection for heart sounds segmentation. J. Mech. Med. Biol. 2023, 23, 2350017.

- Adib, A.; Zaerpour, A.; Lotfirad, M. On the reliability of a novel MODWT-based hybrid ARIMA-artificial intelligence approach to forecast daily Snow Depth (Case study: The western part of the Rocky Mountains in the USA). Cold Reg. Sci. Technol. 2021, 189, 103342.

- Yousuf, M.U.; Al-Bahadly, I.; Avci, E. Short-Term Wind Speed Forecasting based on hybrid MODWT-ARIMA-Markov model. IEEE Access 2021, 9, 79695–79711.

- Li, M.Y.; Chen, W.Z.; Zhang, T. Application of MODWT and log-normal distribution model for automatic epilepsy identification. Biocybern. Biomed. Eng. 2017, 37, 679–689.

- Bizzego, A.; Gabrieli, G.; Esposito, G. Deep neural networks and transfer learning on a multivariate physiological signal dataset. Bioengineering 2021, 8, 35.

- ElNakieb, Y.; Ali, M.T.; Elnakib, A.; Shalaby, A.; Mahmoud, A.; Soliman, A.; Barnes, G.N.; El-Baz, A. Understanding the role of connectivity dynamics of resting-state functional MRI in the diagnosis of autism spectrum disorder: A comprehensive study. Bioengineering 2023, 10, 56.

- Madan, P.; Singh, V.; Singh, D.P.; Diwakar, M.; Pant, B.; Kishor, A. A hybrid deep learning approach for ECG-based arrhythmia classification. Bioengineering 2022, 9, 152.

- Lee, P.L.; Chen, S.H.; Chang, T.C.; Lee, W.K.; Hsu, H.T.; Chang, H.H. Continual learning of a transformer-based deep learning classifier using an initial model from action observation EEG data to online motor imagery classification. Bioengineering 2023, 10, 186.

- Nafea, M.S.; Ismail, Z.H. Supervised machine learning and deep learning techniques for epileptic seizure recognition using EEG signals-A systematic literature review. Bioengineering 2022, 9, 781.

- Ghonchi, H.; Fateh, M.; Abolghasemi, V.; Ferdowsi, S.; Rezvani, M. Deep recurrent-convolutional neural network for classification of simultaneous EEG-fNIRS signals. IET Signal Process. 2020, 14, 142–153.

- Hekmatmanesh, A.; Azni, H.M.; Wu, H.; Afsharchi, M.; Li, M.; Handroos, H. Imaginary control of a mobile vehicle using deep learning algorithm: A brain computer interface study. IEEE Access 2021, 10, 20043–20052.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Cao, J.; Li, Z.; Li, J. Financial time series forecasting model based on CEEMDAN and LSTM. Phys. A 2019, 519, 127–139.

- Esmaeili, F.; Cassie, E.; Nguyen, H.P.T.; Plank, N.O.V.; Unsworth, C.P.; Wang, A.L. Predicting analyte concentrations from electrochemical aptasensor signals using LSTM recurrent networks. Bioengineering 2022, 9, 529.

- Shao, E.Z.; Mei, Q.C.; Ye, J.Y.; Ugbolue, U.C.; Chen, C.Y.; Gu, Y.D. Predicting coordination variability of selected lower extremity couplings during a cutting movement: An investigation of deep neural networks with the LSTM structure. Bioengineering 2022, 9, 411.

- Phutela, N.; Relan, D.; Gabrani, G.; Kumaraguru, P.; Samuel, M. Stress classification using brain signals based on LSTM network. Comput. Intell. Neurosci. 2022, 2022, 767592.

- Barzegar, R.; Aalami, M.T.; Adamowski, J. Coupling a hybrid CNN-LSTM deep learning model with a boundary corrected maximal overlap discrete wavelet transform for multiscale lake water level forecasting. J. Hydrol. 2021, 598, 126196.

- Li, Y.T.; Li, R.Y. Predicting ammonia nitrogen in surface water by a new attention-based deep learning hybrid model. Environ. Res. 2023, 216, 114723.

- Li, Y.M.; Peng, T.; Zhang, C.; Sun, W.; Hua, L.; Ji, C.L.; Shahzad, N.M. Multi-step ahead wind speed forecasting approach coupling maximal overlap discrete wavelet transform, improved grey wolf optimization algorithm and long short-term memory. Renew. Energy 2022, 196, 1115–1126.

- Raghu, S.; Sriraam, N.; Temel, Y.; Rao, S.V.; Hegde, A.S.; Kubben, P.L. Complexity analysis and dynamic characteristics of EEG using MODWT based entropies for identification of seizure onset. J. Biomed. Res. 2020, 34, 213–227.

- Ge, Q.; Lin, Z.C.; Gao, Y.X.; Zhang, J.X. A robust discriminant framework based on functional biomarkers of EEG and its potential for diagnosis of Alzheimer’s disease. Healthcare 2020, 8, 476.

- Cordes, D.; Kaleem, M.F.; Yang, Z.S.; Zhuang, X.W.; Curran, T.; Sreenivasan, K.R.; Mishra, V.R.; Nandy, R.; Walsh, R.R. Energy-period profiles of brain networks in group fMRI resting-state data: A comparison of empirical mode decomposition with the short-time Fourier transform and the discrete wavelet transform. Front. Neurosci. 2021, 15, 663403.

- Martin-Chinea, K.; Ortega, J.; Gomez-Gonzalez, J.F.; Pereda, E.; Toledo, J.; Acosta, L. Effect of time windows in LSTM networks for EEG-based BCIs. Cogn. Neurodyn. 2023, 17, 385–398.

- Van Houdt, G.; Mosquera, C.; Napoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- Gemignani, J.; Gervain, J. A practical guide for synthetic fNIRS data generation. In Proceedings of the 43rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 828–831.

- Gemignani, J.; Middell, E.; Barbour, R.L.; Graber, H.L.; Blankertz, B. Improving the analysis of near-spectroscopy data with multivariate classification of hemodynamic patterns: A theoretical formulation and validation. J. Neural Eng. 2018, 15, 045001.

- Zhang, Q.; Li, F.; Long, F.; Ling, Q. Vehicle emission forecasting based on wavelet transform and long short-term memory network. IEEE Access 2018, 6, 56984–56994.

- Seo, Y.; Choi, Y.; Choi, J. River stage modeling by combining maximal overlap discrete wavelet transform, support vector machines and genetic algorithm. Water 2017, 9, 525.

- Abbasimehr, H.; Paki, R. Improving time series forecasting using LSTM and attention models. J. Ambient Intell. Humaniz. Comput. 2022, 13, 673–691.

- Cutini, S.; Basso Moro, S.; Bisconti, S. Functional near infrared optical imaging in cognitive neuroscience: An introductory review. J. Near Infrared Spectrosc. 2012, 20, 75–92.

- Ernst, L.S.; Schneider, S.; Ehlis, A.-C.; Fallgatter, A.J. Functional near infrared spectroscopy in psychiatry: A critical review. J. Near Infrared Spectrosc. 2012, 20, 93–105.

- Hu, X.S.; Hong, K.-S.; Ge, S.Z.S.; Jeong, M.-Y. Kalman estimator- and general linear model-based on-line brain activation mapping by near-infrared spectroscopy. Biomed. Eng. Online 2010, 9, 82.

- Scarpa, F.; Brigadoi, S.; Cutini, S.; Scatturin, P.; Zorzi, M.; Dell’Acqua, R.; Sparacino, G. A reference-channel based methodology to improve estimation of event-related hemodynamic response from fNIRS measurements. Neuroimage 2013, 72, 106–119.

- von Luhmann, A.; Li, X.E.; Muller, K.R.; Boas, D.A.; Yucel, M.A. Improved physiological noise regression in fNIRS: A multimodal extension of the general linear model using temporally embedded canonical correlation analysis. Neuroimage 2020, 208, 116472.

- Barker, J.W.; Aarabi, A.; Huppert, T.J. Autoregressive model based algorithm for correcting motion and serially correlated errors in fNIRS. Biomed. Opt. Express 2013, 4, 1366–1379.

- Zhang, Q.; Strangman, G.E.; Ganis, G. Adaptive filtering to reduce global interference in non-invasive NIRS measures of brain activation: How well and when does it work? Neuroimage 2009, 45, 788–794.

- Aqil, M.; Hong, K.-S.; Jeong, M.-Y.; Ge, S.S. Cortical brain imaging by adaptive filtering of NIRS signals. Neurosci. Lett. 2012, 514, 35–41.

- Zafar, A.; Hong, K.-S. Neuronal activation detection using vector phase analysis with dual threshold circles: A functional near-infrared spectroscopy study. Int. J. Neural Syst. 2018, 28, 1850031.

- Hong, K.-S.; Zafar, A. Existence of initial dip for BCI: An illusion or reality. Front. Neurorobot. 2018, 12, 69.

- Shan, Z.Y.; Wright, M.J.; Thompson, P.M.; McMahon, K.L.; Blokland, G.; de Zubicaray, G.I.; Martin, N.G.; Vinkhuyzen, A.A.E.; Reutens, D.C. Modeling of the hemodynamic responses in block design fMRI studies. J. Cereb. Blood Flow Metab. 2014, 34, 316–324.

- Buxton, R.B.; Uludag, K.; Dubowitz, D.J.; Liu, T.T. Modeling the hemodynamic response to brain activation. Neuroimage 2004, 23, S220–S233.

- Huang, R.S.; Hong, K.-S. Multi-channel-based differential pathlength factor estimation for continuous-wave fNIRS. IEEE Access 2021, 9, 37386–37396.

- Hong, K.-S.; Nguyen, H.D. State-space models of impulse hemodynamic responses over motor, somatosensory, and visual cortices. Biomed. Opt. Express 2014, 5, 1778–1798.

- Aqil, M.; Hong, K.-S.; Jeong, M.-Y.; Ge, S.S. Detection of event-related hemodynamic response to neuroactivation by dynamic modeling of brain activity. Neuroimage 2012, 63, 553–568.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).