Electroencephalography-Based Brain–Machine Interfaces in Older Adults: A Literature Review

Abstract

1. Introduction

2. Research Methodology

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

Inclusion criteria:

- (a) HMI in older adults;

- (b) HMI using EEG signals;

- (c) Both healthy aging individuals and subjects with neurocognitive impairment;

- (d) Both technical issues (signal detection, feature extraction, classification) and applicative implications (users’ needs, effectiveness in the restoration of lost skills, and rehabilitation).

Exclusion criteria:

- (a) Studies not using EEG signals;

- (b) Subjects not meeting the “ageing” criterion (mean age of 60 years or older) [6];

- (c) Cross-sectional studies without comparisons between age groups (single-group data analysis).

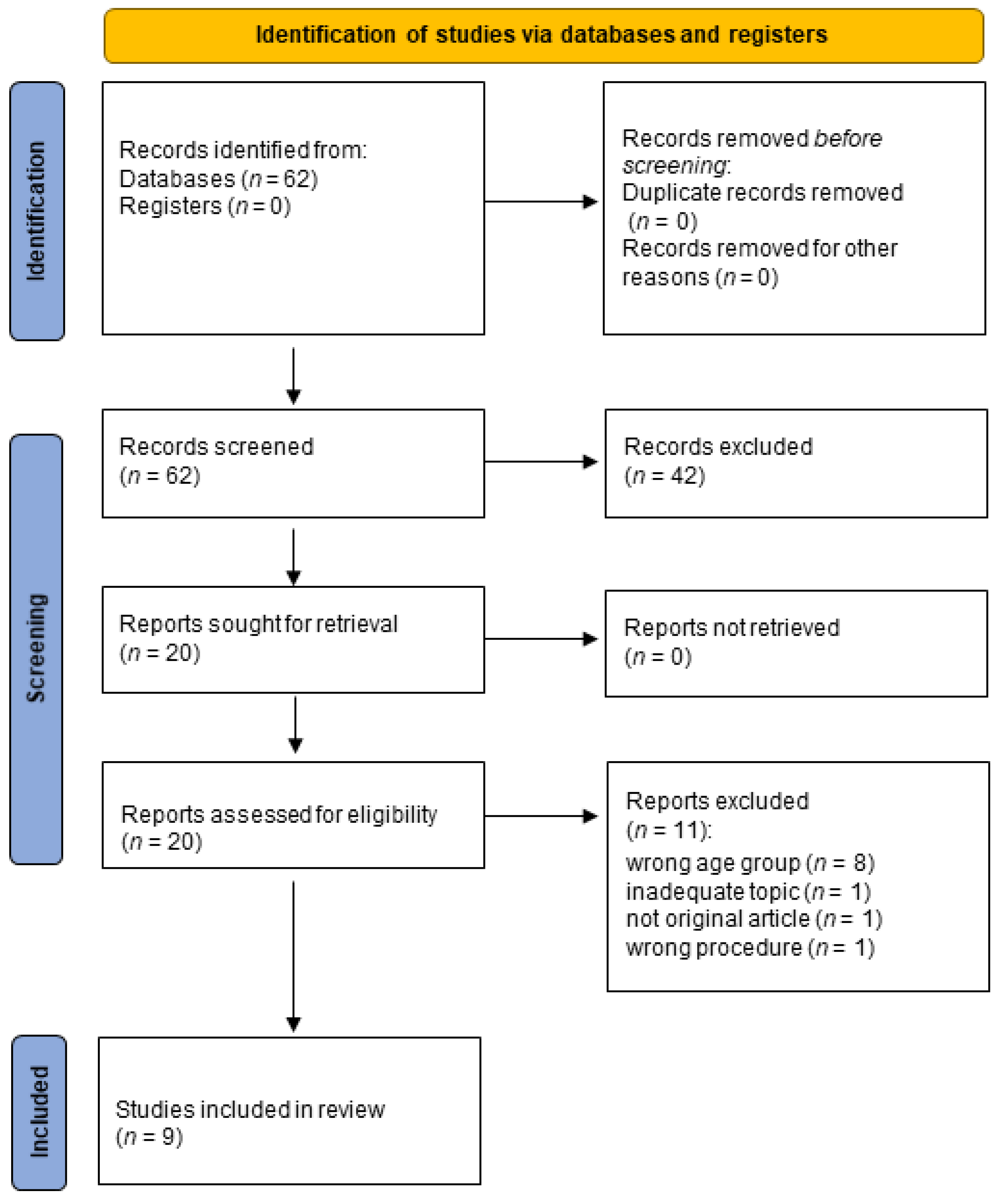

2.3. Study Selection

2.4. Outcome Measures

3. Results

3.1. Study Selection

- 23 did not take into consideration older adults (wrong age group);

- 19 were not original articles (12 reviews, 2 editorials, 2 conference papers, 1 perspective, 1 letter, 1 case);

- 6 focused on issues not concerning the aims of our review (inadequate topics);

- 4 studies did not evaluate humans (wrong species);

- 1 did not use EEG signals (wrong procedure).

3.2. Description of the Selected Studies

3.2.1. Li et al., 2022 [7]

3.2.2. Goelz et al., 2021 [8]

3.2.3. Chen et al., 2019 [9]

3.2.4. Zich et al., 2017 [10]

3.2.5. Herweg et al., 2016 [11]

3.2.6. Gomez-Pilar et al., 2016 [12]

3.2.7. Karch et al., 2015 [13]

3.2.8. Lee et al., 2015 [14]

3.2.9. Lee et al., 2013 [15]

4. Discussion

- The study by Li et al. [7], which addressed intrinsic capacity (both cognition and vitality) during an MI task, showed that older people were less affected by cognitive fatigue, although the classification accuracy of their MI data was lower than that of younger participants. Interestingly, the deep learning method, which extracts features from the frontal and parietal channels, may be appropriate for older individuals. Specifically, the authors found that the CNN reached an acceptable classification accuracy of about 70% on MI tasks. This suggests that future BCI-MI applications in the older population need not be based on SMR alone, and that appropriate algorithms can be applied even without an obvious lateralization of ERD. In fact, the CNN model based on fused spatial information greatly improves classification accuracy, albeit with a longer training time, and can be successfully used in healthy aging individuals. Therefore, it should be investigated whether such training sessions can support rehabilitation in older people with neurological diseases, such as stroke patients.

- In the EEG-fNIRS study by Zich et al. [10], which focused on intrinsic capacity in relation to cognition, age-related changes were analyzed in the neural correlates of both MI and motor execution (ME). During MI, older adults showed lower hemispheric asymmetry of ERD% and HbR concentration than younger adults, reflecting greater ipsilateral activity. In addition, compared with no feedback, EEG-based NF enhanced classification accuracy, thresholds, ERD%, and HbR concentration, for both contralateral activity and degree of lateralization, in both age groups. Finally, significant modulation correlations were found between ERD% and hemodynamic measures, although there were no significant amplitude correlations. Overall, the differences between the effects observed for ERD%, HbR concentration, and HbO concentration suggest that the relationship between electrophysiological motor activity and hemodynamics is far from clear. Nevertheless, the results support the idea that age-related changes in MI should be taken into account when designing MI NF protocols for patients; in particular, the influence of age should be carefully considered in the design of neurorehabilitation protocols for stroke patients. Taken together, these results suggest a complex relationship between age and motor-related activity in both EEG and hemodynamic measures.

- In another study using MI-BCI technology, Gomez-Pilar et al. [12], dealing with the intrinsic capacity of cognition, showed promising results on the usefulness of NFT for improving brain plasticity and, consequently, neuropsychological functions (such as spatial awareness, language, and memory), which are major concerns in older adults. This study may inform the development of new NFT protocols based on MI strategies. In particular, these data suggest that BCI-based NFT may help rehabilitate some cognitive functions by improving brain plasticity, an aspect that appears particularly relevant to the older population.

- Karch et al. [13] investigated inter-individual differences in brain–behavior mapping by examining the degree of model individualization required and demonstrating the feasibility of deriving person-specific models with different spatiotemporal information in three age groups (i.e., children, younger adults, and older adults). The authors focused on the intrinsic capacity of cognition, i.e., the mechanisms of selection and maintenance in working memory. The results show the potential of a multivariate approach to provide better discrimination than classical non-person-specific models. Indeed, this approach makes it easier to classify, and to interpret at both the individual and group levels, patterns based on rhythmic neural activity in the alpha frequency range across the lifespan. Specifically, the information maintained in WM and the focus of spatial attention contributed to identifying and quantifying differences across age groups based on the different spatiotemporal properties of EEG recordings.

- Lee et al. [15] tested the potential of adapting an innovative computer-based BCI program for CT to improve attention and memory in a group of healthy English-speaking older adults. The authors, focusing on the intrinsic capacity of cognition, demonstrated the effectiveness of the CT program, particularly in improving immediate and delayed memory, attention, visuospatial abilities, and global cognitive abilities. In a second randomized controlled trial [14], the same authors investigated the generalizability of their system and training task to a population of older adults speaking a different language (i.e., Chinese). In both studies [14,15], the BCI-based intervention showed promising results in improving memory and attention. Future research should include participants with mild and severe cognitive impairment. If proven effective in a larger sample, this intervention could potentially help reduce, or even prevent, cognitive decline in patients with mild or major neurocognitive disorders.

- Chen et al. [9] addressed intrinsic sensory capacity by examining whether the SMR elicited by vibro-tactile stimulation differs between younger and healthy older adults. Their results showed that age-related electrophysiological changes significantly affect SMR properties. Specifically, older subjects showed less lateralization in the somatosensory cortex in response to vibro-tactile stimulation than younger adults. These age-related EEG changes reflected greater susceptibility to noise and interference and resulted in lower BCI classification accuracy. Future studies should focus on the effects of aging on EEG signals. In addition, NFT methods to improve cortical lateralization, and algorithms not based solely on EEG lateralization, should be investigated.

- Herweg et al. [11] investigated the effects of age and training in healthy older adults, focusing on intrinsic sensory capacity and using a tactile stimulation protocol in a navigation task. Results showed that tactile BCI performance could be valuable, as the impact of age-related changes in somatosensory abilities was negligible. This protocol enabled learning and significantly improved BCI performance and EEG characteristics, demonstrating the positive effect of training. Future studies should focus on tactile BCI development, considering specific stimulation design, individual characteristics, and training. The results suggest that tactile BCIs not only can be a valid alternative to visual and auditory paradigms but can also be used despite age-related changes in somatosensory abilities.

- Goelz et al. [8] investigated intrinsic sensory and locomotor capacities, analyzing the classification of fine motor movements in terms of age-related differences in functional brain activity. Specifically, the authors compared the performance of younger and older healthy adults on visuomotor tracking tasks using EEG recordings. Results revealed electrophysiological brain activity patterns associated with an altered sensorimotor network in older adults, suggesting a reorganization of task-related brain networks in response to task features. Future research on BCI applications should consider age-related differences when developing BCI and neurofeedback systems for the older population (e.g., in the selection of appropriate features and algorithms).
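Several of the studies above (e.g., Zich et al. [10] and Chen et al. [9]) characterize motor and somatosensory responses through ERD% and its hemispheric lateralization. As a minimal sketch, assuming the conventional definition ERD% = (A - R)/R × 100 (A: band power during the task, R: band power in a reference interval), the Python code below computes ERD% for two channels and a lateralization index. The index formula, the channel names C3/C4, and the synthetic data are illustrative assumptions, not the exact measures used in the reviewed studies.

```python
import numpy as np


def band_power(x: np.ndarray) -> float:
    """Mean power of a segment assumed to be already band-pass filtered (e.g., mu band)."""
    return float(np.mean(x ** 2))


def erd_percent(task: np.ndarray, rest: np.ndarray) -> float:
    """ERD% = (A - R) / R * 100, with A = task power and R = reference (rest) power.
    Negative values indicate a power decrease (desynchronization)."""
    a = band_power(task)
    r = band_power(rest)
    return (a - r) / r * 100.0


def lateralization_index(erd_contra: float, erd_ipsi: float) -> float:
    """Hypothetical index in [-1, 1]: 1 = ERD only contralateral, 0 = symmetric ERD."""
    c, i = abs(erd_contra), abs(erd_ipsi)
    return (c - i) / (c + i)


# Synthetic right-hand MI example (assumption): mu power drops by 50% over C3
# (contralateral) and by 20% over C4 (ipsilateral); the same rest segment is reused.
rng = np.random.default_rng(0)
rest = rng.standard_normal(1000)
erd_c3 = erd_percent(rest * np.sqrt(0.5), rest)
erd_c4 = erd_percent(rest * np.sqrt(0.8), rest)
li = lateralization_index(erd_c3, erd_c4)
print(f"ERD% C3 = {erd_c3:.1f}, ERD% C4 = {erd_c4:.1f}, LI = {li:.2f}")
```

With these synthetic values the ERD% is about -50% over C3 and -20% over C4, giving an index of about 0.43; a value closer to 0 would correspond to the more bilateral (less lateralized) pattern reported for older adults.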

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALS | amyotrophic lateral sclerosis |
| ANN | artificial neural network |
| ApEn | approximate entropy |
| AR | autoregressive |
| ARMA | autoregressive moving average |
| ARV | average rectified value |
| BCI | brain–computer interface |
| BSS | blind source separation |
| CNN | convolutional neural network |
| CSP | common spatial pattern |
| CT | cognitive training |
| EEG | electroencephalogram |
| FIR | finite impulse response |
| HMI | human–machine interaction |
| ICA | independent component analysis |
| IIR | infinite impulse response |
| ITR | information transfer rate |
| LDA | linear discriminant analysis |
| LLE | locally linear embedding |
| MA | moving average |
| MI | motor imagery |
| NF | neurofeedback |
| NFT | neurofeedback training |
| PAC | phase amplitude coupling |
| PCA | principal component analysis |
| PLV | phase locking value |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PSD | power spectral density |
| RBANS | Repeatable Battery for the Assessment of Neuropsychological Status |
| RMS | root mean square |
| SNR | signal-to-noise ratio |
| SVM | support vector machine |
| TFR | time–frequency representation |
| WM | working memory |

Appendix A. Background on Data Processing and Classification

Appendix A.1. Signal Acquisition

Appendix A.2. Fourier Analysis

Appendix A.3. Sampling Theory

Appendix A.4. Filtering

Appendix A.4.1. Temporal Filters and EEG Rhythms

Appendix A.4.2. Spatial Filters and Selectivity

- Principal component analysis (PCA [27]) chooses the weights in order to obtain output signals that are orthogonal (i.e., uncorrelated) to each other.

- Independent component analysis (ICA [27]) selects linear combinations that make the outputs statistically independent of each other.

- Common spatial pattern (CSP [28]) is obtained by optimizing the filter so as to decompose the signal into a sum of components whose variances differ maximally between two windows (or, more generally, two conditions or classes of data). One application is enhancing the energy of a specific target portion of the data (e.g., the cortical potential of interest for a BCI) while reducing that of the rest.
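A minimal NumPy sketch of two of these spatial filters follows, assuming data arranged as a channels × samples array. PCA is obtained from the eigenvectors of the channel covariance matrix; CSP is computed here with a common textbook route (whitening of the composite covariance C1 + C2, then eigendecomposition of the whitened covariance of condition 1), which is equivalent to solving the generalized eigenproblem C1 w = λ(C1 + C2) w. ICA is omitted because it requires an iterative algorithm. The synthetic sources and mixing matrix are illustrative assumptions.

```python
import numpy as np


def pca_filters(x: np.ndarray) -> np.ndarray:
    """x: (channels, samples). Returns W (rows = spatial filters) such that the
    outputs W @ (x - mean) are mutually uncorrelated, sorted by decreasing variance."""
    xc = x - x.mean(axis=1, keepdims=True)
    cov = xc @ xc.T / xc.shape[1]
    evals, evecs = np.linalg.eigh(cov)          # eigh returns ascending eigenvalues
    order = np.argsort(evals)[::-1]
    return evecs[:, order].T


def csp_filters(x1: np.ndarray, x2: np.ndarray):
    """x1, x2: (channels, samples) from two conditions (e.g., two windows or classes).
    Returns W and lam, where lam[k] = var1 / (var1 + var2) for the output of row k;
    the first row maximizes and the last minimizes condition-1 variance relative
    to condition-2 variance."""
    c1, c2 = np.cov(x1), np.cov(x2)
    # Whitening matrix P = (C1 + C2)^(-1/2), symmetric
    d, u = np.linalg.eigh(c1 + c2)
    p = u @ np.diag(1.0 / np.sqrt(d)) @ u.T
    # Eigendecomposition of the whitened condition-1 covariance
    lam, b = np.linalg.eigh(p @ c1 @ p)
    order = np.argsort(lam)[::-1]
    return b[:, order].T @ p, lam[order]


# Synthetic data: 3 sources mixed into 3 channels; condition 1 boosts source 0,
# condition 2 boosts source 2 (standard deviation 3 instead of 1).
rng = np.random.default_rng(1)
mix = rng.standard_normal((3, 3))
x1 = mix @ (rng.standard_normal((3, 2000)) * np.array([[3.0], [1.0], [1.0]]))
x2 = mix @ (rng.standard_normal((3, 2000)) * np.array([[1.0], [1.0], [3.0]]))

w_csp, lam = csp_filters(x1, x2)
y1, y2 = w_csp @ x1, w_csp @ x2
print("variance ratio, first CSP filter:", y1[0].var() / y2[0].var())
print("variance ratio, last CSP filter:", y1[-1].var() / y2[-1].var())

w_pca = pca_filters(x1)
z = w_pca @ (x1 - x1.mean(axis=1, keepdims=True))
print(np.round(np.cov(z), 6))   # off-diagonal entries are (numerically) zero
```

The first CSP filter yields a variance ratio well above 1 between the two conditions and the last one a ratio well below 1; these extreme filters are the ones typically retained as BCI features. The PCA outputs have a diagonal covariance matrix, i.e., they are uncorrelated.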

Appendix A.5. Feature Processing and Classification

Appendix A.5.1. Feature Extraction

- Time features can be the average rectified value (ARV) or the root mean square (RMS) value of data epochs, the height of peaks of evoked potentials, or the number of zero crossings. More advanced features reflect the possible complexity of the data: for example, approximate entropy (ApEn [30]) and fractal dimension [31].

- Useful features extracted from a frequency representation of the signal include the mean frequency, the median frequency, and the power of different rhythms.

- Cross-correlation quantifies the linear coupling between the EEGs from two channels. The delay corresponding to the maximal cross-correlation can also provide some insight into the causal relationship between the two signals.

- Coherence quantifies the linear correlation between the oscillations of two signals as a function of frequency.

- Nonlinear indices detect couplings of statistical order higher than linear. For example, mutual information [33] and transfer entropy [34] are based on Shannon information theory and are zero for statistically independent inputs. Nonlinear interdependence is based on the study of possible coupling during recurrences, i.e., when the data show similar behavior [35].

- Phase locking value (PLV) detects periods in which the phase delay of oscillations from different channels remains constant (so that the oscillations are locked) [36].

- Granger causality indicates a cause–effect relation between the EEGs from two channels, expressed as the improvement in predicting future samples of one signal when information from the other is also provided [37].
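Several of the single-channel and two-channel features listed above can be sketched in a few lines of NumPy. This is an illustrative implementation under simple assumptions: a plain periodogram as the PSD estimate (no windowing or averaging), an FFT-based Hilbert transform for the instantaneous phase used by the PLV, and synthetic 10 Hz sinusoids sampled at an assumed 250 Hz. Complexity measures (ApEn, fractal dimension), coherence, and the nonlinear and Granger indices are not shown.

```python
import numpy as np


def arv(x):
    """Average rectified value of an epoch."""
    return float(np.mean(np.abs(x)))


def rms(x):
    """Root mean square value of an epoch."""
    return float(np.sqrt(np.mean(x ** 2)))


def zero_crossings(x):
    """Number of sign changes between consecutive samples."""
    neg = np.signbit(x)
    return int(np.count_nonzero(neg[1:] != neg[:-1]))


def psd(x, fs):
    """One-sided periodogram (a simple PSD estimate) of the mean-removed signal."""
    n = len(x)
    p = np.abs(np.fft.rfft(x - np.mean(x))) ** 2 / (fs * n)
    p[1:(n + 1) // 2] *= 2.0        # fold negative frequencies (not DC or Nyquist)
    return np.fft.rfftfreq(n, d=1.0 / fs), p


def mean_frequency(x, fs):
    f, p = psd(x, fs)
    return float(np.sum(f * p) / np.sum(p))


def median_frequency(x, fs):
    """Frequency splitting the total power into two equal halves."""
    f, p = psd(x, fs)
    cum = np.cumsum(p)
    return float(f[np.searchsorted(cum, cum[-1] / 2.0)])


def band_power(x, fs, lo, hi):
    """Power in [lo, hi) Hz, e.g., the alpha rhythm with lo=8, hi=13."""
    f, p = psd(x, fs)
    band = (f >= lo) & (f < hi)
    return float(np.sum(p[band]) * (f[1] - f[0]))


def analytic_signal(x):
    """FFT-based analytic signal, i.e., x + j*Hilbert(x)."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def plv(x, y):
    """Phase locking value |mean(exp(j*(phi_x - phi_y)))|, between 0 and 1."""
    dphi = np.angle(analytic_signal(x)) - np.angle(analytic_signal(y))
    return float(np.abs(np.mean(np.exp(1j * dphi))))


def xcorr_delay(x, y, fs):
    """Lag (s) maximizing the cross-correlation; positive when y lags x."""
    c = np.correlate(y - y.mean(), x - x.mean(), mode="full")
    lags = np.arange(-(len(x) - 1), len(y))
    return lags[np.argmax(c)] / fs


fs = 250.0                                    # assumed sampling rate (Hz)
t = np.arange(500) / fs                       # 2 s epoch
x = np.sin(2 * np.pi * 10 * t)                # 10 Hz "alpha" oscillation
y = np.sin(2 * np.pi * 10 * t - np.pi / 4)    # same rhythm, constant phase lag
noise = np.random.default_rng(0).standard_normal(500)

print(rms(x), arv(x), zero_crossings(x))
print(mean_frequency(x, fs), median_frequency(x, fs), band_power(x, fs, 8, 13))
print(plv(x, y), xcorr_delay(noise, np.roll(noise, 5), fs))
```

For the 10 Hz sine, the mean and median frequencies are 10 Hz, the 8 to 13 Hz band power equals the signal power (0.5), and the PLV with its phase-shifted copy is 1; the cross-correlation recovers the 5-sample (0.02 s) delay imposed on the noise signal.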

Appendix A.5.2. Feature Generation

Appendix A.5.3. Feature Selection

- Avoiding overfitting;

- Reducing the computational cost;

- Gaining a deeper insight into the classification/prediction model.

Appendix A.5.4. Classification

- A classification function to discriminate between discrete choices,

- A regression model to translate input observations into a continuous variable.

- Linear regression [43]. The output $Y$ is approximated as a linear combination of the inputs $X_1, \dots, X_N$ ($N$ being the number of input features), $Y \approx \sum_{i=1}^{N} w_i X_i$, where the weights $w_i$ are chosen optimally with respect to some criterion. For example, by imposing the minimum sum of squared errors (considering all observations), the weights can be obtained analytically by pseudoinversion of the matrix $A$ including the features, $A = [\mathbf{x}_1, \dots, \mathbf{x}_N] \in \mathbb{R}^{M \times N}$, where $M$ is the number of observations and $\mathbf{x}_i = [x_i(1), \dots, x_i(M)]^T$ are the elements of the $i$th feature $X_i$. The problem can be written in matrix form as $A W = \mathbf{y}$, where $W = [w_1, \dots, w_N]^T$ is the vector including all weights and $\mathbf{y}$ collects the $M$ desired outputs. The solution with minimum squared error is $W = A^{+}\mathbf{y} = (A^T A)^{-1} A^T \mathbf{y}$ (for $A$ with full column rank), where $A^{+}$ is the pseudoinverse of $A$ (notice that $A^T \mathbf{y}$ is the cross-correlation of the input features and desired output, and $A^T A$ represents the covariance between features). A linear regression problem is also obtained if a polynomial expression or another nonlinear function of the features is included as additional input to better fit the data: more columns are included in the matrix $A$, but the problem is still linear with respect to the weights.

- Bayesian classifiers [43]. A probabilistic model is built from the training data to evaluate the probability $p(X \mid Y = c_k)$ of the input features $X$ conditional on specific outputs $c_k$. Then, a priori information on the output probabilities $P(Y = c_k)$ allows the posterior probability of the output given the inputs to be obtained using Bayes' theorem: $P(Y = c_k \mid X) = \frac{p(X \mid Y = c_k)\,P(Y = c_k)}{p(X)}$. The estimated output in the test set is the one with maximal posterior probability.

- Classification tree [44]. Decision trees are predictive models in which the values of the target variable are the leaves of a tree; they can take a finite set of output values. They are multistage systems in which classes are rejected sequentially until a class is accepted. Specifically, the feature space is sequentially split into regions corresponding to classes: a set of questions is applied to individual features, which are compared with threshold values, and the tree branches according to the answers. Different approaches have been proposed to select the features and associated thresholds used to split the data until a decision on the estimated class is reached.

- Support vector machine (SVM) [45]. The hyper-surface that best separates two classes is sought (in the multi-class case, a combination of binary classifications is used). Support vectors are specific observations (i.e., points in the space of input features) lying at the border between the two classes; they define the lower and upper margins of a region separating the classes. The separating hyper-surface is chosen to maximize this margin.

- Neural network [46]. An artificial neural network (ANN) is a biologically inspired computational model consisting of a complex set of interconnections between basic computational units called neurons. Each neuron applies a nonlinear activation function to the sum of a bias and a linear combination of its inputs. The biases and the weights of these linear combinations are the parameters to be selected to achieve a specific objective, e.g., minimizing the mean squared error in estimating the target output on a training dataset. Different network topologies have been considered, e.g., multi-layer perceptrons (with a feedforward structure of sequential layers of neurons, each layer receiving input from the previous one and providing output to the following one) with different numbers of layers, and recurrent networks (in which the output of one layer of neurons provides inputs to a previous layer). Different learning algorithms have been proposed: backpropagation or other methods based on the gradient of the objective function, and evolutionary algorithms (e.g., genetic approaches or particle swarm optimization) designed to avoid getting stuck in a local minimum of the error function. Deep learning approaches have recently been proposed, showing exceptional performance [47]. They are based on networks with many layers, some of which can perform a convolution operation with a kernel adapted to the data (convolutional neural networks, CNNs).
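As an illustration of the first two approaches, the sketch below fits linear regression weights with the pseudoinverse and implements a Bayes classifier. The Gaussian class-conditional likelihood with features independent within each class (a "naive Bayes" simplification) is an assumption chosen for brevity; other probabilistic models can be used instead. Trees, SVMs, and neural networks are usually taken from dedicated libraries and are not reimplemented here.

```python
import numpy as np


def fit_linear_regression(A: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Minimum-squared-error weights W = pinv(A) @ y (A: M observations x N features)."""
    return np.linalg.pinv(A) @ y


class GaussianBayes:
    """Bayes classifier with Gaussian class-conditional likelihoods and, as a
    simplifying ("naive") assumption, features independent within each class."""

    def fit(self, X: np.ndarray, labels: np.ndarray) -> "GaussianBayes":
        self.classes = np.unique(labels)
        self.means = np.array([X[labels == c].mean(axis=0) for c in self.classes])
        # A small constant avoids division by zero for constant features
        self.vars = np.array([X[labels == c].var(axis=0) + 1e-9 for c in self.classes])
        self.priors = np.array([np.mean(labels == c) for c in self.classes])
        return self

    def log_posterior(self, X: np.ndarray) -> np.ndarray:
        """log p(x | c) + log P(c) for each observation (rows) and class (columns);
        the evidence p(x) is the same for all classes and is dropped."""
        diff2 = (X[:, None, :] - self.means[None, :, :]) ** 2
        log_lik = -0.5 * (np.log(2 * np.pi * self.vars)[None] + diff2 / self.vars[None]).sum(axis=2)
        return log_lik + np.log(self.priors)[None, :]

    def predict(self, X: np.ndarray) -> np.ndarray:
        """Class with maximal posterior probability."""
        return self.classes[np.argmax(self.log_posterior(X), axis=1)]


rng = np.random.default_rng(0)

# Regression: y = 2*x1 - 0.5*x2 + 1; a column of ones models the constant term
X = rng.standard_normal((200, 2))
A = np.column_stack([X, np.ones(200)])
y = A @ np.array([2.0, -0.5, 1.0])
w = fit_linear_regression(A, y)
print(np.round(w, 6))

# Classification: two synthetic classes with shifted means
Xc = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(3.0, 1.0, (100, 2))])
labels = np.repeat([0, 1], 100)
clf = GaussianBayes().fit(Xc, labels)
print("training accuracy:", np.mean(clf.predict(Xc) == labels))
```

Because the regression targets are noise-free, the pseudoinverse recovers the generating weights (2, -0.5, 1). The accuracy printed for the classifier is computed on the training data and is therefore optimistic; the validation schemes described in Appendix A.5.5 address this.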

Appendix A.5.5. Performance Evaluation

- Bias, related to the ability of the classifier to approximate the true model generating the data. It is due to inaccurate assumptions or simplifications made by the classifier.

- Variance, quantifying how much classifiers estimated from different training sets differ from each other.

- Split training data into k equal parts.

- Train the model on k − 1 parts and calculate the validation error on the remaining one.

- Repeat k times, using each data subset for validation once.

- Randomly split the data into training and validation subsets.

- Fit the model on the training subset and calculate the validation error.

- Repeat for many iterations (say 100 or 500) and take the average of the validation errors.
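The two validation schemes above (k-fold cross-validation and repeated random sub-sampling, also called Monte Carlo cross-validation) can be sketched as follows. A nearest-centroid classifier is used as a hypothetical placeholder for any model; the data are shuffled before the k-fold split, and the 20% validation fraction and the synthetic two-class data are illustrative assumptions.

```python
import numpy as np


def fit_centroids(X, y):
    """Placeholder classifier: one mean vector (centroid) per class."""
    classes = np.unique(y)
    return classes, np.array([X[y == c].mean(axis=0) for c in classes])


def predict_centroids(model, X):
    classes, centroids = model
    dist2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(dist2, axis=1)]


def validation_error(X, y, train, val):
    """Fraction of misclassified validation observations."""
    model = fit_centroids(X[train], y[train])
    return float(np.mean(predict_centroids(model, X[val]) != y[val]))


def kfold_error(X, y, k, rng):
    """k-fold CV: split (shuffled) data into k nearly equal parts, train on k-1
    parts, validate on the remaining one, repeat k times, and average."""
    folds = np.array_split(rng.permutation(len(y)), k)
    errors = []
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        errors.append(validation_error(X, y, train, folds[i]))
    return float(np.mean(errors))


def monte_carlo_error(X, y, n_iter, val_fraction, rng):
    """Repeated random sub-sampling: a new random train/validation split at each
    iteration; the validation errors are averaged."""
    n_val = int(round(val_fraction * len(y)))
    errors = []
    for _ in range(n_iter):
        idx = rng.permutation(len(y))
        errors.append(validation_error(X, y, idx[n_val:], idx[:n_val]))
    return float(np.mean(errors))


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (60, 2)), rng.normal(2.0, 1.0, (60, 2))])
y = np.repeat([0, 1], 60)
print("5-fold CV error:", kfold_error(X, y, k=5, rng=rng))
print("Monte Carlo CV error (100 splits):", monte_carlo_error(X, y, 100, 0.2, rng))
```

k-fold validation uses every observation exactly once for validation, whereas repeated random sub-sampling lets the number of iterations and the validation fraction be chosen independently, at the cost of some observations being validated more often than others.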

References

- World Health Organization. Decade of Healthy Ageing: Baseline Report. 2020. Available online: https://apps.who.int/iris/handle/10665/338677 (accessed on 23 January 2023).

- Jiang, Y.; Abiri, R.; Zhao, X. Tuning up the Old Brain with New Tricks: Attention Training via Neurofeedback. Front. Aging Neurosci. 2017, 9, 52.

- Belkacem, A.N.; Jamil, N.; Palmer, J.A.; Ouhbi, S.; Chen, C. Brain Computer Interfaces for Improving the Quality of Life of Older Adults and Elderly Patients. Front. Neurosci. 2020, 14, 692.

- Belkacem, A.N.; Falk, T.H.; Yanagisawa, T.; Guger, C. Editorial: Cognitive and Motor Control Based on Brain-Computer Interfaces for Improving the Health and Well-Being in Older Age. Front. Hum. Neurosci. 2022, 16, 881922.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- World Health Organization. Health Topics, Ageing. 2022. Available online: https://www.who.int/health-topics/ageing#tab=tab_1 (accessed on 30 November 2022).

- Li, X.; Chen, P.; Yu, X.; Jiang, N. Analysis of the Relationship Between Motor Imagery and Age-Related Fatigue for CNN Classification of the EEG Data. Front. Aging Neurosci. 2022, 14, 909571.

- Goelz, C.; Mora, K.; Rudisch, J.; Gaidai, R.; Reuter, E.; Godde, B.; Reinsberger, C.; Voelcker-Rehage, C.; Vieluf, S. Classification of visuomotor tasks based on electroencephalographic data depends on age-related differences in brain activity patterns. Neural Netw. 2021, 142, 363–374.

- Chen, M.L.; Fu, D.; Boger, J.; Jiang, N. Age-Related Changes in Vibro-Tactile EEG Response and Its Implications in BCI Applications: A Comparison Between Older and Younger Populations. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 603–610.

- Zich, C.; Debener, S.; Thoene, A.K.; Chen, L.C.; Kranczioch, C. Simultaneous EEG-fNIRS reveals how age and feedback affect motor imagery signatures. Neurobiol. Aging 2017, 49, 183–197.

- Herweg, A.; Gutzeit, J.; Kleih, S.; Kübler, A. Wheelchair control by elderly participants in a virtual environment with a brain-computer interface (BCI) and tactile stimulation. Biol. Psychol. 2016, 121 Pt A, 117–124.

- Gomez-Pilar, J.; Corralejo, R.; Nicolas-Alonso, L.F.; Álvarez, D.; Hornero, R. Neurofeedback training with a motor imagery-based BCI: Neurocognitive improvements and EEG changes in the elderly. Med. Biol. Eng. Comput. 2016, 54, 1655–1666.

- Karch, J.D.; Sander, M.C.; von Oertzen, T.; Brandmaier, A.M.; Werkle-Bergner, M. Using within-subject pattern classification to understand lifespan age differences in oscillatory mechanisms of working memory selection and maintenance. NeuroImage 2015, 118, 538–552.

- Quek, S.Y.; Lee, T.-S.; Goh, S.J.A.; Phillips, R.; Guan, C.; Cheung, Y.B.; Feng, L.; Wang, C.C.; Chin, Z.Y.; Zhang, H.H.; et al. A pilot randomized controlled trial using EEG-based brain-computer interface training for a Chinese-speaking group of healthy elderly. Clin. Interv. Aging 2015, 10, 217–227.

- Lee, T.-S.; Goh, S.J.A.; Quek, S.Y.; Phillips, R.; Guan, C.; Cheung, Y.B.; Feng, L.; Teng, S.S.W.; Wang, C.C.; Chin, Z.Y.; et al. A brain-computer interface based cognitive training system for healthy elderly: A randomized control pilot study for usability and preliminary efficacy. PLoS ONE 2013, 8, e79419.

- Massetti, N.; Russo, M.; Franciotti, R.; Nardini, D.; Mandolini, G.M.; Granzotto, A.; Bomba, M.; Pizzi, S.D.; Mosca, A.; Scherer, R.; et al. A Machine Learning-Based Holistic Approach to Predict the Clinical Course of Patients within the Alzheimer’s Disease Spectrum. J. Alzheimer’s Dis. 2022, 85, 1639–1655.

- Luo, J.; Sun, W.; Wu, Y.; Liu, H.; Wang, X.; Yan, T.; Song, R. Characterization of the coordination of agonist and antagonist muscles among stroke patients, healthy late middle-aged and young controls using a myoelectric-controlled interface. J. Neural Eng. 2018, 15, 056015.

- Lim, C.G.; Lee, T.S.; Guan, C.T.; Fung, D.S.; Zhao, Y.; Teng, S.S.; Zhang, H.; Krishnan, K.R. A brain-computer interface based attention training program for treating attention deficit hyperactivity disorder. PLoS ONE 2012, 7, e46692.

- Kumar, D.P.; Srirama, S.N.; Amgoth, T.; Annavarapu, C.S.R. Survey on recent advances in IoT application layer protocols and machine learning scope for research directions. Digit. Commun. Netw. 2021, 8, 727–744.

- Marple, S.L. Digital Spectral Analysis with Applications; Prentice-Hall International, Inc.: Hoboken, NJ, USA, 1987.

- Mesin, L. Introduction to Biomedical Signal Processing; Ilmiolibro Self Publishing: Rome, Italy, 2017; ISBN 8892332481.

- Kumar, D.P.; Amgoth, T.; Annavarapu, C.S.R. Machine learning algorithms for wireless sensor networks: A survey. Inf. Fusion 2019, 49, 1–25.

- Theodoridis, S.; Koutroumbas, K. Pattern Recognition; Academic Press: Cambridge, MA, USA, 2008.

- Wolpaw, J.; Winter Wolpaw, E. (Eds.) Brain–Computer Interfaces: Principles and Practice; Oxford University Press: Oxford, UK, 2012.

- Cohen, L. Time-Frequency Distributions: A Review. Proc. IEEE 1989, 77, 941–981.

- Rioul, O.; Vetterli, M. Wavelets and signal processing. IEEE Signal Process. Mag. 1991, 8, 14–38.

- Mesin, L.; Holobar, A.; Merletti, R. Blind source separation: Application to biomedical signals. In Advanced Methods of Biomedical Signal Processing; Cerutti, S., Marchesi, C., Eds.; Wiley: Hoboken, NJ, USA, 2011; pp. 379–409.

- Koles, Z.J.; Lazaret, M.S.; Zhou, S.Z. Spatial patterns underlying population differences in the background EEG. Brain Topogr. 1990, 2, 275–284.

- Jatoi, M.A.; Kamel, N.; Malik, A.S.; Faye, I.; Begum, T. A Survey of Methods Used for Source Localization Using EEG Signals. Biomed. Signal Process. Control 2014, 11, 42–52.

- Mesin, L. Estimation of Complexity of Sampled Biomedical Continuous Time Signals Using Approximate Entropy. Front. Physiol. 2018, 9, 710.

- Mandelbrot, B. How Long is the Coast of Britain? Statistical Self-Similarity and Fractional Dimension. Science 1967, 156, 636–638.

- Park, H.; Friston, K. Structural and functional brain networks: From connections to cognition. Science 2013, 342, 1238411.

- Ibáñez-Molina, A.J.; Soriano, M.F.; Iglesias-Parro, S. Mutual Information of Multiple Rhythms for EEG Signals. Front. Neurosci. 2020, 14, 574796.

- Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 2000, 85, 461–464.

- Arnhold, J.; Grassberger, P.; Lehnertz, K.; Elger, C.E. A robust method for detecting interdependences: Application to intracranially recorded EEG. Phys. D 1999, 134, 419–430.

- Lachaux, J.; Rodriguez, E.; Martinerie, J.; Varela, F. Measuring phase synchrony in brain signals. Hum. Brain Mapp. 1999, 8, 194–208.

- Granger, C.W.J. Investigating Causal Relations by Econometric Models and Cross-Spectral Methods. Econometrica 1969, 37, 424–438.

- Rubinov, M.; Sporns, O. Complex network measures of brain connectivity: Uses and interpretations. NeuroImage 2010, 52, 1059–1069.

- Hyafil, A.; Giraud, A.-L.; Fontolan, L.; Gutkin, B. Neural cross-frequency coupling: Connecting architectures, mechanisms, and functions. Trends Neurosci. 2015, 38, 725–740.

- Roweis, S.T.; Saul, L.K. Nonlinear Dimensionality Reduction by Locally Linear Embedding. Science 2000, 290, 2323–2326.

- Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear Discriminant Analysis. In Robust Data Mining; Springer Briefs in Optimization; Springer: New York, NY, USA, 2013.

- Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723.

- Rencher, A.C.; Schaalje, G.B. Linear Models in Statistics; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2008.

- Quinlan, R. Learning efficient classification procedures. In Machine Learning: An Artificial Intelligence Approach; Michalski, R.S., Carbonell, J.G., Mitchell, T.M., Eds.; Morgan Kaufmann: Burlington, MA, USA, 1983; pp. 463–482.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall: Hoboken, NJ, USA, 1999.

- Deng, L.; Yu, D. Deep Learning: Methods and Applications. Found. Trends Signal Process. 2014, 7, 197–387.

| Reference | Number of Participants | Females/Males (%) | Age Range | Age in Years, M (±SD) | Neuropsychological (NP) Assessment | Control Group |
|---|---|---|---|---|---|---|
| Li et al., 2022 [7] | 20 | 70/30 | | 66 | no | Younger adults |
| Goelz et al., 2021 [8] | 26 | 61.5/38.5 | 55–65 | | no | Younger adults |
| Chen et al., 2019 [9] | 22 | 72.7/27.3 | over 55 | 72 (±8.1) | no | Younger adults |
| Zich et al., 2017 [10] | 37 | 55.6/44.4 | | 62.6 (±5.7) | yes | Younger adults |
| Herweg et al., 2016 [11] | 10 | 60/40 | 50–73 | 60 (±6.7) | no | no |
| Gomez-Pilar et al., 2016 [12] | 63 | 65.1/34.9 | 60–81 | | yes | Older adults without training |
| Karch et al., 2015 [13] | 52 | | 70–75 | 73.3 | yes | Children and younger adults |
| Lee et al., 2015 [14] | 39 | 69.2/30.8 | | 65.2 (±2.8) | yes | Older adults without training |
| Lee et al., 2013 [15] | 31 | 60/40 | | 65.1 (±2.9) | yes | Older adults without training |

| Reference | Intrinsic Capacity | BCI Assessment | Spatial Filtering | Feature Processing | Classification (Translation) |
|---|---|---|---|---|---|
| Li et al., 2022 [7] | Cognition and Vitality | Motor imagery | ICA | CNN | CNN |
| Goelz et al., 2021 [8] | Sensory and Locomotor | Visuo-motor task | ICA | FBCSP | LDA |
| Chen et al., 2019 [9] | Sensory | Vibro-tactile | CSP | CSP | LDA |
| Zich et al., 2017 [10] | Cognition | Motor imagery | CSP | | LDA |
| Herweg et al., 2016 [11] | Sensory | Tactile sensibility | | ERP | SWLDA |
| Gomez-Pilar et al., 2016 [12] | Cognition | Motor imagery | ICA, Laplacian | Power of EEG rhythms | Power of EEG rhythms |
| Karch et al., 2015 [13] | Cognition | Working memory performance | CSP | | LDA |
| Lee et al., 2015 [14] | Cognition | Cognitive training program | CSP | Power of EEG rhythms | Score based on CSP and PSD |
| Lee et al., 2013 [15] | Cognition | Memory and attention training program | CSP | Power of EEG rhythms | Score based on CSP and PSD |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mesin, L.; Cipriani, G.E.; Amanzio, M. Electroencephalography-Based Brain–Machine Interfaces in Older Adults: A Literature Review. Bioengineering 2023, 10, 395. https://doi.org/10.3390/bioengineering10040395