An Improved Canonical Correlation Analysis for EEG Inter-Band Correlation Extraction

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets and Preprocessing

2.2. Differential Entropy

2.3. Canonical Correlation Analysis

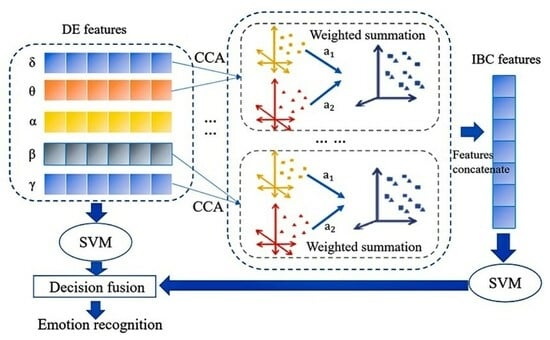

2.4. Inter-Band Correlation Features Based on CCA

1. Identify the linear combination of variables in each set such that the correlation coefficient between the two combinations is maximized.

2. From the remaining linear combinations, select the pair that is uncorrelated with the previously selected pairs and has the highest correlation coefficient.

3. Repeat step 2 until all correlations between the two sets of variables have been captured by the synthetic (canonical) variables.
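The steps above can be sketched with a few lines of NumPy. This is a minimal illustration of standard CCA only, not the improved variant proposed in this paper; the function name `cca`, the regularization parameter `reg`, and the synthetic data are assumptions introduced for the example. Instead of extracting the pairs one at a time, the sketch whitens both variable sets and takes a singular value decomposition, which yields all mutually uncorrelated pairs at once, ordered by decreasing correlation.

```python
import numpy as np


def cca(X, Y, reg=1e-8):
    """Standard CCA via whitening and SVD (illustrative sketch).

    X: (n_samples, p) array, Y: (n_samples, q) array.
    Returns (A, B, rho): columns of A and B are the weight vectors of the
    paired linear combinations, rho holds the canonical correlations in
    descending order. `reg` is a small ridge term (an assumption of this
    sketch) that keeps the covariance matrices positive definite.
    """
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    n = X.shape[0]

    # Sample covariance and cross-covariance matrices.
    Sxx = X.T @ X / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Y.T @ Y / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = X.T @ Y / (n - 1)

    # Cholesky factors: Sxx = Lx Lx^T, Syy = Ly Ly^T.
    Lx = np.linalg.cholesky(Sxx)
    Ly = np.linalg.cholesky(Syy)

    # Whitened cross-covariance M = Lx^{-1} Sxy Ly^{-T}.
    M = np.linalg.solve(Lx, Sxy)
    M = np.linalg.solve(Ly, M.T).T

    # Singular values of M are the canonical correlations (step 1 gives the
    # largest; steps 2 and 3 give the remaining, mutually uncorrelated pairs).
    U, s, Vt = np.linalg.svd(M, full_matrices=False)

    # Map the singular vectors back to the original variable spaces.
    A = np.linalg.solve(Lx.T, U)
    B = np.linalg.solve(Ly.T, Vt.T)
    return A, B, np.clip(s, 0.0, 1.0)


if __name__ == "__main__":
    # Synthetic data: one latent signal shared by both variable sets.
    rng = np.random.default_rng(0)
    z = rng.standard_normal((500, 1))
    X = np.hstack([z + 0.1 * rng.standard_normal((500, 1)),
                   rng.standard_normal((500, 2))])
    Y = np.hstack([rng.standard_normal((500, 1)),
                   z + 0.1 * rng.standard_normal((500, 1))])
    A, B, rho = cca(X, Y)
    print("canonical correlations:", rho)
```

In an inter-band setting, `X` and `Y` would hold the features of two frequency bands, with one row per sample; how those matrices are actually built is defined by the method in this section rather than by this sketch.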

2.5. Decision-Level Fusion

2.6. Cross-Validation

3. Results

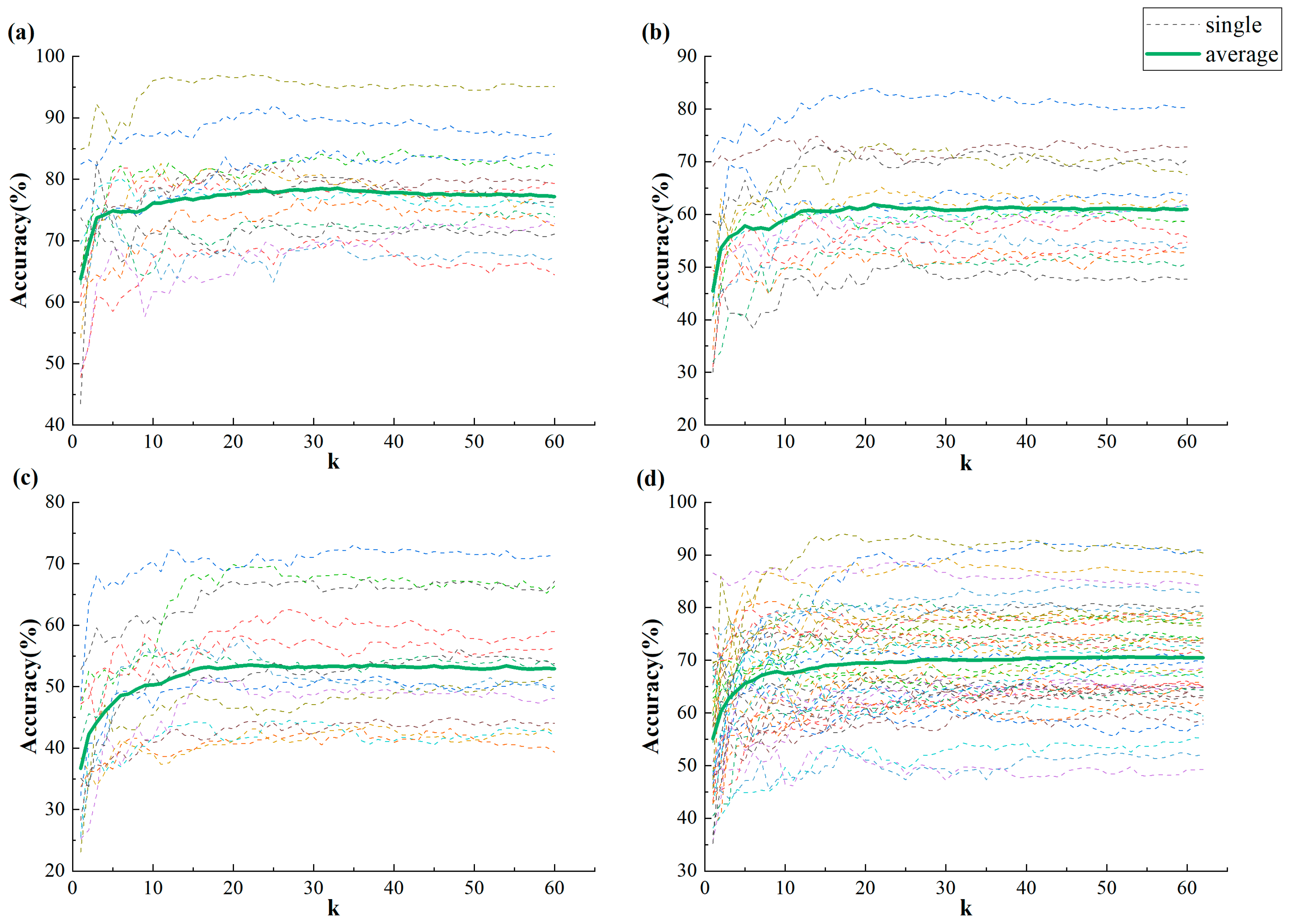

3.1. Validity of IBC Features

3.2. Classification Results

4. Discussion

4.1. The Validity of IBC Features

4.2. Information Complementarity between IBC and DE Features

4.3. Computational Complexity of IBC Features

4.4. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Subject | CUMULATE3 CC | CUMULATE4 CC | CUMULATE5 CC |
|---|---|---|---|
| S1 | 0.71 | 0.70 | 0.76 |
| S2 | 0.75 | 0.76 | 0.65 |
| S3 | 0.74 | 0.72 | 0.80 |
| S4 | 0.66 | 0.57 | 0.72 |
| S5 | 0.76 | 0.72 | 0.81 |
| S6 | 0.72 | 0.72 | 0.83 |
| S7 | 0.76 | 0.67 | 0.77 |
| S8 | 0.73 | 0.66 | 0.54 |
| S9 | 0.74 | 0.61 | 0.59 |
| S10 | 0.81 | 0.69 | 0.75 |
| S11 | 0.72 | 0.75 | 0.68 |
| S12 | 0.73 | 0.62 | 0.74 |
| S13 | 0.84 | 0.84 | 0.83 |
| S14 | 0.68 | 0.63 | 0.69 |
| S15 | 0.85 | 0.54 | 0.77 |
| AVG | 0.75 | 0.68 | 0.73 |
| STD | 0.05 | 0.07 | 0.09 |

| Subject | SEEDday1 CC | SEEDday2 CC | SEEDday3 CC |
|---|---|---|---|
| S1 | 0.75 | 0.66 | 0.51 |
| S2 | 0.53 | 0.44 | 0.75 |
| S3 | 0.34 | 0.66 | 0.59 |
| S4 | 0.78 | 0.76 | 0.49 |
| S5 | 0.75 | 0.82 | 0.83 |
| S6 | 0.59 | 0.71 | 0.67 |
| S7 | 0.75 | 0.81 | 0.67 |
| S8 | 0.58 | 0.68 | 0.77 |
| S9 | 0.82 | 0.81 | 0.79 |
| S10 | 0.70 | 0.54 | 0.78 |
| S11 | 0.77 | 0.76 | 0.75 |
| S12 | 0.81 | 0.74 | 0.75 |
| S13 | 0.72 | 0.76 | 0.72 |
| S14 | 0.79 | 0.66 | 0.67 |
| S15 | 0.71 | 0.66 | 0.71 |
| AVG | 0.69 | 0.70 | 0.70 |
| STD | 0.13 | 0.10 | 0.10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Z.; Huang, R.; Yan, Y.; Luo, Z.; Zhao, S.; Wang, B.; Jin, J.; Xie, L.; Yin, E. An Improved Canonical Correlation Analysis for EEG Inter-Band Correlation Extraction. Bioengineering 2023, 10, 1200. https://doi.org/10.3390/bioengineering10101200
