1. Introduction

Recent advancements in brain-computer interface (BCI) research have significantly impacted the field of artificial intelligence, introducing effective new research methodologies. BCI systems utilize brain signals to control external devices, facilitating direct human-computer communication and interaction [

1]. These systems have been successfully applied in various domains. By decoding brain signals from the cerebral cortex, BCIs offer insights into an individual’s intentions and enable a variety of operations on external devices. In medical settings, BCIs are increasingly recognized for their potential to assist patients with neural impairments to regain autonomous movement. Specifically, Motor Imagery (MI)-based BCIs have demonstrated promising results in the rehabilitation of stroke patients [

2]. This breakthrough has the potential to significantly improve the quality of life for individuals with disabilities. In addition, brain-machine interfaces have contributed significantly to sleep research [

3]. The progression in BCI technology not only furthers artificial intelligence but also shows immense potential for improving healthcare and fostering human-machine integration [

4]. MI facilitates structural and functional reorganization in stroke rehabilitation [

5], achieved through the repeated activation of motor-neuron circuits. This process repairs connections between damaged neurons via neural plasticity, leading to improvements in motor dysfunction. Research into the recognition capabilities of brainwave signals is not only crucial for understanding brain behavior but also instrumental in advancing the medical and health industries [

6], underscoring its importance for future technological developments.

The electroencephalogram (EEG), a conventional technique for capturing temporal fluctuations in brain activity, faces significant challenges regarding the quality of its signals. These EEG signals are often marred by various artifacts, both physiological and non-physiological. A critical step in EEG analysis is the removal of baseline power [

7]. This process is not only fundamental but also essential in the feature processing of EEG signals. Researchers have developed a method known as InvBase for baseline correction. Experimental evidence has shown that this method effectively improves classification accuracy [

8].

In the array of BCI paradigms [

9], MI-based BCI is distinguished as a crucial subset within this field. MI-BCI primarily focuses on the identification and analysis of sensorimotor rhythm (SMR) signals [

10] to decode cerebral signals into actionable commands for machines [

11]. In the foundational studies of MI, the concept of Event-Related Desynchronization/Synchronization (ERD/ERS) was introduced by the BCI Laboratory at the Graz University of Technology in Austria. This concept serves as a key framework for differentiating MI tasks [

12]. MI is conceptualized as a cognitive process in the human brain, not culminating in physical actions [

13]. MI-BCI represents a leap beyond conventional paradigms, establishing a communication link between humans and machines. This connection enables cerebral control over devices such as wheelchairs and exoskeletons. It also advances neural rehabilitation based on neuroplasticity theories [

14]. These developments position MI-BCI as a powerful tool in neural rehabilitation [

15]. Early results have shown promising potential for the application of MI-BCI in stroke rehabilitation therapies [

16]. A significant challenge in the BCI field lies in the enhancement of motor imagery classification and recognition. Addressing the need for more effective classification algorithms is crucial. Improving recognition accuracy in MI-based BCIs is especially vital in the context of medical research [

17].

Numerous scholars have delved into research to enhance accuracy in this domain. Tong Guofeng and colleagues utilized a Deep Belief Network (DBN) model for classifying hyperspectral remote sensing images. They refined the training process of the DBN and validated their approach with the Salinas hyperspectral remote sensing image dataset [

18]. This method demonstrated a notable improvement in classification accuracy compared to traditional approaches. Kamada S. and Ichimura T. also applied the DBN model, this time for video information classification. They adopted an adaptive learning method to determine the optimal network structure during training [

19], achieving satisfactory levels of accuracy. Various EEG processing methods based on ERD and ERS have been proposed, including Common Spatial Patterns (CSP) [

20], autoregressive models, wavelet transforms, and DBN. With the advancement in deep learning research, DBN has emerged as an effective technique for EEG signal processing. Numerous derivative methods based on DBN have been developed, such as sparse-DBN [

21] and the Frequency-Domain Deep Belief Network (FDBN). The multiband FDBN, in particular, has shown an average accuracy improvement compared to the FDBN algorithm [

22]. This advancement suggests the potential for optimizing DBN using the sparrow search algorithm (SSA). Furthermore, DBNs have been extensively applied across various fields. While they exhibit strong classification capabilities in these applications, DBNs often lack effective optimization strategies and are susceptible to becoming trapped in local optima, which can indirectly impact classifier performance [

23]. There is a pressing need for an enhanced search algorithm to fine-tune the hyperparameters of DBN for superior classification performance.

Recent research developments, notably in 2020, saw Xue et al. introduce a new optimization algorithm known as the Sparrow Search Algorithm (SSA) [

24]. This algorithm is distinguished by its robust optimization capability and rapid convergence speed [

25,

26]. Yin implemented SSA to optimize kernel coefficients and penalty coefficients for SVM classifiers, comparing the results with those from three traditional classifiers, namely CNN and SVM. The SSA-SVM classifier exhibited significant improvements overall [

27]. The SSA algorithm, with its dual strengths in global and local search capabilities, effectively determines the hyperparameters of DBN, thus addressing DBN networks’ limitations. By optimizing DBN networks with SSA, we can enhance the classifier’s recognition ability. This approach has opened new avenues in the field of MI-BCI.

The primary contributions to this paper are as follows:

We introduce a Sparrow Search Algorithm-based optimization for a Deep Belief Network model, specifically for classifying EEG data, providing a novel approach to EEG signal classification.

To tackle the challenge of poor stability in motor imagery EEG data quality, our study integrates motor observation with motor imagery. This novel experimental paradigm enhances the quality of the EEG data collected for the experiment.

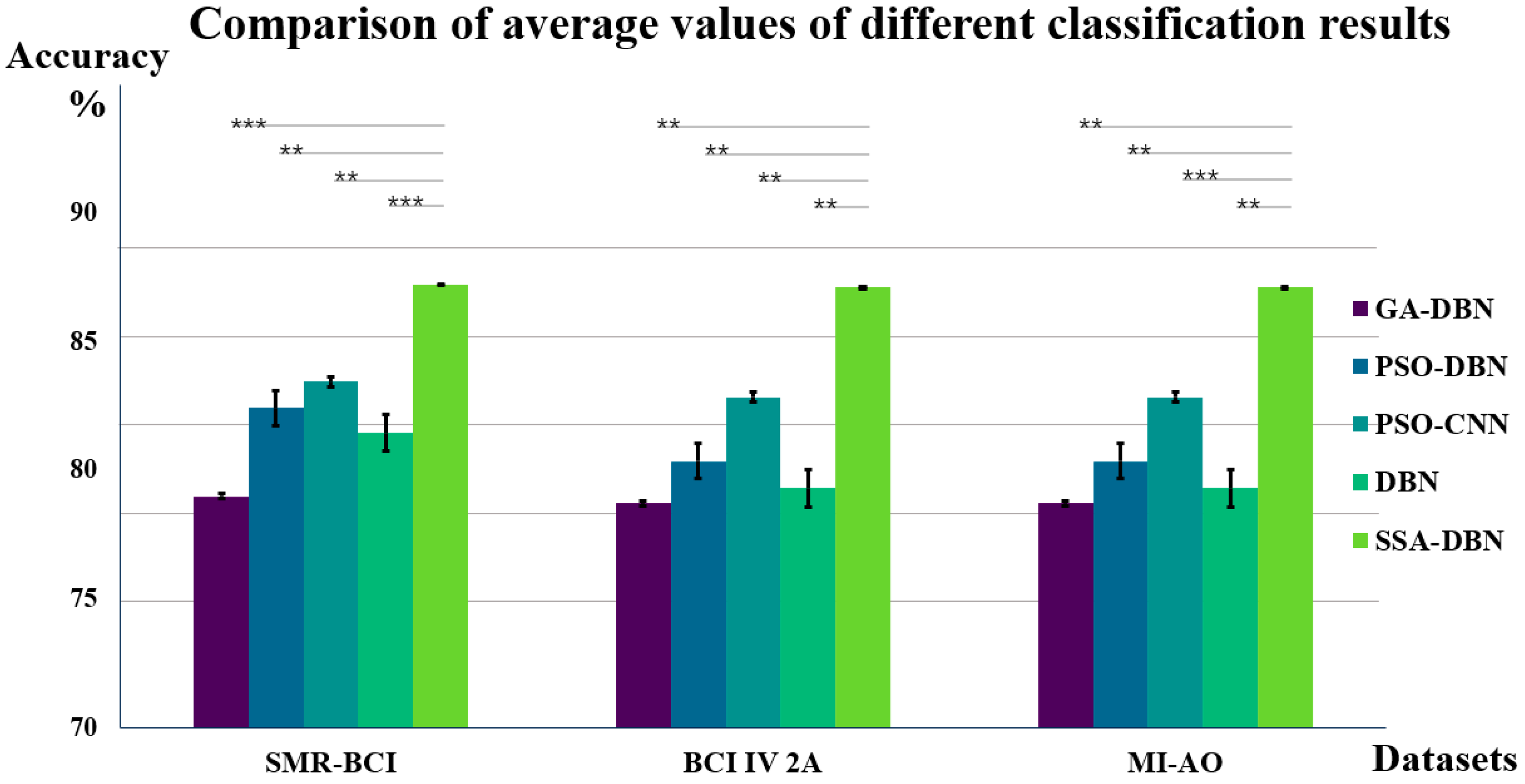

The article employed various classification methods besides the SSA-DBN algorithm, namely DBN, GA-DBN, PSO-CNN, and PSO-DBN. Upon comparing the classification results of these algorithms, it was observed that the SSA-DBN algorithm exhibits superior classification capabilities.

2. Related Work

In this study, we employ the SSA-DBN approach to develop a framework for recognizing MI in EEG signals. Initially, we selected one self-collected dataset and two publicly available datasets, namely BCI IV 2a and SMR-BCI, to assess the classifier’s performance. During the data processing phase, we utilized EMD in tandem with the Hilbert-Huang Transform (HHT) for feature extraction from EEG signals. This method effectively captures the ERD and ERS phenomena, crucial markers of MI tasks. The application of SSA enabled us to fine-tune the DBNs hyperparameters, leading to enhanced recognition capabilities. Our approach demonstrated impressive performance not only on our private dataset but also on the BCI IV 2a and SMR-BCI public datasets. Relative to the unoptimized DBN network, we observed a 10.38% improvement in classification accuracy on our self-collected dataset. For the BCI IV 2a and SMR-BCI datasets, the accuracy enhancements were 9.33% and 5.57%, respectively. Overall, our proposed algorithm offers a new perspective on MI study, with the potential to contribute significantly to advancements in BCI technology.

The remainder of this paper is organized as follows:

Section 3 details the materials and methods.

Section 4 details the results.

Section 5 and

Section 6 present the discussion and conclude, respectively. This paper Limitations and Futures, with

Section 7.

3. Materials and Methods

3.1. Dataset

3.1.1. Experimental Design and Experimental Paradigm

Two computers are involved in the experimental setup: one for the participant (PC1) and one for the experimenter (PC2). Unity 3D software is equipped on PC1, through which the experimental paradigm is designed and developed. Instructions from PC2 are received by PC1 via the TCP communication protocol, and the starting time point are recorded for subsequent data alignment. MATLAB is installed on PC2, through which time alignment is conducted and the start signal is sent Real-time EEG data from the participant are acquired and stored utilizing the Curry8(8.0.3) software [

28].

For the acquisition of EEG data, the international 10–20 system standard is adhered to with the employment of a 32-channel EEG cap. The EEG signals are relayed using a portable EEG signal amplifier. Through hardware connections, the EEG cap is linked to the amplifier. The framework of the experimental design is graphically represented in

Figure 1, outlining the EEG signal acquisition system.

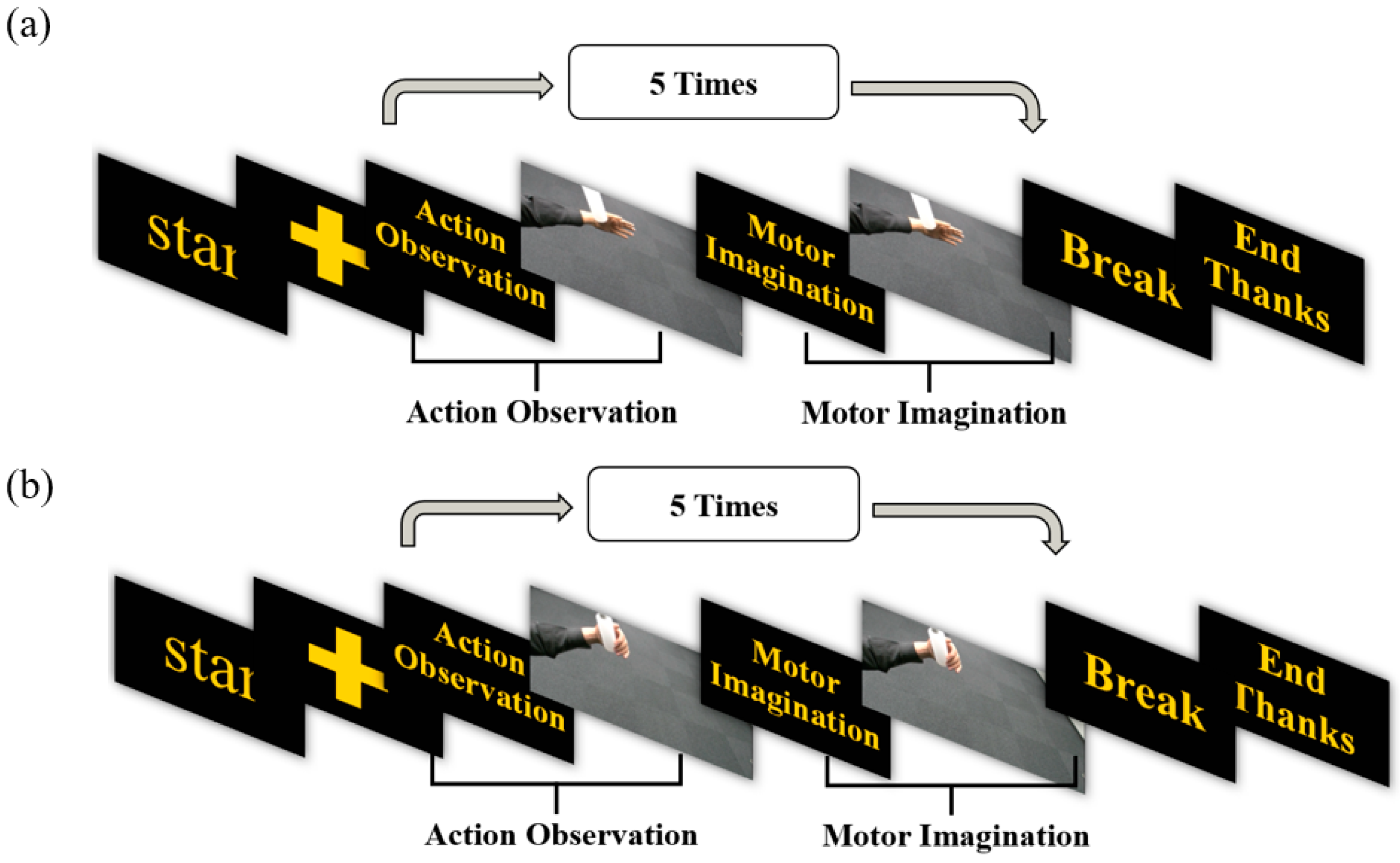

3.1.2. Experimental Paradigm

Based on the MI-BCI system, the experimental paradigm for this study is crafted, and the action observation (AO) state is integrated. Each procedure is segmented into four distinct states: 3 s dedicated to AO, 3 s for MI, a 2-s intermission for rest, and a cue phase lasting 2 s. Comprising these four states is a single cycle, and eight such cycles make up each task. A visual representation of this paradigm is provided in

Figure 2.

3.1.3. Data Preprocessing

The EEG data of eight subjects were collected in this experiment, and all experiments were completed in the laboratory of Tianjin University. The experiment was approved by the Ethics Committee of Tianjin University (TJUE-2021-138). Original EEG signals are intrinsically faint, making the experimental data collection prone to artifacts. To counteract this vulnerability, a series of preprocessing measures are adopted. Initially, the gathered data are subjected to independent component analysis (ICA) to eliminate artifacts [

29]. In our study, the EEG data undergoes a downsampling process to achieve a resolution of 512 Hz. Subsequently, it is filtered using a Butterworth filter within the 0.5–40 Hz frequency range. This filtering technique is crucial for effectively removing noise from brainwave signals [

30]. The EEG guides this selection of attributes pertinent to MI-AO. Post-preprocessing, the EEG datasets are segmented. The central focus of this research revolves around the analysis of EEG data under two conditions: observation and imagination. Furthermore, temporal markers for each experimental condition are precisely set by the experimental paradigm. Valid data segments are identified as spanning 1 s before and 3 s after each condition. The data collected in the first second before the commencement of the experiment are utilized for baseline correction. This step is essential to reduce or eliminate any drift in the EEG signals. The initial three seconds of the experiment capture the pertinent task segment, as defined by our experimental paradigm. Each data acquisition encompasses 35 channels, a procedure reiterated eight times, culminating in a comprehensive total of 35 × 512 × 4 × 8 sampling points per experiment.

3.2. Public Datasets

3.2.1. SMR-BCI Dataset

The Korea University has furnished a motion imagery SMR-BCI dataset [

31], encompassing EEG data from a cohort of 14 subjects, delineated as S01 through S14. This dataset is bifurcated into two distinct MI task categories: the imagined movement of the right hand and the conjoint imagined movement of both feet. The EEG data for each participant are systematically divided into a training set and a testing set. The training compilation is composed of 100 MI trials, averaging 50 trials for each MI task delineation. Concurrently, the testing ensemble comprises 60 MI trials, averaging 30 trials per MI task category.

3.2.2. BCI Competition IV Dataset 2a

In this investigation, the BCI Competition IV Dataset 2a (BCI IV 2a) [

32] was employed for validation. The BCI IV 2a dataset, adhering to the international standard 10–20 system, encompasses EEG signals associated with MI and is derived from 9 subjects. Four distinct motor states, namely left hand, right hand, foot, and tongue, are represented, and these are aligned with the categories 0, 1, 2, and 3, respectively. Within the BCI IV 2a dataset, data from each subject are composed of signals emanating from two experimental rounds. Within each round, six experimental sets are included, with each set encompassing 48 MI state trials. As a result, comprehensive 288 MI trials are presented in the BCI IV 2a dataset for analysis and performance classification evaluation.

3.3. Method

3.3.1. Sparrow Search Algorithm

Xue and Shen introduced a SSA that mimics the foraging behavior of sparrows [

24]. This algorithm is characterized by its simplicity in principle, minimal parameter adjustments, and ease of programming. In comparison to Particle Swarm Optimization (PSO) [

33] and Genetic Algorithm (GA) [

34] in terms of function optimization, SSA demonstrates superior search capabilities. One notable advantage of the Sparrow Search Algorithm is its relatively low temporal and spatial complexity [

35], making it well-suited for addressing large-scale problems. The spatial complexity of SSA is primarily influenced by the search space size, usually requiring modest memory resources. However, the temporal complexity of the algorithm may escalate when dealing with extensive problems, depending on the breadth and depth of the search space. The procedural steps of the algorithm are illustrated in

Figure 3.

The computational trajectory of the algorithm unfolds as follows [

27]:

First, represent a population of

Sparrows as

-d-dimensional vectors using matrices,

In the above equation,

represents the position of the

dimension of the

sparrow; The fitness of the sparrow population can be represented as:

In the above equation,

is the fitness of an individual sparrow.

represents the regional data of the

Sparrow in the

dimension.

represents a randomly distributed number.

and

represent the indicative number and the security number, respectively.

represents a symbol conforming to a normal distribution. When

is arriving at the position of the joiner. The updated formula is as follows:

Follows

is a

matrix, and

When

is in a state of surveillance and early warning behavior. The updated formula is as follows:

where

and

represent the global best fitness value and the global worst fitness value, respectively.

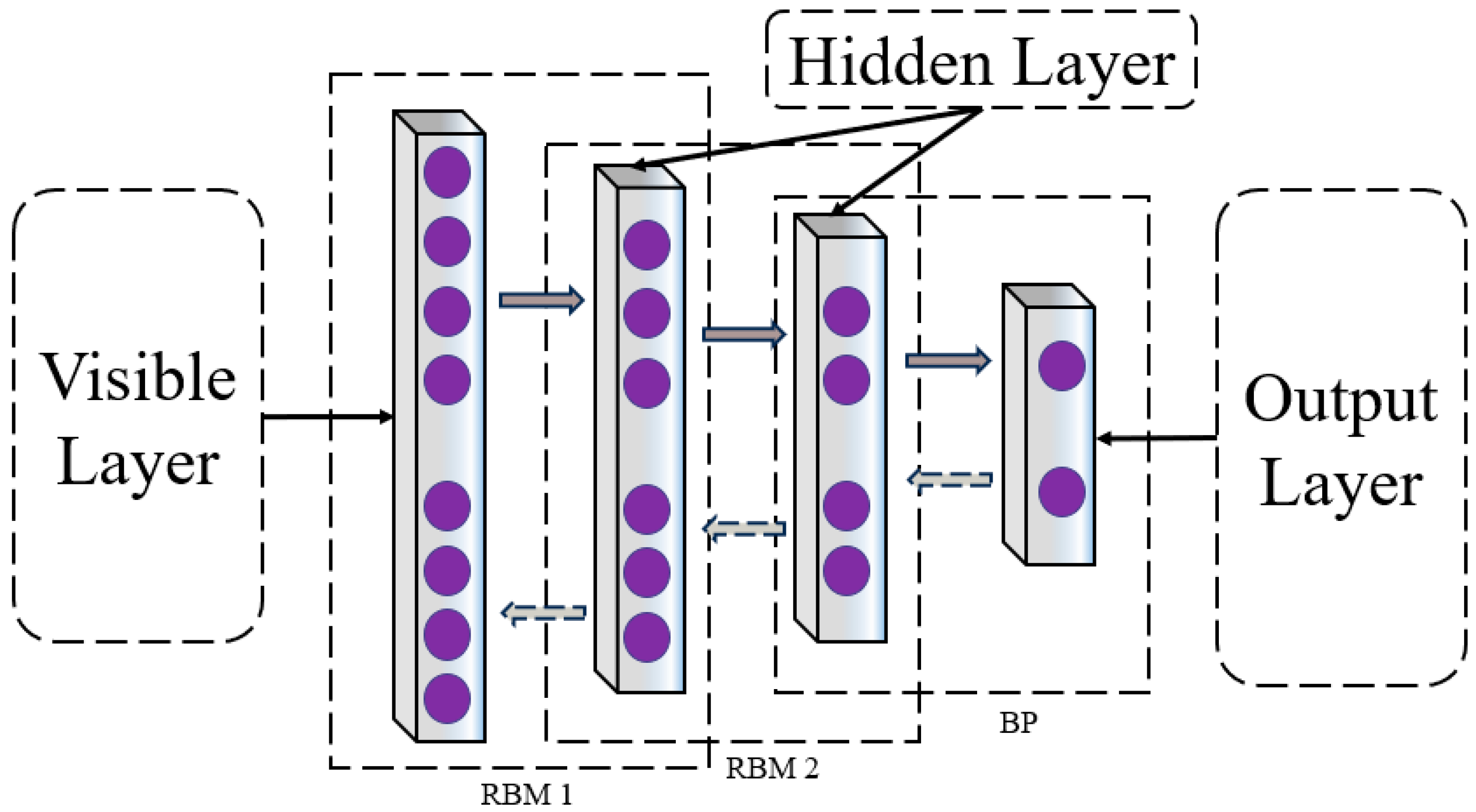

3.3.2. Deep Belief Networks

DBN epitomizes a class of neural networks adept at executing both supervised and unsupervised learning paradigms. The distinctive architecture of the DBN is delineated in the subsequent diagram. This network is an amalgam of multiple strata of Restricted Boltzmann Machines (RBM), crowned by a solitary layer of backpropagation (BP) networks. The RBM, a bidirectional, fully connected neural network structure, is composed of visible and hidden layers, serving as a linchpin in optimizing the overarching DBN network, the DBN network structure is shown in

Figure 4. Upon fine-tuning the RBMs, the DBN is poised to distill high-level features [

36].

In the unsupervised training scenario, it is crucial to ensure that the feature vectors of the trained RBM network can be mapped to different feature spaces while retaining their informative characteristics. The DBN network proceeds to extract the feature information layer by layer, with the BP network designated in the last layer to retrieve the output feature vectors from RBM for supervised classification training. As illustrated in the diagram, it is apparent that although each RBM network operates independently and does not engage in the mapping of feature vectors from other RBM network layers, the training regimen of RBM facilitates the initialization of weight parameters for each deep layer of the BP network. This assists the network in averting the challenges of local optima and extended training durations that may arise due to the random initialization of weight parameters in the BP network.

The principle of the DBN network is delineated as follows: During supervised fine-tuning training, forward propagation is carried out to obtain specific output values from the input. Thereafter, backward adjustment is applied to propagate and update the weight values

and bias terms

of the network. During forward propagation, pre-trained weights

and biases

are utilized to compute the activation values of each hidden neuron.

where

is the index value of the layer in the neural network, and the values of

and

are as follows:

where

represents the weights, and then the activation values of each hidden neuron in each layer are calculated. Afterwards, they are normalized.

Finally, we obtain the activation function

and output values

of the output layer.

In backward propagation, the backpropagation algorithm is used with the mean squared error criterion to update the parameters of the entire network. The cost function is as follows:

where,

is the squared error of DBN learning, where

and

represent the output and ideal output of the output layer, respectively.

is the sample index, and

represents the parameters of the weights and biases to be learned in layer

.

The gradient descent method is used to update the weights and biases of the network, where

represents the learning rate.

Based on the above discussion, by selecting appropriate numbers of hidden layers, determining the number of neurons in each layer, and setting the learning rate, followed by iterating through a specified number of times during training, we can achieve the desired network mapping model.

3.3.3. The SSA-DBN Method

DBNs have manifested encouraging performance in the realms of feature extraction and classification. Nevertheless, the optimal network structure of DBNs is pivotal for their performance. To tackle this, the SSA can be harnessed to optimize the DBNs. SSA identifies the Sparrow individual exhibiting the highest fitness, symbolizing the optimal position of Sparrow, to configure the optimal network structure of the DBN, culminating in the SSA-DBN classification recognition model.

The SSA-DBN model is bifurcated into two modules: the data preprocessing module and the SSA-DBN module. The data preprocessing module readies the raw data for input into the SSA-DBN module. The SSA-DBN module executes the classification and recognition of the input data. This entails training the module with the training dataset to optimize its parameters and achieve the optimal classification recognition model. Subsequently, the test dataset is channeled into the trained SSA-DBN module to obtain classification outcomes.

The algorithm unfolds as follows: Initially, a specific number of Sparrow populations are randomly initialized, and relevant parameters are established. The fitness function F(X) is evaluated to identify the optimal value. Subsequently, the positions of the Sparrows are updated, and the fitness function’s optimal value is recalculated. If the discerned optimal value aligns with the global optimum, the parameter X corresponds to the optimal network structure of DBN. Otherwise, the updating of the Sparrows’ positions continues until either the optimal value is attained or the maximum iteration limit is reached. Ultimately, based on the obtained parameters, the optimal classification model is constructed for recognition and classification tasks. The SSA algorithm boasts a higher convergence speed, augmented accuracy, and a lower propensity for entrapment in local optima, showcasing a global characteristic. Employing SSA to optimize DBN guides the Sparrow to the optimal position, as depicted in

Figure 5 of the model.

3.4. Feature Extraction: Empirical Mode Decomposition and the Hilbert–Huang Transform

The technique of EMD is extensively utilized for the analysis of nonlinear and non-stationary signals, exhibiting utility in the extraction of features from such signals. EMD operates by decomposing a signal into Intrinsic Mode Functions (IMFs), which epitomize unique patterns inherent in the data. IMFs render valuable insights into the frequency range characteristics of the original EEG signal, thereby enabling effective feature extraction [

37].

Nevertheless, EMD may be plagued by issues such as mode mixing and the presence of residual white noise in the intrinsic mode components. To ameliorate these challenges, a fully adaptive noise Complete Ensemble Empirical Mode Decomposition (CEEMD) method is proposed. The decomposition procedure encompasses the following steps:

In the above equation,

is the signal to be decomposed;

is the Gaussian white noise weighting coefficients;

= 1, 2, 3…;

is the Gaussian white noise generated during the

iteration. Perform EMD decomposition on the sequence

.

In the above equation,

represents the first mode component;

represents the Residual signal after the first decomposition.

In the equation, denotes the component of the order mode procured post-CEEMDAN decomposition. This procedure is reiterated until one of the following conditions emerges, thereby concluding the EMD decomposition process:

Achievement of the order IMF component-; The magnitude of the residual signal, termed , falls below a predefined threshold. The residual signal, denoted as , manifests as either a monotonic function or a constant.

The HHT serves to transform the foundational elements of a signal, typically sinusoidal signals, into Intrinsic Mode Functions (IMFs). From a theoretical perspective, HHT lays the groundwork for the analysis of oscillating frequencies inherent to non-stationary signals. Through the application of the Hilbert transform to every mode component that fulfills the IMF criteria, one can deduce the corresponding instantaneous frequency along with the instantaneous amplitude of the IMF.

where

is the mode component satisfying the IMF condition. The corresponding instantaneous frequency is represented as follows:

Therefore, we can obtain:

By the formula of EMD, we can derive:

By using the above equation, we can obtain the Hilbert HHT time-frequency spectrogram as follows:

Further, we can obtain the Hilbert marginal spectrum:

In this research, the marginal spectrum for each state is analyzed with a window length and shift of 1 Hz. This analysis yields 30 distinct feature vectors per state.

3.5. Parameter Optimization for Classification

To enhance the performance of the DBN network within the SSA-DBN model, the structural parameters of DBN are optimized using the SSA method, and specific parameters are established. The specific parameters of the SSA iteration process are shown in

Table 1.

Drawing from the SSA optimization procedure, we identify the peak fitness function F(X), facilitating the determination of the DBNs structural parameters, denoted as X. Subsequently, the DBN is formulated, leveraging the optimization outcomes from the SSA to ascertain the optimal neuron count, termed Best_pos. Within this framework, the implications of diverse output parameters are delineated in

Table 2.

In the present research, a three-layered RBM model of the DBN is employed for the extraction of features. Initially, the neuron count in each network layer is established as Best_pos (1,1), Best_pos (1,2), and Best_pos (1,3), in sequence. Following this, the BP classifier is utilized for the classification task. During the training phase of the RBMs, parameters are configured as follows: The number of iterations is 300, the batch of training samples is 30, and the learning rate factor is (1,4).

For the transition from DBN to a deep reverse fine-tuning network, the command “nn” = dbnunfoldtonn(DBN, ) is implemented, where symbolizes the quartet of classification outcomes. These outcomes resonate with the four classification recognition outputs from the BCl IV 2a public dataset. During the reverse fine-tuning phase, the parameters are as follows: The number of reverse fine-tuning iterations is Best_pos (1,5), the learning rate factor is 0.001, the number of training samples per iteration is 30, and the activation function is SoftMax.

3.6. The Paired Samples t-Test

The paired sample

-test, a widely used statistical method [

38], is employed in this study to conduct significance testing between the SSA-DBN classification algorithm and other competing classification algorithms. Specifically, we assess the performance of the SSA-DBN algorithm within the SMR-BCI dataset by comparing it against other algorithms. The fundamental steps for conducting a significance test between the SSA-DBN algorithm and the DBN algorithm include:

Proposing the Hypothesis : Here, posits that no significant difference exists in the mean values of the two samples, while suggests a significant difference in these mean values.

Set the significance level -value: In this verification, the significance level -value is set at 0.05.

Calculate the statistical

-value:

In the above formula, and represent the sample size, represent the sample variance, and and represent the mean values of the samples.

Calculation of the -test -value: Based on the formula, the -value is calculated. Assuming the null hypothesis holds, the statistic conforms to a -distribution with specific degrees of freedom. The -value can be derived using a -distribution table or corresponding functions. A -value less than 0.05 leads to the rejection of , thereby supporting . This indicates a significant disparity in the mean values between the two methods.

4. Results

In this paper, data from eight subjects harvested from a self-collected dataset are meticulously processed through a series of preprocessing and feature extraction stages, employing the SSA-DBN methodology for classification.

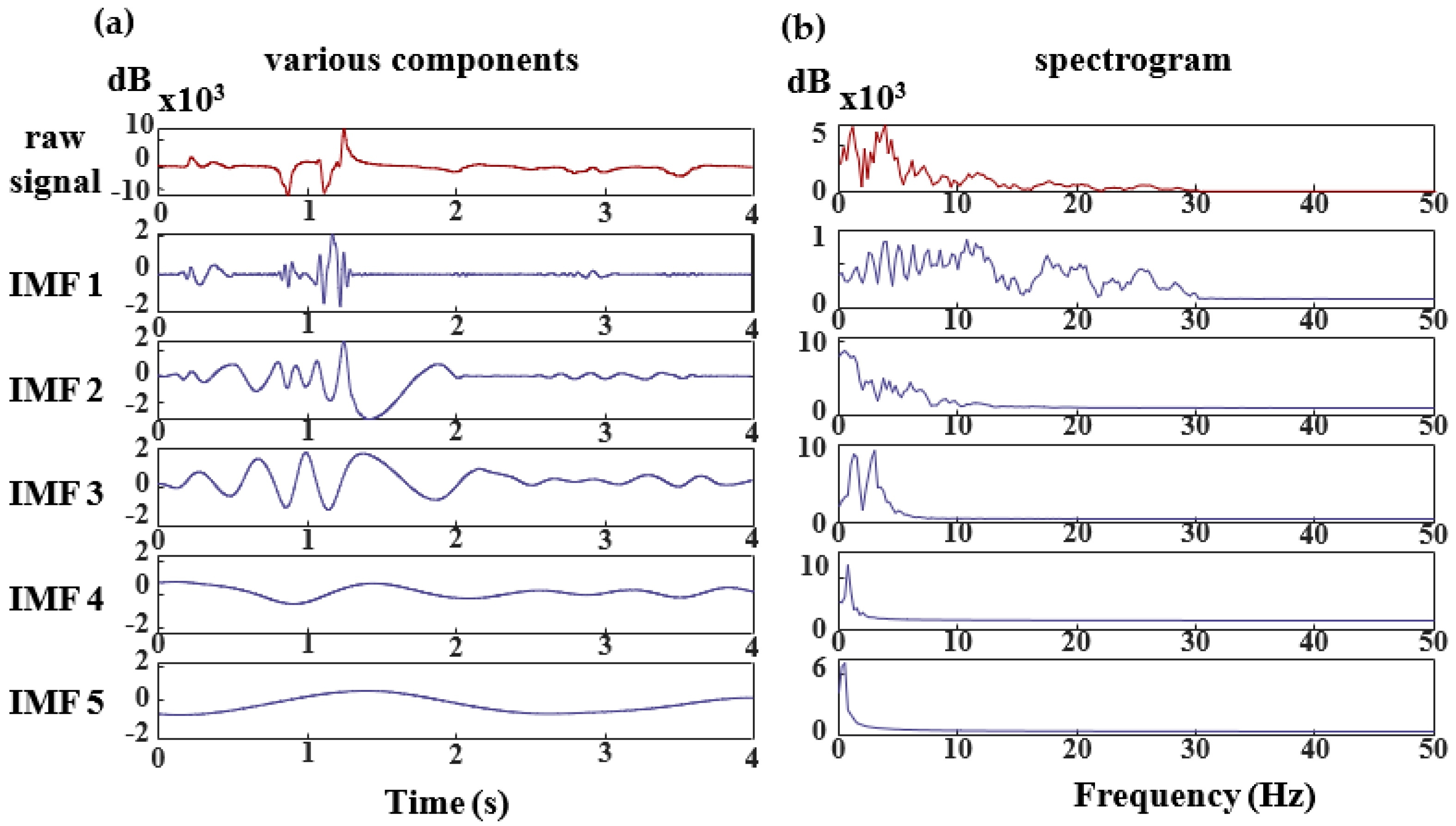

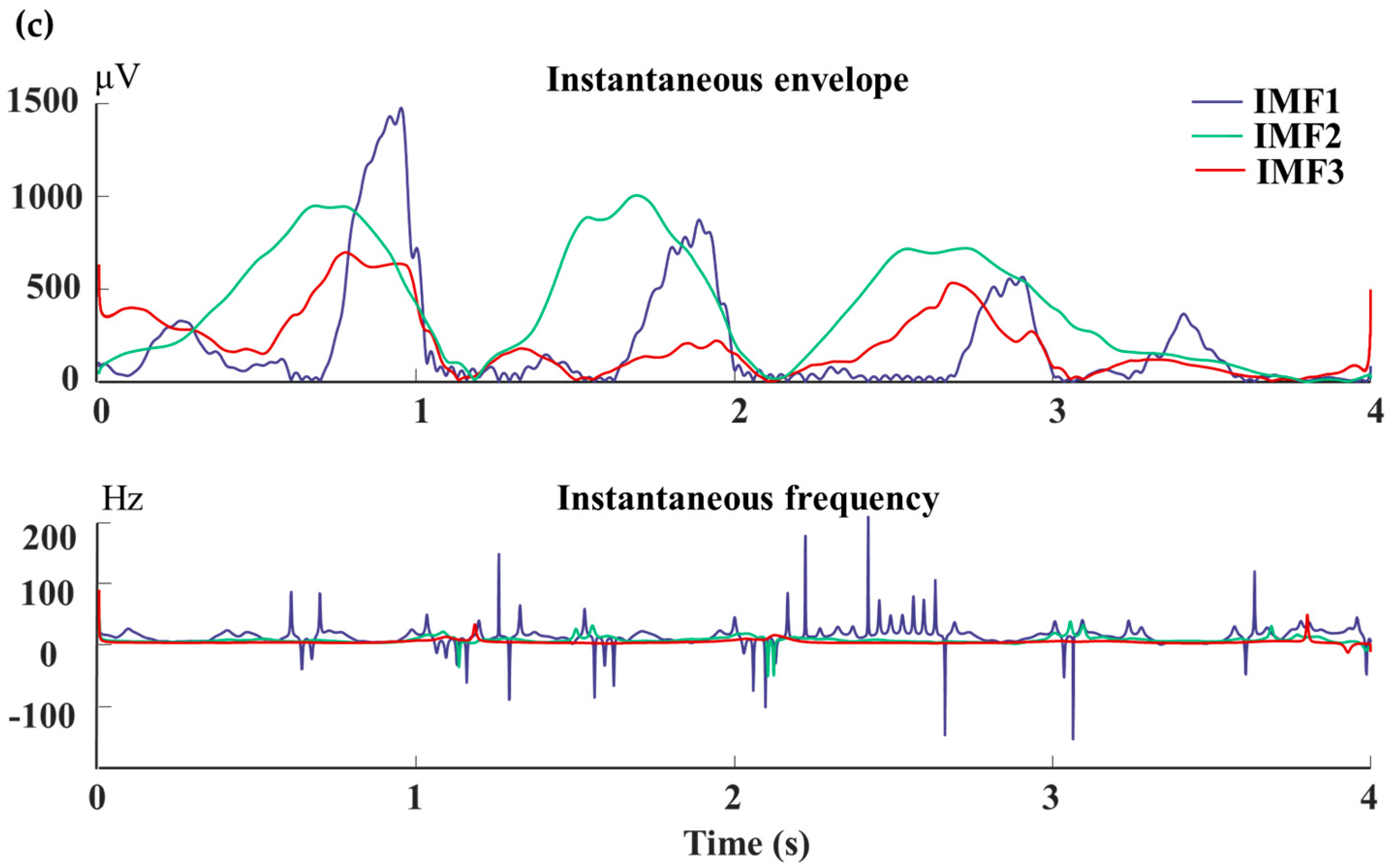

For the scrutinization of an individual’s EEG signal, the signal undergoes decomposition facilitated by CEEMDAN (Complete Ensemble Empirical Mode Decomposition with Adaptive Noise) [

39]. The resultant IMF components are illustrated in

Figure 6, where each component correlates with specific frequency bands. Specifically, the IMF4-IMF5 components predominantly manifest frequency elements centered approximately at 0 Hz. Conversely, the frequency spectrum of MI-EEG signals conventionally aligns with the

and

rhythms. Consequently, the terminal two components are disregarded.

Following the prior analysis, the first three components (IMF1–IMF3) were derived from the EMD decomposition and subjected to a Hilbert transformation to produce the frequency spectrum. The resulting three-dimensional frequency spectrum, however, was found unsuitable for feature extraction. Consequently, a marginal spectrum analysis was conducted, yielding a two-dimensional marginal spectrum better suited for feature extraction. With the implementation of this marginal spectrum analysis, the marginal spectrum for each time point was windowed, adopting a window length of 1 Hz and a shift of 1 Hz. A ceiling for the frequency range was established at 30 Hz. Through this methodology, 30 feature vectors were generated for each event, facilitating a reduction in data complexity and an enhancement in classification accuracy [

40].

Following the description outlined, our feature analysis will concentrate on channels C3, C4, and CZ. These channels are located in the central region of the brain, an area recognized as crucial for motor imagery activities [

41]. The subsequent figures show the analysis of the MI-AO state across channels C3, CZ, and C4 for letting go and handshakes:

An examination of the letting go state in

Figure 7a reveals notable differences in the energy spectrum of MI and AO under the C3 channel, particularly around 10 Hz and 25 Hz. In contrast, under the CZ channel, AO and MI display minimal variance within the same frequency range. Meanwhile, the C4 channel shows a clear energy disparity near 10 Hz. This pattern is mirrored in the handshake state, as depicted in

Figure 7b, where most energy differences are concentrated around 10 Hz and 25 Hz.

From the observations in

Figure 7, it becomes apparent that distinct variations manifest in the alpha and beta frequency bands during motor imagery. The alpha band, typically ranging from 8 to 13 Hz, is predominant when a person is awake but relaxed. Conversely, the beta band, spanning 14 to 30 Hz, is commonly associated with normal cognitive activities. It is important to note that the Gamma frequency band, generally above 30 Hz, does not significantly relate to motor imagery activities [

42]. In summary, a comprehensive analysis of

Figure 8 demonstrates that the electroencephalographic characteristics of MI and AO exhibit substantial separability post-feature extraction via EMD + HHT. This finding provides robust data to support the subsequent application of the SSA-DBN classification algorithm.

Based on the above data, feature extraction is performed, and the extracted features are then classified and recognized using the method proposed in this paper and other methods. The three optimization methods are as follows: The Genetic Algorithm-based Deep Belief Network (GA-DBN) employs GA to optimize the structure and hyperparameters of the DBN [

43]; the Particle Swarm Optimization-based Deep Belief Network (PSO-DBN) combines the PSO algorithm with the DBN to optimize the structure and parameters of the DBN [

44]; and the Particle Swarm Optimization-based Convolutional Neural Network (PSO-CNN) is a CNN that utilizes PSO. This method employs the PSO algorithm to optimize the structure, weights, and hyperparameters of the CNN [

45]. The ensuing classification outcomes undergo rigorous 5-fold cross-validation, with the computed mean value serving as the definitive measure of classification accuracy. Additionally, we have calculated the standard deviation and standard error of the data, which are supplemented in the table below. Additionally, we have computed the standard deviation and standard error for the data, which are detailed in the subsequent table. The inclusion of the standard deviation and standard error allows for a comprehensive comparison of the dispersion and variability among individual data samples relative to the overall dataset [

46]. The comparative discernment of this methodology by others concerning classification recognition based on the self-collected data are elucidated in

Table 3 herein.

After completing the analysis of the self-collected dataset, the same methodology was applied to two publicly available datasets for classification. The classification results are presented in

Table 4 and

Table 5.

The data presented in the three tables indicates that the SSA-DBN algorithm proposed in this study exhibits superior classification performance across all datasets.

Figure 7 compares and analyzes the average classification accuracies of various algorithms across these datasets, with the paired t-test significance indicated within the graph.

Analysis of

Figure 7 reveals that in binary datasets, specifically MI-AO and SMR-BCI, the SSA-DBN algorithm achieves a peak classification accuracy of 89.33%. Notably, within the BCI IV 2a dataset, which involves four categories, the highest average classification accuracy recorded is 86.14%. Across all three experimental datasets, SSA-DBN stands out as the superior classifier, exhibiting particularly remarkable performance in the self-collected dataset. This study also determined double-sided probability values (

p-values) for the SSA-DBN classification method in comparison to other methods within the same dataset. The significance evaluation indicates substantial differences between various classification techniques and the SSA-DBN approach detailed in this paper.

5. Discussion

The primary objective of this research is to address the challenge of determining the hyperparameters for classifiers in EEG classification tasks. The SSA is adept at finding optimal local solutions. The DBN demonstrates exceptional efficacy in feature extraction, making it well-suited for feature classification [

36]. Utilizing SSA to optimize the DBN network enables us to achieve optimal values, thus constructing an ideal classification model. The classification process yielded positive outcomes.

The burgeoning research in brain-machine interfaces offers new hope in rehabilitation therapies for individuals with disabilities. There is an increasing need for diverse, intelligent products in rehabilitation medicine for disabled people. The innovation and adoption of various smart products are progressively superseding traditional manual approaches [

47], enhancing convenience for subsequent treatments. Elderly individuals with physical challenges stand to benefit significantly from smart technologies that facilitate the control of household appliances. This technological advancement represents a substantial benefit for people with disabilities [

48]. It is crucial to analyze the brainwave data of stroke patients and correlate it with pertinent physiological responses, addressing a pressing contemporary need. This research extends hope to stroke patients [

2].

Effective methods of signal analysis significantly aid in deciphering brain characteristics. In the initial phase of preprocessing EEG signals, artifacts crucially influence the imaging quality. The EEG signals are predominantly affected by physiological artifacts, including eye and muscle movements. Present-day algorithms for processing these artifacts encounter various challenges, with potential solutions discussed in reference [

7]. Furthermore, the removal of baseline noise is a vital component of EEG signal preprocessing. The implementation of inverse filtering techniques for this purpose has yielded promising outcomes [

8]. These scientific developments in both domains have laid the groundwork for future progress in pattern recognition.

In this study, we utilize EMD + HHT for feature extraction from EEG signals. As evidenced in

Figure 7, it is prudent to retain IMF1~IMF3 for feature extraction. This approach not only reduces processing time, enhancing computational efficiency, but also lays the groundwork for subsequent classification tasks.

Upon applying this feature extraction method to three datasets, the classification results are depicted in

Figure 8. Five classifiers were employed to assess the experimental performance. Among these, the SSA-DBN classifier consistently showed superior performance across all datasets, particularly excelling in the self-collected dataset with a higher accuracy rate of 0.62% compared to the public SMR-BCI dataset. Additionally, in the BCI IV 2a dataset, our method surpassed PSO-CNN by 5.69% [

49]. Moreover, when compared to the DBN classifier without SSA optimization, the SSA-DBN network demonstrated enhanced classification performance in all three datasets.

While traditional DBN networks are known for their commendable classification capabilities, their limitations in hyperparameter determination restrict their overall efficacy [

36]. Therefore, selecting an optimization method that boosts classification proficiency is crucial. This study undertakes comparative analyses with three alternative algorithms, ultimately identifying the SSA-DBN optimization algorithm to elevate classification performance [

42,

43,

44]. Prior research predominantly focused on classification methods using various public datasets. This study expands upon existing literature by incorporating self-collected datasets. The contributions of this paper are twofold: enhancing classifier performance through SSA-DBN optimization and enriching existing research with additional datasets.

6. Limitations and Future

Limitations: While the feature extraction methodologies and classification recognition algorithms outlined in this study have yielded relatively positive outcomes, it is important to acknowledge certain limitations.

Firstly, the experimental data did not categorize subjects by gender, resulting in a skewed male-to-female ratio within the dataset. For future studies, ensuring gender balance among participants is essential to enhancing the broader applicability of the research findings.

Secondly, despite the proficiency of our feature extraction and classification methods in categorizing data, there is room for improvement in the data collection process. Efforts should be directed towards minimizing the impact of noise on experimental data. The challenge of eliminating wireless interference during experiments persists, which leads to the presence of artifacts in the EEG data.

Lastly, the time-intensive nature of EEG experiments can lead to decreased attention levels in participants during later stages. Additionally, a decline in the conductivity of electrode resistance over time can adversely affect the quality of research data.

Future Directions: The exploration of brain-computer interfaces holds considerable promise for clinical applications. Future research could involve collaborations with hospitals to collect and analyze EEG data from specific patient groups. Comparing this data with that of normal subjects will enable this study of differential patterns. Additionally, there is potential for integrating classification algorithms into online systems, which could significantly enhance the practical utility of brain-computer interfaces and foster the progression of clinical applications in this domain.

7. Conclusions

In this paper, we utilized the SSA-DBN classification model to conduct a comprehensive analysis of three datasets: the self-collected dataset, BCI IV 2a, and SMR-BCI. Initially, we optimized the paradigm of the self-collected data to alleviate the fatigue experienced by subjects during the observation of a single MI task. EEG signals were then collected from eight subjects and underwent preprocessing and EMD + HHT feature extraction. The extracted features were input into our SSA-DBN model, which had been optimized using the SSA. A feature extraction analysis was performed on the self-collected EEG signals, establishing a theoretical foundation for subsequent classification recognition. To evaluate the performance of our SSA-DBN algorithm, we conducted a comparative analysis with other classification algorithms, including DBN, PSO-CNN, PSO-DBN, and GA-DBN. Additionally, to ensure fairness, we validated our algorithms against other publicly available datasets, and various classification methodologies were applied to the self-collected dataset. The results obtained demonstrated that both the classification model and the self-collected dataset presented in this paper possess commendable generalization capabilities. These findings highlight the effectiveness and robustness of our proposed SSA-DBN algorithm for EEG classification tasks.