Abstract

Directly applying brain signals to operate a mobile manned platform, such as a vehicle, may help people with neuromuscular disorders regain their driving ability. In this paper, we developed a novel electroencephalogram (EEG) signal-based driver–vehicle interface (DVI) for the continuous and asynchronous control of brain-controlled vehicles. The proposed DVI consists of the user interface, the command decoding algorithm, and the control model. The user interface is designed to present the control commands and induce the corresponding brain patterns. The command decoding algorithm is developed to decode the control command. The control model is built to convert the decoded commands to control signals. Offline experimental results show that the developed DVI can generate a motion control command with an accuracy of 83.59% and a detection time of about 2 s, while it has a recognition accuracy of 90.06% in idle states. A real-time brain-controlled simulated vehicle based on the DVI was developed and tested on a U-turn road. Experimental results show the feasibility of the DVI for continuously and asynchronously controlling a vehicle. This work not only advances the research on brain-controlled vehicles but also provides valuable insights into driver–vehicle interfaces, multimodal interaction, and intelligent vehicles.

1. Introduction

Vehicles controlled directly by brain signals rather than using limbs are called brain-controlled vehicles [1]. Brain-controlled vehicles can provide an alternative or novel way for individuals with severe neuromuscular disorders to expand the scope of their life activities and thus enhance their quality of life. Brain signals-based driver–vehicle interfaces (DVIs), which are responsible for translating brain signals into driving commands, are the core part of these brain-controlled vehicles. Compared to DVIs based on eye tracking [2,3,4] and speech recognition [5,6,7], brain signals-based DVIs do not need speech input and have low or no requirements in terms of neuromuscular control capabilities. Therefore, they are especially desirable for severely disabled people (or wounded soldiers on battlefields) to operate a vehicle. Even for healthy drivers or special service drivers, such as military vehicle drivers, brain signals-based DVIs could represent a new form of driving assistance. For example, soldiers can use such DVIs to perform a secondary task (e.g., turning on/off a device), while they need to use limbs to control a vehicle and cannot free their limbs to perform a secondary task [8,9,10]. Thus, brain signals-based DVIs have important value for military and civil applications.

Recently, due to the low cost and convenient use of electroencephalogram (EEG) recording in practice [11,12,13], EEG signals have been explored for the development of DVIs. According to the type of output command, EEG-based DVIs can be classified into two groups: (1) DVIs for selecting a task and (2) DVIs for issuing a motion control command. For the first type, users first need to use brain signals to choose a task command from a predefined list and convey it to an autonomous control system, and then the autonomous control system has the responsibility of performing this selected task.

For the former, users first need to use brain signals to select a particular task from an available list of predefined tasks, which is transmitted to an autonomous control system, and then the autonomous control system has the responsibility of executing the selected task. Gohring et al. [14] applied a commercial event-related desynchronization/event-related synchronization (ERD/ERS)-based brain–computer interface (BCI) product from the Emotiv company to build a DVI that allows for path selection at the intersection of a road. Upon making a selection, the autonomous vehicle will perform the chosen direction and reach the desired destination. Bi et al. [15] also built a DVI using P300 potentials, which enable the user to select a destination from a list of nine predefined destinations. The brain-controlled vehicle can first detect the desired destination of the user through their brain signals, and then a vehicle autonomous navigation system is employed to transport the user to the desired destination. Fan et al. [16] further enhanced the destination detection performance of the DVI that was developed in [15] by combining steady-state visual evoked potentials (SSVEP) with P300 signals. However, a major weakness of this type of brain-controlled vehicle is that once the task is selected, the autonomous control system takes over the vehicle until the selected task is completed. Therefore, the user cannot further control the vehicle during vehicle motion.

For the second type of brain-controlled vehicle, users apply EEG signals to generate specific vehicle control commands (e.g., direction and speed) to control the vehicles to reach their destinations. Compared with the first type of vehicle, the second type allows drivers to control vehicle motion throughout the whole process of driving. Gohring et al. [14] developed a DVI based on a commercial ERD/ERS BCI product assisted by vehicle intelligence to perceive environments. While the vehicle will collide with objects or travel out of the safety zone, the intelligent system imminently executes braking action according to information obtained from its sensors. The experimental results from one subject showed that it is feasible to use EEG signals with the assistance of vehicle intelligence to discontinuously control an intelligent vehicle. However, they have not shown the feasibility of using EEG signals alone to continuously control a vehicle without the assistance of vehicle intelligence. To address this, Bi et al. [1] built and combined two BCIs (i.e., a novel SSVEP BCI and a BCI based on the alpha wave of EEG signals) to develop a DVI enabling the user to issue commands, including turning left, turning right, going forward, and starting and stopping. An online driving task, which included lane-keeping and avoiding obstacles, was tested on a U-shaped road. The experimental results showed that it is feasible to use EEG signals alone to continuously control a vehicle.

However, to the best of our knowledge, no studies have explored how to develop a P300-based DVI to generate a motion control command for continuously controlling a vehicle because the traditional P300 paradigm cannot issue a command rapidly and accurately [15,17,18,19] which is necessary for real-time continuously controlling of a dynamic system. However, P300-based DVIs have some advantages over other types of EEG-based DVIs. Compared to ERD/ERS-based DVIs, P300-based DVIs can require less training, and this is important for practical applications [20]. Compared to SSVEP-based DVIs, P300-based DVIs may generate fewer false alarms when users look at the interface but do not want to issue a command, and they annoy subjects less [21,22,23]. Also, it should be noted that SSVEP stimuli (particularly low-frequency SSVEP stimuli) tend to make users more annoyed, and high-frequency SSVEP responses are weaker and harder to accurately detect [24,25]. Moreover, P300-based DVIs can be applied to users to whom the SSVEP or ERD/ERS BCIs are not applicable due to BCI illiteracy [26].

Although some studies explored how to use P300 potentials to control a wheelchair or humanoid robot by issuing motion control commands [27,28], the former are task-level controlled systems, and the latter move slowly and discontinuously. Furthermore, controlling a vehicle using EEG signals is more challenging than controlling a mobile robot since brain-controlled vehicles are more complicated due to their dynamic characteristics and travel at higher speeds [1]. Mezzina and Venuto et al. [29,30] proposed a P300-based BCI to control an acrylic prototype car with ultrasonic sensors. The limitation of the two studies is that (1) the prototype car is really too small to simulate a realistic driving experience; (2) the travel speed is too low (i.e., 10 cm/s); (3) the researchers did not take the asynchronous control into consideration; and (4) the researchers did not set the controlling task and did not test the online driving performance.

We have developed a P300-based BCI that can rapidly and accurately decode human intention offline [31], which has not been applied to develop a brain-controlled vehicle. More importantly, the asynchronous control was not taken into consideration, which can reduce the workload by detecting the non-control willingness of the user in practice [32,33,34,35]. In this paper, we aim to develop a novel asynchronous P300-based DVI for continuously controlling a brain-controlled vehicle and validate the proposed DVI by using driver-in-the-loop online experiments. This work not only advances research on brain-controlled vehicles but also provides valuable insights into DVI, multimodal interactions, and intelligent vehicles.

The main contributions of this study are as follows:

- (1)

- This work is the first to use P300 potentials to develop a DVI to enable users to control a vehicle continuously and asynchronously.

- (2)

- The control command decoding algorithm, including the asynchronous function, was proposed for the development of the P300-based DVI.

- (3)

- We tested and validated the feasibility of the P300-based DVI for controlling a brain-controlled vehicle using a driver-in-the-loop online experiment.

2. Materials and Methods

2.1. System Architecture

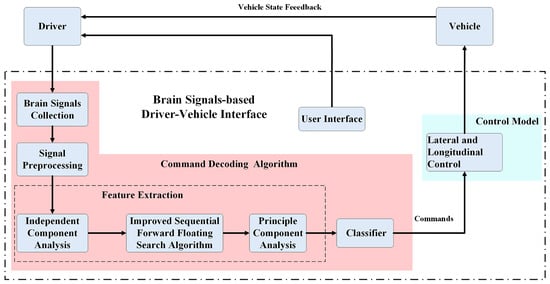

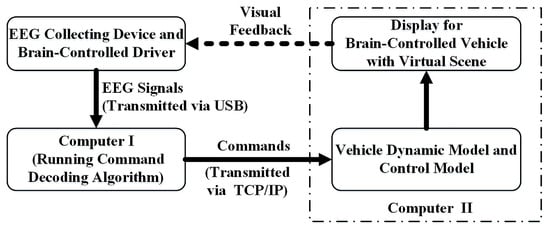

As shown in Figure 1, the proposed brain signals-based DVI includes three main components: (1) user interface, (2) command decoding algorithm, and (3) control model. The DVI can provide four types of control, including turning left, turning right, switching speed, and going forward. The working procedure is as follows. According to the vehicle state and surroundings information, brain-controlled drivers decide whether to send control commands or not to ensure the vehicle travels safely. If the direction or speed of the vehicle needs to be changed, the driver needs to pay attention to the corresponding command characters in the user interface. Otherwise, they do not need to pay attention to any of the characters. Meanwhile, the detection system constantly collects EEG signals. The collected EEG signals are first preprocessed and then transformed into independent components (ICs) using independent component analysis (ICA). After that, the improved sequential forward floating search (ISFFS) algorithm is used to select the optimal ICs. These selected ICs are employed as original features. Then, the features compressed from the original features using principal component analysis (PCA) are fed into a classifier. Finally, the output of the classifier is transformed into the inputs of the control model.

Figure 1.

Structure of brain signals-based driver-vehicle interface.

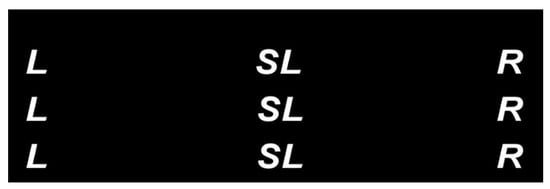

2.2. User Interface

As shown in Figure 2, we designed a novel user interface in our previous [31], where L, R, and S (including SL and SH for switching to low and high speeds, respectively) represent turning left and right and switching speed commands. In the interface, nine letters are distributed in a 3 × 3 matrix, and every three of the same letters denote one type of command. These nine letters flash sequentially in a random order every round, similar to the odd-ball P300 paradigm [36,37]. Each round refers to a flashing cycle of the nine characters, in which each letter flashes and lasts for 120 ms only once. The interval between two consecutive letter flashings is set to zero in the paradigm. Thus, the time period for one complete round is 1.08 s (120 ms × 9).

Figure 2.

User interface, where L, R, and S (SL or SH) represent turning left and right and switching speed, respectively.

Intuitively, the total flashing repetition number of every command (i.e., L, S, or R) is three in one round and six in two rounds because every three of the same letters denote one type of command. However, in some random flashing sequences, two or all of the three same characters corresponding to one command in a round may successively flash in a single round. On the basis of the findings in [38,39,40], in case the stimulus onset asynchrony (SOA) is less than 500 ms, an attention blink occurs. Therefore, for a command in these flashing sequences, only the first corresponding flashing character may catch the user’s attention, and the last one or two may not. Therefore, compared to traditional paradigms, the segmentation and summation rule of the continuous EEG data needs to be modified to avoid extracting useless information (for more detail, see Section 2.3.1).

2.3. Command Decoding Algorithm

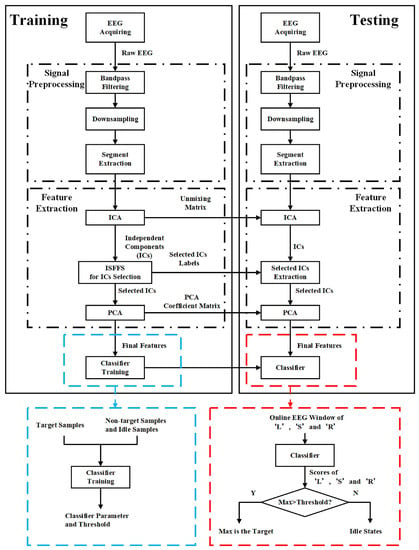

Figure 3 shows the signal flow chart of the command decoding algorithm. The flow chart includes both the training and testing phases. Each phase includes signal preprocessing, feature extraction, and classification. In the training phase, after the EEG signals were collected, the unmixing matrix of ICA, labels of optimal ICs selected by using ISFFS, transformation matrix of PCA, parameters of the classifier, and threshold were determined for online command decoding. In the testing phase, all of the trained parameters are utilized to decode the desired command from EEG signals for real-time control of a vehicle.

Figure 3.

Signal flowchart of the command decoding algorithm.

2.3.1. Training Phase

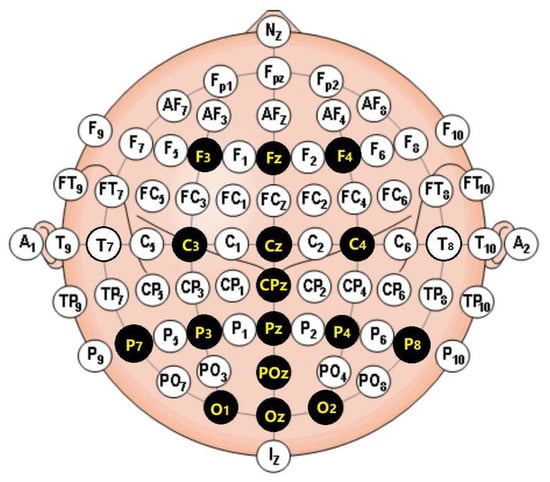

Data collection: Sixteen standard locations are set according to the international standard 10–20 montage. EEG signals are collected from each of these locations through a 16-channel amplifier made by SYMTOP, as shown in Figure 4. The mean potential of the left and right earlobes is set as the reference potential to other channels. EEG signals are amplified and digitalized with a sampling rate of 1000 Hz, and line noise is removed using a power-line notch filter.

Figure 4.

Channels used to collect EEG signals were marked in black disks with yellow text.

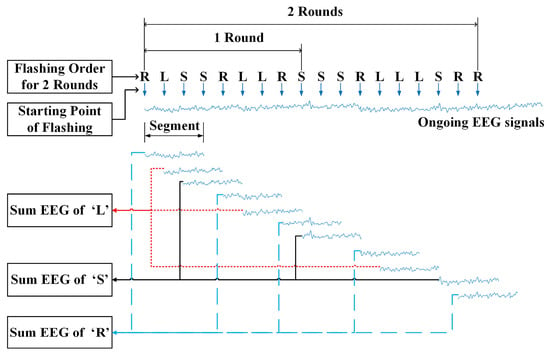

Preprocessing: Once the interface completes all rounds of flashing, the collected EEG data are filtered from 0.53 Hz to 15 Hz using a bandpass filter and down-sampled by a factor of two. EEG segments are then extracted for each command according to the following two rules. Rule 1: Command labels of all characters in all rounds are recorded, and all the corresponding EEG signals of all letters are segmented from the onset of each letter flashing to post-stimulus T. Rule 2: If there exist two, three or more successive same flashing letters in each command label, only the first letter is considered as an effective flashing letter and the corresponding EEG segment is extracted for further processing; otherwise all the flashing letters are considered as effective and all of the EEG segments are used for decoding commands. Figure 5 shows the illustration of the segment extraction procedure. Finally, the extracted EEG segments associated with each command are summed.

Figure 5.

Illustration of the EEG segment extraction procedure.

ICA: Original features are extracted from the IC domain. The extracted 16-channel EEG segment associated with each command is taken as the input of ICA, and ICs are then obtained by

where represents the extracted EEG segment with a length of 512 ms, as shown in Figure 5 from the ith channel, and L represents the number of all channels and is set as 16 in this work. represents the independent component vector, t denotes the sampling time instance, and represents the unmixing matrix, which can be obtained by using the Infomax method [41].

ISFFS: ISFFS is an improved SFFS and is used to select the optimal ICs from all ICs proposed in our previous study [31]. The feature pool F can be written as

where represents the feature vector associated with kth IC; represents the ith time domain feature of ; L represents all the number of ICs and is equal to the number of all channels; N represents the dimensionality of every IC and is set to 256 due to the down sample factor of 2.

The SFFS is a bottom-up search procedure. During the optimal feature subset search procedure, the most significant feature is included in the current optimal subset. Meanwhile, the least significant feature of the current optimal subset will be excluded. Considering the long period of high computational complexity, especially for time domain features with a high resampling rate of EEG signals in this study, we apply principal component analysis to decrease the dimensionality of the time domain features and thus significantly reduce the computational time and make it feasible in practice. This method is subsequently referred to as ISFFS. More details about the ISFFS algorithm can be seen in our previous study [29].

Classifier: After the optimal ICs are obtained, the PCA is used to decrease the dimensionality of the selected features, which are obtained by concatenating the optimal ICs. Assume that the number of optimal ICs selected by ISFFS for each subject is K, and thus, the number of the original features is K × N. The components with the highest P eigenvalues are chosen as feature weights, and new features can be presented as , which can cover more than 95% information of all the original features. P is set to 50 for all users in this work to meet the above requirement.

We apply both Fisher linear discriminant analysis (FLDA) and the support vector machine (SVM) to build the classifiers. The classifier built by the FLDA can be denoted as

where w represents the projection direction. The value of threshold is determined by the receiver of curve (ROC) method. If the score y is larger than , the sample is classified into the target class; otherwise, the sample will be classified into the non-target class or idle class.

The classifier built using the SVM with the radial basis function (RBF) as the kernel function can be represented as

where is the ith support vector (SV) of the classifier, is the weight of the ith SV of the classifier, n is the number of the SV of the classifier, g is the width of the RBF of the classifier, and b is the bias of the classifier. We used the LIBSVM software (Version 2.0) library proposed by Chang and Lin [42] to train the parameters of the SVM classifier.

2.3.2. Testing Phase

As shown in Figure 3, the testing experiment is performed using the following three steps. First, EEG signals associated with three types of command characters are extracted, and the corresponding features are computed in each cycle. Next, these features are fed into the classifier, and scores associated with three types of commands are all obtained. Third, if the maximum of the three scores is larger than , then the corresponding type of command character is recognized as the target. If the maximum is smaller than , then an idle state (i.e., a going forward command) is issued.

2.4. Control Model

Two control models, lateral and longitudinal, were designed in the proposed DVI. The lateral control model is defined as follows:

where represents the steering angle at the nth update and represent the output command of the decoding algorithm, with for turning right, for going forward, and for turning left. In addition, is a positive constant angle value set as . The value of can be calibrated for different subjects (e.g., 15°, 20°, or 30°) before the formal online experiment.

The longitudinal control model is defined as follows:

where represents the speed. is set to be about 7 km/h and is set to be about 8 km/h. represents the output command with for the switching speed.

3. Experiment

3.1. Subjects

Six healthy subjects (aged between 21 and 25) participated in the experiment. All of the subjects had no history of brain disease and had normal or corrected-to-normal vision. The study adhered to the principles of the 2013 Declaration of Helsinki and was conducted in accordance with the Declaration of Helsinki. All subjects signed an informed consent form after the experiment’s purpose, required tasks, and possible consequences of participation were explained.

3.2. Experimental Platform

As shown in Figure 6, the experimental platform includes a simulated vehicle with a virtual driving scene and the proposed DVI. The simulated vehicle was supported by 14-DOF vehicle dynamics from CarSim software (version 8.02), and the virtual scene was developed with Matlab/Simulink. The command decoding algorithm is composed of an EEG amplifier for collecting EEG signals and signal processing and a classification algorithm coded in C. The communication system between the computer running the command decoding algorithm based on EEG signals and the computer running the control model and virtual vehicle was built with the Transmission Control Protocol/Internet Protocol (TCP/IP).

Figure 6.

Experimental platform.

3.3. Experimental Procedure

Prior to conducting the experiments, some preparations were made. We adjusted the distance between the subjects and the screen displaying the user interface to make subjects feel comfortable. All of the subjects were informed of the entire experimental procedure to make them fully aware of the experiment protocol. Electrodes were appropriately attached at corresponding locations, and all the contact impedances between the scalp and electrodes were adjusted to be below 10 kΩ. In the online experiment, the user interface was presented as a head-up display system. This allowed the users to pay attention to the interface to issue control commands as well as observe the road conditions in case of traveling out of the road. All apparatuses were examined before EEG collection to ensure normal operation during the experiment.

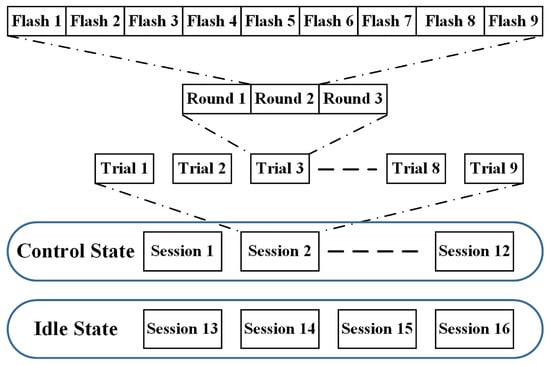

The experiment consisted of two parts: (1) training and testing the command decoding algorithm and (2) testing the developed brain-controlled vehicle based on the proposed brain signals-based DVI. In the training part, the training data were collected and used to determine the parameters and test the performance of the command decoding algorithm. EEG data from all three rounds in one trial were collected for evaluation of the performance of the command decoding algorithm offline, given different round numbers.

As shown in Figure 7, the data collection consisted of two phases: the first phase for control states (target and nontarget samples) and the second phase for idle states. After twelve sessions were completed for control command data collection, four sessions were conducted for idle state data collection. Each session included nine trials, and every trial included three rounds. In the first phase, the subjects concentrated their visual attention on the user interface and counted the flashing number of the predefined target letter immediately every time it flashed during the trials until the session was concluded. In the second phase, subjects were asked not to attend to any characters in every session while the user interface worked all the time. A few minutes were permitted for the subjects to rest between two consecutive sessions for each phase.

Figure 7.

Data collection for control and idle states.

In total, we obtained 108 target samples, 216 non-target samples, and 108 idle samples. These samples were classified into two classes. Class 1 consisted of all 108 target samples. Class 2 consisted of all 216 non-target samples when we evaluated the algorithm without considering the idle states. When we took idle states into consideration, Class 1 consisted of all 108 target samples, and Class 2 consisted of all 216 non-target and 108 idle samples. The whole offline training phase was completed within 40 min.

During the part of testing the brain signals-based DVI, subjects were required to perform the driving task: controlling the simulated vehicle to travel along the centerline of the road from the starting point to the destination using the proposed DVI. Subjects should try their best to drive the vehicle to stay inside the road boundaries while driving. If the vehicle travels out of the road boundaries, subjects should try their best to control the vehicle back to the road. Before the formal testing, subjects practiced some runs to learn how to control the brain-controlled vehicle by using the brain signals-based control system. Every subject performed five runs and was given a few minutes to rest between two consecutive runs.

4. Results and Discussion

4.1. EEG Response under the Paradigm

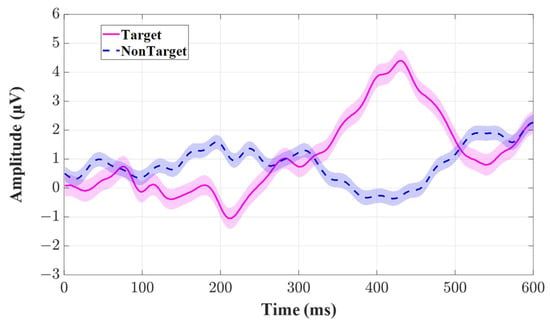

Figure 8 shows the ground-average EEG responses across all the participants to the target and non-target stimuli at channel Cz. The horizontal axis represents the time from the onset of the flashing to the post-stimuli 600 ms, and the vertical axis represents the amplitude of the EEG signals. The red solid line denotes the EEG wave morphology elicited by the target stimuli, and the blue dashed line denotes the EEG wave morphology elicited by the non-target stimuli. The shadow areas around the lines represent the standard errors corresponding to the sampling time point. We can see that the amplitude of EEG wave morphology ranged from −2 μV to 5 μV. The typical P300 component is elicited with an evident positive going wave at about 420 ms, and the N200 component is also elicited near 200 ms. Both components are present in the EEG response to the target stimuli, yet they are absent in the EEG response to nontarget stimuli.

Figure 8.

The ground-average EEG responses across all the participants to the target (red solid line) and non-target stimuli (blue dash line) at channel Cz. The shadow areas represent the standard errors at the corresponding sampling time point from the onset of the flashing to the post-stimuli 600 ms.

4.2. Performance of the Proposed DVI

We ran 100 × 6-fold cross-validations to evaluate the performance of the proposed BCI based on the samples as mentioned in section III (C) and took the idle states into consideration. Thus, the used samples for training and testing included 108 target samples, 216 non-target samples, and 108 idle samples.

Table 1 and Table 2 show the accuracy of the proposed DVI with FLDA and SVM classifiers as a function of the detection time. We can see that for the two classifiers, the accuracies in control and idle states both increase over the detection time. The FLDA significantly outperforms the SVM in both control states and idle states on average, given the different detection times (p = 0.005, p = 0.024, p = 0.043, p = 0.039, p = 0.025, p = 0.003).

Table 1.

The accuracy in the control state and idle state for the FLDA as a function of detection time across all subjects (%).

Table 2.

The accuracy in the control state and idle state for the SVM as a function of detection time across all subjects (%).

For the DVI with the FLDA classifier, the average accuracies with standard errors in control states are 77.3 ± 2.0%, 83.6 ± 2.6%, and 87.5 ± 2.6%, respectively, whereas the average accuracies with standard errors in idle states are 86.8 ± 1.4%, 90.1 ± 2.7%, and 92.7 ± 2.4%, respectively, given detection times of 1.08 s, 2.16 s, and 3.24 s. Furthermore, half of all subjects performed well with an accuracy near 90% in control states and higher than 93% in idle states, given a detection time of 2.16 s. In this paper, we took a detection time of 2.16 s for online control of a vehicle.

To make sure that the proposed method could be implemented in real-time without incurring a substantial delay, we calculated the computational time of the entire command decoding algorithm with an Intel® Core TM i7-3770 CPU (3.40 GHz). The average computational time was about 340 ms.

4.3. Performance of Brain-Controlled Vehicles Based on the Proposed Novel Asynchronous Brain Signals-Based DVI

The real-time brain-controlled simulated vehicle based on the proposed DVI was developed and tested on a U-turn road for six subjects. The task completion time and mean lateral error were selected as evaluation indexes to rate the performance of the vehicles. The task completion time was defined as the time taken for the vehicle to travel from the starting point to the destination. When the task completion time was longer than twice that of the nominal time, the task was stopped and defined as a failed task. The nominal time was calculated as the length of the centerline of the road from the starting point to the destination over the maximum speed. The mean lateral error was defined as the mean of the lateral error between the trajectory of the simulated vehicle and the centerline of the road from the starting point to the destination. It should be noted that the two measures are applicable only when an experimental run is successfully completed.

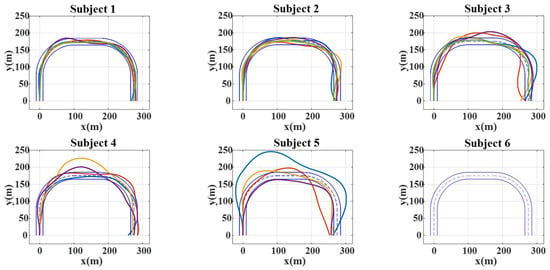

Figure 9 shows the trajectories of the brain-controlled vehicles based on the proposed DVI in the five consecutive runs for each subject. The two solid blue lines represent the road boundaries. Other color lines represent the vehicle trajectories of the successful tasks. Subjects 1, 2, and 3 achieved good performance in brain-controlled tasks, and they completed all five runs. Subjects 4 and 5 did not perform as well as Subjects 1, 2, and 3 and completed only four out of five runs. Subject 6 exhibited the worst performance and did not complete any of the runs. The task completion times of the subjects were 250.01 ± 2.79 s, 253.21 ± 1.68 s, 263.20 ± 3.17 s, 261.97 ± 5.64 s, and 265.33 ± 19.98 s and the mean lateral errors were 2.43 ± 0.48 m, 4.21 ± 0.92 m, 8.85 ± 2.05 s, 9.09 ± 1.91 s, and 18.2 ± 5.37 s, respectively. Subject 1 performed the task best of all subjects with the lowest mean lateral error and the lowest mean task completion time of about 250 s, which is close to the ideal task completion time of 245 s. Other subjects, especially Subjects 5 and 6, performed poorly.

Figure 9.

The trajectories of the developed brain-controlled vehicle based on the proposed DVI in successful runs of the consecutive 5 runs for every subject. Each thick line represents each successful run.

The major reason for the performance difference in controlling a vehicle among the subjects is that the control command recognition accuracies in control and idle states are different between the subjects. As shown in Table 1, the accuracies in control states for Subjects 1, 2, and 3 were both near 90%, while the accuracies in control states for Subjects 5 and 6 were under 80%. The accuracies in idle states for Subjects 1, 2, and 3 were both higher than 93%, while the accuracies in idle states for Subjects 5 and 6 were under 90%. A possible reason for this disparity may have involved difficulties associated with eliciting specific brain activity patterns. It has been demonstrated that BCIs do not work for some users, which is referred to as “BCI illiteracy” [43,44,45].

Another possible reason for the task performance difference is that subjects need to learn how to use the proposed DVI rather than their limbs to drive the brain-controlled vehicle, which is a completely new operation mode for them. Some subjects may not learn well or need more practice. For example, although Subject 3 had the same accuracy in control states as Subject 2 and even higher performance in idle states than Subject 2 at the same detection time, the latter performed the tasks in both less time and with a lower mean lateral error in brain-controlled tracking than the former in all five runs.

Compared to the brain-controlled vehicle reported in [1], which enabled users to issue lateral control and starting/stopping commands to control a vehicle continuously, the proposed brain-controlled vehicle can provide lateral control and switching speed commands and shows comparable performance. It should be noted that experimental results in this paper and [1] are from different subjects (four subjects used in [1] and six subjects used in this paper are not any of the same subjects). Furthermore, the proposed brain-controlled vehicle does not require subjects to issue commands by closing their eyes and thus has lower requirements for the neuromuscular control capabilities of subjects. Finally, the proposed brain-controlled vehicle may provide an alternative way for some subjects who have a relatively low accuracy in using the brain-controlled vehicle developed in [1]. For other brain-controlled systems, such as telepresence controlling an NAO humanoid robot in [28], the robot moved slowly and discontinuously and was not controlled in the first perspective. The comparison between this work and the related articles is also shown below. Table 3 shows the thorough comparison of the proposed system between this work and the related articles, including the controlled objective, whether it is a dynamic system or not, whether it is continuously controlled or not, with or without asynchronous function, active or passive BCI, and some notes, if any. Table 4 shows the thorough comparison of the performance between this work and the related articles, including the type of EEG signals, offline classification accuracy, detection time (s), online performance, and some notes, if any. Venuto et al. [29] and Mezzina et al. [30] proposed a continuously controlled dynamic system without the asynchronous function using P300 signals. The detection time is relatively low, and the online accuracy is comparable. However, the speed is relatively low, and the online driving performance is not available.

Table 3.

A thorough comparison of the proposed system between this work and the related articles.

Table 4.

A thorough comparison of the performance between this work and the related articles.

There are some limitations in this study. First, it is necessary to expand the subject pool with more diverse subjects for data collection. Experiments with a larger and more diverse group of participants could provide a more comprehensive understanding of the system’s effectiveness and potential limitations. Second, although a higher information transfer rate (ITR) does not mean better controlling performance, there is a need to conduct a comparative analysis of ITR in real-time tasks when conducting the online experiment against other relevant studies to achieve a more comprehensive evaluation of the performance of these brain-controlled systems, like that in [30].

5. Conclusions

This paper developed a novel asynchronous brain signals-based DVI that can be used to control the speed and direction of a vehicle. The proposed DVI consists of a user interface, command decoding algorithm, and control model. The real-time brain-controlled simulated vehicle based on the proposed DVI was developed and tested on a U-turn road. The experimental results demonstrate the feasibility of the proposed DVI in controlling a vehicle, at least for some users who have high recognition accuracy in control command and idle states. The study is important for the future development of brain-controlled dynamic systems (especially based on event-related potentials), driver–vehicle interfaces, intelligent vehicles, and multimodal interactions with several implications. First, it highlights the difference between evaluating BCIs and brain-controlled dynamic systems. Controlling a dynamic system is more difficult and challenging. Second, compared to brain-controlled wheelchairs and robots (e.g., NAO robots), brain-controlled vehicles are more complicated, and they travel at higher speeds. Thus, the proposed method should be useful for improving the control of brain-controlled wheelchairs and robots. Third, the proposed DVI can be applied to perform a secondary driving task (e.g., turning on/off a GPS device) while healthy drivers use limbs to control a vehicle, which provides new insights into multimodal interaction and intelligent vehicles.

Our future work aims to improve the recognition accuracy of the DVI by using deep learning methods [49,50], validate the proposed DVI with more diverse subjects in various driving scenes, design an assistive controller to improve the overall driving performance and evaluate the impact of fatigue on DVI system performance, ideally by comparing it to real driving tasks during fatigue states. We will consider user satisfaction and subjective workload assessment to offer a more holistic evaluation. We also plan to apply the proposed method to control other dynamic systems and extend the proposed DVI to perform a secondary driving task while healthy drivers use limbs to control a vehicle.

Author Contributions

Conceptualization, L.B.; methodology, J.L. and Y.G.; software, J.L. and Y.G.; validation, L.B.; formal analysis, J.L.; investigation, C.W.; resources, C.W.; data curation, J.L.; writing—original draft preparation, J.L. and Y.G.; writing—review and editing, L.B., C.W. and X.Q.; visualization, J.L. and Y.G.; supervision, L.B., C.W. and X.Q.; project administration, L.B., C.W. and X.Q.; funding acquisition, L.B. and X.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China, grant number 51975052, the Military Medical Science and Technology Youth Cultivation Program Incubation Project, grant number 20QNPY1344, and the Youth Talent Fund of Beijing Institute of Basic Medical Sciences, grant number AMMS-ONZD-2021-003.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki for procedures involving human participants. Ethical review and approval are waived for this study for the reason that in EEG signals’ acquisition, no invasive procedures were involved, nor were special biodata captured that could be used to identify the participants, and the Ethics Committee of Beijing Institute of Technology has not been established when conducting the experiment.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data included in this study are available upon reasonable request by contacting the corresponding author.

Acknowledgments

The authors would like to thank the study participants for their participation and for their feedback.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bi, L.; Fan, X.A.; Jie, K.; Teng, T.; Ding, H.; Liu, Y. Using a head-up display-based steady-state visually evoked potential brain–computer interface to control a simulated vehicle. IEEE Trans. Intell. Transp. Syst. 2014, 15, 959–966. [Google Scholar] [CrossRef]

- Vicente, F.; Huang, Z.; Xiong, X.; De la Torre, F.; Zhang, W.; Levi, D. Driver gaze tracking and eyes off the road detection system. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2014–2027. [Google Scholar] [CrossRef]

- Mcmullen, D.P.; Hotson, G.; Katyal, K.D.; Wester, B.A.; Fifer, M.S.; Mcgee, T.G.; Harris, A.; Johannes, M.S.; Vogelstein, R.J.; Ravitz, A.D. Demonstration of a Semi-Autonomous Hybrid Brain-Machine Interface using Human Intracranial EEG, Eye Tracking, and Computer Vision to Control a Robotic Upper Limb Prosthetic. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 784–796. [Google Scholar] [CrossRef] [PubMed]

- Meena, Y.K.; Cecotti, H.; Wonglin, K.; Dutta, A.; Prasad, G. Toward Optimization of Gaze-Controlled Human-Computer Interaction: Application to Hindi Virtual Keyboard for Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 911. [Google Scholar] [CrossRef]

- Lee, J.D.; Caven, B.; Haake, S.; Brown, T.L. Speech-based interaction with in-vehicle computers: The effect of speech-based e-mail on drivers’ attention to the roadway. Hum. Factors 2001, 43, 631–640. [Google Scholar] [CrossRef]

- Li, W.; Zhou, Y.; Poh, N.; Zhou, F.; Liao, Q. Feature denoising using joint sparse representation for in-car speech recognition. IEEE Signal Process. Lett. 2013, 20, 681–684. [Google Scholar] [CrossRef]

- Huo, X.; Park, H.; Kim, J.; Ghovanloo, M. A Dual-Mode Human Computer Interface Combining Speech and Tongue Motion for People with Severe Disabilities. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 979–991. [Google Scholar] [CrossRef] [PubMed]

- He, T.; Bi, L.; Lian, J.; Sun, H. A brain signals-based interface between drivers and in-vehicle devices. In Proceedings of the Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 1333–1337. [Google Scholar]

- Thurlings, M.E.; Van Erp, J.B.; Brouwer, A.-M.; Werkhoven, P. Controlling a Tactile ERP–BCI in a Dual Task. IEEE Trans. Comput. Intell. AI Games 2013, 5, 129–140. [Google Scholar] [CrossRef]

- Allison, B.Z.; Dunne, S.; Leeb, R.; Milln, J.D.R.; Nijholt, A. Towards Practical Brain-Computer Interfaces: Bridging the Gap from Research to Real-World Applications; Springer Publishing Company Inc.: Berlin/Heidelberg, Germany, 2012; pp. 1–30. [Google Scholar]

- Wang, R.; Liu, Y.; Shi, J.; Peng, B.; Fei, W.; Bi, L. Sound Target Detection Under Noisy Environment Using Brain-Computer Interface. IEEE Trans. Neural Syst. Rehab. Eng. 2022, 31, 229–237. [Google Scholar] [CrossRef]

- Mammone, N.; De Salvo, S.; Bonanno, L.; Ieracitano, C.; Marino, S.; Marra, A.; Bramanti, A.; Morabito, F.C. Brain Network Analysis of Compressive Sensed High-Density EEG Signals in AD and MCI Subjects. IEEE Trans. Ind. Inform. 2019, 15, 527–536. [Google Scholar] [CrossRef]

- Yang, C.; Wu, H.; Li, Z.; He, W.; Wang, N.; Su, C.-Y. Mind control of a robotic arm with visual fusion technology. IEEE Trans. Ind. Inform. 2017, 14, 3822–3830. [Google Scholar] [CrossRef]

- Göhring, D.; Latotzky, D.; Wang, M.; Rojas, R. Semi-autonomous car control using brain computer interfaces. Intell. Auton. Syst. 2013, 12, 393–408. [Google Scholar]

- Bi, L.; Fan, X.-A.; Luo, N.; Jie, K.; Li, Y.; Liu, Y. A head-up display-based P300 brain–computer interface for destination selection. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1996–2001. [Google Scholar] [CrossRef]

- Fan, X.-A.; Bi, L.; Teng, T.; Ding, H.; Liu, Y. A brain–computer interface-based vehicle destination selection system using P300 and SSVEP signals. IEEE Trans. Intell. Transp. Syst. 2014, 16, 274–283. [Google Scholar] [CrossRef]

- Akram, F.; Han, S.M.; Kim, T.-S. An efficient word typing P300-BCI system using a modified T9 interface and random forest classifier. Comput. Biol. Med. 2015, 56, 30–36. [Google Scholar] [CrossRef] [PubMed]

- Lin, Z.; Zhang, C.; Zeng, Y.; Tong, L.; Yan, B. A novel P300 BCI speller based on the Triple RSVP paradigm. Sci. Rep. 2018, 8, 3350. [Google Scholar] [CrossRef] [PubMed]

- Li, S.; Jin, J.; Daly, I.; Liu, C.; Cichocki, A. Feature selection method based on Menger curvature and LDA theory for a P300 brain–computer interface. J. Neural Eng. 2022, 18, 066050. [Google Scholar] [CrossRef]

- Bi, L.; Fan, X.-A.; Liu, Y. EEG-based brain-controlled mobile robots: A survey. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 161–176. [Google Scholar] [CrossRef]

- Brunner, P.; Joshi, S.; Briskin, S.; Wolpaw, J.R.; Bischof, H.; Schalk, G. Does the ‘P300’speller depend on eye gaze? J. Neural Eng. 2010, 7, 056013. [Google Scholar] [CrossRef]

- Bi, L.; Lian, J.; Jie, K.; Lai, R.; Liu, Y. A speed and direction-based cursor control system with P300 and SSVEP. Biomed. Signal Process. Control 2014, 14, 126–133. [Google Scholar] [CrossRef]

- Martinez-Cagigal, V.; Gomez-Pilar, J.; Alvarez, D.; Hornero, R. An Asynchronous P300-Based Brain-Computer Interface Web Browser for Severely Disabled People. IEEE Trans. Neural Syst. Rehabil. Eng. A Publ. IEEE Eng. Med. Biol. Soc. 2016, 25, 1332–1342. [Google Scholar] [CrossRef] [PubMed]

- Zhu, D.; Bieger, J.; Molina, G.G.; Aarts, R.M. A survey of stimulation methods used in SSVEP-based BCIs. Comput. Intell. Neurosci. 2010, 2010, 1. [Google Scholar] [CrossRef] [PubMed]

- Volosyak, I.; Valbuena, D.; Luth, T.; Malechka, T.; Graser, A. BCI Demographics II: How Many (and What Kinds of) People Can Use a High-Frequency SSVEP BCI? IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 232–239. [Google Scholar] [CrossRef] [PubMed]

- Allison, B.Z.; Neuper, C. Could anyone use a BCI? In Brain-Computer Interfaces. Human-Computer Interaction Series; Springer: London, UK, 2010; pp. 35–54. [Google Scholar]

- Zhang, R.; Li, Y.; Yan, Y.; Zhang, H.; Wu, S.; Yu, T.; Gu, Z. Control of a wheelchair in an indoor environment based on a brain–computer interface and automated navigation. IEEE Trans. Neural Syst. Rehab. Eng. 2016, 24, 128–139. [Google Scholar] [CrossRef]

- Li, M.; Li, W.; Niu, L.; Zhou, H.; Chen, G.; Duan, F. An event-related potential-based adaptive model for telepresence control of humanoid robot motion in an environment cluttered with obstacles. IEEE Trans. Ind. Electron. 2017, 64, 1696–1705. [Google Scholar] [CrossRef]

- De Venuto, D.; Annese, V.F.; Mezzina, G. An embedded system remotely driving mechanical devices by P300 brain activity. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition (DATE), Lausanne, Switzerland, 27–31 March 2017; pp. 1014–1019. [Google Scholar]

- Mezzina, G.; De Venuto, D. Four-Wheel Vehicle Driving by using a Spatio-Temporal Characterization of the P300 Brain Potential. In Proceedings of the 2020 AEIT International Conference of Electrical and Electronic Technologies for Automotive (AEIT AUTOMOTIVE), Turin, Italy, 18–20 November 2020; pp. 1–6. [Google Scholar]

- Lian, J.; Bi, L.; Fei, W. A novel event-related potential-based brain–computer interface for continuously controlling dynamic systems. IEEE Access 2019, 7, 38721–38729. [Google Scholar] [CrossRef]

- Zhang, H.; Guan, C.; Wang, C. Asynchronous P300-Based Brain--Computer Interfaces: A Computational Approach with Statistical Models. IEEE Trans. Biomed. Eng. 2008, 55, 1754–1763. [Google Scholar] [CrossRef]

- Pinegger, A.; Faller, J.; Halder, S.; Wriessnegger, S.C.; Muller-Putz, G.R. Control or non-control state: That is the question! An asynchronous visual P300-based BCI approach. J. Neural Eng. 2015, 12, 014001. [Google Scholar] [CrossRef]

- Panicker, R.C.; Sadasivan, P.; Ying, S. An asynchronous P300 BCI with SSVEP-based control state detection. IEEE Trans. Biomed. Eng. 2011, 58, 1781–1788. [Google Scholar] [CrossRef]

- Zhang, L.; Wu, X.; Guo, X.; Liu, J.; Zhou, B. Design and implementation of an asynchronous BCI system with alpha rhythm and SSVEP. IEEE Access 2019, 7, 146123–146143. [Google Scholar] [CrossRef]

- Guan, C.; Thulasidas, M.; Wu, J. High performance P300 speller for brain-computer interface. In Proceedings of the IEEE International Workshop on Biomedical Circuits & Systems, Singapore, 1–3 December 2004. [Google Scholar]

- Ditthapron, A.; Banluesombatkul, N.; Ketrat, S.; Chuangsuwanich, E.; Wilaiprasitporn, T. Universal joint feature extraction for P300 EEG classification using multi-task autoencoder. IEEE Access 2019, 7, 68415–68428. [Google Scholar] [CrossRef]

- Raymond, J.E.; Shapiro, K.L.; Arnell, K.M. Temporary suppression of visual processing in an RSVP task: An attentional blink? J. Exp. Psychol. Hum. Percept. Perform. 1992, 18, 849. [Google Scholar] [CrossRef] [PubMed]

- Allison, B.Z.; Pineda, J.A. Effects of SOA and flash pattern manipulations on ERPs, performance, and preference: Implications for a BCI system. Int. J. Psychophysiol. 2006, 59, 127–140. [Google Scholar] [CrossRef] [PubMed]

- Sellers, E.W.; Krusienski, D.J.; McFarland, D.J.; Vaughan, T.M.; Wolpaw, J.R. A P300 event-related potential brain–computer interface (BCI): The effects of matrix size and inter stimulus interval on performance. Biol. Psychol. 2006, 73, 242–252. [Google Scholar] [CrossRef]

- Bell, A.J.; Sejnowski, T.J. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995, 7, 1129–1159. [Google Scholar] [CrossRef]

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27. [Google Scholar] [CrossRef]

- Allison, B.Z.; Wolpaw, E.W.; Wolpaw, J.R. Brain–computer interface systems: Progress and prospects. Expert Rev. Med. Devices 2007, 4, 463–474. [Google Scholar] [CrossRef]

- Zhang, X.; Guo, Y.; Gao, B.; Long, J. Alpha frequency intervention by electrical stimulation to improve performance in mu-based BCI. IEEE Trans. Neural Syst. Rehab. Eng. 2020, 28, 1262–1270. [Google Scholar] [CrossRef]

- Yao, L.; Meng, J.; Zhang, D.; Sheng, X.; Zhu, X. Combining motor imagery with selective sensation toward a hybrid-modality BCI. IEEE Trans. Biomed. Eng. 2014, 61, 2304–2312. [Google Scholar] [CrossRef]

- Teng, T.; Bi, L.; Liu, Y. EEG-Based Detection of Driver Emergency Braking Intention for Brain-Controlled Vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 19, 1766–1773. [Google Scholar] [CrossRef]

- Bi, L.; Zhang, J.; Lian, J. EEG-based adaptive driver-vehicle interface using variational autoencoder and PI-TSVM. IEEE Trans. Neural Syst. Rehab. Eng. 2019, 27, 2025–2033. [Google Scholar] [CrossRef] [PubMed]

- Bai, X.; Li, M.; Qi, S.; Ng, A.C.M.; Ng, T.; Qian, W. A hybrid P300-SSVEP brain-computer interface speller with a frequency enhanced row and column paradigm. Front. Neurosci. 2023, 17, 1133933. [Google Scholar] [CrossRef]

- Anari, S.; Tataei Sarshar, N.; Mahjoori, N.; Dorosti, S.; Rezaie, A. Review of deep learning approaches for thyroid cancer diagnosis. Math. Probl. Eng. 2022, 2022, 5052435. [Google Scholar] [CrossRef]

- Kasgari, A.B.; Safavi, S.; Nouri, M.; Hou, J.; Sarshar, N.T.; Ranjbarzadeh, R. Point-of-Interest Preference Model Using an Attention Mechanism in a Convolutional Neural Network. Bioengineering 2023, 10, 495. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).