Spatiotemporal Modeling of Grip Forces Captures Proficiency in Manual Robot Control

Abstract

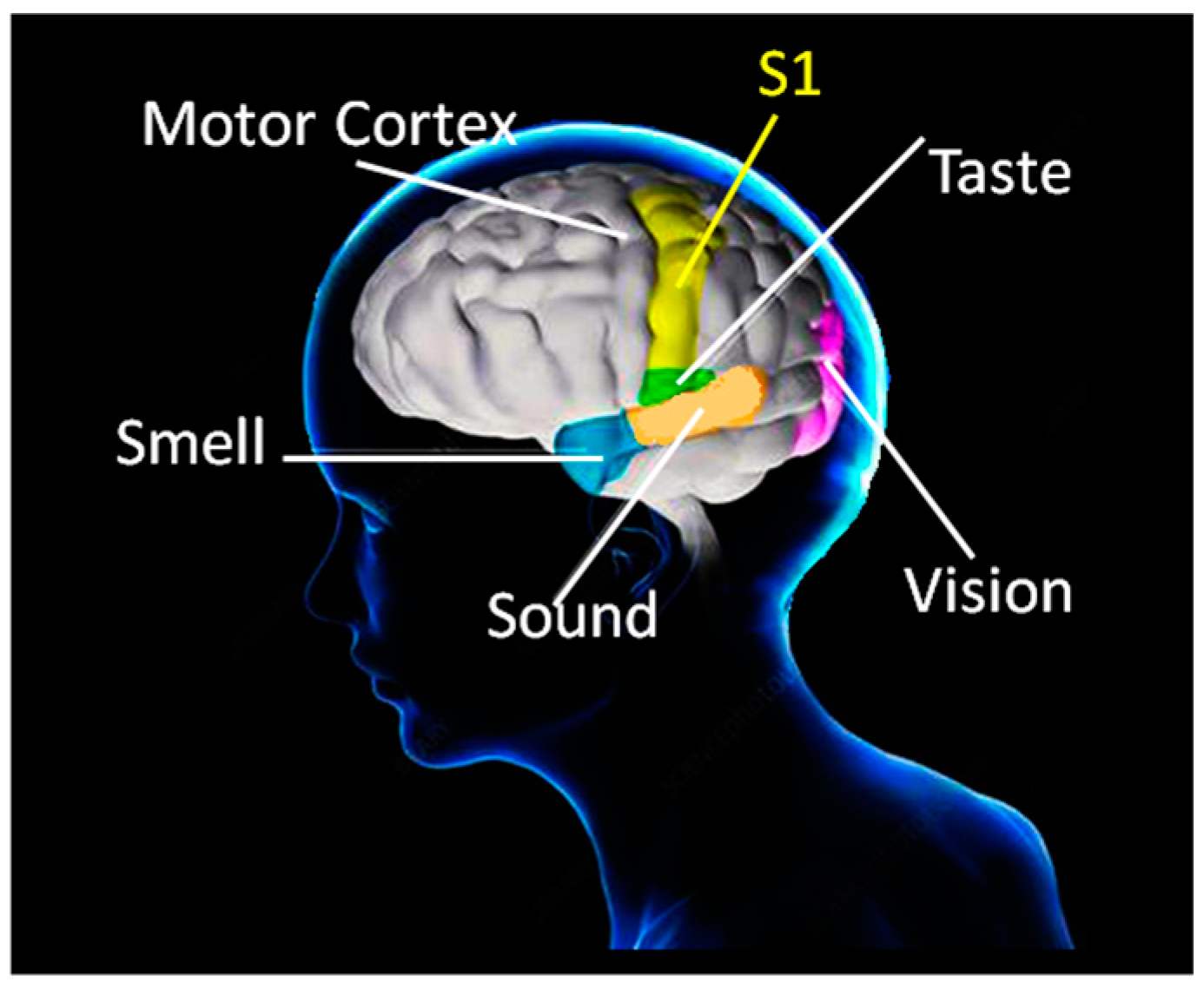

1. Introduction

2. Materials and Methods

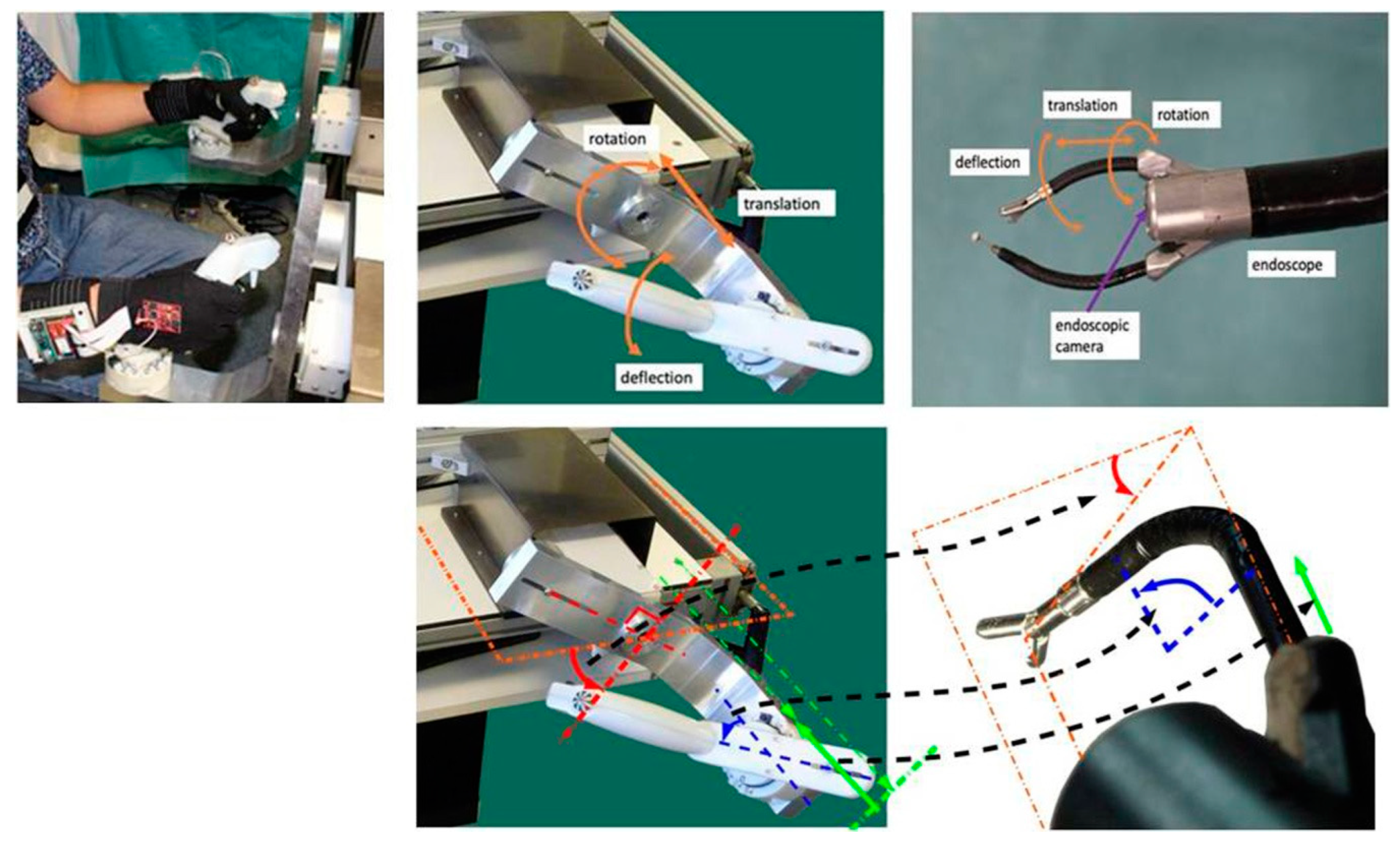

2.1. Robotic System

2.2. Sensor Gloves

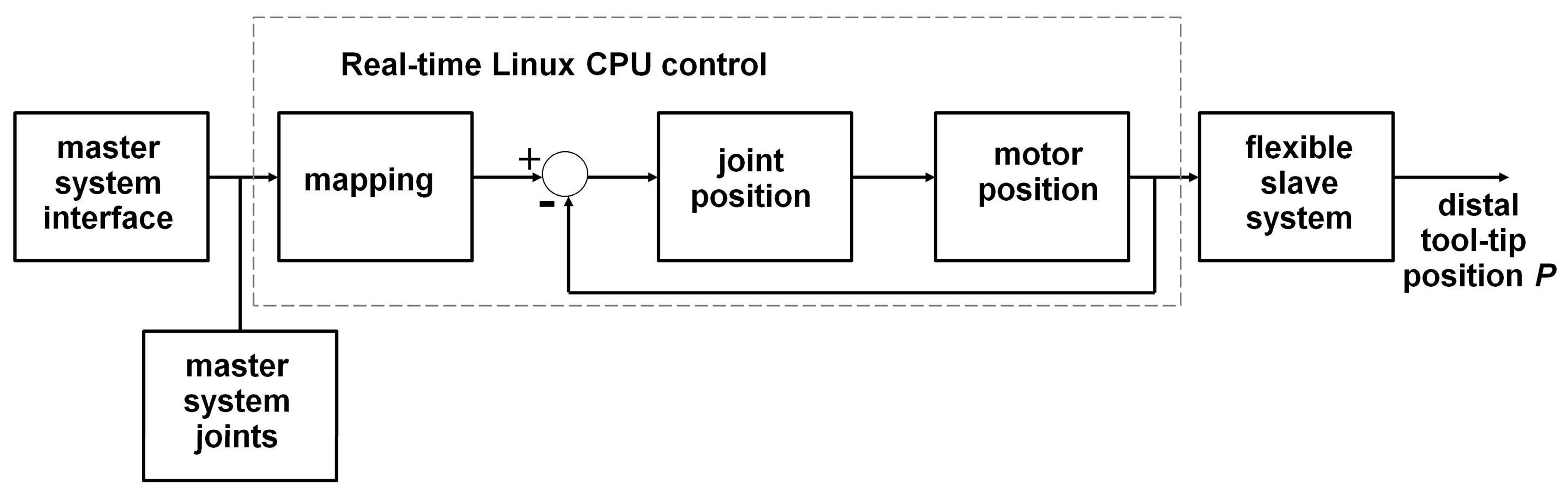

2.3. Software

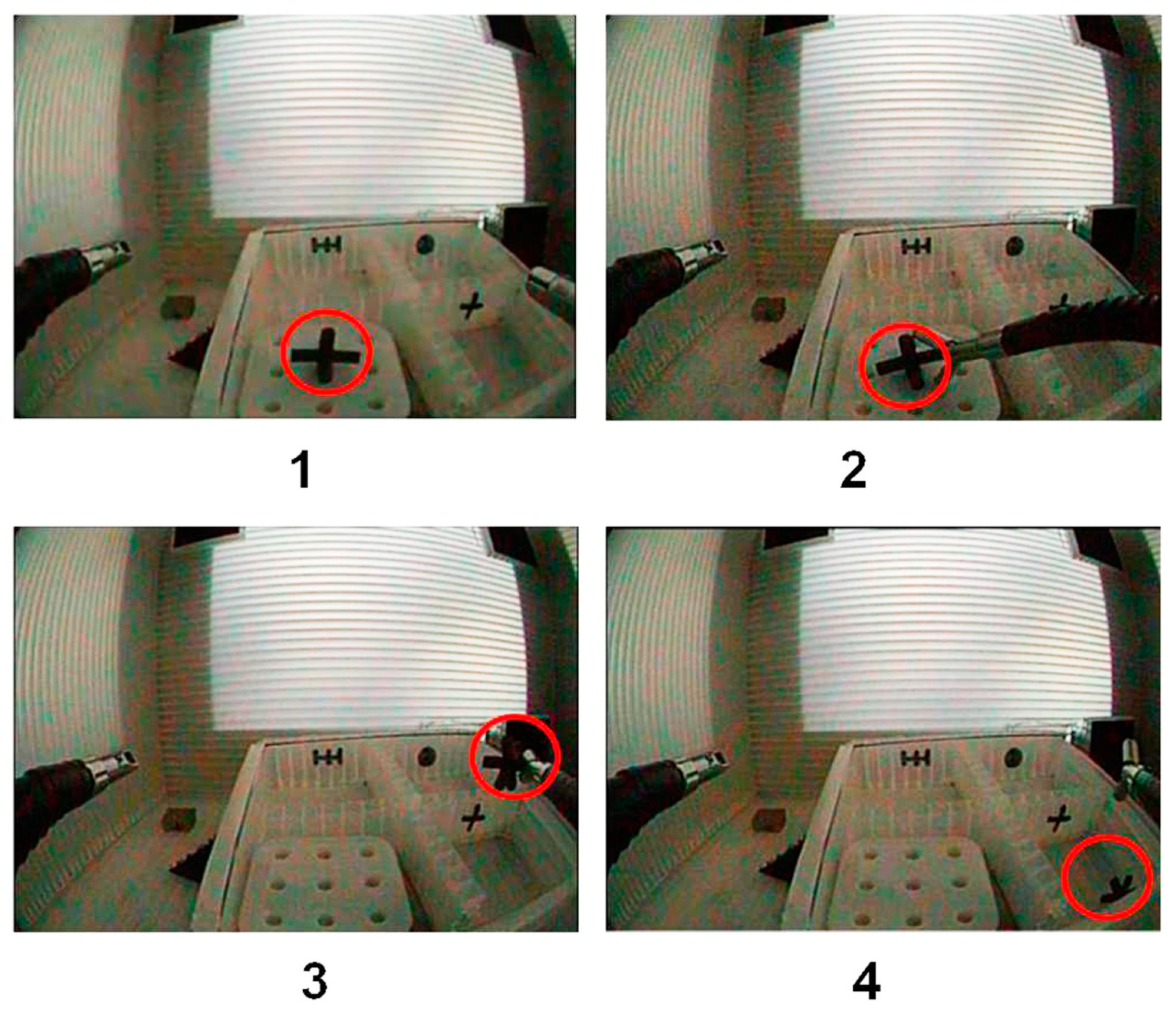

2.4. Experimental Precision Grip Task

2.5. Statistical Analyses

2.6. Neural Network Model

2.7. Rationale for the Neural Network Architecture

3. Results

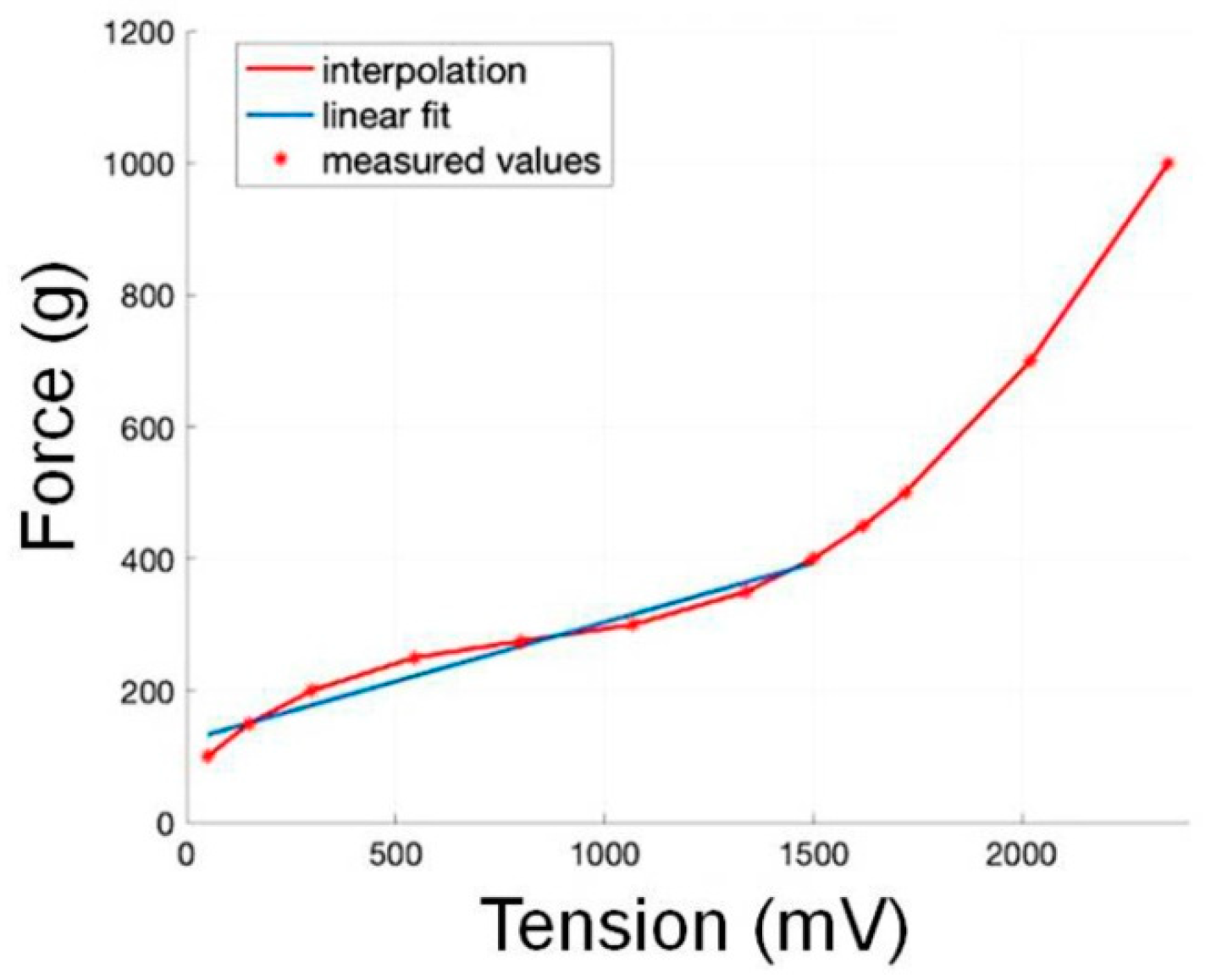

3.1. Sensor Calibration

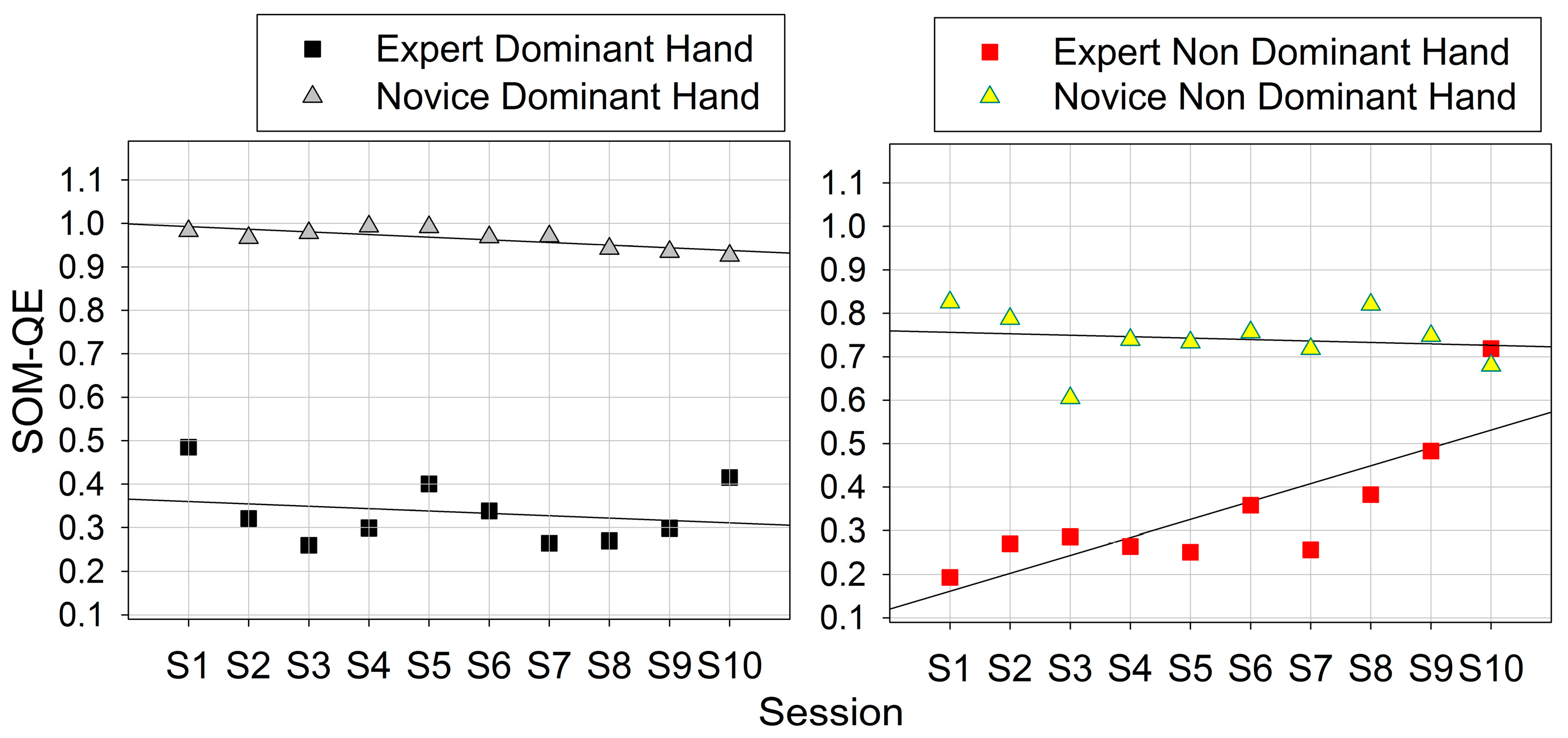

3.2. Skill-Specific Grip-Force Variability

3.3. Neural Network Model

3.4. Functionally Motivated Spatiotemporal Profiling

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Task Step | Hand–Tool Interaction Required |
|---|---|
| 1 | Activate and move tool forwards towards the pick-up target box |
| 2 | Move tool downwards towards object, open grippers, close grippers on object, lift object |
| 3 | Move tool in lateral direction towards the destination box for dropping object |
| 4 | Open grippers to drop object in the destination box |

| Sensor | Finger | Grip-Force Control |
|---|---|---|
| S5 | middle | gross grip-force deployment |
| S6 | ring | non-specific grip-force support |
| S7 | pinky | precision grip control |
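The sensor-to-function mapping in the table above can be kept as a small lookup when labelling per-sensor recordings. The following is only a minimal sketch: the names `SensorRole`, `SENSOR_ROLES`, and `label` are hypothetical and not taken from the authors' software; only the sensor identifiers, fingers, and role descriptions come from the table.

```python
from typing import NamedTuple


class SensorRole(NamedTuple):
    """Finger location and functional role of one glove sensor (hypothetical type)."""
    finger: str
    role: str


# Entries copied from the table above; the structure itself is an assumption.
SENSOR_ROLES = {
    "S5": SensorRole("middle", "gross grip-force deployment"),
    "S6": SensorRole("ring", "non-specific grip-force support"),
    "S7": SensorRole("pinky", "precision grip control"),
}


def label(sensor_id: str) -> str:
    """Return a readable label for a sensor, e.g. for plot legends."""
    entry = SENSOR_ROLES[sensor_id]
    return f"{sensor_id} ({entry.finger} finger): {entry.role}"


for sensor_id in SENSOR_ROLES:
    print(label(sensor_id))
```

Keeping the roles in one place like this would let downstream analysis code group signals by function rather than by raw sensor identifier.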

| Skill Level | 1st Session Duration | Last Session | Incidents |
|---|---|---|---|
| Expert | 10.20 | 7.48 | 3 |
| Novice | 24.56 | 18.78 | 20 |
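As a worked illustration of the table above, the relative change from the first to the last session can be computed per skill level. This is a sketch under stated assumptions: it assumes the "Last Session" column reports a duration in the same (unstated) unit as the first-session column, and the names `sessions`, `relative_reduction`, and `summary` are illustrative only.

```python
# Values copied from the table above; the unit is not stated in the source.
# Assumption: "Last Session" is a duration comparable to "1st Session Duration".
sessions = {
    "Expert": {"first": 10.20, "last": 7.48, "incidents": 3},
    "Novice": {"first": 24.56, "last": 18.78, "incidents": 20},
}


def relative_reduction(first: float, last: float) -> float:
    """Fractional decrease from the first to the last session value."""
    return (first - last) / first


# Percentage reduction per skill level, rounded to one decimal place.
summary = {
    level: round(100 * relative_reduction(row["first"], row["last"]), 1)
    for level, row in sessions.items()
}

for level, pct in summary.items():
    print(f"{level}: {pct}% lower in the last session than in the first")
```

Under that assumption, both skill levels shorten their sessions by a similar proportion (about 26.7% for the expert and 23.5% for the novice), while the absolute values and the incident counts remain clearly separated.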

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, R.; Wandeto, J.; Nageotte, F.; Zanne, P.; de Mathelin, M.; Dresp-Langley, B. Spatiotemporal Modeling of Grip Forces Captures Proficiency in Manual Robot Control. Bioengineering 2023, 10, 59. https://doi.org/10.3390/bioengineering10010059