Abstract

Large Language Models (LLMs) combined with visual foundation models have advanced significantly, approaching human-level performance on some vision-language tasks. This study analyzes the latest Multimodal LLMs (MLLMs), including Multimodal-GPT, GPT-4 Vision, Gemini, and LLaVA, with a focus on hydrological applications such as flood management, water level monitoring, agricultural water discharge, and water pollution management. We evaluated these MLLMs on hydrology-specific tasks, testing their response generation and real-time suitability in complex real-world scenarios. Prompts were designed to enhance the models’ visual inference capabilities and contextual comprehension from images. Our findings reveal that GPT-4 Vision demonstrated exceptional proficiency in interpreting visual data, providing accurate assessments of flood severity and water quality. Additionally, MLLMs showed potential in various hydrological applications, including drought prediction, streamflow forecasting, groundwater management, and wetland conservation. These models can help optimize water resource management by predicting rainfall, evaporation rates, and soil moisture levels, thereby promoting sustainable agricultural practices. This research provides valuable insights into the potential applications of advanced AI models in addressing complex hydrological challenges and improving real-time decision-making in water resource management.

1. Introduction

Artificial intelligence (AI) is becoming a groundbreaking technology with the potential to revolutionize many disciplines, and hydrology is no exception. Hydrology, which encompasses the scientific study of the movement of water on Earth, will benefit greatly from the potential of AI [1]. AI methods are increasingly being applied in hydrology to solve various problems such as rainfall-runoff modeling [2,3], water quality forecasting using deep learning [4], data augmentation [5], and climate and hydrologic forecasting [6]. Large Language Models (LLMs) are a class of deep learning models trained on massive text corpora to understand and generate human-like language.

Some prominent examples of LLMs include GPT-4 [7] and BERT (Bidirectional Encoder Representations from Transformers) [8]. Models like MiniGPT-4 [9] and LLaVA [10] have since emerged, each endeavoring to replicate and extend GPT-4’s achievements. These initiatives have explored the fusion of visual representations with the language model’s input space, harnessing the original self-attention mechanism of language models to process complex visual information. In the realm of computer vision, ImageBERT [11] represents a promising intersection between visual and textual data, enabling improvements in vision and language tasks. In healthcare, Clinical BERT [12] analyzes clinical notes to predict hospital readmission.

In hydrology, the integration of AI has shown tremendous potential for advancing our understanding and management of water-related processes [13]. Leveraging the capabilities of LLMs in this domain introduces a novel dimension, where textual and visual information can be synthesized to enhance hydrological analyses and communication [14]. This study explores the convergence of hydrology and LLMs, with a particular focus on models such as GPT-4, Gemini, LLaVA, and Multimodal-GPT. While conventional models have made significant contributions, the emergence of LLMs provides an unprecedented opportunity to process and interpret vast amounts of hydrological data in a more nuanced and context-aware manner. The integration of visual representations into language models signifies a paradigm shift in how hydrologists can approach complex datasets [15]. These models not only inherit the language generation prowess of their predecessors but also harness advanced self-attention mechanisms to process intricate visual information.

The intersection of visual and textual data, exemplified by models like ImageBERT, opens new avenues for improving both vision and language tasks in hydrological research. As we delve into the capabilities of these LLMs, it is crucial to acknowledge the inherent limitations. While promising, the responses generated by these models may occasionally be deceptive, incorrect, or false. Balancing the enthusiasm for the potential breakthroughs with a realistic understanding of the model’s limitations is essential for responsible and effective integration into hydrological research [16].

LLMs and Vision Models in Flooding and Hydrology

Hydrology and flood detection involve complex processes that can benefit from the integration of advanced AI models [17]. Applying LLMs and vision models to flood detection and hydrology means combining language processing and computer vision technologies to enhance our understanding and management of water-related phenomena [18]. Multimodal Large Language Models (MLLMs) are pre-trained on a massive corpus of images and texts and can therefore make predictions without further training or fine-tuning [19].

Data Analysis and Interpretation: LLMs can be employed to analyze textual data related to hydrological studies. They can assist in interpreting data from sensors, reports, and other reliable government sources to extract valuable insights. Simultaneously, computer vision models, such as vision transformers with LLMs, can analyze satellite imagery and detect changes in land cover or water levels indicative of potential flooding [20].

Real-Time Monitoring: Combining LLMs with vision models allows for the real-time monitoring of hydrological conditions. LLMs can process textual information from various sources, while vision models analyze images or video feeds from surveillance cameras or satellites [21].

Contextual Understanding: LLMs are powerful tools for understanding contextual information. They can generate summaries, answer questions, and provide context-specific details about hydrological events based on textual data. Vision models contribute by providing spatial context, identifying geographical features, land use changes, and patterns in satellite imagery crucial for hydrological assessments.

Decision-Support Systems: LLMs can assist in generating reports, risk assessments, and decision-making documents. They can process complex data and information from sensor networks and remote sensing resources and provide recommendations for water resource mitigation and planning decisions [22] based on textual data. Vision models contribute to decision support by visualizing hydrological events, creating visual representations of flood-prone areas, changes in river courses, and other relevant spatial information.

Predictive Analytics: LLMs contribute to predictive analytics by understanding and generating insights from historical hydrological data. They process textual information related to weather patterns, river flows, and other variables. Vision models excel at recognizing patterns in visual data, identifying trends or anomalies in satellite imagery, and aiding in predicting potential flood events [23].

Communication and Reporting: LLMs facilitate effective communication by generating human-like responses to inquiries, integrated into chatbots or conversational interfaces to provide information about hydrological conditions. Vision models contribute to visual reporting by creating maps, charts, and visualizations that enhance the understanding of hydrological data.

This study aims to explore the synergies between hydrology and Multimodal LLMs (MLLMs), examining the contributions of models like GPT-4, Gemini, LLaVA, and Multimodal-GPT, and their implications for advancing our understanding of water-related processes. Additionally, we discuss the challenges and ethical considerations associated with integrating LLMs into hydrological research, paving the way for a more informed and responsible use of AI in this critical scientific domain.

2. Background

Recent studies show significant progress in multimodal LLMs. The emergence of ChatGPT for text generation [24] and visual transformer-based diffusion models for image generation [25] sets the stage for AI-generated multimodal tasks. This progress enhances the cognitive abilities and decision-making capabilities of machines, especially with open-source LLMs like Vicuna [26], Flan-T5, Alpaca [27], and LLaMA [28]. Additionally, the availability of multimodal data from various sensors, including audio, video, and text, requires machines to handle multimodal decision-making processes adeptly.

Several large multimodal models (LMMs) are continually being introduced, including DeepMind’s Flamingo [29], Salesforce’s BLIP [30], Microsoft’s KOSMOS-1 [31], Google’s PaLM-E [32], and Tencent’s Macaw-LLM [33]. Chatbots like ChatGPT and Gemini are examples of LMMs. However, not all multimodal systems are LMMs. For instance, text-to-image models like Stable Diffusion, Midjourney [34], and Dall-E are multimodal but lack a language model component. The integration of LLMs for visual reasoning has been influenced by the enhancement of visual models [35,36,37]. Several visual foundation models [38,39,40] are available to perform visual reasoning tasks, often incorporating task-specific LLMs [41,42,43].

Visual reasoning models [44,45] are designed to generalize and perform well in decision-making on unseen data, as they are trained on real-world data. Text-based LLMs exhibit reasoning capabilities that extend to other modalities such as audio and video. The integration of adapters allows text-based LLMs to connect with visual models, facilitating multimodal reasoning. This has led to the development of multimodal LLMs such as BLIP-2 [30], Flamingo [29], MiniGPT-4 [9], Video-LLaMA [46], LLaVA [10], PandaGPT [47], and SpeechGPT [48]. These models take images or videos as input and comprehend context across multiple modalities. The responses generated by these multimodal LLMs can approach human-level quality on some tasks, and some models accept input in any modality and generate responses in the user-requested modality.

Vision-language multimodal tasks are typically categorized into two groups: generation and vision-language understanding (VLU). In generation models, responses can be output as text, image, or a combination of both. Text-to-image generation models like Dall-E, Midjourney, and Stable Diffusion create images based on textual input. Text generation models produce text conditioned on text, images, or both. For instance, image captioning models generate text-based descriptions of images.

Vision Language Understanding (VLU) models demonstrate proficiency in two key tasks: classification and text-based image retrieval (TBIR). In the context of classification, these models engage in image-to-text retrieval. Given an image and a predefined pool of texts, the model aims to identify the text most likely to be associated with the provided image. This task is essential for understanding the content and context depicted in images. Text-based image retrieval is another critical aspect of VLU models. In this task, the model undergoes training in a joint embedding space that encompasses both images and text. When presented with a text query, the model generates an embedding for this query. Subsequently, it identifies all images whose embeddings closely align with the generated embedding for the given text query. This approach enables the effective retrieval of relevant images based on textual input, showcasing the versatile capabilities of VLU models in bridging the gap between language understanding and visual content interpretation.
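The retrieval step described above can be illustrated with a minimal sketch. It assumes the image and text encoders have already produced vectors in a shared space; the three-dimensional embeddings, file names, and function names below are hypothetical placeholders, and cosine similarity is one common choice of closeness measure rather than the only one.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_images(query_embedding, image_embeddings, top_k=2):
    """Rank images by similarity to the text query embedding."""
    scored = [
        (name, cosine_similarity(query_embedding, emb))
        for name, emb in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings; a real encoder would output far more dimensions.
IMAGE_EMBEDDINGS = {
    "flooded_street.jpg": [0.9, 0.1, 0.0],
    "dry_riverbed.jpg": [0.0, 0.2, 0.9],
    "river_gauge.jpg": [0.6, 0.7, 0.1],
}
# Stands in for the embedding of a query such as "street under flood water".
QUERY_EMBEDDING = [1.0, 0.2, 0.0]

results = retrieve_images(QUERY_EMBEDDING, IMAGE_EMBEDDINGS)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

The classification task works the same way with the roles swapped: the image embedding becomes the query and the candidate texts form the pool being ranked.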

2.1. Evolution of Multimodal LLMs

Multimodal LLMs represent a significant advancement in AI technology, particularly in the field of natural language processing (NLP). These models have undergone several transformative stages, each marked by notable innovations and improvements. Researchers aim to achieve any-to-any modality conversion, enhancing AI generation techniques. Tang et al. [49] introduced Composable Diffusion (CoDi), a novel generative model capable of producing responses in any combination of language, image, and audio modalities. However, this model lacks the reasoning and decision-making capabilities of LLMs. In contrast, the later-developed model Visual-ChatGPT integrates LLMs with visual foundation models, enabling accurate responses based on input images [38].

HuggingGPT [50] focuses on general AI across various domains and modalities. This model utilizes LLMs and selects the AI model based on functional descriptors and user requests within Hugging Face. It can handle AI tasks involving different modalities and domains. In 2022, InfoQ reported on DeepMind’s Flamingo, a system that integrates separately pre-trained vision and language models, enabling it to answer questions about input images and videos. Furthermore, InfoQ covered OpenAI’s GPT-4, which demonstrated the capability to process image inputs. Microsoft made advancements with two vision-language models: Visual ChatGPT, leveraging ChatGPT to engage various visual foundation models for tasks, and LLaVA, a fusion of CLIP for vision and LLaMA for language, incorporating an additional network layer to seamlessly connect the two. The model was trained end-to-end in visual instruction tuning.

Multimodal LLMs demonstrate the ability to emulate human commonsense reasoning [51] and employ Chain-of-Thought (CoT) for hydrology-specific tasks [52]. CoT simulates responses based on sequences of events or data, generating domain-specific responses. Two techniques are used to induce Chain-of-Thoughts in conversation: Few-Shot-CoT and Zero-Shot-CoT. In Few-Shot-CoT [53], automated Chain-of-Thought is provided to the model by prompting intermediate steps in reasoning. Pretrained LLMs also serve as excellent task-specific exemplars, functioning as zero-shot reasoners by simply adding “Let’s think step by step” before each answer [54,55]. Multimodal CoT [56] incorporates text and vision modalities into a two-stage framework that separates rationale generation and answer inference. This approach allows answer inference to leverage better-generated rationales based on multimodal information.
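The two prompting styles can be sketched as plain string builders. This is a minimal illustration, not the prompt format used in the study: the `Q:`/`A:` layout, the function names, and the worked gauge example are assumptions made for demonstration.

```python
ZERO_SHOT_TRIGGER = "Let's think step by step."

def zero_shot_cot_prompt(question):
    """Start the answer with the trigger so the model writes out its reasoning."""
    return f"Q: {question}\nA: {ZERO_SHOT_TRIGGER}"

def few_shot_cot_prompt(exemplars, question):
    """Prepend worked (question, reasoning, answer) examples to the new question."""
    blocks = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)

# Hypothetical exemplar showing the intermediate reasoning steps.
EXEMPLARS = [
    (
        "A gauge reads 2.0 m and rises 0.5 m per hour. What is the level after 3 hours?",
        "The rise over 3 hours is 3 x 0.5 = 1.5 m, so the level is 2.0 + 1.5 = 3.5 m.",
        "3.5 m",
    )
]
NEW_QUESTION = "A gauge reads 1.2 m and falls 0.1 m per hour. What is the level after 4 hours?"

zero_shot = zero_shot_cot_prompt(NEW_QUESTION)
few_shot = few_shot_cot_prompt(EXEMPLARS, NEW_QUESTION)
print(few_shot)
```

In the few-shot variant the exemplar carries the reasoning pattern; in the zero-shot variant the trigger phrase alone asks the model to produce it.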

2.2. Multimodal Reasoning and Response Generation

Multimodal reasoning enables AI models to comprehend information across various modalities, facilitating a holistic understanding and the generation of responses that incorporate multiple domains. The hydrology domain-specific use cases are validated using the Multimodal-GPT [57], GPT-4 Vision [58], and LLaVA: Large Language and Vision Assistant [10] in this paper. Multimodal-GPT utilizes a vision encoder to efficiently extract visual information and integrates a gated cross-attention layer for seamless interactions between images and text. Fine-tuned from OpenFlamingo, this model incorporates a Low-Rank Adapter (LoRA) in both the gated-cross-attention and self-attention components of the language model. Notably, Multimodal-GPT employs a unified template for both text and visual instruction data during training, facilitating its ability to engage in continuous dialogues with humans.
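To make the gated cross-attention idea concrete, the following is a simplified sketch in which one text token attends over a set of visual tokens and the result is added back through a gate. It assumes a Flamingo-style `tanh` gate on a scalar and omits the learned query, key, and value projections; all names and values are illustrative rather than taken from the Multimodal-GPT code.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(text_vec, visual_vecs):
    """One text token (query) attends over visual tokens (keys and values)."""
    scale = math.sqrt(len(text_vec))
    scores = [sum(q * k for q, k in zip(text_vec, v)) / scale for v in visual_vecs]
    weights = softmax(scores)
    dim = len(visual_vecs[0])
    return [sum(w * v[i] for w, v in zip(weights, visual_vecs)) for i in range(dim)]

def gated_cross_attention(text_vec, visual_vecs, gate):
    """Residual update scaled by tanh(gate); gate = 0 leaves the token unchanged."""
    attended = cross_attention(text_vec, visual_vecs)
    g = math.tanh(gate)
    return [t + g * a for t, a in zip(text_vec, attended)]

TEXT_TOKEN = [1.0, 0.0]
VISUAL_TOKENS = [[1.0, 0.0], [0.0, 1.0]]

closed = gated_cross_attention(TEXT_TOKEN, VISUAL_TOKENS, gate=0.0)
opened = gated_cross_attention(TEXT_TOKEN, VISUAL_TOKENS, gate=1.0)
print(closed, opened)
```

With the gate at zero the language model behaves exactly as it did before visual layers were added, which is why this design is convenient when building on a frozen pretrained decoder.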

In September 2023, GPT-4 Vision was introduced, allowing users to instruct GPT-4 for various multimodal tasks. This large multimodal model, trained similarly to GPT-4 using texts and images from licensed sources, accepts images and texts as inputs to generate textual outputs. In line with existing general-purpose vision-language models [59,60,61,62,63,64,65,66,67,68,69,70,71], GPT-4 Vision is versatile enough to handle single-image-text pairs or single images for tasks like image recognition, object localization, image captioning, visual question answering, visual dialogue, dense captioning, and more.

LLaVA represents a comprehensively trained large multimodal model designed to seamlessly integrate a vision encoder with a Large Language Model (LLM) [10]. This integration is aimed at achieving a broad scope of visual and language understanding for general-purpose applications. Notably, LLaVA utilizes the language-only GPT-4 as part of its architecture. In its operation, the model excels in generating multimodal responses by leveraging machine-generated instruction-following data. This approach enables LLaVA to interpret and respond to inputs that involve both textual and visual elements, showcasing its proficiency in handling diverse modalities of information. One of the key strengths of LLaVA lies in its zero-shot capabilities, facilitated through the prompting of GPT-4. This makes the model provide accurate responses even when faced with complex and in-depth reasoning questions, a feature honed during its training process [72,73]. This capability enhances the model’s versatility and effectiveness in addressing a wide range of tasks that require a nuanced understanding of both language and visual content.

Limitations: MLLMs, combining language and vision models, bring considerable benefits but also have notable limitations. Firstly, their complexity and resource demands are substantial, requiring extensive computational power for training and fine-tuning. Moreover, data dependencies are critical; these models rely heavily on large, diverse datasets, and their performance is compromised when faced with limited or biased data. Despite excelling in specific tasks, they still struggle to generalize across domains. Interpreting the representations generated by MLLMs is also difficult, which hinders transparency in decision-making processes. Furthermore, their domain specificity is a constraint; they thrive in areas with abundant multimodal data but may fail in less explored or niche domains. Ethical concerns arise due to the perpetuation of biases from training data, raising questions about fairness and impartiality in outcomes. Additionally, these models exhibit limitations in contextual understanding, struggling with nuanced situations and subtle cues. Robustness against adversarial attacks is an open problem, and the high inference time for processing both language and vision concurrently can limit real-time applicability. The environmental impact of their computational demands also deserves attention, as it contributes to their carbon footprint. Acknowledging these limitations is essential for responsible deployment and for guiding ongoing research [74].

The study of MLLMs encompasses the exploration and advancement of AI technologies capable of processing and generating information across multiple modalities, including text, images, and audio. Recent years have witnessed significant progress in this field, driven by the emergence of LLMs like ChatGPT for text generation and visual transformer-based diffusion models for image generation. These advancements have paved the way for AI-generated multimodal tasks, with open-source LLMs such as Vicuna, Flan-T5, Alpaca, and LLaMA enhancing machines’ cognitive abilities and decision-making capabilities. The availability of multimodal data from various sensors further underscores the importance of developing LLMs capable of handling multimodal decision-making processes. As a result, researchers continue to introduce new models like DeepMind’s Flamingo, Salesforce’s BLIP, and Microsoft’s KOSMOS-1, while also exploring integration with visual models to create multimodal LLMs such as BLIP-2, Flamingo, MiniGPT-4, and others. This background sets the stage for investigating the capabilities, applications, and challenges of MLLMs in hydrology and other domains.

3. Methodology

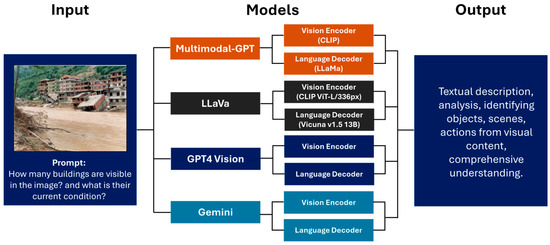

This study is designed to evaluate the application of MLLMs in hydrology, particularly for real-world scenario analysis such as flood management. The study involves an empirical assessment of four advanced MLLMs: Multimodal-GPT, LLaVA, GPT-4 Vision, and Gemini. Table 1 shows the fundamental characteristics and specific applicability of these four MLLMs in hydrological tasks. These models are evaluated based on their ability to interpret and generate responses from hydrology-specific multimodal data, involving both textual prompts and visual inputs as shown in Figure 1.

Table 1. Key characteristics and applicability of the Multimodal Large Language Models (MLLMs) evaluated in the study.

Figure 1. The workflow for MLLM benchmarking in hydrological tasks.

3.1. Input Data Preparation

The input for each MLLM consists of a visual element (an image depicting a hydrological scenario, such as a flood event) and a textual prompt that frames a question or a task related to the visual content. For instance, the model may be presented with an image of a flooded area and asked to determine the number of buildings visible and assess their condition. Additionally, an image with a water level gauge could be used to assess MLLM performance in hydrological tasks, providing a concrete example of how these models can interpret and quantify water levels in real time. Preparing input for an MLLM capable of processing both images and textual questions about those images requires a systematic approach involving image preprocessing, text preprocessing, and input combination.

The initial step is to source the images in a way that ensures compliance with copyright regulations. Unless otherwise noted, the images used in this study were sourced from Adobe Stock, a licensed platform providing high-quality visual content; the exception is the image input in Table 6, which was taken by the authors. This approach was taken to avoid any potential copyright issues and to maintain the integrity of the publication.

Next, the image data undergo preprocessing steps such as resizing to a fixed size suitable for the model, normalizing pixel values to an appropriate range (typically between 0 and 1), and conversion into a format compatible with the model’s input requirements, often tensors for deep learning frameworks. Following this, textual questions are tokenized: they are broken down into tokens and converted into numerical IDs based on the model’s vocabulary. Special tokens like [CLS] and [SEP] are inserted to delineate the beginning and end of the text sequence, and the sequence is then padded or truncated to a fixed length compatible with the model.
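The image and text preprocessing steps can be sketched in a few lines of standard-library Python. This is an illustration under simplifying assumptions, not the pipeline used by any of the evaluated models: the image is a small grayscale grid, resizing uses nearest-neighbour sampling, and the whitespace tokenizer with its toy vocabulary stands in for the subword tokenizers that real models ship with.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2-D grid of pixel values with nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

def normalize(image, max_value=255):
    """Scale pixel values from [0, max_value] to [0, 1]."""
    return [[px / max_value for px in row] for row in image]

def tokenize(question, vocab, max_len):
    """Map words to IDs, wrap with [CLS]/[SEP], then truncate or pad to max_len."""
    words = question.lower().replace("?", " ?").split()
    ids = [vocab["[CLS]"]] + [vocab.get(w, vocab["[UNK]"]) for w in words]
    ids = ids[: max_len - 1] + [vocab["[SEP]"]]   # truncate, keeping room for [SEP]
    ids += [vocab["[PAD]"]] * (max_len - len(ids))  # pad up to the fixed length
    return ids

# Hypothetical 4 x 4 grayscale image and toy vocabulary.
RAW_IMAGE = [
    [0, 64, 128, 255],
    [0, 64, 128, 255],
    [255, 128, 64, 0],
    [255, 128, 64, 0],
]
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "how": 4, "many": 5,
         "buildings": 6, "are": 7, "flooded": 8, "?": 9}

pixels = normalize(resize_nearest(RAW_IMAGE, 2, 2))
token_ids = tokenize("How many buildings are flooded?", VOCAB, max_len=10)
print(pixels)
print(token_ids)
```

Hosted models such as GPT-4 Vision and Gemini perform equivalent steps server-side, so in practice this preprocessing is only written by hand when running open models locally.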

The prepared inputs are then combined according to the requirements of the multimodal model being employed. Some models require separate inputs for the image and text portions, while others accept a single input combining both modalities. Where separate inputs are required, the image tensor and tokenized text sequence are typically packed into a single data structure, such as a dictionary, before being fed into the model. This ensures that the model can process both the visual and textual aspects of the input data in a cohesive manner. By following this structured approach to input preparation, practitioners can integrate images and corresponding textual questions into multimodal models, enabling them to generate contextually relevant and coherent responses.
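The packing step for models that take separate image and text inputs might look like the sketch below. The key names `pixel_values`, `input_ids`, and `attention_mask` follow a widely used naming convention but are assumptions here, as are the example values; each model defines its own expected input structure.

```python
def pack_inputs(image_tensor, token_ids, pad_id=0):
    """Bundle both modalities into one dictionary with a mask over real tokens."""
    return {
        "pixel_values": image_tensor,
        "input_ids": token_ids,
        # 1 marks a real token, 0 marks padding the model should ignore.
        "attention_mask": [0 if t == pad_id else 1 for t in token_ids],
    }

# Hypothetical preprocessed inputs: a 2 x 2 normalized image and padded token IDs.
EXAMPLE_IMAGE = [[0.0, 0.5], [1.0, 0.25]]
EXAMPLE_TOKENS = [2, 4, 5, 3, 0, 0]

batch = pack_inputs(EXAMPLE_IMAGE, EXAMPLE_TOKENS)
print(batch["attention_mask"])
```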

3.2. Model Evaluation Process

The evaluation of the models follows a structured approach designed to assess their performance in accurately interpreting and generating responses to hydrology-related multimodal data. The process begins by presenting the model with a specific case that includes a visual input (e.g., an image depicting a hydrological scenario) and a textual prompt framing a relevant question or task. The model’s response is then evaluated based on three key criteria: accuracy, relevance, and understanding.

Accuracy refers to the model’s ability to provide correct and precise information in response to the prompt. This involves comparing the responses generated by the model with previously defined correct answers or known truths about the scenario depicted in the image. Relevance measures how closely the model’s response aligns with the context of the prompt and the specific hydrological application. This includes evaluating whether the model appropriately considers visual and textual data in its response. The third criterion, understanding, measures the model’s ability to comprehend and integrate both visual and textual elements of the input and provide consistent and contextually appropriate responses.

To provide a comprehensive evaluation, each model’s response is compared to ground truth data or expert-generated answers. This comparative analysis helps determine the model’s effectiveness in processing and synthesizing multimodal information in the context of hydrology. By rigorously applying these evaluation criteria, the study aims to highlight the models’ strengths and limitations in real-world hydrological applications.
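One way to record such judgments is a small scoring structure, sketched below. The study describes a qualitative comparison against ground truth, so the 0 to 1 scale, the equal weighting of the three criteria, the placeholder model names, and the scores themselves are all assumptions introduced only to show how the criteria could be tabulated.

```python
from dataclasses import dataclass

@dataclass
class ResponseScore:
    """Reviewer-assigned scores for one model response, each on a 0 to 1 scale."""
    model: str
    accuracy: float       # agreement with ground truth or expert answer
    relevance: float      # fit to the prompt and the hydrological application
    understanding: float  # integration of visual and textual elements

    def overall(self):
        """Unweighted mean of the three criteria (an assumed aggregation)."""
        return (self.accuracy + self.relevance + self.understanding) / 3

def rank_models(scores):
    """Order responses from highest to lowest overall score."""
    return sorted(scores, key=lambda s: s.overall(), reverse=True)

# Placeholder entries, not results from the study.
SCORES = [
    ResponseScore("model_b", accuracy=0.6, relevance=0.9, understanding=0.6),
    ResponseScore("model_a", accuracy=0.9, relevance=0.8, understanding=0.7),
]

ranked = rank_models(SCORES)
for entry in ranked:
    print(f"{entry.model}: {entry.overall():.2f}")
```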

3.2.1. Multimodal-GPT

Multimodal-GPT is designed to facilitate human-level, multimodal dialogue across vision and language data, guided by the Unified Instruction Template. This template harmonizes both unimodal linguistic and multimodal vision-language data, providing structure to language-only tasks with a task description, input, and response format. The model leverages language-only instruction datasets such as Dolly 15k and Alpaca GPT-4, curated for refining language model performance in instruction-based tasks. Vision-language tasks use a similar template with the addition of an image token, drawing from datasets like MiniGPT-4, A-OKVQA, COCO Caption, and OCR VQA for multimodal applications. From an architectural standpoint, Multimodal-GPT utilizes the OpenFlamingo infrastructure, comprising a vision encoder, a perceiver resampler that extracts spatial features, and a language decoder. Pre-training involves a large number of image-text pairs to equip the model with strong visual understanding. Low-Rank Adaptation (LoRA) is applied to the self-attention, cross-attention, and Feed-Forward Network (FFN) parts of the language decoder during fine-tuning. This setup allows Multimodal-GPT to effectively integrate visual information into text, with the frozen OpenFlamingo model serving as its backbone.
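The low-rank update at the heart of LoRA can be written out directly. The sketch below uses the standard formulation, in which the effective weight is the frozen matrix plus a scaled product of two small trainable matrices; the 3 x 3 matrix, rank of 1, and scaling value are toy assumptions and do not reflect the configuration used by Multimodal-GPT.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_weight(w_frozen, lora_b, lora_a, alpha, rank):
    """Effective weight W + (alpha / rank) * B @ A; only A and B are trained."""
    delta = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w_frozen, delta)
    ]

# Frozen 3 x 3 weight (identity here for readability).
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# B starts at zero, so the adapted layer initially matches the frozen one.
B_INIT = [[0.0], [0.0], [0.0]]
A_INIT = [[0.1, 0.2, 0.3]]
at_start = lora_weight(W, B_INIT, A_INIT, alpha=2, rank=1)

# Hypothetical values after some fine-tuning steps.
B_TRAINED = [[1.0], [0.0], [0.0]]
A_TRAINED = [[0.5, 0.0, 0.0]]
adapted = lora_weight(W, B_TRAINED, A_TRAINED, alpha=2, rank=1)
print(adapted)
```

For a weight of shape d x d, the adapter trains 2 x d x r values instead of d x d, which is what keeps fine-tuning affordable when the backbone stays frozen.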

3.2.2. LLaVA

LLaVA (Large Language and Vision Assistant) integrates language and visual processing by generating multimodal language-image instruction-following data in two stages. Initially, multimodal language-image data are generated using the language-only GPT-4 model, collected through captions and bounding boxes describing various perspectives in visual scenes and individual objects. This dataset encompasses conversational Q&A about images, detailed image descriptions, and complex reasoning questions beyond visible features. To align vision and language modalities, the model is first trained on a large-scale dataset and then fine-tuned using the synthesized multimodal data, enhancing its generalization ability in processing and responding to natural multimodal instructions. LLaVA’s performance is evaluated against GPT-4, employing metrics like accuracy, precision, recall, and F1 score across a synthetic multimodal dataset. Post-evaluation, LLaVA is refined for specific tasks such as science QA, showcasing its domain flexibility.

LLaVA architecture combines CLIP-based vision encoders with Vicuna-based language model decoders for feature extraction from visual inputs and textual inputs, facilitating task execution. The core of LLaVA training lies in “Visual Instruction Tuning”, comprising pre-training for feature alignment and end-to-end fine-tuning using the LLaVA-Instruct-158K dataset. By fusing language and vision input instructions, LLaVA develops multimodal instruction-following capabilities, representing a significant advancement in integrating language and vision processing for complex tasks.
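The feature alignment step can be pictured as a projection that maps vision-encoder outputs into the language model's embedding space, after which image and text tokens form one input sequence. The sketch below assumes a single linear projection and places image tokens before text tokens; the dimensions, weights, and function names are toy values chosen for illustration, not LLaVA's actual parameters.

```python
def project(visual_features, weight):
    """Map each visual feature vector into the language model's embedding size."""
    out_dim = len(weight[0])
    return [
        [sum(f * weight[i][j] for i, f in enumerate(feat)) for j in range(out_dim)]
        for feat in visual_features
    ]

def build_input_sequence(visual_features, weight, text_embeddings):
    """Place projected image tokens ahead of the text token embeddings."""
    return project(visual_features, weight) + text_embeddings

# Two image patches with 3-dimensional features (stand-in for encoder output).
VISUAL_FEATURES = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
# Projection from 3 dimensions to the 2-dimensional text embedding space.
PROJECTION = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
# Three text tokens already embedded by the language model.
TEXT_EMBEDDINGS = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]

sequence = build_input_sequence(VISUAL_FEATURES, PROJECTION, TEXT_EMBEDDINGS)
print(len(sequence), sequence[0])
```

During the pre-training stage for feature alignment, a projection of this kind is the component being learned, which is why that stage is comparatively cheap.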

3.2.3. GPT-4 Vision

GPT-4 Vision extends GPT-4’s capabilities to understanding and processing visual data, which can be described conceptually in several key stages. First, image preprocessing ensures standardization by resizing images to a consistent size and normalizing pixel values. This uniformity is crucial for optimizing model performance. Next, in Pixel Sequence Transformation, images are converted into sequences of pixels, akin to how language models operate on text, treating RGB values as tokens in a sequence. During Model Training, the model is trained to predict pixels in a specific order, akin to predicting the next word in a sentence. This involves backpropagation and techniques like stochastic gradient descent to minimize prediction errors and learn patterns in image data. For image generation and classification tasks, the model predicts labels for images and generates images from seed pixel sequences. In classification, it produces a probability distribution over potential labels and selects the most probable. Lastly, for Multimodal Integration, GPT-4 Vision, capable of processing language, comprehends and describes images, answers questions based on visual content, and crafts stories integrating visual and textual elements. This holistic approach enables GPT-4 Vision to bridge the gap between visual and textual data, advancing multimodal understanding and generation capabilities.
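The pixel-sequence and classification stages described above can be illustrated with a toy sketch. It mirrors the description in the text only: the internal design of GPT-4 Vision has not been published, so the flattening order, the labels, and the scores here are assumptions made for demonstration.

```python
import math

def image_to_sequence(image):
    """Flatten an H x W image of (R, G, B) tuples into one row-major token list."""
    return [channel for row in image for pixel in row for channel in pixel]

def next_token_pairs(sequence):
    """Pair each prefix with the value that follows it, as in next-word prediction."""
    return [(sequence[:i], sequence[i]) for i in range(1, len(sequence))]

def classify(logits, labels):
    """Turn raw scores into probabilities and return the most probable label."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs

# Hypothetical 1 x 2 RGB image.
TINY_IMAGE = [[(0, 0, 255), (10, 20, 30)]]
pixel_sequence = image_to_sequence(TINY_IMAGE)
training_pairs = next_token_pairs(pixel_sequence)

# Hypothetical scores for three flood-severity labels.
label, probabilities = classify([2.0, 0.5, 0.1], ["severe", "moderate", "minor"])
print(pixel_sequence, label)
```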

3.2.4. Gemini

The next-generation development in multimodal AI systems introduces Gemini models, which enhance the transformer architecture to seamlessly integrate information across modalities. Designed to efficiently run on specialized hardware like Google TPUs, Gemini models excel in processing various media content, including text, audio, and visual data. With the capability to handle very long context lengths of up to 32,000 tokens, these models can process massive sequences of diverse data types, such as documents, high-resolution images, and lengthy audio files. Central to the efficiency of Gemini models are advanced attention mechanisms, which enable the models to focus their computation on the most relevant parts of input data, crucial for handling complex multimodal inputs and generating coherent outputs.

Gemini models are trained to process a wide range of input formats, from images and plots to PDFs and videos. They treat videos as sequences of frames and process audio signals at 16 kHz, leveraging features directly from the Universal Speech Model to enhance content comprehension beyond mere text translation. The development of Gemini models involves innovative training algorithms and the design of custom datasets, facilitating the integration of mixtures of text, audio, and visual data. These models are delivered in variants of different sizes, including Pro, optimized for efficient scaling up of pre-training, and Nano, compact and efficient for on-device applications like summarization and reading comprehension.
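Treating a video as a sequence of frames and audio as a 16 kHz signal implies some simple bookkeeping, sketched below. Uniform frame sampling is an assumption chosen for illustration, since the exact frame selection used by Gemini is not described here; the function names and example values are hypothetical.

```python
AUDIO_SAMPLE_RATE_HZ = 16_000  # sampling rate stated in the text

def sample_frame_indices(total_frames, num_samples):
    """Pick evenly spaced frame indices so a video becomes a short image sequence."""
    if num_samples >= total_frames:
        return list(range(total_frames))
    step = total_frames / num_samples
    return [int(i * step) for i in range(num_samples)]

def audio_sample_count(duration_seconds):
    """Number of audio samples in a clip of the given length at 16 kHz."""
    return int(duration_seconds * AUDIO_SAMPLE_RATE_HZ)

frame_indices = sample_frame_indices(total_frames=300, num_samples=4)
clip_samples = audio_sample_count(2.5)
print(frame_indices, clip_samples)
```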

Gemini models can produce outputs in both text and images, utilizing discrete image tokens for visual content creation. They can respond to textual prompts with images or augment generated text outputs with appropriate visualizations. The infrastructure supporting Gemini is highly optimized and efficient, particularly in large-scale multimodal data processing using Google’s TPUs, from architecture initialization to fine-tuning. These optimizations reduce resource requirements for pretraining and fine-tuning. In summary, Gemini models represent a significant advancement in AI, particularly in understanding and generating multimodal content. With broad applicability across various use cases requiring the integration of text, audio, and visual data, Gemini models pave the way for innovative solutions in diverse domains.

The Methodology section outlines the approach taken to investigate MLLMs. The study first identifies relevant MLLMs, including those specialized in text generation, image generation, and multimodal tasks, based on their capabilities and contributions to the field. Subsequently, the study delves into the integration of visual models with LLMs to develop MLLMs capable of processing and generating information across different modalities. To evaluate the performance and effectiveness of these MLLMs, qualitative methods are employed. Tasks such as text-to-image generation, image captioning, visual question answering, and text-based image retrieval are undertaken to assess the models’ capabilities comprehensively. Additionally, the study evaluates the generalization capabilities of MLLMs across various domains, assessing their proficiency in handling diverse datasets and tasks. Overall, the methodology encompasses a systematic exploration of MLLMs, aiming to provide insights into their potential applications, strengths, and limitations across a range of multimodal tasks and datasets.

4. Results

The study aims to enhance multimodal technologies to address various challenges related to flood management, water level monitoring, agricultural water discharge, and water pollution management. Efforts in flood management and response focus on leveraging advanced image description techniques to provide accurate and timely information about flood conditions, enabling more effective decision-making and response planning. Similarly, in water level monitoring, multimodal approaches are employed to integrate visual and textual data for the real-time monitoring of water levels in rivers, lakes, and reservoirs, aiding in flood forecasting and early-warning systems.

For agricultural water discharge, research emphasizes the development of multimodal models to monitor and manage the discharge of water in agricultural settings, optimizing irrigation practices and reducing water wastage. Additionally, efforts are directed towards using advanced image description mechanisms to detect and analyze water pollution sources, facilitating prompt intervention and mitigation measures. By integrating visual, textual, and audio data, multimodal technologies offer a comprehensive approach to address the complex challenges in hydrology, ultimately contributing to more efficient flood management, enhanced water level monitoring, sustainable agricultural practices, and effective water pollution management strategies.

In evaluating hydrology-related image samples, recent MLLMs such as Multimodal-GPT, LLaVA, GPT-4 Vision, and Gemini are utilized. Each model is given the same image together with a textual prompt that frames a question or task about the visual content. The generated textual descriptions are then compared across the models for accuracy, relevance, and understanding. This comparison helps assess how well each model interprets hydrology-related images and generates contextually relevant descriptions. Below are examples showing the images and the textual descriptions generated by the MLLMs, providing insights into their respective capabilities and accuracies in interpreting visual content.
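The comparison procedure described above can be sketched as a small harness that sends one image and one prompt to several models and collects the replies side by side. This is only an illustrative sketch, not the authors' tooling: `collect_responses`, `stub_model_a`, `stub_model_b`, and the file name `flood_sample.jpg` are hypothetical, and each stub would need to be replaced with a real client call for the model being tested.

```python
from typing import Callable, Dict

# A "model" here is any callable that takes (image_path, prompt) and returns text.
ModelFn = Callable[[str, str], str]


def collect_responses(image_path: str, prompt: str,
                      models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same image and prompt to every model and gather the replies."""
    responses: Dict[str, str] = {}
    for name, ask in models.items():
        try:
            responses[name] = ask(image_path, prompt)
        except Exception as exc:  # keep going if one backend fails
            responses[name] = f"<error: {exc}>"
    return responses


# Hypothetical stand-ins for real model clients (assumptions for illustration).
def stub_model_a(image_path: str, prompt: str) -> str:
    return "The image shows a flooded street with water reaching car doors."


def stub_model_b(image_path: str, prompt: str) -> str:
    return "A street."


results = collect_responses(
    "flood_sample.jpg",
    "Describe the flood severity in this image.",
    {"model_a": stub_model_a, "model_b": stub_model_b},
)
for name, text in results.items():
    print(f"{name}: {text}")
```

Keeping the image and prompt fixed across models means that any difference in the collected responses can be attributed to the models rather than to the input, which is the premise of the qualitative comparison.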

Tables 2–9 present the questions asked about each input image and the corresponding responses generated by the multimodal LLMs: LLaVA, GPT-4 Vision, Multimodal-GPT, and Gemini. The key findings underscore the capabilities of these models in processing and interpreting complex multimodal data, which can significantly enhance various aspects of hydrological analysis and management. In this qualitative evaluation, GPT-4 Vision outperformed the other models in most cases in terms of accuracy, relevance, and contextual understanding; the exception is the counting task in Table 7, where Gemini recognized the number more accurately. In this context, ‘accuracy’ refers specifically to ‘visual accuracy’, that is, the ability of the model to correctly identify and quantify visual elements within an image, such as the number of people or objects present. GPT-4 Vision’s advanced visual and textual integration capabilities enable it to provide detailed, contextually rich responses. For example, it accurately assessed flood severity, identified drought-stricken crops, and provided detailed descriptions of environmental conditions. LLaVA and Multimodal-GPT also showed promise but occasionally fell short of the level of detail and accuracy provided by GPT-4 Vision. Gemini, while innovative, sometimes generated arbitrary values, indicating the need for further refinement in its response generation mechanisms.
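The notion of visual accuracy for counting questions can be illustrated with a small check that extracts a count from a model's free-text answer and compares it with a manually annotated value. This is a hedged sketch under stated assumptions: the evaluation in this study was qualitative, and the helper names `extract_count` and `count_is_correct`, the number-word table, and the tolerance parameter are hypothetical choices made for illustration only.

```python
import re
from typing import Optional

# Small lookup for spelled-out numbers (assumption: counts above ten use digits).
_NUMBER_WORDS = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
}


def extract_count(response: str) -> Optional[int]:
    """Return the first integer mentioned in a response, as digits or a number word."""
    for token in re.findall(r"[a-z]+|\d+", response.lower()):
        if token.isdigit():
            return int(token)
        if token in _NUMBER_WORDS:
            return _NUMBER_WORDS[token]
    return None


def count_is_correct(response: str, true_count: int, tolerance: int = 0) -> bool:
    """Check whether the extracted count is within tolerance of the annotated count."""
    predicted = extract_count(response)
    return predicted is not None and abs(predicted - true_count) <= tolerance


print(extract_count("There are three people standing on the roof."))  # 3
print(count_is_correct("I can see 5 buildings", 5))                    # True
print(count_is_correct("It is hard to tell.", 2))                      # False
```

A heuristic like this is deliberately simple: it would misread phrases such as "no one" as the number one, so any answer it flags would still need to be checked by a human reader.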

Table 2.

Example of image-based recognition and response generation of MLLMs. GPT-4 Vision accurately identifies the crop as corn and assesses its condition.

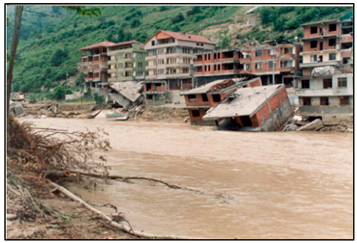

Table 3.

Example of image-based recognition and response generation of MLLMs. GPT-4 Vision accurately identifies the collapsed building resulting from flooding in the image.

Table 4.

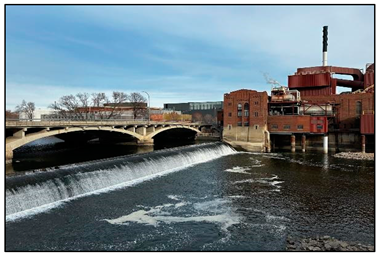

Example of image-based recognition and response generation of MLLMs. While all models generate responses, GPT-4 Vision stands out for its more accurate interpretation of the image, capturing all the details.

Table 5.

Example of image-based recognition and response generation of MLLMs. LLaVA and GPT-4 Vision both produce comprehensive responses, yet GPT-4 Vision’s output proves to be more relevant and exhaustive in addressing the question.

Table 6.

Example of image-based recognition and value estimation using MLLMs. Multimodal-GPT and Gemini provide arbitrary water discharge values in their responses, whereas LLaVA and GPT-4 Vision offer more realistic and contextually appropriate responses.

Table 7.

Example of image-based recognition and analytics using MLLMs. Gemini demonstrates more accurate recognition of the number compared to other Multimodal LLMs.

Table 8.

Example of image-based recognition and response generation of MLLMs. GPT-4 Vision generates a detailed response, including the cause of the dead fish and its impact.

Table 9.

Example of image-based recognition and response generation of MLLMs. GPT-4 Vision generates the most detailed response on the algal bloom compared to the other multimodal LLMs.

5. Discussions

The successful application of MLLMs in this study indicates several promising implications for hydrology. In flood identification and management, these models can analyze patterns in visual images, evaluate the severity, and provide suggestions for flood management. For instance, the models can identify floods, assess their severity, estimate the number of affected people and buildings, and suggest mitigation strategies. They can process images from various sources, including in situ photographs, satellite images, and drones, to provide real-time insights and actionable suggestions for first responders and flood managers. In water quality assessment, MLLMs can analyze images of water bodies to detect visible indicators of contamination, such as algal blooms, industrial runoff, dead fish, and other pollutants that alter water color. This capability enhances real-time water pollution monitoring and response, providing critical information to environmental agencies and public health officials.

For climate change analysis, MLLMs can integrate data from satellite images, climate models, and historical weather records to predict the impacts of climate change on hydrological cycles and water resources. This integrated analysis helps in understanding long-term trends and planning for future water resource management. In water resource management, MLLMs can optimize the use of water resources by predicting rainfall [76], evaporation rates [77], and soil moisture levels [78]. They can suggest optimal irrigation strategies for agriculture, thereby promoting sustainable agricultural practices and efficient water use. Hydropower generation can benefit from MLLMs by analyzing data on water flow, rainfall, and environmental factors to predict hydropower potential in specific areas. This can aid in the planning and optimization of hydropower projects, ensuring efficient energy production.

Moreover, our comparative analysis with existing research highlights the significant advancements made by integrating multimodal capabilities into hydrological modeling. Previous studies, such as those by Herath et al. [2] and Wu et al. [4], primarily focused on single-modality machine learning approaches, which often relied on either textual or numerical data. In contrast, our work demonstrates the superior performance of MLLMs like GPT-4 Vision in processing and analyzing both visual and textual data simultaneously. This allows for a more comprehensive understanding of complex hydrological events, such as flood severity and water quality monitoring, offering a real-time response advantage that previous models could not achieve.

Furthermore, while studies like Sermet and Demir [13] and Slater et al. [6] have explored AI applications in flood management and climate prediction, respectively, our research enhances these approaches by utilizing MLLMs for dynamic, real-time analysis. The ability of models like Multimodal-GPT and LLaVA to integrate diverse data types, including satellite imagery and textual reports, offers unprecedented flexibility and accuracy in hydrological assessments. These advancements pave the way for more effective water resource management and disaster response strategies, marking a significant leap forward from prior methodologies.

Drought prediction is another critical area where MLLMs can be applied. By training on historical weather data and soil moisture levels, these models can forecast potential drought events, allowing for proactive drought mitigation strategies. They can also identify drought conditions in images, providing immediate visual confirmation.

Streamflow forecasting can be enhanced by MLLMs through the analysis of rainfall, snowmelt, and other environmental data to predict river flow rates. This information is valuable for flood control, water supply planning, and navigation. In groundwater management, MLLMs can predict groundwater levels using data from various sources. This helps in the sustainable management of groundwater resources, ensuring their availability for future use. Wetland conservation efforts can be supported by MLLMs through the analysis of satellite imagery, climate data, and human activity. These models can assess the health of wetlands and suggest effective conservation strategies to maintain these crucial ecosystems.

Water demand forecasting can be improved by training MLLMs on population growth, climate data, and water usage patterns to predict future water demand. This information is essential for planning and managing water supply systems to meet future needs. Ecosystem health assessment can benefit from MLLMs by analyzing data on water quality, biodiversity, and other environmental factors. These models can provide insights into the health of aquatic ecosystems and suggest remediation efforts where necessary. MLLMs can also support natural disaster preparedness and response by predicting the impact of disasters using climate models, satellite images, and historical data. Finally, MLLMs can assist with the planning and construction of dams and other hydrological infrastructure, supporting strategic planning and development.

The integration of these findings into hydrological applications, such as flood detection, water quality assessment, and climate impact analysis, reveals the transformative potential of MLLMs in enhancing our ability to manage water-related challenges. By providing accurate, contextually rich insights that inform decision-making in real time, MLLMs are positioned to revolutionize how we approach hydrological research and management. Future research should continue to explore the integration of these models into decision-support systems, addressing challenges such as model limitations, ethical considerations, and environmental impacts to ensure responsible AI deployment in this critical field.

6. Conclusions and Future Work

The potential impact of this study on flood detection and management is significant. By exploring the capabilities of MLLMs in the hydrology domain, this research offers insights into how advanced AI technologies can revolutionize flood monitoring, response systems, and water resource management. The analysis of MLLMs such as Multimodal-GPT, GPT-4 Vision, Gemini, and LLaVA in processing hydrology-specific samples provides a foundation for developing intelligent systems capable of understanding and interpreting complex hydrological data in real time. The findings from our evaluation of Multimodal Large Language Models (MLLMs) in hydrological tasks suggest several actionable strategies that can significantly enhance policy and management practices:

- Flood Risk Management: The advanced predictive capabilities of models like GPT-4 Vision can be integrated into national and regional early warning systems. Policymakers can use these insights to allocate emergency resources more effectively, prioritizing high-risk areas based on real-time data analysis.

- Water Quality Monitoring: MLLMs can be employed to monitor water bodies continuously, detecting pollution levels with high accuracy. Regulatory agencies can leverage these insights to enforce environmental standards more rigorously, responding to contamination events as they occur.

- Sustainable Water Management: The insights from MLLMs can guide sustainable water usage policies by predicting water availability and demand patterns. This can help in formulating regulations that ensure the equitable distribution of water resources, especially in drought-prone regions.

- Data-Driven Decision Making: Governments should consider establishing collaborations between AI researchers and hydrological agencies to develop tailored MLLM applications that support policy formulation. This could involve integrating MLLM outputs into decision-support systems used by policymakers.

Moving forward, future research in hydrology, environmental analysis, and water resource management could focus on several directions to further leverage MLLMs and advance the field. One potential avenue is the development of MLLM-based systems for generating realistic flood scenarios based on textual descriptions. This could enhance predictive capabilities and aid in risk assessment and disaster preparedness. Additionally, research could explore the integration of MLLMs into decision-support systems for improved water resource management, incorporating both textual and visual data to provide comprehensive insights. Furthermore, efforts could be directed towards addressing challenges such as model limitations, ethical considerations, and the environmental impact of MLLMs. Research on mitigating biases, improving interpretability, and enhancing robustness against adversarial attacks would be valuable for ensuring the responsible deployment of AI technologies in hydrology [79]. Additionally, investigating methods to optimize computational resources and reduce carbon footprints associated with MLLMs would be crucial for sustainable development.

The study sets the stage for the integration of MLLMs into flood detection and management systems, offering a glimpse into the transformative potential of AI in addressing complex hydrological challenges [80]. By exploring future research directions and addressing key concerns, researchers can continue to advance the field and develop innovative solutions for sustainable water management in the face of climate change and increasing environmental pressures.

Author Contributions

Conceptualization: L.A.K., Y.S. and I.D. Methodology: L.A.K., Y.S., D.J.S. and O.M. Data visualization: L.A.K. Investigation: L.A.K., Y.S. and D.J.S. Resources: I.D. Supervision: I.D. Validation: L.A.K., Y.S. and O.M. Writing—original draft: L.A.K. and D.J.S. Writing—review and editing: Y.S., I.D. and O.M. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the National Oceanic and Atmospheric Administration (NOAA) via a cooperative agreement with the University of Alabama (NA22NWS4320003) awarded to the Cooperative Institute for Research to Operations in Hydrology (CIROH).

Data Availability Statement

The study used readily available models and tested them on the images provided in the manuscript. No additional data or code repositories are required for this research. All necessary information to reproduce the findings is contained within the manuscript itself.

Acknowledgments

During the preparation of this work, the authors used ChatGPT to improve the flow of the text, correct any potential grammatical errors, and improve the writing. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pursnani, V.; Sermet, Y.; Kurt, M.; Demir, I. Performance of ChatGPT on the US fundamentals of engineering exam: Comprehensive assessment of proficiency and potential implications for professional environmental engineering practice. Comput. Educ. Artif. Intell. 2023, 5, 100183.

- Herath, H.M.V.V.; Chadalawada, J.; Babovic, V. Hydrologically informed machine learning for rainfall–runoff modelling: Towards distributed modelling. Hydrol. Earth Syst. Sci. 2021, 25, 4373–4394.

- Boota, M.W.; Zwain, H.M.; Shi, X.; Guo, J.; Li, Y.; Tayyab, M.; Yu, J. How effective is twitter (X) social media data for urban flood management? J. Hydrol. 2024, 634, 131129.

- Wu, X.; Zhang, Q.; Wen, F.; Qi, Y. A Water Quality Prediction Model Based on Multi-Task Deep Learning: A Case Study of the Yellow River, China. Water 2022, 14, 3408.

- Filali Boubrahimi, S.; Neema, A.; Nassar, A.; Hosseinzadeh, P.; Hamdi, S.M. Spatiotemporal data augmentation of MODIS-Landsat water bodies using adversarial networks. Water Resour. Res. 2024, 60, e2023WR036342.

- Slater, L.J.; Arnal, L.; Boucher, M.A.; Chang, A.Y.Y.; Moulds, S.; Murphy, C.; Zappa, M. Hybrid forecasting: Blending climate predictions with AI models. Hydrol. Earth Syst. Sci. 2023, 27, 1865–1889.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Zoph, B. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Zhang, W.; Elhoseiny, M. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. arXiv 2023, arXiv:2304.08485.

- Qi, D.; Su, L.; Song, J.; Cui, E.; Bharti, T.; Sacheti, A. ImageBERT: Cross-modal Pre-training with Large-scale Weak-supervised Image-Text Data. arXiv 2020, arXiv:2001.07966.

- Huang, K.; Altosaar, J.; Ranganath, R. ClinicalBERT: Modeling Clinical Notes and Predicting Hospital Readmission. arXiv 2019, arXiv:1904.05342.

- Kamyab, H.; Khademi, T.; Chelliapan, S.; SaberiKamarposhti, M.; Rezania, S.; Yusuf, M.; Ahn, Y. The latest innovative avenues for the utilization of artificial Intelligence and big data analytics in water resource management. Results Eng. 2023, 20, 101566.

- García, J.; Leiva-Araos, A.; Diaz-Saavedra, E.; Moraga, P.; Pinto, H.; Yepes, V. Relevance of Machine Learning Techniques in Water Infrastructure Integrity and Quality: A Review Powered by Natural Language Processing. Appl. Sci. 2023, 13, 12497.

- Demir, I.; Xiang, Z.; Demiray, B.Z.; Sit, M. WaterBench-Iowa: A large-scale benchmark dataset for data-driven streamflow forecasting. Earth Syst. Sci. Data 2022, 14, 5605–5616.

- Sermet, Y.; Demir, I. A semantic web framework for automated smart assistants: A case study for public health. Big Data Cogn. Comput. 2021, 5, 57.

- Sermet, Y.; Demir, I. An intelligent system on knowledge generation and communication about flooding. Environ. Model. Softw. 2018, 108, 51–60.

- Samuel, D.J.; Sermet, M.Y.; Mount, J.; Vald, G.; Cwiertny, D.; Demir, I. Application of Large Language Models in Developing Conversational Agents for Water Quality Education, Communication and Operations. EarthArXiv 2024, 7056.

- Embedded LLM. Real-Time Flood Detection: Achieving Supply Chain Resilience through Large Language Model and Image Analysis. Available online: https://www.linkedin.com/posts/embedded-llm_real-time-flood-detection-achieving-supply-activity-7121080789819129856-957y (accessed on 23 October 2023).

- Li, C.; Gan, Z.; Yang, Z.; Yang, J.; Li, L.; Wang, L.; Gao, J. Multimodal foundation models: From specialists to general-purpose assistants. arXiv 2023, arXiv:2309.10020.

- Samuel, D.J.; Sermet, Y.; Cwiertny, D.; Demir, I. Integrating vision-based AI and large language models for real-time water pollution surveillance. Water Environ. Res. 2024, 96, e11092.

- Alabbad, Y.; Mount, J.; Campbell, A.M.; Demir, I. A web-based decision support framework for optimizing road network accessibility and emergency facility allocation during flooding. Urban Inform. 2024, 3, 10.

- Li, Z.; Demir, I. Better localized predictions with Out-of-Scope information and Explainable AI: One-Shot SAR backscatter nowcast framework with data from neighboring region. ISPRS J. Photogramm. Remote Sens. 2024, 207, 92–103.

- OpenAI. Introducing ChatGPT. 2022. Available online: https://openai.com/index/chatgpt/ (accessed on 30 November 2022).

- Fan, W.C.; Chen, Y.C.; Chen, D.; Cheng, Y.; Yuan, L.; Wang, Y.C.F. FRIDO: Feature pyramid diffusion for complex scene image synthesis. arXiv 2022, arXiv:2208.13753.

- Chiang, W.L.; Li, Z.; Lin, Z.; Sheng, Y.; Wu, Z.; Zhang, H.; Zheng, L.; Zhuang, S.; Zhuang, Y.; Gonzalez, J.E.; et al. Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90% ChatGPT Quality. Available online: https://vicuna.lmsys.org (accessed on 14 April 2023).

- Taori, R.; Gulrajani, I.; Zhang, T.; Dubois, Y.; Li, X.; Guestrin, C.; Liang, P.; Hashimoto, T.B. Stanford Alpaca: An Instruction-Following Llama Model. Available online: https://github.com/tatsu-lab/stanford_alpaca (accessed on 29 May 2023).

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Lample, G. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Alayrac, J.B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Simonyan, K. Flamingo: A visual language model for few-shot learning. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–9 December 2022.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022.

- Huang, S.; Dong, L.; Wang, W.; Hao, Y.; Singhal, S.; Ma, S.; Lv, T.; Wei, F. Language is not all you need: Aligning perception with language models. arXiv 2023, arXiv:2302.14045.

- Driess, D.; Xia, F.; Sajjadi, M.S.M.; Lynch, C.; Chowdhery, A.; Ichter, B.; Wahid, A.; Florence, P. PaLM-E: An embodied multimodal language model. arXiv 2023, arXiv:2303.03378.

- Lyu, C.; Wu, M.; Wang, L.; Huang, X.; Liu, B.; Du, Z.; Shi, S.; Tu, Z. Macaw-LLM: Multi-Modal Language Modeling with Image, Audio, Video, and Text Integration. arXiv 2023, arXiv:2306.09093.

- Midjourney. Available online: https://www.midjourney.com/home?callbackUrl=%2Fexplore (accessed on 24 January 2024).

- Parisi, A.; Zhao, Y.; Fiedel, N. TALM: Tool augmented language models. arXiv 2022, arXiv:2205.12255.

- Gao, L.; Madaan, A.; Zhou, S.; Alon, U.; Liu, P.; Yang, Y.; Callan, J.; Neubig, G. PAL: Program-aided language models. arXiv 2022, arXiv:2211.10435.

- Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Raileanu, R.; Lomeli, M.; Zettlemoyer, L.; Cancedda, N.; Scialom, T. Toolformer: Language models can teach themselves to use tools. arXiv 2023, arXiv:2302.04761.

- Wu, C.; Yin, S.; Qi, W.; Wang, X.; Tang, Z.; Duan, N. Visual ChatGPT: Talking, drawing and editing with visual foundation models. arXiv 2023, arXiv:2303.04671.

- You, H.; Sun, R.; Wang, Z.; Chen, L.; Wang, G.; Ayyubi, H.A.; Chang, K.W.; Chang, S.F. IdealGPT: Iteratively decomposing vision and language reasoning via large language models. arXiv 2023, arXiv:2305.14985.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Zhang, W.; Elhoseiny, M. ChatGPT asks, BLIP-2 answers: Automatic questioning towards enriched visual descriptions. arXiv 2023, arXiv:2303.06594.

- Wang, T.; Zhang, J.; Fei, J.; Ge, Y.; Zheng, H.; Tang, Y.; Li, Z.; Gao, M.; Zhao, S. Caption anything: Interactive image description with diverse multimodal controls. arXiv 2023, arXiv:2305.02677.

- Zhang, R.; Hu, X.; Li, B.; Huang, S.; Deng, H.; Qiao, Y.; Gao, P.; Li, H. Prompt, generate, then cache: Cascade of foundation models makes strong few-shot learners. In Proceedings of the CVPR, Vancouver, BC, Canada, 17–24 June 2023.

- Zhu, X.; Zhang, R.; He, B.; Zeng, Z.; Zhang, S.; Gao, P. PointCLIP v2: Adapting CLIP for powerful 3D open-world learning. arXiv 2022, arXiv:2211.11682.

- Yu, Z.; Yu, J.; Cui, Y.; Tao, D.; Tian, Q. Deep modular co-attention networks for visual question answering. In Proceedings of the CVPR, Long Beach, CA, USA, 15–20 June 2019.

- Gao, P.; Jiang, Z.; You, H.; Lu, P.; Hoi, S.C.; Wang, X.; Li, H. Dynamic fusion with intra- and inter-modality attention flow for visual question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6639–6648.

- Zhang, H.; Li, X.; Bing, L. Video-LLaMA: An instruction-tuned audio-visual language model for video understanding. arXiv 2023, arXiv:2306.02858.

- Su, Y.; Lan, T.; Li, H.; Xu, J.; Wang, Y.; Cai, D. PandaGPT: One model to instruction-follow them all. arXiv 2023, arXiv:2305.16355.

- Zhang, D.; Li, S.; Zhang, X.; Zhan, J.; Wang, P.; Zhou, Y.; Qiu, X. SpeechGPT: Empowering large language models with intrinsic cross-modal conversational abilities. arXiv 2023, arXiv:2305.11000.

- Tang, Z.; Yang, Z.; Zhu, C.; Zeng, M.; Bansal, M. Any-to-any generation via composable diffusion. arXiv 2023, arXiv:2305.11846.

- Shen, Y.; Song, K.; Tan, X.; Li, D.; Lu, W.; Zhuang, Y. HuggingGPT: Solving AI tasks with ChatGPT and its friends in HuggingFace. arXiv 2023, arXiv:2303.17580.

- Davis, E.; Marcus, G. Commonsense reasoning and commonsense knowledge in artificial intelligence. Commun. ACM 2015, 58, 92–103.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.H.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic chain of thought prompting in large language models. arXiv 2022, arXiv:2210.03493.

- Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 2022, 35, 22199–22213.

- Zelikman, E.; Wu, Y.; Mu, J.; Goodman, N. STaR: Bootstrapping reasoning with reasoning. Adv. Neural Inf. Process. Syst. 2022, 35, 15476–15488.

- Zhang, Z.; Zhang, A.; Li, M.; Zhao, H.; Karypis, G.; Smola, A. Multimodal chain-of-thought reasoning in language models. arXiv 2023, arXiv:2302.00923.

- Gong, T.; Lyu, C.; Zhang, S.; Wang, Y.; Zheng, M.; Zhao, Q.; Liu, K.; Zhang, W.; Luo, P.; Chen, K. Multimodal-GPT: A vision and language model for dialogue with humans. arXiv 2023, arXiv:2305.04790.

- GPT-4V(ision) System Card. 2023. Available online: https://cdn.openai.com/papers/GPTV_System_Card.pdf (accessed on 25 September 2023).

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-up and top-down attention for image captioning and visual question answering. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 8–14 December 2019.

- Li, L.H.; Yatskar, M.; Yin, D.; Hsieh, C.J.; Chang, K.W. VisualBERT: A simple and performant baseline for vision and language. arXiv 2019, arXiv:1908.03557. [Google Scholar]

- Alberti, C.; Ling, J.; Collins, M.; Reitter, D. Fusion of detected objects in text for visual question answering. In Proceedings of the EMNLP, Hong Kong, China, 3–7 November 2019. [Google Scholar]

- Li, G.; Duan, N.; Fang, Y.; Gong, M.; Jiang, D.; Zhou, M. Unicoder-VL: A universal encoder for vision and language by cross-modal pre-training. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Tan, H.; Bansal, M. LXMERT: Learning cross-modality encoder representations from transformers. In Proceedings of the EMNLP, Hong Kong, China, 3–7 November 2019. [Google Scholar]

- Su, W.; Zhu, X.; Cao, Y.; Li, B.; Lu, L.; Wei, F.; Dai, J. VL-BERT: Pre-training of generic visual-linguistic representations. In Proceedings of the ICLR, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Zhou, L.; Palangi, H.; Zhang, L.; Hu, H.; Corso, J.J.; Gao, J. Unified vision-language pre-training for image captioning and VQA. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Chen, Y.C.; Li, L.; Yu, L.; Kholy, A.E.; Ahmed, F.; Gan, Z.; Cheng, Y.; Liu, J. UNITER: Learning universal image-text representations. In Proceedings of the ECCV, Tel Aviv, Israel, 23–28 August 2020. [Google Scholar]

- Li, Z.; Xiang, Z.; Demiray, B.Z.; Sit, M.; Demir, I. MA-SARNet: A one-shot nowcasting framework for SAR image prediction with physical driving forces. J. Photogramm. Remote Sens. 2023, 205, 176–190. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the CVPR, Miami, FL, USA, 20–25 June 2009. [Google Scholar]

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Chen, X.; Fang, H.; Lin, T.Y.; Vedantam, R.; Gupta, S.; Dollár, P.; Zitnick, C.L. Microsoft COCO captions: Data collection and evaluation server. arXiv 2015, arXiv:1504.00325. [Google Scholar]

- Sajja, R.; Erazo, C.; Li, Z.; Demiray, B.Z.; Sermet, Y.; Demir, I. Integrating Generative AI in Hackathons: Opportunities, Challenges, and Educational Implications. arXiv 2024, arXiv:2401.17434. [Google Scholar]

- Arman, H.; Yuksel, I.; Saltabas, L.; Goktepe, F.; Sandalci, M. Overview of flooding damages and its destructions: A case study of Zonguldak-Bartin basin in Turkey. Nat. Sci. 2010, 2, 409.

- Franch, G.; Tomasi, E.; Wanjari, R.; Poli, V.; Cardinali, C.; Alberoni, P.P.; Cristoforetti, M. GPTCast: A weather language model for precipitation nowcasting. arXiv 2024, arXiv:2407.02089.

- Biswas, S. Importance of chat GPT in Agriculture: According to Chat GPT. Available online: https://ssrn.com/abstract=4405391 (accessed on 30 March 2023).

- Cahyana, D.; Hadiarto, A.; Hati, D.P.; Pratamaningsih, M.M.; Karolinoerita, V.; Mulyani, A.; Suriadikusumah, A. Application of ChatGPT in soil science research and the perceptions of soil scientists in Indonesia. Artif. Intell. Geosci. 2024, 5, 100078.

- Sajja, R.; Sermet, Y.; Cwiertny, D.; Demir, I. Platform-independent and curriculum-oriented intelligent assistant for higher education. Int. J. Educ. Technol. High. Educ. 2023, 20, 42.

- Cappato, A.; Baker, E.A.; Reali, A.; Todeschini, S.; Manenti, S. The role of modeling scheme and model input factors uncertainty in the analysis and mitigation of backwater induced urban flood-risk. J. Hydrol. 2022, 614, 128545.

- Li, B.; Zhang, Y.; Guo, D.; Zhang, R.; Li, F.; Zhang, H.; Zhang, K.; Li, Y.; Liu, Z.; Li, C. LLaVA-OneVision: Easy Visual Task Transfer. arXiv 2024, arXiv:2408.03326.

- Wang, J.; Jiang, H.; Liu, Y.; Ma, C.; Zhang, X.; Pan, Y.; Liu, M.; Gu, P.; Xia, S.; Li, W.; et al. A Comprehensive Review of Multimodal Large Language Models: Performance and Challenges Across Different Tasks. arXiv 2024, arXiv:2408.01319.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).