Comparison of Tree-Based Ensemble Algorithms for Merging Satellite and Earth-Observed Precipitation Data at the Daily Time Scale

Abstract

1. Introduction

2. Methods

2.1. Linear Regression

2.2. Random Forests

2.3. Gradient Boosting Machines

2.4. Extreme Gradient Boosting

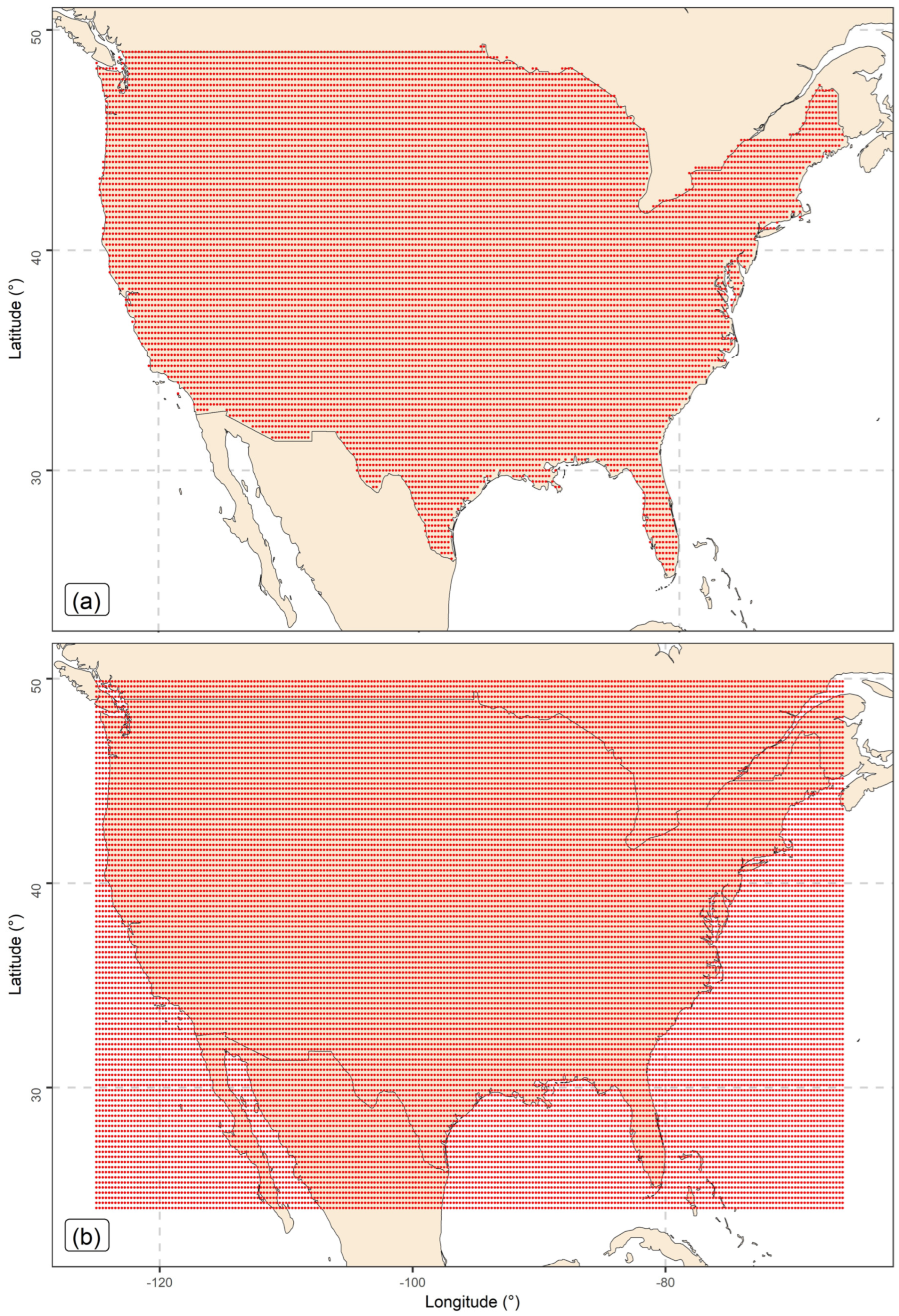

3. Data and Application

3.1. Data

3.1.1. Earth-Observed Precipitation Data

3.1.2. Satellite Precipitation Data

3.1.3. Elevation Data

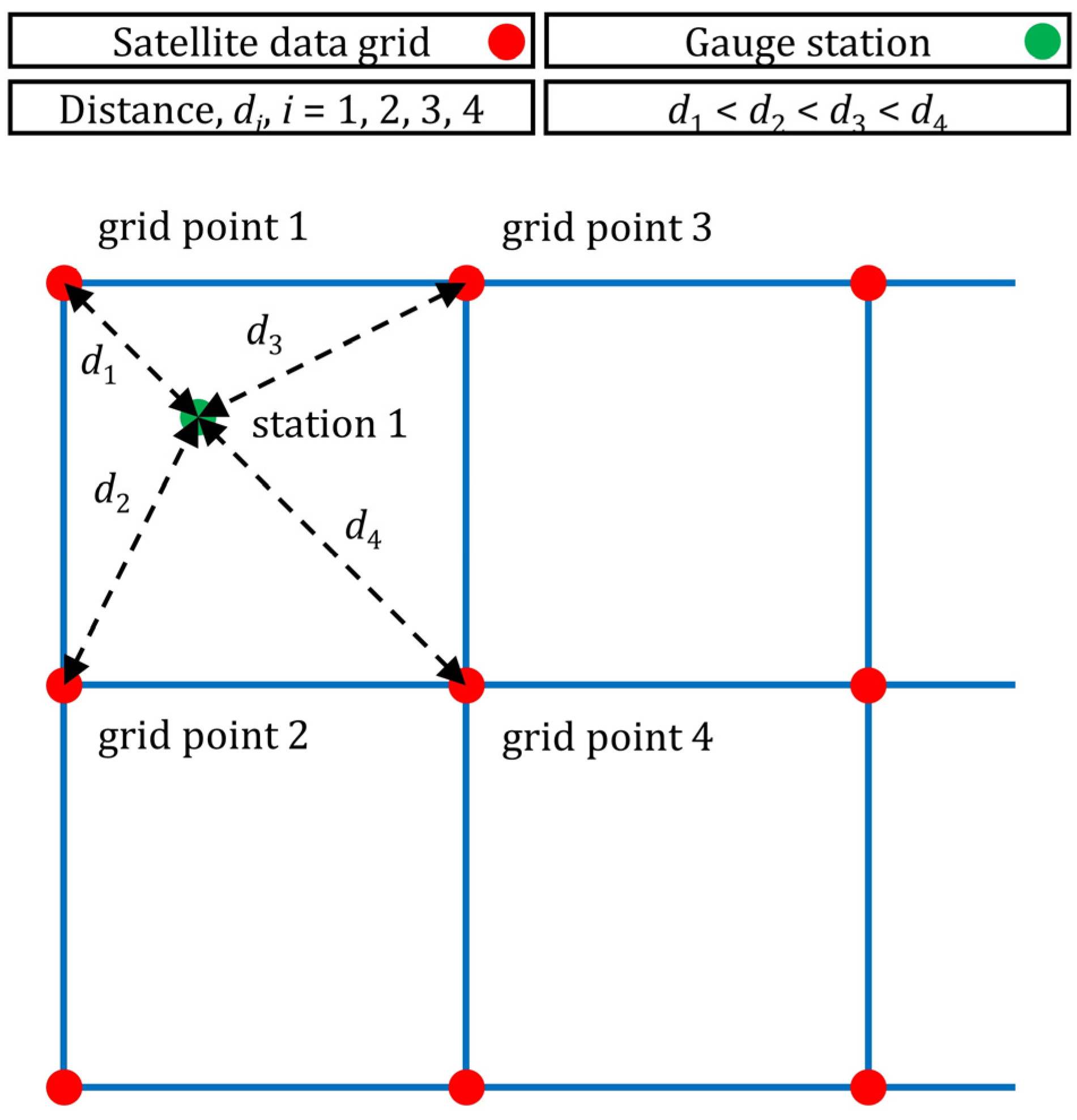

3.2. Validation Setting and Predictor Variables

3.3. Performance Metrics and Assessment

4. Results

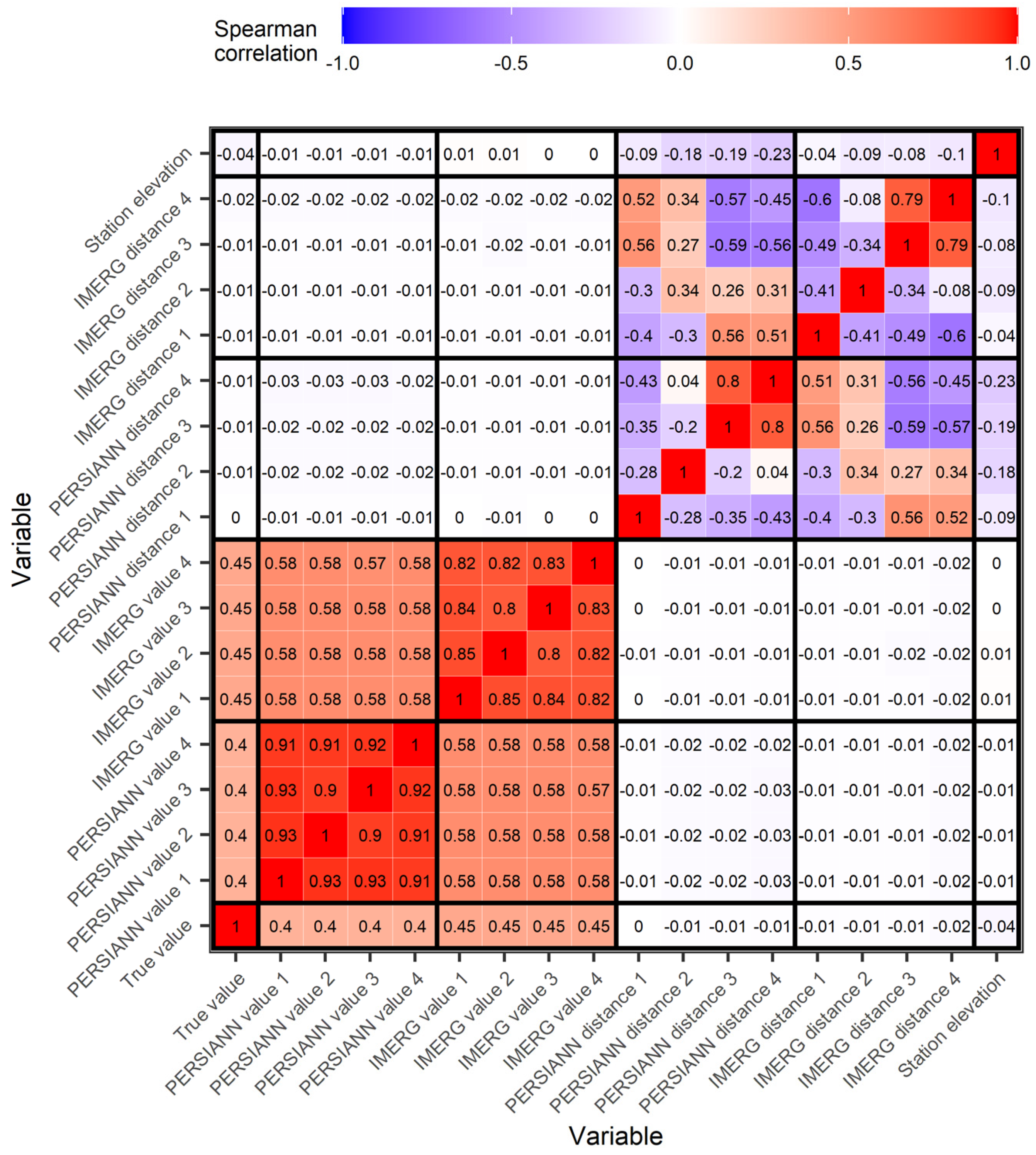

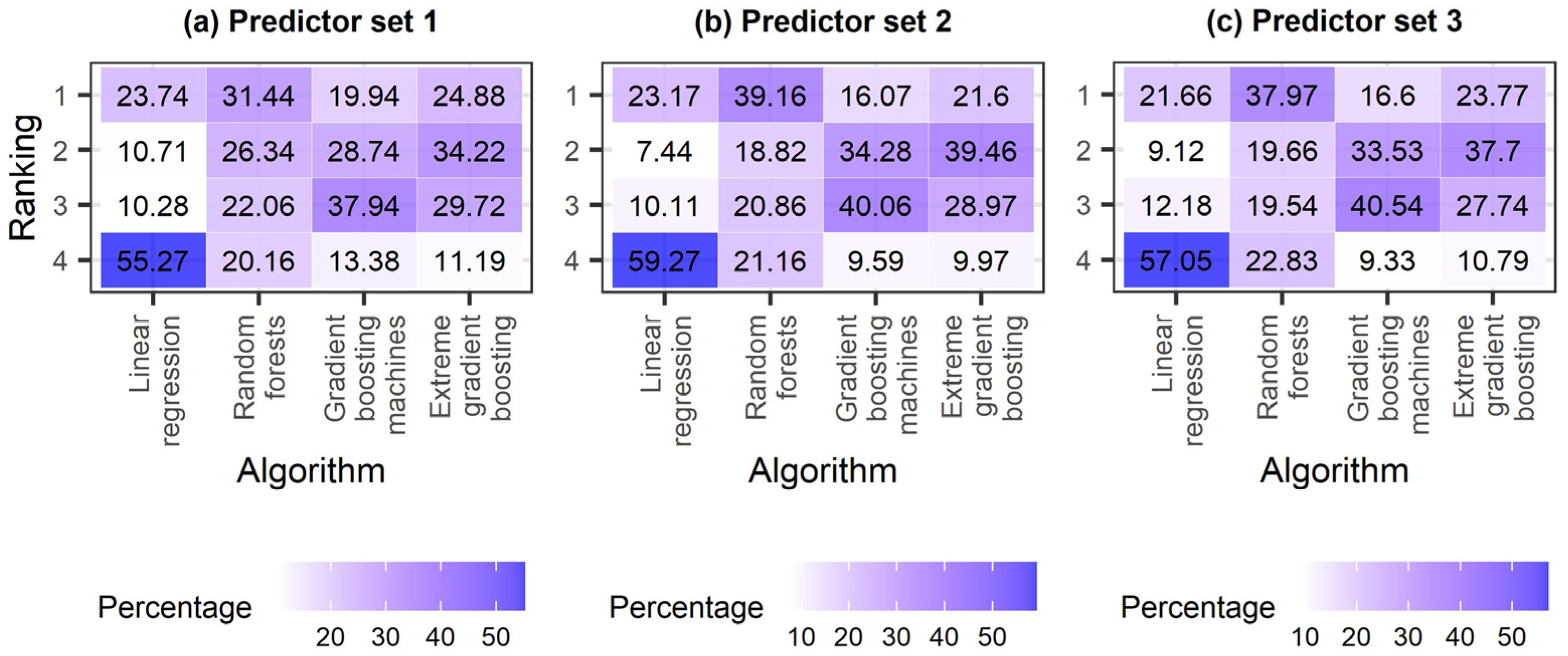

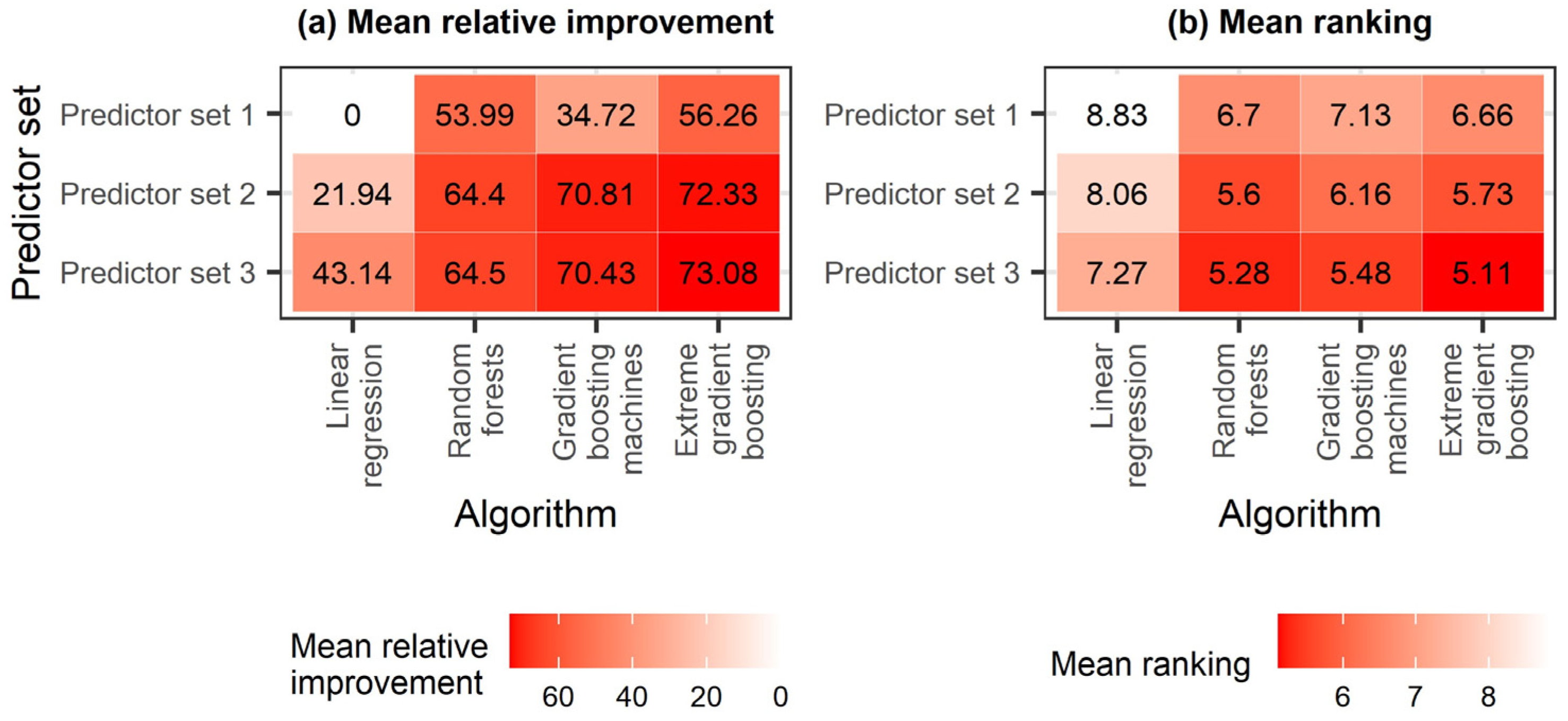

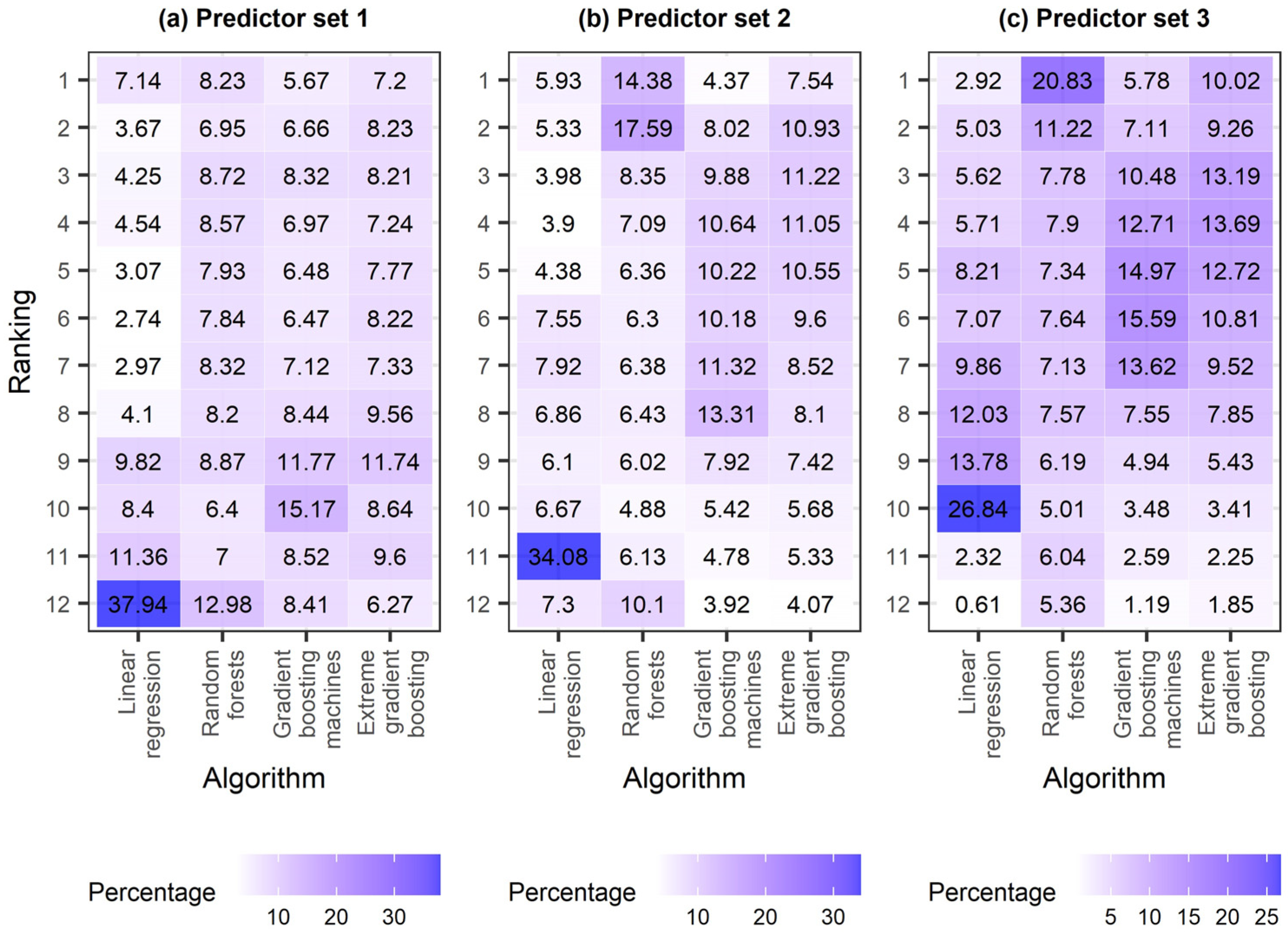

4.1. Regression Setting Exploration

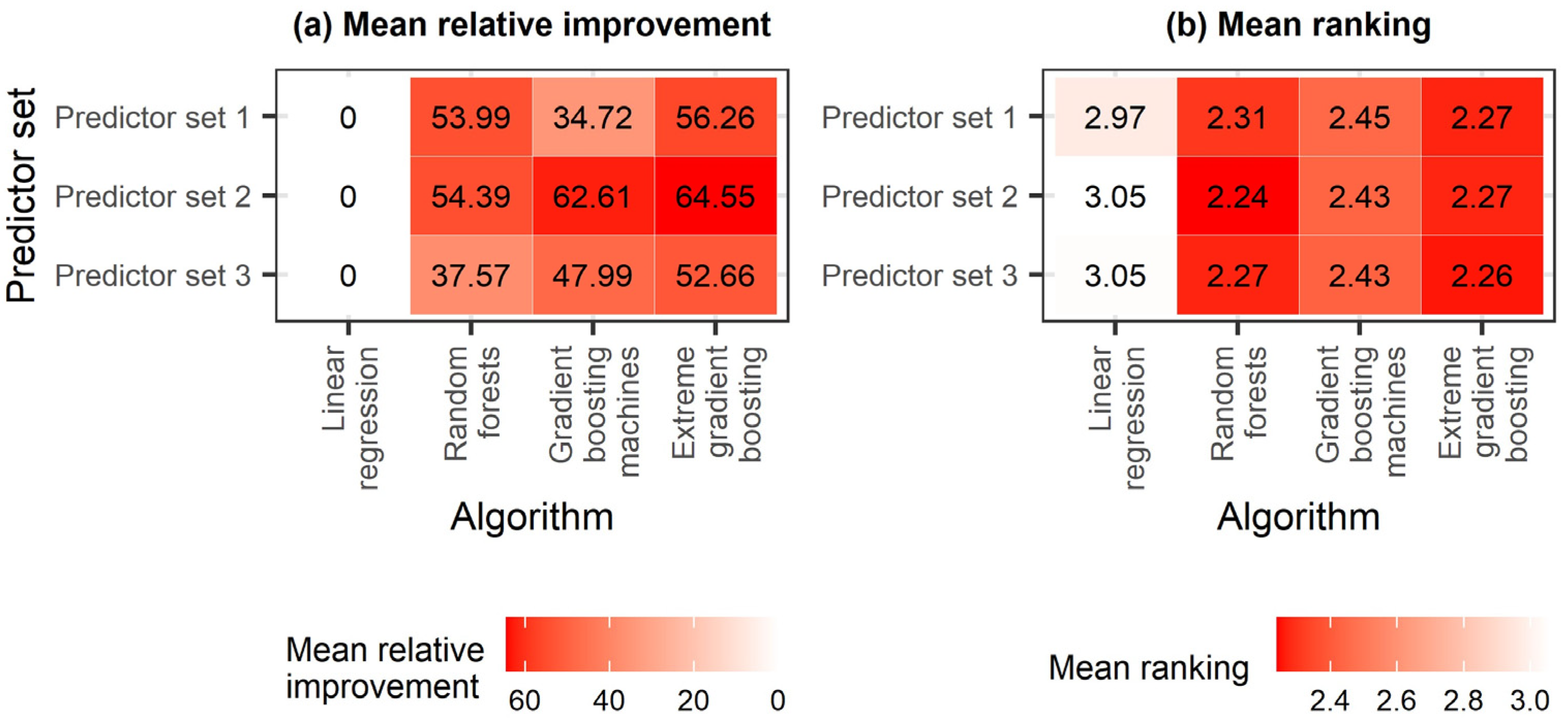

4.2. Algorithm Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Predictor Variable | Predictor Set 1 | Predictor Set 2 | Predictor Set 3 |
|---|---|---|---|
| PERSIANN value 1 | ✔ | × | ✔ |
| PERSIANN value 2 | ✔ | × | ✔ |
| PERSIANN value 3 | ✔ | × | ✔ |
| PERSIANN value 4 | ✔ | × | ✔ |
| IMERG value 1 | × | ✔ | ✔ |
| IMERG value 2 | × | ✔ | ✔ |
| IMERG value 3 | × | ✔ | ✔ |
| IMERG value 4 | × | ✔ | ✔ |
| PERSIANN distance 1 | ✔ | × | ✔ |
| PERSIANN distance 2 | ✔ | × | ✔ |
| PERSIANN distance 3 | ✔ | × | ✔ |
| PERSIANN distance 4 | ✔ | × | ✔ |
| IMERG distance 1 | × | ✔ | ✔ |
| IMERG distance 2 | × | ✔ | ✔ |
| IMERG distance 3 | × | ✔ | ✔ |
| IMERG distance 4 | × | ✔ | ✔ |
| Station elevation | ✔ | ✔ | ✔ |
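
The predictor sets in the table above can be written down as a small lookup structure. The following is a minimal sketch in Python, not the authors' implementation. The constant and function names (`N_PER_PRODUCT`, `product_columns`, `PREDICTOR_SETS`, `select_predictors`) are hypothetical, the column names simply reuse the row labels of the table, and the order of predictors within each set is arbitrary.

```python
# Illustrative sketch only: names are assumptions, labels come from the table.
N_PER_PRODUCT = 4  # the table lists values and distances numbered 1 to 4
ELEVATION = "Station elevation"


def product_columns(product):
    """Return the value and distance predictor labels for one satellite product."""
    values = [f"{product} value {i}" for i in range(1, N_PER_PRODUCT + 1)]
    distances = [f"{product} distance {i}" for i in range(1, N_PER_PRODUCT + 1)]
    return values + distances


# Set 1 uses PERSIANN only, Set 2 uses IMERG only, Set 3 uses both.
# Station elevation is part of every set.
PREDICTOR_SETS = {
    "Predictor Set 1": product_columns("PERSIANN") + [ELEVATION],
    "Predictor Set 2": product_columns("IMERG") + [ELEVATION],
    "Predictor Set 3": (
        product_columns("PERSIANN") + product_columns("IMERG") + [ELEVATION]
    ),
}


def select_predictors(row, set_name):
    """Keep only the predictors of one set from a mapping of label to value."""
    return {name: row[name] for name in PREDICTOR_SETS[set_name]}
```

With this layout, Predictor Sets 1 and 2 each contain 9 predictors and Predictor Set 3 contains 17, which matches the check marks in the table.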

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Papacharalampous, G.; Tyralis, H.; Doulamis, A.; Doulamis, N. Comparison of Tree-Based Ensemble Algorithms for Merging Satellite and Earth-Observed Precipitation Data at the Daily Time Scale. Hydrology 2023, 10, 50. https://doi.org/10.3390/hydrology10020050
