Abstract

The growing need for rapid screening of adsorption energies in organic materials has driven substantial progress in developing various architectures of equivariant graph neural networks (eGNNs). This advancement has largely been enabled by the availability of extensive Density Functional Theory (DFT)-generated datasets that are large enough to train complex eGNN models effectively. However, certain material groups with significant industrial relevance, such as aromatic compounds, remain underrepresented in these large datasets. In this work, we aim to bridge the gap between limited, domain-specific DFT datasets and large-scale pretrained eGNNs. Our methodology involves creating a specialized dataset by segregating aromatic compounds after a targeted ensemble extraction process, then fine-tuning a pretrained model via approaches that include full retraining and systematically freezing specific network sections. We demonstrate that these approaches can yield accurate energy and force predictions with minimal domain-specific training data and computation. Additionally, we investigate the effects of augmenting training datasets with chemically related but out-of-domain groups. Our findings indicate that incorporating supplementary data that closely resembles the target domain, even if only approximately, can enhance model performance on domain-specific tasks. Furthermore, by systematically freezing different sections of the pretrained model, we elucidate the role each component plays during adaptation to new domains, revealing that relearning low-level representations is critical for effective domain transfer. Overall, this study offers insights and practical guidelines for efficiently adapting deep learning models to accurate adsorption energy prediction while significantly reducing reliance on extensive training datasets.

1. Introduction

The depletion of lighter conventional crude oils has led to the increased use of heavy, extra-heavy, and other unconventional crudes as the primary feedstock for refining [1]. This shift has brought asphaltenes, the heaviest and most surface-reactive non-volatile petroleum fraction, into the research spotlight. Asphaltenes present significant challenges in oil pipelines and processing equipment due to their propensity to aggregate and adhere to surfaces, leading to fouling and flow disruptions [2]. Developing effective inhibitors for asphaltene aggregation relies on precise modeling of adsorption interactions [3], for which accurate predictions of the adsorption energies of heavy aromatic compounds are critical.

Beyond pipelines, in catalytic refining and petrochemical processes, large aromatic molecules often act as precursors to coke. Polyaromatic hydrocarbons (PAHs) can adsorb on catalyst surfaces and polymerize into carbonaceous residues that block active sites and pores, causing catalyst deactivation [4]. For instance, in zeolite-catalyzed reactions, heavy PAHs formed during the process may become trapped in pore channels and evolve into coke, progressively shutting down catalytic activity. Understanding the adsorption energies of such aromatic species on catalyst materials helps in predicting coke formation tendencies and designing more coke-resistant catalysts. While traditional descriptors, such as M-OH bond strength or the heat of metal oxide formation, provide useful insights, they primarily reflect bulk properties rather than the surface phenomena where catalytic reactions occur [5]. Adsorption energy, which directly probes surface characteristics, has therefore emerged as a more precise descriptor linking structural properties to catalytic performance [6].

Moreover, aromatic carbon surfaces are also of interest in clean energy applications like hydrogen storage. Graphitic and porous carbon materials (rich in aromatic rings) can adsorb H2 via physisorption, and large PAH molecules serve as useful models for these adsorption sites. Improvements to hydrogen uptake are often sought by chemical modifications; for example, decorating aromatic structures with alkali metals (e.g., doping C60 fullerenes or coronene molecules with Na/K) markedly enhances H2 adsorption capacity [7]. Predicting how H2 interacts energetically with large aromatic frameworks is vital for screening new storage materials. If the adsorption energy is too weak, hydrogen will not be stored; if too strong, it will not release easily. Reliable predictive models of adsorption energy allow us to balance these factors and design optimized carbon-based adsorbents for hydrogen fuel systems [7]. This has industrial relevance for developing efficient on-board hydrogen tanks and storage systems.

Calculating adsorption energies traditionally relies on Density Functional Theory (DFT), which provides high accuracy but is computationally expensive, scaling poorly with system size. DFT has been successfully applied to small adsorbates (e.g., C1–C6 molecules), but for molecules the size of asphaltenes or bulky PAHs, direct DFT simulations are extremely demanding [8]. Large organics often have many atoms, flexible conformations, and possibly disordered structures, all of which lead to a huge configuration space that is expensive to sample with DFT. Moreover, achieving high accuracy requires including dispersion corrections or specialized functionals, further increasing the cost. Modeling such systems has long been a challenge because standard DFT without dispersion corrections fails to capture the London dispersion energy [9]. These limitations motivate the use of predictive modeling and machine learning (ML) as an alternative or complementary approach.

Data-driven models, once trained, can predict adsorption energies in milliseconds, effectively bypassing the need for repeated heavy DFT calculations. For example, a graph neural network (GNN) model named GAME-Net presented by Pablo-Garcia et al. [8] was able to reproduce DFT adsorption energies of organic molecules on metal surfaces with a mean error on the order of 0.1–0.2 eV, while being about a million times faster than DFT. Another benefit of ML models, especially GNNs, is their ability to capture the underlying patterns of molecular interactions from data [10]. A GNN treats the adsorbate (and sometimes the surface) as a graph of atoms and bonds, naturally encoding the connectivity and composition of systems [8,11,12,13]. Through training on many examples, the model can learn the contributions of different substructures (aromatic rings, functional groups, etc.) to the adsorption energy. In the context of complex aromatics, a GNN might learn, for instance, how an extra aromatic ring generally increases dispersion attraction, or how a sulfur heteroatom can strengthen binding via specific interactions. Such insights are implicitly gained from the data, allowing the model to generalize to new molecules [14,15]. Importantly, modern GNN frameworks can incorporate geometry and physics; equivariant GNNs (eGNNs) include the 3D spatial arrangement of atoms and respect rotational symmetries [11,16], which is crucial for adsorption problems [17] (more details in Section 2.2). By leveraging these advanced architectures, predictive models can account for both the chemical structure and the configuration of the adsorbate–surface system.

The Open Catalyst 2020 (OC20) Dataset and Community Challenges [18] have released model checkpoints for various architectures and sizes, each trained on different splits of the dataset [19]. One remaining challenge is how to leverage these pretrained models for new domains that differ from the original training distribution. In particular, large and complex adsorbates (especially polyaromatic species such as asphaltenes) remain underrepresented in public benchmarks. For example, OC20’s 82 adsorbate molecules consist mostly of small fragments relevant to renewable energy (e.g., CO, CO2, CH3OH), with no polycyclic aromatics. Similarly, the OpenDAC dataset [20] (nearly 40 million DFT calculations) focuses on CO2 adsorption in 8400 metal–organic frameworks and does not include any large organic molecules. Large aromatics, which can contain dozens of carbon atoms in fused rings (often with heteroatoms like S or N), are essentially absent from these datasets. This represents a significant gap, because models trained on small adsorbates may not generalize reliably to much larger aromatic systems. In general, current eGNNs lack explicit mechanisms to represent phenomena specific to large aromatic systems (e.g., extensive π-conjugation or enhanced dispersion interactions) beyond what can be learned from smaller substructures. Without additional training, a model might not fully capture how an asphaltene’s size and ring connectivity influence its adsorption geometry and energy.

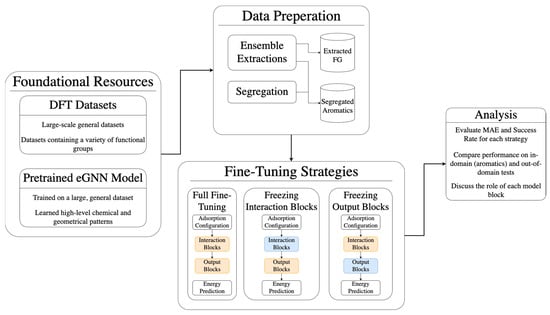

To bridge the gap toward predicting the energies of large aromatic adsorbates, this work explores transfer learning and fine-tuning strategies with limited DFT data. More specifically, we adapt a pretrained eGNN by further training it on datasets containing larger, more complex molecules. Fine-tuning refers to the process of taking a pretrained machine learning model and training it further on a new, often smaller dataset, typically adjusting its weights only slightly [21]. Because the pretrained model has already learned general features from a large dataset, fine-tuning adapts it to a specific, related task rather than training from scratch [21]; prior knowledge is reused and training is accelerated. A comprehensive review of fine-tuning strategies for eGNNs appears in Appendix A.1. By combining a pretrained model with data spanning diverse functional groups, we aim to deliver high-fidelity energy predictions for complex aromatic adsorbates, with implications for both reducing refinery fouling and optimizing hydrogen storage materials. In summary, our work makes the following specific contributions, which are visualized in Figure 1:

Figure 1.

A visual summary of the research workflow.

- Preparing specialized datasets by performing an ensemble extraction on existing data and segregating aromatic molecules (Section 3.1);

- Implementing and comparing fine-tuning strategies, including full model retraining and partial updates where key model components are systematically frozen (Section 3.2.2);

- Analyzing the role of model components to understand how Interaction and Output Blocks contribute to domain adaptation;

- Evaluating the impact of data diversity by augmenting the training set with chemically related, out-of-domain molecules.

This work is organized as follows: Section 2 provides the necessary background, including a detailed explanation of adsorption energy (Section 2.1), an overview of equivariant GNNs for adsorption energy prediction (Section 2.2), an introduction to the DFT datasets involved in pretraining and fine-tuning (Section 2.3), and a discussion of the challenges associated with applying GNNs to large molecules (Section 2.4). Section 3 presents the methods, beginning with the dataset processing strategies (Section 3.1) and followed by an in-depth description of the fine-tuning approach, including relevant training details and performance insights (Section 3.2). In Section 4, the results of various fine-tuning strategies are presented, and conclusions are given in Section 5.

2. Background

2.1. Adsorption Energy

Adsorption energy ($E_{\mathrm{ads}}$) quantifies the strength of the interaction between an adsorbate molecule and a substrate surface. This interaction typically results from attractive forces such as van der Waals forces, electrostatic interactions, or chemical bonding. One way to obtain $E_{\mathrm{ads}}$ is using Equation (1):

$$E_{\mathrm{ads}} = E_{\mathrm{sys}} - E_{\mathrm{slab}} - E_{\mathrm{gas}} \tag{1}$$

Here, $E_{\mathrm{sys}}$ is the energy of the adsorption system, and $E_{\mathrm{slab}}$ and $E_{\mathrm{gas}}$ are the energy of the substrate by itself and the gas-phase energy of the adsorbate molecule, respectively [8]. When an adsorbate interacts with a solid surface, it forms a specific configuration based on the interactions with the substrate. Accurately determining adsorption energy requires identifying the global minimum energy configuration; that is, the arrangement that minimizes the system’s total energy across all possible adsorbate placements and orientations.
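As a minimal illustration, the sketch below evaluates $E_{\mathrm{ads}} = E_{\mathrm{sys}} - E_{\mathrm{slab}} - E_{\mathrm{gas}}$ (Equation (1)) and, for several candidate configurations of the same adsorbate on the same slab, reports the adsorption energy of the lowest-energy one. The function names and the energies in the usage line are hypothetical placeholders, not values from any dataset.

```python
from typing import Iterable


def adsorption_energy(e_sys: float, e_slab: float, e_gas: float) -> float:
    """Equation (1): E_ads = E_sys - E_slab - E_gas, all in eV.

    Negative values mean the adsorbed state is lower in energy than the
    separated clean slab and gas-phase molecule (favorable binding).
    """
    return e_sys - e_slab - e_gas


def minimum_adsorption_energy(
    e_sys_candidates: Iterable[float], e_slab: float, e_gas: float
) -> float:
    """Adsorption energy of the lowest-energy candidate configuration.

    E_slab and E_gas are identical for every placement of a given adsorbate
    on a given slab, so minimizing E_ads reduces to minimizing E_sys.
    """
    return adsorption_energy(min(e_sys_candidates), e_slab, e_gas)


# Hypothetical total energies (eV), for illustration only.
print(adsorption_energy(-350.0, -300.0, -49.0))  # -1.0
```

The second function makes explicit why the global-minimum search only involves the combined system: the two reference energies are constants for a fixed adsorbate and substrate.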

In the traditional way of calculating adsorption energies via DFT, identifying the global minimum involves sampling various adsorbate–surface configurations. Selecting candidate configurations has therefore relied on expert intuition or on heuristic methods that leverage surface symmetry. However, these approaches depend on manually selecting plausible starting geometries and are inherently biased by the user’s assumptions [22]. Exhaustively screening all configurations, on the other hand, is not only computationally prohibitive [23] but also still requires, to some extent, initial assumptions about binding sites and molecular conformations, introducing further bias [24]. As such, while these strategies have been effective in descriptor-based studies [10], they lack scalability for complex systems with numerous local energy minima. Large adsorbates present additional challenges, including flexible internal structures, multidentate binding geometries, and diverse interaction sites, particularly on defective or amorphous surfaces.

To mitigate these issues, machine learning-based structural relaxations are performed on all initial configurations, and any relaxed configuration that violates physical constraints, such as adsorbate dissociation, desorption, or substrate mismatch, is discarded. Because even small force inaccuracies or optimizer discrepancies can introduce spurious distortions and generate novel configurations, every ML-predicted adsorption energy must be confirmed with a single-point DFT calculation to ensure accuracy [10].
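The filter-then-verify workflow described above can be sketched as follows. This is a minimal illustration under stated assumptions: the `Candidate` record, its anomaly flags, and the `dft_single_point` callback are hypothetical stand-ins for a real relaxation and DFT pipeline. The optional `k` argument, which verifies only the k lowest ML energies to save DFT cost as AdsorbML [10] does, is our addition; leaving it as `None` verifies every valid configuration, as the text describes.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    """One ML-relaxed adsorbate-surface configuration (hypothetical record)."""

    config_id: str
    ml_energy: float  # ML-predicted adsorption energy after relaxation, eV
    desorbed: bool = False  # adsorbate no longer bound to the surface
    dissociated: bool = False  # adsorbate broke into fragments
    surface_mismatch: bool = False  # substrate reconstructed or displaced

    def is_valid(self) -> bool:
        return not (self.desorbed or self.dissociated or self.surface_mismatch)


def select_adsorption_energy(
    candidates: List[Candidate],
    dft_single_point: Callable[[str], float],
    k: Optional[int] = None,
) -> Optional[float]:
    """Discard anomalous relaxations, verify the rest with DFT, return the minimum.

    Returns None when every candidate violates a physical constraint.
    """
    valid = sorted((c for c in candidates if c.is_valid()), key=lambda c: c.ml_energy)
    if k is not None:
        valid = valid[:k]  # verify only the k lowest ML energies
    if not valid:
        return None
    # The reported value is the DFT single-point energy, not the ML estimate.
    return min(dft_single_point(c.config_id) for c in valid)
```

Reporting the DFT single-point value rather than the ML estimate is the design choice that guards against the spurious ML-relaxed geometries mentioned above.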

2.2. Equivariant GNNs for Adsorption Energy Prediction

Equivariant GNNs are designed to predict adsorption energies by capturing the intricate interactions between adsorbates and surfaces while respecting the physical symmetries of these systems [11]. In adsorption studies, the adsorbate–surface system is represented as a graph in which nodes correspond to atoms and edges represent bonds or interactions between them. To accurately represent each atom, node embeddings can be enriched with various chemical descriptors. While basic options include atomic numbers or one-hot encodings to differentiate chemical elements [8], embeddings can also incorporate additional atomic properties such as electronegativity, atomic radius, and ionization energy. Structural characteristics, such as hybridization state, formal charge, and valence electrons, can add further detail to node and edge representations [25]. Furthermore, self-supervised [26] and unsupervised [27] learning approaches aim to learn feature representations directly, instead of relying on manually constructed material descriptors.
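To make the graph representation concrete, the sketch below builds one-hot node features, optionally extended with a Pauling electronegativity column, and connects atoms that lie within a distance cutoff. It is a simplified, hypothetical illustration: the element vocabulary, the 2.0 Å cutoff, and the CO-on-Pt coordinates are placeholders, periodic boundary conditions are ignored, and production models such as GemNet-OC construct their graphs internally.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Pauling electronegativities for a few elements (extra descriptor example).
ELECTRONEGATIVITY: Dict[str, float] = {"H": 2.20, "C": 2.55, "O": 3.44, "Pt": 2.28}


def node_features(
    symbols: Sequence[str], vocabulary: Sequence[str], add_electronegativity: bool = True
) -> List[List[float]]:
    """One-hot encoding of each atom's element, plus optional extra descriptors."""
    index = {element: i for i, element in enumerate(vocabulary)}
    features = []
    for symbol in symbols:
        row = [0.0] * len(vocabulary)
        row[index[symbol]] = 1.0
        if add_electronegativity:
            row.append(ELECTRONEGATIVITY[symbol])
        features.append(row)
    return features


def radius_graph(
    positions: Sequence[Tuple[float, float, float]], cutoff: float
) -> List[Tuple[int, int]]:
    """Directed edges (i, j) for every atom pair within `cutoff` (Å).

    Periodic images are ignored for simplicity.
    """
    edges = []
    for i, p_i in enumerate(positions):
        for j, p_j in enumerate(positions):
            if i != j and math.dist(p_i, p_j) <= cutoff:
                edges.append((i, j))
    return edges


# Hypothetical CO molecule above a single Pt atom (coordinates in Å).
symbols = ["Pt", "C", "O"]
positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.85), (0.0, 0.0, 3.0)]
x = node_features(symbols, vocabulary=["H", "C", "O", "Pt"])
edges = radius_graph(positions, cutoff=2.0)
```

With these coordinates, the Pt–C and C–O pairs are connected in both directions, while the 3.0 Å Pt–O pair falls outside the cutoff.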

Recently, a significant advancement in GNNs has been the incorporation of physical symmetries through equivariant neural networks, where equivariance refers to the property of a function whose output transforms predictably when its input undergoes certain transformations [11,28]. For physical systems, the essential symmetries are translations, rotations, and reflections: scalar properties such as energy remain unchanged (invariant) irrespective of a system’s position or orientation, whereas vector properties such as forces rotate together with the system (equivariant). Equivariant networks build these symmetries into the model’s architecture, ensuring that predictions remain physically consistent and adhere to fundamental laws [28]. Equivariant neural networks for 3D systems, such as SE(3)/E(3)-equivariant networks, leverage transformations represented by tensor fields and spherical harmonics, which project spatial information in a way that respects these symmetries (for details, see Appendix A.2). Despite the advantages of equivariant GNNs, certain challenges exist when applying them to predicting the adsorption energies of heavy aromatic molecules (details in Section 2.4).
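The symmetry requirements above can be checked numerically. The sketch below uses a toy pairwise energy, $E = \sum_{i<j} (r_{ij} - r_0)^2$, chosen only because it depends on interatomic distances alone; it is not a GNN. Its energy is therefore invariant under rotations and translations, and its analytic forces $\mathbf{F}_i = -\partial E / \partial \mathbf{x}_i$ rotate with the coordinates, which is the invariance/equivariance property an eGNN is built to guarantee. The coordinates, $r_0$, and rotation angles are arbitrary.

```python
import math
from typing import List, Sequence

Vector = List[float]


def toy_energy_and_forces(positions: Sequence[Sequence[float]], r0: float = 1.0):
    """E = sum_{i<j} (r_ij - r0)^2 and forces F_i = -dE/dx_i."""
    n = len(positions)
    energy = 0.0
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            diff = [positions[i][k] - positions[j][k] for k in range(3)]
            r = math.sqrt(sum(d * d for d in diff))
            energy += (r - r0) ** 2
            coeff = -2.0 * (r - r0) / r  # -dE/dr times dr/dx_i, with dr/dx_i = diff / r
            for k in range(3):
                forces[i][k] += coeff * diff[k]
                forces[j][k] -= coeff * diff[k]  # equal and opposite force on atom j
    return energy, forces


def rotation(theta: float, phi: float) -> List[Vector]:
    """Rotation about z by theta, followed by rotation about x by phi."""
    ct, st, cp, sp = math.cos(theta), math.sin(theta), math.cos(phi), math.sin(phi)
    rz = [[ct, -st, 0.0], [st, ct, 0.0], [0.0, 0.0, 1.0]]
    rx = [[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]]
    return [[sum(rx[a][k] * rz[k][b] for k in range(3)) for b in range(3)] for a in range(3)]


def apply(matrix: Sequence[Sequence[float]], v: Sequence[float]) -> Vector:
    return [sum(matrix[a][k] * v[k] for k in range(3)) for a in range(3)]


positions = [[0.0, 0.0, 0.0], [1.2, 0.1, -0.3], [0.4, 1.1, 0.5]]
R = rotation(0.7, -1.3)
e, f = toy_energy_and_forces(positions)
e_rot, f_rot = toy_energy_and_forces([apply(R, p) for p in positions])
e_shift, _ = toy_energy_and_forces([[p[0] + 5.0, p[1] - 2.0, p[2] + 3.0] for p in positions])

assert abs(e - e_rot) < 1e-9 and abs(e - e_shift) < 1e-9  # invariant energy
for f_i, f_rot_i in zip(f, f_rot):  # equivariant forces: F(Rx) = R F(x)
    assert all(abs(a - b) < 1e-9 for a, b in zip(apply(R, f_i), f_rot_i))
```

A generic (non-equivariant) network fed raw Cartesian coordinates would not pass these checks unless it learned the symmetry from heavily augmented data.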

In ML-driven energy and force predictions, different tasks are designed to approximate computational chemistry simulations efficiently. Among them, Structure to Energy and Forces (S2EF) [18] focuses on predicting the total energy of an atomic configuration along with the per-atom forces acting on each atom. This task is widely used to accelerate molecular dynamics simulations and structure relaxations. This work specifically focuses on S2EF (for other available tasks, see Appendix A.2), given its foundational role in enabling faster and more accurate force evaluations, which are critical for large-scale molecular simulations.

2.3. DFT Datasets

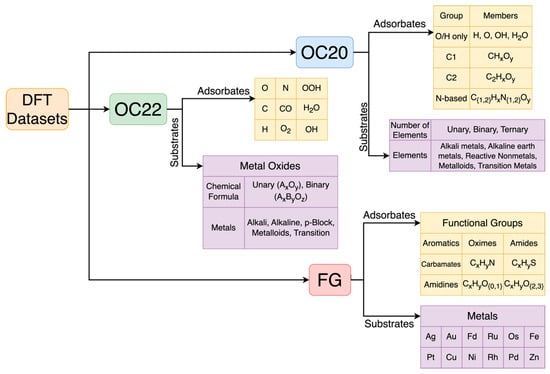

Here, we introduce the key DFT datasets utilized in this work, covering their methodologies for calculating adsorption energies and forces, as well as the structures, adsorbates, and substrates involved. Figure 2 shows an overview of the datasets and materials in each of them. Next, a brief introduction to each of the datasets is presented.

Figure 2.

Overview of the Density Functional Theory datasets used in this work and their corresponding materials. The models are pretrained on OC20 (Open Catalyst 2020) and OC22 (Open Catalyst 2022), while the FG (Functional Groups) dataset serves as the fine-tuning dataset.

2.3.1. Open Catalyst Datasets (OC20 and OC22)

The OC20 dataset [18] comprises over 1.2 million DFT relaxations, totaling approximately 250 million single-point calculations, across a diverse range of materials, surfaces, and adsorbates. This dataset focuses on small adsorbates, including C1 and C2 compounds (containing one and two carbon atoms, respectively), as well as nitrogen- and oxygen-containing intermediates, adsorbed onto various catalyst surfaces. Recognizing the limited representation of oxides in OC20, the same authors introduced the Open Catalyst 2022 (OC22) dataset [29]. OC22 addresses this gap by incorporating 62,331 DFT relaxations (~9.85 million single-point calculations) spanning a wide range of oxide materials, surface coverages, and adsorbates.

The dataset generation process applied three critical filters during DFT relaxation, excluding desorption events (non-binding adsorbates), dissociated adsorbates (an adsorbate breaking into separate atoms or fragments), and systems with significant adsorbate-induced surface distortions [10]. Crucially, adsorbates in this context encompass not only intact molecules but also molecular fragments (e.g., functional groups, radicals) that adsorb via distinct binding sites. However, dissociative adsorption (where fragments form during relaxation) was explicitly excluded to preserve the integrity of single-molecule adsorption energy calculations. By retaining only systems where pre-defined fragments or molecules adsorb intact (without further dissociation), the dataset captures realistic adsorption mechanisms while avoiding misleading energy artifacts. This approach helps models trained on the data learn transferable relationships between adsorbate structure (including fragment geometries and binding-site variability) and adsorption energy. For aromatic compounds, which often adsorb as intact ring systems or functionalized derivatives, these constraints align with their typical non-dissociative binding behavior, which should facilitate generalization of the GNN model to aromatic adsorption phenomena.
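The three filters can be approximated geometrically. The sketch below is a hypothetical illustration, not the procedure used to build OC20 or OC22: it flags dissociation when the adsorbate’s bond graph splits into more than one fragment, desorption when no adsorbate atom lies within bonding distance of any surface atom, and surface distortion when any slab atom moves more than a threshold. Bonding is judged by covalent radii plus a tolerance; the radii table, both tolerances, and the 0.5 Å displacement threshold are illustrative assumptions.

```python
import math
from typing import Dict, Sequence, Tuple

Position = Tuple[float, float, float]

# Approximate covalent radii in Å (illustrative subset).
COVALENT_RADII: Dict[str, float] = {"H": 0.31, "C": 0.76, "N": 0.71, "O": 0.66, "S": 1.05, "Pt": 1.36}


def bonded(s_i: str, p_i: Position, s_j: str, p_j: Position, tol: float) -> bool:
    return math.dist(p_i, p_j) <= COVALENT_RADII[s_i] + COVALENT_RADII[s_j] + tol


def count_fragments(symbols: Sequence[str], positions: Sequence[Position], tol: float = 0.1) -> int:
    """Number of connected components of the adsorbate bond graph (union-find)."""
    parent = list(range(len(symbols)))

    def find(a: int) -> int:
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    for i in range(len(symbols)):
        for j in range(i + 1, len(symbols)):
            if bonded(symbols[i], positions[i], symbols[j], positions[j], tol):
                parent[find(i)] = find(j)
    return len({find(i) for i in range(len(symbols))})


def is_dissociated(ads_symbols: Sequence[str], ads_positions: Sequence[Position]) -> bool:
    return count_fragments(ads_symbols, ads_positions) > 1


def is_desorbed(
    ads_symbols: Sequence[str],
    ads_positions: Sequence[Position],
    slab_symbols: Sequence[str],
    slab_positions: Sequence[Position],
    tol: float = 0.5,
) -> bool:
    """True if no adsorbate atom is within bonding distance of any slab atom."""
    return not any(
        bonded(s_a, p_a, s_s, p_s, tol)
        for s_a, p_a in zip(ads_symbols, ads_positions)
        for s_s, p_s in zip(slab_symbols, slab_positions)
    )


def is_surface_distorted(
    initial_slab: Sequence[Position], final_slab: Sequence[Position], max_displacement: float = 0.5
) -> bool:
    return any(math.dist(a, b) > max_displacement for a, b in zip(initial_slab, final_slab))
```

Real pipelines typically also account for periodic images and compare against the initial adsorbate connectivity rather than a fixed fragment count, which this sketch omits.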

2.3.2. FG Dataset

The Functional Groups (FG) dataset [8] provides DFT-calculated adsorption energies and forces for a selection of functional groups adsorbed onto various substrates. It includes 207 organic molecules adsorbed onto 14 transition metals, featuring diverse functional groups and aromatic structures with heteroatoms. This dataset includes detailed information on the structural configurations of both the adsorbates and substrates.

The molecules included in the FG dataset cover key functional groups in organic chemistry, featuring nitrogen, oxygen, and sulfur heteroatoms. These functional groups are divided into several categories to reflect the diversity of chemical interactions relevant to surface adsorption. The categories include non-cyclic hydrocarbons, O-functionalized compounds (such as alcohols, ketones, aldehydes, ethers, carboxylic acids, and carbonates), and N-functionalized compounds (amines, imines, and amidines). Additionally, S-functionalized compounds, such as thiols, thioaldehydes, and thioketones, are included, as well as N- and O-functionalized combinations like amides, oximes, and carbamate esters. The dataset also contains aromatic molecules with up to two rings, which may also include heteroatoms. This dataset serves as a comprehensive resource for fine-tuning and testing ML models, particularly in predicting adsorption energies for large and diverse adsorbates.

2.4. Challenges in Applying GNNs to Predict Adsorption Energies for Large Molecules

Most existing GNNs for adsorption energy prediction are pretrained on datasets containing small adsorbates, such as OC20. These models are optimized for relatively simple systems with fewer atoms and less structural complexity. Applying them directly to large molecules, such as those in the FG dataset, introduces significant challenges.

One major issue is the mismatch in scale and complexity. Large adsorbates often contain flexible bonds, diverse functional groups, and intricate interaction sites, significantly increasing the number of possible adsorption configurations. This added complexity can overwhelm existing models, resulting in poor predictions. Additionally, the architectural capacity of pretrained GNNs may be insufficient to process such extensive input sizes effectively. Without adaptations, these models are unable to capture the detailed interactions present in larger molecules, leading to diminished performance. Computationally, processing the full structures of large adsorbates requires significantly more memory and processing time, making direct application infeasible for high-throughput studies or large-scale datasets. In other words, the challenges associated with applying GNNs to predict adsorption energies for large molecules stem from the increased scale and complexity of the systems, as well as the limitations of models pretrained on small adsorbates. Preprocessing the FG dataset to focus on critical interactions and segregating it into molecular families for specialized fine-tuning offer practical solutions. These strategies reduce computational demands and enhance model accuracy, enabling more effective predictions for complex adsorption systems. In Section 3, we will present in detail the development of our approaches for predicting the adsorption energies of large molecules.

3. Methods

3.1. Processing the Dataset

The primary dataset in focus is the FG dataset. This section outlines the methods used to process the structures (adsorbate + substrate pairs) within the FG dataset.

3.1.1. Ensemble Extraction

Each entry of the FG dataset consists of a relaxed slab of substrate atoms paired with a single adsorbate molecule, with each substrate built from 48 atoms. Although the aim is to eventually train models that can handle larger structures, including every substrate atom as input is both unnecessary and computationally expensive during fine-tuning and inference. Following an approach similar to Pablo-Garcia et al. [8], the dataset entries were streamlined to retain only the adsorbate atoms and the substrate atoms deemed essential.

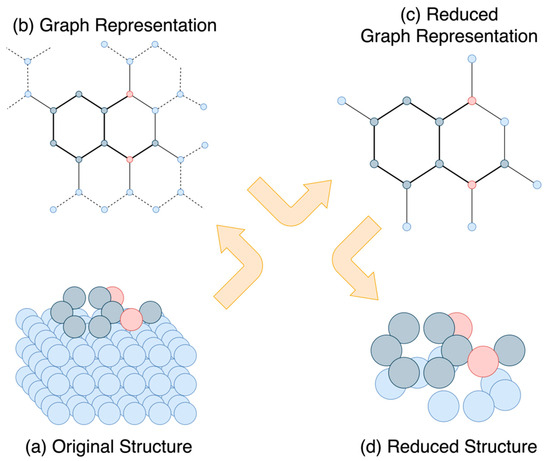

As shown in Figure 3, the process starts by generating a graph representation for the given structure. To generate the graph representation for each entry, Pablo-Garcia et al. [8] applied the Voronoi tessellation method. This technique partitions the three-dimensional space by assigning every atom a region that comprises all points closer to it than to any other atom. In the resulting graph, atoms are represented as nodes, and a connection is drawn between two nodes if their corresponding atoms share a Voronoi facet and if the distance between them is less than the sum of their covalent radii plus an additional tolerance. In this work, the covalent radii values from the original FG dataset publication were used, supplemented with a tolerance of 0.5 Å to better detect metal–adsorbate connections. Next, rather than constructing a full graph with nodes and edges for all atoms, metal atoms that were not directly connected to the adsorbate were removed from the graph. These omitted connections are represented as dotted edges in Figure 3. Finally, the refined structure was obtained by retaining only the atoms corresponding to the nodes of the reduced graph representation, while discarding the remaining atoms.
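A simplified version of this ensemble extraction can be sketched as below. It keeps every adsorbate atom plus every substrate atom within the sum of covalent radii plus the 0.5 Å tolerance of at least one adsorbate atom. Two simplifications are assumed: the Voronoi shared-facet condition is omitted, so only the distance criterion is applied, and periodic images are ignored. The radii shown are placeholders rather than the values from the FG dataset publication.

```python
import math
from typing import Dict, List, Sequence, Tuple

Position = Tuple[float, float, float]


def extract_ensemble(
    symbols: Sequence[str],
    positions: Sequence[Position],
    adsorbate_indices: Sequence[int],
    radii: Dict[str, float],
    tolerance: float = 0.5,
) -> List[int]:
    """Indices of atoms to keep: the adsorbate plus directly connected substrate atoms."""
    adsorbate = set(adsorbate_indices)
    kept = set(adsorbate)
    for i, (s_i, p_i) in enumerate(zip(symbols, positions)):
        if i in adsorbate:
            continue
        # A substrate atom survives if it is connected to any adsorbate atom.
        if any(
            math.dist(p_i, positions[j]) <= radii[s_i] + radii[symbols[j]] + tolerance
            for j in adsorbate
        ):
            kept.add(i)
    return sorted(kept)


# Hypothetical example: a row of three Pt atoms with CO bound atop the first.
radii = {"Pt": 1.36, "C": 0.76, "O": 0.66}
symbols = ["Pt", "Pt", "Pt", "C", "O"]
positions = [(0.0, 0.0, 0.0), (2.77, 0.0, 0.0), (5.54, 0.0, 0.0), (0.0, 0.0, 2.0), (0.0, 0.0, 3.15)]
kept = extract_ensemble(symbols, positions, adsorbate_indices=[3, 4], radii=radii)
```

Here only the Pt atom directly beneath the carbon survives alongside the adsorbate; raising `tolerance` pulls in neighboring metal atoms, which illustrates how sensitive the extracted ensemble is to that single parameter.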

Figure 3.

Ensemble extraction on the entries of FG (Functional Groups) dataset. Blue spheres represent substrate atoms, and grey and red spheres represent adsorbate atoms. The figure is intended for conceptual illustration only and does not depict specific atomic elements.

3.1.2. Segregating the Functional Groups

The FG dataset comprises a diverse collection of adsorbate–substrate pairs, with each pair represented in one or two structural configurations, for a total of 6866 entries. These adsorbates originate from nine distinct families of organic molecules, encompassing a diverse range of chemical functionalities (see the summary in Figure 2). Unfortunately, in the publicly available version of the FG dataset [8], these nine categories are not explicitly segregated. From an ML standpoint, there are advantages to fine-tuning a model exclusively on structurally and chemically similar adsorbates, since such a data distribution aligns well with transfer learning principles and can improve performance on molecules akin to those in the target category. For instance, one might hypothesize that training a model specifically on aromatic compounds could yield better predictions for a new aromatic molecule than training on a broader mix of functional groups.

However, there is a significant trade-off. While filtering for specific families (e.g., segregating aromatic compounds from the rest) can concentrate the training data on structurally relevant examples, it inherently reduces the overall training set size. In many ML contexts, especially in deep learning, a larger dataset, even if somewhat heterogeneous, can often outperform a smaller but more homogeneous subset. This occurs partly because the model can still learn to generalize across a range of similar chemical interactions present in all FG dataset entries. Consequently, there is an inherent balancing act: refining the dataset to closely match the desired chemical family versus retaining the breadth and volume of training samples to avoid overfitting or insufficient coverage of relevant chemical space.

In our work, we explored this balance by applying certain composition-based criteria, like the number of carbon atoms and the ratio of carbon to hydrogen atoms, to distinguish aromatic compounds from the broader FG dataset, creating a sub-dataset called FG-aromatics, with 1140 entries. The aim was to assess whether restricting the training set to those compounds would yield better model performance, compared to fine-tuning on a larger and more diverse dataset. After applying the ensemble extraction on the FG dataset and FG-aromatics, we obtain Extracted FG and Segregated Aromatics, respectively. These two datasets are the ones used for fine-tuning the model in this work.
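Composition-based screening of this kind can be sketched as a simple predicate on an adsorbate’s element counts. The text does not specify the exact criteria, so the thresholds below (at least four carbon atoms and a C/H ratio of at least 0.8) are hypothetical values chosen only for illustration. Such rules are heuristics: they ignore connectivity, so some unsaturated non-aromatic molecules can pass them.

```python
from collections import Counter
from typing import Iterable


def looks_aromatic(symbols: Iterable[str], min_carbons: int = 4, min_c_to_h: float = 0.8) -> bool:
    """Heuristic aromaticity screen from elemental composition alone.

    Thresholds are hypothetical. Heteroatoms (N, O, S) do not enter the
    ratio, so pyridine- or thiophene-like rings can still pass.
    """
    counts = Counter(symbols)
    n_c, n_h = counts["C"], counts["H"]
    if n_c < min_carbons:
        return False
    c_to_h = n_c / n_h if n_h else float("inf")  # no hydrogens at all
    return c_to_h >= min_c_to_h


# Hypothetical usage: filter a small set of adsorbates by composition.
adsorbates = {
    "benzene": ["C"] * 6 + ["H"] * 6,
    "cyclohexane": ["C"] * 6 + ["H"] * 12,
}
aromatic_subset = [name for name, syms in adsorbates.items() if looks_aromatic(syms)]
```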

3.2. Fine-Tuning Details

3.2.1. The Pretrained Model

The GemNet-OC-S2EFS-OC20+OC22 configuration builds upon the GemNet-OC [30] model by training on a combined dataset consisting of 133,934,018 frames from OC20 and 9,854,504 single-point calculations from OC22. Leveraging the Geometric Message Passing Neural Network (GemNet) architecture [31], GemNet-OC represents atomic systems as graphs, where atoms are depicted as nodes and connections between atoms within a specified distance serve as edges. This enhanced model is among the best-performing models on the OC20 dataset, delivering a 16% improvement in performance over the original GemNet model [31] while reducing training time by a factor of ten. The model is designed to predict both the total energy of the system and the forces acting on each individual atom, making it a strong starting point for fine-tuning on custom datasets. Below, we describe the specifics of GemNet-OC relevant to this work (for further details about the model, see Appendix A.3).

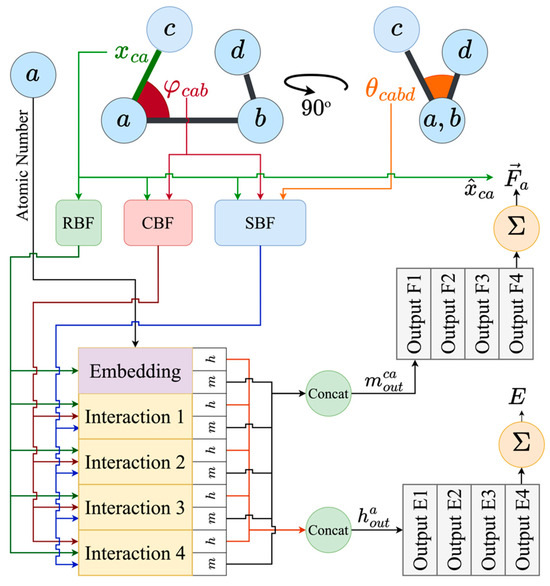

Figure 4 provides a streamlined overview of the GemNet-OC model architecture. At its core, GemNet-OC begins by embedding both atoms and the edges between them into high-dimensional vectors. This initial embedding step encodes geometric relationships via three types of Bessel functions: radial (RBF), circular (CBF), and spherical (SBF). The purpose of these functions is to capture pairwise distances, three-body angles, and four-body dihedral-like configurations. Polynomial envelopes are applied to these Bessel functions for smooth differentiability, following the approach of the DimeNet family of models [12,28].
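The radial part of this embedding can be sketched with the DimeNet-style radial Bessel basis, $e_n(d) = \sqrt{2/c}\,\sin(n\pi d/c)/d$, multiplied by a polynomial envelope $u(d/c)$ that goes smoothly to zero at the cutoff $c$. This is an illustrative sketch of the DimeNet formulation the text refers to, not GemNet-OC’s exact implementation; the 6 Å cutoff, eight basis functions, and envelope exponent $p = 6$ are assumptions chosen for the example. The circular and spherical bases add angular factors on top of the same idea and are omitted here.

```python
import math
from typing import List


def polynomial_envelope(x: float, p: int = 6) -> float:
    """Smooth cutoff u(x) for x = d / cutoff.

    u(0) = 1 and u(1) = u'(1) = u''(1) = 0, so the basis vanishes smoothly at the cutoff.
    """
    if x >= 1.0:
        return 0.0
    a = -(p + 1) * (p + 2) / 2
    b = p * (p + 2)
    c = -p * (p + 1) / 2
    return 1.0 + a * x**p + b * x ** (p + 1) + c * x ** (p + 2)


def radial_bessel_basis(d: float, cutoff: float = 6.0, num_radial: int = 8, p: int = 6) -> List[float]:
    """Enveloped radial Bessel functions e_n(d), n = 1..num_radial, for a distance d > 0 (Å)."""
    if d <= 0.0:
        raise ValueError("distance must be positive")
    x = d / cutoff
    envelope = polynomial_envelope(x, p)
    prefactor = math.sqrt(2.0 / cutoff)
    return [envelope * prefactor * math.sin(n * math.pi * x) / d for n in range(1, num_radial + 1)]


# Expand a hypothetical 2.5 Å interatomic distance into 8 radial features.
features = radial_bessel_basis(2.5)
```

The envelope matters because the basis functions themselves do not vanish smoothly at the cutoff; without it, an atom crossing the cutoff would cause a discontinuity in the predicted energy and forces.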

Figure 4.

The simplified architecture of GemNet-OC. The model starts by embedding atoms and edges with Basis functions. These embeddings pass through an Embedding layer and multiple Interaction layers that refine representations for energy and force prediction. The Interaction stage outputs are then fed into the Output Blocks, which convert the concatenated features into per-atom energy and force contributions that are then aggregated across all atoms.

The embeddings are then passed through an Embedding layer and multiple Interaction layers. Each of these layers produces two representations, the atom embeddings $h$ and the edge embeddings $m$, which in GemNet-OC are used to compute energy and force information, respectively. Stacking several Interaction layers refines these representations, enabling the model to learn progressively richer chemical and geometric features. Finally, the output of each Interaction stage is fed into the Output Blocks, consisting of dense and residual layers (labeled “Output E1-4” and “Output F1-4” in Figure 4). These blocks convert the concatenated representation from the Interaction Blocks into energy and force contributions for each node (atom). The total energy and the forces on each atom are obtained by aggregating these energy and force outputs across all atoms.
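The final aggregation step can be illustrated with a sum readout. In the sketch below, each Output Block contributes a feature vector per atom, the vectors are concatenated, and a single linear map (a deliberate simplification of the dense and residual layers in GemNet-OC) turns them into a per-atom energy. Summing these contributions makes the predicted total energy scale with the number of atoms. The weights and features are arbitrary placeholders.

```python
from typing import List, Sequence

Features = Sequence[Sequence[float]]  # [n_atoms][feature_dim] for one Output Block


def per_atom_energies(
    block_outputs: Sequence[Features], weights: Sequence[float], bias: float = 0.0
) -> List[float]:
    """Concatenate per-atom features from all Output Blocks, then apply a linear head."""
    n_atoms = len(block_outputs[0])
    energies = []
    for i in range(n_atoms):
        concatenated = [v for block in block_outputs for v in block[i]]
        if len(concatenated) != len(weights):
            raise ValueError("weights must match the concatenated feature size")
        energies.append(sum(w * v for w, v in zip(weights, concatenated)) + bias)
    return energies


def total_energy(block_outputs: Sequence[Features], weights: Sequence[float], bias: float = 0.0) -> float:
    """Sum readout: the total energy is the sum of per-atom contributions."""
    return sum(per_atom_energies(block_outputs, weights, bias))


# Two hypothetical Output Blocks, two atoms, two features per atom.
blocks = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]]
w = [1.0, 1.0, 0.5, 0.5]
e_total = total_energy(blocks, w)
```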

The GemNet-OC model demonstrated strong performance on the pretraining data, achieving a 50.05% success rate and an energy mean absolute error (MAE) of 0.1694 eV on the OC20 test dataset, as reported in the AdsorbML paper [10], and an energy MAE of 0.483 eV when trained on both the OC20 and OC22 datasets. However, its performance declines sharply when it is evaluated directly, without fine-tuning, on the Extracted FG dataset: the success rate drops to just 1.59%, and the energy MAE rises to 5.562 eV. This stark contrast highlights the need for fine-tuning to transfer the model’s capabilities to different or more constrained datasets.
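The success rate is not defined in this section. Assuming the definition used in AdsorbML [10] as we understand it, a system counts as a success when the predicted minimum adsorption energy is within 0.1 eV of, or lower than, the DFT reference minimum, and the metric reduces to the sketch below. The predictions are assumed to be anomaly-free and DFT-verified already; the function name is hypothetical.

```python
from typing import Sequence


def success_rate(predicted: Sequence[float], reference: Sequence[float], threshold: float = 0.1) -> float:
    """Percentage of systems whose predicted minimum adsorption energy is within
    `threshold` eV of the reference minimum, or below it."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need equal-length, non-empty sequences")
    # p <= r + threshold covers both "within threshold" and "lower than reference".
    hits = sum(1 for p, r in zip(predicted, reference) if p - r <= threshold)
    return 100.0 * hits / len(reference)
```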

In this work, GemNet-OC serves as the base model and is fine-tuned exclusively using the Extracted FG and Segregated Aromatics datasets.

3.2.2. Fine-Tuning Strategies

In adapting the GemNet-OC model to the Extracted FG dataset, three distinct fine-tuning strategies were explored, each differing in how much of the pretrained network was updated (shown in Table 1). These strategies are motivated by the observation that deep learning models typically learn features hierarchically: representations in early layers capture low-level details, which deeper layers progressively combine into more abstract concepts. This hierarchical progression of learned features has been widely observed and discussed in the literature [32,33]. The design of the GemNet-OC blocks follows a comparable principle. The Interaction Blocks (see Figure 4), which are positioned earlier in the network, primarily focus on encoding the geometrical relationships and local interactions of the input. In contrast, the Output Blocks are located deeper in the network and are tasked with synthesizing the processed information into representations that are directly related to predicting forces and energies. For instance, Yang et al. [34] use the intermediate embeddings of a modified GemNet-dT model as fingerprints, enabling efficient similarity searches in large databases and showcasing the versatility of GemNet-family architectures. Inspired by these works, the fine-tuning strategies developed here are designed to probe the model’s ability to capture nuanced geometric relationships as well as to uncover its potential for applications in materials discovery and structure-property mapping.

Table 1.

Summary of the fine-tuning strategies.

As shown in Table 1, the first strategy froze the weights in the Interaction Blocks (FIB), so the layers responsible for multi-level message passing remained fixed. Only the Output Blocks and the layers outside the Interaction Blocks were trained. This approach preserves the geometric and chemical insights learned during pretraining, on the assumption that the model’s fundamental understanding of interatomic relationships remains applicable to the new dataset. Meanwhile, the Output Blocks and the other trainable parts can adjust their parameters to align the existing embeddings with the new target task.

The second strategy froze the weights in the Output Blocks (FOB), allowing only the Interaction Blocks and the remaining modules to update. Since the Output Blocks perform the final transformations into energy and force predictions, freezing them retains the pretrained behavior of the final layers, and the Interaction Blocks must adapt their embeddings to these fixed output transformations while incorporating new data from the Extracted FG dataset. Lastly, the third strategy was full fine-tuning (FFT), in which every learnable parameter of the GemNet-OC model was allowed to update. This approach lets the network recalibrate both the geometry-learning and the prediction layers, though it risks overfitting if the reduced dataset lacks sufficient coverage relative to the model’s complexity. The three strategies thus strike different balances between preserving valuable pretraining knowledge and allowing flexibility for the new domain.
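The three strategies differ only in which parameter groups receive gradient updates. A minimal sketch of how that selection could be expressed, assuming the Interaction and Output Blocks can be identified by a submodule name appearing in each parameter's dotted name. The names `int_blocks` and `out_blocks` are assumptions (believed to match the fairchem GemNet-OC code, but they should be checked against the actual checkpoint), and the helper `trainable_mask` is hypothetical:

```python
from typing import Dict, Iterable, Optional

# Assumed submodule names for the two block groups; verify against the model.
INTERACTION_BLOCKS = "int_blocks"
OUTPUT_BLOCKS = "out_blocks"

# Which block group each strategy freezes (None = nothing frozen).
FROZEN_GROUP: Dict[str, Optional[str]] = {
    "FIB": INTERACTION_BLOCKS,  # freeze Interaction Blocks
    "FOB": OUTPUT_BLOCKS,       # freeze Output Blocks
    "FFT": None,                # full fine-tuning
}


def trainable_mask(param_names: Iterable[str], strategy: str) -> Dict[str, bool]:
    """Map each dotted parameter name to True (update) or False (freeze)."""
    if strategy not in FROZEN_GROUP:
        raise ValueError(f"unknown strategy: {strategy!r}")
    frozen = FROZEN_GROUP[strategy]
    # A parameter is frozen if the frozen group's name is one of its path parts,
    # e.g. "backbone.int_blocks.0.dense.weight" -> ["backbone", "int_blocks", ...].
    return {name: frozen is None or frozen not in name.split(".")
            for name in param_names}
```

With a PyTorch model, the mask would be applied by iterating over `model.named_parameters()`, setting each parameter's `requires_grad` attribute from the mask, and passing only the trainable parameters to the optimizer. Parameters outside both block groups (e.g., embeddings) stay trainable under every strategy, matching the description of FIB and FOB above.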

3.2.3. Evaluation Metrics

During training and validation, a range of metrics was used to monitor the accuracy of both energy and force predictions. The mean absolute error (MAE) was measured for the predicted energies, and separate MAEs were calculated for the x, y, and z components of the forces. An overall forces MAE was also tracked, along with the cosine similarity between predicted and true force vectors, the force magnitude error, and a combined metric that checks whether both energy and forces fall within given thresholds. Together, these metrics offer a detailed view of the model’s performance across the dimensions of the prediction task; the primary metric during training was the forces MAE.
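A minimal NumPy sketch of these training-time metrics, assuming energies in eV, forces in eV/Å, and one (n_atoms, 3) force array per structure. The combined-metric thresholds (0.02 eV for energy, 0.03 eV/Å for the largest force-component error) are assumed to follow the OC20 convention and may differ from the values used in this study; the function name and dictionary keys are illustrative:

```python
import numpy as np


def s2ef_metrics(e_pred, e_true, f_pred, f_true, e_thresh=0.02, f_thresh=0.03):
    """Energy and force metrics over a batch of structures.

    e_pred, e_true: sequences of energies (eV), one per structure.
    f_pred, f_true: sequences of (n_atoms_i, 3) force arrays (eV/Angstrom).
    """
    e_pred = np.asarray(e_pred, dtype=float)
    e_true = np.asarray(e_true, dtype=float)
    fp = np.concatenate([np.asarray(f, dtype=float) for f in f_pred])
    ft = np.concatenate([np.asarray(f, dtype=float) for f in f_true])

    abs_err = np.abs(fp - ft)                      # per-atom, per-component
    norm_p = np.linalg.norm(fp, axis=1)
    norm_t = np.linalg.norm(ft, axis=1)
    # Cosine similarity per atom; the floor avoids division by zero.
    cos = np.sum(fp * ft, axis=1) / np.maximum(norm_p * norm_t, 1e-12)

    # Combined metric: energy error AND worst force-component error under threshold.
    within = [
        bool(abs(ep - et) < e_thresh
             and np.max(np.abs(np.asarray(a, dtype=float)
                               - np.asarray(b, dtype=float))) < f_thresh)
        for ep, et, a, b in zip(e_pred, e_true, f_pred, f_true)
    ]
    return {
        "energy_mae": float(np.mean(np.abs(e_pred - e_true))),
        "forcesx_mae": float(abs_err[:, 0].mean()),
        "forcesy_mae": float(abs_err[:, 1].mean()),
        "forcesz_mae": float(abs_err[:, 2].mean()),
        "forces_mae": float(abs_err.mean()),
        "forces_cos": float(cos.mean()),
        "forces_magnitude_error": float(np.mean(np.abs(norm_p - norm_t))),
        "energy_forces_within_threshold": float(np.mean(within)),
    }
```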

For final evaluations, the key indicators are the success rate and the energy MAE. The success rate is defined as the percentage of test frames whose predicted energy lies within 0.1 eV of the DFT reference, following the metric proposed in the AdsorbML paper [10] and other seminal references [18,35,36]. Meanwhile, the energy MAE provides a direct measurement of how closely the model’s energy predictions match the ground-truth DFT values. By considering both the success rate and the energy MAE, it is possible to balance the need for overall accuracy with the stricter requirement of matching DFT-level precision on a high proportion of test examples.
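Both final indicators can be computed from per-frame energies in a few lines. This sketch follows the per-frame definition given above (success if the absolute energy error is at most 0.1 eV); the function names and the four example energies are hypothetical:

```python
import numpy as np


def energy_mae(e_pred, e_dft):
    """Mean absolute error between predicted and DFT energies (eV)."""
    return float(np.mean(np.abs(np.asarray(e_pred, float) - np.asarray(e_dft, float))))


def success_rate(e_pred, e_dft, tol=0.1):
    """Percentage of test frames whose energy error is within tol (eV)."""
    err = np.abs(np.asarray(e_pred, float) - np.asarray(e_dft, float))
    return float(100.0 * np.mean(err <= tol))


# Hypothetical frames with errors of 0.05, 0.15, 0.05 and 0.55 eV.
e_dft = [-1.20, -0.85, -2.10, -0.40]
e_pred = [-1.25, -0.70, -2.05, -0.95]
print(success_rate(e_pred, e_dft), energy_mae(e_pred, e_dft))  # ~50 %, ~0.2 eV
```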

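It is important to note that the two primary evaluation metrics, mean absolute error (MAE) and success rate, are closely linked but not strictly inverse: a lower MAE usually accompanies a higher success rate, but the MAE averages the magnitude of all energy errors, whereas the success rate only counts the fraction of errors below the 0.1 eV threshold. A change in a few large errors can therefore shift the MAE without affecting the success rate, and vice versa.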

4. Results and Discussions

4.1. Comparing Data Diversity

Table 2 shows that the two fine-tuned models, each fully fine-tuned for 16 epochs, outperform the pretrained baseline by a large margin on both the Segregated Aromatics and the Extracted FG test sets. The pretrained network performs very poorly, with success rates below 2% and MAE values above 5 eV on both test sets. This result shows that a model trained only on the original OC20/OC22 data is not sufficient for either the FG or the aromatics distribution. In Table 2, Extracted FG refers to ensembles extracted from the full FG dataset (6866 entries), while Segregated Aromatics consists exclusively of ensembles with an aromatic adsorbate from the FG dataset (1140 entries).

Table 2.

The evaluation metrics for the pretrained checkpoint and fine-tuned model using Extracted FG and FG-aromatics.

To establish a baseline trained on the FG dataset, GAME-Net was used. GAME-Net, a GNN for adsorption energy prediction introduced in the same study as the FG dataset [8], has three core components: (1) fully connected layers for node-level feature transformation, (2) convolutional layers that aggregate information from neighboring nodes, and (3) a pooling layer that produces graph-level energy predictions. With only 285,761 parameters, the architecture prioritizes efficiency while capturing local and global interactions. For a consistent comparison, GAME-Net was evaluated with a testing protocol analogous to ensemble extraction.

GAME-Net’s results were compared against fine-tuned versions of the much larger GemNet-OC model (41 million parameters) on the same dataset. The comparison was limited to MAE because the GAME-Net authors did not report a success rate. GAME-Net achieved an MAE of 0.34 eV for the adsorption energies of aromatics, whereas GemNet-OC reduced the MAE to 0.125 eV when fine-tuned on Segregated Aromatics and further to 0.084 eV when fine-tuned on Extracted FG. This substantial gap highlights the efficacy of fine-tuning large pretrained models. Nevertheless, GAME-Net remains a competitive lightweight alternative when computational efficiency matters more than accuracy. The comparison underscores the trade-offs between model scale, generalizability, and precision in adsorption energy prediction.
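The size of these gaps can be made explicit with a few lines of arithmetic using only the MAE values and parameter counts reported above. The 41 million figure is the rounded count given in the text, so the parameter ratio is approximate:

```python
def relative_reduction(baseline_mae: float, new_mae: float) -> float:
    """Fractional MAE reduction relative to a baseline."""
    return (baseline_mae - new_mae) / baseline_mae


GAMENET_MAE = 0.34            # eV, GAME-Net on aromatics
GEMNET_AROMATICS_MAE = 0.125  # eV, GemNet-OC fine-tuned on Segregated Aromatics
GEMNET_FG_MAE = 0.084         # eV, GemNet-OC fine-tuned on Extracted FG

param_ratio = 41_000_000 / 285_761  # approximate GemNet-OC vs GAME-Net size

print(f"{relative_reduction(GAMENET_MAE, GEMNET_AROMATICS_MAE):.1%}")  # 63.2%
print(f"{relative_reduction(GAMENET_MAE, GEMNET_FG_MAE):.1%}")         # 75.3%
print(f"{param_ratio:.0f}x")                                            # 143x
```

In other words, roughly 143 times more parameters buy a 63% to 75% reduction in MAE on this task.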

Comparing the two fully fine-tuned models, the one trained on the Extracted FG dataset gives the highest success rate and the lowest MAE on both the Segregated Aromatics and Extracted FG tests. Even on the Segregated Aromatics test, where one might expect the network fine-tuned solely on aromatics to excel, the FG-trained model still performs better. This suggests that a larger and more varied dataset (FG) helps the network learn geometry and energy relationships that transfer reasonably well to aromatics. Even though the additional data are not aromatic, they are more similar to aromatics than the pretraining data (OC20 and OC22) are. In contrast, the model trained strictly on aromatics becomes too specialized for aromatic systems and struggles with FG test cases that deviate from aromatic structures, leading to a substantial drop in performance on the Extracted FG test set. Nonetheless, its accuracy on aromatics is not far behind that of the FG-trained network, confirming that it is well adapted to those particular molecular configurations but lacks the broader coverage provided by the more diverse FG data.

This outcome reflects an interplay between the nature and the volume of the fine-tuning data. The superior performance of the model fine-tuned on Extracted FG stems from its exposure to a “chemically adjacent” space: the non-aromatic molecules it sees are structurally more complex than those in the pretraining data, which contain very small adsorbates (e.g., CO, CH3OH). This exposure allows the model to learn more robust and generalizable features, such as handling larger carbon frameworks, that directly benefit energy prediction for aromatics. In contrast, the model fine-tuned only on the Segregated Aromatics dataset lacks the broad exposure needed for generalization; this narrower focus leads to overspecialization and reduced performance on non-aromatic structures. In addition, the smaller size of the Segregated Aromatics dataset (1132 samples vs. 6915 in Extracted FG) increases the risk of overfitting. The model may therefore learn patterns too specific to its training data, which can slightly weaken its ability to generalize, even to new aromatic structures, compared with the more robust model trained on the larger, more diverse dataset.

4.2. Comparing Fine-Tuning Strategies

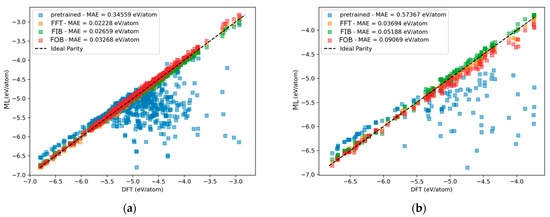

In the plots in Figure 5, each point shows a model’s predicted energy versus the true DFT value after only one epoch of fine-tuning. The diagonal line indicates perfect agreement; points closer to it are more accurate. The predictions of the pretrained model (blue squares) remain widely scattered, reflecting a high MAE on both Extracted FG and Segregated Aromatics, because this model has not been adapted to the new domain. In contrast, the predictions of the fully fine-tuned model (orange squares) lie tightly around the diagonal after just one epoch, achieving the lowest MAE on both subsets.

Figure 5.

Parity plots showing each model’s energy predictions versus the Density Functional Theory values after a single epoch of training on the Extracted FG (a) and Segregated Aromatics (b) subsets. MAE: mean absolute error; FFT: full fine-tuning; FIB: freezing the Interaction Blocks; FOB: freezing the Output Blocks.

As noted above, and as highlighted by Yang et al. [34], the early layers of GemNet models, specifically the Embedding and Interaction Blocks, can be used to generate atomic fingerprints that describe the local environment of an atom within a chemical system. This suggests a functional distinction between the layers: the earlier blocks primarily capture geometric relationships (e.g., bond angles, distances, and directional interactions), while the Output Blocks are more involved in transforming these geometric representations into predictions of forces and energies. This distinction is not absolute, however, as both sets of layers carry information related to geometry and forces. Thus, when analyzing fine-tuning strategies, it is reasonable to associate earlier layers with geometric encoding and deeper layers with property prediction, while acknowledging the interconnected nature of these tasks.
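The fingerprint idea can be illustrated with a short sketch. It assumes per-atom embeddings have already been extracted from an intermediate GemNet layer (replaced here by small hand-made arrays), mean-pools them into one unit vector per structure, and ranks a database by cosine similarity. Mean pooling and cosine similarity are illustrative choices, not necessarily those of Yang et al. [34], and the function names are hypothetical:

```python
import numpy as np


def structure_fingerprint(atom_embeddings):
    """Mean-pool per-atom embeddings (n_atoms, d) into one unit vector (d,)."""
    v = np.asarray(atom_embeddings, dtype=float).mean(axis=0)
    return v / np.linalg.norm(v)


def most_similar(query, database, k=3):
    """Return indices of the k most similar fingerprints and all scores."""
    db = np.stack(database)       # (n_structures, d), rows already unit-length
    scores = db @ query           # dot product = cosine similarity for unit vectors
    return np.argsort(-scores)[:k], scores


# Hypothetical 2-D embeddings for three database structures and one query.
database = [structure_fingerprint([[1.0, 0.0], [1.0, 0.0]]),
            structure_fingerprint([[0.0, 1.0]]),
            structure_fingerprint([[1.0, 1.0]])]
query = structure_fingerprint([[2.0, 0.1]])
ranking, scores = most_similar(query, database)
```

Here the query, dominated by the first embedding direction, ranks the first structure highest and the orthogonal second structure lowest.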

In the FIB strategy, where the Interaction Blocks are frozen (green squares), there is some improvement over the baseline, but the geometry layers remain fixed in their original configuration, so predictions are more spread out than in the fully fine-tuned case. Freezing the Output Blocks (FOB, red squares) lets the geometry layers adapt, but the static output layers impose a temporary mismatch between the newly learned embeddings and the final predictions. As a result, after one epoch the red points lie closer to the diagonal than the blue ones but are less accurate than the green ones.

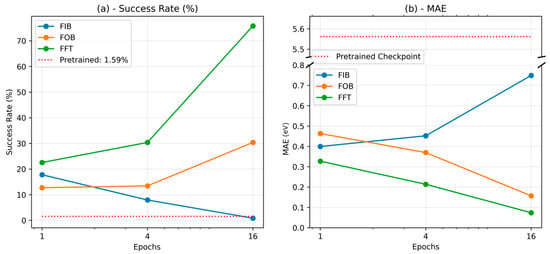

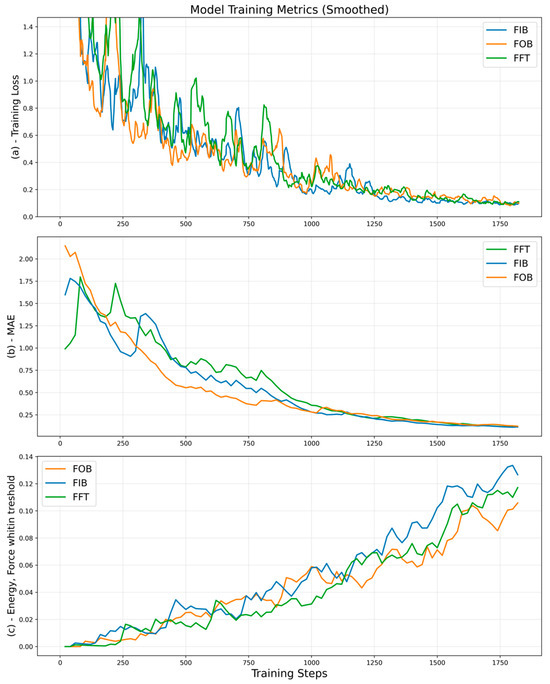

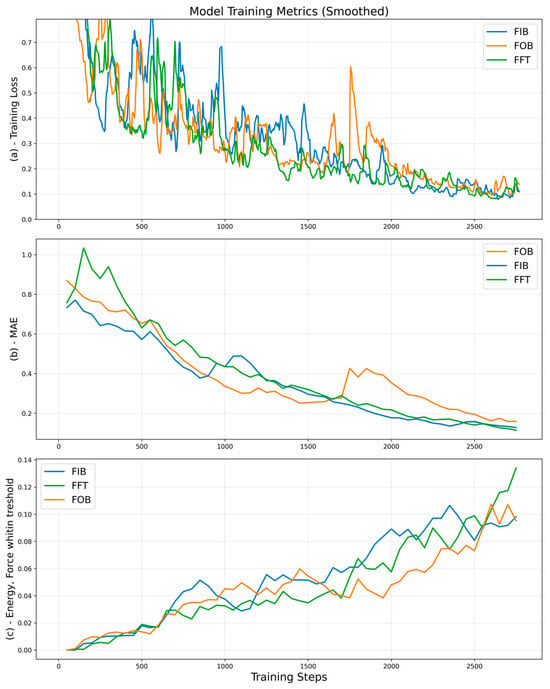

For a more detailed comparison of the fine-tuning strategies, the evaluation metrics were tracked across training epochs. Figure 6 shows that fully fine-tuning the entire GemNet-OC network (FFT) yields the largest improvement in accuracy on the Extracted FG dataset, with the highest success rate (over 70% by epoch 16) and the lowest MAE. When the Interaction Blocks are frozen (FIB), the model’s success rate actually declines over time, and the MAE steadily increases. This suggests that the geometry-focused portion of the model (i.e., the message-passing layers) must be allowed to adapt to the new domain for good performance: retraining the Output Blocks alone cannot compensate if the underlying geometric embeddings remain fixed in their pretrained state. By contrast, freezing the Output Blocks while training the Interaction Blocks (FOB) leads to moderate improvement. Here, the model can relearn or refine the geometric relationships in the new dataset, although the final layers are still inherited from pretraining. This strategy outperforms freezing the geometry layers but still lags behind fully fine-tuning the network. Allowing both the geometry stage (Interaction Blocks) and the prediction stage (Output Blocks) to adapt therefore yields the highest accuracy, which suggests that, with sufficient data, full fine-tuning best adapts the model to a domain that differs significantly from its original training data.

Figure 6.

Evaluation metrics for fine-tuned models on Extracted FG in different training epochs: (a) success rate, and (b) energy’s mean absolute error (MAE).

When the Interaction Blocks remain frozen, the model is locked into a geometric representation tuned to the original pretraining data. If the Extracted FG dataset differs significantly, either in atomic configurations or chemical contexts, the fixed geometry layers cannot adapt to these new patterns. Although the Output Blocks may adjust initially, they do not have enough flexibility by themselves to reconcile the mismatch between an old geometric representation and the new domain. As training proceeds, this mismatch can actually grow worse. The Output Blocks, trying to compensate for an outdated geometric embedding, might overfit or make inconsistent corrections. Consequently, the overall accuracy begins to decline instead of improving with additional epochs. By contrast, FOB and FFT allow either the Interaction Blocks or the entire network to update and learn geometry more suited to the Extracted FG dataset, which explains why their performance improves over time while the FIB approach degrades.

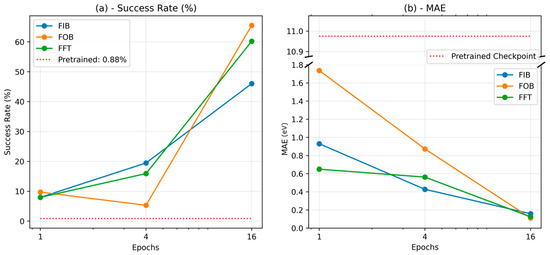

Figure 7 shows the results for the reduced set containing only aromatic molecules (FG-aromatics). The trends differ somewhat from those on the full dataset. For full fine-tuning, the situation is similar to the FG case: performance improves steadily with more epochs, although the absolute values of the evaluation metrics are worse than when fine-tuning on all FG data. As explained in the previous section, this stems from the smaller amount of training data, which increases the risk of overfitting despite the data being more homogeneous.

Figure 7.

Evaluation metrics for fine-tuned models on Segregated Aromatics in different training epochs: (a) success rate, and (b) energy’s mean absolute error (MAE).

When the Interaction Blocks are frozen (FIB), the geometric side of the model cannot be adjusted, but its output (the input to the Output Blocks) is more consistent because aromatics share a more homogeneous geometry, which is reflected in the output of the Interaction Blocks. The Output Blocks can therefore align themselves with this consistent signal, and this alignment leads to the continuous improvement of the FIB approach in both evaluation metrics.

The case of FOB is more complex. Because the aromatics are more homogeneous and closer in type to one another, the geometric representations may converge more smoothly toward a suitable embedding. The suitability of this intermediate embedding (the output of the Interaction Blocks and the input to the Output Blocks) depends on two factors: first, it must be a good geometric representation of the aromatics; second, it must match the frozen output layers’ transformation into force and energy values. Achieving both goals with a smaller dataset benefits from additional epochs, as evidenced by the steady and steep decrease in the MAE shown in Figure 7.

However, there are two observations that need explanation in Figure 7: (a) unlike in Figure 6, the FOB method trained for 16 epochs performs better than FFT on Segregated Aromatics, and its success rate is on par with full fine-tuning on Extracted FG; (b) there is a dip in the success rate of the FOB method from epoch 1 to epoch 4, followed by a steep rise at epoch 16. To explain observation (a), one likely reason is that for a smaller, more homogeneous domain such as Segregated Aromatics, the pretrained Output Blocks still provide a robust mapping from geometry to force/energy values. Meanwhile, the trainable Interaction Blocks can converge relatively well toward representations that match these frozen Output Blocks. In contrast, fully fine-tuning on a small dataset may lead to more significant parameter shifts in both geometry and output layers, sometimes causing slower convergence or partial overfitting. Because the FOB approach only refines the geometry to align with a stable, pretrained Output Block, it can ultimately exceed the performance of fully fine-tuning under these conditions.

The superior performance of the FOB strategy over FFT on the smaller, more homogeneous Segregated Aromatics dataset, while seemingly counter-intuitive, can be explained by established principles of transfer learning and regularization. One of the primary challenges when fine-tuning a large, high-capacity model on a small dataset is the significant risk of overfitting. Overfitting occurs when a model learns not only the underlying physical patterns in the training data but also its statistical noise and specific quirks. This leads to a model that performs well on the data it has seen but fails to generalize to new, unseen examples. In the context of the FFT strategy on the small Segregated Aromatics dataset, the model has enough freedom to drastically alter its pretrained weights, potentially “forgetting” robust, general features in favor of memorizing the limited set of training examples.

The fine-tuning strategies that involve parameter freezing, such as FOB and FIB, serve as a form of structural regularization [37] that creates a fundamental trade-off. On the one hand, freezing layers prevents overfitting by preserving the robust, general knowledge learned during pretraining and by simplifying the optimization landscape for the smaller dataset [38]. This regularization effect is powerful and explains why FOB and FIB can achieve competitive results on the smaller, homogeneous Segregated Aromatics dataset, as they avoid the instability inherent in training a high-capacity model on limited data [39].

On the other hand, this same constraint inherently limits the model’s flexibility and capacity to adapt to the specific nuances of a new chemical domain. The optimal balance between these competing effects (preventing overfitting versus enabling adaptation) appears to depend on the size and diversity of the fine-tuning data. For the larger and more diverse Extracted FG dataset, the model has sufficient data to learn without severe overfitting, making the flexibility of FFT (full fine-tuning) the dominant factor for achieving the best performance. In contrast, for the smaller Segregated Aromatics dataset, the risk of overfitting is much higher, making the regularization provided by FOB and FIB a more critical component for achieving a robust and accurate model.

Regarding observation (b), the dip in FOB performance at epoch 4 also reflects the sensitivity of the success rate metric. As shown in Appendix B, where the “Energy, Forces within threshold” metric (similar to the success rate but incorporating both energy and forces) was used, performance can fluctuate throughout training. These fluctuations arise from transient misalignments between the evolving geometric representation and the frozen output stage, which do not necessarily show up as strongly in a smoother error metric such as the MAE.
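The sensitivity of a threshold-based metric can be illustrated with made-up numbers: when many errors sit just above the 0.1 eV cutoff, a small uniform change moves the success rate far more than the MAE. The error values below are hypothetical and only show the mechanism; they do not reproduce Figure 7:

```python
import numpy as np


def mae(errors):
    """Mean of absolute energy errors (eV)."""
    return float(np.mean(errors))


def success(errors, tol=0.1):
    """Percentage of absolute errors at or below tol (eV)."""
    return float(100.0 * np.mean(errors <= tol))


# Hypothetical absolute energy errors (eV), three of them just above 0.1 eV.
errors = np.array([0.105, 0.108, 0.115, 0.30, 0.05])
shifted = errors - 0.02  # every prediction improves by 0.02 eV

print(mae(errors), success(errors))    # MAE ≈ 0.136 eV, success rate 20 %
print(mae(shifted), success(shifted))  # MAE ≈ 0.116 eV, success rate 80 %
```

Read in reverse, a 0.02 eV degradation would cut the success rate from 80% to 20% while the MAE rises by only 0.02 eV, which is the kind of dip that a threshold metric can show during training.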

4.3. Implications

Large aromatic compounds have profound environmental impacts across both fossil fuel refining and clean energy storage. In the oil industry, heavy aromatics such as asphaltenes foul wells and refinery equipment by precipitating and depositing on surfaces, forcing plants to burn extra fuel to maintain throughput, thereby increasing CO2 emissions and generating toxic waste that must be treated or landfilled [40]. Conversely, in hydrogen storage systems, porous carbon materials with aromatic ring structures enable efficient H2 physisorption at milder conditions, offering a pathway to safer, higher-density storage without extreme pressures or cryogenics [41].

Rapid, accurate prediction of adsorption energies for these large aromatics is therefore essential. In refineries, it can inform anti-fouling strategies that reduce emissions and operational waste; in energy storage, it can guide the design of next-generation adsorbents with lower lifecycle impacts. Equivariant graph neural networks (eGNNs) offer a data-efficient machine learning approach to this challenge. By fine-tuning pretrained eGNNs on targeted aromatic datasets, we can deliver fast, high-fidelity adsorption predictions that support both cleaner oil processing and more sustainable hydrogen storage solutions.

One key direction for extending the methodology presented in this work is exploring alternative deep learning architectures. Investigating models such as Equiformers [11,16], together with a systematic evaluation of different fine-tuning strategies, could offer valuable comparisons with the current model and highlight the components necessary for robust domain adaptation. This study used only two datasets and showed that full fine-tuning maximizes accuracy for a 41M-parameter GemNet-OC model on the larger dataset (Extracted FG), while partial parameter freezing achieves similar results with 40% less computation on the smaller dataset (Segregated Aromatics). Expanding to hierarchical DFT datasets (progressively smaller and more specialized), paired with advanced models such as EquiformerV2, could systematically reveal how model size, data granularity, and tuning strategy interact. By mapping these relationships across architectures, empirical scaling laws could emerge that define the thresholds at which specific model–data pairings optimize accuracy or efficiency.
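One common way to look for such empirical scaling laws is to fit a power law to error versus training-set size in log-log space. A minimal sketch, assuming the form MAE ≈ a·N^(−b) in the number of training samples N; both the functional form and the synthetic data points are illustrative assumptions, not results from this study:

```python
import numpy as np


def fit_power_law(n_samples, mae):
    """Fit mae ≈ a * n**(-b) by linear least squares on log(mae) vs log(n)."""
    slope, intercept = np.polyfit(np.log(n_samples), np.log(mae), 1)
    return float(np.exp(intercept)), float(-slope)


# Synthetic check: points generated exactly from a = 2.0, b = 0.3.
n = np.array([500, 1000, 2000, 4000, 8000])
mae = 2.0 * n ** -0.3
a, b = fit_power_law(n, mae)
```

Repeating such a fit per architecture and fine-tuning strategy would make the model–data thresholds mentioned above directly comparable.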

5. Conclusions

In this work, the capabilities of equivariant graph neural networks for predicting the adsorption energies of aromatic molecules on metal substrates were investigated. A GemNet-OC model [30], pretrained on the OC20/OC22 datasets [18,29] of smaller molecules, was fine-tuned on the FG dataset [8], which comprises molecules with up to 12 carbon atoms, including aromatics. The FG dataset was preprocessed to extract the adsorbate atoms and nearby substrate atoms, thereby reducing structural complexity, and the aromatic entries were then segregated to form a dedicated FG-aromatics dataset.
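The extraction step can be sketched as a distance filter: keep every adsorbate atom plus the substrate atoms within a cutoff of any adsorbate atom. This is a minimal illustration, not the exact procedure used here; the 3.0 Å cutoff is a hypothetical value, periodic images are ignored, and at least one adsorbate atom is assumed:

```python
import numpy as np


def extract_ensemble(positions, is_adsorbate, cutoff=3.0):
    """Return sorted indices of adsorbate atoms plus substrate atoms within
    `cutoff` (Angstrom) of any adsorbate atom.

    Assumes Cartesian coordinates, no periodic boundary conditions, and at
    least one adsorbate atom.
    """
    pos = np.asarray(positions, dtype=float)
    ads = np.asarray(is_adsorbate, dtype=bool)
    # Distance from every atom (rows) to every adsorbate atom (columns).
    d = np.linalg.norm(pos[:, None, :] - pos[ads][None, :, :], axis=-1)
    near = d.min(axis=1) <= cutoff
    return np.flatnonzero(ads | near)


# Two adsorbate atoms above a toy slab; the atom at (10, 10, 0) is dropped.
positions = [[0, 0, 3], [1.4, 0, 3], [0, 0, 1], [10, 10, 0], [1.4, 0, 0.5]]
is_adsorbate = [True, True, False, False, False]
kept = extract_ensemble(positions, is_adsorbate)
```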

The GemNet-OC model was fully fine-tuned on both the Extracted FG and FG-aromatics datasets. Superior performance was observed when the model was fine-tuned on Extracted FG, as demonstrated by evaluations on the test splits of both FG and FG-aromatics. Although fine-tuning on FG-aromatics also improved performance, larger gains were obtained with FG, likely owing to its greater structural diversity. These gains can be associated with the larger volume of training data (the non-aromatic entries of the FG dataset), which still resemble aromatic configurations more closely than the original pretraining data (OC20/OC22) do. Ultimately, whether to segregate depends on the specific objective and requires balancing the importance of capturing nuanced aromatic interactions against the potential loss of predictive power from not using a more comprehensive dataset.

To elucidate the role of different modules in the GemNet-OC model, three fine-tuning strategies were adopted: freezing the Interaction Blocks (FIB), freezing the Output Blocks (FOB), and fully fine-tuning all modules (FFT). FFT yielded the best overall performance on Extracted FG, while the decline in performance under the FIB approach on that dataset underscored the critical contribution of the Interaction Blocks. The success of FFT highlights the necessity of updating both the geometric (Interaction Blocks) and task-specific (Output Blocks) components when transferring models to new molecular systems.

For the FG-aromatics subset, further observations were made regarding the fine-tuning strategies. When the Interaction Blocks were frozen (FIB), the geometric outputs were more consistent because of the homogeneous nature of aromatic molecules. This consistency enabled the Output Blocks to align effectively with the stable signal, resulting in continuous improvements in both evaluation metrics. With the FOB strategy, on the other hand, the geometric representations had to converge toward a suitable embedding while ensuring that the fixed output layers correctly transformed this embedding into force and energy values. This caused larger errors early in training, but additional epochs improved the alignment, as reflected by a steady decrease in the mean absolute error.

It is important to note that our primary objective was to benchmark and improve model performance specifically for aromatic systems, given their unique electronic properties and prevalence in catalysis. Our analysis and fine-tuning strategies are therefore intentionally centered on the aromatic subset to provide a deep and focused investigation into the models’ handling of these molecules, rather than a broad comparative study across different functional groups or metal substrates. Such analyses are left for future work.

In summary, this work demonstrates that enhanced predictive performance can be achieved by fine-tuning an eGNN on a diverse dataset and that the Interaction Blocks play a pivotal role in adapting the model to new molecular domains. For accurate and transferable predictions of the adsorption of complex molecules on metal substrates, balancing data diversity with domain specificity, together with a careful choice of model architecture, is key. These findings establish a roadmap for optimizing graph neural networks in computational surface science. Furthermore, the eGNN framework presented here is property-agnostic and holds significant promise for broader applications. While this study focused on adsorption energy, the model architecture could be readily adapted to predict other crucial quantum chemical properties, such as electronic band gaps, vibrational frequencies, or transition state barriers, provided that appropriately labeled training datasets are available. This flexibility underscores the potential of eGNNs as a versatile tool for accelerated materials and catalyst discovery.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/chemengineering9040085/s1.

Author Contributions

Conceptualization, H.I.P. and C.J.; methodology, H.I.P.; software, H.I.P.; validation, H.I.P.; formal analysis, H.I.P.; writing—original draft preparation, H.I.P.; writing—review and editing, C.J.; supervision, C.J.; project administration, C.J.; funding acquisition, C.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Sciences and Engineering Research Council of Canada (NSERC), grant number RGPIN-2020-06099. The computing resources were provided by the Digital Research Alliance of Canada.

Data Availability Statement

Data is contained within the Supplementary Materials.

Acknowledgments

We are grateful to Santiago Morandi for his invaluable assistance in navigating and exploring the published FG dataset.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Advances in Fine-Tuning GNNs

When adapting models to predict the energies of a specific group of materials, a common approach is to take a model pretrained on a broad dataset (such as OC20) and fine-tune it on a smaller, domain-specific dataset of interest. The key is to adapt to the new domain (e.g., aromatic adsorbates on surfaces) while preserving the general chemical insight learned from the source domain. Simply retraining all parameters on a tiny aromatic dataset risks overfitting and “forgetting” the prior knowledge [21]. However, this loss of prior knowledge may be acceptable if the goal is accurate prediction within the new domain and the model performs well on the test split of the fine-tuning dataset. Overall, one should carefully monitor the model’s performance on the test and validation splits and, when necessary, use techniques that prevent overfitting. For example, fine-tuning only the last few layers of the network allows reuse of low-level features (learned on small-molecule data) and focuses the adaptation on high-level energy predictions. In some cases of distribution shift, even tuning just the early layers can be beneficial. The optimal strategy depends on how the target domain differs from the source.

Several recent studies demonstrate the potential and limitations of fine-tuning eGNNs for new regimes. Jiao et al. showed that an OC20-trained GNN could be adapted to predict aqueous-phase adsorption energies, which are relevant to CO2 reduction in water, by fine-tuning with a carefully selected subset of new data [14]. They employed an active learning approach to identify the most informative configurations to calculate with DFT, thereby maximizing model improvement from a limited data budget. This highlights the importance of data efficiency when venturing into underrepresented domains (like different solvents or much larger adsorbates). Active learning or selective sampling can mitigate the lack of large training sets by focusing the fine-tuning on cases that teach the model aspects of the new chemistry it would otherwise miss.

Beyond conventional fine-tuning, hybrid strategies have emerged to handle small data regimes. One novel approach is to combine a pretrained eGNN’s learned representation with a simple machine learning model for the new task. For instance, Falk et al. [15] introduced a mean embedding kernel ridge regression (MEKRR) technique. They first train an eGNN on a large dataset (OC20) to learn general chemical features, then freeze the GNN and use its outputs as descriptors in a kernel ridge regression fitted to the target task. This two-step method preserved the eGNN’s ability to encode geometric and elemental interactions (ensuring rotational and translational symmetry by design) while using a lightweight tunable model to make final energy predictions. The result was a highly data-efficient adaptation that succeeded on complex catalytic datasets outside the original training distribution. Such an approach addresses some shortcomings of naive fine-tuning: by avoiding updating all neural weights, it prevents catastrophic forgetting and requires very few target examples to fit the simple regression model. The trade-off is that the flexibility of the model is somewhat limited by the fixed representation. Nonetheless, the success of MEKRR in predicting energies for systems with bond-breaking and other unseen events hints that rich latent representations from large-scale pretraining can be leveraged for new chemistry with minimal additional data, if combined with the right fine-tuning strategy.
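A minimal sketch of the MEKRR idea, assuming the frozen eGNN has already produced one array of per-atom embeddings per structure (any NumPy arrays work here). The structure-level kernel averages a Gaussian kernel over all atom pairs, a standard way of comparing sets through their kernel mean embeddings; the Gaussian kernel, `gamma`, and the ridge parameter `lam` are illustrative assumptions, and this is not the implementation of Falk et al. [15]:

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """Gaussian kernel exp(-gamma * |x - y|^2) between rows of X and Y."""
    sq = (np.sum(X ** 2, axis=1)[:, None] + np.sum(Y ** 2, axis=1)[None, :]
          - 2.0 * X @ Y.T)
    return np.exp(-gamma * np.maximum(sq, 0.0))


def mean_embedding_kernel(sets_a, sets_b, gamma):
    """K[i, j]: RBF similarity averaged over all atom pairs of structures i, j."""
    return np.array([[rbf_kernel(A, B, gamma).mean() for B in sets_b]
                     for A in sets_a])


def fit_krr(train_sets, y, gamma=0.5, lam=1e-3):
    """Solve (K + lam * I) alpha = y for the kernel ridge coefficients."""
    K = mean_embedding_kernel(train_sets, train_sets, gamma)
    return np.linalg.solve(K + lam * np.eye(len(train_sets)),
                           np.asarray(y, dtype=float))


def predict_krr(alpha, train_sets, test_sets, gamma=0.5):
    """Predict targets for new structures from their embedding sets."""
    return mean_embedding_kernel(test_sets, train_sets, gamma) @ alpha


# Two toy "structures" with 2-D atom embeddings and their target energies.
A = np.zeros((2, 2))
B = 10.0 * np.ones((3, 2))
alpha = fit_krr([A, B], [1.0, -2.0])
```

Fitting needs only one linear solve and no gradient updates to the network, consistent with the data efficiency described above; the cost of each kernel entry grows with the product of the two structures' atom counts, which becomes relevant for large aromatic adsorbates.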

Despite these advances, current approaches still have shortcomings when dealing with large aromatic adsorbates. One is size extrapolation: while some evidence shows that an eGNN can generalize from small fragments to moderately larger molecules, new physics can come into play as system size grows further (e.g., long-range dispersion, or intramolecular strain in a large, flexible aromatic molecule). In most current GNNs, message passing uses a fixed cutoff distance, and a large aromatic adsorbate may extend well beyond it, so interactions between distant atoms are not modeled directly, potentially fragmenting the modeled interactions. In practice, this could limit the representation of an asphaltene adsorbed across multiple surface sites or interacting with itself (aggregation). Most existing studies stop at molecules with tens of atoms, so the behavior on systems with hundreds of atoms (approaching asphaltene cluster sizes) is not well characterized in the literature.

Also, standard benchmarks and leaderboards have oriented the community toward incremental improvements in given tasks, leaving certain application-relevant scenarios under-explored. The focus has been on small, common adsorbates for which data is plentiful. Thus, there is a knowledge gap regarding how to model the large aromatic adsorbates that are crucial in some industries (e.g., petroleum refining, where asphaltene adsorption leads to catalyst fouling). The lack of benchmark data for such cases means that even state-of-the-art eGNNs may struggle without further development. In summary, current eGNN models and fine-tuning methods do not yet comprehensively address scaling up to complex aromatic systems.

Appendix A.2. Equivariant GNNs

Equivariant graph neural networks (eGNNs) predict adsorption energies by capturing the intricate interactions between adsorbates and surfaces while respecting the physical symmetries of these systems. In adsorption studies, the adsorbate–surface system can be represented as a graph in which nodes correspond to atoms and edges represent bonds or interactions between them. Equivariant GNNs extend traditional GNNs by building rotational, translational, and reflectional symmetries directly into their architectures, so that scalar predictions such as energy remain invariant, and vector predictions such as forces transform consistently (equivariantly), under these transformations.

GNNs operate by converting the atomic structure of the adsorbate–surface system into a graph in which atoms are nodes and the bonds or interactions between them are edges, thereby capturing the molecular or crystal structure. To represent each atom accurately, node embeddings can be enriched with various chemical descriptors. The simplest options are atomic numbers or one-hot encodings that distinguish chemical elements, but embeddings can also incorporate additional atomic properties such as electronegativity, atomic radius, and ionization energy. Structural characteristics, such as hybridization state, formal charge, and number of valence electrons, add further detail to node representations, and environmental factors, e.g., the number of bonded neighbors or distances to adjacent atoms, provide additional refinement.
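The link between one-hot encodings and learned element embeddings can be sketched as follows: multiplying a one-hot vector by a weight matrix simply selects one row, which is why embedding layers are implemented as table lookups. The table size of 83 elements mirrors Table A1, but the 4-dimensional embedding, the random weights, and the 1-based indexing by atomic number are assumptions made for illustration.

```python
import random

NUM_ELEMENTS = 83  # element vocabulary size, as listed in Table A1
EMB_DIM = 4        # toy embedding width (Table A1 lists 256 for GemNet-OC)

random.seed(0)
# Hypothetical learnable weight matrix: one row per element, randomly initialized.
WEIGHTS = [[random.gauss(0.0, 1.0) for _ in range(EMB_DIM)] for _ in range(NUM_ELEMENTS)]


def one_hot(z):
    """One-hot vector for atomic number z (z = 1 is hydrogen), stored 0-indexed."""
    vec = [0.0] * NUM_ELEMENTS
    vec[z - 1] = 1.0
    return vec


def embed_dense(z):
    """Dense formulation: one-hot row vector times the weight matrix."""
    oh = one_hot(z)
    return [sum(oh[e] * WEIGHTS[e][k] for e in range(NUM_ELEMENTS)) for k in range(EMB_DIM)]


def embed_lookup(z):
    """Lookup formulation: select the row for element z directly, as an embedding layer does."""
    return WEIGHTS[z - 1]


carbon = 6
print(embed_dense(carbon), embed_lookup(carbon))
```

The lookup avoids multiplying by a mostly-zero vector, and additional descriptors (e.g., electronegativity) could be appended to the selected row before further processing.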

Representing atoms as nodes has become common practice in the literature, but edges can be defined in several ways. The most direct approach derives edges from the molecular graph, i.e., the chemical bonds. Despite its prevalence, this approach is not MD-compatible: edges appear or vanish abruptly as bonds form or break, which introduces discontinuities and disrupts the underlying physics [28]. MD compatibility requires that the force field predicted by the model, when applicable, be a conservative vector field. A simple way to guarantee this is to have the model predict the potential energy, from which forces are obtained as the negative gradient with respect to atomic coordinates (computed by back-propagation).
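The energy-to-force route can be illustrated with a classical Lennard-Jones pair potential standing in for a learned energy model (an assumption for this sketch; in a GNN the gradient would come from automatic differentiation rather than a hand-derived formula). Because the forces are the exact negative gradient of a single scalar energy, they agree with finite differences of that energy and sum to zero, as translation invariance requires.

```python
import math

POSITIONS = [[0.0, 0.0, 0.0], [1.1, 0.0, 0.0], [0.3, 1.0, 0.2]]


def lj_energy(pos, eps=1.0, sigma=1.0):
    """Total Lennard-Jones energy: sum over pairs of 4*eps*((sigma/r)^12 - (sigma/r)^6)."""
    energy = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            sr6 = (sigma / math.dist(pos[i], pos[j])) ** 6
            energy += 4 * eps * (sr6 * sr6 - sr6)
    return energy


def lj_forces(pos, eps=1.0, sigma=1.0):
    """Analytic forces F_i = -dE/dx_i, accumulated pair by pair (equal and opposite)."""
    forces = [[0.0, 0.0, 0.0] for _ in pos]
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            diff = [pos[i][c] - pos[j][c] for c in range(3)]
            r = math.sqrt(sum(d * d for d in diff))
            sr6 = (sigma / r) ** 6
            de_dr = 4 * eps * (-12 * sr6 * sr6 + 6 * sr6) / r
            for c in range(3):
                f = -de_dr * diff[c] / r
                forces[i][c] += f
                forces[j][c] -= f
    return forces


def numerical_forces(pos, h=1e-6):
    """Central finite differences of the energy: F = -(E(x + h) - E(x - h)) / (2h)."""
    forces = []
    for i in range(len(pos)):
        row = []
        for c in range(3):
            plus = [list(p) for p in pos]
            minus = [list(p) for p in pos]
            plus[i][c] += h
            minus[i][c] -= h
            row.append(-(lj_energy(plus) - lj_energy(minus)) / (2 * h))
        forces.append(row)
    return forces


analytic = lj_forces(POSITIONS)
numeric = numerical_forces(POSITIONS)
net_force = [sum(f[c] for f in analytic) for c in range(3)]
```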

A significant advancement in GNNs is the incorporation of physical symmetries through equivariant neural networks, where equivariance means that a function’s output transforms predictably when its input is transformed. For physical systems, the essential symmetries are translations, rotations, and reflections: scalar properties such as energy must remain unchanged regardless of a system’s position or orientation, while vector properties such as forces must rotate with the system. Equivariant networks build these symmetries into the model’s architecture, so that predictions are physically consistent by construction. For a detailed explanation of equivariance, symmetries in 3D space (SE(3)/E(3)), and the requirements for models to respect them, see Appendix A of the original Equiformer paper [11].
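These properties can be checked numerically. The sketch assumes a toy energy built from harmonic springs between all atom pairs, so it depends only on interatomic distances; the helper names and coordinates are hypothetical. The energy should be unchanged by rotations, reflections, and translations, while the forces should rotate with the structure.

```python
import math


def pair_energy(pos, k=1.0, r0=1.0):
    """Toy distance-only energy: a harmonic spring between every pair of atoms."""
    energy = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            energy += 0.5 * k * (math.dist(pos[i], pos[j]) - r0) ** 2
    return energy


def pair_forces(pos, k=1.0, r0=1.0):
    """Forces F_i = -dE/dx_i of the harmonic pair energy."""
    forces = [[0.0, 0.0, 0.0] for _ in pos]
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            diff = [pos[i][c] - pos[j][c] for c in range(3)]
            r = math.sqrt(sum(d * d for d in diff))
            for c in range(3):
                f = -k * (r - r0) * diff[c] / r
                forces[i][c] += f
                forces[j][c] -= f
    return forces


def matvec(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def rotation(theta, phi):
    """Proper rotation: about z by theta, then about x by phi."""
    ct, st = math.cos(theta), math.sin(theta)
    cp, sp = math.cos(phi), math.sin(phi)
    rz = [[ct, -st, 0.0], [st, ct, 0.0], [0.0, 0.0, 1.0]]
    rx = [[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]]
    return [[sum(rx[r][m] * rz[m][c] for m in range(3)) for c in range(3)] for r in range(3)]


POS = [[0.0, 0.0, 0.0], [1.3, 0.2, 0.0], [0.4, 1.1, 0.5]]
R = rotation(0.7, -1.2)
MIRROR = [[-1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # reflection x -> -x

rotated = [matvec(R, p) for p in POS]
mirrored = [matvec(MIRROR, p) for p in POS]
shifted = [[p[0] + 2.0, p[1] - 1.0, p[2] + 0.5] for p in POS]

forces = pair_forces(POS)
forces_of_rotated = pair_forces(rotated)      # F(R x)
rotated_forces = [matvec(R, f) for f in forces]  # R F(x)
```

The energy is invariant, while forces are equivariant: computing forces on the rotated structure gives the same result as rotating the original forces.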

Equivariant neural networks for 3D systems, such as SE(3)/E(3)-equivariant networks, represent features as tensors that transform under irreducible representations of the rotation group and use spherical harmonics to encode directional information in a way that respects these symmetries. This approach offers several advantages:

Enhanced Modeling of Physical Laws: Equivariant networks respect the symmetries of physical systems, so their predictions align with physical reality. These networks incorporate inductive biases that make their internal representations and predictions equivariant to 3D translations, rotations, and optionally inversions. They build equivariant features for each node using vector spaces of irreducible representations (irreps) and let these features interact through equivariant operations such as tensor products. This is crucial for accurately predicting properties such as forces and energies, as improper symmetry handling can lead to unrealistic results. For example, Tensor Field Networks [42] achieve 3D rotational and translational equivariance by using spherical harmonics and irreps.

Reduction of Model Complexity: By embedding physical constraints directly into the architecture, symmetry-aware networks can achieve high accuracy with fewer parameters. This constraint-based approach reduces overfitting, can improve computational efficiency, and often avoids the need for manual feature engineering. For example, DimeNet [28] uses a basis representation that combines directional and distance information, yielding compact and efficient representations of atomic interactions.

Improved Data Efficiency: Encoding symmetry in the model structure itself improves data efficiency, reducing the amount of data required to capture the underlying physics. This is valuable in domains such as adsorption modeling, where datasets may be limited in scope or costly to generate. Because rotational and translational invariance are built into these models rather than learned from data, they typically require fewer training examples to make accurate predictions for complex molecules [11].
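The tensor-product operation mentioned under the first advantage can be illustrated in Cartesian form: the outer product of two vectors (two l = 1 features) splits into a scalar (l = 0, the dot product), a vector (l = 1, carried by the cross product), and a symmetric traceless matrix (l = 2, five independent components), matching 1 ⊗ 1 = 0 ⊕ 1 ⊕ 2 and 3 × 3 = 1 + 3 + 5. Equivariant-network libraries typically work in a spherical-harmonic basis with Clebsch–Gordan coefficients instead; this Cartesian version is a simplified illustration that is equivalent up to a change of basis, and the helper names are hypothetical.

```python
import math


def cross(u, v):
    return [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]


def levi_civita(i, j, k):
    """Permutation symbol for indices in {0, 1, 2}."""
    return (i - j) * (j - k) * (k - i) / 2


def decompose(u, v):
    """Split the outer product u v^T into its l = 0, 1, 2 parts."""
    outer = [[u[i] * v[j] for j in range(3)] for i in range(3)]
    scalar = sum(u[i] * v[i] for i in range(3))  # l = 0: trace, rotation invariant
    vector = cross(u, v)                          # l = 1: encodes the antisymmetric part
    tensor = [[0.5 * (outer[i][j] + outer[j][i]) - (scalar / 3 if i == j else 0.0)
               for j in range(3)] for i in range(3)]  # l = 2: symmetric and traceless
    return outer, scalar, vector, tensor


def reconstruct(scalar, vector, tensor):
    """Reassemble u v^T = (s/3) I + (1/2) eps_ijk c_k + T from the three parts."""
    return [[(scalar / 3 if i == j else 0.0)
             + 0.5 * sum(levi_civita(i, j, k) * vector[k] for k in range(3))
             + tensor[i][j] for j in range(3)] for i in range(3)]


def rotate_z(v, theta):
    c, s = math.cos(theta), math.sin(theta)
    return [c * v[0] - s * v[1], s * v[0] + c * v[1], v[2]]


u, v = [1.0, 2.0, -0.5], [0.3, -1.0, 2.0]
outer, s, c, t = decompose(u, v)

theta = 0.9
_, s_rot, c_rot, _ = decompose(rotate_z(u, theta), rotate_z(v, theta))
```

Rotating both inputs leaves the l = 0 part unchanged and rotates the l = 1 part as an ordinary vector, which is the sense in which tensor products combine features without breaking equivariance.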

Incorporating these symmetries requires constructing node features from invariant or equivariant representations and applying directional embeddings and tensor products so that each message-passing step preserves symmetry across layers. In DimeNet [28], for instance, directional message passing uses a joint 2D representation of interatomic distances and angles built from spherical Bessel functions and spherical harmonics, yielding rotationally invariant features. Furthermore, because forces are derivatives of the energy and training on forces requires differentiating again, these models need smooth outputs: they avoid activation functions with discontinuous derivatives (like ReLU) in favor of smooth ones, and they use basis functions with smooth envelopes that keep predictions stable under small deformations [28].
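The role of smooth activations can be checked numerically. The sketch compares the derivative of ReLU, which jumps from 0 to 1 at the origin, with that of SiLU (also called swish, the activation listed for GemNet-OC’s layers in Tables A1 and A2). Because predicted forces are built from such derivatives through the chain rule, a jump in the activation derivative can translate into forces that change abruptly under tiny atomic displacements.

```python
import math


def relu_grad(x):
    """Derivative of ReLU (set to 0 at the origin by convention)."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def silu(x):
    """SiLU / swish: x * sigmoid(x), smooth everywhere."""
    return x * sigmoid(x)


def silu_grad(x):
    """Analytic derivative: sigmoid(x) * (1 + x * (1 - sigmoid(x)))."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


eps = 1e-9
relu_jump = relu_grad(eps) - relu_grad(-eps)  # derivative jumps by 1 across the origin
silu_jump = silu_grad(eps) - silu_grad(-eps)  # vanishes as eps -> 0 (continuous derivative)

# Finite-difference check of the analytic SiLU derivative at a few points.
fd_errors = [abs((silu(x + 1e-6) - silu(x - 1e-6)) / 2e-6 - silu_grad(x))
             for x in (-3.0, -0.5, 0.0, 2.0)]
```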

In ML-driven energy and force predictions, different tasks are designed to approximate computational chemistry simulations efficiently. Among them, Structure to Energy and Forces (S2EF), Initial Structure to Relaxed Structure (IS2RS), and Initial Structure to Relaxed Energy (IS2RE) serve distinct yet interconnected purposes across various applications beyond catalysis [18].

S2EF focuses on predicting the total energy of an atomic configuration together with the force acting on each atom. This task is fundamental for approximating computationally expensive quantum chemistry calculations and is widely used to accelerate molecular dynamics simulations and structure relaxations. IS2RS, on the other hand, takes an initial molecular or atomic configuration and predicts the final relaxed atomic positions, which is particularly relevant for modeling stable molecular structures, understanding reaction mechanisms, and simulating physical processes that involve structural rearrangements. IS2RE bypasses the explicit relaxation process by directly predicting the energy of the final relaxed configuration from the initial structure, providing a rapid way to estimate system stability and adsorption properties.
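How the three tasks relate can be sketched with a toy harmonic dimer. An S2EF-style call returns the energy and forces of one structure; repeatedly following those forces (here with plain steepest descent) relaxes the structure, whose final positions are the IS2RS target and whose final energy is the IS2RE target. The potential, step size, and function names are hypothetical; a practical pipeline would replace `s2ef` with a trained model and typically use an optimizer such as FIRE or BFGS.

```python
import math

R0 = 1.2  # hypothetical equilibrium bond length (Å)
K = 5.0   # hypothetical force constant (eV/Å^2)


def s2ef(pos):
    """S2EF-style call: energy and per-atom forces of a toy harmonic dimer."""
    diff = [pos[0][c] - pos[1][c] for c in range(3)]
    r = math.sqrt(sum(d * d for d in diff))
    energy = 0.5 * K * (r - R0) ** 2
    f0 = [-K * (r - R0) * d / r for d in diff]
    return energy, [f0, [-f for f in f0]]


def relax(pos, step=0.05, fmax=1e-6, max_steps=10_000):
    """Steepest descent driven by S2EF forces until every force component is below fmax."""
    pos = [list(p) for p in pos]
    for _ in range(max_steps):
        _, forces = s2ef(pos)
        if max(abs(f) for row in forces for f in row) < fmax:
            break
        for i in range(len(pos)):
            for c in range(3):
                pos[i][c] += step * forces[i][c]
    energy, _ = s2ef(pos)
    return pos, energy


initial = [[0.0, 0.0, 0.0], [2.0, 0.3, 0.0]]
e_initial, f_initial = s2ef(initial)  # S2EF: energy and forces of one structure
relaxed, e_relaxed = relax(initial)   # IS2RS target: relaxed; IS2RE target: e_relaxed
bond = math.dist(relaxed[0], relaxed[1])
```

An IS2RE model would learn the mapping from `initial` to `e_relaxed` directly, skipping the loop in `relax`.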

This work specifically focuses on S2EF, given its foundational role in enabling faster and more accurate force evaluations, which are critical for large-scale molecular simulations.

Appendix A.3. Details on the Architecture of GemNet-OC

The GemNet-OC model predicts the energy and forces of atomic systems using a graph-based framework. It takes as input only the atomic numbers and spatial positions of all atoms in the system, with no additional information such as bond types. Atoms are modeled as nodes, and edges are established between atoms based on their proximity, forming a graph that encodes the system’s structural information.

As explained in Section 3.2, GemNet-OC first embeds atoms and the edges between them into high-dimensional vectors. Geometric relationships are encoded with three Bessel-based basis functions (radial, circular, and spherical) that capture pairwise distances, three-body angles, and four-body dihedral-like configurations, respectively, with polynomial envelopes ensuring smooth differentiability. These initial embeddings pass through an embedding layer and are then refined by multiple interaction layers, each of which updates both atom and edge representations used to compute energy and forces, progressively building richer chemical and geometric features. Finally, each interaction stage feeds into Output Blocks that predict individual contributions to energy and forces; the total energy and the forces on each atom are obtained by aggregating these contributions across all atoms.
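A sketch of the radial part of such a basis follows, assuming DimeNet-style radial Bessel functions e_n(d) = sqrt(2/c) · sin(nπd/c) / d multiplied by the polynomial envelope u(x) = 1 − (p+1)(p+2)/2 · x^p + p(p+2) · x^(p+1) − p(p+1)/2 · x^(p+2), with x = d/c. The cutoff c = 6 Å, six basis functions, and p = 6 are illustrative choices rather than GemNet-OC’s configuration, and the circular and spherical bases, which add angular factors, are not shown. The envelope reaches zero at the cutoff with vanishing first and second derivatives, so every basis function switches off smoothly.

```python
import math


def envelope(x, p=6):
    """Polynomial envelope on x = d / cutoff; u(1) = u'(1) = u''(1) = 0."""
    return (1.0
            - (p + 1) * (p + 2) / 2 * x ** p
            + p * (p + 2) * x ** (p + 1)
            - p * (p + 1) / 2 * x ** (p + 2))


def radial_basis(d, cutoff=6.0, num_radial=6, p=6):
    """Enveloped radial Bessel basis values for one interatomic distance d > 0."""
    if d >= cutoff:
        return [0.0] * num_radial
    x = d / cutoff
    prefactor = math.sqrt(2.0 / cutoff) * envelope(x, p) / d
    return [prefactor * math.sin(n * math.pi * x) for n in range(1, num_radial + 1)]


near_cutoff = radial_basis(5.9999)  # all values are tiny just inside the cutoff
inside = radial_basis(1.5)          # a typical bonded distance
```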

It should be noted that most ML models predict the energy and obtain forces as its negative gradient with respect to atomic positions via back-propagation. This guarantees a conservative force field, which is crucial for the stability of molecular dynamics simulations. However, equivariant neural networks such as GemNet-OC can also predict forces and other vector quantities directly, leveraging their symmetry-preserving architectures; directly predicted forces avoid the extra backward pass but are not guaranteed to be conservative. For further details on this direct force prediction approach, refer to Section 3 of the original GemNet paper.
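The difference between the two routes can be illustrated with a 2D toy: the work done by a force field around a closed loop is zero when the field is the negative gradient of an energy, but not for a field that cannot be written as a gradient. A direct force head is not structurally constrained to produce gradient fields, which is one way a direct-force model could in principle accumulate energy drift in MD. Both fields below are hypothetical examples chosen for clarity.

```python
import math


def gradient_field(x, y):
    """Conservative: F = -grad E for the energy E = x^2 + y^2."""
    return -2.0 * x, -2.0 * y


def rotational_field(x, y):
    """Non-conservative: a swirl that is not the gradient of any energy."""
    return -y, x


def loop_work(field, radius=1.0, steps=10_000):
    """Work W = sum of F . dr around a closed circle (midpoint rule per segment)."""
    work = 0.0
    for i in range(steps):
        t0 = 2 * math.pi * i / steps
        t1 = 2 * math.pi * (i + 1) / steps
        tm = 0.5 * (t0 + t1)
        fx, fy = field(radius * math.cos(tm), radius * math.sin(tm))
        dx = radius * (math.cos(t1) - math.cos(t0))
        dy = radius * (math.sin(t1) - math.sin(t0))
        work += fx * dx + fy * dy
    return work


w_conservative = loop_work(gradient_field)  # returns to the start with no net work
w_rotational = loop_work(rotational_field)  # gains about 2*pi per loop
```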

The Interaction Blocks employ a multi-level message-passing mechanism that extends traditional two-level schemes [17,28,43]. The hierarchy includes atom-to-atom interactions, which efficiently exchange information using only interatomic distances, without imposing neighbor restrictions. Atom-to-edge and edge-to-atom interactions, which were absent from the original GemNet, were added in GemNet-OC. These interactions enable detailed information flow by incorporating directional embeddings, geometric data, and learnable filters. During this process, the model computes interatomic distances, angles between neighboring edges, and dihedral angles formed by triplets of edges, enabling it to capture complex geometric relationships within the atomic system.
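The three geometric quantities can be sketched with standard vector formulas for a hypothetical four-atom chain. GemNet-OC evaluates them from edge vectors inside its interaction blocks, and its exact conventions (for example, how the dihedral-like angle between quadruplet edges is defined) may differ from the textbook dihedral used here.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def distance(pa, pb):
    """Interatomic distance |b - a|."""
    d = sub(pb, pa)
    return math.sqrt(dot(d, d))


def angle(pa, pb, pc):
    """Angle at atom b between the edges b->a and b->c, in radians."""
    u, v = sub(pa, pb), sub(pc, pb)
    cos_t = dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))
    return math.acos(max(-1.0, min(1.0, cos_t)))  # clamp guards against rounding


def dihedral(pa, pb, pc, pd):
    """Signed dihedral angle of the chain a-b-c-d (three consecutive edges), in radians."""
    b0, b1, b2 = sub(pa, pb), sub(pc, pb), sub(pd, pc)
    n1 = math.sqrt(dot(b1, b1))
    b1 = [x / n1 for x in b1]
    # Components of the two outer edges perpendicular to the central edge.
    v = [b0[i] - dot(b0, b1) * b1[i] for i in range(3)]
    w = [b2[i] - dot(b2, b1) * b1[i] for i in range(3)]
    return math.atan2(dot(cross(b1, v), w), dot(v, w))


a, b, c = [1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 1.0]
bond = distance(a, b)
bend = angle(a, b, c)
gauche = dihedral(a, b, c, [0.0, 1.0, 1.0])
trans = dihedral(a, b, c, [-1.0, 0.0, 1.0])
cis = dihedral(a, b, c, [1.0, 0.0, 1.0])
```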

Unlike many models that use a fixed distance cutoff to define interatomic connections, GemNet-OC selects a predetermined number of nearest neighbors for each atom. Although this might raise concerns about differentiability, because the neighbor set changes discontinuously when atoms swap neighbor order, the authors report no practical problems. This approach ensures a consistent neighborhood size, which improves the model’s scalability and performance across diverse systems. To further improve computational efficiency, the model uses simplified basis functions that decouple the radial and angular components: Gaussian or zeroth-order Bessel functions represent radial dependencies, while angular dependencies are captured by spherical harmonics of order zero (m = 0), which simplify to Legendre polynomials, and the two are combined through an outer product.
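Both ideas can be sketched briefly: a pure k-nearest-neighbor selection (practical implementations may also apply a distance cutoff, which is omitted here as a simplifying assumption) and Legendre polynomials evaluated by Bonnet’s recursion, since the m = 0 spherical harmonics satisfy Y_l^0(θ, φ) ∝ P_l(cos θ). The coordinates and function names are hypothetical.

```python
import math


def knn_neighbors(positions, k):
    """Indices of the k nearest other atoms for each atom (ties broken by index)."""
    neighbors = []
    for i, pi in enumerate(positions):
        ranked = sorted((math.dist(pi, pj), j) for j, pj in enumerate(positions) if j != i)
        neighbors.append([j for _, j in ranked[:k]])
    return neighbors


def legendre(l, x):
    """Legendre polynomial P_l(x) via (n + 1) P_{n+1} = (2n + 1) x P_n - n P_{n-1}."""
    p_prev, p = 1.0, x
    if l == 0:
        return p_prev
    for n in range(1, l):
        p_prev, p = p, ((2 * n + 1) * x * p - n * p_prev) / (n + 1)
    return p


# Three close atoms and one isolated atom 10 Å away.
atoms = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [10.0, 0.0, 0.0]]
graph = knn_neighbors(atoms, k=2)
```

Every atom, including the isolated one, receives exactly k neighbors, which gives the consistent neighborhood size described above. The relation is not necessarily symmetric, however: atom 3 lists atom 1 as a neighbor, but atom 1 does not list atom 3.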

GemNet-OC’s architecture includes four Interaction Blocks, each comprising 52 layers (about 22.7 million parameters in total), which perform extensive multi-level message passing. These blocks integrate geometric information such as distances, angles, and dihedral angles to progressively refine the embeddings. The Interaction Blocks are followed by five Output Blocks, each containing 22 layers (about 15.1 million parameters in total). Rather than making predictions directly, these blocks generate intermediate embeddings that are concatenated and processed by multi-layer perceptrons (MLPs) to produce the final energy and force predictions. Separate MLP pathways convert the concatenated embeddings into the output values, with a learnable MLP refining the atom embeddings to ensure an efficient information flow from embeddings to energy predictions.

In addition to the Interaction and Output Blocks, GemNet-OC contains 28 further layers, totaling approximately 3.3 million parameters, distributed across various modules. These include the initial embedding layers that initialize the atomic and edge embeddings, specialized modules that handle the radial and angular basis functions, and components that finalize the prediction outputs. Together, these layers carry information from the input embeddings through the interaction layers to the final predictions.
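Because this study freezes different sections of the network, it can help to see how much of the model each choice leaves trainable. The arithmetic below uses only the approximate counts quoted above (22.7 M, 15.1 M, and 3.3 M), assumes that these groups are disjoint and together cover the whole model (about 41.1 M parameters), and uses hypothetical group labels; the freezing schemes listed are examples and may not match the exact configurations evaluated in this work.

```python
# Approximate parameter counts in millions, as quoted in the text; assumed disjoint and exhaustive.
PARAM_GROUPS = {"interaction_blocks": 22.7, "output_blocks": 15.1, "other_layers": 3.3}


def trainable_share(frozen_groups):
    """Trainable parameters (millions) and their share of the total when the given groups are frozen."""
    total = sum(PARAM_GROUPS.values())
    trainable = sum(n for name, n in PARAM_GROUPS.items() if name not in frozen_groups)
    return trainable, trainable / total


total_params = sum(PARAM_GROUPS.values())
schemes = {
    "full fine-tuning": set(),
    "freeze interaction blocks": {"interaction_blocks"},
    "freeze output blocks": {"output_blocks"},
    "train output blocks only": {"interaction_blocks", "other_layers"},
}
for label, frozen in schemes.items():
    millions, share = trainable_share(frozen)
    print(f"{label:28s} {millions:5.1f} M trainable ({share:.0%})")
```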

Table A1.

The embedding block.


| Module | Layer Name | Type | Neurons (in→out) |
|---|---|---|---|
| Embedding Init | atom_emb.embeddings | Embedding | (83 elements → 256-dim vector) |
| | edge_emb.dense.linear | Linear + SiLU | 640 → 512 |
| Basis Embedding (Quadruplet) | mlp_rbf_qint.linear | Linear | 128 → 16 |
| | mlp_cbf_qint | Basis Embedding | (implicit) |
| | mlp_sbf_qint | Basis Embedding | (implicit) |
| Basis Embedding (Atom–Edge Interaction) | mlp_rbf_aeint.linear | Linear | 128 → 16 |
| | mlp_cbf_aeint | Basis Embedding | (implicit) |
| | mlp_rbf_eaint.linear | Linear | 128 → 16 |
| | mlp_cbf_eaint | Basis Embedding | (implicit) |
| | mlp_rbf_aint | Basis Embedding | (implicit) |
| Basis Embedding (Triplet) | mlp_rbf_tint.linear | Linear | 128 → 16 |
| | mlp_cbf_tint | Basis Embedding | (implicit) |
| Misc/Basis Embedding | mlp_rbf_h.linear | Linear | 128 → 16 |
| | mlp_rbf_out.linear | Linear | 128 → 16 |

Table A2.

The interaction block.


| Module | Layer Name | Type | Neurons (in→out) |
|---|---|---|---|
| Core Update (edge) | dense_ca.linear | Linear + SiLU | 512 → 512 |
| Triplet Interaction (trip_interaction) | dense_ba.linear | Linear + SiLU | 512 → 512 |
| | mlp_rbf.linear | Linear | 16 → 512 |
| | mlp_cbf.bilinear.linear | Efficient Bilinear | 1024 → 64 |
| | down_projection.linear | Linear + SiLU | 512 → 64 |
| | up_projection_ca.linear | Linear + SiLU | 64 → 512 |
| | up_projection_ac.linear | Linear + SiLU | 64 → 512 |