Improved Real-Time Detection Transformer with Low-Frequency Feature Integrator and Token Statistics Self-Attention for Automated Grading of Stropharia rugoso-annulata Mushroom

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Source

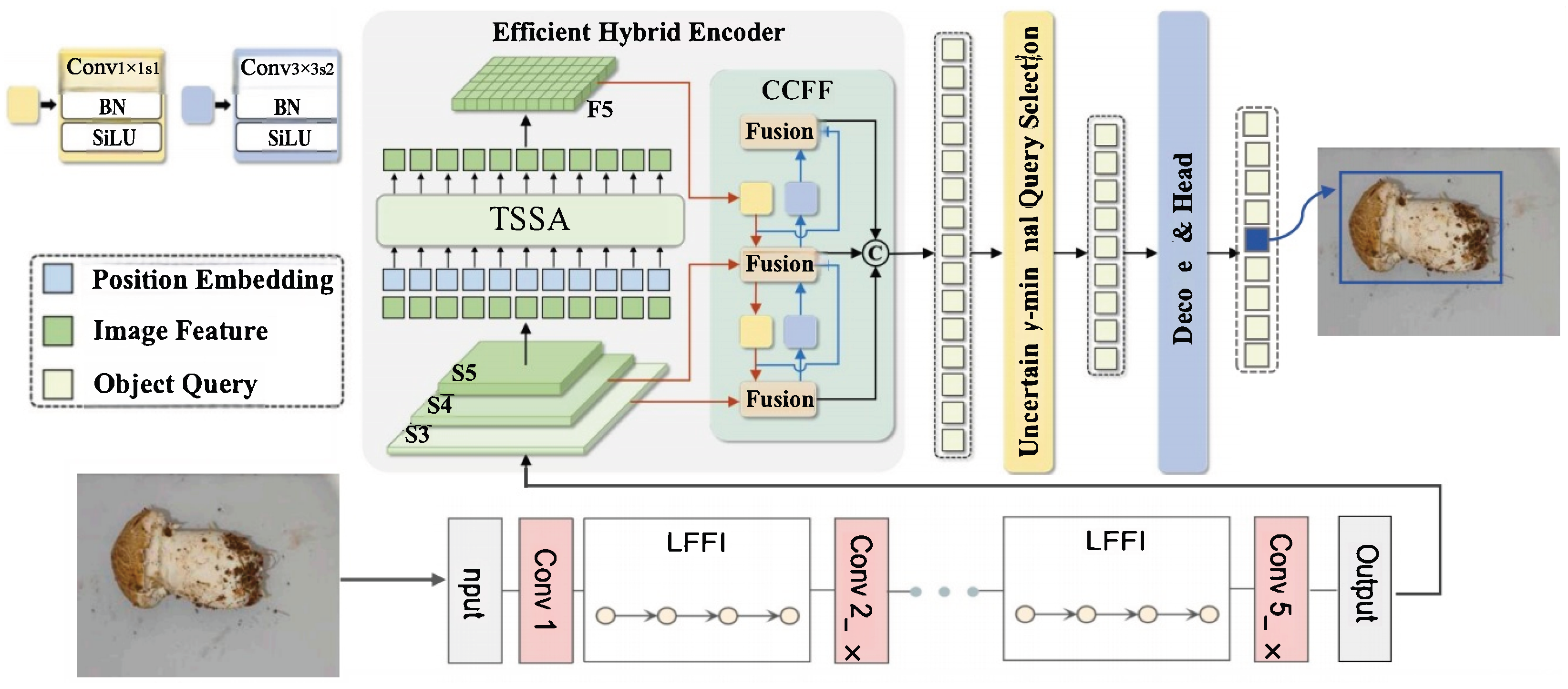

2.2. The Network Structure of the Improved RT-DETR

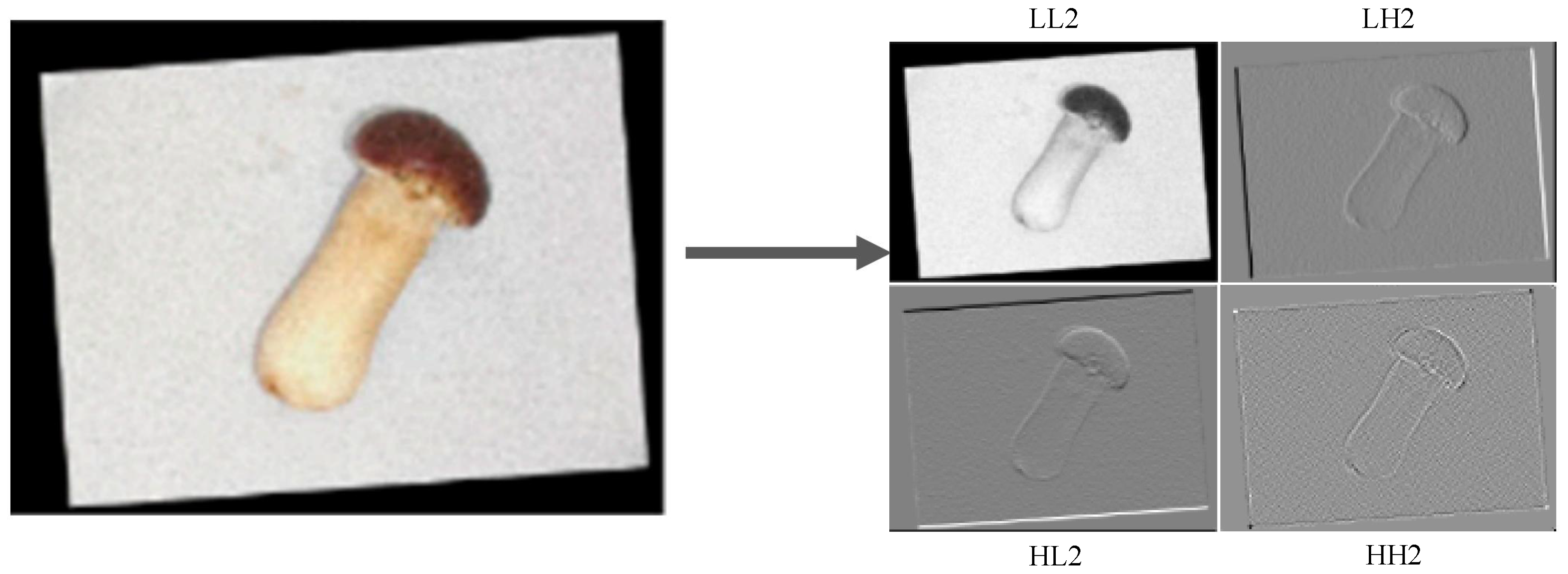

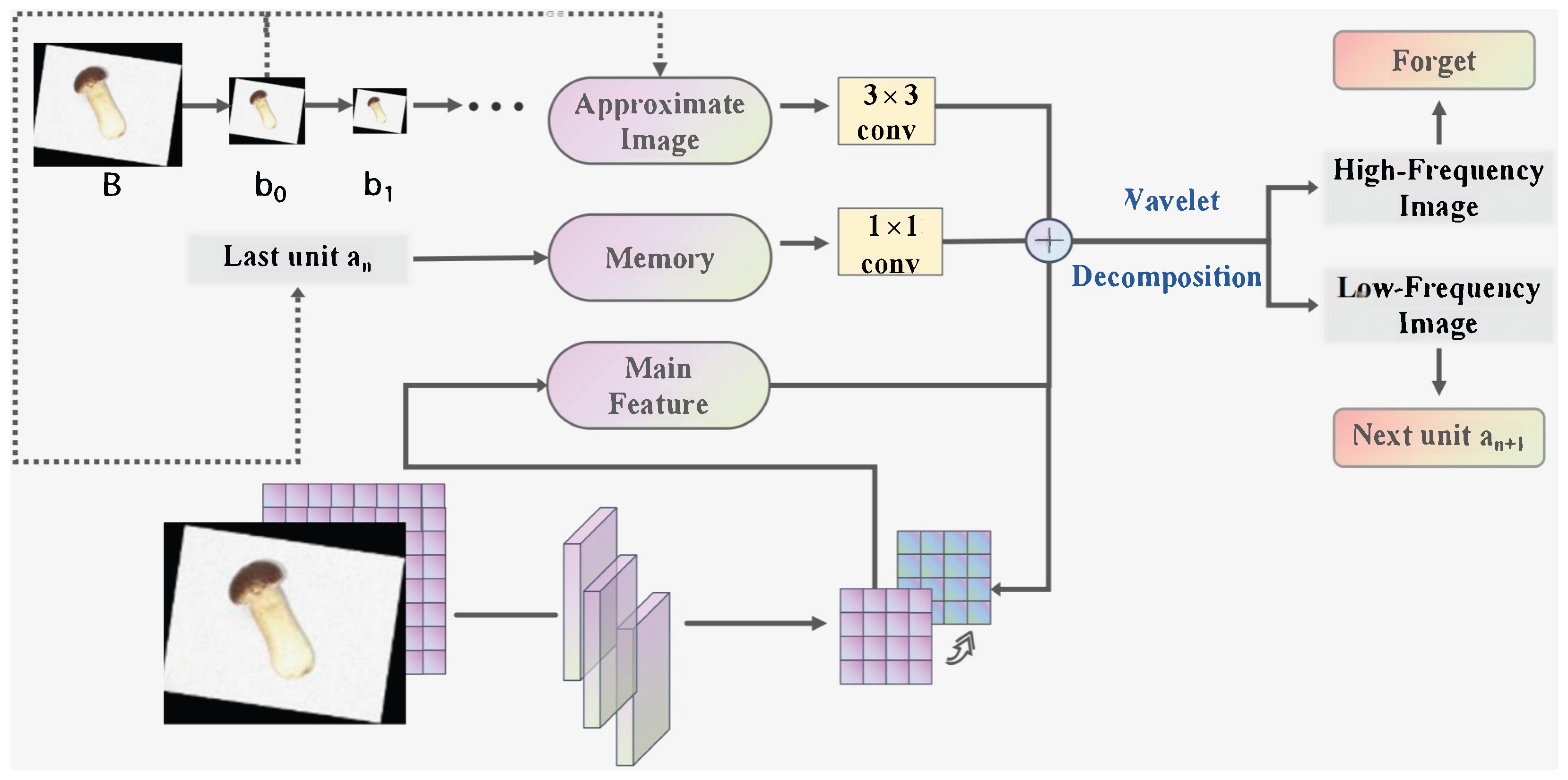

2.2.1. LFFI (Low-Frequency Feature Integrator) Module

- Low frequency (LL): Approximates the original image, retaining global structural information.

- Horizontal high frequency (LH): Captures horizontal edge details.

- Vertical high frequency (HL): Captures vertical edge details.

- Diagonal high frequency (HH): Captures diagonal edge details.
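The four sub-bands can be illustrated with one level of a 2D Haar transform. The sketch below assumes the Haar wavelet, since this section does not name the wavelet family, and uses a hypothetical helper name, `haar_dwt2`. Each output value is computed from the four pixels of one non-overlapping 2x2 block, and the LH/HL labels follow the convention in the list above. Libraries may order, name, or sign the detail bands differently; PyWavelets, for example, returns them as horizontal, vertical and diagonal details.

```python
import numpy as np


def haar_dwt2(x: np.ndarray):
    """One level of an orthonormal 2D Haar DWT over the last two axes of x.

    Height and width must be even. Returns (LL, LH, HL, HH), each with
    half the height and width of x.
    """
    a = x[..., 0::2, 0::2]  # top-left pixel of every 2x2 block
    b = x[..., 0::2, 1::2]  # top-right
    c = x[..., 1::2, 0::2]  # bottom-left
    d = x[..., 1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2  # scaled block average: coarse approximation
    lh = (a + b - c - d) / 2  # top row minus bottom row: responds to horizontal edges
    hl = (a - b + c - d) / 2  # left column minus right column: responds to vertical edges
    hh = (a - b - c + d) / 2  # diagonal difference: responds to diagonal structure
    return ll, lh, hl, hh
```

For the linear ramp `np.arange(16.0).reshape(4, 4)`, every 2x2 block gives LH = -4, HL = -1 and HH = 0, and the squared values of the four sub-bands sum to that of the input, as expected for an orthonormal transform.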

- Input components: Each unit n receives three inputs:
  - Memory feature: the output of the previous unit, representing the low-frequency information accumulated from prior decompositions. For the initial unit (), the memory feature is initialized as .
  - Approximate image: the low-frequency (LL) sub-band from the n-th wavelet decomposition of the original image, containing global structural information at scale n.
  - Main feature map: the output of the n-th convolutional downsampling layer in the backbone, rich in high-frequency detail but lacking low-frequency context.

- Low-frequency fusion: The approximate image and the memory feature are first passed through convolutional layers that adjust their channel dimensions to match the main feature map, without altering spatial resolution. The adjusted features are then added element-wise to the main feature map, producing an updated main feature map that combines low-frequency global structure with high-frequency local detail.

- Memory update: The fused feature map produced by the low-frequency fusion step undergoes a further wavelet decomposition. Only the low-frequency (LL) sub-band is retained; the other three sub-bands are discarded. This LL sub-band becomes the memory feature passed to the next unit, so that low-frequency information is preserved cumulatively across scales.
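The three steps of one LFFI unit can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a Haar wavelet, uses bias-free 1x1 convolutions for the channel alignment (the text only says "convolutional layers"), fills learned weights and backbone outputs with random stand-ins, and starts the first unit from a zero memory. The names `haar_ll`, `conv1x1`, `lffi_unit` and `run_chain` are hypothetical.

```python
import numpy as np


def haar_ll(x: np.ndarray) -> np.ndarray:
    """LL sub-band of one orthonormal 2D Haar DWT level; x is (C, H, W) with even H and W."""
    return (x[:, 0::2, 0::2] + x[:, 0::2, 1::2] + x[:, 1::2, 0::2] + x[:, 1::2, 1::2]) / 2


def conv1x1(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """Bias-free pointwise convolution: (C_in, H, W) -> (C_out, H, W); weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def lffi_unit(memory, approx, main, w_mem, w_approx):
    """One hypothetical LFFI unit.

    memory: (C_m, H, W) low-frequency memory from the previous unit
    approx: (C_a, H, W) LL sub-band of the original image at this scale
    main:   (C, H, W)   output of the backbone downsampling layer at this scale
    Returns the fused main feature map and the memory for the next unit.
    """
    # Low-frequency fusion: align channels (spatial size unchanged), then add element-wise.
    fused = main + conv1x1(memory, w_mem) + conv1x1(approx, w_approx)
    # Memory update: decompose the fused map and keep only its LL sub-band.
    new_memory = haar_ll(fused)
    return fused, new_memory


def run_chain(image, channels, seed=0):
    """Chain one LFFI unit per backbone stage, using random stand-ins for learned tensors."""
    rng = np.random.default_rng(seed)
    approx, memory, outputs = image, None, []
    for c in channels:
        approx = haar_ll(approx)  # LL of the n-th decomposition of the original image
        _, h, w = approx.shape
        if memory is None:
            memory = np.zeros_like(approx)  # assumed zero memory for the first unit
        main = rng.standard_normal((c, h, w))  # stand-in for the n-th downsampling output
        w_mem = 0.1 * rng.standard_normal((c, memory.shape[0]))
        w_approx = 0.1 * rng.standard_normal((c, approx.shape[0]))
        fused, memory = lffi_unit(memory, approx, main, w_mem, w_approx)
        outputs.append(fused)
    return outputs
```

With a 3 x 64 x 64 input and hypothetical stage widths `[16, 32, 64]`, `run_chain` returns fused maps of shape (16, 32, 32), (32, 16, 16) and (64, 8, 8). The chain works because the LL sub-band of unit n's fused map has the same spatial size as both the next approximate image and the next backbone stage, which is why the memory can be added after only a channel adjustment.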

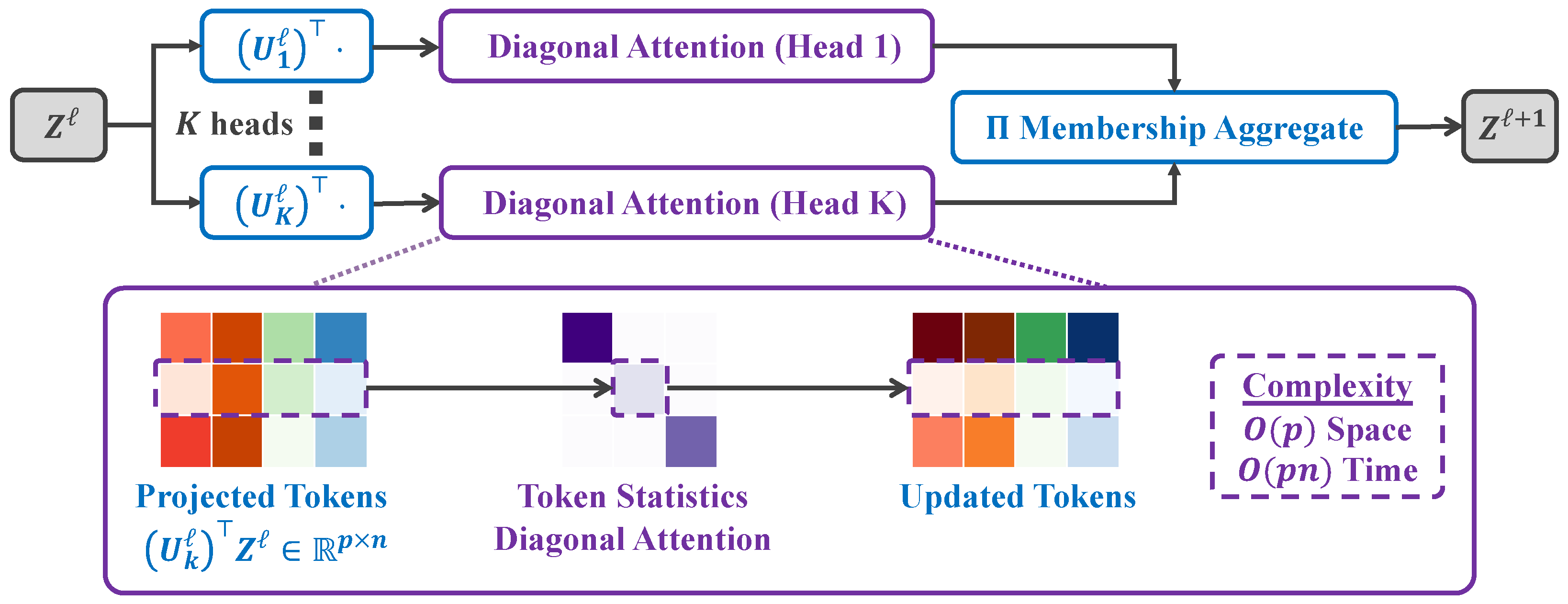

2.2.2. The Token Statistics Self-Attention (TSSA)

3. Experiment

3.1. Experiment Environment Setting

3.2. Evaluation Metrics

3.3. Ablation Experiments

3.3.1. Impact of the LFFI Module

3.3.2. Impact of the TSSA Module

3.3.3. Synergistic Effects of LFFI and TSSA

3.4. Comparison Experiments

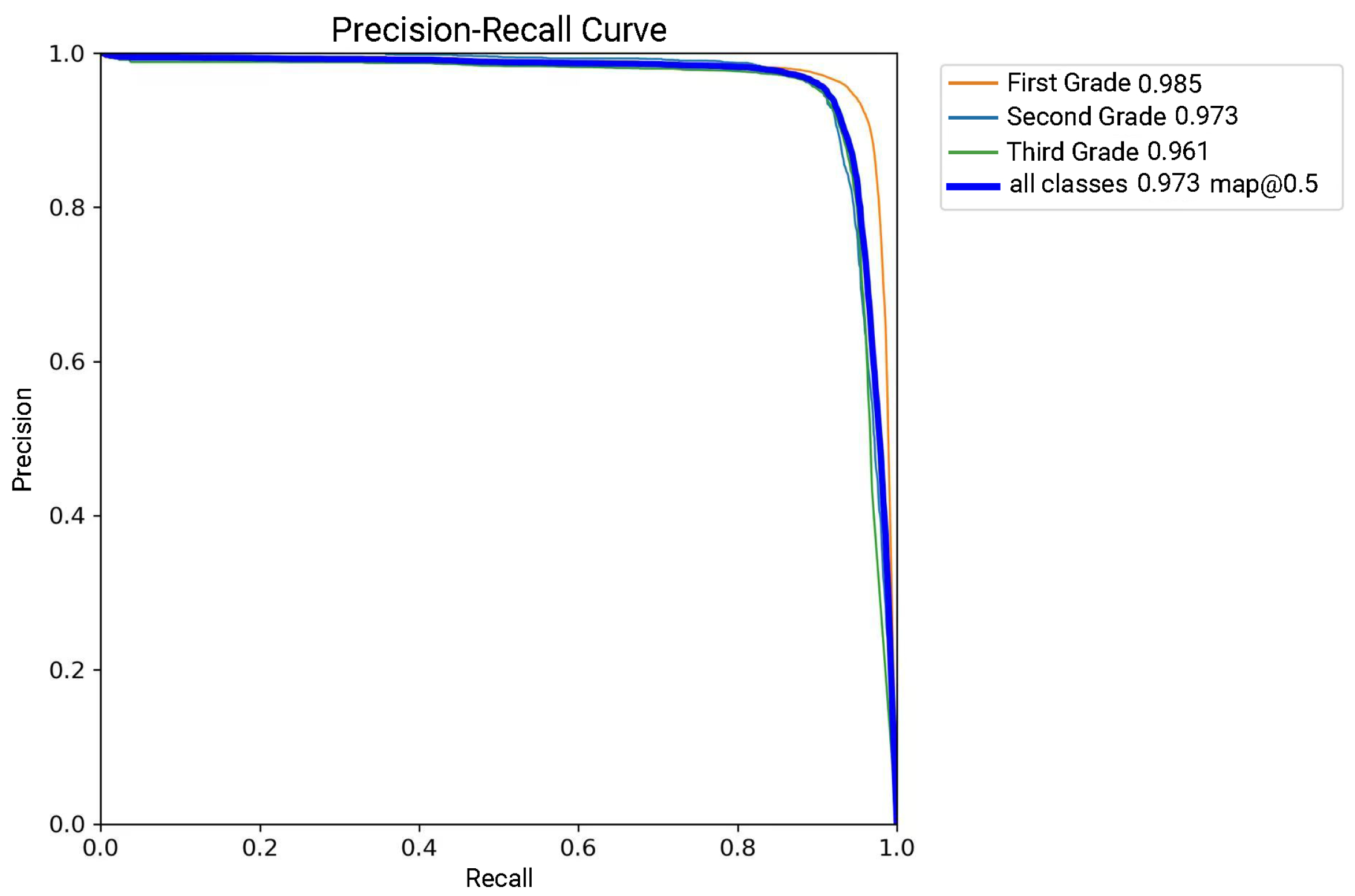

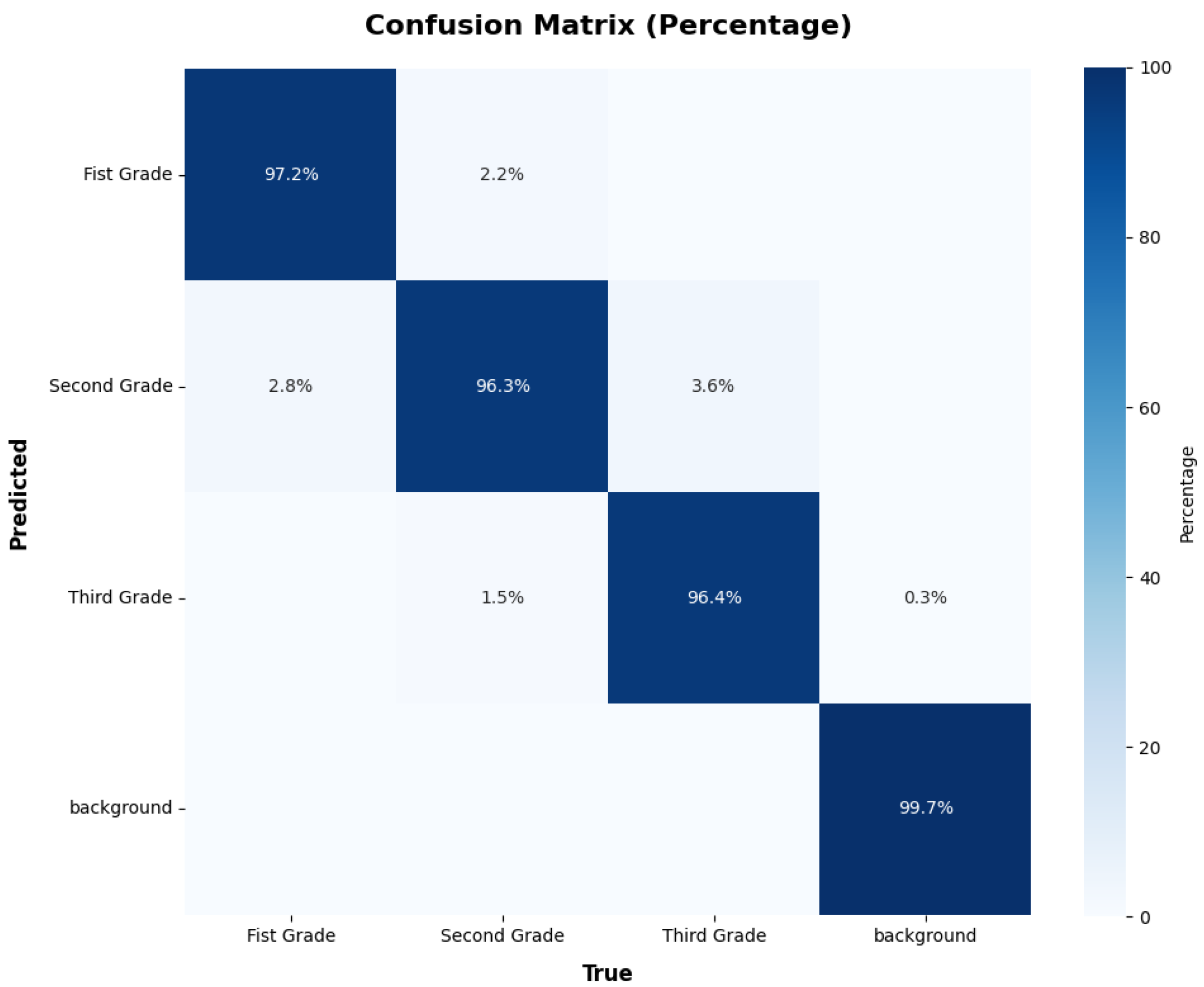

3.4.1. Accuracy Performance

3.4.2. Computational Efficiency

3.4.3. Industrial Deployability

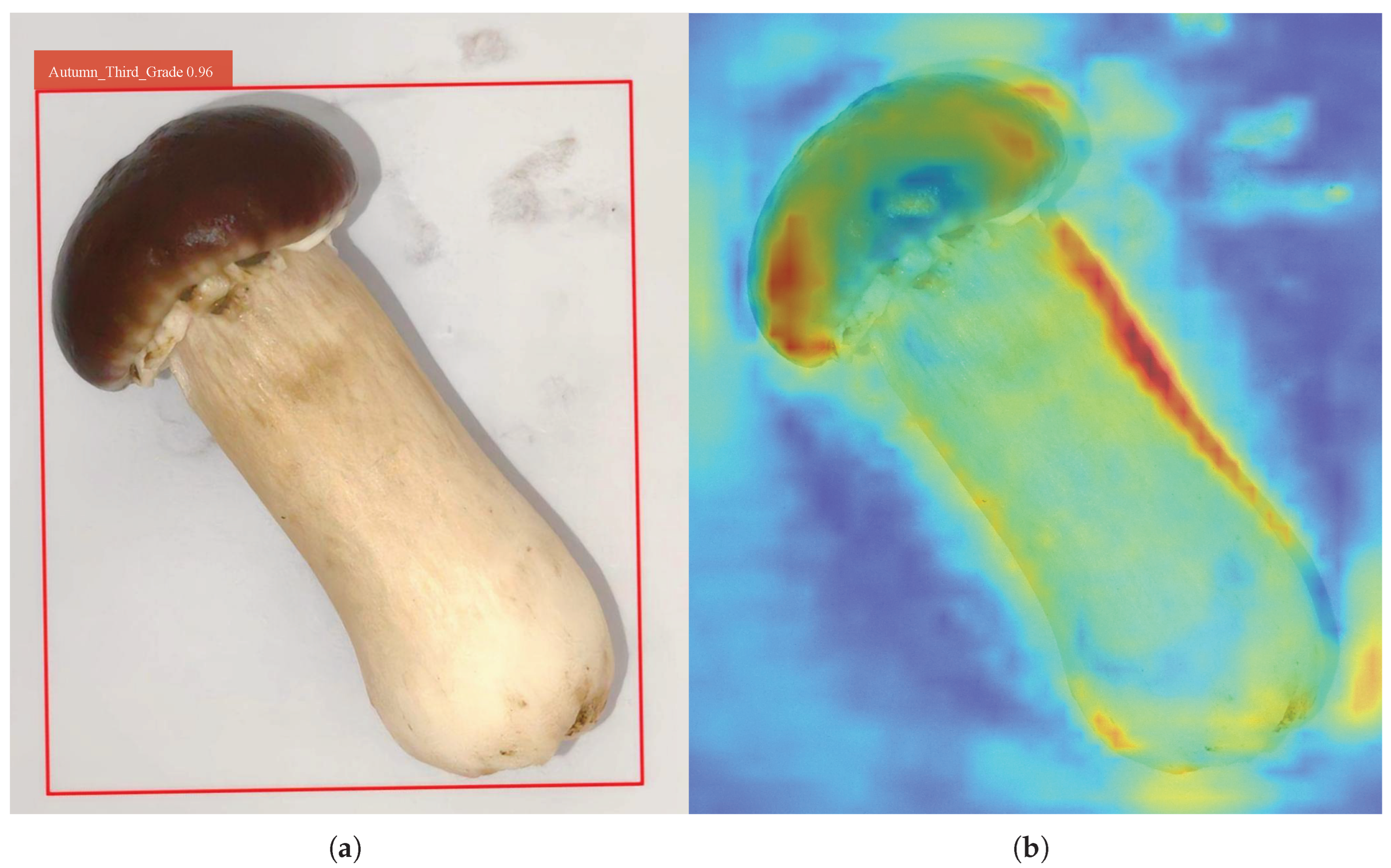

3.5. Visualization of Detection Results

4. Conclusions and Future Work

4.1. Conclusions

4.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, W.; Chen, W.; Wang, J.; Li, Z.; Zhang, Z.; Wu, D.; Yan, M.; Ma, H.; Yang, Y. Structure–Activity Relationship of Novel ACE Inhibitory Undecapeptides from Stropharia rugosoannulata by Molecular Interactions and Activity Analyses. Foods 2023, 12, 3461.

- Huang, L.; He, C.; Si, C.; Shi, H.; Duan, J. Nutritional, bioactive, and flavor components of giant Stropharia (Stropharia rugoso-annulata): A review. J. Fungi 2023, 9, 792.

- Jiang, Y.; Zhao, Q.; Deng, H.; Li, Y.; Gong, D.; Huang, X.; Long, D.; Zhang, Y. The nutrients and volatile compounds in Stropharia rugoso-annulata by three drying treatments. Foods 2023, 12, 2077.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wang, C.Y.; Liao, H.Y.M. YOLOv1 to YOLOv10: The fastest and most accurate real-time object detection systems. APSIPA Trans. Signal Inf. Process. 2024, 13, e29.

- Liu, R.M.; Su, W.H. APHS-YOLO: A Lightweight Model for Real-Time Detection and Classification of Stropharia rugoso-annulata. Foods 2024, 13, 1710.

- Lv, M.; Kong, L.; Zhang, Q.Y.; Su, W.H. Automated Discrimination of Appearance Quality Grade of Mushroom (Stropharia rugoso-annulata) Using Computer Vision-Based Air-Blown System. Sensors 2025, 25, 4482.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Zhao, Y.; Xie, Q. Review of Deep Learning Applications for Detecting Special Components in Agricultural Products. Computers 2025, 14, 309.

- He, W.; Zhang, Y.; Xu, T.; An, T.; Liang, Y.; Zhang, B. Object detection for medical image analysis: Insights from the RT-DETR model. In Proceedings of the 2025 International Conference on Artificial Intelligence and Computational Intelligence, Kuala Lumpur, Malaysia, 14–16 February 2025; pp. 415–420.

- Yao, J.; Zhu, Z.; Yuan, M.; Li, L.; Wang, M. The Detection of Maize Leaf Disease Based on an Improved Real-Time Detection Transformer Model. Symmetry 2025, 17, 808.

- Liu, B.; Jin, J.; Zhang, Y.; Sun, C. WRRT-DETR: Weather-robust RT-DETR for drone-view object detection in adverse weather. Drones 2025, 9, 369.

- Sun, B.; Tang, H.; Gao, L.; Bi, K.; Wen, J. RTDETR-MARD: A Multi-Scale Adaptive Real-Time Framework for Floating Waste Detection in Aquatic Environments. J. Mar. Sci. Eng. 2025, 13, 996.

- Wu, D.; Peng, K.; Wang, S.; Leung, V.C. Spatial–temporal graph attention gated recurrent transformer network for traffic flow forecasting. IEEE Internet Things J. 2023, 11, 14267–14281.

- Wang, S.; Li, B.Z.; Khabsa, M.; Fang, H.; Ma, H. Linformer: Self-attention with linear complexity. arXiv 2020, arXiv:2006.04768.

- Choromanski, K.; Likhosherstov, V.; Dohan, D.; Song, X.; Gane, A.; Sarlos, T.; Hawkins, P.; Davis, J.; Mohiuddin, A.; Kaiser, L.; et al. Rethinking attention with performers. arXiv 2020, arXiv:2009.14794.

- Xiong, Y.; Zeng, Z.; Chakraborty, R.; Tan, M.; Fung, G.; Li, Y.; Singh, V. Nyströmformer: A nyström-based algorithm for approximating self-attention. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 11–15 October 2021; Volume 35, pp. 14138–14148.

- Katharopoulos, A.; Vyas, A.; Pappas, N.; Fleuret, F. Transformers are rnns: Fast autoregressive transformers with linear attention. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 5156–5165.

- Wu, Z.; Ding, T.; Lu, Y.; Pai, D.; Zhang, J.; Wang, W.; Yu, Y.; Ma, Y.; Haeffele, B.D. Token statistics transformer: Linear-time attention via variational rate reduction. arXiv 2024, arXiv:2412.17810.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Kim, Y.; Kim, S. Automation and optimization of food process using CNN and six-axis robotic arm. Foods 2024, 13, 3826.

- Wu, F.; Wu, J.; Kong, Y.; Yang, C.; Yang, G.; Shu, H.; Carrault, G.; Senhadji, L. Multiscale low-frequency memory network for improved feature extraction in convolutional neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 20–27 February 2024; Volume 38, pp. 5967–5975.

- Li, Y.; Liu, Z.; Yang, J.; Zhang, H. Wavelet transform feature enhancement for semantic segmentation of remote sensing images. Remote Sens. 2023, 15, 5644.

- Yannan, W.; Shudong, Z.; Hui, L. Study of image compression based on wavelet transform. In Proceedings of the 2013 Fourth International Conference on Intelligent Systems Design and Engineering Applications, Zhangjiajie, China, 6–7 November 2013; IEEE: New York, NY, USA, 2013; pp. 575–578.

- Xie, A.; Zhang, Y.; Wu, H.; Chen, M. Monitoring the Aging and Edible Safety of Pork in Postmortem Storage Based on HSI and Wavelet Transform. Foods 2024, 13, 1903.

- Benedetto, J.J.; Li, S. The theory of multiresolution analysis frames and applications to filter banks. Appl. Comput. Harmon. Anal. 1998, 5, 389–427.

- Jawerth, B.; Sweldens, W. An overview of wavelet based multiresolution analyses. SIAM Rev. 1994, 36, 377–412.

- Liu, H.; Mi, X.; Li, Y. Smart deep learning based wind speed prediction model using wavelet packet decomposition, convolutional neural network and convolutional long short term memory network. Energy Convers. Manag. 2018, 166, 120–131.

- Sifuzzaman, M.; Islam, M.R.; Ali, M.Z. Application of wavelet transform and its advantages compared to Fourier transform. J. Phys. Sci. 2009, 13, 121–134.

- Zhang, D. Wavelet transform. In Fundamentals of Image Data Mining: Analysis, Features, Classification and Retrieval; Springer: Berlin/Heidelberg, Germany, 2019; pp. 35–44.

- Franco, J.; Bernabé, G.; Fernández, J.; Acacio, M.E. A parallel implementation of the 2D wavelet transform using CUDA. In Proceedings of the 2009 17th Euromicro International Conference on Parallel, Distributed and Network-based Processing, Weimar, Germany, 18–20 February 2009; IEEE: New York, NY, USA, 2009; pp. 111–118.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

| Grade | Train | Validation | Test | Total | Ratio (%) |
|---|---|---|---|---|---|
| First Grade | 2500 | 300 | 300 | 3100 | 33.8 |
| Second Grade | 2700 | 350 | 350 | 3400 | 37.1 |
| Third Grade | 2136 | 267 | 267 | 2670 | 29.1 |
| Total | 7336 | 917 | 917 | 9170 | 100 |
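The totals and class ratios in the dataset table can be re-derived from the per-split counts. The short check below (the names `SPLITS` and `summarize` are illustrative) also shows that, over the whole dataset, the train/validation/test split is exactly 8:1:1 (7336/917/917 of 9170 images), although the per-grade proportions deviate slightly from that ratio.

```python
# Per-grade image counts (train, validation, test), copied from the dataset table.
SPLITS = {
    "First Grade": (2500, 300, 300),
    "Second Grade": (2700, 350, 350),
    "Third Grade": (2136, 267, 267),
}


def summarize(splits):
    """Return per-grade totals, the grand total, per-grade shares (%), per-split counts and fractions."""
    totals = {grade: sum(counts) for grade, counts in splits.items()}
    grand_total = sum(totals.values())
    shares = {grade: round(100 * t / grand_total, 1) for grade, t in totals.items()}
    per_split = [sum(counts[i] for counts in splits.values()) for i in range(3)]
    fractions = [n / grand_total for n in per_split]
    return totals, grand_total, shares, per_split, fractions


totals, grand_total, shares, per_split, fractions = summarize(SPLITS)
```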

| Grade | RDHP | RLDS |
|---|---|---|
| First Grade | 1.5∼2.5 | 0∼1.5 |
| Second Grade | 1.0∼1.5 | 1.5∼2.5 |
| Third Grade | 0∼1.0 | >2.5 |
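As a hypothetical illustration of how the RDHP and RLDS thresholds in the grading table could be applied to measured values, the sketch below grades each criterion separately and keeps the worse of the two grades. Three choices here are assumptions not stated in the table: the combination rule, the assignment of shared boundary values (1.0, 1.5 and 2.5) to the better grade, and treating RDHP above 2.5 as out of range. The function names are illustrative only.

```python
def grade_from_rdhp(rdhp: float) -> int:
    """Grade implied by RDHP alone (1 = First Grade); boundary values go to the better grade (assumption)."""
    if 1.5 <= rdhp <= 2.5:
        return 1
    if 1.0 <= rdhp < 1.5:
        return 2
    if 0.0 <= rdhp < 1.0:
        return 3
    raise ValueError(f"RDHP {rdhp} is outside the ranges listed in the table")


def grade_from_rlds(rlds: float) -> int:
    """Grade implied by RLDS alone (lower is better); boundary values go to the better grade (assumption)."""
    if rlds < 0.0:
        raise ValueError("RLDS must be non-negative")
    if rlds <= 1.5:
        return 1
    if rlds <= 2.5:
        return 2
    return 3


def assign_grade(rdhp: float, rlds: float) -> int:
    """Hypothetical combination rule: a sample receives the worse of its two per-criterion grades."""
    return max(grade_from_rdhp(rdhp), grade_from_rlds(rlds))
```

Under these assumptions, `assign_grade(2.0, 1.0)` yields First Grade (1), while `assign_grade(2.0, 3.0)` yields Third Grade (3) because the RLDS criterion dominates.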

| Hyperparameter | Configuration |
|---|---|
| Optimizer | SGD |
| Batch Size | 32 |
| Epochs | 150 |
| Image Size | 640 × 640 |
| Learning Rate | 0.01 |
| Workers | 8 |

| RT-DETR | AIFI | TSSA | LFFI | Params (M) | FLOPs (G) | FPS | Precision | mAP (0.5:0.95) |
|---|---|---|---|---|---|---|---|---|
| ✓ | ✓ | ✗ | ✗ | 20.2 | 58.9 | 217 | 0.932 | 0.917 |
| ✓ | ✓ | ✗ | ✓ | 20.5 | 61.3 | 208 | 0.961 | 0.947 |
| ✓ | ✗ | ✓ | ✗ | 17.8 | 40.1 | 267 | 0.949 | 0.924 |
| ✓ | ✗ | ✓ | ✓ | 18.2 | 42.8 | 262 | 0.972 | 0.952 |
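The module contributions in the ablation table can be read off as simple differences. The sketch below (names are illustrative) computes the mAP (0.5:0.95) change from adding LFFI under each attention module, and from replacing AIFI with TSSA with and without LFFI; it only re-derives numbers already in the table.

```python
# (encoder attention module, LFFI enabled) -> (precision, mAP@0.5:0.95), copied from the ablation table
RESULTS = {
    ("AIFI", False): (0.932, 0.917),
    ("AIFI", True): (0.961, 0.947),
    ("TSSA", False): (0.949, 0.924),
    ("TSSA", True): (0.972, 0.952),
}


def map_gain(base, variant):
    """Absolute mAP(0.5:0.95) difference between two ablation configurations, rounded to 3 decimals."""
    return round(RESULTS[variant][1] - RESULTS[base][1], 3)


# Effect of adding LFFI while keeping the attention module fixed
lffi_gain = {attn: map_gain((attn, False), (attn, True)) for attn in ("AIFI", "TSSA")}
# Effect of replacing AIFI with TSSA, with and without LFFI
tssa_gain = {lffi: map_gain(("AIFI", lffi), ("TSSA", lffi)) for lffi in (False, True)}
# Full model versus the RT-DETR baseline
full_gain = map_gain(("AIFI", False), ("TSSA", True))
```

This gives an LFFI gain of +0.030 with AIFI and +0.028 with TSSA, a TSSA gain of +0.007 without LFFI and +0.005 with it, and +0.035 for the full model over the baseline.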

| Model | Params (M) | FLOPs (G) | FPS | PMU | Precision | mAP (0.5:0.95) |
|---|---|---|---|---|---|---|
| Improved RT-DETR | 18.2 | 42.8 | 262 | 1.1 | 0.972 | 0.952 |
| APHS-YOLO | 5.5 | 21.4 | 230 | 1.2 | 0.963 | 0.944 |
| RT-DETR | 20.2 | 58.9 | 217 | 2.2 | 0.932 | 0.917 |
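To put the efficiency columns of the comparison table in proportion, the sketch below expresses the improved model's parameters, FLOPs and FPS as percentage changes relative to each comparison model. It uses only the table values (the names `MODELS` and `relative_change` are illustrative); PMU and the accuracy columns are left out for brevity.

```python
# Efficiency columns copied from the comparison table
MODELS = {
    "Improved RT-DETR": {"params_m": 18.2, "flops_g": 42.8, "fps": 262},
    "APHS-YOLO": {"params_m": 5.5, "flops_g": 21.4, "fps": 230},
    "RT-DETR": {"params_m": 20.2, "flops_g": 58.9, "fps": 217},
}


def relative_change(model, reference):
    """Percentage change of each efficiency metric of `model` relative to `reference`, rounded to 0.1."""
    m, r = MODELS[model], MODELS[reference]
    return {k: round(100 * (m[k] - r[k]) / r[k], 1) for k in m}


vs_rtdetr = relative_change("Improved RT-DETR", "RT-DETR")
vs_aphs = relative_change("Improved RT-DETR", "APHS-YOLO")
```

Relative to RT-DETR, the improved model has about 9.9% fewer parameters, 27.3% fewer FLOPs and 20.7% higher FPS. Relative to APHS-YOLO, it has roughly 3.3 times the parameters and twice the FLOPs, yet a 13.9% higher FPS in the reported measurements.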

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Y.-H.; Duan, S.-Y.; Su, W.-H. Improved Real-Time Detection Transformer with Low-Frequency Feature Integrator and Token Statistics Self-Attention for Automated Grading of Stropharia rugoso-annulata Mushroom. Foods 2025, 14, 3581. https://doi.org/10.3390/foods14203581
