1. Introduction

Chinese liquor is one of the most popular distillates worldwide and has a long history of over 6000 years [1]. According to the National Bureau of Statistics, approximately 7.2 billion liters of Chinese liquor were consumed in 2021, with sales of US $90 billion [2]. Chinese liquor is a traditional alcoholic beverage, generally produced from grains using traditional methods, including fermentation, distillation, storage, and blending [3]. The product obtained after distillation and storage without blending is called base liquor [4]. Almost all commercial Chinese liquors are blended using base liquors and specific blending techniques [5]. Although manufacturing processes differ, base liquors determine the quality of commercial liquors [6]. Because base liquor quality varies, quality also varies greatly among commercial liquors [7]. Therefore, evaluating base liquor quality is necessary for the quality control of commercial liquors.

The main components of Chinese liquors are alcohol and water, accounting for 98% of the total weight. Trace components, including esters, aldehydes, ketones, phenols, acids, nitrogen compounds, and sulfides, make up less than 2% of the weight but contribute to the complex aroma of the liquors [1]. The most common methods for assessing the quality of base liquors are sensory evaluation and chemical/spectroscopic analysis [8]. However, the accuracy and objectivity of sensory evaluation cannot be guaranteed because experts may be influenced by their health conditions, emotional states, or environmental factors [9]. Analysis methods, such as chromatography [10] and spectroscopy [11], are demanding and time-consuming. Base liquors that are inaccurately assessed are downgraded or destroyed, causing unnecessary waste. Therefore, it is necessary to develop an objective, convenient, rapid, and accurate method to detect the quality of base liquor.

An electronic nose (E-nose) is a device that simulates human olfactory perception using gas sensors. It consists of an array of gas sensors, a signal processing system, and a pattern recognition system [12]. Machine learning (ML) is a rapidly growing technology. It refers to algorithms that automatically learn information from input data [13]. Many researchers have distinguished Chinese commercial liquors using ML methods and E-nose data. Qi et al. [14] used an E-nose and a support vector machine (SVM) classifier to distinguish six types of Chinese liquors with 90.8% accuracy. Jing et al. [15] employed an E-nose and a multilinear classifier to classify liquors with similar aromas and different prices, achieving an accuracy of 97.22%. Zhang et al. [16] proposed a channel attention convolutional neural network (CA-CNN) for the authenticity identification of Chinese liquor using an E-nose and obtained 98.53% prediction accuracy. Zhao et al. [17] presented a deep learning method with a stacked sparse autoencoder (SSAE) to classify seven brands of Chinese liquor utilizing E-nose data, achieving 96.67% prediction accuracy. Although the E-nose has shown good performance in distinguishing Chinese commercial liquors, few studies have focused on the classification of base liquors, whose microconstituents are generally similar and differ only subtly across aging durations and aging environments. In addition, it is necessary to develop a method for the simultaneous identification of base and commercial liquors for practical applications.

In this study, we propose a novel ML framework for identifying base liquors and commercial liquors using multidimensional signals from an E-nose. The contributions of this paper can be summarized as follows.

A novel machine learning framework (ResNet-GBM), consisting of a deep residual network (ResNet18) backbone and a light gradient boosting machine (LightGBM) classifier, is proposed for the quality detection of base liquor and commercial liquor. The ResNet18 backbone is a powerful feature extractor that automatically extracts a sufficient number of comprehensive and significant features from the raw, multidimensional E-nose signals. The LightGBM is employed as the classifier to strengthen the identification of liquor quality.

Ablation and comprehensive comparative experiments are conducted on three datasets to analyze the contribution of the models’ components and the performance of the ResNet-GBM framework using five evaluation metrics (accuracy, sensitivity, precision, F1 score, and kappa score). A base liquor dataset and a commercial liquor dataset are used in the comparative experiments to assess the applicability of the proposed ResNet-GBM framework. In addition, a mixed dataset containing base liquor and commercial liquor data is used to evaluate the proposed framework’s robustness, generalization ability, and performance for the identification of Chinese liquors in a complex application scenario.

The proposed method for the quality identification of base liquor and commercial liquor enables rapid detection and has high accuracy, providing a potential tool for quality control and promoting the practical application of E-nose devices.

2. Materials and Methods

2.1. Chinese Liquor Samples

All Chinese liquor samples (light flavor) were provided by the Shanxi Luxian Liquor Industry Co., Ltd. We tested nine types of Chinese liquor (six types of base liquors and three types of commercial liquors). The six types of base liquors with different aging durations were denoted as BL (year), where BL represents the base liquor, and (year) represents the aging duration. Thus, the six types of base liquors were BL (13), BL (11), BL (8), BL (6), BL (5), and BL (3). The details are listed in Table 1.

The three commercial liquors (CL) were blended using different proportions of the six base liquors. The details are listed in Table 2.

2.2. Instrument and Experiment

A PEN3 E-nose (Airsense Analytics GmbH, Schwerin, Germany) with an array of ten metal oxide semiconductor (MOS) sensors (Table 3) was employed to collect the characteristic flavor information of the liquors.

All experiments were carried out in a clean and well-ventilated testing room of the authors’ laboratory at a temperature of 26 ± 2 °C and relative humidity of 50 ± 2%. The experiments lasted 25 days, and 9 different individual samples of each type were measured every day following the same procedure (25 days × 9 individual samples = 225 individual samples per type). These individual samples came from different production batches (the base liquor samples from different liquor storages, and the commercial liquor samples from different bottles) and were provided directly by the manufacturer. Each sample was measured once and replaced after use, which ensured that the experiments contained no repeated measurements. Therefore, the experiments comprised 2025 independent measurements (9 types of liquor samples × 225 individual samples per type). Before the measurement, 3 mL of each sample was placed into a single hermetic vial (20 mL) and sealed for 3 min to allow the liquor’s volatile compounds to disperse into the vial.

The acquisition of the volatile compound profile was conducted in a well-ventilated location to minimize baseline fluctuations and interference from other volatile compounds. The zero gas (a baseline) was produced using two active charcoal filters (Filter 1 and Filter 2 in Figure 1) to ensure that the reference air and the air used for the samples had the same source.

The workflow of the E-nose includes a collection stage and a flushing stage. Before collecting the data, an automatic zero-point trim was conducted for the E-nose by pumping clean air through Filter 2 for 5 s. Then, the volatile compounds of the liquor sample were pumped into the sensor chamber at a flow rate of 600 mL/min to contact the sensor array for 100 s. During the collection stage, the gas molecules were adsorbed on the surface of the sensors, changing the sensors’ conductivity due to the redox reaction on the surface of the sensor’s active element. The sensors’ conductivity eventually stabilized at a constant value when the adsorption was saturated. Throughout the 100 s collection stage, the sensor responses were recorded at one reading per second. In the flushing stage, clean air was pumped into the E-nose to flush the analytes. The collection and flushing stages were repeated to acquire the raw response data of the nine liquor samples. The workflow of the E-nose followed the manufacturer’s instructions in the manual of the PEN3 E-nose [19].

2.3. Datasets

Three datasets (Dataset A, Dataset B, and Dataset C) were established using the multidimensional signals from the E-nose system. Dataset A was used to evaluate the performance of the proposed method for the classification of the six base liquors with different aging durations. Dataset B was utilized to test the effectiveness of the proposed method for the classification of commercial liquors with differing quality. Dataset C (mixed dataset) consisted of data from all liquor samples and was used to evaluate the ability of the proposed method to distinguish the base liquors and commercial liquors simultaneously.

Dataset A: This dataset comprised 1350 samples (25 days × 6 base liquors × 9 individual samples) of the six base liquors. The dataset for the SVM, random forest (RF), k-nearest neighbor (KNN), extreme gradient boosting (XGBoost), multidimensional scaling-support vector machine (MDS-SVM), and back-propagation neural network (BPNN) contained 27,000 samples (1350 samples × 20 measurements of the last 20 s) × 10 (number of sensors). The dataset for the ResNet-GBM framework contained 135,000 samples (1350 samples × 100 measurements during 100 s) × 10 sensors.

Dataset B: This dataset comprised 675 samples (25 days × 3 commercial liquors × 9 individual samples) of the three commercial liquors. The dataset for the SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN contained 13,500 samples (675 samples × 20 measurements of the last 20 s) × 10 (number of sensors). The dataset for the ResNet-GBM framework contained 67,500 samples (675 samples × 100 measurements during 100 s) × 10 sensors.

Dataset C: This dataset comprised 2025 samples (25 days × 9 liquors × 9 individual samples) of the base liquors and commercial liquors. The dataset for the SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN contained 40,500 samples (2025 samples × 20 measurements of the last 20 s) × 10 (number of sensors). The dataset for the ResNet-GBM framework contained 202,500 samples (2025 samples × 100 measurements during 100 s) × 10 sensors.
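The dataset sizes above follow directly from the experimental design, and the arithmetic can be checked with a short sketch. The constant and function names below are illustrative only; the counts are the ones stated in Sections 2.2 and 2.3.

```python
# Counts taken from Sections 2.2 and 2.3; all names are illustrative.
DAYS = 25
SAMPLES_PER_DAY = 9    # individual samples of each liquor type per day
SENSORS = 10           # MOS sensors in the E-nose array
FULL_WINDOW = 100      # one reading per second during the 100 s collection stage
STEADY_WINDOW = 20     # last 20 s, used by the baseline models


def dataset_shapes(n_types):
    """Return (measurements, baseline rows, ResNet-GBM rows) for n_types liquors."""
    measurements = DAYS * n_types * SAMPLES_PER_DAY
    return (measurements,
            measurements * STEADY_WINDOW,
            measurements * FULL_WINDOW)


shapes = {name: dataset_shapes(n) for name, n in (("A", 6), ("B", 3), ("C", 9))}
for name, (m, base_rows, full_rows) in shapes.items():
    print(f"Dataset {name}: {m} measurements, "
          f"{base_rows} x {SENSORS} (baselines), "
          f"{full_rows} x {SENSORS} (ResNet-GBM)")
```

Each tuple holds the number of independent measurements, the number of rows available to the baseline models, and the number of rows available to the ResNet-GBM framework; every row has one column per sensor.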

2.4. Principal Component Analysis

Principal component analysis (PCA) is a common multivariate statistical algorithm for dimensionality reduction (also known as feature extraction). It reduces the complexity of the data set while retaining most of the feature information [20]. PCA accomplishes dimensionality reduction by transforming the original data into a new coordinate system whose axes, called the principal components, are ordered by the amount of variance they explain [21]. Thus, a sample can be represented by a few principal components. Retaining the first few principal components whose cumulative variance contribution reaches 95% is considered reasonable [22].
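As a minimal sketch of the 95% rule, PCA can be computed from the singular value decomposition of the centred data, keeping the smallest number of components whose cumulative explained variance reaches the threshold. The function name and the synthetic data are assumptions made for illustration; they are not the E-nose measurements or the authors' implementation.

```python
import numpy as np


def pca_components_for_variance(X, threshold=0.95):
    """Project X onto the fewest principal components reaching `threshold`.

    Returns (scores, explained_ratio, k).
    """
    Xc = X - X.mean(axis=0)                       # centre each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S**2 / np.sum(S**2)               # variance ratio per component
    cumulative = np.cumsum(explained)
    k = int(np.searchsorted(cumulative, threshold)) + 1
    k = min(k, len(S))                            # guard against rounding at 1.0
    scores = Xc @ Vt[:k].T                        # coordinates in the new system
    return scores, explained, k


# Synthetic stand-in for sensor features (NOT the paper's data):
# two latent factors mixed into ten correlated channels plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

scores, explained, k = pca_components_for_variance(X)
print(k, scores.shape)
```

Because the synthetic data has only two underlying factors, at most two components are needed here; real sensor data may require more.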

2.5. Light Gradient Boosting Machine

LightGBM is a gradient boosting decision tree (GBDT) framework proposed by Microsoft Research in 2017 [23]. It improves on conventional GBDT with gradient-based one-side sampling (GOSS), which reduces the number of data instances, and exclusive feature bundling (EFB), which reduces the number of features, and it uses histogram-based algorithms to speed up the training process [24]. The LightGBM algorithm has the advantages of fast training speed, low memory overhead, a reduced risk of overfitting, and automatic feature processing. It also supports parallel processing and is suitable for large sample sizes and high-dimensional data [25].
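To illustrate the idea behind GOSS, the sketch below keeps the instances with the largest absolute gradients, randomly samples a fraction of the remaining ones, and up-weights the sampled instances by (1 - a) / b so that the gradient statistics stay approximately unbiased. It is a simplified illustration of the sampling step only, not LightGBM's internal code; the function name, the default rates, and the toy gradients are assumptions.

```python
import random


def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return {instance index: weight} for the instances kept by GOSS."""
    n = len(gradients)
    # Rank instances by absolute gradient, largest first.
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    top, rest = order[:n_top], order[n_top:]

    # Randomly keep a fraction of the small-gradient instances.
    rng = random.Random(seed)
    sampled = rng.sample(rest, n_other)

    # Up-weight the sampled small-gradient instances to compensate.
    amplify = (1.0 - top_rate) / other_rate
    weights = {i: 1.0 for i in top}
    weights.update({i: amplify for i in sampled})
    return weights


# Toy gradients: magnitude grows with the index, sign alternates.
grads = [(-1) ** i * (i / 100.0) for i in range(100)]
weights = goss_sample(grads)
print(len(weights), "of", len(grads), "instances kept")
```

With these rates, 30 of the 100 toy instances are kept: the 20 with the largest gradients at weight 1 and 10 randomly chosen others at the amplified weight.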

2.6. ResNet

The ResNet is a commonly used convolutional neural network [

26] consisting of a stack of residual blocks. It is not prone to gradient fading [

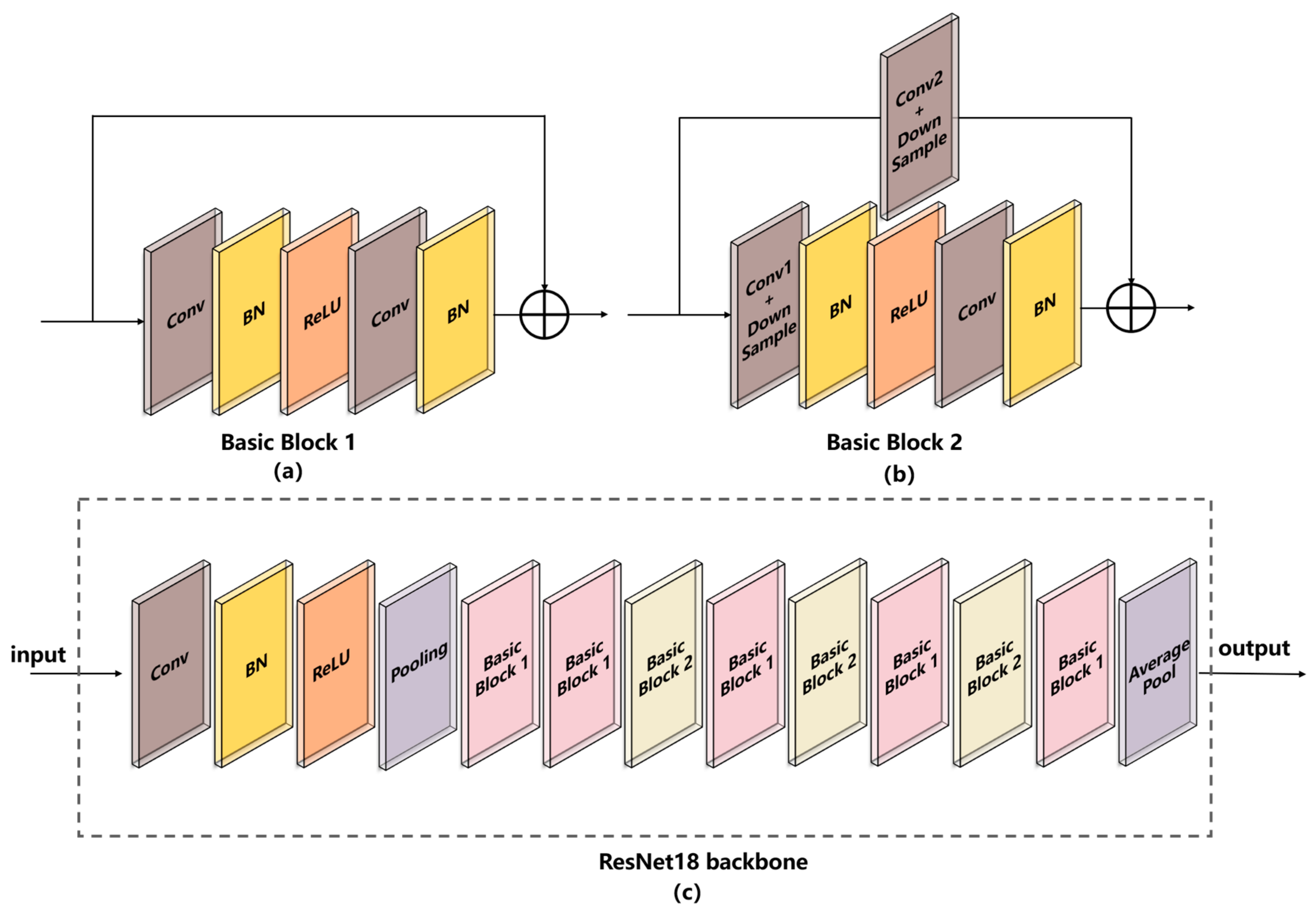

27]. The residual block is shown in

Figure 2, where x and y are the input and output of the block, respectively.

and

represent the weights of the first and second layers, respectively. The curvilinear arrows represent shortcut connections.

represents the first layer’s output after linear transformation and activation. After the linear transformation by the

weight layer,

and the original input x are added to obtain

, which is activated by a ReLU function to derive output y. Due to the residual blocks, ResNet can optimize the network layer, reducing redundancy [

28].
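The residual block described above can be sketched in a few lines, with dense matrices standing in for the convolutional weight layers. The names `W1` and `W2` and the vector size are placeholders chosen for illustration; the sketch shows only the shortcut arithmetic, not the authors' network.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def residual_block(x, W1, W2):
    """Two weight layers with an identity shortcut around them."""
    hidden = relu(W1 @ x)     # first layer: linear transformation + ReLU
    residual = W2 @ hidden    # second layer: linear transformation only
    return relu(residual + x) # shortcut adds x before the final ReLU


rng = np.random.default_rng(0)
x = rng.normal(size=8)
W1 = rng.normal(size=(8, 8))
W2 = np.zeros((8, 8))         # a zero residual branch leaves only the shortcut

y = residual_block(x, W1, W2)
print(np.allclose(y, relu(x)))
```

Setting the second weight matrix to zero shows why the shortcut helps: the block then simply passes its (activated) input through, so adding a block cannot make the representation worse than the identity mapping.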

2.7. Model Evaluation Metrics

Five evaluation metrics (accuracy, sensitivity, precision, F1 score, and kappa score) were used to assess model performance. Four parameters were used to calculate the metrics: True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN).

Accuracy is defined as the proportion of correctly classified samples (TP samples and TN samples) to the total number of samples; it is calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Sensitivity represents the proportion of the TP samples to the total number of positive samples (TP samples and FN samples); it is defined as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

Precision (P) represents the proportion of the TP samples to the total number of positive predictions (TP samples and FP samples); the formula is as follows:

$$P = \frac{TP}{TP + FP}$$

The F1 score is defined as the harmonic mean of the precision and sensitivity; it is calculated as follows:

$$F1 = \frac{2 \times P \times \text{Sensitivity}}{P + \text{Sensitivity}}$$

The kappa score is an evaluation metric for multi-class classification models that measures the consistency between categories and the classification accuracy; it is calculated as follows:

$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ represents the overall classification accuracy, and $p_e$ is the expected agreement.
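A minimal sketch of how the five metrics can be computed from a multi-class confusion matrix is given below. Per-class sensitivity, precision, and F1 are macro-averaged here; the averaging scheme is an assumption, since the text does not state which one was used, and the confusion matrix is a toy example rather than experimental data.

```python
def classification_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(cm[i]) for i in range(k)]                        # true counts
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]   # predicted counts

    sens, prec, f1 = [], [], []
    for c in range(k):
        tp = cm[c][c]
        fn = row_sums[c] - tp
        fp = col_sums[c] - tp
        s = tp / (tp + fn) if tp + fn else 0.0
        p = tp / (tp + fp) if tp + fp else 0.0
        sens.append(s)
        prec.append(p)
        f1.append(2 * p * s / (p + s) if p + s else 0.0)

    p_o = sum(cm[c][c] for c in range(k)) / total                    # observed agreement
    p_e = sum(row_sums[c] * col_sums[c] for c in range(k)) / total**2
    return {
        "accuracy": p_o,
        "sensitivity": sum(sens) / k,   # macro average (assumed)
        "precision": sum(prec) / k,
        "f1": sum(f1) / k,
        "kappa": (p_o - p_e) / (1 - p_e),
    }


toy_cm = [[9, 1],
          [2, 8]]   # toy two-class example, not experimental data
metrics = classification_metrics(toy_cm)
print(metrics)
```

For the toy matrix, 17 of 20 samples are classified correctly and the expected agreement is 0.5, which gives a kappa score of 0.7.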

3. Proposed Method

An ML framework called ResNet-GBM, consisting of a ResNet18 backbone and a LightGBM classifier, was proposed to process the multidimensional signals from the E-nose. Figure 3 shows the flowchart of ResNet-GBM.

The raw data obtained from the 10 channels was a 100 (measurement time of 100 s) × 10 (number of MOS sensors) matrix. Due to the multi-channel input of the ResNet18 model, a 10-channel input was constructed by converting the raw E-nose data from the sensor array. The 100 raw response points of each sensor were converted into a 10 × 10 matrix, and the 10 matrices of the sensor array were converted into a 10 × 10 × 10 matrix used for the 10-channel input of the ResNet18 model.
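The conversion described above can be sketched with NumPy. The row-major order in which each sensor's 100 points fill its 10 × 10 matrix is an assumption, because the text does not specify the fill order, and the array contents are placeholders rather than real sensor responses.

```python
import numpy as np

# Placeholder for one measurement: 100 time points x 10 sensors.
raw = np.arange(100 * 10, dtype=np.float32).reshape(100, 10)

# Put the sensor axis first, then fold each sensor's 100 points into a
# 10 x 10 matrix (row-major fill assumed), giving a 10-channel input.
channels = raw.T.reshape(10, 10, 10)   # (sensor, row, column)

print(channels.shape)
```

Under this layout, the value at row r and column c of channel s is the response of sensor s at time step 10 r + c.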

As shown in Figure 4c, the ResNet18 backbone consists of six stages: Conv 1, Layer 1, Layer 2, Layer 3, Layer 4, and Pool. Conv 1 consists of a convolutional layer, a batch normalization layer, a ReLU layer, and a pooling layer. The kernel size, stride size, and padding size of the convolutional layer are 7 × 7, 2, and 3, respectively. The kernel size, stride size, and padding size of the pooling layer are 3 × 3, 2, and 1, respectively. Layer 1 consists of two Basic Blocks 1. Layer 2, Layer 3, and Layer 4 consist of Basic Block 1 and Basic Block 2. The two Basic Blocks are presented in Figure 4a,b.

There are two convolutional layers in Basic Block 1, and the kernel size, stride size, and padding size of the two convolutional layers have the same values: 3 × 3, 1, and 1, respectively. Basic Block 2 has three convolutional layers, two of which are down-sampling layers. The kernel size, stride size, and padding size of the down-sampling convolutional layer 1 are 3 × 3, 2, and 1, respectively. The kernel size, stride size, and padding size of the down-sampling convolutional layer 2 are 1 × 1, 2, and 1, respectively. The kernel size, stride size, and padding size of the third convolutional layer are 3 × 3, 1, and 1, respectively. The details of the ResNet18 backbone are listed in Table 4.
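The effect of these kernel, stride, and padding settings on the spatial size of the feature maps can be traced with the standard output-size formula, floor((n + 2p - k) / s) + 1. The sketch below follows only the main path and assumes the 10 × 10 input described earlier in this section; the stage labels are illustrative, and stride-1 3 × 3 convolutions with padding 1 are omitted because they leave the size unchanged.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (label, kernel, stride, padding) for the layers that set each stage's size.
stages = [
    ("Conv 1: 7x7 convolution", 7, 2, 3),
    ("Conv 1: 3x3 pooling", 3, 2, 1),
    ("Layer 1: 3x3, stride 1", 3, 1, 1),
    ("Layer 2: 3x3 down-sampling", 3, 2, 1),
    ("Layer 3: 3x3 down-sampling", 3, 2, 1),
    ("Layer 4: 3x3 down-sampling", 3, 2, 1),
]

size = 10   # 10 x 10 input per channel (assumed from the input construction)
trace = []
for label, k, s, p in stages:
    size = conv_out(size, k, s, p)
    trace.append((label, size))
    print(f"{label}: {size} x {size}")
```

Under these assumptions the feature maps shrink to 1 × 1 by the last stage, so the final pooling stage yields one value per output channel.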

The 512 features extracted by the ResNet18 backbone were input into the LightGBM model. In the LightGBM, a grid search method (GSM) was employed to derive the main parameters (num_leaves and learning_rate) and obtain the best performance of the classifier. After training the proposed model using the training set, the test set was used to evaluate the effectiveness of the trained model for the quality detection of base liquors and commercial liquors.
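A grid search of this kind can be sketched as an exhaustive loop over all parameter combinations. The candidate values below are the ones reported for Experiment II; `toy_score` is a hypothetical stand-in for training the classifier and measuring validation accuracy, constructed so that the search has a known optimum, and it does not reproduce any experimental result.

```python
from itertools import product


def grid_search(score_fn, grid):
    """Evaluate score_fn on every combination in grid and return the best."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid = {
    "num_leaves": [8, 16, 32, 64, 128],
    "learning_rate": [0.001, 0.01, 0.1, 0.5, 1],
}


def toy_score(num_leaves, learning_rate):
    # Hypothetical score surface that peaks at (16, 0.1) by construction.
    # In practice this would train a classifier and return validation accuracy.
    return -abs(num_leaves - 16) / 128 - abs(learning_rate - 0.1)


best_params, best_score = grid_search(toy_score, grid)
print(best_params)
```

The search evaluates all 25 combinations; replacing `toy_score` with a function that fits the classifier on the training set and scores it on held-out data gives the usual workflow.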

4. Results and Discussion

4.1. Principal Component Analysis

Since the measurement phase lasted 100 s, and the response value of each sensor was stable after 80 s, the last 20 response points were chosen as the input features for PCA. As shown in Figure 5, the x-axis, y-axis, and z-axis represent principal component 1 (PC1), principal component 2 (PC2), and principal component 3 (PC3), respectively. The percentages of the variance of PC1, PC2, and PC3 were 69.4%, 15.3%, and 11.5%, respectively. The cumulative variance of PC1, PC2, and PC3 was 96.2%, indicating that sufficient sample information was contained in the three principal components. However, the subplot indicated that the clusters were in close proximity, i.e., there was overlap. During dimensionality reduction, some principal components with a small contribution rate were overlooked, but these components may have contained critical information. Therefore, PCA is not an effective method for separating the classes in this study.

4.2. Experiments

Four sets of experiments were conducted: Experiments I, II, III, and IV. Experiment I was an ablation study to verify the contributions of the components of the proposed ResNet-GBM. Experiments II to IV compared the proposed ResNet-GBM framework with six other methods, namely four common machine learning methods (SVM, RF, KNN, and XGBoost) and two methods proposed by other authors (MDS-SVM and BPNN), on Dataset A, Dataset B, and Dataset C, respectively. MDS-SVM is a pattern recognition method based on multidimensional scaling and SVM. It was developed by Li et al. [29] to classify ten brands of Chinese liquors. BPNN is a multi-layered feedforward neural network that was used by Liu et al. [30] to distinguish different wines based on their properties. The models were implemented using NVIDIA GeForce MX250 graphics cards and the open-source PyTorch framework.

4.2.1. Experiment I: Ablation Study of ResNet-GBM

The comparative results of the ablation experiments on Datasets A, B, and C are displayed in Table 5. The proposed ResNet-GBM had the best performance for the classification of the Chinese liquor on the three datasets, with accuracies of 0.9704, 0.9814, and 0.9803, respectively. The standalone LightGBM performed unsatisfactorily and could not identify the liquors accurately, possibly because it could not mine a sufficient number of features. The ResNet18 exhibited better performance than the LightGBM, indicating that the automatic feature extraction capability significantly improved the classification performance. The proposed ResNet-GBM model combines the merits of ResNet18 and the LightGBM classifier, reducing the possibility of overfitting during training and improving the model’s classification performance.

4.2.2. Experiment II: Performance of the Proposed Framework on Dataset A

Dataset A was used in Experiment II. It contains 6 types of base liquor with different aging durations. Dataset A was divided into training sets (data from the first twenty days: 20 days × 6 liquor samples × 9 individual samples) and test sets (data from the last five days: 5 days × 6 liquor samples × 9 individual samples).
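The day-based split can be sketched as follows. Splitting by acquisition day, rather than at random, keeps all measurements from the last five days out of training. The record layout, the liquor labels, and the function name are illustrative assumptions; only the counts come from the text.

```python
BASE_LIQUORS = ["BL13", "BL11", "BL8", "BL6", "BL5", "BL3"]


def split_by_day(records, train_days=20):
    """Measurements from days 1..train_days form the training set; the rest form the test set."""
    train = [r for r in records if r["day"] <= train_days]
    test = [r for r in records if r["day"] > train_days]
    return train, test


# One placeholder record per measurement: 25 days x 6 liquors x 9 samples.
records = [
    {"day": day, "liquor": liquor, "sample": sample}
    for day in range(1, 26)
    for liquor in BASE_LIQUORS
    for sample in range(9)
]

train, test = split_by_day(records)
print(len(train), len(test))
```

For Dataset A this gives 1080 training measurements and 270 test measurements; the same function applies to Datasets B and C with three and nine liquor types.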

The radial basis function (RBF) was selected as the kernel function of the SVM model. The SVM model includes two important parameters: the penalty coefficient (C) and kernel function coefficient (gamma). A GSM with C ∈ [10, 50, 100, 200, 500] and gamma ∈ [0.1, 1.0, 5.0, 10.0, 20.0] was employed to determine the optimal parameters. The C was 100, and the gamma was 1. The number of decision trees and the number of randomly selected features obtained from each decision tree () in the RF model were selected using the equation , where M represents the number of features. In our experiments, 10 decision trees ( = 10) were used. In the KNN model, the n_neighbors is a critical parameter; it was set to 3. The parameters of the XGBoost model were similar to those of the LightGBM model. The number of leaves (num_leaves) and the learning rate (learning_rate) are important parameters of the LightGBM model. The GSM was employed to search the parameters; the range of values for num_leaves was [8, 16, 32, 64, 128], and the learning_rate was [0.001, 0.01, 0.1, 0.5, 1]. The num_leaves was 16, and the learning_rate was 0.1.

The classification results derived from the seven models are displayed in Table 6. Five evaluation metrics were used to evaluate the classification models. As shown in Table 6, the proposed ResNet-GBM obtained the best performance for all evaluation metrics, with an accuracy of 0.9704, a sensitivity of 0.9704, a precision of 0.9716, an F1 score of 0.9710, and a kappa score of 0.9644. SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN achieved accuracies of 0.3175, 0.4018, 0.4053, 0.4246, 0.7852, and 0.8963, respectively. The performances of these six models were unsatisfactory, likely because they failed to extract a sufficient number of deep features. The experimental results demonstrated the effectiveness and superior performance of the proposed ResNet-GBM framework in identifying different base liquors.

4.2.3. Experiment III: Performance of the Proposed Framework on Dataset B

Experiment III used commercial liquor samples to assess the generalization performance of the models. Dataset B was divided into training sets (data from the first twenty days: 20 days × 3 liquor samples × 9 individual samples) and test sets (data from the last five days: 5 days × 3 liquor samples × 9 individual samples).

The parameters of the models were the same as those in Experiment II. The results are listed in Table 7. The ResNet-GBM model exhibited the best results for the commercial liquors, with an accuracy of 0.9814, a sensitivity of 0.9814, a precision of 0.9825, an F1 score of 0.9815, and a kappa score of 0.9722. SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN achieved accuracies of 0.3649, 0.6351, 0.5088, 0.6105, 0.8235, and 0.9118, respectively. The results showed that the proposed model could accurately detect different grades of commercial liquor. The classification performance of the model was better for the commercial liquor than for the base liquor.

4.2.4. Experiment IV: Performance of the Proposed Framework on Dataset C

Experiment IV evaluated the generalization performance of the ResNet-GBM model on Dataset C (a mixture of base liquors and commercial liquors). Dataset C was also divided into training sets (data from the first twenty days: 20 days × 9 liquor samples × 9 individual samples) and test sets (data from the last five days: 5 days × 9 liquor samples × 9 individual samples).

The classification results of the seven models are listed in Table 8. The ResNet-GBM framework obtained the best results for the simultaneous classification of the base liquors and commercial liquors, with an accuracy of 0.9803, a sensitivity of 0.9803, a precision of 0.9819, an F1 score of 0.9801, and a kappa score of 0.9778. SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN achieved accuracies of 0.3389, 0.4468, 0.4304, 0.4901, 0.8148, and 0.9074, respectively. The comparison results indicate that the ResNet-GBM framework was better at extracting significant features from the multidimensional sensor signals and provided superior performance for the classification of base liquors, commercial liquors, and a mixture of both.

We further analyzed the results of each sample and selected the comprehensive evaluation metric F1 score to assess the performance of ResNet-GBM. The results are listed in Table 9. The proposed model achieved similar performances in Experiment II and Experiment IV for the classification of the base liquors (BL13, BL11, BL8, BL6, BL5, and BL3). The F1 scores of CL1 and CL2 in Experiment IV were 1.0000, higher than those in Experiment III. The comparison results showed that the classification performance of the proposed model for commercial liquor was higher when base liquors and commercial liquors were analyzed simultaneously. This result indicated that the model could mine deeper features based on the base liquor samples, which contributed to the high classification accuracy of the commercial liquors in Experiment IV.

5. Conclusions

We proposed a ResNet-GBM framework to identify base liquors and commercial liquors with different qualities using a MOS-based E-nose. The main conclusions are as follows:

PCA was used to distinguish nine liquor samples using the E-nose data. High coincidence points in the PCA result indicated that the odor information of different liquors was highly similar. The unsatisfactory PCA results indicated that this method could not distinguish liquors with different qualities, and meaningful feature information was lost during dimensionality reduction.

A ResNet-GBM framework consisting of the ResNet18 backbone and the LightGBM classifier was proposed for the quality detection of base liquors and commercial liquors. Ablation experiments were conducted to determine the contributions of the ResNet-GBM’s components for identification. The results indicated the effectiveness of the proposed framework. The significant features contained in the multidimensional signals were extracted by the ResNet18 backbone. The LightGBM classifier strengthened the identification ability of the ResNet model, and the proposed model achieved classification accuracies of 0.9704, 0.9814, and 0.9803 for Datasets A, B, and C, respectively.

The superiority of the proposed framework was demonstrated by comparing it with six other methods (SVM, RF, KNN, XGBoost, MDS-SVM, and BPNN) using the three datasets. The comparative experiments proved that the proposed framework had higher classification performance and better generalization ability than the other models using the multidimensional E-nose signals as input.

The F1 scores of the ResNet-GBM model for all samples were compared using the three datasets (base liquor dataset, commercial liquor dataset, and mixed dataset). The proposed ResNet-GBM model achieved better performance for the classification of commercial liquor using the mixed dataset (1.0000 for CL1, CL2, and CL3) than the commercial liquor dataset (0.9730 for CL1, 0.9714 for CL2, and 1.0000 for CL3). The results indicated that the excellent performance for distinguishing base liquors resulted in a higher classification accuracy of commercial liquors when base liquors and commercial liquors were analyzed simultaneously.

The results were encouraging and demonstrated that a deep learning framework could be used to identify base liquors and commercial liquors with different qualities using E-nose data. This approach provides a potential tool for the quality control of liquor and promotes the practical application of E-nose devices. This deep learning framework is expected to have broad application value for food quality control.

Author Contributions

Conceptualization, B.L. and Y.G.; methodology, B.L. and Y.G.; validation, B.L. and Y.G.; formal analysis, B.L.; writing—original draft preparation, B.L.; writing—review and editing, Y.G.; visualization, Y.G.; supervision, Y.G.; funding acquisition, Y.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [Grant No. 62276174].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ResNet | Residual Network |
| LightGBM | Light Gradient Boosting Machine |
| ResNet-GBM | A ResNet18 backbone with a LightGBM classifier |
| E-nose | Electronic nose |
| SVM | Support Vector Machine |
| RF | Random Forest |
| KNN | K-nearest Neighbor |
| XGBoost | Extreme Gradient Boosting |
| MDS | Multidimensional Scaling |
| MDS-SVM | Algorithm based on MDS and SVM |
| BPNN | Back-Propagation Neural Network |
| ML | Machine Learning |
| CA-CNN | Channel Attention Convolutional Neural Network |
| SSAE | Stacked Sparse Autoencoder |
| BL | Base Liquor |
| CL | Commercial Liquor |
| MOS | Metal Oxide Semiconductor |
| PCA | Principal Component Analysis |
| GBDT | Gradient Boosting Decision Tree |
| GOSS | Gradient-based One-side Sampling |
| EFB | Exclusive Feature Bundling |
| TP | True Positive |
| FP | False Positive |
| TN | True Negative |
| FN | False Negative |
| GSM | Grid Search Method |
| PC1 | Principal Component 1 |
| PC2 | Principal Component 2 |
| PC3 | Principal Component 3 |
| RBF | Radial Basis Function |

References

1. Gu, Y.; Wang, Y.-F.; Li, Q.; Liu, Z.-W. A 3D CFD Simulation and Analysis of Flow-Induced Forces on Polymer Piezoelectric Sensors in a Chinese Liquors Identification E-Nose. Sensors 2016, 16, 1738.
2. National Bureau of Statistics. Available online: https://data.stats.gov.cn/easyquery.htm?cn=A01&zb=A020909&sj=202112 (accessed on 5 January 2023).
3. Tu, W.; Cao, X.; Cheng, J.; Li, L.; Zhang, T.; Wu, Q.; Xiang, P.; Shen, C.; Li, Q. Chinese Baijiu: The perfect works of microorganisms. Front. Microbiol. 2022, 13, 919044.
4. National Public Service Platform for Standards Information. Available online: http://c.gb688.cn/bzgk/gb/showGb?type=online&hcno=D2F1ED3F0BAA0EBE99AEE34293C0BC43 (accessed on 5 January 2023).
5. Sun, Z.; Li, J.; Wu, J.; Zou, X.; Ho, C.-T.; Liang, L.; Yan, X.; Zhou, X. Rapid qualitative and quantitative analysis of strong aroma base liquor based on SPME-MS combined with chemometrics. Food Sci. Hum. Wellness 2021, 10, 362–369.
6. Sun, Z.; Xin, X.; Zou, X.; Wu, J.; Sun, Y.; Shi, J.; Tang, Q.; Shen, D.; Gui, X.; Lin, B. Rapid qualitative and quantitative analysis of base liquor using FTIR combined with chemometrics. Spectrosc. Spectr. Anal. 2017, 37, 2756–2762.
7. Wang, L.; Zhong, K.; Luo, A.; Chen, J.; Shen, Y.; Wang, X.; He, Q.; Gao, H. Dynamic changes of volatile compounds and bacterial diversity during fourth to seventh rounds of Chinese soy sauce aroma liquor. Food Sci. Nutr. 2021, 9, 3500–3511.
8. Yu, D.; Wang, X.; Liu, H.; Gu, Y. A multitask learning framework for multi-property detection of wine. IEEE Access 2019, 7, 123151–123157.
9. Li, Z.; Wang, P.P.; Huang, C.C.; Shang, H.; Pan, S.Y.; Li, X.J. Application of Vis/NIR spectroscopy for Chinese liquor discrimination. Food Anal. Methods 2014, 7, 1337–1344.
10. Xu, M.L.; Yu, Y.; Ramaswamy, H.S.; Zhu, S.M. Characterization of Chinese liquor aroma components during aging process and liquor age discrimination using gas chromatography combined with multivariable statistics. Sci. Rep. 2017, 7, 39671.
11. Zhu, W.; Chen, G.; Zhu, Z.; Zhu, F.; Geng, Y.; He, X.; Tang, C. Year prediction of a mild aroma Chinese liquors based on fluorescence spectra and simulated annealing algorithm. Measurement 2017, 97, 156–164.
12. Qiu, S.; Wang, J. The prediction of food additives in the fruit juice based on electronic nose with chemometrics. Food Chem. 2017, 230, 208–214.
13. Dymerski, T.M.; Chmiel, T.M.; Wardencki, W. Invited Review Article: An odor-sensing system—Powerful technique for foodstuff studies. Rev. Sci. Instrum. 2011, 82, 111101.
14. Qi, P.F.; Meng, Q.H.; Zhou, Y.; Jing, Y.Q.; Zeng, M. A portable E-nose system for classification of Chinese liquor. In Proceedings of the 2015 IEEE Sensors, Busan, Republic of Korea, 1–4 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–4.
15. Jing, Y.; Meng, Q.; Qi, P.; Zeng, M.; Li, W.; Ma, S. Electronic nose with a new feature reduction method and a multi-linear classifier for Chinese liquor classification. Rev. Sci. Instrum. 2014, 85, 055004.
16. Zhang, S.; Cheng, Y.; Luo, D.; He, J.; Wong, A.K.Y.; Hung, K. Channel attention convolutional neural network for Chinese baijiu detection with E-nose. IEEE Sens. J. 2021, 21, 16170–16182.
17. Zhao, W.; Meng, Q.-H.; Zeng, M.; Qi, P.-F. Stacked sparse auto-encoders (SSAE) based electronic nose for Chinese liquors classification. Sensors 2017, 17, 2855.
18. Liu, H.; Li, Q.; Li, Z.; Gu, Y. A Suppression Method of Concentration Background Noise by Transductive Transfer Learning for a Metal Oxide Semiconductor-Based Electronic Nose. Sensors 2020, 20, 1913.
19. AIRSENSE Analytics. Available online: https://airsense.com/en (accessed on 12 February 2023).
20. Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.
21. Ringnér, M. What is principal component analysis. Nat. Biotechnol. 2008, 26, 303–304.
22. Shlens, J. A tutorial on principal component analysis. arXiv 2014, arXiv:1404.1100.
23. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3146–3154.
24. Fan, J.; Ma, X.; Wu, L.; Zhang, F.; Yu, X.; Zeng, W. Light Gradient Boosting Machine: An efficient soft computing model for estimating daily reference evapotranspiration with local and external meteorological data. Agric. Water Manag. 2019, 225, 105758.
25. Chun, P.; Izumi, S.; Yamane, T. Automatic detection method of cracks from concrete surface imagery using two-step light gradient boosting machine. Comput. Aided Civ. Infrastruct. Eng. 2021, 36, 61–72.
26. Ou, X.; Yan, P.; Zhang, Y.; Tu, B.; Zhang, G.; Wu, J.; Li, W. Moving object detection method via ResNet-18 with encoder–decoder structure in complex scenes. IEEE Access 2019, 7, 108152–108160.
27. Li, B.; He, Y. An improved ResNet based on the adjustable shortcut connections. IEEE Access 2018, 6, 18967–18974.
28. Wang, A.; Wang, M.; Wu, H.; Jiang, K.; Iwahori, Y. A novel LiDAR data classification algorithm combined capsnet with resnet. Sensors 2020, 20, 1151.
29. Li, Q.; Gu, Y.; Jia, J. Classification of multiple Chinese liquors by means of a QCM-based e-nose and MDS-SVM classifier. Sensors 2017, 17, 272.
30. Liu, H.; Li, Q.; Yan, B.; Zhang, L.; Gu, Y. Bionic electronic nose based on MOS sensors array and machine learning algorithms used for wine properties detection. Sensors 2018, 19, 45.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).