1. Introduction

With the expansion of network scale and the rapid growth of traffic, communication among data centers (DCs) [1,2] requires high network performance to support fast data transmission, data backup, and data synchronization. However, traditional inter-DC networks, in which electrical switching is the core technology, encounter bottlenecks in bandwidth capacity, energy consumption, and transmission latency. To meet the high performance requirements of inter-DC communication, elastic optical networks (EONs) [3,4,5,6,7,8] are widely regarded as the most promising technology for DC interconnection, because they provide a better infrastructure for the high-bandwidth and low-latency requirements of interconnected data centers. To effectively control the state information and network resources of the whole elastic optical inter-DC network, software-defined networking (SDN) has been proposed [9,10]. SDN is a network virtualization technology that realizes centralized control of the entire network by separating the control plane from the forwarding plane [11,12]. For multi-tenant service requests, the SDN controller can adaptively allocate network resources, improving the flexibility of the network [13,14,15].

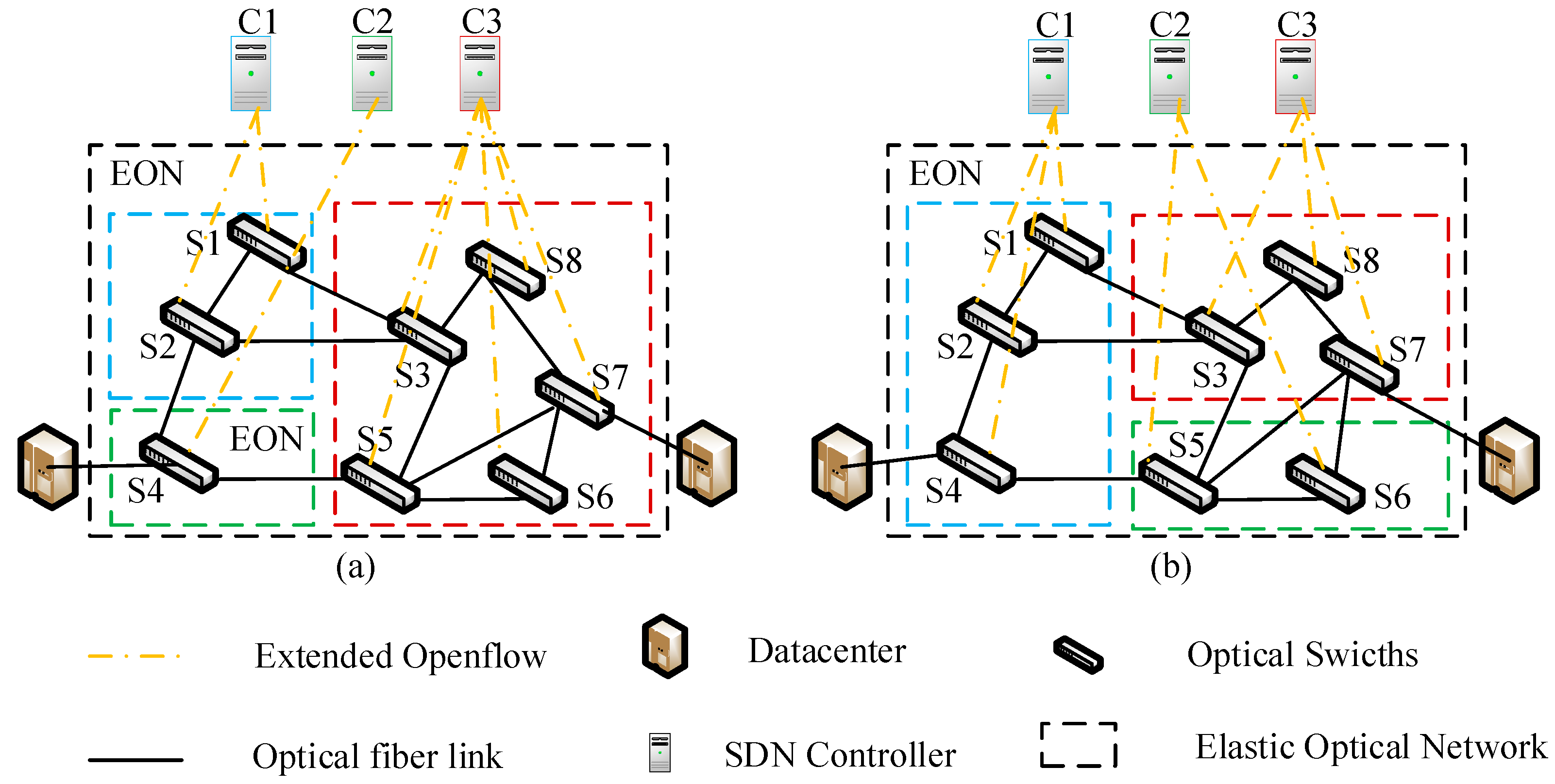

In actual network operation, a single SDN controller can easily handle the flow request messages generated by a small-scale network without being overloaded. However, when the network scale expands and flow request messages arrive in bursts, the controller becomes overloaded by the large number of request messages it must process. In response to this problem, multi-controller deployment has been proposed, which achieves distributed management and control by dividing the network into multiple domain networks. In Figure 1a, three SDN controllers control domain networks of different scales. Because the domain networks differ in scale, the processing overhead of each controller for flow request messages differs, which causes load imbalance among the controllers. To solve this problem, existing work proposes dynamic switch migration strategies for load balancing among multiple controllers. As shown in Figure 1b, controller load balancing can be achieved by migrating switches among the domain networks associated with the three controllers.

Under dynamic traffic changes, a switch migration optimization mechanism can effectively balance the load among multiple controllers, thereby improving the stability of the control plane. During network operation, the mechanism selects the switch to be migrated from the set of switches controlled by an overloaded controller and migrates it to the set of switches controlled by an underloaded controller. Most existing work uses the number of request messages sent by the switches as the measure of controller load, and then uses the difference in load among controllers to judge whether a controller is overloaded. In addition, it selects the switch with the highest flow request rate in the domain network of the overloaded controller and migrates it to the domain network of the underloaded controller. However, most existing switch migration mechanisms ignore other performance indicators that affect controller load, such as the overhead of computing and formulating routing rules, which leads to poor load balancing performance. At the same time, when selecting switches to be migrated, they ignore the additional cost of switch migration, which leads to higher migration costs.

To address these problems, we propose a cost-aware switch migration (CASM) strategy that balances the controller load and improves the effectiveness of switch migration. The main contributions of this work are as follows:

(1) Unlike existing work, which takes only the number of flow request messages sent by the switches as the controller load indicator, the proposed CASM strategy takes both the controller’s processing overhead for flow request messages and its overhead for rule formulation as load indicators. In addition, based on the load of the different controllers, the CASM strategy determines whether a controller is overloaded by calculating its average response time, thereby improving the load balancing performance of the controllers;

(2) Most existing work selects the switch with the highest flow request rate as the switch to be migrated and ignores its migration cost. The proposed CASM strategy reduces the migration cost by defining multiple performance indicators that affect it and selecting the switch with the minimum migration cost;

(3) Experimental simulations show that the proposed CASM strategy improves the load balancing performance of the controllers by 43.2% and reduces the switch migration cost by 41.5% compared with existing schemes [16,17,18].

The remainder of this paper is organized as follows. In Section 2, we discuss related works. In Section 3, the detailed design of the CASM strategy is presented. In Section 4, we evaluate the performance of CASM through Mininet simulations. Finally, we conclude the paper in Section 5.

2. Related Works

To address the uneven distribution of load among multiple controllers, Dixit et al. [16] performed switch migration by selecting switches from the domain network of the overloaded controller and associating them with controllers adjacent to it. Because this strategy does not consider whether the target controller is itself overloaded, the migration may overload the target controller, resulting in poor load balancing performance. To improve load balancing performance, Chen et al. [19] proposed a switch migration mechanism based on zero-sum game theory, which associates the selected switch with a lightly loaded controller. However, this mechanism needs to select multiple switches and lightly loaded controllers in one migration activity. Since controller load changes dynamically in each time period, the resulting association between controllers and switches may no longer be optimal after the switches are migrated. To improve the effectiveness of switch migration, Yu et al. [20] proposed a load notification-based switch migration mechanism. When a controller becomes overloaded, the system notifies the controller that the overload has occurred, the migration mechanism is activated, and the controller load distribution is adjusted by migrating switches between the domain networks of the controllers. To achieve load balancing among multiple controllers, Cello et al. [21] proposed a heuristic-based switch migration optimization mechanism (BalCon), which uses a heuristic algorithm to select the best matching between controllers and switches. Lan et al. [22] proposed a switch migration mechanism based on game-theoretic decision making. This mechanism selects the optimal target controller for the switch to be migrated by maximizing the resource utilization of the controller, thereby improving load balancing performance. To measure effectively whether a controller is overloaded, Cui et al. [23] used the average response time of the controller to flow request messages: if the average response time exceeds a given threshold, the controller is identified as overloaded; otherwise, it is underloaded. This analysis shows that most existing solutions may overload the target controller after a switch is migrated, resulting in unsatisfactory load balancing performance. In addition, switch migration generates additional migration costs, which increases the energy consumption of the network.

During switch migration, the response time of the controller to flow request messages is also an effective indicator of controller load. To improve the migration efficiency of switches, Zhou et al. [24] selected a group of switches from the set controlled by the overloaded controller and migrated multiple switches in one migration activity, thereby improving the load balancing of the controllers. However, this solution does not consider the switch migration cost. Cui et al. [23] judged whether a controller is overloaded or underloaded by its average response time to flow request messages, thereby improving load balancing performance. However, this mechanism also does not consider the switch migration cost. Sahoo et al. [25] used the Karush-Kuhn-Tucker conditions to find the best target controller and then associated it with the switch to be migrated, thereby improving migration efficiency. In summary, although these mechanisms can improve migration efficiency by constraining the response time of the controller, they do not reduce the additional migration cost, and the load balancing performance of the controllers is not significantly improved.

To reduce the extra cost of switch migration, Wang et al. [26] selected the optimal switch from the domain network of the overloaded controller by minimizing the migration cost, and migrated it to the domain network of the target controller. To improve the migration efficiency of switches, Hu et al. [27] calculated the controller load from multiple performance indicators and selected the optimal switch based on the minimum cost, so as to achieve a balanced distribution of controller load. In addition, Hu et al. [28] proposed an efficient switch migration mechanism, which selects the switches to be migrated by sensing their migration cost and associates them with lightly loaded controllers. Although these solutions can reduce the cost of switch migration, they leave other costs that affect switch migration unconsidered, and the load balancing performance of the controllers is not significantly improved after migration.

The above analysis shows that existing switch migration strategies cannot make a good trade-off between controller load balancing performance and migration cost. Most works consider only the flow request rate as the controller load metric. Since multiple performance indicators affect the load of the controller, this results in poor load balancing performance. In addition, these works incur significant migration costs during switch migration. Solutions that do consider switch migration cost take only one cost indicator into account and ignore others, so they cannot significantly reduce the migration cost. In this paper, the proposed CASM strategy calculates the controller load from multiple indicators and determines whether a controller is overloaded based on its response time to flow request messages, which achieves a balanced distribution of controller load. In addition, the switch with the smallest migration cost is selected from the switch set associated with the overloaded controller based on multiple cost indicators that affect switch migration.

3. Detailed Design of CASM Strategy

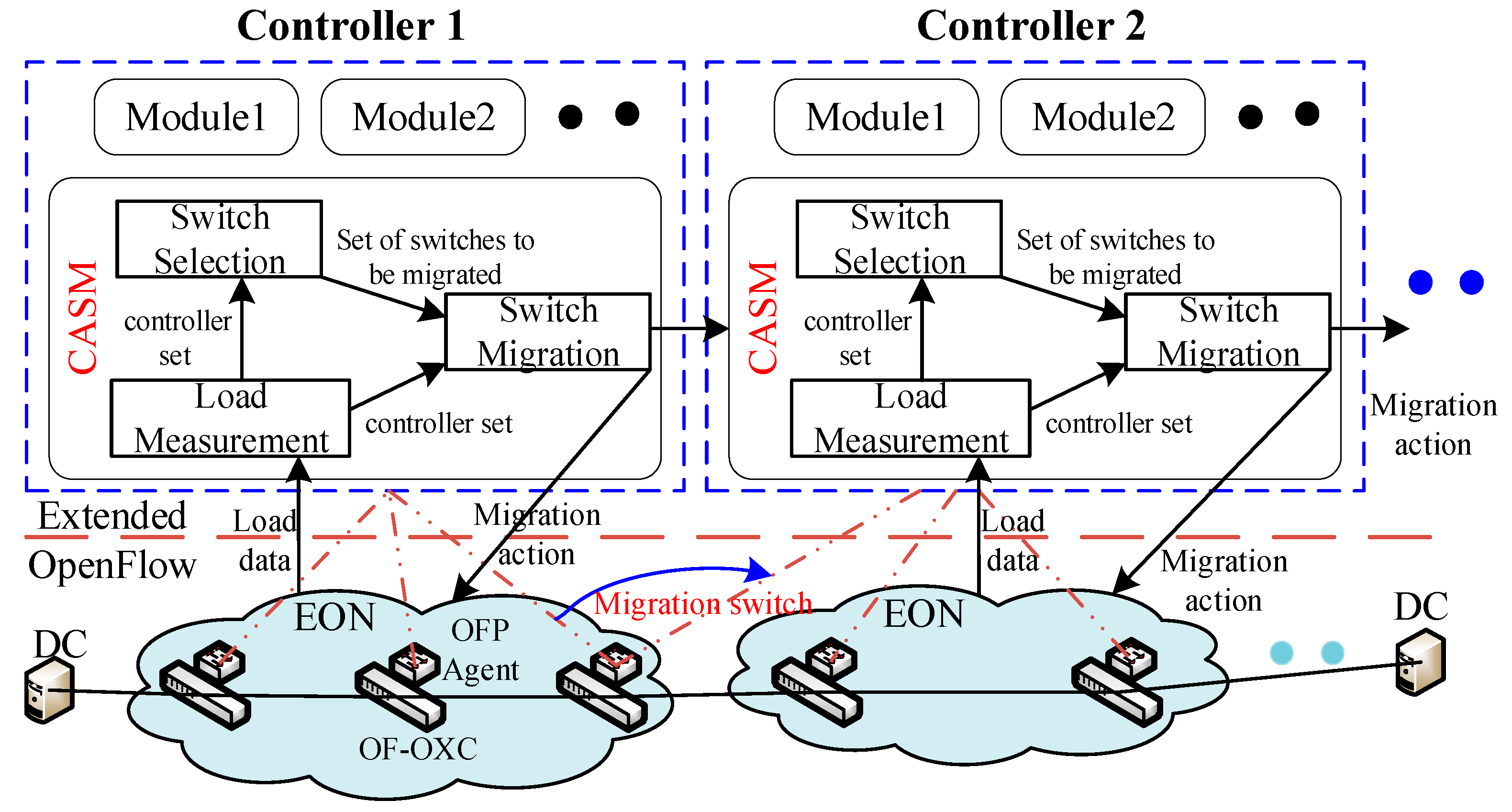

To achieve a balanced distribution of controller load, we propose a cost-aware switch migration (CASM) mechanism. Figure 2 shows the system framework of the CASM scheme. Based on SDN technology, the framework is divided into a control plane and a data forwarding plane, with a communication interface protocol (Extended OpenFlow) defined between them. On the data forwarding plane, multiple data centers are interconnected through elastic optical networks. In actual deployment, we use OpenFlow-enabled optical cross-connects (OF-OXCs) as node facilities, and an OpenFlow protocol agent (OFP Agent) is deployed on each OF-OXC node to receive messages sent by the controller. Through the extended OpenFlow protocol [29,30,31,32], the controller centrally controls the node facilities of the forwarding plane. In the control plane, multiple controllers are deployed for distributed control of the entire network. Each controller is deployed with multiple functional modules, including the proposed CASM module.

As the core functional module of the controller, the CASM module consists of three functions: (1) Load Measurement identifies whether a controller is overloaded and outputs the overloaded controller set and the underloaded controller set; (2) Switch Selection selects the optimal switch from the set of switches controlled by the overloaded controller as the switch to be migrated; (3) Switch Migration associates the switch to be migrated with the target controller to complete the switch migration activity. During network operation, the CASM strategy first collects the load data of the domain network associated with each SDN controller, then identifies whether each controller is overloaded based on this load information, and outputs the overloaded controller set and the underloaded controller set. After that, the switch selection component selects the switch with the least migration cost from the set of switches associated with the overloaded controller and passes it to the switch migration component. Finally, the CASM strategy delivers the generated switch migration function to the data forwarding plane, so as to implement switch migration among multiple domain networks.

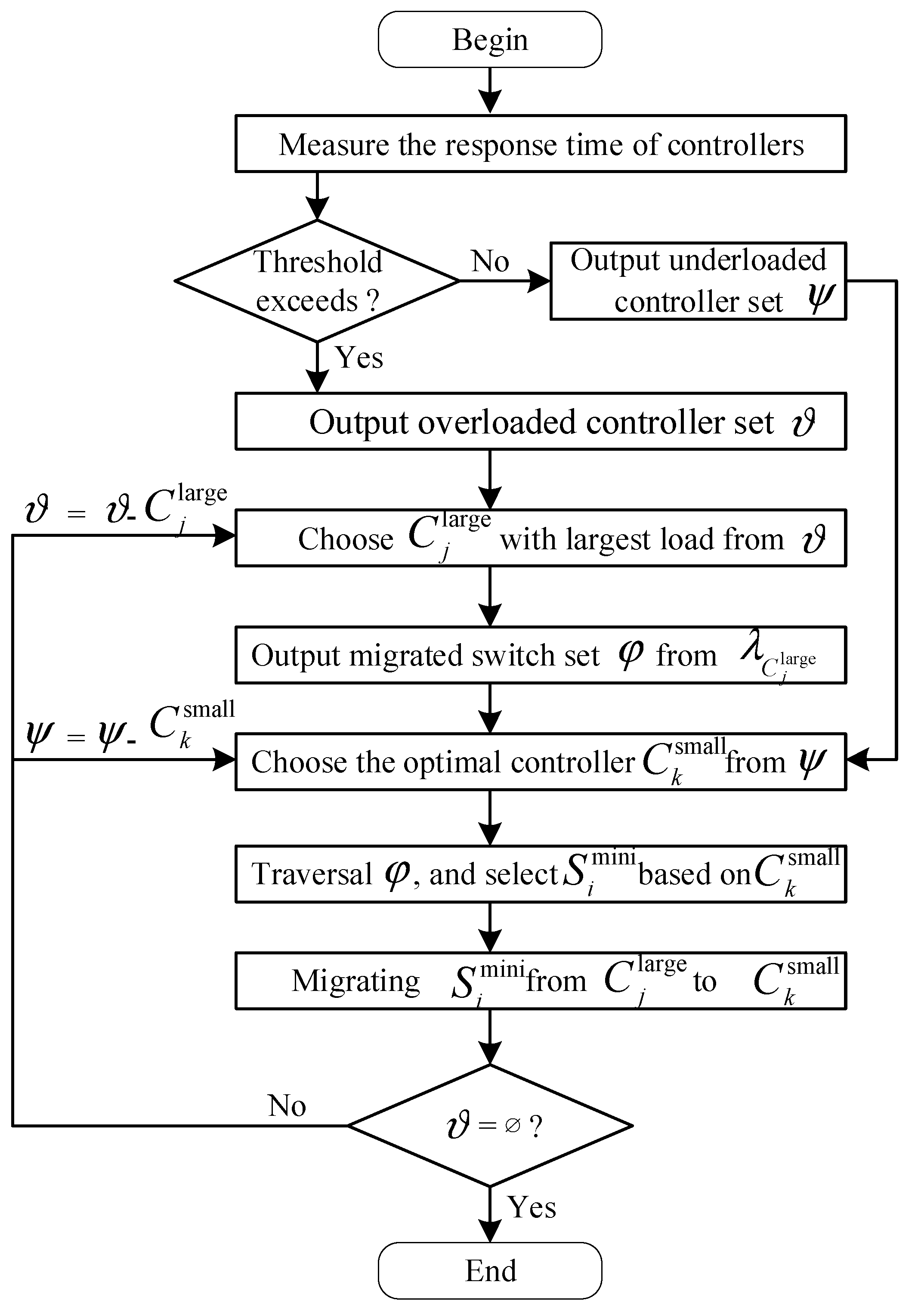

The execution flow of the CASM scheme is shown in Figure 3. CASM first calculates the average response time of each controller and judges whether the controller is overloaded based on a response time threshold. If the response time of a controller exceeds the threshold, the controller is placed in the set of overloaded controllers; otherwise, it is placed in the set of underloaded controllers. The overloaded controller with the largest load is first selected from the overloaded controller set for the switch migration activity. For this overloaded controller, CASM selects the optimal set of candidate switches from the set of switches it controls. Additionally, the underloaded controller with the least load is selected from the set of underloaded controllers. Based on this underloaded controller, CASM selects the optimal switch from the set of switches to be migrated. Finally, the selected switch is migrated from the domain network of the overloaded controller to the domain network of the underloaded controller to complete the switch migration activity. The scheme repeats this flow and stops once the overloaded controller set is empty.

We model the forwarding plane as a graph consisting of a set of node facilities, which includes switch and controller nodes, and a set of links. The node set contains a set of switch nodes and a set of controller nodes, each indexed within its set. In actual operation, the network is divided into multiple sub-domain networks by deploying multiple controllers, and each controller is associated with the set of switches in its sub-domain. Table 1 lists and explains the main symbols. The design of the proposed CASM scheme is described in detail below.

3.1. Load Measurement

The load measurement module calculates the load of each controller and judges whether the controller is overloaded or underloaded based on its response time to request messages. Two performance indicators mainly affect the load of a controller: (1) the controller’s processing overhead for flow request messages (Packet_in); and (2) the controller’s processing overhead for rule formulation messages (Packet_out). When a new flow arrives at a switch, it is forwarded by matching a flow table entry. If there is no matching flow table entry on the switch, the switch sends a Packet_in message to the controller. After the controller receives the flow request message, it calculates the routing rules according to the state information of the whole network. Once the routing rule is formulated, the controller sends the flow table entry to the switch in a Packet_out message for flow matching and forwarding [33,34]. The association between a switch and a controller is defined by a mapping matrix, as shown in Equation (1).
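As an illustration of the switch-to-controller mapping, the following minimal sketch builds a binary association matrix. It assumes (this is not confirmed by the text here) that an entry is 1 when a switch is managed by a controller and 0 otherwise, and that every switch is managed by exactly one controller. The function name `build_mapping_matrix` and the example identifiers are hypothetical.

```python
def build_mapping_matrix(switches, controllers, assignment):
    """Return a binary matrix x with x[i][j] == 1 iff switch i is managed by controller j.

    `assignment` maps each switch id to the id of its controller
    (assumption: every switch has exactly one controller).
    """
    column = {c: j for j, c in enumerate(controllers)}
    matrix = []
    for s in switches:
        row = [0] * len(controllers)
        row[column[assignment[s]]] = 1
        matrix.append(row)
    return matrix


switches = ["s1", "s2", "s3"]
controllers = ["c1", "c2"]
x = build_mapping_matrix(
    switches, controllers, {"s1": "c1", "s2": "c1", "s3": "c2"}
)
```

Under this assumption, each row of the matrix sums to 1, and a column lists the switches in one controller's domain.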

Consider the number of Packet_in messages sent by a switch to its controller within a time interval, and the average size of a Packet_in message. The processing overhead of the controller for flow request messages is defined in Equation (2) in terms of these quantities.

Similarly, given the average size of a rule formulation message (Packet_out), the processing overhead of the controller for rule formulation messages is shown in Equation (3).

As shown in Equation (4), the load of a controller is the sum of these two processing overheads.

To determine whether a controller is overloaded, we use its average response time to messages, where the messages include flow request messages (Packet_in) and rule formulation messages (Packet_out). We record the time when a Packet_in message arrives at the controller. After the controller processes the Packet_in message, a Packet_out message is generated and sent to the switch, and we record the time when this Packet_out message arrives at the switch. The response time of the controller to a single round-trip message between the switch and the controller, where a round-trip message refers to a Packet_in message and its Packet_out message, then follows from these two times, as shown in Equation (5).

In addition, we consider the response time to all round-trip messages between a switch and its controller. Summing over the switches of a controller gives the total response time of all round-trip messages associated with that controller. From these data, we obtain the average response time of the controller for a single message size, as shown in Equation (6). Finally, averaging over all controllers gives the average response time of a single controller, which we use as the response time threshold, as shown in Equation (7).

The execution process of the load measurement module is shown in Algorithm 1. Algorithm 1 first calculates the loads of all controllers according to Equation (4). Then, based on these loads, it obtains the average response time of each controller and collects the response time values of all controllers in a time interval into a set. Finally, the algorithm traverses the values in this set. When the response time of a controller exceeds the threshold, the controller is added to the overloaded controller set; otherwise, it is added to the underloaded controller set. The algorithm thus outputs the set of overloaded controllers and the set of underloaded controllers.
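The load measurement steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's Algorithm 1: it assumes one Packet_out per Packet_in, that each overhead is the message count times the average message size, and that the average response time is the plain mean over round trips. All function names and the example values are hypothetical.

```python
from statistics import mean


def controller_load(packet_in_counts, packet_in_size, packet_out_size):
    """Load of one controller over a time interval.

    Assumption: each Packet_in triggers one Packet_out, and each overhead is
    (message count) x (average message size), summed over the controller's switches.
    """
    n_messages = sum(packet_in_counts)
    packet_in_overhead = n_messages * packet_in_size
    packet_out_overhead = n_messages * packet_out_size
    return packet_in_overhead + packet_out_overhead


def average_response_time(round_trips):
    """Mean of (Packet_out arrival at switch - Packet_in arrival at controller)."""
    return mean(t_out - t_in for t_in, t_out in round_trips)


def classify_controllers(avg_response_times):
    """Split controllers into overloaded and underloaded sets.

    The threshold is the mean of the per-controller average response times.
    """
    threshold = mean(avg_response_times.values())
    overloaded = {c for c, t in avg_response_times.items() if t > threshold}
    underloaded = set(avg_response_times) - overloaded
    return overloaded, underloaded, threshold


# Hypothetical timestamps in seconds: (Packet_in arrival, Packet_out arrival).
round_trips = {
    "c1": [(0.0, 0.030), (1.0, 1.050)],
    "c2": [(0.0, 0.010)],
    "c3": [(0.0, 0.020)],
}
avg = {c: average_response_time(rt) for c, rt in round_trips.items()}
overloaded, underloaded, threshold = classify_controllers(avg)
```

In this example only `c1` responds more slowly than the mean, so it is the only controller classified as overloaded.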

3.2. Switch Selection

The switch selection module selects the best switch from the domain network associated with the overloaded controller as the switch to be migrated. We choose the optimal switch by minimizing the migration cost. The candidate space is determined by the number of switches associated with the overloaded controller and the number of underloaded controllers. Two performance indicators affect the switch migration cost: the change cost of the controller’s processing overhead for flow request messages and the controller’s deployment cost for the migration rules.

The change cost of the controller’s processing overhead for flow request messages is shown in Equation (8). It depends on the number of hops from the switch to the overloaded controller and the number of hops from the switch to the underloaded controller.

The controller formulates the corresponding migration rules and delivers them to the switch, which realizes the switch migration activity. The deployment cost of the migration rules is shown in Equation (9), which involves the number of migration rule messages sent by the controller.

The migration cost of a switch therefore consists of the change cost of the controller’s processing overhead for flow request messages and the controller’s deployment cost for the migration rules. Having modeled the two cost metrics separately above, we express the migration cost of a switch as Equation (10).

During the switch selection process, the switch selection module calculates the migration cost of all switches in the domain network associated with the overloaded controller, and selects the switch with the minimum migration cost as the switch to be migrated. The execution process of switch selection is shown in Algorithm 2. The algorithm first calculates the two cost indicators that affect switch migration, and then calculates the migration cost of each switch from them. From the migration costs of the different switches, the algorithm constructs a migration cost set. Then, for each underloaded controller, the algorithm selects from this set the switch with the smallest migration cost as the switch to be migrated. Finally, the algorithm collects all the selected switches into the set of switches to be migrated.
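The minimum-cost selection can be sketched as follows for one overloaded controller and one candidate target controller. The exact forms of the change cost and the deployment cost are assumptions made for illustration only: here the change cost is the request load weighted by the change in hop count, and the deployment cost is the migration rule traffic weighted by the hop count to the target. The class `Candidate`, all function names, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    switch: str
    request_load: float   # Packet_in overhead generated by the switch per interval
    hops_to_source: int   # hops from the switch to its current (overloaded) controller
    hops_to_target: int   # hops from the switch to the candidate (underloaded) controller
    rule_messages: int    # migration rule messages needed to move the switch


def change_cost(c):
    # Assumed form: request load weighted by the change in control-path length.
    return c.request_load * (c.hops_to_target - c.hops_to_source)


def deployment_cost(c, rule_message_size):
    # Assumed form: migration rule traffic carried over the path to the target.
    return c.rule_messages * rule_message_size * c.hops_to_target


def migration_cost(c, rule_message_size):
    # Migration cost is the sum of the two cost indicators.
    return change_cost(c) + deployment_cost(c, rule_message_size)


def select_switch(candidates, rule_message_size):
    """Return the candidate with the minimum migration cost."""
    return min(candidates, key=lambda c: migration_cost(c, rule_message_size))


candidates = [
    Candidate("s1", 1000.0, 1, 3, 4),
    Candidate("s2", 400.0, 2, 3, 4),
    Candidate("s3", 800.0, 2, 2, 4),
]
best = select_switch(candidates, rule_message_size=64)
```

Repeating `select_switch` once per underloaded controller, with hop counts measured to that controller, would yield one candidate per target, which corresponds to the set of switches to be migrated described above.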

3.3. Switch Migration

The switch migration module selects the best switch from the set of switches associated with the overloaded controller based on the minimum migration cost and associates it with the underloaded controller, thereby realizing the switch migration activity. Most existing schemes can handle only one overloaded controller in one switch migration activity and cannot handle multiple migration activities. In contrast, in one switch migration activity, our scheme processes multiple overloaded controllers in parallel through multi-threading to improve the efficiency of switch migration. The execution process of switch migration is shown in Algorithm 3.

Algorithm 3 first determines whether each controller is overloaded through the load measurement module, which outputs the overloaded controller set and the underloaded controller set. Then, the algorithm obtains the overloaded controller with the largest load from the overloaded set and the underloaded controller with the least load from the underloaded set. Next, the algorithm takes the switch with the smallest migration cost from the set of switches to be migrated as the optimal switch. Finally, the algorithm migrates this switch from the domain network associated with the overloaded controller to the domain network associated with the underloaded controller, thus completing the switch migration activity. The proposed CASM scheme repeats these steps and stops once the overloaded controller set is empty.
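The migration loop can be sketched as follows. This is a simplified, sequential illustration rather than the paper's Algorithm 3: it uses the summed switch load with a fixed threshold as a stand-in for the response-time test, takes the migration cost as a caller-supplied function, and adds two safeguards that are assumptions of this sketch (a check that the move does not overload the target, and a bound on the number of rounds). The name `rebalance` and all values are hypothetical.

```python
def rebalance(assignment, switch_load, threshold, cost, max_rounds=100):
    """Migrate switches until no controller load exceeds `threshold`.

    assignment : dict controller -> set of switch ids (modified in place)
    switch_load: dict switch id -> load contribution
    cost       : callable (switch, source, target) -> migration cost
    Returns the list of (switch, source, target) migrations performed.
    """
    migrations = []
    for _ in range(max_rounds):
        load = {c: sum(switch_load[s] for s in sw) for c, sw in assignment.items()}
        overloaded = [c for c in load if load[c] > threshold]
        underloaded = [c for c in load if load[c] <= threshold]
        if not overloaded or not underloaded:
            break
        source = max(overloaded, key=load.get)   # most loaded overloaded controller
        target = min(underloaded, key=load.get)  # least loaded underloaded controller
        # Only consider moves that keep the target at or below the threshold.
        feasible = [
            s for s in sorted(assignment[source])
            if load[target] + switch_load[s] <= threshold
        ]
        if not feasible:
            break
        chosen = min(feasible, key=lambda s: cost(s, source, target))
        assignment[source].remove(chosen)
        assignment[target].add(chosen)
        migrations.append((chosen, source, target))
    return migrations


assignment = {"c1": {"s1", "s2", "s3"}, "c2": {"s4"}}
switch_load = {"s1": 5, "s2": 3, "s3": 2, "s4": 1}
costs = {"s1": 3, "s2": 1, "s3": 2}
moves = rebalance(
    assignment, switch_load, threshold=7, cost=lambda s, src, dst: costs[s]
)
```

In this example a single migration of `s2` from `c1` to `c2` brings both controllers to or below the threshold, so the loop stops with an empty overloaded set. Handling several overloaded controllers in parallel, as the scheme describes, is outside the scope of this sketch.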

4. Performance Evaluation

In this section, to evaluate the performance of the CASM scheme, we use the Mininet simulation tool and the Ryu controller to build a test platform. The experiment runs on a physical server, a Supermicro X11DPL-i with two 12-core Intel Xeon Silver 4116 2.10 GHz CPUs, 64 GB of memory, and an Intel Ethernet Connection X722 for 1 GbE. The server runs 64-bit Ubuntu 16.04 with the Linux 4.15.0 kernel. In the tests, we use topologies of different network scales, all derived from real industrial network maps, and compare the performance of the proposed scheme with related work under each topology. The topologies and their features are shown in Table 2. For example, the NSFNET topology is a backbone network structure formed by connecting supercomputing centers in the United States.

During the experiment, we used the Iperf tool to simulate a typical traffic model in the data center network. We assume that there is a pair of bidirectional fibers on each link, and the available spectrum of each fiber is set to 4000 GHz with a frequency slot width of 12.5 GHz. The bandwidth requirements of the links are randomly distributed between 12.5 Gb/s and 100 Gb/s during network execution. Flow requests are generated according to a Poisson process with a given rate of requests per service provision period, and the duration of a request follows an exponential distribution with a given average number of service provision periods. Hence, the traffic load, which is the product of the arrival rate and the average duration, can be quantified in Erlangs. To analyze the performance of the proposed scheme, we compare it with existing related research works, which include:

(1) Static Switch Matching (SSM): it realizes the optimal deployment of the controller through a static association between switches and controllers [17];

(2) Random Switch Migration (RSM): it randomly selects the switch to be migrated during the switch migration activity [16];

(3) Maximum Utilization Controller Selection (MUCS): it selects the controller with the largest resources as the target controller during switch migration [18].

We choose two indicators to evaluate the schemes: the load imbalance degree of the controllers and the migration cost of the switches. The load imbalance degree represents the load balancing performance among multiple controllers and is defined relative to the average load of the controllers. The smaller its value, the better the load balancing performance of the scheme; conversely, a larger value indicates poorer load balancing. The migration cost represents the additional cost incurred in switch migration activities. A high migration cost indicates that the migration efficiency of the scheme is low, resulting in high network energy consumption, whereas a small migration cost indicates that the scheme is more efficient in switch migration activities.
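The traffic model used in this setup (Poisson arrivals, exponentially distributed durations, and a 4000 GHz spectrum in 12.5 GHz units) can be illustrated with the short sketch below. It is not the Iperf-based generator used in the experiments. The uniform bandwidth distribution, the function names, and the example rate and duration are assumptions chosen for illustration.

```python
import random


def num_frequency_slots(spectrum_ghz=4000.0, slot_ghz=12.5):
    """Number of 12.5 GHz units that fit in the 4000 GHz spectrum of one fiber."""
    return int(spectrum_ghz // slot_ghz)


def offered_load_erlangs(arrival_rate, mean_duration):
    """Traffic load in Erlangs: arrival rate times mean holding time."""
    return arrival_rate * mean_duration


def generate_requests(arrival_rate, mean_duration, horizon, seed=0):
    """Generate (arrival_time, duration, bandwidth_gbps) tuples up to `horizon`.

    Inter-arrival times are exponential (Poisson process); durations are
    exponential with the given mean; bandwidth is drawn uniformly between
    12.5 and 100 Gb/s (the uniform choice is an assumption).
    """
    rng = random.Random(seed)
    t = 0.0
    requests = []
    while True:
        t += rng.expovariate(arrival_rate)
        if t >= horizon:
            break
        duration = rng.expovariate(1.0 / mean_duration)
        bandwidth = rng.uniform(12.5, 100.0)
        requests.append((t, duration, bandwidth))
    return requests


slots = num_frequency_slots()
load = offered_load_erlangs(arrival_rate=10.0, mean_duration=5.0)
requests = generate_requests(arrival_rate=10.0, mean_duration=5.0, horizon=100.0)
```

With the stated spectrum parameters, each fiber offers 320 units of 12.5 GHz, and an arrival rate of 10 requests per period with a mean duration of 5 periods corresponds to 50 Erlangs.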

We test and analyze the load imbalance degree of the different schemes, as shown in Figure 4. The smaller the load imbalance degree, the better the load balancing performance of the controllers. Under different traffic loads, the load balancing performance of CASM is better than that of the other three schemes, because CASM uses multiple load indicators to calculate the controller load and judges whether a controller is overloaded based on its response time to request messages. In Figure 4a, we test the proposed CASM scheme on the NSFNET topology. Compared with SSM, RSM, and MUCS, the load balancing performance of CASM is improved by 55.3%, 46.2%, and 37.5%, respectively. As shown in Figure 4b, under the OS3E topology, CASM improves the load balancing performance by 47.3%, 36.2%, and 25.6% compared with SSM, RSM, and MUCS, respectively. Under the other two network topologies, CASM also achieves better load balancing performance than the existing schemes. This is because, in addition to the performance indicators that affect the load of the controller, CASM also considers the response time of the controller to messages.

For the migration cost of the switches, we analyze and compare the different schemes under different network topologies, as shown in Figure 5. Under different traffic loads, the migration cost of the proposed CASM scheme is much lower than that of the existing schemes, because CASM selects the switch to be migrated from the set of switches associated with the overloaded controller by minimizing the migration cost, thereby reducing the additional cost generated by switch migration activities. In Figure 5a, we test the proposed CASM scheme on the NSFNET topology. Compared with RSM and MUCS, the migration cost of CASM is reduced by 45.9% and 55.3%, respectively. For the other topologies, CASM reduces the migration cost by an average of 43.7% and 51.2% compared with RSM and MUCS, respectively. Compared with the MUCS scheme, the CASM scheme reduces the migration cost by about 15%. These reductions arise because, during switch selection, CASM selects the best switch based on minimizing the migration cost and associates it with the target controller.