Density Awareness and Neighborhood Attention for LiDAR-Based 3D Object Detection

Abstract

1. Introduction

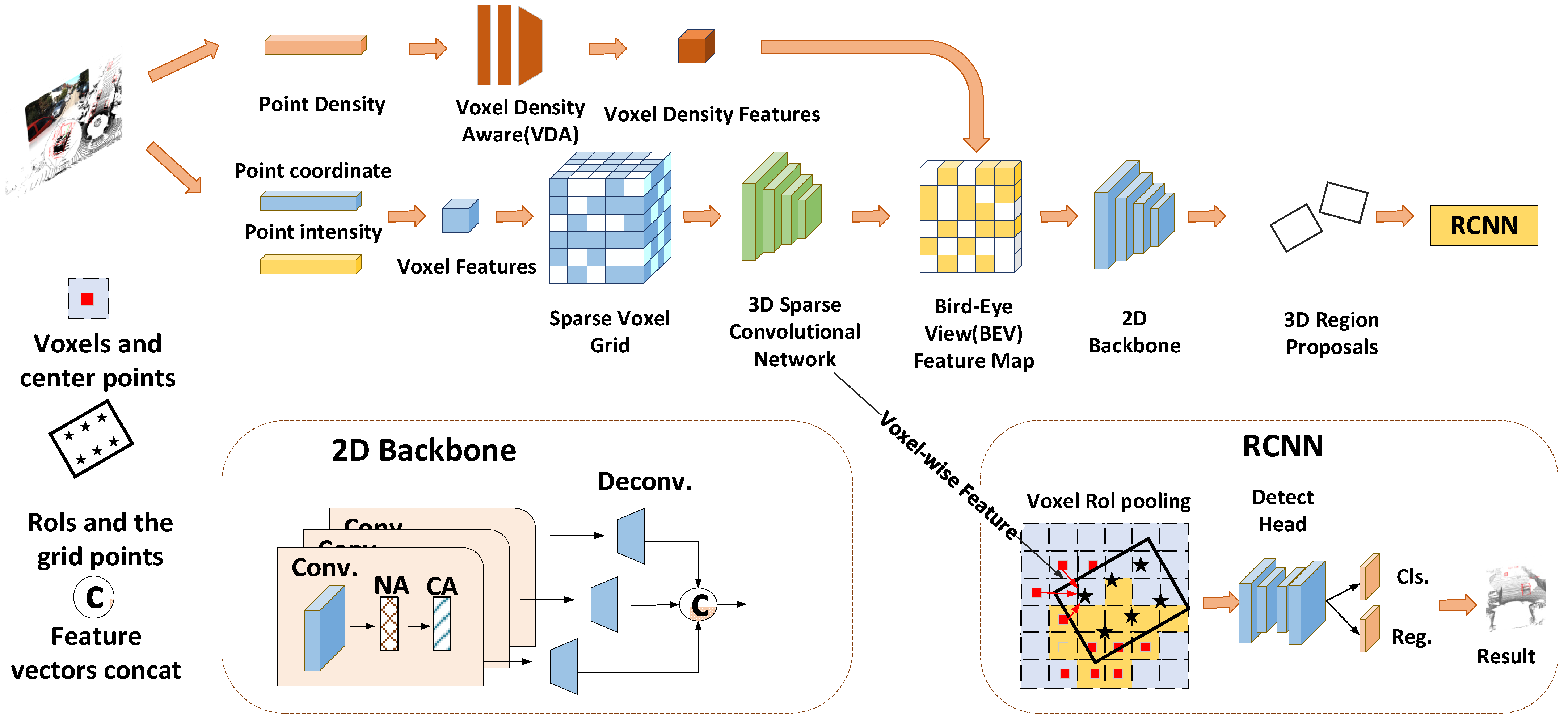

- This work reviews how existing 3D object detectors use density information and proposes DenNet, a detector that exploits both the density and the neighborhood information of 3D objects.

- We propose a voxel density-aware (VDA) module that retains density information during voxelization. We also introduce neighborhood attention (NA) and coordinate attention (CA) into the 2D backbone network to efficiently enrich the token representations with fine-grained information.

- With these components, we achieve competitive performance on the KITTI dataset, especially in the cyclist category, where we obtain state-of-the-art (SOTA) results. On the more complex ONCE dataset, which reflects Chinese traffic environments, we improve mAP by 3.96 percentage points over the Voxel R-CNN baseline (from 56.35 to 60.31).

2. Related Works

2.1. Voxel-Based 3D Object Detection

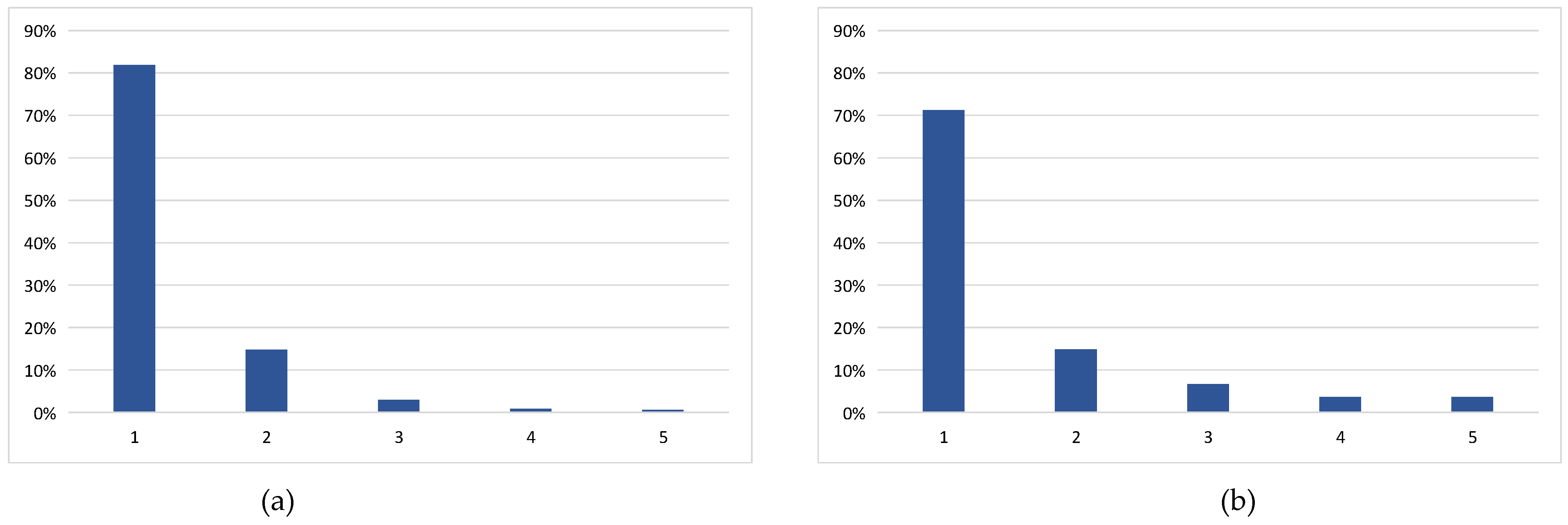

2.2. Point Density Estimation

2.3. Transformers for 3D Object Detection

3. Methods

3.1. Revisiting Voxel R-CNN Backbone

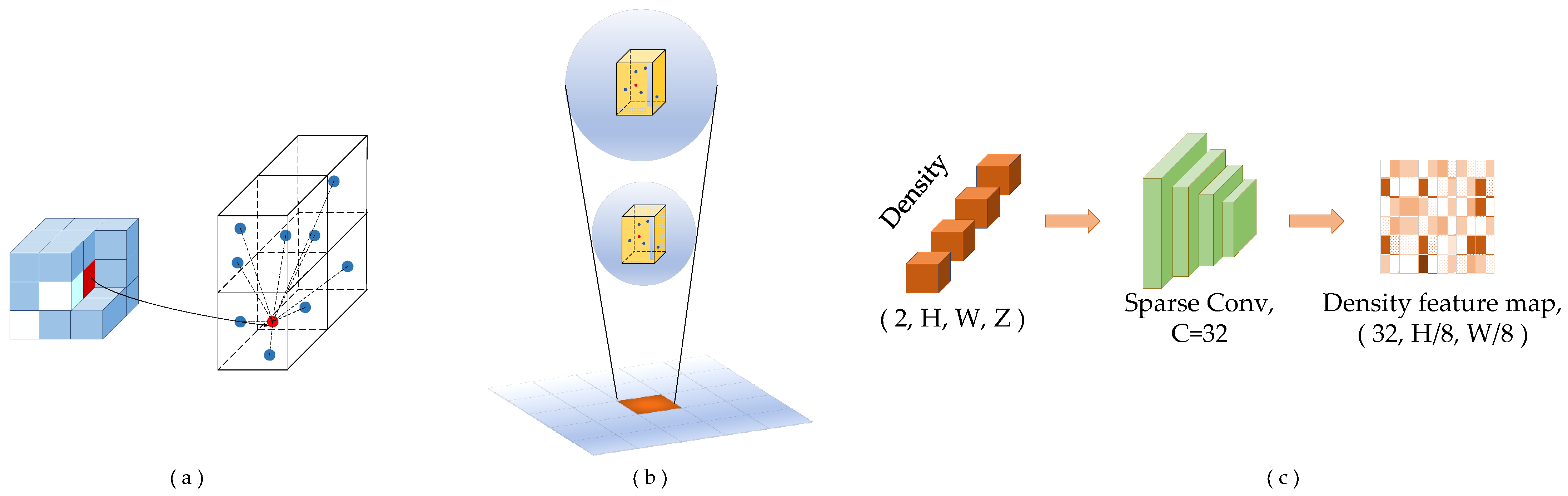

3.2. Voxel Density-Aware Module

3.2.1. Original Mean VFE
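Mean voxel feature encoding (mean VFE), as implemented for example by the `MeanVFE` layer in OpenPCDet [18], assigns each point to a voxel and represents every non-empty voxel by the channel-wise mean of its points. The pure-Python sketch below illustrates that idea. The point layout `(x, y, z, intensity)`, the helper names, and the absence of a per-voxel point cap are simplifying assumptions; real implementations operate on batched tensors.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Sequence[float]          # (x, y, z, intensity, ...)
VoxelKey = Tuple[int, int, int]  # integer voxel index (ix, iy, iz)


def voxel_index(p: Point, voxel_size: Sequence[float], origin: Sequence[float]) -> VoxelKey:
    """Integer index of the voxel containing point p (floor division handles negatives)."""
    ix, iy, iz = (int((p[d] - origin[d]) // voxel_size[d]) for d in range(3))
    return (ix, iy, iz)


def mean_vfe(points: Sequence[Point], voxel_size: Sequence[float],
             origin: Sequence[float]) -> Dict[VoxelKey, List[float]]:
    """Mean VFE: each non-empty voxel's feature is the channel-wise mean of its points."""
    buckets: Dict[VoxelKey, List[Point]] = defaultdict(list)
    for p in points:
        buckets[voxel_index(p, voxel_size, origin)].append(p)
    features: Dict[VoxelKey, List[float]] = {}
    for key, pts in buckets.items():
        n = len(pts)
        features[key] = [sum(p[c] for p in pts) / n for c in range(len(pts[0]))]
    return features
```

Because only the average survives, a voxel holding two points and a voxel holding twenty points with the same mean receive identical features; the number of points per voxel is lost.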

3.2.2. VDA Module
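The VDA module is proposed to keep density information that plain mean voxelization discards. The sketch below does not reproduce the exact VDA formulation. As a hypothetical illustration only, it appends one extra channel to each voxel's mean feature: the voxel's point count, clipped to an assumed cap `max_points` and divided by it, so the value lies in (0, 1]. The function name, the cap, and this particular normalization are our assumptions, not the paper's design.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def density_aware_voxel_features(points: Sequence[Sequence[float]],
                                 voxel_size: Sequence[float],
                                 origin: Sequence[float],
                                 max_points: int = 32) -> Dict[Tuple[int, ...], List[float]]:
    """HYPOTHETICAL density-aware encoding (not the paper's exact VDA module):
    per-voxel mean point feature plus one normalized point-count channel."""
    buckets: Dict[Tuple[int, ...], List[Sequence[float]]] = defaultdict(list)
    for p in points:
        key = tuple(int((p[d] - origin[d]) // voxel_size[d]) for d in range(3))
        buckets[key].append(p)
    features: Dict[Tuple[int, ...], List[float]] = {}
    for key, pts in buckets.items():
        n = len(pts)
        mean = [sum(p[c] for p in pts) / n for c in range(len(pts[0]))]
        # Assumed density channel: point count clipped to max_points, scaled to (0, 1].
        density = min(n, max_points) / max_points
        features[key] = mean + [density]
    return features
```

With `max_points=32`, two voxels that share the same mean but hold 2 and 20 points now differ in the last channel (0.0625 vs 0.625).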

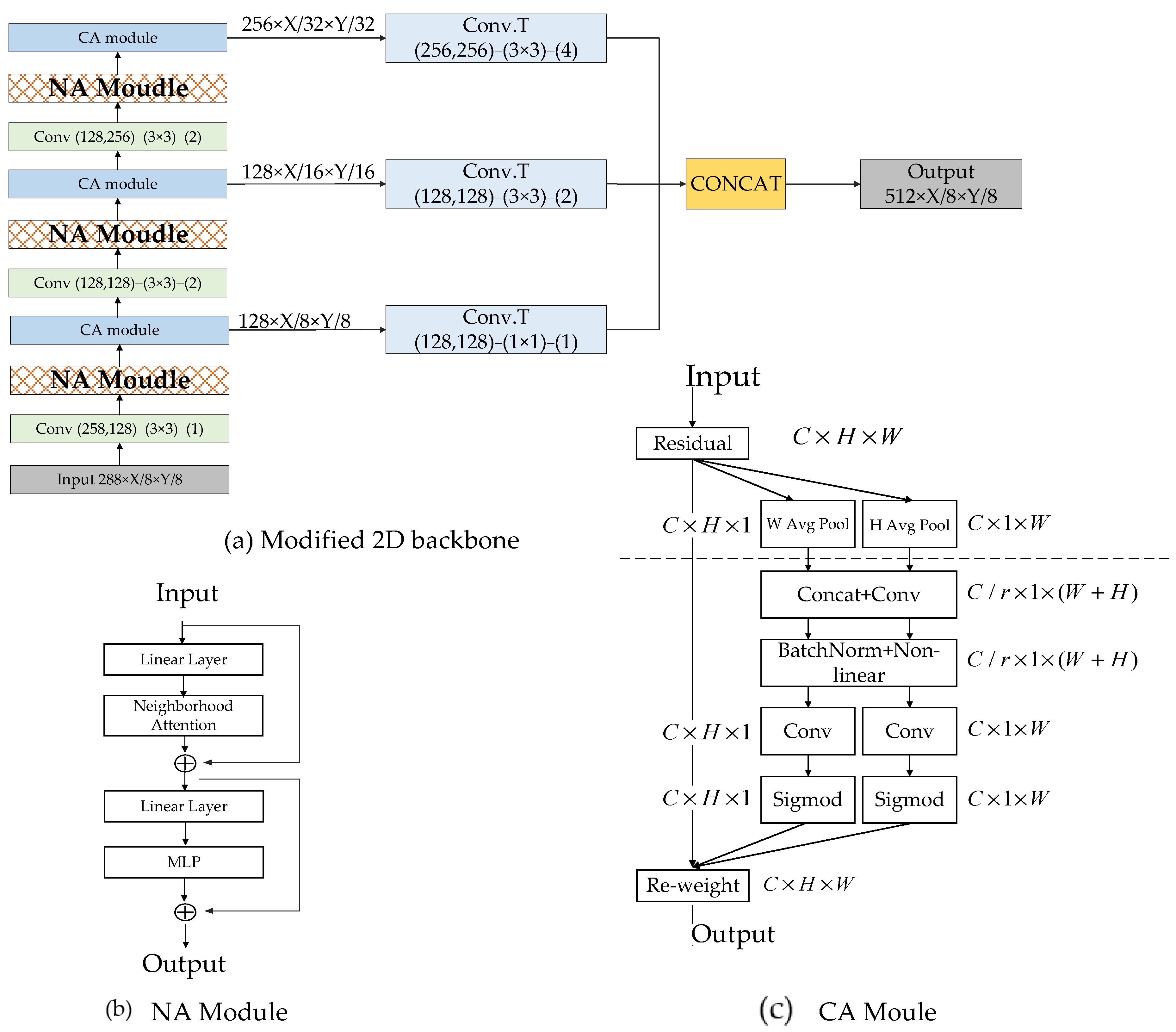

3.3. Modified 2D Backbone

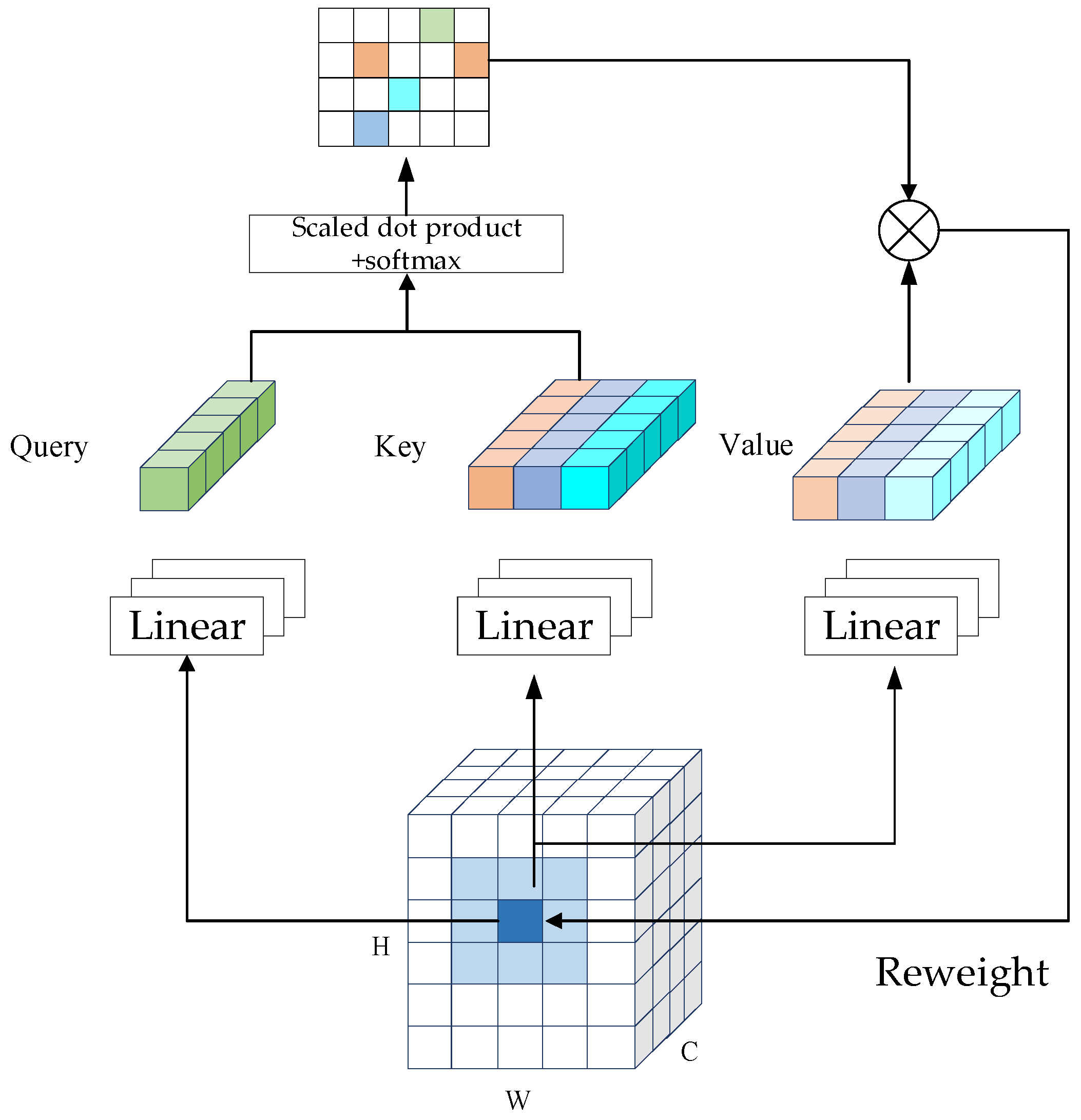

3.3.1. Neighborhood Attention (NA)

3.3.2. 2D Backbone with Neighborhood Attention and Coordinate Attention
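Coordinate attention, introduced by Hou et al. (CVPR 2021), pools a C×H×W feature map separately along the width and along the height, passes both pooled descriptors through a shared 1×1 transform, and turns them into per-row and per-column channel gates, so the attention keeps positional information along each axis. The sketch below follows that structure in plain Python under stated assumptions: the 1×1 convolutions are given as plain weight matrices `w1`, `wh`, and `ww` (learned in practice), batch normalization is omitted, and the reduction ratio is implied by the shape of `w1`. How DenNet places CA relative to NA inside its 2D backbone is not modeled here.

```python
import math
from typing import List

Matrix = List[List[float]]        # rows x cols
Tensor = List[List[List[float]]]  # C x H x W


def _sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def _hswish(t: float) -> float:
    return t * min(max(t + 3.0, 0.0), 6.0) / 6.0


def _matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def coordinate_attention(x: Tensor, w1: Matrix, wh: Matrix, ww: Matrix) -> Tensor:
    """w1: (C/r x C) shared reduction; wh, ww: (C x C/r) direction-specific expansions."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # 1) Direction-aware pooling: average over width per row, over height per column.
    z_h = [[sum(x[c][i]) / W for c in range(C)] for i in range(H)]                   # H x C
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for c in range(C)] for j in range(W)]  # W x C
    # 2) Shared 1x1 transform (a matrix applied at each position) + h-swish; BN omitted.
    f_h = [[_hswish(t) for t in _matvec(w1, z)] for z in z_h]
    f_w = [[_hswish(t) for t in _matvec(w1, z)] for z in z_w]
    # 3) Direction-specific 1x1 transforms + sigmoid give gates in (0, 1).
    g_h = [[_sigmoid(t) for t in _matvec(wh, f)] for f in f_h]  # H x C
    g_w = [[_sigmoid(t) for t in _matvec(ww, f)] for f in f_w]  # W x C
    # 4) Reweight every element by its row gate and its column gate.
    return [[[x[c][i][j] * g_h[i][c] * g_w[j][c] for j in range(W)] for i in range(H)]
            for c in range(C)]
```

With all-zero weights every gate equals sigmoid(0) = 0.5, so each output element is 0.25 times its input; nonzero weights let rows and columns be emphasized independently.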

4. Experimental Results

4.1. Dataset and Network Details

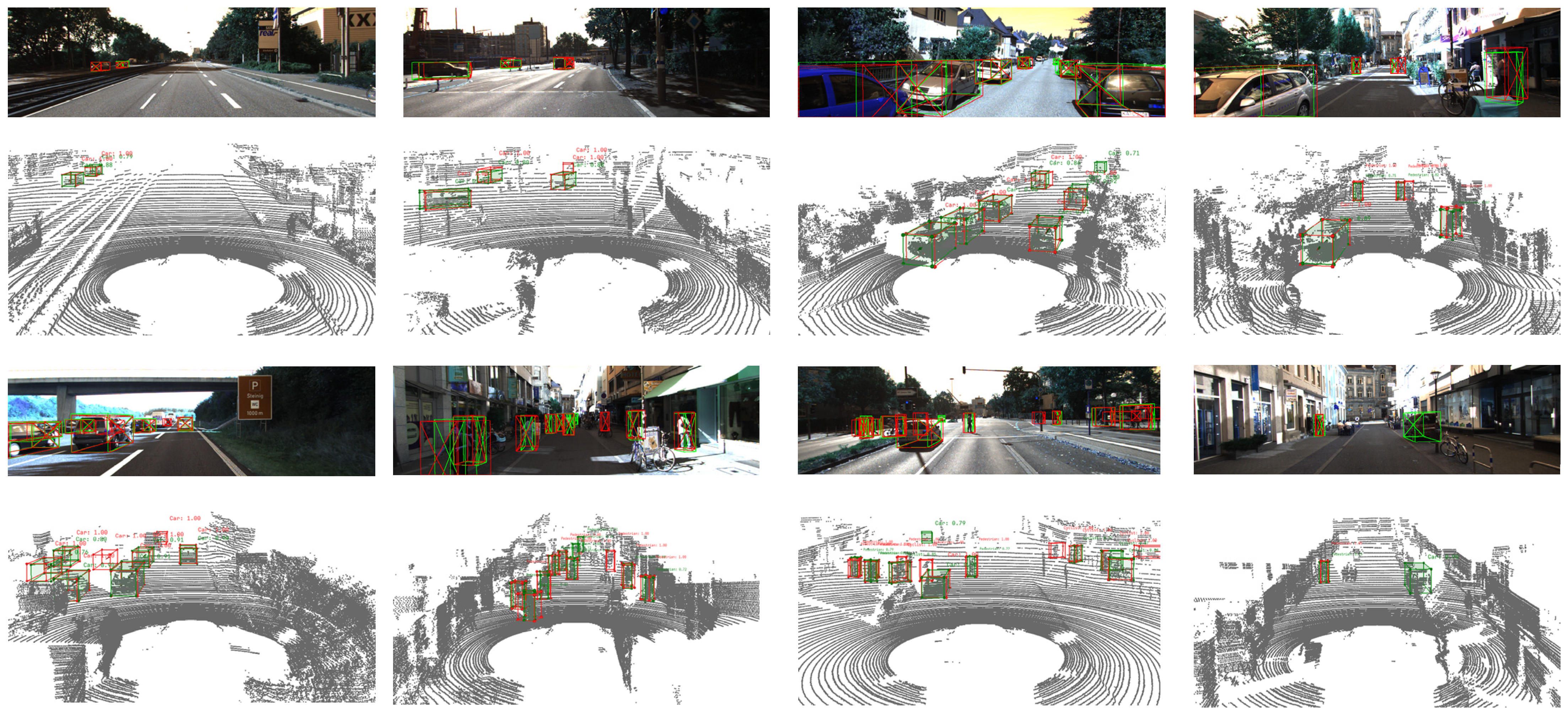

4.2. Results and Analysis

4.2.1. Evaluation with KITTI Dataset

4.2.2. Evaluation with ONCE Validation Set

4.3. Ablation Study

4.3.1. Effectiveness of VDA Module

4.3.2. Effectiveness of NA and CA Modules

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Mao, J.; Shi, S.; Wang, X.; Li, H. 3D Object Detection for Autonomous Driving: A Review and New Outlooks. arXiv 2022, arXiv:2206.09474.

2. Qian, R.; Lai, X.; Li, X. 3D Object Detection for Autonomous Driving: A Survey. Pattern Recognit. 2022, 130, 108796.

3. Li, J.; Hu, Y. A Density-Aware PointRCNN for 3D Object Detection in Point Clouds. arXiv 2020, arXiv:2009.05307.

4. Ning, J.; Da, F.; Gai, S. Density Aware 3D Object Single Stage Detector. IEEE Sens. J. 2021, 21, 23108–23117.

5. Hu, J.S.; Kuai, T.; Waslander, S.L. Point Density-Aware Voxels for LiDAR 3D Object Detection. arXiv 2022, arXiv:2203.05662v2.

6. Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

7. Mao, J.; Niu, M.; Jiang, C.; Liang, H.; Chen, J.; Liang, X.; Li, Y.; Ye, C.; Zhang, W.; Li, Z. One Million Scenes for Autonomous Driving: ONCE Dataset. arXiv 2021, arXiv:2106.11037.

8. Hassani, A.; Walton, S.; Li, J.; Li, S.; Shi, H. Neighborhood Attention Transformer. arXiv 2022, arXiv:2204.07143.

9. Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

10. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

11. Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards High Performance Voxel-Based 3D Object Detection. AAAI Conf. Artif. Intell. 2021, 35, 1201–1209.

12. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

13. Mao, J.; Xue, Y.; Niu, M.; Bai, H.; Feng, J.; Liang, X.; Xu, H.; Xu, C. Voxel Transformer for 3D Object Detection. arXiv 2021, arXiv:2109.02497.

14. Mao, J.; Niu, M.; Bai, H.; Liang, X.; Xu, H.; Xu, C. Pyramid R-CNN: Towards Better Performance and Adaptability for 3D Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2723–2732.

15. Gao, F.; Li, C.; Zhang, B. A Dynamic Clustering Algorithm for LiDAR Obstacle Detection of Autonomous Driving System. IEEE Sens. J. 2021, 21, 25922–25930.

16. Sheng, H.; Cai, S.; Liu, Y.; Deng, B.; Huang, J.; Hua, X.-S.; Zhao, M.-J. Improving 3D Object Detection with Channel-Wise Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2743–2752.

17. Fan, L.; Pang, Z.; Zhang, T.; Wang, Y.-X.; Zhao, H.; Wang, F.; Wang, N.; Zhang, Z. Embracing Single Stride 3D Object Detector with Sparse Transformer. arXiv 2022, arXiv:2112.06375.

18. OpenPCDet Development Team. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. 2020. Available online: https://github.com/open-mmlab/OpenPCDet

19. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

20. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 770–779.

21. Shi, W.; Rajkumar, R. Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1711–1719.

22. Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection from Point Cloud with Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664.

23. Liu, Z.; Zhao, X.; Huang, T.; Hu, R.; Zhou, Y.; Bai, X. TANet: Robust 3D Object Detection from Point Clouds with Triple Attention. AAAI Conf. Artif. Intell. 2020, 34, 11677–11684.

24. Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-Based 3D Single Stage Object Detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.

25. Chen, C.; Chen, Z.; Zhang, J.; Tao, D. SASA: Semantics-Augmented Set Abstraction for Point-Based 3D Object Detection. arXiv 2022, arXiv:2201.01976.

26. Zhang, Y.; Hu, Q.; Xu, G.; Ma, Y.; Wan, J.; Guo, Y. Not All Points Are Equal: Learning Highly Efficient Point-Based Detectors for 3D LiDAR Point Clouds. arXiv 2022, arXiv:2203.11139.

27. Yin, T.; Zhou, X.; Krähenbühl, P. Center-Based 3D Object Detection and Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 11784–11793.

IoU thresholds: Car 0.7; Ped. and Cyc. 0.5.

| Method | Type | Car Easy | Car Mod. | Car Hard | Ped. Easy | Ped. Mod. | Ped. Hard | Cyc. Easy | Cyc. Mod. | Cyc. Hard |
|---|---|---|---|---|---|---|---|---|---|---|
| SECOND [10] | 1-stage | 84.65 | 75.96 | 68.71 | 45.31 | 35.52 | 33.14 | 75.83 | 60.82 | 53.67 |
| PV-RCNN [12] | 2-stage | 90.25 | 81.43 | 76.82 | 52.17 | 43.29 | 40.29 | 78.60 | 63.71 | 57.65 |
| PointPillars [19] | 1-stage | 82.58 | 74.31 | 68.99 | 51.45 | 41.92 | 38.89 | 77.10 | 58.65 | 51.92 |
| PointRCNN [20] | 2-stage | 86.96 | 75.64 | 70.70 | 47.98 | 39.37 | 36.01 | 74.96 | 58.82 | 52.53 |
| PointGNN [21] | 1-stage | 88.33 | 79.47 | 72.29 | 51.92 | 43.77 | 40.14 | 78.60 | 63.48 | 57.08 |
| Part-A2 [22] | 2-stage | 87.81 | 78.49 | 73.51 | 53.10 | 43.35 | 40.06 | 79.17 | 63.52 | 56.93 |
| TANet [23] | 2-stage | 84.39 | 75.94 | 68.82 | 53.72 | 44.34 | 40.49 | 75.70 | 59.44 | 52.53 |
| 3DSSD [24] | 1-stage | 88.36 | 79.57 | 74.55 | - | - | - | - | - | - |
| SASA [25] | 1-stage | 88.76 | 82.16 | 77.16 | - | - | - | - | - | - |
| Voxel R-CNN [11] | 2-stage | 90.90 | 81.62 | 77.06 | - | - | - | - | - | - |
| PDV [5] | 2-stage | 90.43 | 81.86 | 77.36 | - | - | - | 83.04 | 67.81 | 60.46 |
| DenNet (Ours) | 2-stage | 90.72 | 81.93 | 77.48 | 46.10 | 40.23 | 33.47 | 78.89 | 64.15 | 56.81 |

| Method | Car Easy | Car Mod. | Car Hard | Ped. Easy | Ped. Mod. | Ped. Hard | Cyc. Easy | Cyc. Mod. | Cyc. Hard |
|---|---|---|---|---|---|---|---|---|---|
| PV-RCNN [12] | 90.25 | 81.43 | 76.82 | 52.17 | 43.29 | 40.29 | 78.60 | 63.71 | 57.65 |
| 3DSSD [24] | 91.04 | 82.32 | 79.81 | 59.14 | 55.19 | 50.86 | 88.05 | 69.84 | 65.41 |
| IA-SSD [26] | 91.88 | 83.41 | 80.44 | 61.22 | 56.77 | 51.15 | 88.42 | 70.14 | 65.99 |
| CT3D [16] | 92.34 | 84.97 | 82.91 | 61.05 | 56.67 | 51.10 | 89.01 | 71.88 | 67.91 |
| PDV [5] | 92.56 | 85.29 | 83.05 | 66.90 | 60.80 | 55.85 | 92.72 | 74.23 | 69.60 |
| SASA [25] | 91.82 | 84.48 | 82.00 | 62.32 | 58.02 | 53.30 | 89.11 | 72.61 | 68.19 |
| Voxel R-CNN [11] | 92.86 | 85.13 | 82.84 | 69.37 | 61.56 | 56.39 | 91.63 | 74.52 | 70.25 |
| DenNet (Ours) | 92.62 | 85.39 | 82.83 | 66.26 | 59.52 | 54.15 | 93.22 | 75.27 | 70.59 |

| Method | Vehicle Overall | Vehicle 0–30 m | Vehicle 30–50 m | Vehicle >50 m | Pedestrian Overall | Pedestrian 0–30 m | Pedestrian 30–50 m | Pedestrian >50 m | Cyclist Overall | Cyclist 0–30 m | Cyclist 30–50 m | Cyclist >50 m | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointPillars [19] | 68.57 | 80.86 | 62.07 | 47.04 | 17.63 | 19.74 | 15.15 | 10.23 | 46.81 | 58.33 | 40.32 | 25.86 | 44.34 |
| SECOND [10] | 71.19 | 84.04 | 63.02 | 47.25 | 26.44 | 29.33 | 24.05 | 18.05 | 58.04 | 69.96 | 52.43 | 34.61 | 51.89 |
| CenterPoint [27] | 66.79 | 80.10 | 59.55 | 43.39 | 49.90 | 56.24 | 42.61 | 26.27 | 63.45 | 74.28 | 57.94 | 41.48 | 60.05 |
| PV-RCNN [12] | 77.77 | 89.39 | 72.55 | 58.64 | 23.50 | 25.61 | 22.84 | 17.27 | 59.37 | 71.66 | 52.58 | 36.17 | 53.55 |
| PointRCNN [20] | 52.09 | 74.45 | 40.89 | 16.81 | 4.28 | 6.17 | 2.40 | 0.91 | 29.84 | 46.03 | 20.94 | 5.46 | 28.74 |
| IA-SSD [26] | 70.30 | 83.01 | 62.84 | 47.01 | 39.82 | 47.45 | 32.75 | 18.99 | 62.17 | 73.78 | 56.31 | 39.53 | 57.43 |
| Voxel R-CNN [11] | 73.53 | 87.07 | 65.59 | 49.97 | 35.66 | 42.38 | 29.80 | 18.15 | 59.85 | 73.57 | 51.59 | 33.65 | 56.35 |
| DenNet (Ours) | 80.24 | 89.88 | 73.57 | 60.49 | 35.68 | 41.72 | 32.88 | 18.79 | 65.59 | 77.77 | 57.60 | 41.24 | 60.31 |

| VDA | NA | CA | Vehicle | Pedestrian | Cyclist | mAP | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
|  |  |  | 73.53 | 35.66 | 59.85 | 56.35 | 27.72 |
| ✓ |  |  | 78.82 | 28.94 | 61.24 | 55.89 | 32.99 |
| ✓ | ✓ |  | 79.94 | 32.28 | 64.35 | 58.86 | 34.68 |
| ✓ | ✓ | ✓ | 80.24 | 35.68 | 65.59 | 60.31 | 35.56 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qian, H.; Wu, P.; Sun, X.; Guo, X.; Su, S. Density Awareness and Neighborhood Attention for LiDAR-Based 3D Object Detection. Photonics 2022, 9, 820. https://doi.org/10.3390/photonics9110820
