A Checkerboard Corner Detection Method for Infrared Thermal Camera Calibration Based on Physics-Informed Neural Network

Abstract

1. Introduction

- We tested and analyzed the applicability of the YOLO model family to corner detection and improved the YOLO network structure for checkerboard corner detection. Compared with baseline methods such as MATLAB and OpenCV, our model is more robust and more accurate.

- An unsupervised training method driven by camera physical information is proposed for training the YOLO corner detection model. This method allows the model to learn the intrinsic relationship between corner points, breaks through the bottleneck of conventional training, and effectively improves camera calibration accuracy.

- A real infrared thermal camera calibration dataset is constructed, and a set of experiments is conducted on it. These experiments verify the effectiveness of the proposed YOLO model and unsupervised training method. Compared with the baseline approaches, our method achieves state-of-the-art performance on our test sets.

2. Materials and Methods

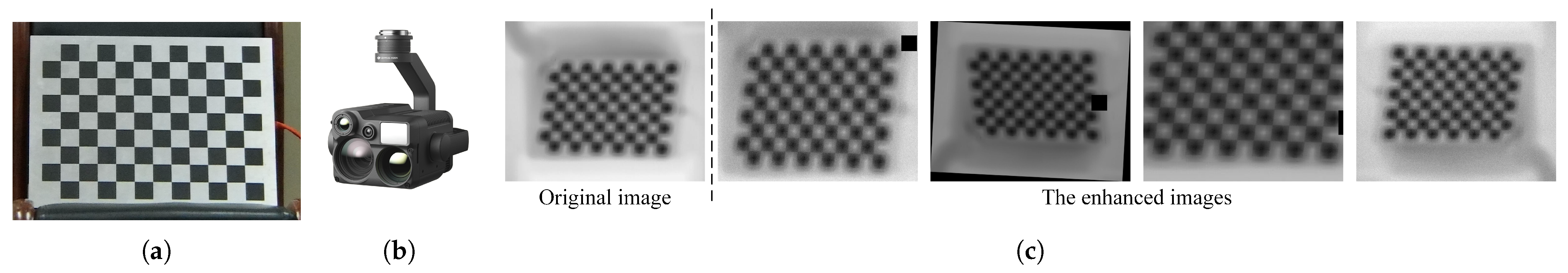

2.1. Materials

2.2. Conventional Methods

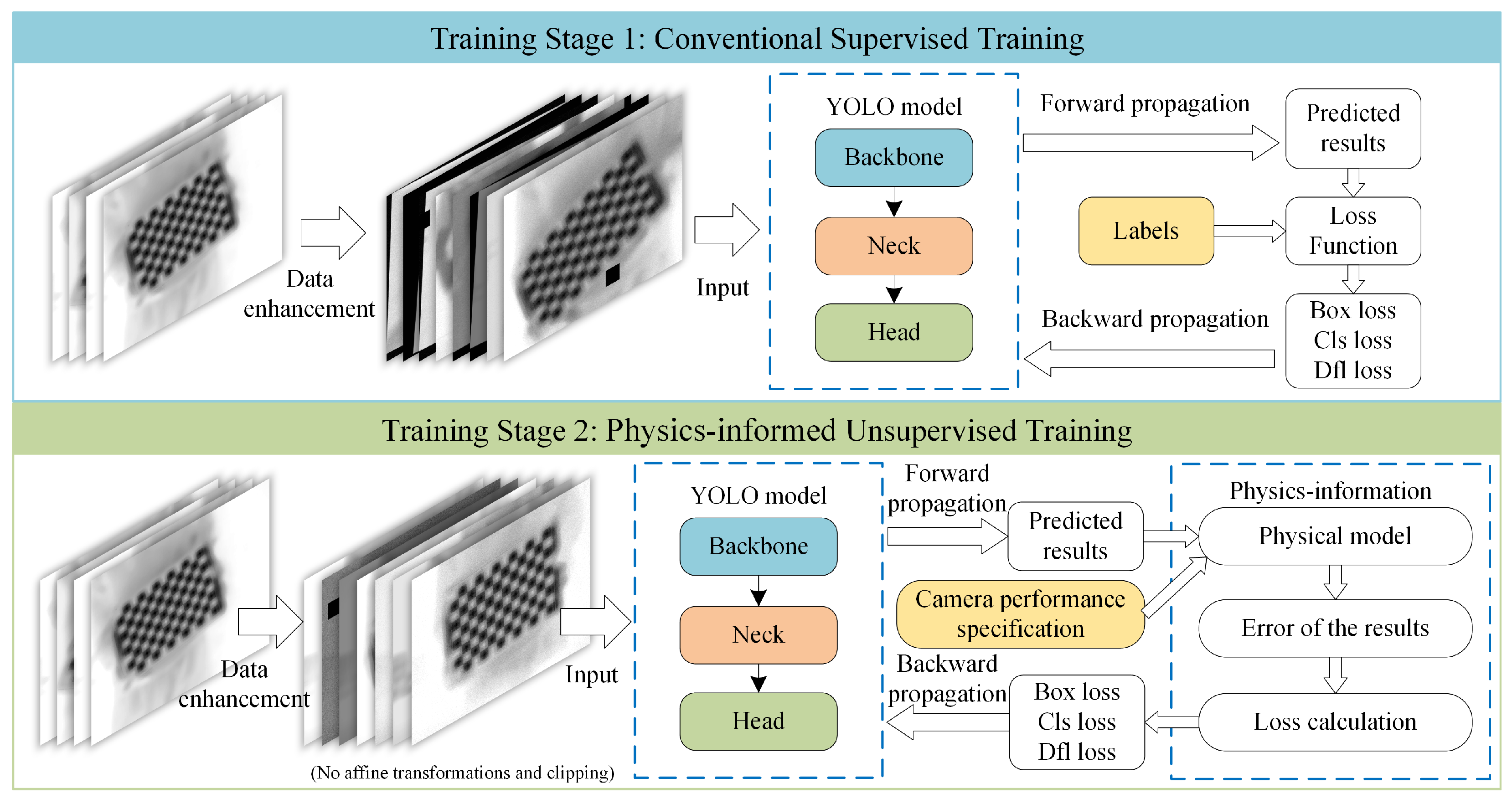

2.3. Proposed Method

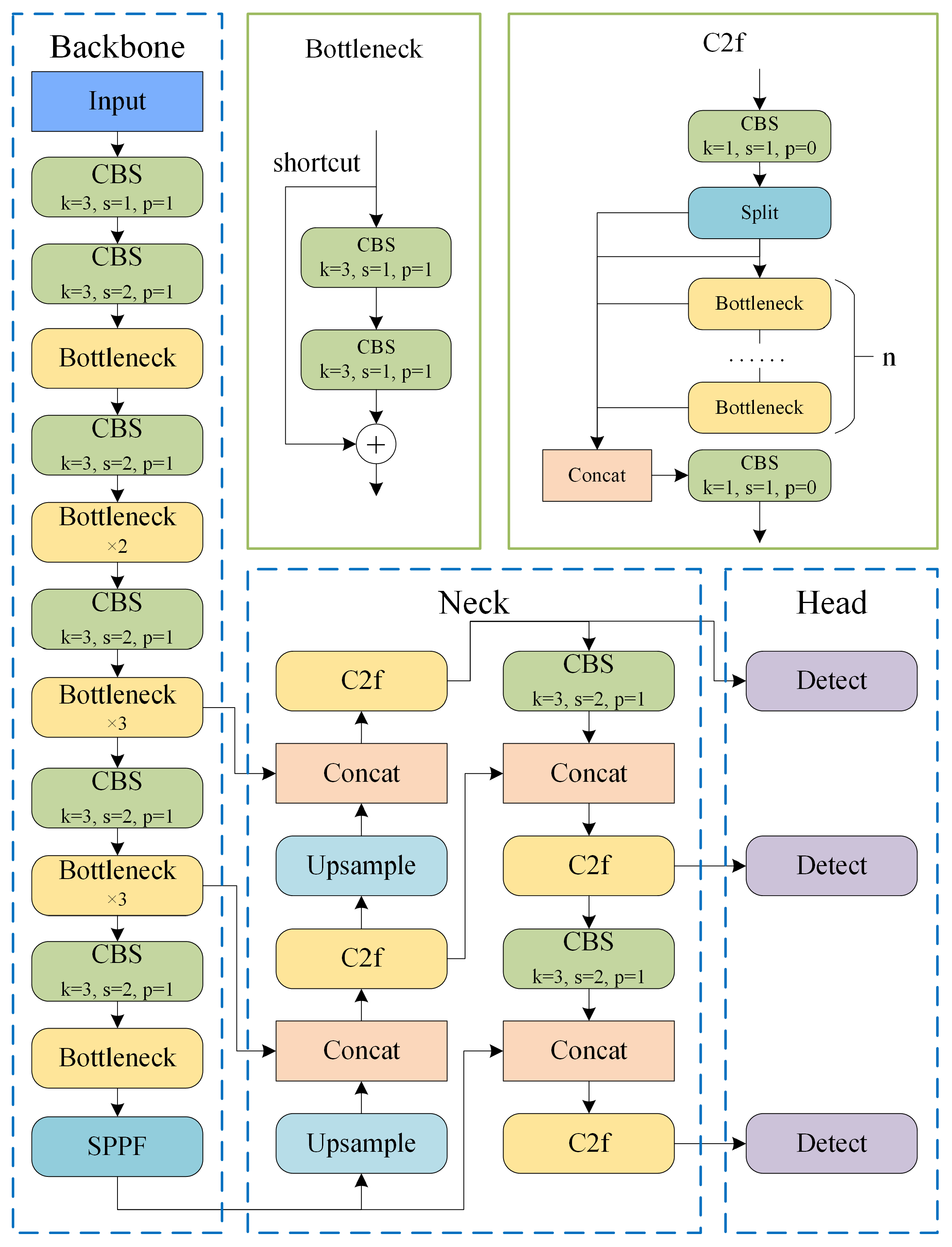

2.3.1. Model Structure

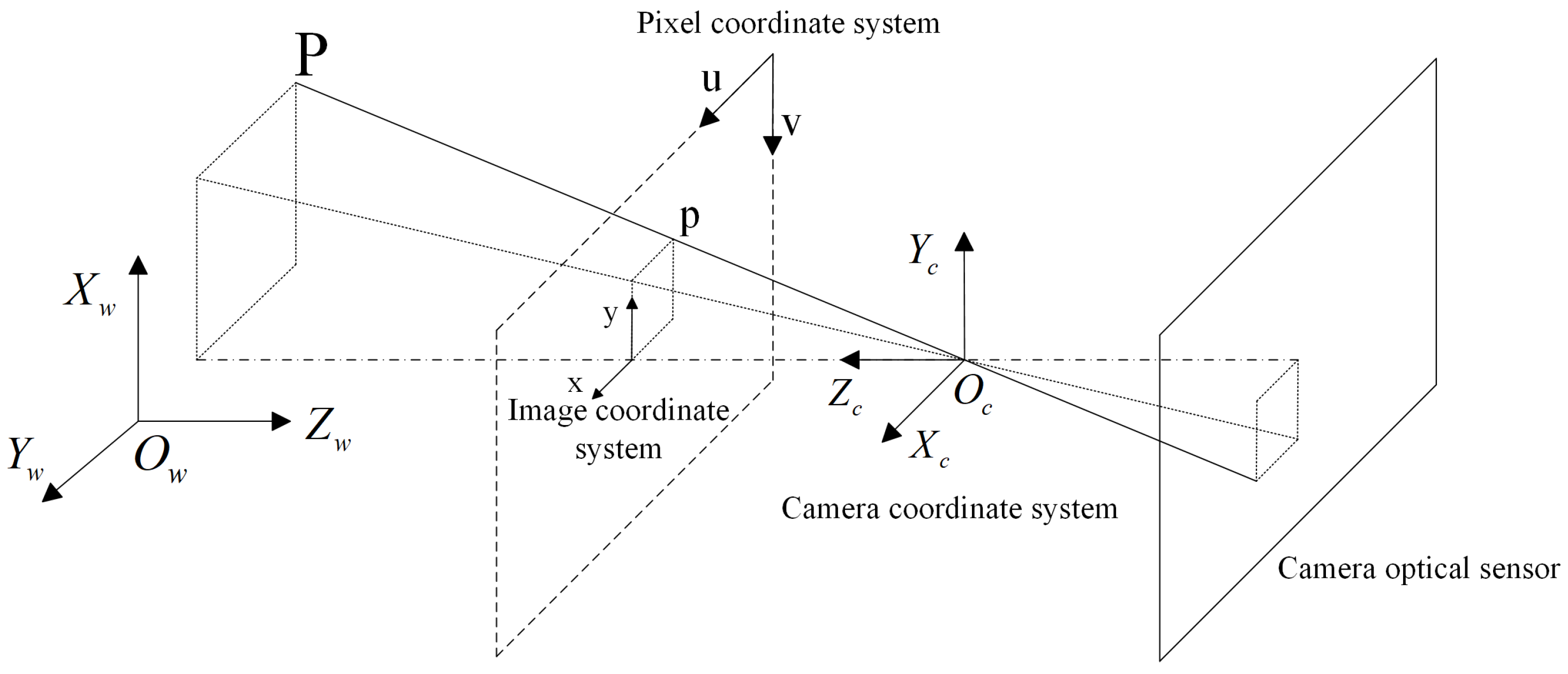

2.3.2. Physics-Informed Method

- Estimate the external parameters and distortion coefficients from the model predictions and a priori physical information;

- Substitute the estimated parameters into (5) to compute the expected corner point locations, then calculate the prediction error and the intersection over union, which depends on the width and height of the predicted box;

- Calculate the loss according to the conventional target detection loss function.
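The second step above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the authors' implementation: Equation (5) is assumed to be the usual pinhole projection with two radial distortion terms, the expected box is assumed to share the predicted box's width and height, and the pose `R`, `t`, the 0.03 m corner spacing, the 5 m distance, and the 10-pixel box size are hypothetical values chosen for the demo. The parameter estimation of the first step is not shown; its outputs are simply passed in.

```python
import numpy as np

def project_points(board_xyz, K, R, t, k1=0.0, k2=0.0):
    """Project board points to pixels (assumed form of Eq. (5): pinhole + radial terms)."""
    cam = board_xyz @ R.T + t                 # board frame -> camera frame
    x = cam[:, 0] / cam[:, 2]                 # normalized image coordinates
    y = cam[:, 1] / cam[:, 2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2     # radial distortion factor
    u = K[0, 0] * x * radial + K[0, 2]
    v = K[1, 1] * y * radial + K[1, 2]
    return np.stack([u, v], axis=1)

def same_size_box_iou(pred_xy, expected_xy, w, h):
    """IoU of two w-by-h boxes centred on the predicted and expected corners."""
    d = np.abs(pred_xy - expected_xy)
    inter = np.clip(w - d[:, 0], 0.0, None) * np.clip(h - d[:, 1], 0.0, None)
    return inter / (2.0 * w * h - inter)

# Initial intrinsics taken from the paper's parameter table; everything else is hypothetical.
K = np.array([[4418.2675, 0.0, 320.0],
              [0.0, 4418.2675, 256.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                                  # hypothetical estimated rotation
t = np.array([0.0, 0.0, 5.0])                  # hypothetical estimated translation (m)
gx, gy = np.meshgrid(np.arange(-1, 2) * 0.03, np.arange(-1, 2) * 0.03)
board = np.stack([gx.ravel(), gy.ravel(), np.zeros(9)], axis=1)  # 3x3 planar corners

expected = project_points(board, K, R, t)      # expected corner locations
predicted = expected + np.array([0.2, 0.0])    # stand-in for detector output
errors = np.linalg.norm(predicted - expected, axis=1)
ious = same_size_box_iou(predicted, expected, w=10.0, h=10.0)
```

Both `errors` and `ious` are differentiable functions of the predicted corner positions, which is what would let them feed a detection-style loss in the third step.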

2.4. Implementation Details

3. Experimental Results and Discussion

3.1. Baselines

3.2. Evaluation Criteria

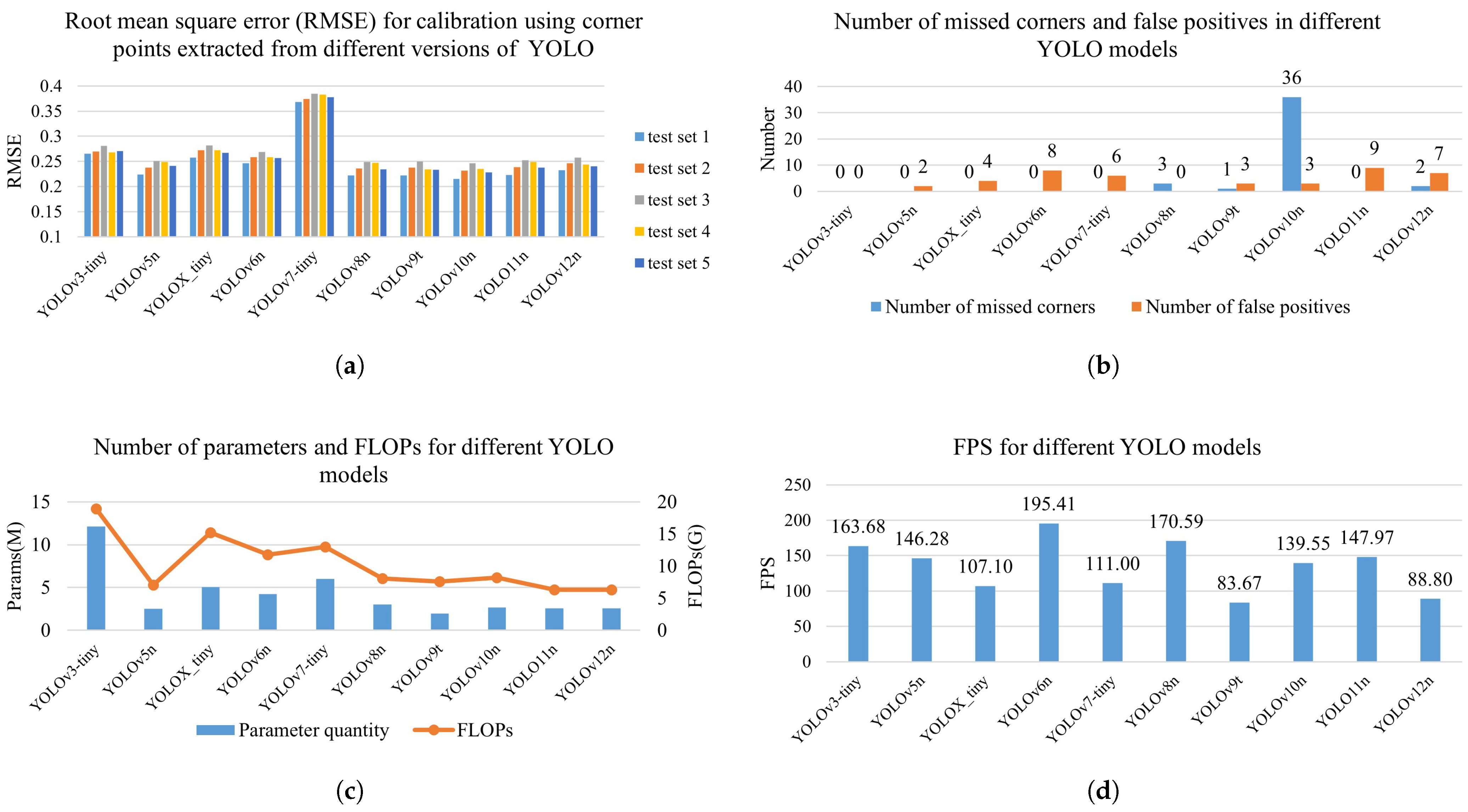

3.3. Comparison of Conventional YOLO Models

3.4. Ablation Experiment

- Add a CBS module with a stride of 1 as the first layer of the backbone.

- Replace the C2f modules in the backbone with Bottleneck modules.

- Add a Bottleneck module as the third layer of the backbone.

- Starting from the second layer of the network, scale the width to 1.5 times its original value and increase the depth of the fourth, sixth, and eighth layers by 1.
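To see what the first modification changes, it helps to compare feature-map sizes. The sketch below applies the standard convolution output-size formula; the 3×3 kernel with padding 1 and the 640 × 512 network input are assumptions (the source gives only the stride and the camera image size), and `conv_out_size` is a hypothetical helper.

```python
def conv_out_size(n, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

# A stride-1 layer keeps the full image resolution ...
size_stride1 = (conv_out_size(640, stride=1), conv_out_size(512, stride=1))
# ... whereas a stride-2 layer halves it.
size_stride2 = (conv_out_size(640, stride=2), conv_out_size(512, stride=2))
```

Under these assumptions, a stride-1 first layer lets the network extract features at full resolution before any downsampling, which is plausibly useful when the target is a sub-pixel corner location.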

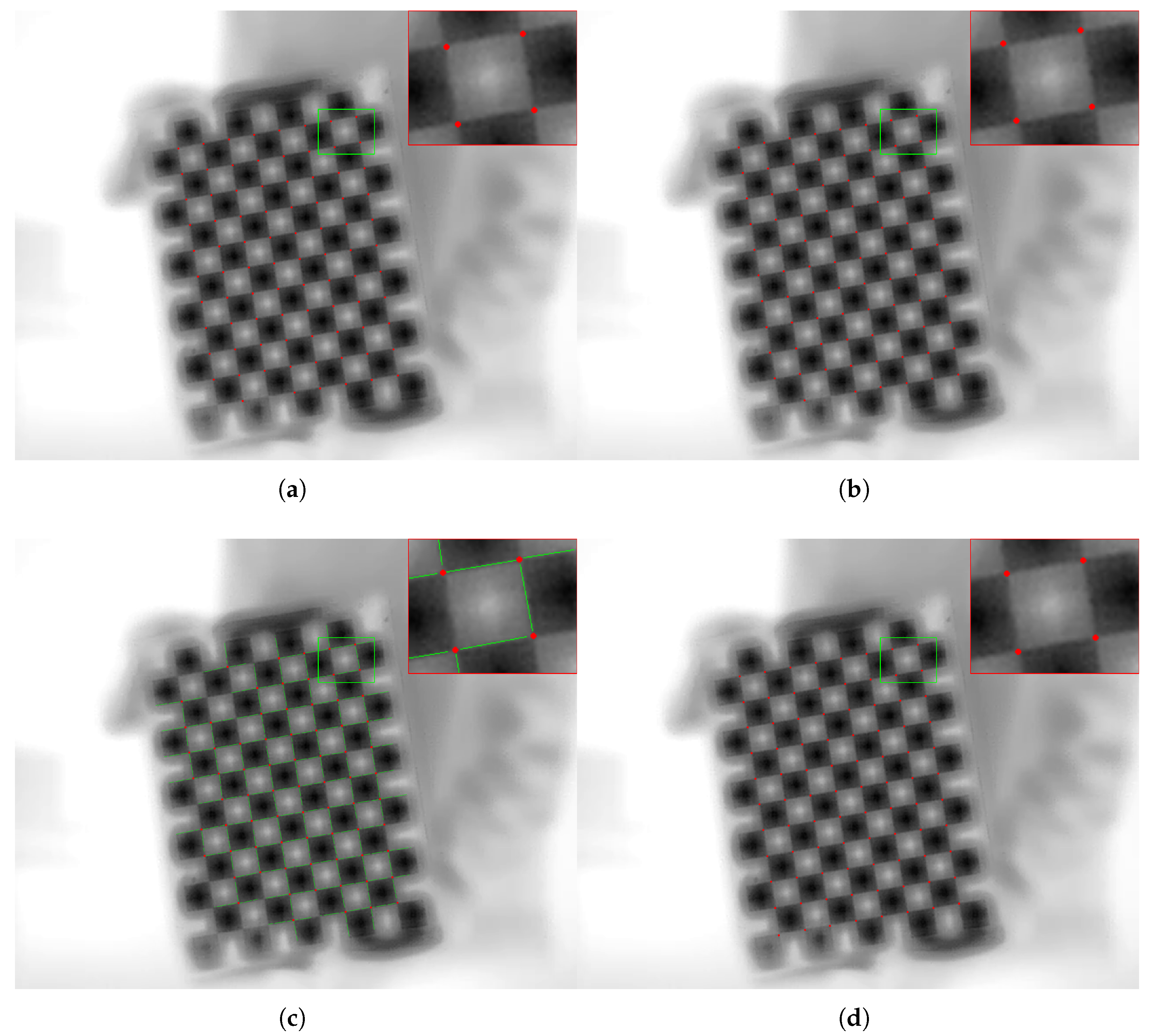

3.5. Corner-Point Localization Experiment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Qu, Z.; Jiang, P.; Zhang, W. Development and application of infrared thermography non-destructive testing techniques. Sensors 2020, 20, 3851.

2. Manullang, M.C.T.; Lin, Y.H.; Lai, S.J.; Chou, N.K. Implementation of thermal camera for non-contact physiological measurement: A systematic review. Sensors 2021, 21, 7777.

3. Wang, J.; Tchapmi, L.P.; Ravikumar, A.P.; McGuire, M.; Bell, C.S.; Zimmerle, D.; Savarese, S.; Brandt, A.R. Machine vision for natural gas methane emissions detection using an infrared camera. Appl. Energy 2020, 257, 113998.

4. Perpetuini, D.; Filippini, C.; Cardone, D.; Merla, A. An overview of thermal infrared imaging-based screenings during pandemic emergencies. Int. J. Environ. Res. Public Health 2021, 18, 3286.

5. Mashekova, A.; Zhao, Y.; Ng, E.Y.K.; Zarikas, V.; Fok, S.C.; Mukhmetov, O. Early detection of the breast cancer using infrared technology–A comprehensive review. Therm. Sci. Eng. Prog. 2022, 27, 101142.

6. Wang, S.; Du, Y.; Zhao, S.; Gan, L. Multi-scale infrared military target detection based on 3X-FPN feature fusion network. IEEE Access 2023, 11, 141585–141597.

7. Chen, H.W.; Gross, N.; Kapadia, R.; Cheah, J.; Gharbieh, M. Advanced automatic target recognition (ATR) with infrared (IR) sensors. In Proceedings of the 2021 IEEE Aerospace Conference (50100), Big Sky, MT, USA, 6–13 March 2021; pp. 1–13.

8. Koide, K.; Menegatti, E. General hand–eye calibration based on reprojection error minimization. IEEE Robot. Autom. Lett. 2019, 4, 1021–1028.

9. Su, S.; Gao, S.; Zhang, D.; Wang, W. Research on the hand–eye calibration method of variable height and analysis of experimental results based on rigid transformation. Appl. Sci. 2022, 12, 4415.

10. Yuan, D.; Zhang, H.; Shu, X.; Liu, Q.; Chang, X.; He, Z.; Shi, G. Thermal infrared target tracking: A comprehensive review. IEEE Trans. Instrum. Meas. 2023, 73, 5000419.

11. Hou, F.; Zhang, Y.; Zhou, Y.; Zhang, M.; Lv, B.; Wu, J. Review on infrared imaging technology. Sustainability 2022, 14, 11161.

12. ElSheikh, A.; Abu-Nabah, B.A.; Hamdan, M.O.; Tian, G.Y. Infrared camera geometric calibration: A review and a precise thermal radiation checkerboard target. Sensors 2023, 23, 3479.

13. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

14. Usamentiaga, R.; Garcia, D.F.; Ibarra-Castanedo, C.; Maldague, X. Highly accurate geometric calibration for infrared cameras using inexpensive calibration targets. Measurement 2017, 112, 105–116.

15. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I 9. Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

16. Rosten, E.; Porter, R.; Drummond, T. Faster and better: A machine learning approach to corner detection. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 105–119.

17. Du, F.; Liu, P.; Zhao, W.; Tang, X. Correlation-guided attention for corner detection based visual tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6836–6845.

18. Wu, H.; Wan, Y. A highly accurate and robust deep checkerboard corner detector. Electron. Lett. 2021, 57, 317–320.

19. Song, W.; Zhong, B.; Sun, X. Building corner detection in aerial images with fully convolutional networks. Sensors 2019, 19, 1915.

20. Dantas, M.S.M.; Bezerra, D.; de Oliveira Filho, A.T.; Barbosa, G.; Rodrigues, I.R.; Sadok, D.H.; Kelner, J.; Souza, R. Automatic template detection for camera calibration. Res. Soc. Dev. 2022, 11, e173111436168.

21. Kang, J.; Yoon, H.; Lee, S.; Lee, S. Sparse checkerboard corner detection from global perspective. In Proceedings of the 2021 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Terengganu, Malaysia, 13–15 September 2021; pp. 12–17.

22. Zhu, H.; Zhou, Z.; Liang, B.; Han, X.; Tao, Y. Sub-pixel checkerboard corner localization for robust vision measurement. IEEE Signal Process. Lett. 2023, 31, 21–25.

23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

24. Xicai, L.; Qinqin, W.; Yuanqing, W. Binocular vision calibration method for a long-wavelength infrared camera and a visible spectrum camera with different resolutions. Opt. Exp. 2021, 29, 3855–3872.

25. Wang, G.; Zheng, H.; Zhang, X. A robust checkerboard corner detection method for camera calibration based on improved YOLOX. Front. Phys. 2022, 9, 819019.

26. Son, M.; Ko, K. Multiple projector camera calibration by fiducial marker detection. IEEE Access 2023, 11, 78945–78955.

27. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 15–17 September 1988; pp. 147–151.

28. Shi, D.; Huang, F.; Yang, J.; Jia, L.; Niu, Y.; Liu, L. Improved Shi–Tomasi sub-pixel corner detection based on super-wide field of view infrared images. Appl. Opt. 2024, 63, 831–837.

29. Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; IEEE: Piscataway, NJ, USA, 1994; pp. 593–600.

30. Du, X.; Jiang, B.; Wu, L.; Xiao, M. Checkerboard corner detection method based on neighborhood linear fitting. Appl. Opt. 2023, 62, 7736–7743.

31. Dan, X.; Gong, Q.; Zhang, M.; Li, T.; Li, G.; Wang, Y. Chessboard corner detection based on EDLines algorithm. Sensors 2022, 22, 3398.

32. Lü, X.; Meng, L.; Long, L.; Wang, P. Comprehensive improvement of camera calibration based on mutation particle swarm optimization. Measurement 2022, 187, 110303.

33. Lawal, Z.K.; Yassin, H.; Lai, D.T.C.; Che Idris, A. Physics-informed neural network (PINN) evolution and beyond: A systematic literature review and bibliometric analysis. Big Data Cognit. Comput. 2022, 6, 140.

34. Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly: Sebastopol, CA, USA, 2008.

35. Fetić, A.; Jurić, D.; Osmanković, D. The procedure of a camera calibration using Camera Calibration Toolbox for MATLAB. In Proceedings of the 35th International Convention MIPRO, Opatija, Croatia, 21–25 May 2012; pp. 1752–1757.

36. Conrady, A.E. Lens-systems, decentered. Mon. Not. Roy. Astron. Soc. 1919, 79, 384–390.

37. Brown, D.C. Decentering distortion of lenses. Photogramm. Eng. 1966, 32, 444–462.

38. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

| Camera Performance Specifications | |
|---|---|
| Model | ZH20N |
| Focal Length | 44.5 mm |
| Image Width | 640 pixels |
| Image Length | 512 pixels |
| Digital Zoom Ratio | 1.19 |
| Focal Length in 35 mm Film | 196 mm |

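| Parameter | Focal length (x) | Focal length (y) | Principal point (x) | Principal point (y) |
|---|---|---|---|---|
| Value (pixels) | 4418.2675 | 4418.2675 | 320.0 | 256.0 |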
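The initial intrinsic values in this table can be reproduced from the camera specifications. The sketch below is an inference rather than a derivation stated in the source: it assumes the focal length in pixels is the 35 mm equivalent focal length, multiplied by the digital zoom ratio and scaled by the ratio of the image diagonal in pixels to the diagonal of a 36 mm × 24 mm film frame, and that the principal point sits at the image centre. The function name `focal_length_pixels` is hypothetical.

```python
import math

def focal_length_pixels(f35_mm, zoom, width_px, height_px):
    """Focal length in pixels from a 35 mm equivalent focal length (assumed conversion)."""
    diag_px = math.hypot(width_px, height_px)   # image diagonal in pixels
    diag_35mm = math.hypot(36.0, 24.0)          # 35 mm film frame diagonal in mm
    return f35_mm * zoom * diag_px / diag_35mm

fx = focal_length_pixels(196.0, 1.19, 640, 512)  # about 4418.27 pixels
cx, cy = 640 / 2, 512 / 2                         # image centre
```

With the ZH20N values (196 mm, zoom 1.19, 640 × 512 pixels), this agrees with the tabulated 4418.2675 to within rounding, which suggests, but does not prove, that the table was obtained this way.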

| Corner Detection Method | RMSE | MRE | Maximum Error | Standard Deviation | Missed Corners | False Positive Corners |
|---|---|---|---|---|---|---|
| YOLOv8n | 0.2377 | 0.1901 | 1.5575 | 0.1427 | 3 | 0 |
| After step 1 | 0.2346 | 0.1868 | 1.4684 | 0.1419 | 2 | 1 |
| After step 2 | 0.2334 | 0.1867 | 1.4347 | 0.1401 | 0 | 3 |
| After step 3 | 0.2328 | 0.1862 | 1.5940 | 0.1397 | 1 | 2 |
| After step 4 (Ours) | 0.2277 | 0.1807 | 1.6009 | 0.1384 | 2 | 1 |
| MATLAB [35] | 0.2332 | 0.1835 | 1.8645 | 0.1438 | 45 (0.2%) | 0 |
| OpenCV [34] | 0.3027 | 0.2502 | 1.4504 | 0.1702 | 18,656 (84.8%) | 0 |
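The four error columns are linked by a simple identity that is handy for sanity-checking results like those above. The sketch below reads the MRE column as the mean of the per-corner localization errors and the standard deviation as the population form; both readings are assumptions, although the reported rows are numerically consistent with them, since RMSE² = mean² + SD². The helper names and the demo error values are hypothetical.

```python
import numpy as np

def corner_error_stats(errors):
    """Summary statistics of per-corner localization errors (in pixels)."""
    errors = np.asarray(errors, dtype=float)
    return {
        "rmse": float(np.sqrt(np.mean(errors ** 2))),
        "mean": float(errors.mean()),
        "max": float(errors.max()),
        "std": float(errors.std()),   # population standard deviation (ddof=0)
    }

def rmse_from_mean_std(mean, std):
    """RMSE implied by a mean error and its population standard deviation."""
    return float(np.hypot(mean, std))

demo = corner_error_stats([0.1, 0.2, 0.3, 0.2])   # made-up errors
reported = rmse_from_mean_std(0.1901, 0.1427)     # YOLOv8n row: MRE and SD
```

For the YOLOv8n row, combining the reported MRE and standard deviation in this way gives a value that matches the tabulated RMSE of 0.2377 to four decimal places.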

| Corner Detection Method | Training Data | RMSE | MRE | Maximum Error | Standard Deviation | Missed Corners | False Positive Corners |
|---|---|---|---|---|---|---|---|
| Ours | Training set | 0.2572 | 0.2100 | 1.6133 | 0.1485 | 2 | 1 |
| Ours | Test set | 0.2452 | 0.1977 | 1.6579 | 0.1450 | 0 | 0 |

| Corner Detection Method | Training Stage | Training Data | Epochs | RMSE | MRE | Maximum Error | Standard Deviation | Missed Corners | False Positive Corners |
|---|---|---|---|---|---|---|---|---|---|
| Ours | Training stage 1 | Training set | 100 | 0.2277 | 0.1807 | 1.6009 | 0.1384 | 2 | 1 |
| Ours | +Training stage 2 | Training set | 50 | 0.2174 | 0.1712 | 1.5800 | 0.1340 | 2 | 0 |
| Ours | +Training stage 2 | Training set | 100 | 0.2140 | 0.1691 | 1.5030 | 0.1312 | 2 | 2 |
| Ours | +Training stage 2 | Test set | 50 | 0.2060 | 0.1597 | 1.5292 | 0.1301 | 0 | 0 |
| Ours | +Training stage 2 | Test set | 100 | 0.1625 | 0.1278 | 1.2318 | 0.1003 | 0 | 0 |
| YOLOv8n | +Training stage 2 | Training set | 50 | 0.2325 | 0.1847 | 1.5595 | 0.1411 | 4 | 0 |
| YOLOv8n | +Training stage 2 | Training set | 100 | 0.2191 | 0.1725 | 1.5539 | 0.1351 | 3 | 0 |
| YOLOv8n | +Training stage 2 | Test set | 50 | 0.2144 | 0.1669 | 1.5080 | 0.1345 | 0 | 1 |
| YOLOv8n | +Training stage 2 | Test set | 100 | 0.1864 | 0.1452 | 1.3698 | 0.1168 | 0 | 1 |
| MATLAB [35] | – | – | – | 0.2332 | 0.1835 | 1.8645 | 0.1438 | 45 (0.2%) | 0 |
| OpenCV [34] | – | – | – | 0.3027 | 0.2502 | 1.4504 | 0.1702 | 18,656 (84.8%) | 0 |
| LSCCL [22] | – | – | – | 0.2593 | 0.2121 | 1.6463 | 0.1492 | 17 | 14 |
| EDLines-based [31] | – | – | – | 0.3955 | 0.3210 | 3.5541 | 0.2308 | 2445 (11.1%) | 19 |

| Parameter | Focal length (x) /Pixel | Focal length (y) /Pixel | Principal point (x) /Pixel | Principal point (y) /Pixel | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Direct linear transformation | 4418.268 | 4418.268 | 320.000 | 256.000 | – | – | – | – | – |
| MATLAB [35] | 4527.619 | 4533.591 | 288.734 | 249.268 | 3.45 | −512.70 | | | |
| OpenCV [34] | 4474.011 | 4487.650 | 308.506 | 173.580 | 2.56 | −219.09 | | | |
| LSCCL [22] | 4545.433 | 4551.421 | 279.798 | 244.823 | 3.28 | −431.80 | | | |
| EDLines-based [31] | 4505.926 | 4510.617 | 281.054 | 254.483 | 2.95 | −349.50 | | | |
| Ours | 4489.067 | 4496.667 | 307.170 | 268.942 | 2.98 | −376.13 | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zuo, Z.; Wu, Z.; Wei, J.; Wu, P.; Huang, S.; Cheng, Z. A Checkerboard Corner Detection Method for Infrared Thermal Camera Calibration Based on Physics-Informed Neural Network. Photonics 2025, 12, 847. https://doi.org/10.3390/photonics12090847
