Enhanced Defect Detection in Additive Manufacturing via Virtual Polarization Filtering and Deep Learning Optimization

Abstract

1. Introduction

2. Principle

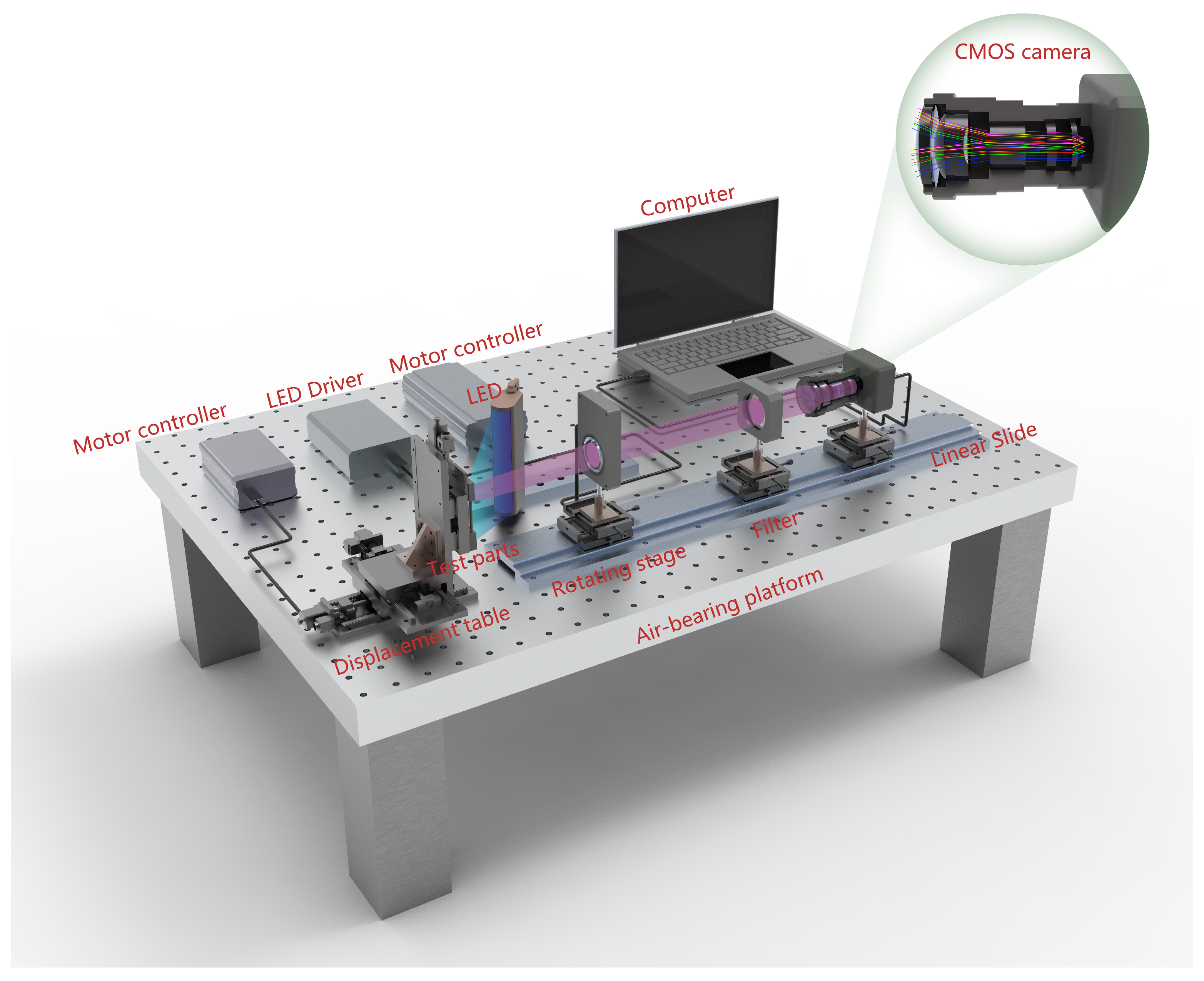

2.1. Multi-Source Polarized Imaging System

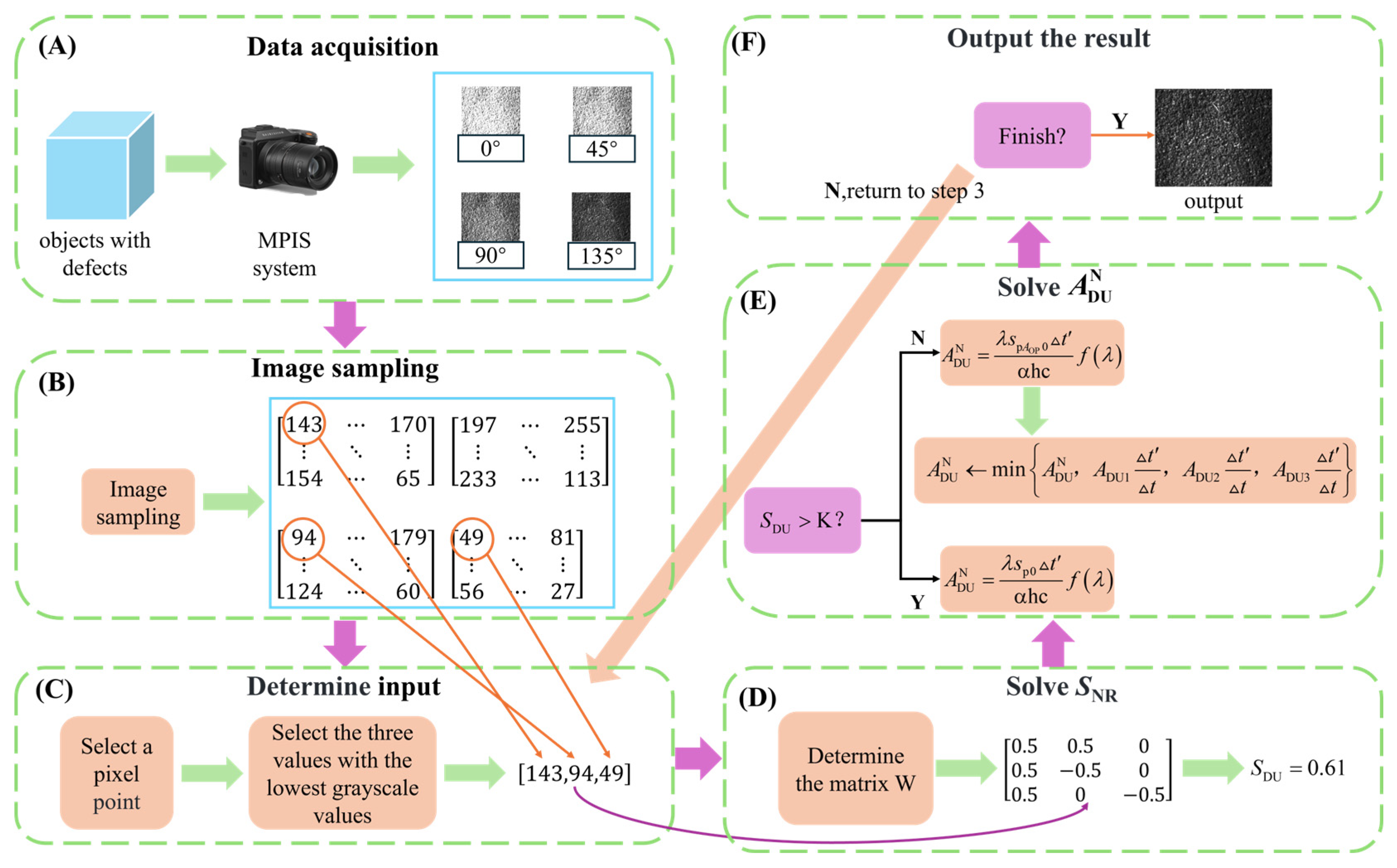

2.2. Image Enhancement Based on Virtual Polarization Filtering Algorithm

2.3. Detection Process

3. The IEVPF Methodology

3.1. Feasibility Proof of IEVPF

3.2. Principle of IEVPF Algorithm

3.3. Optimization and Processing for Overexposure in the IEVPF Algorithm

3.3.1. IEVPF Algorithm Optimization

3.3.2. Processing for Overexposure

- (1) If , then . In this case all input images must be overexposed, the algorithm cannot recover any information from them, and the output grayscale value is 255.

- (2) If is not much greater than , then non-overexposed images may exist among the input images. If they do, the grayscale value calculated by the original method must be greater than the product of the non-overexposed grayscale value and the exposure time ratio , so it has no filtering effect. Consequently, let to achieve overexposure processing.

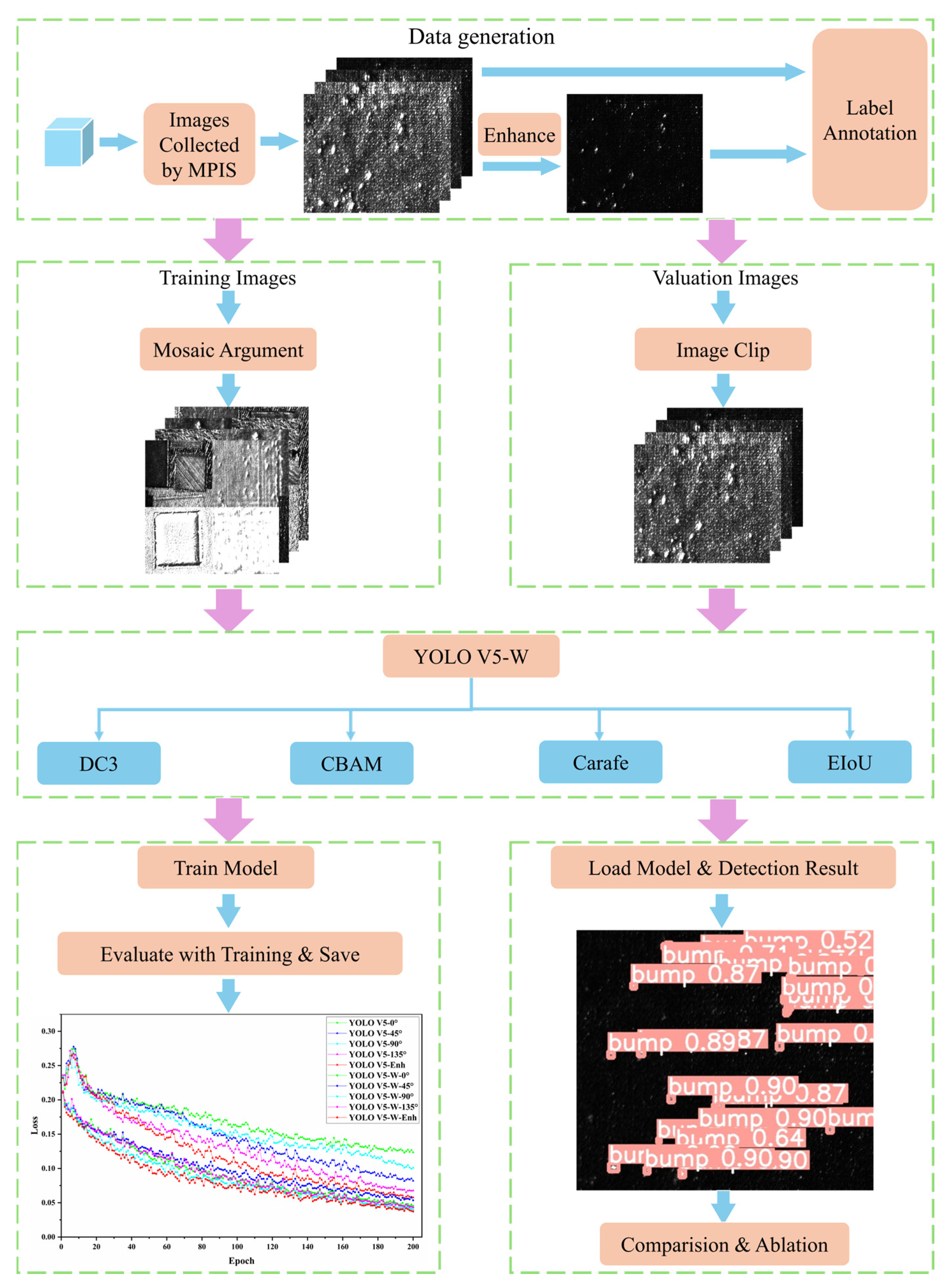

4. The Improvement of the Automated Deep Learning-Based Defect Detection Algorithm

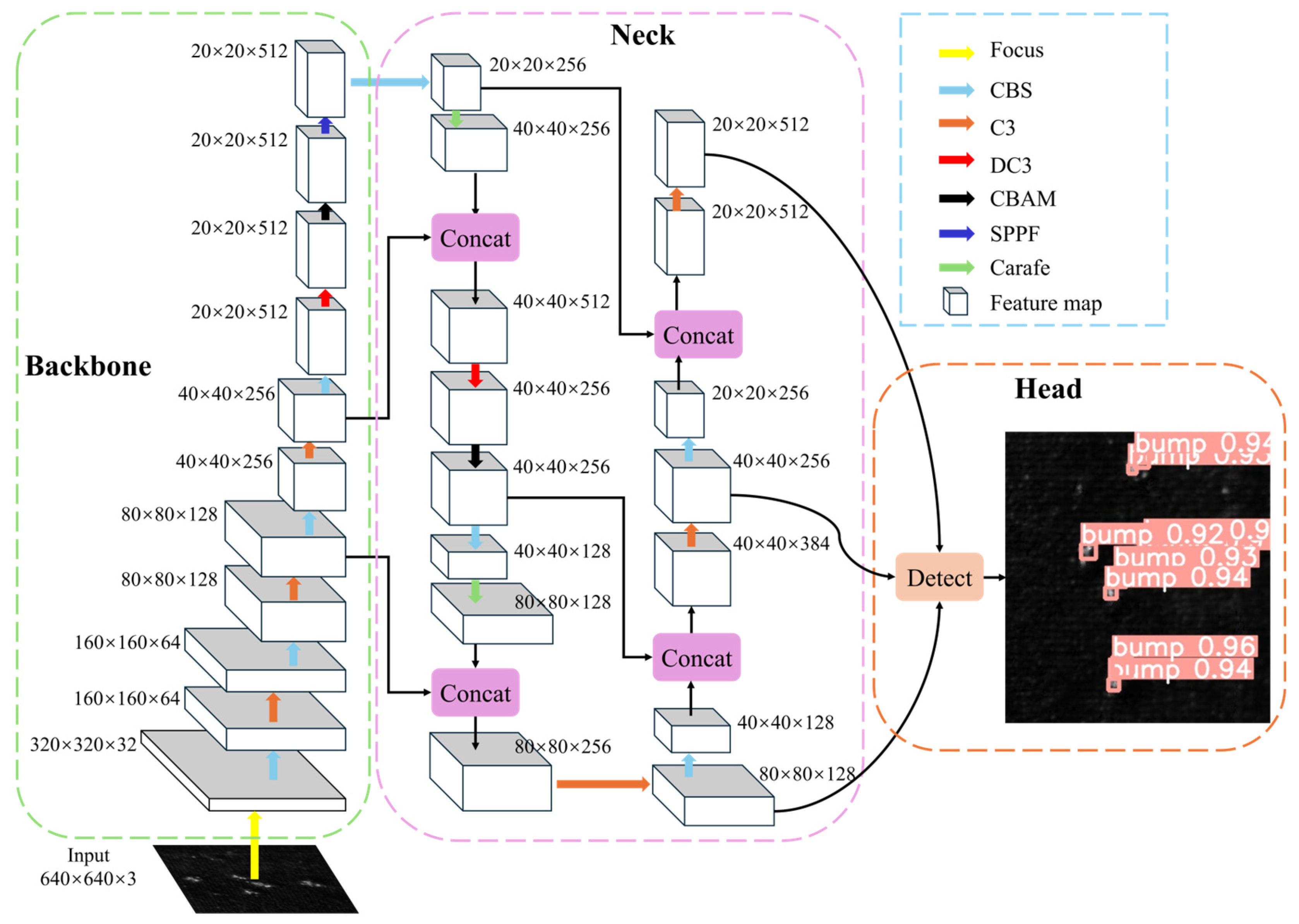

4.1. YOLO V5 Model

4.2. YOLO V5-W

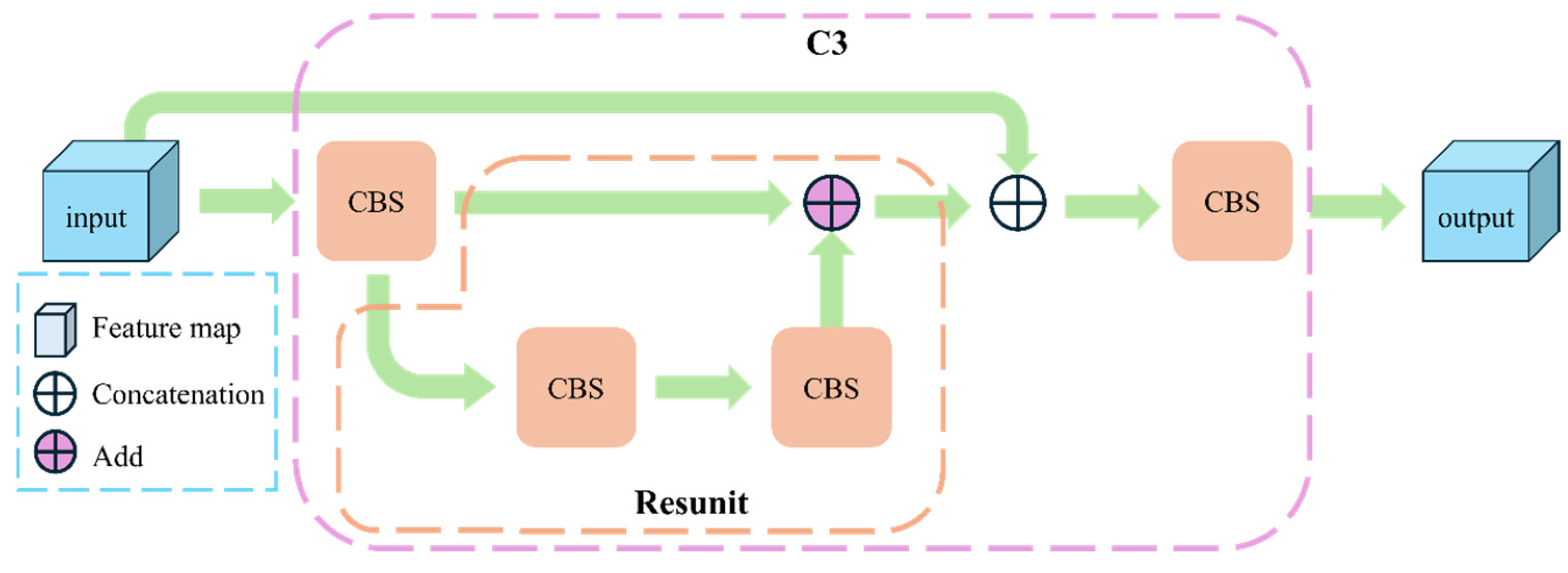

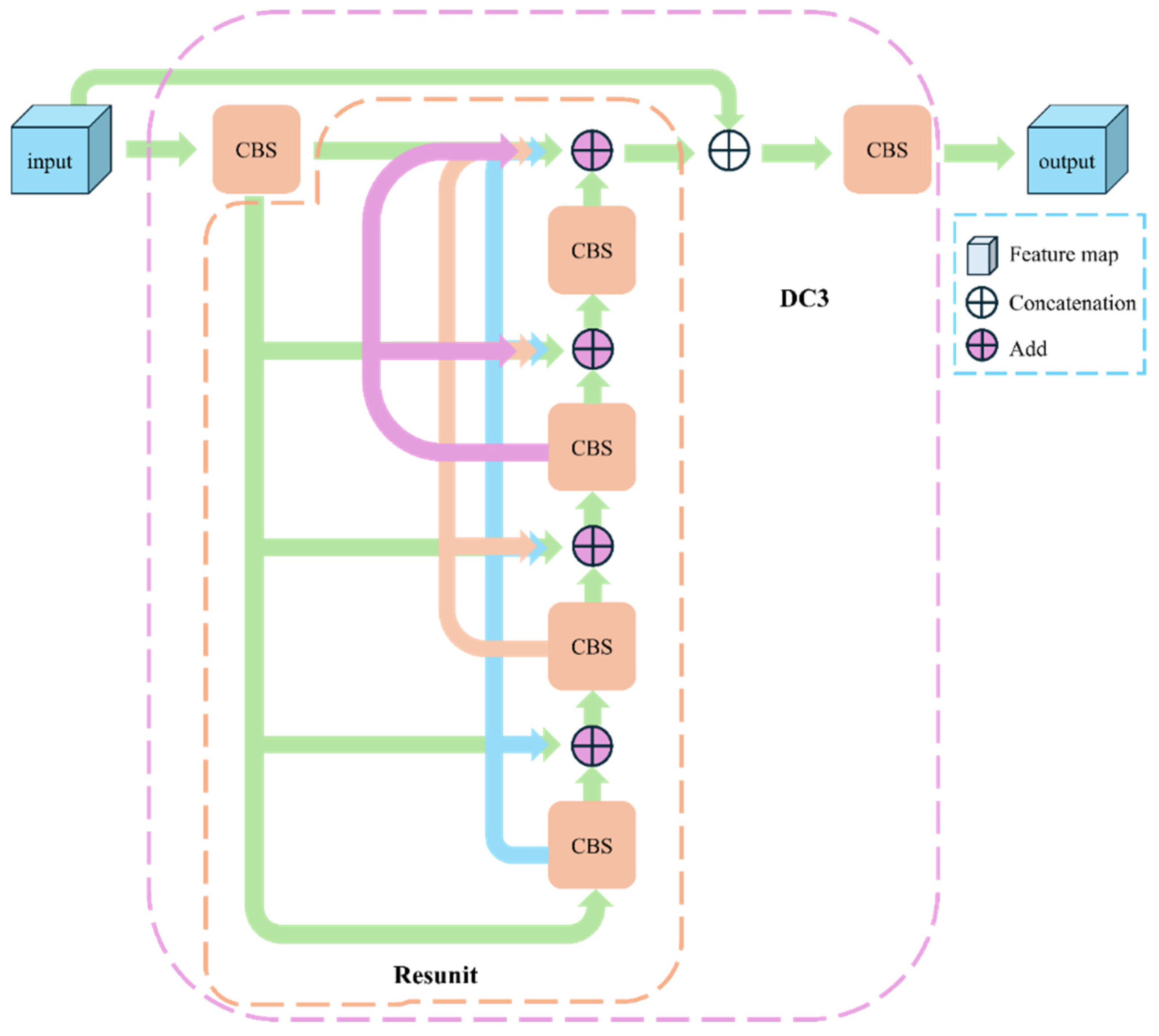

4.2.1. Algorithm Optimization

4.2.2. Introduction of Attention Mechanism

- (1) The channel attention mechanism (CAM) is expressed as follows:

  $$M_c(F) = \sigma\left(W_1\left(W_0\left(F^{c}_{avg}\right)\right) + W_1\left(W_0\left(F^{c}_{max}\right)\right)\right)$$

  where $\sigma$ represents the sigmoid activation function, $W_0$ and $W_1$ represent the parameters of the multi-layer perceptron (MLP), and $F^{c}_{avg}$ and $F^{c}_{max}$ are the average-pooled and max-pooled features. CAM proceeds as follows. First, global max pooling and global average pooling are applied to the input feature map $F$ to obtain two feature maps of size 1 × 1 × C. These two feature maps are then fed into a two-layer MLP, generating two corresponding outputs. The two outputs are combined by element-wise addition and passed through a sigmoid activation function, yielding the channel attention feature $M_c$. The channel attention mechanism adaptively modulates the importance of each channel in the feature map, enhancing the network's sensitivity to critical features.

- (2) The spatial attention mechanism (SAM) is expressed as follows:

  $$M_s(F') = \sigma\left(f^{7\times 7}\left(\left[\mathrm{AvgPool}(F');\ \mathrm{MaxPool}(F')\right]\right)\right)$$

  where $f^{7\times 7}$ represents the convolution operation with a kernel size of 7 × 7. SAM proceeds as follows. First, the channel attention feature $M_c$ is multiplied with the original feature map to generate the input feature map $F'$ for the spatial attention mechanism. Max pooling and average pooling are then applied to $F'$ along the channel dimension to obtain two feature maps of size H × W × 1. These two maps are concatenated and processed by a convolution with a 7 × 7 kernel, and the result is passed through a sigmoid activation function, yielding the spatial attention feature $M_s$. Finally, $M_s$ is multiplied with $F'$ to generate the optimized feature output. The spatial attention mechanism enhances the network's sensitivity to critical spatial regions by adaptively modulating the importance of spatial locations in the feature map.
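The two attention steps described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the ReLU between the two MLP layers, the channel reduction ratio `r`, the zero padding, and the random weights are all assumptions taken from the usual CBAM formulation, and the function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(feat, w0, w1):
    """feat: (C, H, W); w0: (C//r, C); w1: (C, C//r). Returns (C,) weights."""
    avg = feat.mean(axis=(1, 2))   # global average pooling -> (C,)
    mx = feat.max(axis=(1, 2))     # global max pooling -> (C,)
    # Two-layer MLP applied to both pooled vectors (ReLU is an assumption).
    mlp = lambda v: w1 @ np.maximum(w0 @ v, 0.0)
    return sigmoid(mlp(avg) + mlp(mx))

def spatial_attention(feat, kernel):
    """feat: (C, H, W); kernel: (2, 7, 7). Returns (H, W) weights."""
    # Pool along the channel axis and stack the two H x W maps.
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    h, w = feat.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)

def cbam(feat, w0, w1, kernel):
    # Channel attention first, then spatial attention on the refined map.
    refined = feat * channel_attention(feat, w0, w1)[:, None, None]
    return refined * spatial_attention(refined, kernel)[None, :, :]

# Toy example with random weights (illustrative values only).
rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 6, 4
feat = rng.standard_normal((C, H, W))
w0 = rng.standard_normal((C // r, C)) * 0.1
w1 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out = cbam(feat, w0, w1, kernel)
print(out.shape)
```

Because both attention maps lie in (0, 1), the output has the same shape as the input and every element is scaled down rather than amplified; the network learns which channels and locations to suppress least.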

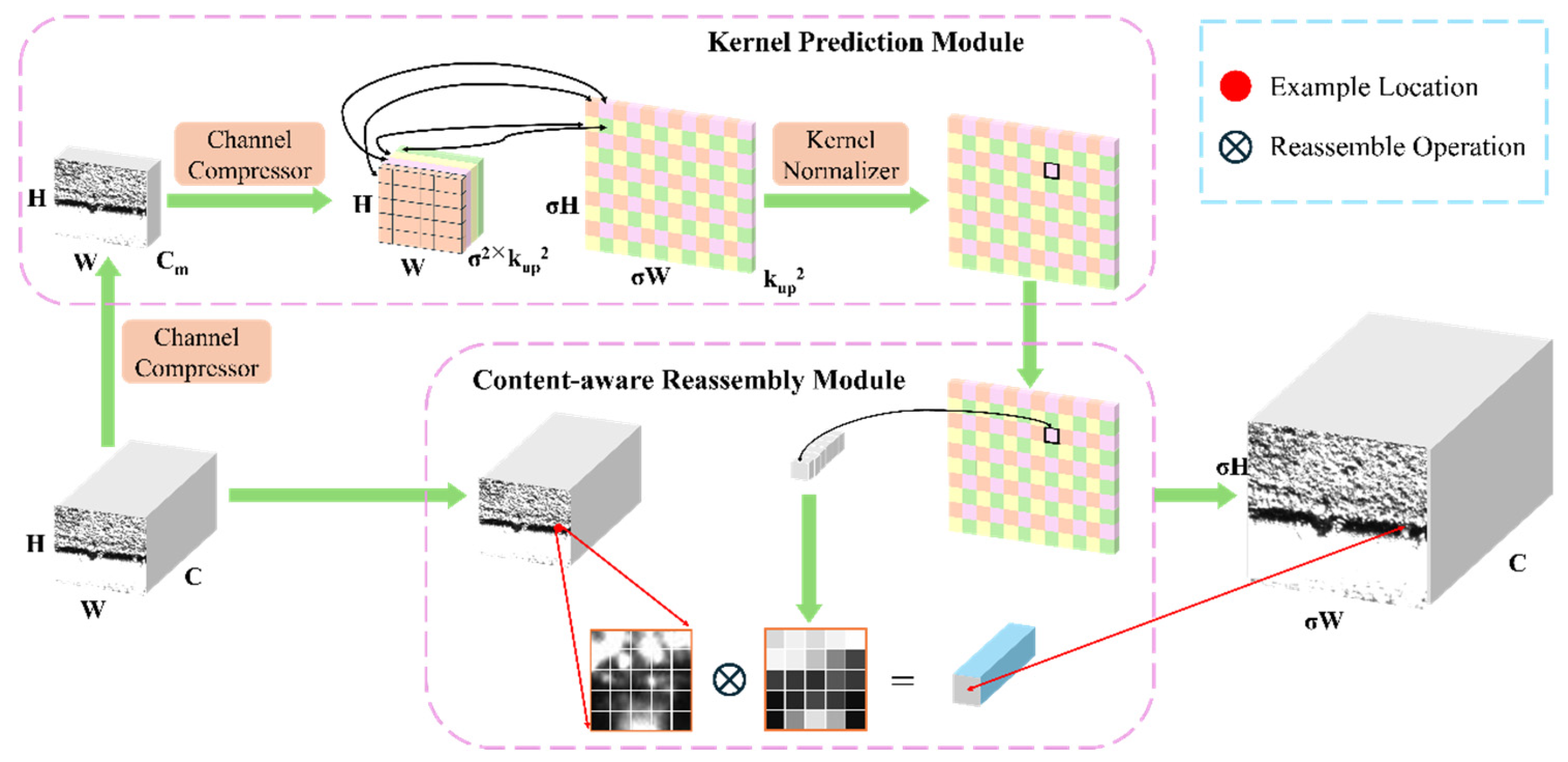

4.2.3. The Improvement of the Upsampling Operator

- (1) The upsampling kernel prediction module first compresses the channels of the feature map, converting the original feature map from size $H \times W \times C$ to $H \times W \times C_m$, where $C_m$ is the number of compressed channels; this step is implemented by a convolution operation. Next, a further convolution is performed on the compressed feature map to generate a feature map of size $H \times W \times \sigma^2 k_{up}^2$. Here, $\sigma$ represents the upsampling scale factor, and $k_{up}$ is the size of the upsampling kernel. Finally, this feature map is expanded into an upsampling kernel of shape $\sigma H \times \sigma W \times k_{up}^2$. To ensure the stability of the convolution operation, the upsampling kernel is normalized so that its weights sum to 1, thereby preserving the overall energy and information quantity in the feature map.

- (2) The feature reorganization module then uses the upsampling kernel to project the feature information from the low-resolution feature map onto the high-resolution domain. Specifically, for each spatial location in the output, the predicted upsampling kernel is used to compute a weighted sum over the corresponding neighbourhood of the low-resolution map, generating the high-resolution feature map. This weighted reassembly helps restore detail, enhancing the spatial resolution and feature representation of the upsampled feature map.
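The reorganization step can be sketched as follows, assuming the kernel prediction module has already produced one $k_{up} \times k_{up}$ kernel per output location. In this sketch the predicted kernels are replaced by random logits, softmax is used as one way to make the weights sum to 1, and borders are zero padded; these choices and the function names are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def reassemble(feat, kernel_logits, scale, k_up):
    """feat: (C, H, W); kernel_logits: (scale*H, scale*W, k_up*k_up).

    Returns the upsampled map of shape (C, scale*H, scale*W).
    """
    c, h, w = feat.shape
    kernels = softmax(kernel_logits, axis=-1)   # weights at each location sum to 1
    pad = k_up // 2
    padded = np.pad(feat, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    out = np.empty((c, scale * h, scale * w))
    for i in range(scale * h):
        for j in range(scale * w):
            si, sj = i // scale, j // scale     # source location in the low-res map
            patch = padded[:, si:si + k_up, sj:sj + k_up]    # (C, k_up, k_up)
            # Weighted sum over the neighbourhood, shared across channels.
            out[:, i, j] = (patch.reshape(c, -1) * kernels[i, j]).sum(axis=1)
    return out

# Toy example: a constant feature map and random kernel logits.
rng = np.random.default_rng(0)
scale, k_up = 2, 3
feat = np.ones((4, 4, 4))
logits = rng.standard_normal((scale * 4, scale * 4, k_up * k_up))
up = reassemble(feat, logits, scale, k_up)
print(up.shape)
```

With a constant input, every interior output value equals the input value regardless of the kernel, which is the practical meaning of normalizing the weights to sum to 1. Only the zero-padded border deviates.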

4.2.4. The Improvement of the Loss Function

5. Experimental Results and Discussion

5.1. IEVPF Experiment

5.1.1. IEVPF Evaluation Criteria

5.1.2. IEVPF Experimental Results and Discussion

- (1) IEVPF's results

- (2) Analysis of experimental results

5.2. Surface Defect Detection Experiment

5.2.1. Evaluation Criteria

5.2.2. Hyperparameter Settings

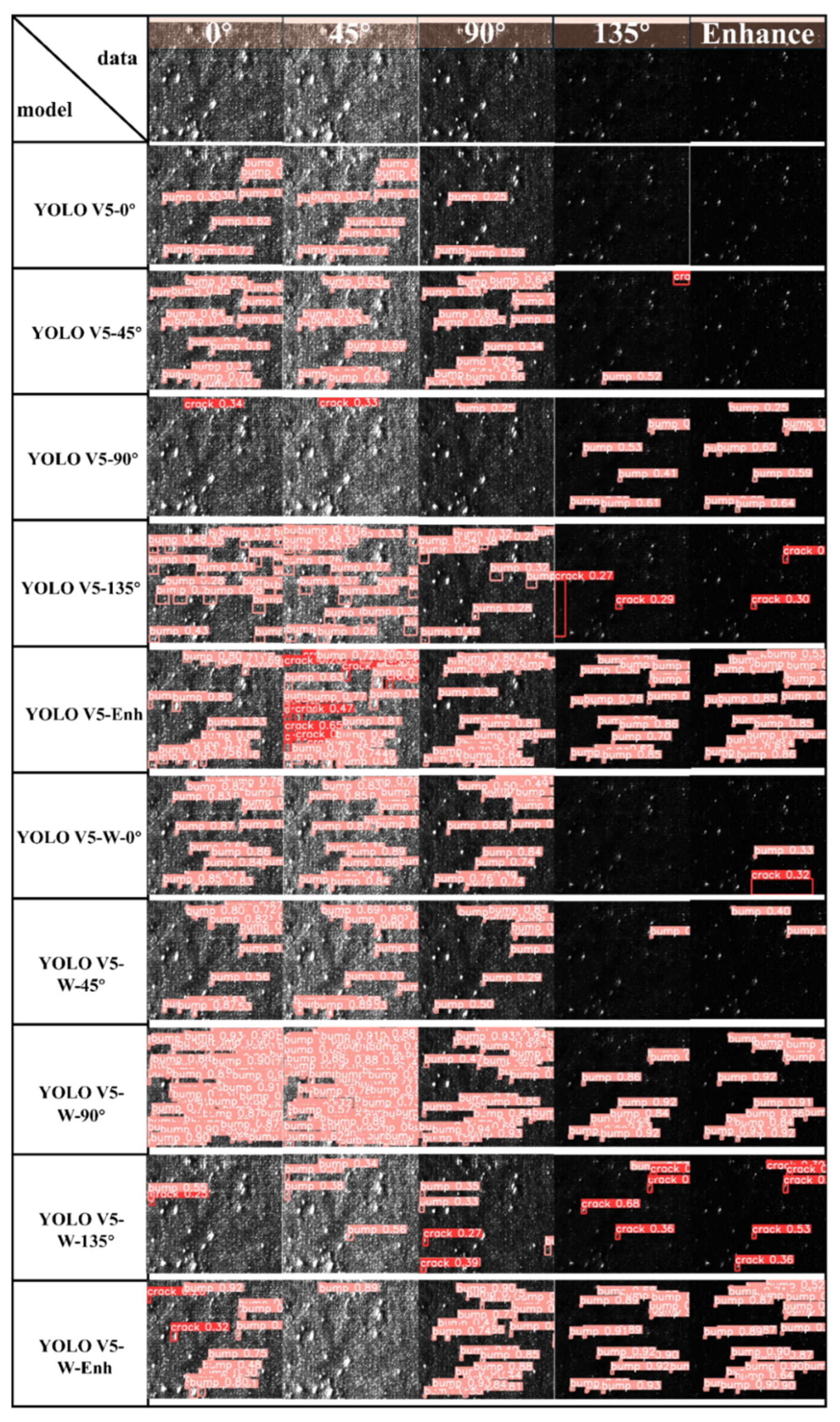

5.2.3. Results and Discussion

- (1) For the same model, the model trained on the IEVPF dataset has a lower Loss value than the model trained on the datasets of the four polarization directions.

- (2) For the same dataset, the Loss value of YOLO V5-W is lower than that of YOLO V5.

- (3) The YOLO V5-W model trained on the IEVPF dataset has the lowest Loss value.

- (1) On the five datasets, YOLO V5-W reduces the Loss value relative to YOLO V5 by 51.1%, 34.5%, 50.6%, 32.0%, and 33.1%, an average reduction of 40.3%. YOLO V5-W therefore achieves a lower Loss than YOLO V5 on every dataset.

- (2) Compared to the YOLO V5 models trained on the four polarization direction datasets, the YOLO V5 model trained on the IEVPF dataset has Loss values reduced by 37.2%, 24.7%, 31.0%, and 12.6%, an average reduction of 26.4%. The YOLO V5-W model trained on the IEVPF dataset has Loss values reduced by 14.0%, 23.1%, 6.7%, and 14.0% compared to the models trained on the four polarization direction datasets, an average reduction of 14.5%. These results indicate that the virtual polarization filtering-enhanced dataset is better suited to target detection tasks, and the smaller spread for YOLO V5-W suggests that it adapts more robustly to dataset variations.
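The Loss reductions quoted above are relative reductions and can be recomputed from the reported Loss values (×10⁻²). The short script below is a cross-check under that reading; the dictionary layout and the helper name `relative_reduction` are illustrative choices, not part of the original work.

```python
# Reported Loss values (x 10^-2) for each training dataset.
loss_v5 = {"0": 15.34, "45": 12.80, "90": 13.98, "135": 11.03, "Enh": 9.64}
loss_v5w = {"0": 7.50, "45": 8.39, "90": 6.91, "135": 7.50, "Enh": 6.45}
directions = ("0", "45", "90", "135")

def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline

# Effect of the architecture: YOLO V5-W versus YOLO V5 on the same dataset.
arch = {k: relative_reduction(loss_v5[k], loss_v5w[k]) for k in loss_v5}
arch_mean = sum(arch.values()) / len(arch)

# Effect of the dataset: IEVPF-enhanced versus each polarization direction.
data_v5 = [relative_reduction(loss_v5[k], loss_v5["Enh"]) for k in directions]
data_v5w = [relative_reduction(loss_v5w[k], loss_v5w["Enh"]) for k in directions]
data_v5_mean = sum(data_v5) / len(data_v5)
data_v5w_mean = sum(data_v5w) / len(data_v5w)

for k, v in arch.items():
    print(f"{k:>3}: {v:.1f}%")
print(f"mean architecture effect: {arch_mean:.1f}%")
print(f"mean dataset effect (V5 / V5-W): {data_v5_mean:.1f}% / {data_v5w_mean:.1f}%")
```

Averaging the unrounded reductions can differ from the quoted averages in the last digit, since the quoted figures average the already rounded per-dataset values.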

- (1) On the five datasets, the precision of the YOLO V5-W model is higher than that of YOLO V5 by 11.8, 14.6, 7.3, 9.3, and 11.2 percentage points, an average gain of 10.8 percentage points. YOLO V5-W therefore achieves a higher precision than YOLO V5 on every dataset.

- (2) The YOLO V5 model trained on the IEVPF dataset differs in precision from the models trained on the four polarization direction datasets by 3.0, 7.1, −2.4, and 3.4 percentage points, an average gain of 2.8 percentage points; the only decrease is relative to the 90° dataset. The YOLO V5-W model trained on the IEVPF dataset has a precision higher by 2.4, 3.7, 1.5, and 5.3 percentage points than the models trained on the four polarization direction datasets, an average gain of 3.2 percentage points. These results indicate that training with the IEVPF dataset can improve detection precision, supporting the potential of virtual polarization filtering as an effective data augmentation technique for surface defect detection models.

- (1) On the five datasets, the recall of YOLO V5-W is higher than that of YOLO V5 by 10.8, 16.0, 7.9, 6.7, and 10.2 percentage points, an average gain of 10.3 percentage points. YOLO V5-W achieves a higher recall than YOLO V5 on every dataset.

- (2) The YOLO V5 model trained on the IEVPF dataset differs in recall from the models trained on the four polarization direction datasets by 2.4, 10.8, −1.0, and 2.0 percentage points, an average gain of 3.6 percentage points; the only decrease is relative to the 90° dataset. The YOLO V5-W model trained on the IEVPF dataset has a recall higher by 1.7, 4.9, 1.3, and 5.4 percentage points than the models trained on the four polarization direction datasets, an average gain of 3.3 percentage points. This indicates that training with the IEVPF dataset can improve recall, supporting the potential of virtual polarization filtering as an effective data augmentation technique for surface defect detection models.

- (1) On the five datasets, the mAP of YOLO V5-W is higher than that of YOLO V5 by 14.9, 15.0, 13.3, 12.6, and 12.7 percentage points, an average gain of 13.7 percentage points. YOLO V5-W achieves a higher mAP than YOLO V5 on every dataset.

- (2) The YOLO V5 model trained on the IEVPF dataset has an mAP higher by 6.4, 11.6, 2.4, and 6.3 percentage points than the models trained on the four polarization direction datasets, an average gain of 6.7 percentage points. The YOLO V5-W model trained on the IEVPF dataset has an mAP higher by 4.2, 9.3, 1.7, and 6.4 percentage points than the models trained on the four polarization direction datasets, an average gain of 5.4 percentage points. This indicates that training with the IEVPF dataset modestly improves mAP, supporting the potential of virtual polarization filtering as an effective data augmentation technique for surface defect detection models.

| | YOLO V5-0 | YOLO V5-45 | YOLO V5-90 | YOLO V5-135 | YOLO V5-Enh |
|---|---|---|---|---|---|
| mAP (×10⁻²) | 53.57 | 48.36 | 57.59 | 53.68 | 59.94 |

| | YOLO V5-W-0 | YOLO V5-W-45 | YOLO V5-W-90 | YOLO V5-W-135 | YOLO V5-W-Enh |
|---|---|---|---|---|---|
| mAP (×10⁻²) | 68.42 | 63.33 | 70.86 | 66.23 | 72.59 |
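Unlike the Loss figures, the mAP gains quoted in the text match plain differences between the tabulated values, that is, percentage points rather than relative changes. The sketch below recomputes them from the two mAP tables; the variable names are illustrative assumptions.

```python
# Reported mAP values (x 10^-2), i.e. mAP expressed in percent.
map_v5 = {"0": 53.57, "45": 48.36, "90": 57.59, "135": 53.68, "Enh": 59.94}
map_v5w = {"0": 68.42, "45": 63.33, "90": 70.86, "135": 66.23, "Enh": 72.59}
directions = ("0", "45", "90", "135")

# Gain from the architecture change, in percentage points.
gain_arch = {k: map_v5w[k] - map_v5[k] for k in map_v5}
mean_arch = sum(gain_arch.values()) / len(gain_arch)

# Gain from training on the IEVPF-enhanced dataset, in percentage points.
gain_data_v5 = [map_v5["Enh"] - map_v5[k] for k in directions]
gain_data_v5w = [map_v5w["Enh"] - map_v5w[k] for k in directions]
mean_data_v5 = sum(gain_data_v5) / len(gain_data_v5)
mean_data_v5w = sum(gain_data_v5w) / len(gain_data_v5w)

print({k: round(v, 2) for k, v in gain_arch.items()})
print(round(mean_arch, 2), round(mean_data_v5, 2), round(mean_data_v5w, 2))
```

Comparing the two means separates the contributions: on these numbers the architecture change accounts for a larger mAP gain than the change of training dataset.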

- (1) For the same model, training on the IEVPF dataset yields a higher detection precision than training on the datasets corresponding to the four polarization filtering directions.

- (2) For the same dataset, YOLO V5-W has a higher recognition precision than YOLO V5.

- (3) When models trained on the IEVPF dataset are applied to images with inadequate polarization suppression (as illustrated at 0° and 90°), some continuous undulations may be erroneously classified as cracks. This occurs because virtual polarization filtering enhancement produces a marked difference between cracks and a series of continuous bumps, whereas in images with inadequate polarization suppression the distinction is less clear. Images enhanced by virtual polarization filtering are therefore better suited to training models for target detection tasks.

- (4) Overall, the YOLO V5-W-Enh model, which is based on the improved YOLO V5-W architecture and trained on the IEVPF dataset, is the only one with neither missed nor false detections in the virtual polarization filtering enhancement experiments, and it achieves the highest detection accuracy and confidence scores.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Component | Specification |
|---|---|
| Operating system | Windows 11 |
| CPU | 11th Gen Intel(R) Core(TM) i7-11800H |
| RAM | 16.0 GB |
| GPU | NVIDIA GeForce RTX 3060 |
| CUDA | 11.7 |
| PyTorch | 2.2.2 |
| Python | 3.8.19 |

| Image Type | [0, 50] | [51, 101] | [102, 152] | [153, 203] | [204, 255] |
|---|---|---|---|---|---|
| 0° | 36,851 | 169,060 | 248,843 | 250,369 | 548,253 |
| 45° | 16,445 | 93,285 | 163,133 | 202,253 | 778,260 |
| 90° | 117,346 | 452,768 | 395,023 | 181,519 | 106,720 |
| 135° | 506,940 | 611,276 | 112,140 | 15,802 | 7,218 |
| Enhance | 674,910 | 459,317 | 97,738 | 14,617 | 6,794 |
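The table above appears to give, for each image type, the number of pixels falling in five grayscale ranges (the caption is not preserved, so this reading is an assumption). Under that assumption, the sketch below shows how such counts could be produced with `numpy.histogram` and how the share of pixels in the brightest range compares across image types. The bin edges mirror the column headers; all names are illustrative.

```python
import numpy as np

# Bin edges matching the ranges [0,50], [51,101], [102,152], [153,203], [204,255].
EDGES = [0, 51, 102, 153, 204, 256]

def grayscale_bins(image):
    """Count the pixels of an 8-bit image in each of the five ranges."""
    counts, _ = np.histogram(image, bins=EDGES)
    return counts

# Tiny synthetic check: two pixels at the boundaries of every range.
demo = np.array([0, 50, 51, 101, 102, 152, 153, 203, 204, 255], dtype=np.uint8)
counts_demo = grayscale_bins(demo)

# Pixel counts copied from the table above.
table = {
    "0": [36851, 169060, 248843, 250369, 548253],
    "45": [16445, 93285, 163133, 202253, 778260],
    "90": [117346, 452768, 395023, 181519, 106720],
    "135": [506940, 611276, 112140, 15802, 7218],
    "Enh": [674910, 459317, 97738, 14617, 6794],
}

# Share of pixels in the brightest range [204, 255] for each image type.
bright_share = {k: v[-1] / sum(v) for k, v in table.items()}
for k, s in bright_share.items():
    print(f"{k:>3}: {100 * s:.2f}% of pixels in [204, 255]")
```

Every row sums to the same pixel count, which is consistent with all five images sharing one resolution, and the enhanced image has the smallest share of pixels in the brightest range.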

| | YOLO V5-0 | YOLO V5-45 | YOLO V5-90 | YOLO V5-135 | YOLO V5-Enh |
|---|---|---|---|---|---|
| Loss (×10⁻²) | 15.34 | 12.80 | 13.98 | 11.03 | 9.64 |

| | YOLO V5-W-0 | YOLO V5-W-45 | YOLO V5-W-90 | YOLO V5-W-135 | YOLO V5-W-Enh |
|---|---|---|---|---|---|
| Loss (×10⁻²) | 7.50 | 8.39 | 6.91 | 7.50 | 6.45 |

| | YOLO V5-0 | YOLO V5-45 | YOLO V5-90 | YOLO V5-135 | YOLO V5-Enh |
|---|---|---|---|---|---|
| Precision (×10⁻²) | 82.50 | 78.40 | 87.85 | 82.07 | 85.49 |

| | YOLO V5-W-0 | YOLO V5-W-45 | YOLO V5-W-90 | YOLO V5-W-135 | YOLO V5-W-Enh |
|---|---|---|---|---|---|
| Precision (×10⁻²) | 94.28 | 92.99 | 95.14 | 91.33 | 96.66 |

| | YOLO V5-0 | YOLO V5-45 | YOLO V5-90 | YOLO V5-135 | YOLO V5-Enh |
|---|---|---|---|---|---|
| Recall (×10⁻²) | 86.41 | 78.01 | 89.79 | 86.82 | 88.80 |

| | YOLO V5-W-0 | YOLO V5-W-45 | YOLO V5-W-90 | YOLO V5-W-135 | YOLO V5-W-Enh |
|---|---|---|---|---|---|
| Recall (×10⁻²) | 97.25 | 94.03 | 97.72 | 93.54 | 98.97 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Su, X.; Peng, X.; Zhou, X.; Cao, H.; Shan, C.; Li, S.; Qiao, S.; Shi, F. Enhanced Defect Detection in Additive Manufacturing via Virtual Polarization Filtering and Deep Learning Optimization. Photonics 2025, 12, 599. https://doi.org/10.3390/photonics12060599
