Research Progress on Modulation Format Recognition Technology for Visible Light Communication

Abstract

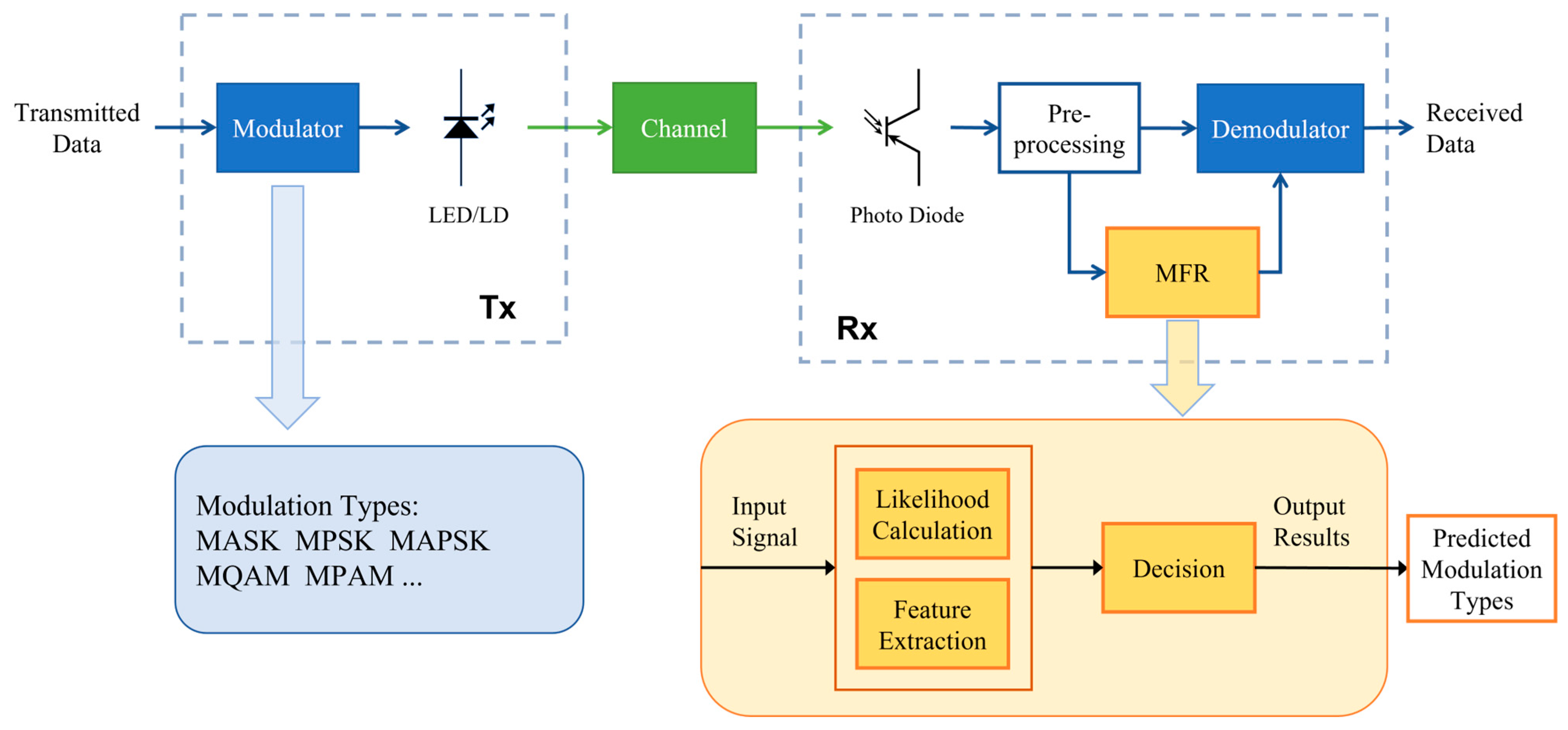

1. Introduction

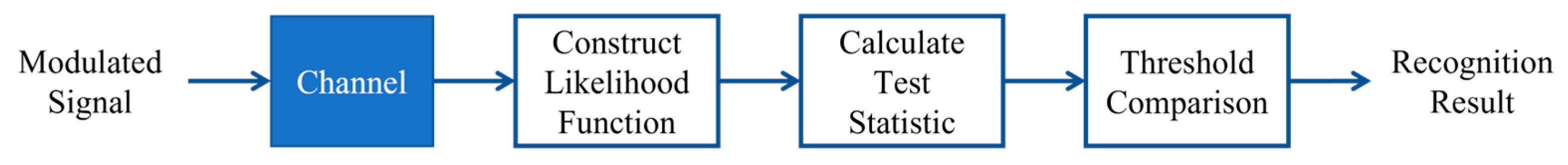

2. Likelihood-Based Modulation Recognition Methods

2.1. Signal Model

2.2. Average Likelihood Ratio Test (ALRT)

2.3. Generalized Likelihood Ratio Test (GLRT)

2.4. Hybrid Likelihood Ratio Test (HLRT)

2.5. Summary of Section 2

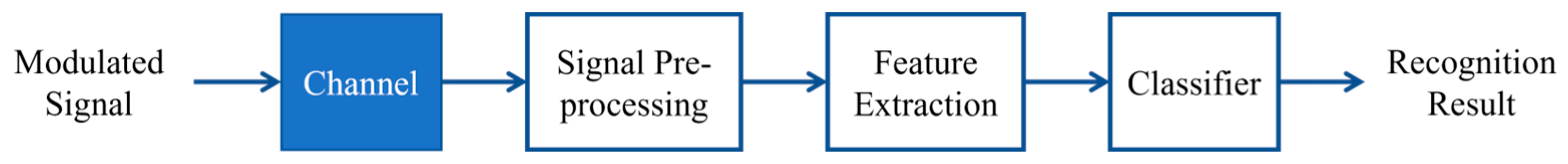

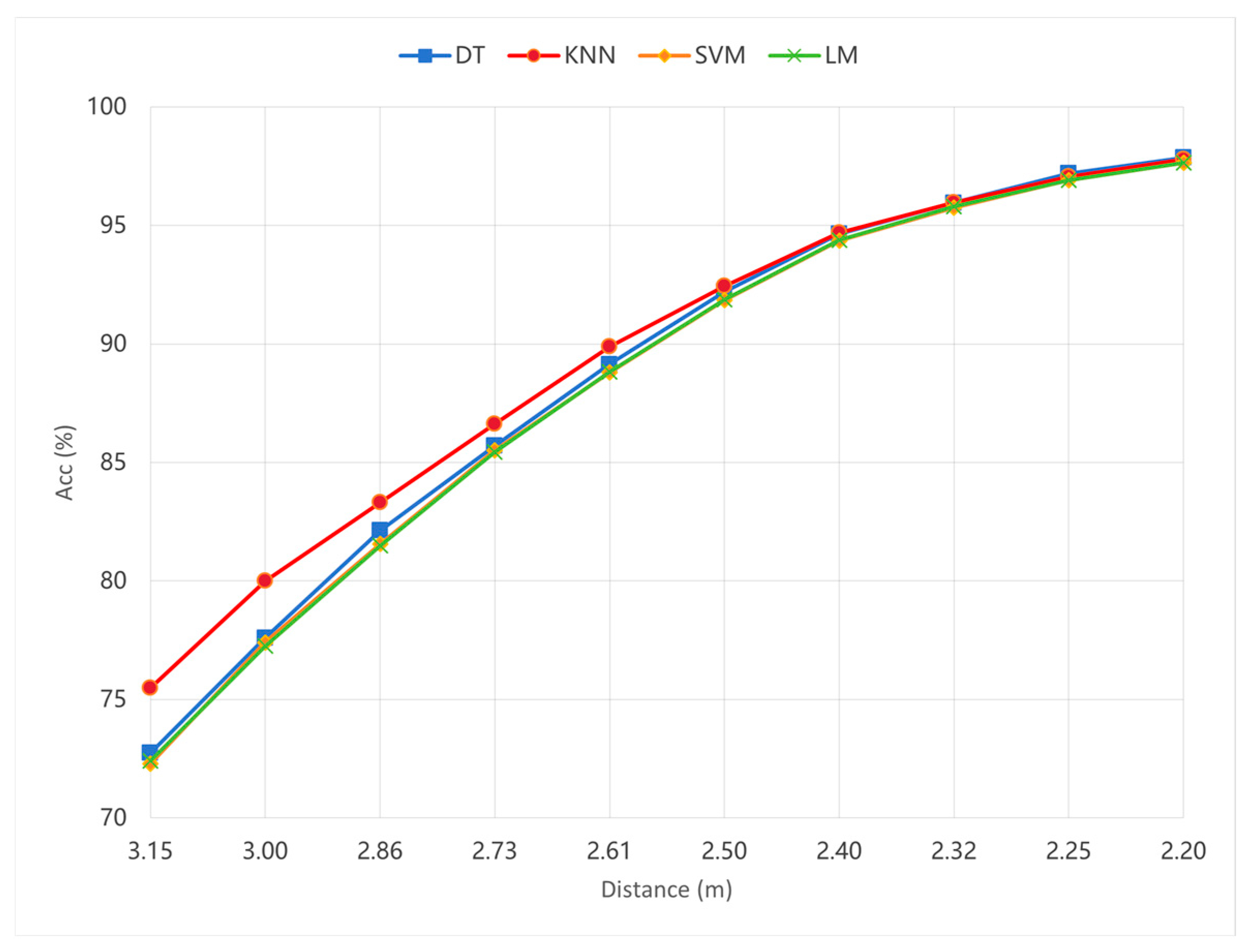

3. Feature-Based Modulation Recognition Methods

3.1. Higher-Order Statistics (HOS) Features

3.2. Constellation Diagram Features

3.3. Wavelet Transform

3.4. Integral Feature Extraction

3.5. Chaotic Mapping and Autocorrelation Estimation

| Algorithm 1: Chaotic Baker Map Permutation for Constellation Diagram Pixels |
|---|
| Input: constellation diagram (matrix); image size (integer); partition sequence (array of integers) |
| Output: permuted constellation diagram (matrix) |
| Procedure: initialize; compute CBM permuted pixel coordinates |
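The permutation named in Algorithm 1 can be illustrated with a minimal sketch. Because the symbols and formulas of the algorithm are not available here, the code assumes a commonly used discretized form of the Baker map: the image is N × N, each partition entry divides N, and the entries sum to N. The function name `baker_map_permute` and its parameter names are hypothetical, and the sketch is not necessarily the exact procedure used in the cited work.

```python
import numpy as np


def baker_map_permute(image, partition):
    """Permute pixels of a square image with a discretized Baker map.

    Assumption (not taken from the source): the image is N x N, every
    entry of `partition` divides N, and the entries sum to N.
    """
    image = np.asarray(image)
    n_size = image.shape[0]
    if image.shape[:2] != (n_size, n_size):
        raise ValueError("image must be square")
    if sum(partition) != n_size or any(n_size % n for n in partition):
        raise ValueError("partition entries must divide N and sum to N")

    permuted = np.empty_like(image)
    offset = 0  # sum of the partition entries already processed
    for n_i in partition:
        q = n_size // n_i  # stretch factor for this strip of n_i rows
        for r in range(offset, offset + n_i):
            for s in range(n_size):
                # Each n_i x N strip is stretched into an N x n_i strip.
                new_r = q * (r - offset) + s % q
                new_s = (s - s % q) // q + offset
                permuted[new_r, new_s] = image[r, s]
        offset += n_i
    return permuted


demo = np.arange(16).reshape(4, 4)
scrambled = baker_map_permute(demo, [2, 2])
```

Under these assumptions the mapping is a bijection on pixel coordinates, so it rearranges the constellation image without changing any pixel value, and repeating it with the same partition sequence gives a deterministic scrambling.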

3.6. Frequency-Domain Histogram

3.7. Summary of Section 3

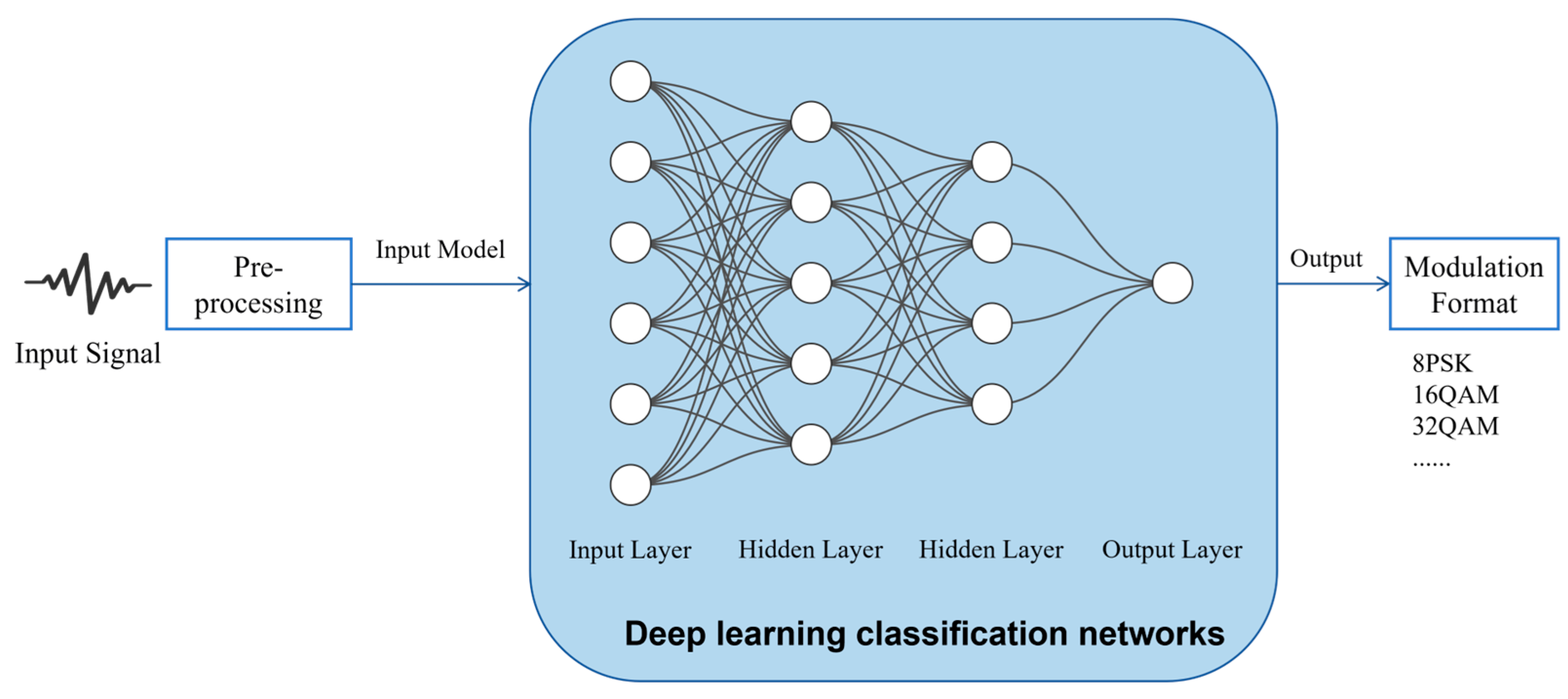

4. Deep Learning-Based Modulation Recognition Methods

4.1. Convolutional Neural Networks (CNN)

4.2. Recurrent Neural Networks (RNN)

4.3. Other Innovative Deep Learning Models

4.4. Introduction to the VLC MFR Datasets

4.5. Prospects for the Practical Hardware Deployment of VLC MFR

4.6. Summary of Section 4

5. Conclusions and Outlook

5.1. Current Challenges

- (1) Algorithm limitations in dynamic and non-stationary channels

- (2) Data scarcity and domain-shift issues

- (3) Bottlenecks in hardware–algorithm co-design

- (4) Cross-scenario robustness and scalability

- (5) Real-time processing and energy efficiency trade-offs

- (6) Standardization and cross-layer design gap

5.2. Future Outlook

- (1) Multi-Domain Feature Fusion and Adaptive Feature Extraction

- (2) Reducing Computational Complexity and Model Size to Meet Real-Time Requirements

- (3) Enhancing Interdisciplinary Technology Integration and Innovation

- (4) Feature Enhancement Under Complex Channels

- (5) Joint Optimization of Channel Estimation and Classifier

- (6) Promoting Model Generalization from Simulation to Real-World Scenarios

- (7) Cross-Scenario Adaptability: Building a Meta-Learning-Driven Adaptive Recognition Framework

6. Summary

Author Contributions

Funding

Conflicts of Interest

References

- Yang, P.; Xiao, Y.; Xiao, M.; Li, S. 6G Wireless Communications: Vision and Potential Techniques. IEEE Netw. 2019, 33, 70–75.

- Chi, N.; Zhou, Y.; Wei, Y.; Hu, F. Visible Light Communication in 6G: Advances, Challenges, and Prospects. IEEE Veh. Technol. Mag. 2020, 15, 93–102.

- Tang, L.; Wu, Y.; Cheng, Z.; Teng, D.; Liu, L. Over 23.43 Gbps Visible Light Communication System Based on 9 V Integrated RGBP LED Modules. Opt. Commun. 2023, 534, 129317.

- Li, C.Y.; Lu, H.H.; Tsai, W.S.; Wang, Z.H.; Hung, C.W.; Su, C.W.; Lu, Y.F. A 5 m/25 Gbps Underwater Wireless Optical Communication System. IEEE Photonics J. 2018, 10, 1–9.

- Matthews, W.; Ahmed, Z.; Ali, W.; Collins, S. A 3.45 Gigabits/s SiPM-Based OOK VLC Receiver. IEEE Photonics Technol. Lett. 2021, 33, 487–490.

- Xu, B.; Min, T.; Yue, C.P. Design of PAM-8 VLC Transceiver System Employing Neural Network-Based FFE and Post-Equalization. Electronics 2022, 11, 3908.

- Zou, P.; Hu, F.; Zhao, Y.; Chi, N. On the Achievable Information Rate of Probabilistic Shaping QAM Order and Source Entropy in Visible Light Communication Systems. Appl. Sci. 2020, 10, 4299.

- Zenhom, Y.A.; Hamad, E.K.I.; Alghassab, M.; Elnabawy, M.M. Optical-OFDM VLC System: Peak-to-Average Power Ratio Enhancement and Performance Evaluation. Sensors 2024, 24, 2965.

- Zhou, Y.; Wei, Y.; Hu, F.; Hu, J.; Zhao, Y.; Zhang, J.; Jiang, F.; Chi, N. Comparison of Nonlinear Equalizers for High-Speed Visible Light Communication Utilizing Silicon Substrate Phosphorescent White LED. Opt. Express 2020, 28, 2302–2316.

- Yakkati, R.R.; Tripathy, R.K.; Cenkeramaddi, L.R. Radio Frequency Spectrum Sensing by Automatic Modulation Classification in Cognitive Radio System Using Multiscale Deep CNN. IEEE Sens. J. 2022, 22, 926–938.

- Huang, S.; Lin, C.; Xu, W.; Gao, Y.; Feng, Z.; Zhu, F. Identification of Active Attacks in Internet of Things: Joint Model- and Data-Driven Automatic Modulation Classification Approach. IEEE Internet Things J. 2021, 8, 2051–2065.

- Reddy, R.; Sinha, S. State-of-the-Art Review: Electronic Warfare against Radar Systems. IEEE Access 2025, 13, 57530–57567.

- Sliti, M.; Mrabet, M.; Garai, M.; Ammar, L.B. A Survey on Machine Learning Algorithm Applications in Visible Light Communication Systems. Opt. Quantum Electron. 2024, 56, 1351.

- Zedini, E.; Oubei, H.M.; Kammoun, A.; Hamdi, M.; Ooi, B.S.; Alouini, M.-S. Unified Statistical Channel Model for Turbulence-Induced Fading in Underwater Wireless Optical Communication Systems. IEEE Trans. Commun. 2019, 67, 2893–2907.

- Jajoo, G.; Kumar, Y.; Yadav, S.K.; Adhikari, B.; Kumar, A. Blind Signal Modulation Recognition Through Clustering Analysis of Constellation Signature. Expert Syst. Appl. 2017, 90, 13–22.

- Xu, J.L.; Su, W.; Zhou, M. Likelihood-Ratio Approaches to Automatic Modulation Classification. IEEE Trans. Syst. Man Cybern. Part C 2010, 41, 455–469.

- Zheng, J.; Lv, Y. Likelihood-Based Automatic Modulation Classification in OFDM with Index Modulation. IEEE Trans. Veh. Technol. 2018, 67, 8192–8204.

- Ali, A.; Yangyu, F. Unsupervised Feature Learning and Automatic Modulation Classification Using Deep Learning Model. Phys. Commun. 2017, 25, 75–84.

- Ren, H.; Yu, J.; Wang, Z.; Chen, J.; Yu, C. Modulation Format Recognition in Visible Light Communications Based on Higher Order Statistics. In Proceedings of the 2017 Conference on Lasers and Electro-Optics Pacific Rim (CLEO-PR), Singapore, 31 July–4 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–2.

- He, J.; Zhou, Y.; Shi, J.; Tang, Q. Modulation Classification Method Based on Clustering and Gaussian Model Analysis for VLC System. IEEE Photonics Technol. Lett. 2020, 32, 651–654.

- Xiong, S. Intelligent Modulation Recognition Algorithm for Optical Communication. J. Intell. Fuzzy Syst. 2021, 40, 5845–5852.

- Ağır, T.T.; Sönmez, M. The Modulation Classification Methods in PPM–VLC Systems. Opt. Quantum Electron. 2023, 55, 223.

- Mohamed, S.E.D.N.; Mortada, B.; El-Shafai, W.; Khalaf, A.A.M.; Zahran, O.; Dessouky, M.I.; El-Rabaie, E.-S.M.; Abd El-Samie, F.E. Automatic Modulation Classification in Optical Wireless Communication Systems Based on Cancellable Biometric Concepts. Opt. Quantum Electron. 2023, 55, 389.

- Zhang, X.; Zeng, Z.; Du, P.; Lin, B.; Chen, C. Intelligent Index Recognition for OFDM with Index Modulation in Underwater OWC Systems. IEEE Photonics Technol. Lett. 2024, 36, 1249–1252.

- Jin, H.; Jeong, S.; Park, D.C.; Kim, S.C. Convolutional Neural Network Based Blind Automatic Modulation Classification Robust to Phase and Frequency Offsets. IET Commun. 2020, 14, 3578–3584.

- O’Shea, T.J.; Corgan, J.; Clancy, T.C. Convolutional Radio Modulation Recognition Networks. In Proceedings of the 17th International Conference on Engineering Applications of Neural Networks (EANN 2016), Aberdeen, UK, 2–5 September 2016; Springer: Cham, Switzerland, 2016; pp. 213–226.

- Liu, W.; Li, X.; Yang, C.; Luo, M. Modulation Classification Based on Deep Learning for DMT Subcarriers in VLC System. In Proceedings of the 2020 Optical Fiber Communications Conference and Exhibition (OFC), San Diego, CA, USA, 8–12 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–3.

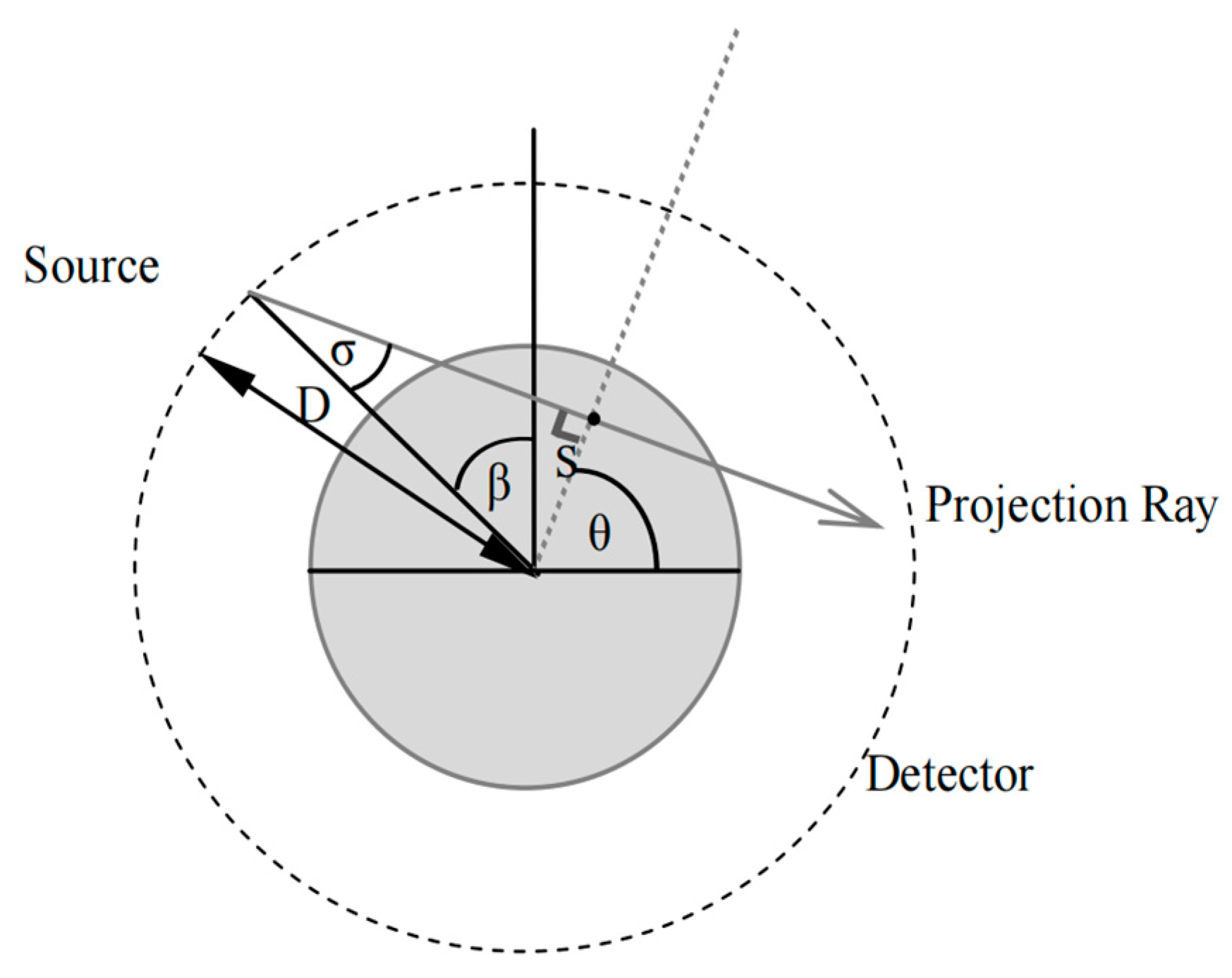

- Mortada, B.; El-Shafai, W.; Mohamed, S.E.D.N.; Zahran, O.; El-Rabaie, E.-S.M.; Abd El-Samie, F.E. Fan-Beam Projection for Modulation Classification in Optical Wireless Communication Systems. Appl. Opt. 2022, 61, 1041–1048.

- Gu, Y.; Wu, Z.; Li, X.; Tian, R.; Ma, S.; Jia, T. Modulation Format Identification in a Satellite to Ground Optical Wireless Communication Systems Using a Convolution Neural Network. Appl. Sci. 2022, 12, 3331.

- Gao, W.; Xu, C.; Xu, Z.; Jin, R.Z.; Chi, N. Modulation Format Recognition Based on Coordinate Transformation and Combination in VLC System. In Proceedings of the 2022 Asia Communications and Photonics Conference (ACP), Shenzhen, China, 5–8 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1151–1155.

- Wang, Y.; Wu, Z.; Zhao, Y.; Yan, Z.; Mao, R.; Zhu, H. Improved YOLOv5s Algorithm for Modulation Format Recognition of Visible Light Communication Signal. Opt. Commun. Technol. 2024, 48, 18–22. (In Chinese)

- Arafa, N.A.; Lizos, K.A.; Alfarraj, O.; Shawki, F.; Abd El-atty, S.M. Deep Learning Approach for Automatic Modulation Format Identification in Vehicular Visible Light Communications. Opt. Quantum Electron. 2024, 56, 1083.

- Gao, W.; Wang, Y.; Xu, C.; Xu, Z.; Chi, N. Research on Modulation Format Recognition for Underwater Visible Light Communication Based on BiGRU. Study Opt. Commun. 2025, 2, 240023. Available online: http://kns.cnki.net/kcms/detail/42.1266.TN.20240223.1857.004.html (accessed on 20 March 2025). (In Chinese)

- Zhang, L.; Zhou, X.; Du, J.; Tian, P. Fast Self-Learning Modulation Recognition Method for Smart Underwater Optical Communication Systems. Opt. Express 2020, 28, 38223–38240.

- Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, Australia, 6–11 August 2017; PMLR: Cambridge, MA, USA, 2017; pp. 1126–1135.

- Zhao, Z.; Khan, F.N.; Li, Y.; Wang, Z.; Zhang, Y.; Fu, H.Y. Application and Comparison of Active and Transfer Learning Approaches for Modulation Format Classification in Visible Light Communication Systems. Opt. Express 2022, 30, 16351–16361.

- Li, F.; Lin, X.; Shi, J.; Li, Z.; Chi, N. Modulation Format Recognition in a UVLC System Based on Reservoir Computing with Coordinate Transformation and Folding Algorithm. Opt. Express 2023, 31, 17331–17344.

- Wang, Y.; Liu, M.; Yang, J.; Gui, G. Data-Driven Deep Learning for Automatic Modulation Recognition in Cognitive Radios. IEEE Trans. Veh. Technol. 2019, 68, 4074–4077.

- Yao, L.; Li, F.; Zhang, H.; Zhou, Y.; Wei, Y.; Li, Z.; Shi, J.; Zhang, J.; Shen, C.; Chi, N. Modulation Format Recognition in a UVLC System Based on an Ultra-Lightweight Model with Communication-Informed Knowledge Distillation. Opt. Express 2024, 32, 13095–13110.

- Zheng, X.; He, Y.; Zhang, C.; Miao, P. VLCMnet-Based Modulation Format Recognition for Indoor Visible Light Communication Systems. Photonics 2024, 11, 403.

- O’Shea, T.J.; Roy, T.; Clancy, T.C. Over-the-Air Deep Learning Based Radio Signal Classification. IEEE J. Sel. Top. Signal Process. 2018, 12, 168–179.

- Tekbıyık, K.; Ekti, A.R.; Görçin, A.; Kurt, G.K.; Keçeci, C. Robust and Fast Automatic Modulation Classification with CNN under Multipath Fading Channels. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–6.

- Xu, W.; Zhang, M.; Han, D.; Ghassemlooy, Z.; Luo, P.; Zhang, Y. Real-Time 262-Mb/s Visible Light Communication with Digital Predistortion Waveform Shaping. IEEE Photonics J. 2018, 10, 1–10.

- Wang, Q.; Giustiniano, D.; Zuniga, M. In Light and in Darkness, in Motion and in Stillness: A Reliable and Adaptive Receiver for the Internet of Lights. IEEE J. Sel. Areas Commun. 2017, 36, 149–161.

- Mao, L.; Li, C.; Li, H.; Chen, X.; Mao, X.; Chen, H. A Mixed-Interval Multi-Pulse Position Modulation Scheme for Real-Time Visible Light Communication System. Opt. Commun. 2017, 402, 330–335.

- Wu, H.; Wang, Q.; Xiong, J.; Zuniga, M. SmartVLC: Co-Designing Smart Lighting and Communication for Visible Light Networks. IEEE Trans. Mob. Comput. 2019, 19, 1956–1970.

- Randy, L.D.; Sebastián, B.P.J.; San Millán, H.E. Time Synchronization Technique Hardware Implementation for OFDM Systems with Hermitian Symmetry for VLC Applications. IEEE Access 2023, 11, 42222–42233.

- Liang, J.; Lin, S.; Ke, X. Design of Indoor Visible Light Communication PAM4 System. Appl. Sci. 2024, 14, 1663.

- Perlaza, J.S.B.; Domínguez, R.L.; Heredia, E.S.M. Phase Characterization and Correction in a Hardware Implementation of an OFDM-Based System for VLC Applications. IEEE Photonics J. 2023, 15, 1–7.

- Kumar, S.; Mahapatra, R.; Singh, A. Automatic Modulation Recognition: An FPGA Implementation. IEEE Commun. Lett. 2022, 26, 2062–2066.

- Woo, J.; Jung, K.; Mukhopadhyay, S. Efficient Hardware Design of DNN for RF Signal Modulation Recognition Employing Ternary Weights. IEEE Access 2024, 12, 80165–80175.

| Reference | Year | Main Design Scheme | Research Objective | Performance (%) | SNR (dB) |
|---|---|---|---|---|---|
| [19] | 2017 | Utilizing fourth-order cumulants for noise suppression and feature extraction, establishing classification thresholds through Monte Carlo simulations. | To distinguish high-order complex modulation formats and enhance noise robustness and modulation sensitivity. | 88.9 (Acc) | 15 |
| [20] | 2020 | Extracting constellation diagram features through clustering analysis and two-dimensional Gaussian models, combined with decision trees for M-QAM signal recognition. | To improve the recognition accuracy of M-QAM under low-SNR conditions. | 100 (Acc) | 18 |
| [21] | 2021 | Discrete Wavelet Transform for multi-scale noise suppression, integrating multi-dimensional features with supervised learning classification models. | To address the issue of decreased modulation recognition accuracy in optical communication systems under time-varying noise conditions. | 96 (Acc) | 15 |
| [22] | 2023 | Designing time-domain integral windows for energy accumulation feature extraction for L-PPM signals, using multi-classifier comparison. | To achieve real-time recognition of multi-order PPM signals under complex channels. | 97.78 (Acc) | 25 |
| [23] | 2023 | Combining chaotic mapping and wavelet fusion to process constellations, generating encrypted templates and employing autocorrelation estimation for classification. | To efficiently classify eight modulation formats (PSK/QAM) under dynamic channels with low complexity. | 100 (AROC) | 5 |
| [24] | 2024 | Extracting frequency-domain histogram feature vectors and applying machine learning methods for classification. | To identify the number of activated subcarriers in OFDM-IM sub-blocks in dynamic underwater optical communication channels in real-time. | 100 (Acc) | 13 |
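The fourth-order cumulant features mentioned for [19] can be illustrated with a short sketch. It uses the standard sample estimators C40 = M40 − 3·M20² and C42 = M42 − |M20|² − 2·M21² for a zero-mean signal; the function name `fourth_order_cumulants` is hypothetical, and the code is an assumption-based illustration rather than the implementation or thresholds used in the cited work.

```python
import numpy as np


def fourth_order_cumulants(samples):
    """Estimate C40 and C42 of a complex baseband signal.

    Assumption: the signal is treated as zero-mean (the sample mean is
    removed first) and the moments are plain sample averages.
    """
    x = np.asarray(samples, dtype=complex)
    x = x - x.mean()
    m20 = np.mean(x ** 2)          # E[x^2]
    m21 = np.mean(np.abs(x) ** 2)  # E[|x|^2]
    m40 = np.mean(x ** 4)          # E[x^4]
    m42 = np.mean(np.abs(x) ** 4)  # E[|x|^4]
    c40 = m40 - 3 * m20 ** 2
    c42 = m42 - np.abs(m20) ** 2 - 2 * m21 ** 2
    return c40, c42


# Noise-free, unit-power symbol sets used only as a sanity check.
bpsk = np.array([1, -1] * 50)
qpsk = np.array([1, 1j, -1, -1j] * 25)
bpsk_c40, bpsk_c42 = fourth_order_cumulants(bpsk)
qpsk_c40, qpsk_c42 = fourth_order_cumulants(qpsk)
```

For these ideal symbol sets the estimates separate the two formats (C42 is −2 for BPSK and −1 for QPSK), which is the kind of gap a threshold-based classifier relies on; with noise, the thresholds would have to be set empirically, for example by Monte Carlo simulation as the table row describes.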

| Dataset | Channel Conditions | Modulation Types | File Format | Data Shape | Dataset Size | SNR Range (dB) |
|---|---|---|---|---|---|---|
| RML 2016.10a | Including time-varying channels such as carrier frequency offset, sampling rate offset, AWGN, multipath, and fading | 11 classes (8PSK, BPSK, CPFSK, GFSK, PAM4, 16QAM, AM-DSB, AM-SSB, 64QAM, QPSK, WBFM) | .pkl | 2 × 128 | 220,000 | −20:2:18 |
| RML 2018.01a | Tested and generated in a real laboratory environment, considering more complex channel conditions | 24 classes (OOK, 4ASK, 8ASK, BPSK, QPSK, 8PSK, 16PSK, 32PSK, 16APSK, 32APSK, 64APSK, 128APSK, 16QAM, 32QAM, 64QAM, 128QAM, 256QAM, AM-SSB-WC, AM-SSB-SC, AM-DSB-WC, AM-DSB-SC, FM, GMSK, OQPSK) | .h5 | 2 × 1024 | 2,555,904 | −20:2:30 |
| HisarMod 2019.1 | Simulated channel conditions include ideal, static, Rayleigh, Rician (k = 3), and Nakagami-m (m = 2) channels with varying numbers of channel taps | 26 classes (AM-DSB, AM-SC, AM-USB, AM-LSB, FM, PM, 2FSK, 4FSK, 8FSK, 16FSK, 4PAM, 8PAM, 16PAM, BPSK, QPSK, 8PSK, 16PSK, 32PSK, 64PSK, 4QAM, 8QAM, 16QAM, 32QAM, 64QAM, 128QAM, 256QAM) | .mat | 2 × 1024 | 780,000 | −20:2:18 |
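The SNR ranges in the table above appear to use a start:step:stop notation, so −20:2:18 would denote 20 SNR levels from −20 dB to 18 dB in 2 dB steps. The sketch below expands that notation and checks how the listed dataset sizes divide across classes and SNR levels. It assumes the ranges are inclusive and that samples are spread evenly over every class and SNR pair; the helper names `expand_range` and `samples_per_cell` are hypothetical.

```python
def expand_range(spec):
    """Expand 'start:step:stop' into an inclusive list of integer levels."""
    # The table uses the Unicode minus sign (U+2212), which int() rejects.
    start, step, stop = (int(v) for v in spec.replace("\u2212", "-").split(":"))
    return list(range(start, stop + 1, step))


def samples_per_cell(n_classes, snr_spec, dataset_size):
    """Return (samples per class-and-SNR pair, remainder)."""
    n_levels = len(expand_range(snr_spec))
    return divmod(dataset_size, n_classes * n_levels)


# (number of classes, SNR range, dataset size) copied from the table rows.
TABLE_ROWS = {
    "RML 2016.10a": (11, "\u221220:2:18", 220_000),
    "RML 2018.01a": (24, "\u221220:2:30", 2_555_904),
    "HisarMod 2019.1": (26, "\u221220:2:18", 780_000),
}

for name, row in TABLE_ROWS.items():
    per_cell, remainder = samples_per_cell(*row)
    print(f"{name}: {per_cell} samples per class and SNR level, remainder {remainder}")
```

Under the even-split assumption, each listed size divides exactly by the number of classes times the number of SNR levels, which is consistent with reading the range column as start:step:stop.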

| Reference | System Platform | Channel Conditions | Modulation Types | Sample Format | Dataset Size | Parameter Range |
|---|---|---|---|---|---|---|
| Mortada B. et al. [28] | Optisystem | A 4-km FSO link with an attenuation of 0.43 dB/km | 8 classes (2/4/8/16PSK, 8/16/32/64QAM) | Fan-beam constellation diagram | Unclear | 5:5:30 dB (OSNR) |
| Gu Y. et al. [29] | MATLAB | Gamma–gamma atmospheric channel, AWGN | 4 classes (OOK, BPSK, QPSK, 16QAM) | 2 × 1024 I/Q sequence | 960,000 | 10:30 dB (SNR) |
| Wang Y. et al. [31] | MATLAB | AWGN, Doppler shift, Rician multipath fading, clock offset | 4 classes (BPSK, QPSK, 8PSK, 16QAM) | Time-frequency diagram | 4400 | 0:2:20 dB (SNR) |
| Arafa N. et al. [32] | MATLAB R2020b | Simulation of a realistic traffic multi-vehicle VLC path loss model | 8 classes (QPSK, 8/16PSK, 4/8/16/32/64QAM) | Hough constellation diagram | 32,000 | 5:25 dB (SNR) |
| Gao W. et al. [33] | Real UVLC experimental system | Real UVLC channel with adjustable LED driving voltage | 10 classes (2/4/8/16/32/64QAM, 8/16/32/64APSK) | 4 × N sequence | Unclear | 0.1~0.55 V (Voltage) |
| Zhang L. et al. [34] | MATLAB R2018b | UOWC channel incorporating scattering, absorption, and turbulence effects | 15 classes (4/8/16/32/64/128/256 QAM, 2/4/8ASK, 2/4/8/16/32 PSK) | Constellation diagram | 300 | 6:3:15 dB (SNR) |

| Reference | Year | Input | Model | Modulation Types | Typical Accuracy (%) | Typical Conditions |
|---|---|---|---|---|---|---|
| Liu W. et al. [27] | 2020 | Pseudo constellation diagram | GoogLeNet V3 | BPSK, 4/8/16/32/64QAM | 98 | SNR = 15 dB |
| Mortada B. et al. [28] | 2022 | Fan-beam constellation diagram | AlexNet | 2/4/8/16PSK, 8/16/32/64QAM | 100 | OSNR = 15 dB |
| Gu Y. et al. [29] | 2022 | 2 × 1024 IQ sequence | CNN | OOK, BPSK, QPSK, 16QAM | 99.98 | SNR = 10~30 dB |
| Gao W. et al. [30] | 2022 | 2 × 2N matrix | DrCNN | 4/8/16QAM, 8PSK, OOK, 16APSK | 98.3 | SNR = 20 dB |
| Wang Y. et al. [31] | 2024 | Time-frequency diagram | YOLOv5s | BPSK, QPSK, 8PSK, 16QAM | 99.3 (mAP) | SNR = 20 dB |
| Arafa N. et al. [32] | 2024 | Hough transform constellation diagram | Pre-Trained AlexNet | QPSK, 8/16PSK, 4/8/16/32/64QAM | 100 | SNR = 12 dB |
| Gao W. et al. [33] | 2024 | 4 × N sequence | BiGRU | 2/4/8/16/32/64QAM, 8/16/32/64APSK | >96 | Linear working area |
| Zhang L. et al. [34] | 2020 | Constellation diagram | PGML-CNN | 4/8/16/32/64/128/256 QAM, 2/4/8ASK, 2/4/8/16/32 PSK | 95.63 | SNR = 6 dB |
| Zhao Z. et al. [36] | 2022 | Contour stellar image | AlexNet-AL | 2/4/8/16/32/64QAM | 88.78 | SNR = 0~15 dB |
| Li F. et al. [37] | 2023 | IQ samples processed by coordinate transformation and folding algorithm | Reservoir Computing | OOK, 4QAM, 8QAM-DIA, 8QAM-CIR, 16APSK, 16QAM | >90 | Linear working area |
| Yao L. et al. [39] | 2024 | Constellation diagram | CIKD-CNN | PAM4, QPSK, 8QAM-CIR, 8QAM-DIA, 16/32QAM, 16/32APSK | 100 | Ideal working area |
| Zheng X. et al. [40] | 2024 | Constellation diagram | TCN-LSTM + MMAnet | 4/8/16/32/64QAM | 99.2 | SNR = 4 dB |

| Methods | Characteristics | Advantages | Disadvantages |
|---|---|---|---|
| Likelihood-Based Methods | Construct likelihood functions based on Bayesian statistical theory; require assumptions about channel models and parameter distributions | Optimal in Bayesian theory; rigorous modeling of noise statistical properties | Extremely high computational complexity; dependence on precise prior parameter distributions; difficulty in distinguishing nested modulation types |
| Feature-Based Methods | Manually designed time/frequency/statistical features; combined with traditional classifiers; strong feature interpretability | Lower computational complexity; effective under small sample conditions; simple hardware implementation | Dependence on high SNR; expert knowledge required for manual feature design; poor adaptability to time-varying channels |
| Deep Learning-Based Methods | Data-driven automatic feature extraction; capable of end-to-end classification | Ability to capture complex features; better performance under low SNR; adaptive to new modulation formats | Requires large amounts of labeled data; time-consuming model training; poor interpretability; high hardware resource demands |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, S.; Du, W.; Li, C.; Liu, S.; Li, R. Research Progress on Modulation Format Recognition Technology for Visible Light Communication. Photonics 2025, 12, 512. https://doi.org/10.3390/photonics12050512
