1. Introduction

Single-pixel imaging (SPI) is a computational optical imaging technique that has developed rapidly in recent years, showing significant potential for various applications. The concept of SPI originated from experiments in which a single-point detector was used to capture modulated light after optical field modulation. These experiments date back to the 1880s and involved imaging based on point-by-point scanning. Specifically, British researchers developed a scanning-based electronic visual display technique using a selenium cell to detect light intensities that passed through the holes of a Nipkow disk from different spatial locations at different times [

1]. As research on area array detectors was not advanced at that time, this technique became one of the primary methods for obtaining two-dimensional images of objects. In 2005, Sen et al. [

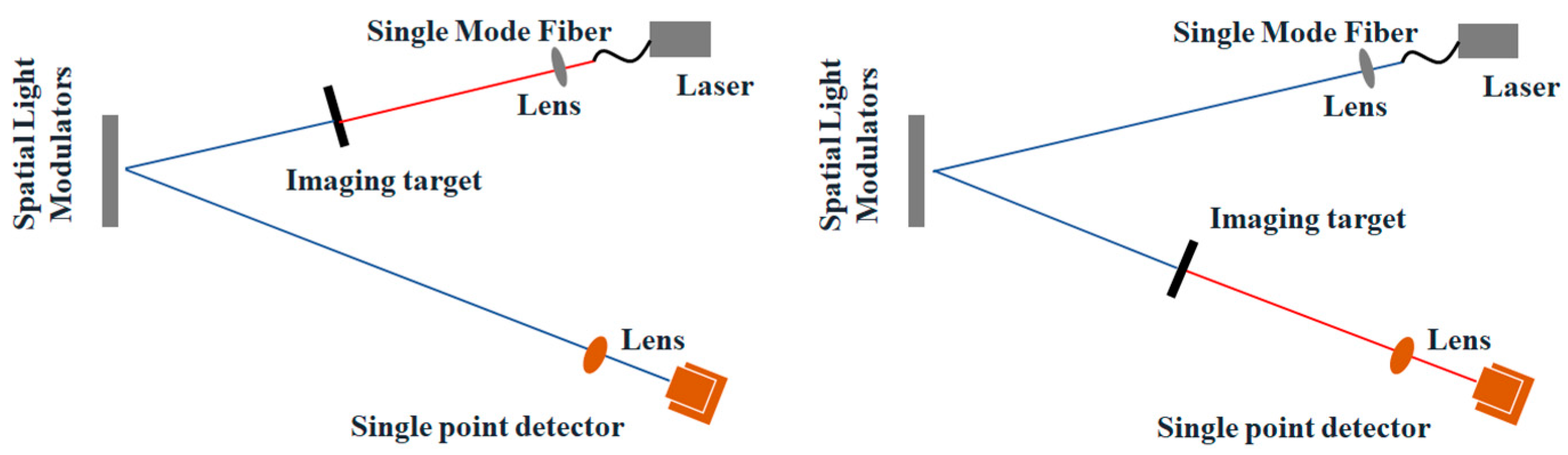

2] from Stanford University proposed an image acquisition method called dual-photography, which utilized a single-pixel detector to measure the intensity of modulated light. This method can be considered a prototype of SPI. Since then, SPI has evolved into an imaging method characterized by high sensitivity, low noise, a large dynamic range, and low cost. The technique enables high-quality image reconstruction in low-light conditions, acquiring full image information using only one detector. SPI can be divided into pre-modulation SPI and post-modulation SPI according to different light field modulation methods [

3]; the specific imaging principle is shown in

Figure 1. Although there are some differences between the two imaging methods in the optical path, the imaging principle and reconstruction algorithm are basically similar, so they are both included in the category of SPI techniques.

SPI offers several advantages: (1) It breaks through the limitations of conventional imaging methods by employing an innovative non-imaging approach. Rather than relying on a complete image reconstruction, it directly measures light intensity in the scene using a single-pixel detector. This allows the rapid acquisition of the geometrical moments of the target object, facilitating fast focusing. (2) Single-point detectors used in SPI outperform array detectors in detection efficiency, sensitivity, and performance [3]. By capturing the total light intensity across the scene, they are particularly effective for capturing low-light signals. These detectors also exhibit a broad spectral response, making them suitable for specialized bands such as infrared [4] and terahertz regions [5,6,7]. (3) The incorporation of advanced signal processing techniques [8], including compressed sensing and deep learning, enhances imaging efficiency compared to traditional point-by-point scanning. (4) SPI is highly robust to noise, particularly in low signal-to-noise ratio conditions, where the integration of optical signals using a single-pixel detector effectively suppresses noise. This robustness ensures stable performance in autofocusing technology even in high-noise environments.

As research has progressed, SPI has addressed several challenges associated with conventional imaging [9], including imaging under low-light conditions and imaging through turbid media. SPI is particularly advantageous in scenarios where pixelated detectors are unsuitable, such as X-ray imaging [10,11], fluorescence imaging [12], and real-time terahertz imaging [7]. Furthermore, SPI shows broad application potential in areas such as hyperspectral imaging [13,14], optical encryption [15,16], remote sensing and tracking [17,18], 3D imaging technology [19,20], and ultrafast imaging [21]. The current mainstream mechanisms of SPI can be broadly categorized into three types: coded aperture, transverse scanning, and longitudinal scanning.

This study begins by introducing single-pixel detectors, followed by a detailed discussion of the typical applications of SPI within the frameworks of coded aperture, transverse scanning, and longitudinal scanning mechanisms. The applications of SPI in coded aperture mechanisms include X-ray coded aperture imaging and ghost imaging. In transverse scanning mechanisms, SPI is applied in optical coherence tomography (OCT) and single-photon light detection and ranging (LiDAR), while in longitudinal scanning mechanisms, it is employed in Fourier transform infrared (FTIR) spectrometry and intensity interferometry. Subsequently, this study provides an overview of the integration of deep learning (DL) techniques in SPI applications. Finally, the study summarizes the existing challenges in key SPI techniques and discusses potential directions for the future development of SPI.

2. Single-Pixel Detectors

Single-photon detectors (SPDs) are essential devices for single-photon detection, offering the capability to capture and convert the energy of individual photons due to their ultra-high sensitivity. SPDs are widely utilized for detecting signals with intensity levels equivalent to the energy of only a few photons. Their detection principle is based on the photoelectric effect, and their primary function is to convert optical signals into electrical signals.

The earliest photodetector, the photomultiplier tube (PMT), is a device based on the external photoelectric effect. It was first successfully applied to single-photon detection in 1949 and was primarily used for low-light detection [22]. In 1964, Haitz’s research team [23] introduced the avalanche photodiode (APD), a device that responds to single photons and, unlike the PMT, utilizes the internal photoelectric effect. APDs can operate in two modes: Geiger mode and linear mode. When operating in Geiger mode, APDs are referred to as single-photon avalanche diodes (SPADs) [24]. The key difference between these modes lies in their operating voltage. In 1998, Golovin and Sadygov [25] proposed the multi-pixel silicon photomultiplier (SiPM). Later, in 2001, Gol’tsman et al. [26] developed the superconducting nanowire single-photon detector (SNSPD) and successfully detected photon response signals using this device. The main characteristics of selected detectors are summarized in Table 1.

Owing to increasingly complex application scenarios and stringent detection standards, detectors are now subject to new requirements and challenges, particularly in terms of the dynamic range, response speed, signal-to-noise ratio, and other critical aspects. Consequently, extensive research has been conducted in recent years to promote advancements in detector technologies. The development trend for detectors emphasizes achieving high photon detection efficiency, low dark count rates, a broad spectral range, high count rates, and other enhanced technical specifications. Future research directions include the following: (1) improving detector structures to increase the photosensitive interval and maximize detection capabilities [27]; (2) refining production processes to reduce the influence of interference factors and enhance detector stability [28]; and (3) enhancing the photoelectric performance of SPDs through the application of new photoelectric materials [29].

3. Coded Aperture-Based SPI

The target undergoes wavefront phase modulation via a phase mask, resulting in a coded and modulated image. The detector captures the coded and modulated two-dimensional image, enabling the establishment of the relationship between the point spread function (PSF) and the distance, thus forming a three-dimensional image of the target. Typical applications of coded aperture-based SPI include X-ray coded aperture imaging and ghost imaging.

3.1. Fundamentals of Coded Aperture Imaging

Coded aperture imaging originates from pinhole imaging. While smaller pinhole apertures yield higher imaging resolutions, they significantly reduce the amount of light passing through, necessitating long exposure times. Additionally, excessively small apertures may result in diffraction effects, leading to imaging failure.

The coded aperture technique [30] involves integrating a specifically shaped mask into a conventional camera and adjusting the transmittance of light such that part of the light passes through while the rest is blocked. The shape and arrangement of the apertures in the coded mask are regular rather than random, with alterations in their shape or arrangement influencing the system’s resolution.

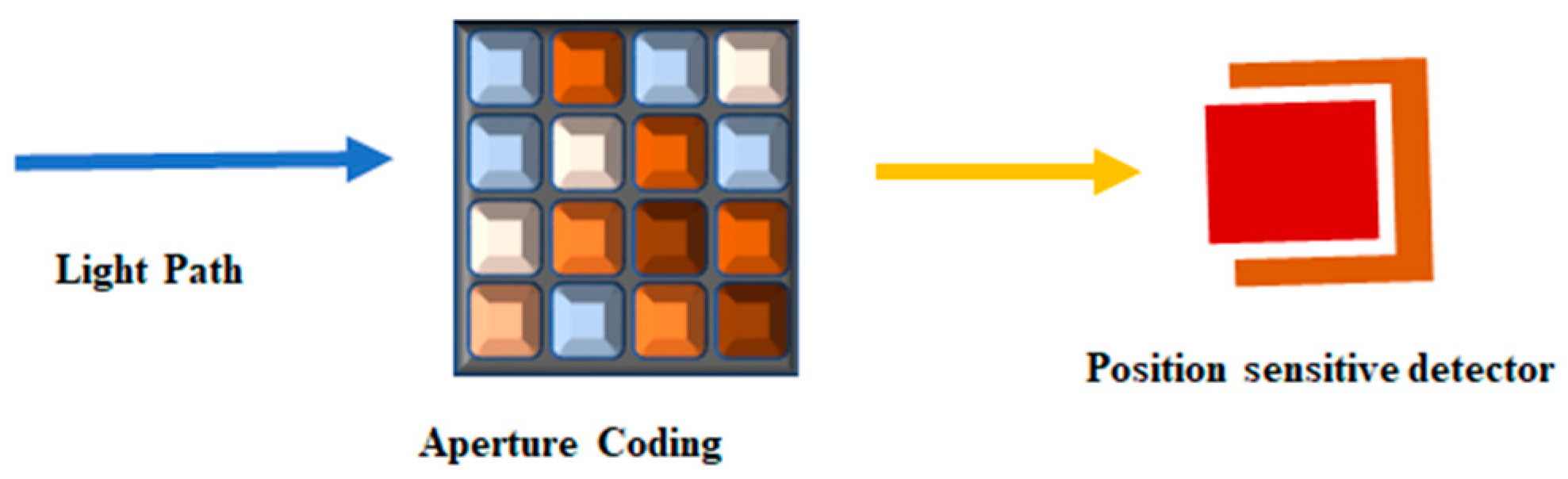

Typically, a coded aperture imaging system comprises a coded aperture and a position-sensitive detector (PSD), as illustrated in Figure 2. The PSD may include components such as a scintillation screen or fiber optic array coupled to a position-sensitive PMT, a charge-coupled device (CCD) camera, an SiPM array, or other semiconductor devices.

For an $N \times N$ square mask, the coding process [31] in a coded aperture system can be modeled as follows:

$$g = Mx + n$$

where $g$ is the coded image reshaped into an $N^2 \times 1$ column vector; $M$ is an $N^2 \times N^2$ measurement matrix derived from the PSF; $x$ represents the reshaped source distribution with a dimension of $N^2 \times 1$; and $n$ is an $N^2 \times 1$ noise term vector.
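The linear coding model described above can be illustrated with a small numerical sketch. It assumes the measurement takes the form g = Mx + noise; the random binary matrix used for M, the function name `simulate_coded_aperture`, and the least-squares decoder are illustrative assumptions rather than the method of [31], where M would be derived from the system PSF.

```python
import numpy as np

def simulate_coded_aperture(n=8, noise_std=0.0, seed=0):
    """Simulate g = M x + noise for an n x n scene and decode by least squares."""
    rng = np.random.default_rng(seed)
    n2 = n * n
    # Hypothetical measurement matrix: each row is a random open/closed (1/0) pattern.
    # In a real system M would be built from the point spread function of the mask.
    M = rng.integers(0, 2, size=(n2, n2)).astype(float)
    x = rng.random(n2)                                  # flattened source distribution
    g = M @ x + noise_std * rng.standard_normal(n2)     # coded measurement vector
    # Decode by solving the linear system in the least-squares sense
    x_hat, *_ = np.linalg.lstsq(M, g, rcond=None)
    return x, x_hat

x_true, x_decoded = simulate_coded_aperture()
```

In the noise-free case the decoded vector should match the source to numerical precision; increasing `noise_std` shows how the conditioning of M controls noise amplification during decoding.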

3.2. X-Ray Coded Aperture Imaging

To address the low light collection efficiency and signal-to-noise ratio of single-pinhole imaging, coded aperture imaging, an imaging technique that does not require a conventional lens, was first introduced in X-ray transmission imaging systems in 1961 [32]. Compared to single-pinhole imaging cameras, this technique significantly increases the optical system’s throughput, thereby improving its signal-to-noise ratio. Various coded aperture modalities have been developed for X-ray imaging [33], including Fresnel zone plates, random arrays, non-redundant arrays (NRAs), uniformly redundant arrays (URAs), and ring apertures; their main features are shown in Table 2. However, coded images must be decoded using optical or digital image processing methods before the image can be reconstructed accurately, which inevitably increases the complexity and cost of the system.

Fresnel zone plates [34] offer higher throughput than single pinholes, enabling improved resolution and flexibility. However, these plates demand high manufacturing precision to ensure that the ring width, spacing, and thickness are maintained within a high tolerance range, allowing for the coherent superposition of light waves. Artifacts generated during production can significantly reduce the signal-to-noise ratio. Moreover, Fresnel zone plates generally require short object distances, limiting the placement of other detectors. These limitations have restricted the broader application of Fresnel zone plates.

Random arrays, whose distribution is determined by a random number table [35], have the advantage of high throughput, with light transmission through the coding plate reaching up to 50%. However, their drawbacks include an overly strong background, low image contrast, and the production of artifacts. Consequently, random arrays are primarily suitable for imaging isolated point objects, such as in astronomical X-ray imaging.

NRAs [36] provide high resolution and flexibility. However, their pass area is relatively small, limiting the amount of light received. Therefore, NRAs are not suitable for imaging weakly radiating targets.

A URA [37,38] is a two-dimensional random aperture array with an entrance pupil area that accounts for approximately half of the total area of the coding plate [39]. This design offers significant advantages, including high throughput, the absence of correlated noise, good background characteristics, and the ability to image weak radiation sources.

Ring apertures [40] feature a single annular opening. Compared with Fresnel zone plates, ring apertures have lower machining precision requirements, a simpler structure, and lower production costs. Additionally, ring apertures can achieve high resolution while maintaining the necessary light collection efficiency and signal-to-noise ratio, making them suitable for applications such as plasma diagnosis.

3.3. Ghost Imaging

3.3.1. Basic Theory

In 1995, Pittman et al. [41] experimentally demonstrated two-photon imaging, reconstructing the two-dimensional intensity information of a target by correlating measurements from the signal and reference optical paths. In 2005, Valencia et al. [42] achieved two-photon imaging using thermal light generated by a pseudo-thermal source, as shown in Figure 3. Later, in 2008, Shapiro et al. [43] introduced lensless computational ghost imaging, which eliminated the need for the reference light path traditionally required in two-beam systems.

The fundamental principle of computational ghost imaging involves the following steps: A laser source generates a beam that is encoded by a spatial light modulator (SLM). This encoded beam is projected onto a scene or object using a lens, encoding and modulating the scene’s information. The modulated light is then captured by a single-pixel detector, which measures the light intensity. Finally, a reconstruction algorithm is applied to recover the scene image from the acquired intensity data.
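The steps above can be sketched numerically. The example below is a minimal simulation, assuming random binary SLM patterns and a plain second-order correlation estimator; the function name `computational_ghost_imaging`, the pattern count, and the choice of estimator are illustrative assumptions, not the specific algorithm of any cited work.

```python
import numpy as np

def computational_ghost_imaging(scene, n_patterns=4096, seed=0):
    """Recover a scene by correlating single-pixel signals with known patterns."""
    rng = np.random.default_rng(seed)
    # Hypothetical SLM patterns: random binary masks, one flattened pattern per row
    patterns = rng.integers(0, 2, size=(n_patterns, scene.size)).astype(float)
    # Single-pixel detector: total intensity of (pattern x scene) for every pattern
    bucket = patterns @ scene.ravel()
    # Correlation reconstruction: <(S - <S>) P>, averaged over all patterns
    estimate = (bucket - bucket.mean()) @ patterns / n_patterns
    return estimate.reshape(scene.shape)

scene = np.random.default_rng(1).random((8, 8))
estimate = computational_ghost_imaging(scene)
```

The estimate is proportional to the scene plus correlation noise that decreases as more patterns are used, which is why compressive and basis-scan sampling strategies are attractive in practice.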

3.3.2. Coded Sampling Method

To reduce the computational cost of reconstruction algorithms and address hardware limitations, Aßmann et al. [44] proposed a compressive adaptive ghost imaging technique in 2013. By employing a wavelet basis, this method reconstructs high-quality images from low-resolution images by automatically detecting regions with large coefficients and increasing image resolution. Subsequently, in 2014, Yu et al. [45] developed another compressive adaptive ghost imaging approach based on wavelets, designed to enable real-time ghost imaging and produce high-quality images in noisy environments. This method is adaptable for imaging applications across a range of wavelengths.

Noiselets, which are highly incoherent with Haar wavelets, were introduced by Anna et al. [46] in 2016 as measurement and compression matrices for compressed sensing ghost imaging. This method leverages complex-valued, nonbinary noiselet functions for object sampling in systems illuminated by incoherent light, achieving high-quality image reconstruction at low sampling rates. Gaussian white noise was incorporated into the theoretical model to enhance reconstruction quality. However, this method has notable drawbacks, including high computational costs and extended recovery times.

Hadamard basis patterns, which are binary and have a mosaic-like discrete form, are particularly well suited for error-free quantization and display on high-speed binary spatial light modulators such as digital micromirror devices (DMDs). This makes Hadamard basis patterns highly robust to noise in reconstructed images. In 2015, Edgar et al. [4] designed a real-time video system for simultaneous imaging in visible and short-wave infrared wavelengths. Their system employed Hadamard basis patterns for real-time sampling and iterative reconstruction, using an optimization algorithm to achieve higher-quality real-time video imaging.
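To make the role of Hadamard patterns concrete, the following sketch simulates full-basis Hadamard sampling and its inversion. It assumes a Sylvester-type Hadamard matrix and a differential measurement in which each ±1 pattern is split into two binary masks that a DMD could display; the function names are hypothetical, and this is not the iterative reconstruction used in [4].

```python
import numpy as np

def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n must be a power of 2)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of 2")
    H = np.array([[1.0]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H

def hadamard_spi(image):
    """Simulate differential Hadamard single-pixel measurements and invert them."""
    x = image.ravel().astype(float)
    H = hadamard(x.size)
    # A binary modulator shows only 0/1 masks, so each +/-1 pattern is split in two
    positive_masks = (H + 1) / 2
    negative_masks = (1 - H) / 2
    # Differential single-pixel signal; algebraically equal to H @ x
    y = positive_masks @ x - negative_masks @ x
    # Hadamard rows are orthogonal: H.T @ H = N * I, so inversion is a transpose
    x_hat = H.T @ y / x.size
    return x_hat.reshape(image.shape)

image = np.random.default_rng(0).random((8, 8))
recovered = hadamard_spi(image)
```

Because the basis is orthogonal, a complete set of measurements inverts exactly without solving an optimization problem; the differential measurement also cancels any constant background term common to both masks.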

In contrast to Hadamard basis patterns, Fourier basis patterns are sparser and more efficient for sampling most natural scenes. Fourier basis patterns, which are grayscale fringe patterns, can be modulated using time-division multiplexing on DMDs. Although they can also be displayed on other spatial light modulators, their modulation rate is significantly reduced in such cases. In 2015, Zhang et al. [47] proposed using Fourier basis patterns to reconstruct high-quality, recognizable images with fewer measurements. These patterns were displayed in binary form via a DMD. To further enhance imaging speed, the same research team [48] developed a fast computational ghost imaging approach using Fourier basis patterns in 2017. This approach significantly improved illumination rates compared to the original grayscale patterns.

4. Transverse Scanning-Based SPI

A two-dimensional (2D) dataset is constructed by performing multiple axial scans at different transverse locations, integrating orientation information with depth information at each location of the target. This process ultimately forms a three-dimensional (3D) image of the scene from a series of two-dimensional datasets. Typical applications of transverse scanning in SPI include OCT and single-photon LiDAR.

4.1. OCT

4.1.1. OCT Imaging Theory

OCT is a non-invasive optical imaging technology that exploits the low coherence of a broadband light source (usually near-infrared) and processes the collected scattered light to obtain an interferogram, which reveals the structural characteristics of, and pathological changes in, biological tissues [49,50]. OCT plays an important role in early detection and diagnosis in dermatology [51], dentistry [52,53], ophthalmology [54,55,56], and other fields.

Most current OCT systems (as shown in Figure 4) utilize two-beam interferometers (e.g., Michelson interferometers) to achieve short coherence lengths by employing broadband light sources. The basic model of OCT [50] can be expressed as

$$I(\tau) = \mathrm{Re}\left\{ h(\tau) \otimes \Gamma(\tau) \right\}$$

where $\tau$ denotes the time delay, $\mathrm{Re}\{\cdot\}$ is the real component operator, $\otimes$ is the convolution operator, $h(\tau)$ denotes the impulse response function, and $\Gamma(\tau)$ denotes the coherence function of the light source of the OCT. $\Gamma(\tau)$ is equivalent to the longitudinal PSF of the OCT system, which can be expressed as follows:

$$\Gamma(\tau) = \exp\left[ -\left( \frac{\pi c \, \Delta\lambda \, \tau}{2 \sqrt{\ln 2} \, \lambda_0^2} \right)^2 \right] \exp\left( -j \frac{2\pi c}{\lambda_0} \tau \right)$$

where $\lambda_0$ denotes the central wavelength, $c$ is the speed of light, and $\Delta\lambda$ denotes the bandwidth of the light source used in the OCT system. The light sources generally follow a Gaussian distribution. Additionally, the spatial amplitude distribution of the light source is modeled as the longitudinal PSF of the OCT system. The transverse PSF or the amplitude distribution of a Gaussian-distributed beam can be expressed as

$$g(r) = \exp\left( -\frac{r^2}{w^2} \right)$$

where $r$ is the radial distance from the beam axis and $w$ represents the beam radius at which the beam intensity drops to $1/e^2$ of its central value. The radius is given by the following equation:

$$w(z) = w_0 \sqrt{1 + \left( \frac{z}{z_0} \right)^2}, \qquad z_0 = \frac{\pi w_0^2}{\lambda}$$

where $w_0$ denotes the minimum value of $w$, $z$ is the vertical coordinate, $z_0$ denotes the boundary of the confocal region, and $\lambda$ denotes the wavelength of the light source.
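As a quick numerical illustration of how the source bandwidth and beam geometry set OCT resolution, the sketch below evaluates two standard Gaussian relations: the coherence-length-limited axial resolution, (2 ln 2 / π) · λ0² / Δλ, and the beam radius as a function of axial position. The function names and the example values (850 nm center wavelength, 50 nm bandwidth, 10 µm waist) are assumptions chosen for illustration only.

```python
import math

def axial_resolution(center_wavelength, bandwidth):
    """Axial resolution (coherence length) for a Gaussian spectrum with FWHM bandwidth."""
    return (2 * math.log(2) / math.pi) * center_wavelength**2 / bandwidth

def beam_radius(z, waist, wavelength):
    """1/e^2 intensity radius of a Gaussian beam at axial position z."""
    z0 = math.pi * waist**2 / wavelength      # boundary of the confocal region
    return waist * math.sqrt(1 + (z / z0) ** 2)

# Illustrative values: 850 nm source with 50 nm bandwidth, 10 um beam waist
resolution_m = axial_resolution(850e-9, 50e-9)     # roughly 6.4 micrometers
radius_m = beam_radius(100e-6, 10e-6, 850e-9)
```

The two functions show the usual trade-off: axial resolution depends only on the source spectrum, whereas transverse resolution and depth of focus are both tied to the beam waist.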

4.1.2. Scanning OCT Imaging System

Time-domain OCT (TD-OCT) represents the first generation of OCT imaging systems [57], utilizing low-coherence interferometry to obtain scanning intensity distributions. This process involves splitting the light and directing it toward a reference mirror and a sample. Intensity information, in the form of depth reflectivity profiles, is extracted from the resulting interferometric profiles. Adjusting the position of the reference mirror allows the detection of backscattered tissue intensity levels from varying depths within the tissue sample. However, the image acquisition speed of TD-OCT is constrained by mechanical limitations, with a maximum achievable speed of 400 scans/s [58].

Fourier-domain OCT (FD-OCT) represents the next generation of OCT imaging, featuring key advancements such as a stationary reference mirror, the simultaneous measurement of the reflected light spectrum, and the conversion of the frequency domain to the time domain using a Fourier transform. FD-OCT is further divided into spectral-domain OCT (SD-OCT) and swept-source OCT (SS-OCT) [57]. In SD-OCT, signals are recorded via spatial encoding using a line array camera, whereas SS-OCT records spectral signals sequentially over time using a single-point detector. The two techniques also differ in their central wavelengths: SD-OCT commonly employs a wavelength of 850 nm, while SS-OCT uses 1050 nm [59]. Longer wavelengths, such as those used in SS-OCT, provide superior penetration, making SS-OCT particularly effective for imaging deeper structures [60]. Scan acquisition speeds for SD-OCT can reach approximately 100,000 scans per second, while SS-OCT can exceed 200,000 scans per second [59]. These techniques enhance sensitivity, improve signal-to-noise ratios, and deliver higher-quality scans, including 3D imaging capabilities.

Table 3 outlines the specific characteristics of each OCT technique.

4.2. Single-Photon LiDAR

LiDAR technology is capable of precisely measuring the position, motion, and shape of a target, as well as tracking it. The narrow wavelength range of light waves allows for highly accurate detection. A typical LiDAR system consists of three main components: a laser source, a photodetector (see Table 1), and a signal processing module. Based on different scanning modalities, LiDAR systems are generally classified into three categories: mechanical, semi-solid, and solid-state [61]. Mechanical LiDAR achieves scanning through a rotating mechanical mechanism; however, its application is often limited by factors such as reliability, size, and cost [62]. To address these limitations, semi-solid and solid-state LiDAR systems have been developed, offering higher reliability and compactness (shown in Table 4).

4.2.1. Detection Principles

With advancements in single-photon detection technology, single-photon LiDARs have emerged and rapidly gained prominence. Compared with conventional LiDAR systems, single-photon LiDARs provide higher resolution, improved sensitivity, and enhanced anti-jamming capabilities. These advantages make them particularly suitable for low signal-to-noise environments and applications requiring high-precision target information. Additionally, single-photon LiDARs outperform conventional systems in terms of size, weight, power consumption, and complexity.

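- (1) Time-correlated single-photon counting (TCSPC)

In photon-counting LiDAR systems employing TCSPC [63], the Poisson response of an SPD to photons within a given time interval, $\Delta t$, is modeled as follows:

$$\bar{N} = \frac{E_s}{h\nu}$$

$$P(k; \Delta t) = \frac{\bar{N}^{k}}{k!} e^{-\bar{N}}$$

In the aforementioned equations, $E_s$ is the echo signal energy, and $h\nu$ is the energy of a single photon, so that $\bar{N}$ is the mean number of echo photons. $P(k; \Delta t)$ represents the probability of detecting $k$ photon events in time $\Delta t$.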
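The Poisson detection statistics can be evaluated directly. The sketch below assumes that the mean photon number is the echo energy divided by the single-photon energy hc/λ and that detector efficiency is ideal; the function names and the 1550 nm example wavelength are illustrative assumptions.

```python
import math

PLANCK = 6.62607015e-34      # Planck constant, J*s
LIGHT_SPEED = 299_792_458.0  # speed of light in vacuum, m/s

def mean_photons(echo_energy_j, wavelength_m):
    """Mean number of photons in an echo: echo energy / single-photon energy."""
    return echo_energy_j / (PLANCK * LIGHT_SPEED / wavelength_m)

def poisson_pmf(k, mean):
    """Probability of exactly k photon events for a Poisson process with the given mean."""
    return mean**k * math.exp(-mean) / math.factorial(k)

def detection_probability(mean):
    """Probability of at least one photon event in the interval: 1 - P(0)."""
    return 1.0 - math.exp(-mean)

# Illustrative echo carrying the energy of two photons at 1550 nm
echo_energy = 2 * PLANCK * LIGHT_SPEED / 1550e-9
n_bar = mean_photons(echo_energy, 1550e-9)
p_detect = detection_probability(n_bar)
```

Even for a mean of two photons per interval, the detection probability stays below one, which is why TCSPC systems accumulate histograms over many laser pulses.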

- (2) Time-of-flight (TOF) method

The TOF method measures distance by utilizing a laser that emits laser pulses. The system begins measuring time when the pulses leave the laser and records the stop signal upon receiving the reflected pulses from the target.

The TOF of a photon, $t$, can be obtained as follows [64]:

$$t = \frac{2L}{c} + t_0$$

In the aforementioned equation, $c$ is the speed of light, $L$ is the sum of the distance inside the measuring device and the distance from the laser to the object, $d$, and $t_0$ is the offset time inside the measuring device.
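A short worked example shows how a measured time of flight maps to range. It assumes a round-trip geometry (the pulse travels to the target and back) and a known internal offset time that is subtracted before conversion; the function names and the numbers are illustrative assumptions.

```python
LIGHT_SPEED = 299_792_458.0  # speed of light in vacuum, m/s

def tof_to_range(t_measured, t_offset=0.0):
    """One-way path length from a round-trip time, after removing the internal offset."""
    return LIGHT_SPEED * (t_measured - t_offset) / 2.0

def range_uncertainty(timing_jitter):
    """Range uncertainty produced by a given timing jitter of the detection chain."""
    return LIGHT_SPEED * timing_jitter / 2.0

# A 1 microsecond round trip corresponds to a path length of about 150 m
path_length_m = tof_to_range(1e-6)
# 50 ps of jitter limits single-shot depth precision to roughly 7.5 mm
depth_precision_m = range_uncertainty(50e-12)
```

The second function makes explicit why picosecond-level timing jitter in the SPD and timing electronics matters: it translates directly into millimeter-level depth uncertainty.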

4.2.2. Reconstruction Algorithms

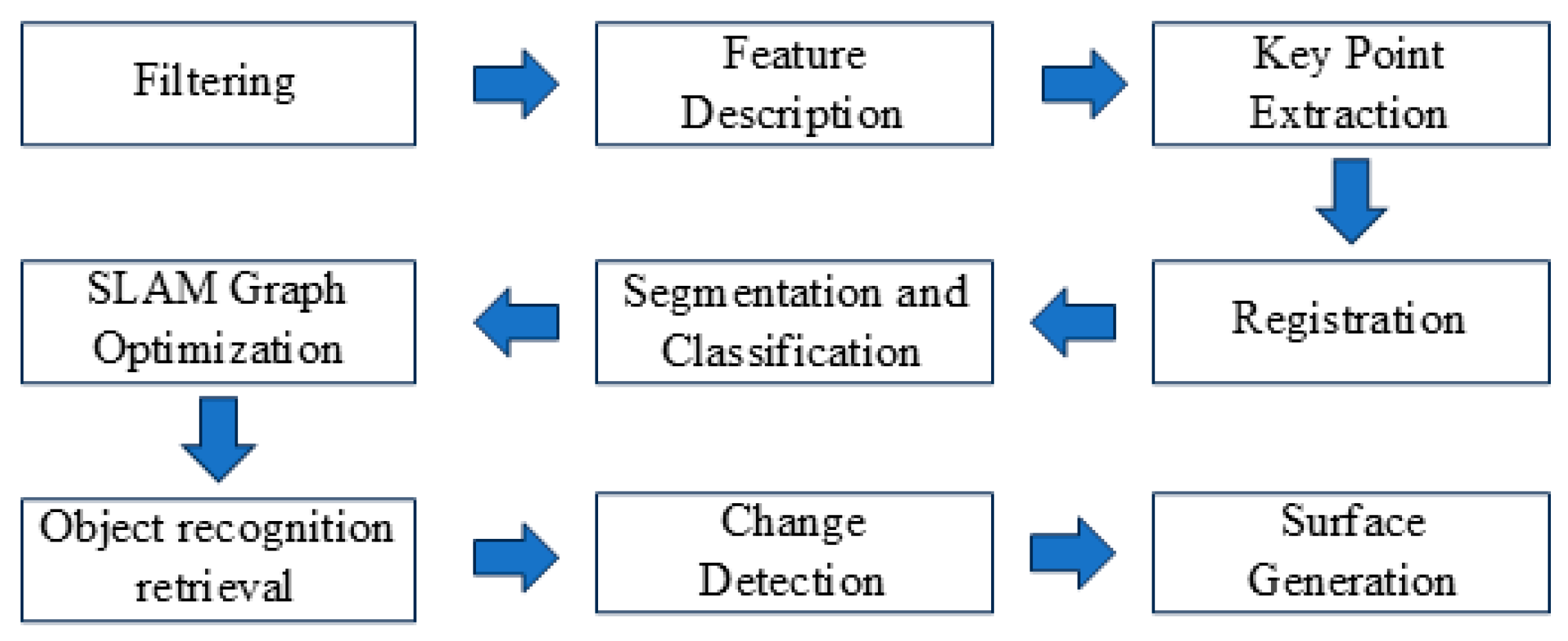

Point Cloud Reconstruction

LiDAR systems capture extensive 3D sample points, forming datasets known as point clouds. A point cloud contains the x, y, and z coordinates of the object’s surface relative to the sensor’s position. In some cases, additional information such as color and echo signal intensity is included. Point clouds can accurately describe the geometric characteristics and spatial locations of objects. The basic steps for processing point clouds [65] are illustrated in Figure 5. Point cloud-based surface reconstruction algorithms [66] are presented in the following section, including the Poisson surface reconstruction algorithm and the ball-pivoting surface reconstruction algorithm.

- (1) Poisson surface reconstruction algorithm

The Poisson surface reconstruction algorithm is used to construct a smooth surface with the aid of an indicator function $\chi_M$ (defined as 1 for a voxel inside the body $M$ and 0 for a voxel outside $M$) [67]. The vector field $\vec{V}$ is determined from the data sample $S$. The algorithm aims to determine the scalar function $\chi$ by minimizing the following equation:

$$E(\chi) = \left\| \nabla\chi - \vec{V} \right\|^2 = \left\langle \nabla\chi - \vec{V},\ \nabla\chi - \vec{V} \right\rangle$$

where $\nabla$ is the gradient operator, and $\langle \cdot, \cdot \rangle$ is the inner product of vector fields. Through the application of the divergence operator to form the Poisson equation, the governing equation is expressed as

$$\Delta\chi = \nabla \cdot \vec{V} \tag{10}$$

where $\Delta$ and $\nabla \cdot$ denote the Laplace and divergence operators, respectively. The vector field $\vec{V}$ is defined by convolving the normal field with the Dirac function $\delta$:

$$\vec{V}(q) = \int_{\partial M} \delta(q - p)\, \vec{N}_{\partial M}(p)\, dp$$

In this equation, $\vec{N}_{\partial M}(p)$ is the inward surface normal vector at the point $p$, $\delta$ is the Dirac function, and $\partial M$ is the boundary of the solid $M$. Solving Equation (10) provides the solution $\chi$. The reconstructed surface $S$ is then defined as an isosurface of the scalar function $\chi$.

- (2) Ball-pivoting surface reconstruction algorithm

The ball-pivoting algorithm is a surface reconstruction method that generates boundary shapes using $\alpha$-shapes [68]. The $\alpha$-shapes ($S_\alpha$) are selected from the data sample $S$ to form the boundary shape, where the boundary is determined by a positive parameter $\alpha$. Here, $\alpha$ is defined as the reciprocal of the planar Euclidean distance between the current point and its next neighboring point:

$$\alpha = \frac{1}{d(p_i, p_{i+1})}$$

Here, the function $d(\cdot, \cdot)$ is used to calculate the planar Euclidean distance between $p_i$ and $p_{i+1}$. In this method, spheres of varying radii roll over the sample points to create triangles. If the rolling sphere touches three points and contains no additional points, a new triangle is formed.

Frequency-Domain Reconstruction

Frequency-domain reconstruction is based on the Fourier transform of discrete signals. The method generates high-resolution images by encoding spatial information into the frequency domain by irradiating the object with the light of different spatial frequency structures and then converting the frequency-domain information back to the spatial domain.

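Spatial frequency-domain fringes generated by light incident on an object are expressed as follows:

$$P_\varphi(x, y; f_x, f_y) = a + b \cos\left( 2\pi f_x x + 2\pi f_y y + \varphi \right)$$

Here, $a$ is the DC component of the incident light, $b$ is the contrast, $\varphi$ is the initial phase, and $(f_x, f_y)$ is the spatial frequency of the fringe.

The total intensity of reflected light, $E_\varphi(f_x, f_y)$, is given by

$$E_\varphi(f_x, f_y) = \iint_\Omega R(x, y)\, P_\varphi(x, y; f_x, f_y)\, dx\, dy$$

where $\Omega$ is the illumination area, and $R(x, y)$ is the position distribution of the object.

The total reflected light intensity measured by the detector, $D_\varphi(f_x, f_y)$, is given by

$$D_\varphi(f_x, f_y) = D_n + \gamma E_\varphi(f_x, f_y)$$

Here, $D_n$ is the optical noise intensity, and $\gamma$ is the detector gain.

Following the four-step phase-shift algorithm, the spectrum of the object, $C(f_x, f_y)$, is expressed as follows:

$$C(f_x, f_y) = \left[ D_0 - D_\pi \right] + j \left[ D_{\pi/2} - D_{3\pi/2} \right] = 2 b \gamma\, \mathcal{F}\{ R(x, y) \}$$

Here, $\mathcal{F}$ is the Fourier transform. The differences cancel both the DC component $a$ and the noise term $D_n$.

Subsequently, image reconstruction is achieved through the equation:

$$R(x, y) = \frac{1}{2 b \gamma}\, \mathcal{F}^{-1}\{ C(f_x, f_y) \}$$

In the above equation, $\mathcal{F}^{-1}$ is the inverse Fourier transform.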
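The four-step phase-shift procedure can be checked with a small simulation. The sketch below assumes cosine fringes of the form a + b·cos(2π f_x x + 2π f_y y + φ) with φ ∈ {0, π/2, π, 3π/2}, a detector response equal to a background term plus a gain times the reflected intensity, and a full scan of all discrete spatial frequencies; the function name `fourier_spi` and the parameter values are illustrative assumptions.

```python
import numpy as np

def fourier_spi(scene, a=0.5, b=0.5, gain=1.0, background=0.0):
    """Simulate four-step phase-shifting Fourier single-pixel imaging of a 2D scene."""
    n_rows, n_cols = scene.shape
    y, x = np.mgrid[0:n_rows, 0:n_cols]
    phases = (0.0, np.pi / 2, np.pi, 3 * np.pi / 2)
    spectrum = np.zeros((n_rows, n_cols), dtype=complex)
    for u in range(n_rows):
        for v in range(n_cols):
            theta = 2 * np.pi * (u * y / n_rows + v * x / n_cols)
            # One single-pixel reading per phase step: background + gain * sum(R * P)
            d = [background + gain * np.sum(scene * (a + b * np.cos(theta + p)))
                 for p in phases]
            # Differences cancel the DC term and the background
            spectrum[u, v] = (d[0] - d[2]) + 1j * (d[1] - d[3])
    # The assembled spectrum equals 2 * b * gain * FFT(scene)
    return np.real(np.fft.ifft2(spectrum)) / (2 * b * gain)

scene = np.random.default_rng(0).random((8, 8))
reconstruction = fourier_spi(scene, background=3.0)
```

A nonzero `background` leaves the reconstruction unchanged, which illustrates the built-in differential noise rejection of the four-step scheme; in practice only the low-frequency coefficients are usually acquired to reduce the number of measurements.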

4.2.3. Applications

- (1) Autonomous driving

Compared to multi-sensor approaches, single-photon LiDAR does not rely on complex algorithms, operates more efficiently, responds faster, and performs robustly under various lighting conditions. It enables vehicles to acquire remote, high-resolution 3D images of their surroundings, even in challenging environments such as low light or turbid media (e.g., rain, fog, snow, and dust) [69,70]. In 2022, Wu et al. [71] at East China Normal University developed a single-photon LiDAR system capable of high-quality imaging in dense fog with a transmittance of 0.023. This innovation offers a practical solution for imaging in turbid media. Moreover, the system significantly reduces energy consumption, contributing to energy conservation and emission reduction.

- (2) Remote sensing imaging of complex scenes

The long-range and high-resolution capabilities of single-photon LiDAR provide valuable data for applications such as monitoring forest changes, assessing water resources, and managing wildlife habitats [72]. In 2005, the United States Lincoln Laboratory developed the Jigsaw airborne single-photon LiDAR system, which successfully achieved the three-dimensional imaging of vehicles and buildings under tree canopies [73]. These LiDAR systems can also be utilized to inspect structural safety distances in infrastructure such as bridges, dams, and power lines.

- (3) Space exploration

When a ground-based laser irradiates into space, atmospheric attenuation and other factors result in only a small number of photons being sent back via diffuse reflection, making it challenging to detect and extract the target photons. In 2016, Chinese scientists utilized an SNSPD system to successfully measure a target satellite located 3000 km away [74]. With further optimization and advancements in single-photon LiDAR technology, higher range accuracy and the ability to detect smaller space debris are expected in the future.

5. Longitudinal Scanning-Based SPI

In Fourier space scans, the energy of the object is concentrated in the low-frequency region, enabling the encoding of information from other objects into the medium- and high-frequency regions. This approach provides additional degrees of freedom and allows for three-dimensional imaging. Typical applications of longitudinal scanning-based SPI include Fourier transform infrared (FTIR) spectrometry and intensity interferometry.

5.1. FTIR Spectrometry

5.1.1. FTIR Spectrometry Based on Michelson Interferometer

An FTIR spectrometer differs from traditional spectroscopic methods, such as prism or grating spectroscopy, by employing a Michelson interferometer (as shown in Figure 6) to generate an interferogram. The interferogram, which varies with time, is converted into a frequency-dependent spectrogram using a Fourier transform. The incident light is split into two beams by a beam splitter: one beam is directed toward a fixed mirror, while the other is reflected by a movable mirror. The two beams are recombined at the beam splitter, producing interference signals that are detected by a photodetector [75].

When the sample absorbs a specific wavelength, the interference signal $I(\delta)$ can be expressed as [75]

$$I(\delta) = \int_{0}^{\infty} B(\sigma) \cos(2\pi\sigma\delta)\, d\sigma$$

where $\delta$ is the optical path difference, $\sigma$ is the wave number, and $B(\sigma)$ is the spectral power density. $B(\sigma)$ can be obtained by performing a Fourier transform on $I(\delta)$ according to the following equation:

$$B(\sigma) = \int_{-\infty}^{\infty} I(\delta) \cos(2\pi\sigma\delta)\, d\delta$$

The theoretical spectral resolution $\Delta\sigma$ is given by the following equation:

$$\Delta\sigma = \frac{1}{2 L_{\max}} \tag{21}$$

where $L_{\max}$ is the maximum displacement of the movable mirror. As shown in Equation (21), the spectral resolution is determined by the displacement of the movable mirror: the larger the displacement, the finer the resolution (the smaller $\Delta\sigma$). However, the movable mirror must remain well aligned during movement.
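The link between mirror travel, interferogram, and recovered spectrum can be illustrated numerically. The sketch below assumes that the optical path difference is twice the mirror displacement, so that the resolution in wavenumbers is 1/(2·L_max), and it recovers a synthetic two-line spectrum with a discrete Fourier transform; the function names, the sampling step, and the line positions are illustrative assumptions.

```python
import numpy as np

def spectral_resolution(max_mirror_displacement_cm):
    """Resolution in cm^-1, assuming the path difference is twice the mirror travel."""
    return 1.0 / (2.0 * max_mirror_displacement_cm)

def interferogram_to_spectrum(interferogram, opd_step_cm):
    """Return (wavenumbers in cm^-1, spectrum magnitude) from a sampled interferogram."""
    spectrum = np.abs(np.fft.rfft(interferogram))
    wavenumbers = np.fft.rfftfreq(len(interferogram), d=opd_step_cm)
    return wavenumbers, spectrum

# Synthetic source with two lines at 1000 and 1500 cm^-1 (the second one weaker)
opd_step = 1e-4                                   # 1 micrometer steps, in cm
opd = np.arange(4000) * opd_step                  # path difference from 0 to ~0.4 cm
signal = np.cos(2 * np.pi * 1000 * opd) + 0.5 * np.cos(2 * np.pi * 1500 * opd)

wavenumbers, spectrum = interferogram_to_spectrum(signal, opd_step)
strongest_line = wavenumbers[np.argmax(spectrum)]   # close to 1000 cm^-1
resolution = spectral_resolution(0.2)               # 0.2 cm travel -> 2.5 cm^-1
```

Doubling the scan length halves the spacing of the wavenumber grid, which is the discrete counterpart of the resolution relation and the reason MEMS mirror travel limits the resolution of miniaturized instruments.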

5.1.2. Characteristics and Applications of FTIR Spectrometers

An FTIR spectrometer [76] is a universal instrument used to study the infrared optical response of solid-, liquid-, and gas-phase samples. Infrared spectroscopy not only identifies different types of materials (qualitative analysis) but also quantifies the amount of material present (quantitative analysis). FTIR spectrometers offer a variety of advantages (see Table 5 for specific performance metrics), such as a high signal-to-noise ratio, good reproducibility, a large spectral measurement range, the use of a single photodetector, and fast scan speed. These features make them highly applicable in various industries, including pharmaceuticals, chemicals, energy, environmental sciences, and semiconductors.

Portable spectrometers are being developed and commercialized rapidly. One common solution for the miniaturization of FTIR spectrometers involves replacing the movable mirror modules in conventional Michelson interferometer-based FTIR systems with MEMS micromirrors. So far, three main types of MEMS micromirrors have been employed for FTIR miniaturization: electrostatic [77,78], electromagnetic [79], and electrothermal [80].

5.2. Intensity Interferometry

5.2.1. Basic Principles

Intensity interferometry has not seen significant advancements since the 1970s due to the potential loss of phase information. However, with the advent of technologies such as SPDs and digital signal correlators, it has re-emerged as a high-resolution observational technique in astronomy [81]. Intensity interferometry uses separate optical telescopes or detectors to measure fluctuations in the light intensity of celestial objects and records the temporal correlation between the arrival times of photons at different locations. The correlation function of the measured light intensity $\langle I_1(t) I_2(t) \rangle$ is expressed as [82]

$$\langle I_1(t)\, I_2(t) \rangle = \langle I_1 \rangle \langle I_2 \rangle \left( 1 + |\gamma_{12}|^2 \right)$$

Here, $\langle \cdot \rangle$ denotes averaging over time, $\gamma_{12}$ denotes the cross-correlation function between SPDs 1 and 2, and $|\gamma_{12}|$ is the absolute value of the cross-correlation function.
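To make the role of the intensity correlation concrete, the following is a minimal Monte Carlo sketch. It assumes chaotic (thermal) light modeled as circular complex Gaussian fields and writes the normalized cross-correlation between the two detectors as `gamma` (a symbol and model chosen here for illustration). Under these assumptions, the normalized excess correlation of the two intensities should approach the squared modulus of `gamma`. The function name, sample count, and seed are hypothetical.

```python
import math
import random


def excess_intensity_correlation(gamma: float, n_samples: int = 200_000, seed: int = 0) -> float:
    """Estimate <I1*I2> / (<I1><I2>) - 1 for two partially correlated thermal fields.

    Assumption: fields are circular complex Gaussian with unit mean intensity,
    and `gamma` (real, between 0 and 1) is their normalized cross-correlation.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(0.5)                 # real and imaginary parts each carry half the power
    mix = math.sqrt(1.0 - gamma * gamma)   # keeps the second field at unit mean intensity
    sum_i1 = sum_i2 = sum_i1_i2 = 0.0
    for _ in range(n_samples):
        z1 = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        z2 = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        field_1 = z1
        field_2 = gamma * z1 + mix * z2    # correlated with field_1 by the factor gamma
        i1 = abs(field_1) ** 2
        i2 = abs(field_2) ** 2
        sum_i1 += i1
        sum_i2 += i2
        sum_i1_i2 += i1 * i2
    mean_i1 = sum_i1 / n_samples
    mean_i2 = sum_i2 / n_samples
    return (sum_i1_i2 / n_samples) / (mean_i1 * mean_i2) - 1.0


# The estimate should be close to gamma**2, up to statistical noise.
print(excess_intensity_correlation(0.6))
print(excess_intensity_correlation(0.0))
```

The estimate depends only on measured intensities, which illustrates why the technique needs fast single-photon detectors and correlators rather than optical-phase stability between the telescopes.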

5.2.2. Large-Sized Telescopes (LSTs)

The Cherenkov Telescope Array (CTA) [83] is a ground-based observatory designed for the study of ultra-high-energy gamma rays. It comprises multiple LSTs at the array’s center. Each LST is equipped with a fast PMT camera.

The PMT camera consists of thousands of pixels, with each pixel being a combination of a high-quantum-efficiency PMT and a condenser. This setup enables unprecedented high resolution and sensitivity, along with the capability to detect extreme radiation. Consequently, the CTA holds great research potential in the field of astronomy.

The European Extremely Large Telescope (E-ELT), which is being built by the European Southern Observatory (ESO), is another ground-based optical telescope [84]. To address the limited temporal resolution of conventional CCD detectors, Dravins et al. [85] proposed the QuantEYE instrument project. The design targets high-temporal-resolution astronomical observations by dividing the pupil of the Overwhelmingly Large (OWL) telescope, the concept that preceded the E-ELT, into 100 pupil segments and placing a SPAD detector on each segment.

6. Application of DL Techniques in SPI

One of the primary challenges in SPI is the need for large amounts of measurement data. Over the years, various approaches have been developed to reduce the sampling rate. DL, as an emerging technology, has shown significant potential in recovering high-quality images even at very low sampling rates [86]. The following sections discuss three approaches: data-driven DL, physically augmented DL, and physically driven DL.

6.1. Data-Driven DL

In 2017, Lyu et al. [87] introduced the first data-driven DL method and applied it to ghost imaging, marking a foundational development in the application of DL in SPI. This method enhances the quality of reconstructed images while predicting low-noise images, thereby increasing the signal-to-noise ratio of the images. Building on this, Wang et al. [88] proposed an end-to-end neural network capable of reconstructing images directly from one-dimensional bucket signals. Wu et al. [89] further improved image reconstruction quality by integrating dense connection blocks and attention mechanisms. However, data-driven methods rely on large datasets to train neural networks, making the training process time-intensive and less suitable for practical applications.

6.2. Physically Augmented DL

To address the limitations of data-driven DL, Wang et al. [90] proposed a physically augmented DL-based SPI method for image reconstruction in 2022. This approach incorporates physical information layers and model-driven fine-tuning into the neural network. By imposing strong physical model constraints, the method enhances the network’s generalization ability and improves reconstruction accuracy. However, the method still needs to be trained on datasets.

6.3. Physically Driven DL

In 2023, Li et al. [91] proposed an SPI method based on an untrained reconstruction network, utilizing a physical model to construct a network that maps the measured data to the reconstructed image. The network parameters are optimized through the interaction between the neural network and physical constraints. Unlike previous methods, this approach does not require training on tens of thousands of labeled samples, thereby eliminating the need for extensive preparation. Moreover, the method is highly generalizable and interpretable, surpassing the aforementioned approaches in image reconstruction quality and noise immunity. However, the method relies on an iterative process, and its imaging time and quality are influenced by the number of iterations.
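The trade-off between iteration count and quality can be illustrated with a deliberately simplified sketch. This is not the untrained autoencoder of Li et al.: the network is swapped for the pixel values themselves, which are updated by plain gradient descent so that the simulated bucket signal matches the measured one. The Hadamard patterns with entries of ±1 (realizable on a DMD only through differential measurements), the 4×4 test scene, and all function names are assumptions made for illustration.

```python
def hadamard(order):
    """Sylvester-type Hadamard matrix; `order` must be a power of two."""
    h = [[1]]
    while len(h) < order:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def forward(patterns, image):
    """Physical SPI model: one bucket value per modulation pattern."""
    return [sum(p * x for p, x in zip(row, image)) for row in patterns]


def reconstruct(patterns, bucket, n_iter):
    """Gradient descent on 0.5 * ||forward(patterns, x) - bucket||^2, starting from zero."""
    n_pixels = len(patterns[0])
    # 1 / (squared Frobenius norm) never exceeds 1 / (largest eigenvalue), so the descent is stable.
    step = 1.0 / sum(p * p for row in patterns for p in row)
    estimate = [0.0] * n_pixels
    for _ in range(n_iter):
        residual = [f - b for f, b in zip(forward(patterns, estimate), bucket)]
        gradient = [sum(row[j] * r for row, r in zip(patterns, residual))
                    for j in range(n_pixels)]
        estimate = [x - step * g for x, g in zip(estimate, gradient)]
    return estimate


def rms_error(a, b):
    """Root-mean-square difference between two flattened images."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


patterns = hadamard(16)
scene = [0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0]  # hypothetical 4x4 scene, flattened
bucket = forward(patterns, scene)

# The reconstruction error shrinks as more iterations are spent.
for n_iter in (10, 100, 300):
    print(n_iter, rms_error(reconstruct(patterns, bucket, n_iter), scene))
```

No training data are involved: the only supervision is consistency with the measured bucket signal. In an untrained-network method, a neural network would take the place of the raw pixel vector, with its structure acting as an implicit image prior.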

7. Conclusions and Prospects

This paper reviews and summarizes SPI techniques. Starting from the single-pixel detector, it introduces typical applications of SPI under different imaging systems and some current applications of deep learning in SPI. The typical applications introduced in the previous sections are briefly summarized in Table 6.

SPI, as a notable computational imaging technique, has achieved significant advancements and exhibits distinct advantages in low-light imaging and in specialized bands, such as the near-infrared and terahertz bands. The technique is gradually being applied in practical scenarios. However, several limitations must still be addressed: (1) Image reconstruction quality: when complex scenes are imaged, only limited information can be acquired, which makes it difficult to reconstruct the scene completely and degrades the imaging results. (2) Limited imaging efficiency: the light source fails to respond quickly to the system, and the sample collection time is long. (3) Limited range of applications: the technique is applicable only to small field-of-view imaging, and it cannot ensure real-time imaging while achieving high resolution. (4) Sensitivity to process errors: the added modulation and demodulation steps provide more information but increase system complexity, and errors in these steps have a significant impact on the final results.

With the continuous advancement of related technologies, SPI is likely to evolve in the following directions: (1) Some image processing steps can be completed during the sampling process, reducing information redundancy in the transmission channel and saving the computing resources and time spent on image processing. (2) Given SPI’s diverse imaging mechanisms, it may be possible to achieve faster, higher-resolution imaging by combining multiple mechanisms. (3) With the rapid development of artificial intelligence, DL technologies hold considerable potential for improving image quality, efficiency, and robustness. Further exploration of DL could yield more effective solutions for increasingly complex scenarios and disturbances. (4) Detection systems are expected to move toward integration, miniaturization, and on-chip implementation. In the near future, SPI is expected to become a prominent technique in computational imaging that adapts well to the needs of different scenarios.

Author Contributions

Conceptualization, Q.A.; resources, S.Y., T.L., L.M. and J.H.; writing – original draft preparation, J.H.; writing – review and editing, Q.A.; supervision, Q.A., W.W. and L.W.; funding acquisition, Q.A., W.W. and L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 12373090).

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors thank Yahan Luo for her help in writing this manuscript.

Conflicts of Interest

Author Tong Li was employed by the company Sinomach Hainan Development Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CCD | charge-coupled device |
| CTA | Cherenkov Telescope Array |
| DL | deep learning |
| DMDs | digital micromirror devices |
| DOI | digital object identifier |
| ESO | European Southern Observatory |
| FTS | Fourier transform spectrometer |
| LSTs | Large-Sized Telescopes |
| OCT | optical coherence tomography |
| OWL | Overwhelmingly Large |
| PMT | photomultiplier tube |
| PSD | position-sensitive detector |
| PSF | point spread function |
| SLM | spatial light modulator |
| SNSPD | superconducting nanowire single-photon detector |
| SPAD | single-photon avalanche diode |
| SPDs | single-photon detectors |
| SPI | single-pixel imaging |
| TCSPC | time-correlated single-photon counting |
| TOF | time-of-flight |
| URAs | uniformly redundant arrays |

References

1. Guarnieri, M. The Television: From Mechanics to Electronics [Historical]. IEEE Ind. Electron. Mag. 2010, 4, 43–45.
2. Sen, P.; Chen, B.; Garg, G.; Marschner, S.R.; Horowitz, M.; Levoy, M.; Lensch, H.P.A. Dual Photography. ACM Trans. Graph. 2005, 24, 745–755.
3. Edgar, M.P.; Gibson, G.M.; Padgett, M.J. Principles and Prospects for Single-Pixel Imaging. Nat. Photonics 2019, 13, 13–20.
4. Edgar, M.P.; Gibson, G.M.; Bowman, R.W.; Sun, B.; Radwell, N.; Mitchell, K.J.; Welsh, S.S.; Padgett, M.J. Simultaneous Real-Time Visible and Infrared Video with Single-Pixel Detectors. Sci. Rep. 2015, 5, 10669.
5. Stantchev, R.I.; Sun, B.; Hornett, S.M.; Hobson, P.A.; Gibson, G.M.; Padgett, M.J.; Hendry, E. Noninvasive, near-Field Terahertz Imaging of Hidden Objects Using a Single-Pixel Detector. Sci. Adv. 2016, 2, e1600190.
6. Hornett, S.M.; Stantchev, R.I.; Vardaki, M.Z.; Beckerleg, C.; Hendry, E. Subwavelength Terahertz Imaging of Graphene Photoconductivity. Nano Lett. 2016, 16, 7019–7024.
7. Stantchev, R.I.; Yu, X.; Blu, T.; Pickwell-MacPherson, E. Real-Time Terahertz Imaging with a Single-Pixel Detector. Nat. Commun. 2020, 11, 2535.
8. Radwell, N.; Johnson, S.D.; Edgar, M.P.; Higham, C.F.; Murray-Smith, R.; Padgett, M.J. Deep Learning Optimized Single-Pixel LiDAR. Appl. Phys. Lett. 2019, 115, 231101.
9. Lu, T.; Qiu, Z.; Zhang, Z.; Zhong, J. Comprehensive Comparison of Single-Pixel Imaging Methods. Opt. Lasers Eng. 2020, 134, 106301.
10. Schori, A.; Shwartz, S. X-Ray Ghost Imaging with a Laboratory Source. Opt. Express 2017, 25, 14822–14828.
11. Zhang, A.-X.; He, Y.-H.; Wu, L.-A.; Chen, L.-M.; Wang, B.-B. Tabletop X-Ray Ghost Imaging with Ultra-Low Radiation. Optica 2018, 5, 374–377.
12. Tanha, M.; Ahmadi-Kandjani, S.; Kheradmand, R.; Ghanbari, H. Computational Fluorescence Ghost Imaging. Eur. Phys. J. D 2013, 67, 44.
13. Xiao, Y.; Zhou, L.; Chen, W. Direct Single-Step Measurement of Hadamard Spectrum Using Single-Pixel Optical Detection. IEEE Photonics Technol. Lett. 2019, 31, 845–848.
14. Rousset, F.; Ducros, N.; Peyrin, F.; Valentini, G.; D’Andrea, C.; Farina, A. Time-Resolved Multispectral Imaging Based on an Adaptive Single-Pixel Camera. Opt. Express 2018, 26, 10550–10558.
15. Zafari, M.; Ahmadi-Kandjani, S. Optical Encryption with Selective Computational Ghost Imaging. J. Opt. 2014, 16, 105405.
16. Xu, C.; Li, D.; Guo, K.; Yin, Z.; Guo, Z. Computational Ghost Imaging with Key-Patterns for Image Encryption. Opt. Commun. 2023, 537, 129190.
17. Shi, D.; Yin, K.; Huang, J.; Yuan, K.; Zhu, W.; Xie, C.; Liu, D.; Wang, Y. Fast Tracking of Moving Objects Using Single-Pixel Imaging. Opt. Commun. 2019, 440, 155–162.
18. Sun, S.; Lin, H.; Xu, Y.; Gu, J.; Liu, W. Tracking and Imaging of Moving Objects with Temporal Intensity Difference Correlation. Opt. Express 2019, 27, 27851–27861.
19. Zhang, F.; Zhang, K.; Cao, J.; Cheng, Y.; Hao, Q.; Mou, Z. Study on the Performance of Three-Dimensional Ghost Image Affected by Target. Pattern Recognit. Lett. 2019, 125, 508–513.
20. Sun, M.-J.; Zhang, J.-M. Single-Pixel Imaging and Its Application in Three-Dimensional Reconstruction: A Brief Review. Sensors 2019, 19, 732.
21. Zhao, W.; Chen, H.; Yuan, Y.; Zheng, H.; Liu, J.; Xu, Z.; Zhou, Y. Ultrahigh-Speed Color Imaging with Single-Pixel Detectors at Low Light Level. Phys. Rev. Appl. 2019, 12, 034049.
22. Morton, G.A. Photomultipliers for Scintillation Counting. RCA Rev. 1949, 10.
23. Razeghi, M. Single-Photon Avalanche Photodiodes. In Technology of Quantum Devices; Razeghi, M., Ed.; Springer: Boston, MA, USA, 2010; pp. 425–455. ISBN 978-1-4419-1056-1.
24. Zhang, J.; Itzler, M.A.; Zbinden, H.; Pan, J.-W. Advances in InGaAs/InP Single-Photon Detector Systems for Quantum Communication. Light Sci. Appl. 2015, 4, e286.
25. Renker, D. Geiger-Mode Avalanche Photodiodes, History, Properties and Problems. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2006, 567, 48–56.
26. Gol’tsman, G.N.; Okunev, O.; Chulkova, G.; Lipatov, A.; Semenov, A.; Smirnov, K.; Voronov, B.; Dzardanov, A.; Williams, C.; Sobolewski, R. Picosecond Superconducting Single-Photon Optical Detector. Appl. Phys. Lett. 2001, 79, 705–707.
27. Acerbi, F.; Paternoster, G.; Gola, A.; Regazzoni, V.; Zorzi, N.; Piemonte, C. High-Density Silicon Photomultipliers: Performance and Linearity Evaluation for High Efficiency and Dynamic-Range Applications. IEEE J. Quantum Electron. 2018, 54, 4700107.
28. Knehr, E.; Kuzmin, A.; Vodolazov, D.Y.; Ziegler, M.; Doerner, S.; Ilin, K.; Siegel, M.; Stolz, R.; Schmidt, H. Nanowire Single-Photon Detectors Made of Atomic Layer-Deposited Niobium Nitride. Supercond. Sci. Technol. 2019, 32, 125007.
29. Vines, P.; Kuzmenko, K.; Kirdoda, J.; Dumas, D.C.S.; Mirza, M.M.; Millar, R.W.; Paul, D.J.; Buller, G.S. High Performance Planar Germanium-on-Silicon Single-Photon Avalanche Diode Detectors. Nat. Commun. 2019, 10, 1086.
30. Caroli, E.; Stephen, J.B.; Di Cocco, G.; Natalucci, L.; Spizzichino, A. Coded Aperture Imaging in X- and Gamma-Ray Astronomy. Space Sci. Rev. 1987, 45, 349–403.
31. Liu, B.; Lv, H.; Xu, H.; Li, L.; Tan, Y.; Xia, B.; Li, W.; Jing, F.; Liu, T.; Huang, B. A Novel Coded Aperture for γ-Ray Imaging Based on Compressed Sensing. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2022, 1021, 165959.
32. Haboub, A.; MacDowell, A.A.; Marchesini, S.; Parkinson, D.Y. Coded Aperture Imaging for Fluorescent X-Rays. Rev. Sci. Instrum. 2014, 85, 063704.
33. Cieślak, M.J.; Gamage, K.A.A.; Glover, R. Coded-Aperture Imaging Systems: Past, Present and Future Development—A Review. Radiat. Meas. 2016, 92, 59–71.
34. Shimano, T.; Nakamura, Y.; Tajima, K.; Sao, M.; Hoshizawa, T. Lensless Light-Field Imaging with Fresnel Zone Aperture: Quasi-Coherent Coding. Appl. Opt. 2018, 57, 2841–2850.
35. Dicke, R.H. Scatter-Hole Cameras for X-Rays and Gamma Rays. Astrophys. J. 1968, 153, L101.
36. Golay, M.J.E. Point Arrays Having Compact, Nonredundant Autocorrelations. J. Opt. Soc. Am. 1971, 61, 272–273.
37. Fenimore, E.E.; Cannon, T.M. Coded Aperture Imaging with Uniformly Redundant Arrays. Appl. Opt. 1978, 17, 337–347.
38. Li, X.; Zhang, Z.; Li, D.; Wang, Y.; Liang, X.; Zhou, W.; Wang, M.; Wang, X.; Hu, X.; Shuai, L.; et al. Comparison of the Modified Uniformly Redundant Array with the Singer Array for Near-Field Coded Aperture Imaging of Multiple Sources. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2023, 1051, 168230.
39. Zhang, T.; Wang, L.; Ning, J.; Lu, W.; Wang, X.-F.; Zhang, H.-W.; Tuo, X.-G. Simulation of an Imaging System for Internal Contamination of Lungs Using MPA-MURA Coded-Aperture Collimator. Nucl. Sci. Tech. 2021, 32, 17.
40. Ress, D.; Ciarlo, D.R.; Stewart, J.E.; Bell, P.M.; Kania, D.R. A Ring Coded-aperture Microscope for High-resolution Imaging of High-energy x Rays. Rev. Sci. Instrum. 1992, 63, 5086–5088.
41. Pittman, T.B.; Shih, Y.H.; Strekalov, D.V.; Sergienko, A.V. Optical Imaging by Means of Two-Photon Quantum Entanglement. Phys. Rev. A 1995, 52, R3429–R3432.
42. Valencia, A.; Scarcelli, G.; D’Angelo, M.; Shih, Y. Two-Photon Imaging with Thermal Light. Phys. Rev. Lett. 2005, 94, 063601.
43. Shapiro, J.H. Computational Ghost Imaging. Phys. Rev. A 2008, 78, 061802.
44. Aßmann, M.; Bayer, M. Compressive Adaptive Computational Ghost Imaging. Sci. Rep. 2013, 3, 1545.
45. Yu, W.-K.; Li, M.-F.; Yao, X.-R.; Liu, X.-F.; Wu, L.-A.; Zhai, G.-J. Adaptive Compressive Ghost Imaging Based on Wavelet Trees and Sparse Representation. Opt. Express 2014, 22, 7133–7144.
46. Pastuszczak, A.; Szczygieł, B.; Mikołajczyk, M.; Kotyński, R. Efficient Adaptation of Complex-Valued Noiselet Sensing Matrices for Compressed Single-Pixel Imaging. Appl. Opt. 2016, 55, 5141–5148.
47. Zhang, Z.; Ma, X.; Zhong, J. Single-Pixel Imaging by Means of Fourier Spectrum Acquisition. Nat. Commun. 2015, 6, 6225.
48. Zhang, Z.; Wang, X.; Zheng, G.; Zhong, J. Fast Fourier Single-Pixel Imaging via Binary Illumination. Sci. Rep. 2017, 7, 12029.
49. Schmitt, J.M. Optical Coherence Tomography (OCT): A Review. IEEE J. Sel. Top. Quantum Electron. 1999, 5, 1205–1215.
50. Lian, J.; Hou, S.; Sui, X.; Xu, F.; Zheng, Y. Deblurring Retinal Optical Coherence Tomography via a Convolutional Neural Network with Anisotropic and Double Convolution Layer. IET Comput. Vis. 2018, 12, 900–907.
51. Davis, A.; Levecq, O.; Azimani, H.; Siret, D.; Dubois, A. Simultaneous Dual-Band Line-Field Confocal Optical Coherence Tomography: Application to Skin Imaging. Biomed. Opt. Express 2019, 10, 694–706.
52. Bakhsh, T.A.; Tagami, J.; Sadr, A.; Luong, M.N.; Turkistani, A.; Almhimeed, Y.; Alshouibi, E. Effect of Light Irradiation Condition on Gap Formation under Polymeric Dental Restoration; OCT Study. Z. Med. Phys. 2020, 30, 194–200.
53. Kang, H.; Darling, C.L.; Fried, D. Use of an Optical Clearing Agent to Enhance the Visibility of Subsurface Structures and Lesions from Tooth Occlusal Surfaces. J. Biomed. Opt. 2016, 21, 081206.
54. Iorga, R.E.; Moraru, A.; Ozturk, M.R.; Costin, D. The Role of Optical Coherence Tomography in Optic Neuropathies. Rom. J. Ophthalmol. 2018, 62, 3–14.
55. Iester, M.; Cordano, C.; Costa, A.; D’Alessandro, E.; Panizzi, A.; Bisio, F.; Masala, A.; Landi, L.; Traverso, C.E.; Ferreras, A.; et al. Effectiveness of Time Domain and Spectral Domain Optical Coherence Tomograph to Evaluate Eyes with And Without Optic Neuritis in Multiple Sclerosi Patients. J. Mult. Scler. 2016, 3, 2.
56. Alexopoulos, P.; Madu, C.; Wollstein, G.; Schuman, J.S. The Development and Clinical Application of Innovative Optical Ophthalmic Imaging Techniques. Front. Med. 2022, 9, 891369.
57. Fujimoto, J.; Swanson, E. The Development, Commercialization, and Impact of Optical Coherence Tomography. Investig. Ophthalmol. Vis. Sci. 2016, 57, OCT1–OCT13.
58. Qin, J.; An, L. Optical Coherence Tomography for Ophthalmology Imaging. Adv. Exp. Med. Biol. 2021, 3233, 197–216.
59. Geevarghese, A.; Wollstein, G.; Ishikawa, H.; Schuman, J.S. Optical Coherence Tomography and Glaucoma. Annu. Rev. Vis. Sci. 2021, 7, 693–726.
60. Xu, J.; Song, S.; Wei, W.; Wang, R.K. Wide Field and Highly Sensitive Angiography Based on Optical Coherence Tomography with Akinetic Swept Source. Biomed. Opt. Express 2017, 8, 420–435.
61. Li, N.; Ho, C.P.; Xue, J.; Lim, L.W.; Chen, G.; Fu, Y.H.; Lee, L.Y.T. A Progress Review on Solid-State LiDAR and Nanophotonics-Based LiDAR Sensors. Laser Photonics Rev. 2022, 16, 2100511.
62. Hu, M.; Pang, Y.; Gao, L. Advances in Silicon-Based Integrated Lidar. Sensors 2023, 23, 5920.
63. Fu, C.; Zheng, H.; Wang, G.; Zhou, Y.; Chen, H.; He, Y.; Liu, J.; Sun, J.; Xu, Z. Three-Dimensional Imaging via Time-Correlated Single-Photon Counting. Appl. Sci. 2020, 10, 1930.
64. Staffas, T.; Elshaari, A.; Zwiller, V. Frequency Modulated Continuous Wave and Time of Flight LIDAR with Single Photons: A Comparison. Opt. Express 2024, 32, 7332–7341.
65. Liu, X.; Meng, W.; Guo, J.; Zhang, X. A Survey on Processing of Large-Scale 3D Point Cloud. In Proceedings of the E-Learning and Games; El Rhalibi, A., Tian, F., Pan, Z., Liu, B., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 267–279.
66. Ruchay, A.; Dorofeev, K.; Kalschikov, V.; Kober, A. Accuracy Analysis of Surface Reconstruction from Point Clouds. In Proceedings of the 2020 International Conference on Information Technology and Nanotechnology (ITNT), Samara, Russia, 26–29 May 2020; pp. 1–4.
67. Wang, J.; Shi, Z. Multi-Reconstruction from Points Cloud by Using a Modified Vector-Valued Allen–Cahn Equation. Mathematics 2021, 9, 1326.
68. Ma, W.; Li, Q. An Improved Ball Pivot Algorithm-Based Ground Filtering Mechanism for LiDAR Data. Remote Sens. 2019, 11, 1179.
69. Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.
70. Taher, J.; Hakala, T.; Jaakkola, A.; Hyyti, H.; Kukko, A.; Manninen, P.; Maanpää, J.; Hyyppä, J. Feasibility of Hyperspectral Single Photon Lidar for Robust Autonomous Vehicle Perception. Sensors 2022, 22, 5759.
71. Shi, H.; Shen, G.; Qi, H.; Zhan, Q.; Pan, H.; Li, Z.; Wu, G. Noise-Tolerant Bessel-Beam Single-Photon Imaging in Fog. Opt. Express 2022, 30, 12061–12068.
72. Boretti, A. A Perspective on Single-Photon LiDAR Systems. Microw. Opt. Technol. Lett. 2024, 66, e33918.
73. Marino, R.M.; Davis, W.R. Jigsaw: A Foliage-Penetrating 3D Imaging Laser Radar System. Linc. Lab. J. 2005, 15, 23–36.
74. Li, H.; Chen, S.; You, L.; Meng, W.; Wu, Z.; Zhang, Z.; Tang, K.; Zhang, L.; Zhang, W.; Yang, X.; et al. Superconducting Nanowire Single Photon Detector at 532 Nm and Demonstration in Satellite Laser Ranging. Opt. Express 2016, 24, 3535.
75. Chai, J.; Zhang, K.; Xue, Y.; Liu, W.; Chen, T.; Lu, Y.; Zhao, G. Review of MEMS Based Fourier Transform Spectrometers. Micromachines 2020, 11, 214.
76. Abbas, M.A.; Jahromi, K.E.; Nematollahi, M.; Krebbers, R.; Liu, N.; Woyessa, G.; Bang, O.; Huot, L.; Harren, F.J.M.; Khodabakhsh, A. Fourier Transform Spectrometer Based on High-Repetition-Rate Mid-Infrared Supercontinuum Sources for Trace Gas Detection. Opt. Express 2021, 29, 22315–22330.
77. Kenda, A.; Kraft, M.; Tortschanoff, A.; Scherf, W.; Sandner, T.; Schenk, H.; Lüttjohann, S.; Simon, A. Development, Characterization and Application of Compact Spectrometers Based on MEMS with in-Plane Capacitive Drives. In Proceedings of the SPIE 9101, Next-Generation Spectroscopic Technologies VII, Baltimore, MD, USA, 21 May 2014; p. 910102.
78. Sandner, T.; Grasshoff, T.; Gaumont, E.; Schenk, H.; Kenda, A. Translatory MOEMS Actuator and System Integration for Miniaturized Fourier Transform Spectrometers. J. Micro/Nanolith. MEMS MOEMS 2014, 13, 011115.
79. Xue, Y.; He, S. A Translation Micromirror with Large Quasi-Static Displacement and High Surface Quality. J. Micromech. Microeng. 2017, 27, 015009.
80. Wang, W.; Chen, J.; Zivkovic, A.S.; Xie, H. A Fourier Transform Spectrometer Based on an Electrothermal MEMS Mirror with Improved Linear Scan Range. Sensors 2016, 16, 1611.
81. Rivet, J.-P.; Vakili, F.; Lai, O.; Vernet, D.; Fouché, M.; Guerin, W.; Labeyrie, G.; Kaiser, R. Optical Long Baseline Intensity Interferometry: Prospects for Stellar Physics. Exp. Astron. 2018, 46, 531–542.
82. Yi, S.; An, Q.; Zhang, W.; Hu, J.; Wang, L. Astronomical Intensity Interferometry. Photonics 2024, 11, 958.
83. Barrio, J.A. Status of the Large Size Telescopes and Medium Size Telescopes for the Cherenkov Telescope Array Observatory. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2020, 952, 161588.
84. Padovani, P.; Cirasuolo, M. The Extremely Large Telescope. Contemp. Phys. 2023, 64, 47–64.
85. Dravins, D.; Barbieri, C.; Fosbury, R.A.E.; Naletto, G.; Nilsson, R.; Occhipinti, T.; Tamburini, F.; Uthas, H.; Zampieri, L. QuantEYE: The Quantum Optics Instrument for OWL. arXiv 2005, arXiv:astro-ph/0511027.
86. Deng, Y.; She, R.; Liu, W.; Lu, Y.; Li, G. Single-Pixel Imaging Based on Deep Learning Enhanced Singular Value Decomposition. Sensors 2024, 24, 2963.
87. Lyu, M.; Wang, W.; Wang, H.; Wang, H.; Li, G.; Chen, N.; Situ, G. Deep-Learning-Based Ghost Imaging. Sci. Rep. 2017, 7, 17865.
88. Wang, F.; Wang, H.; Wang, H.; Li, G.; Situ, G. Learning from Simulation: An End-to-End Deep-Learning Approach for Computational Ghost Imaging. Opt. Express 2019, 27, 25560.
89. Wu, H.; Wang, R.; Zhao, G.; Xiao, H.; Wang, D.; Liang, J.; Tian, X.; Cheng, L.; Zhang, X. Sub-Nyquist Computational Ghost Imaging with Deep Learning. Opt. Express 2020, 28, 3846–3853.
90. Wang, F.; Wang, C.; Deng, C.; Han, S.; Situ, G. Single-Pixel Imaging Using Physics Enhanced Deep Learning. Photonics Res. 2021, 10, 01000104.
91. Li, Z.; Huang, J.; Shi, D.; Chen, Y.; Yuan, K.; Hu, S.; Wang, Y. Single-Pixel Imaging with Untrained Convolutional Autoencoder Network. Opt. Laser Technol. 2023, 167, 109710.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).