Optical Coherence Imaging Hybridized Deep Learning Framework for Automated Plant Bud Classification in Emasculation Processes: A Pilot Study

Abstract

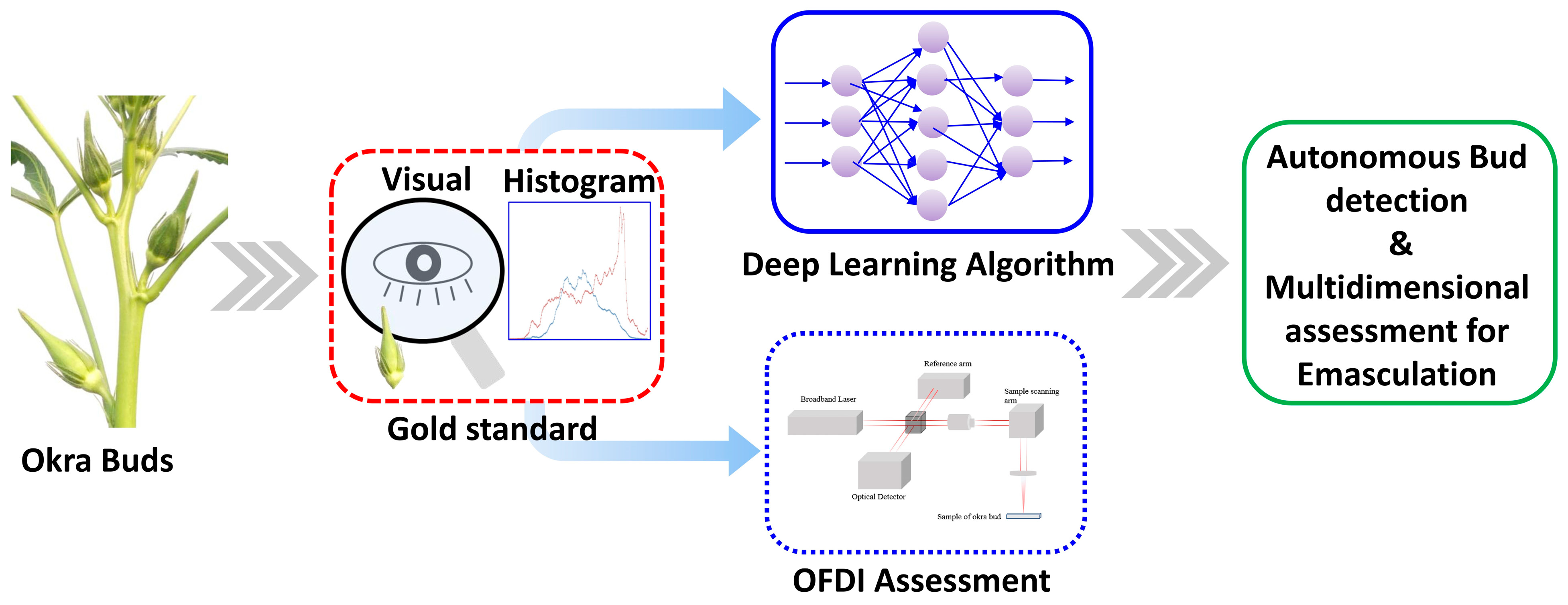

1. Introduction

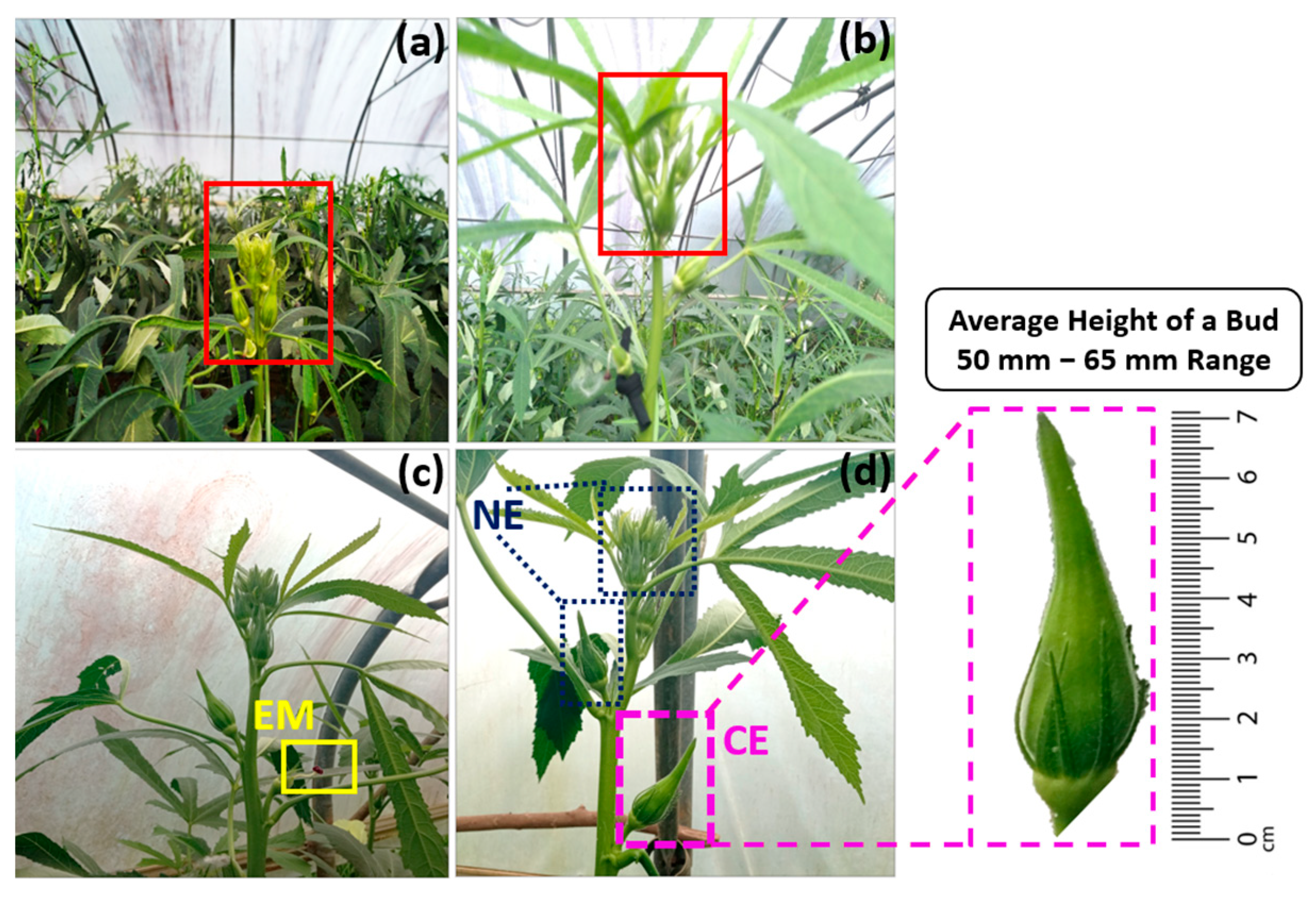

2. Materials and Methods

2.1. Acquisition of the Plant Material Images

2.2. Preprocessing and Augmentation of Data

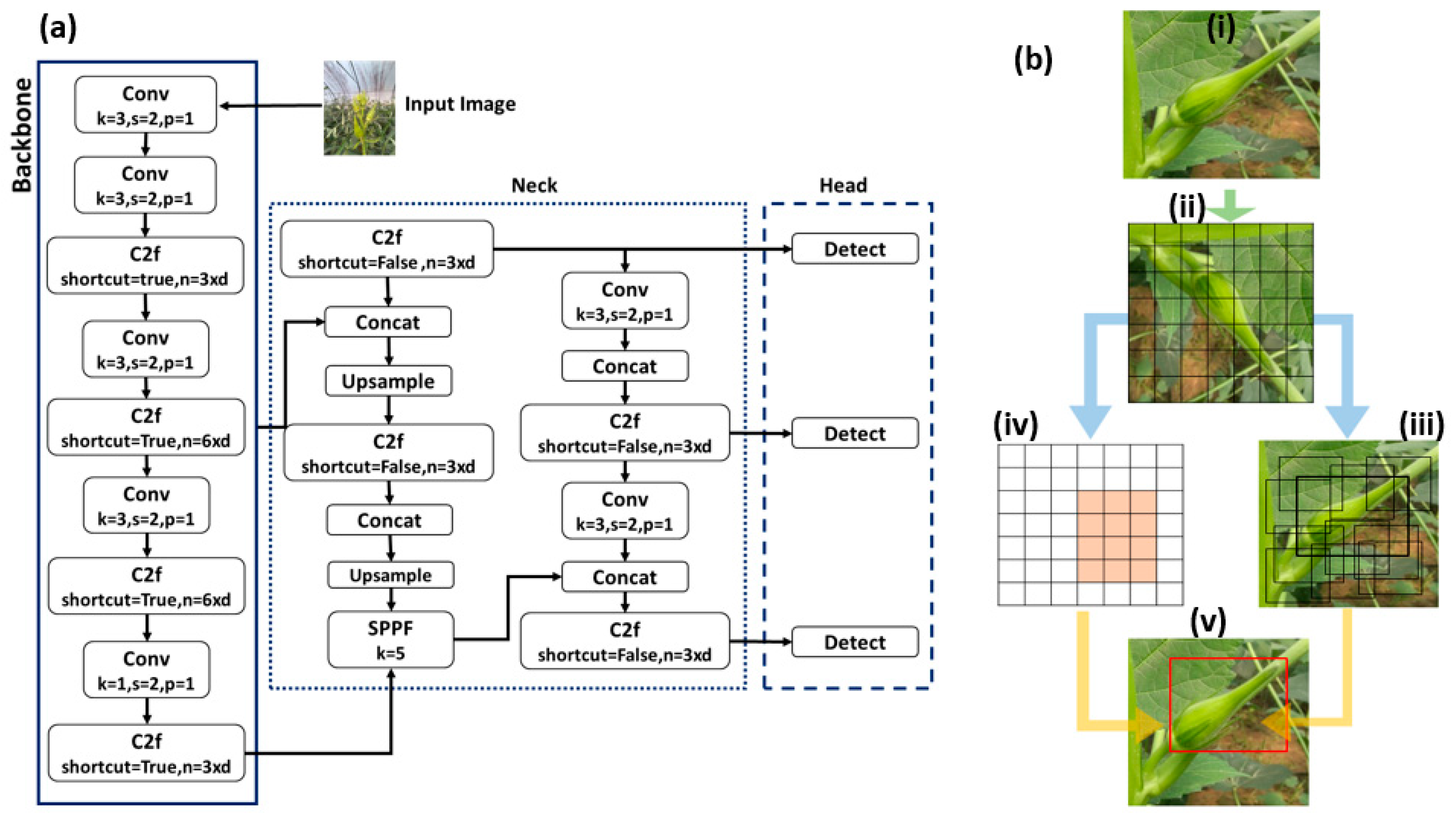

2.3. Training the Network

2.4. Training Process

2.5. Performance Evaluation

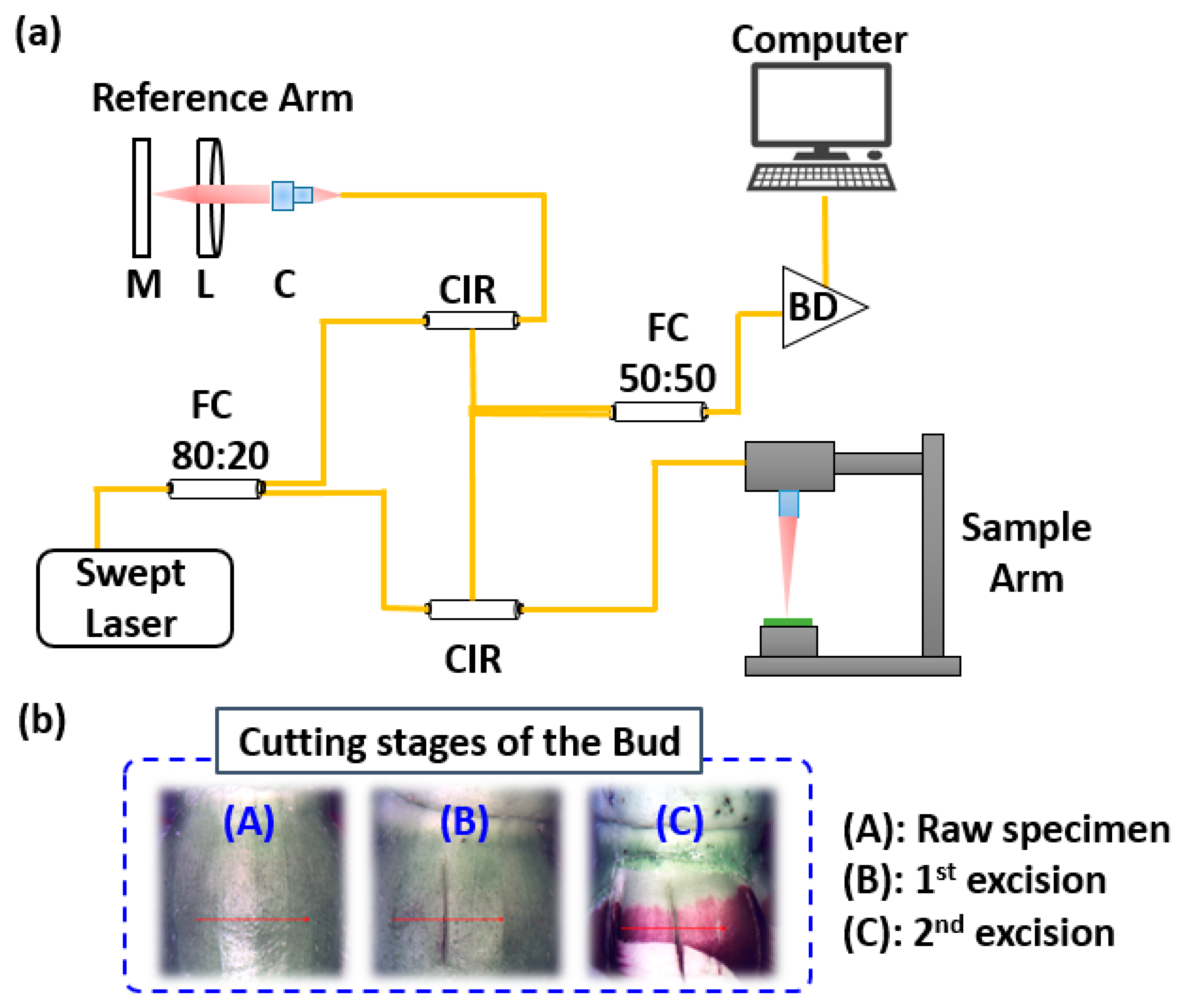

2.6. Non-Invasive Multi-Dimensional Optical Coherence Imaging

2.7. Histograms and Intensity Variations Based on Light Conditions: Gold Standard Framework Based on Digital Images

3. Results

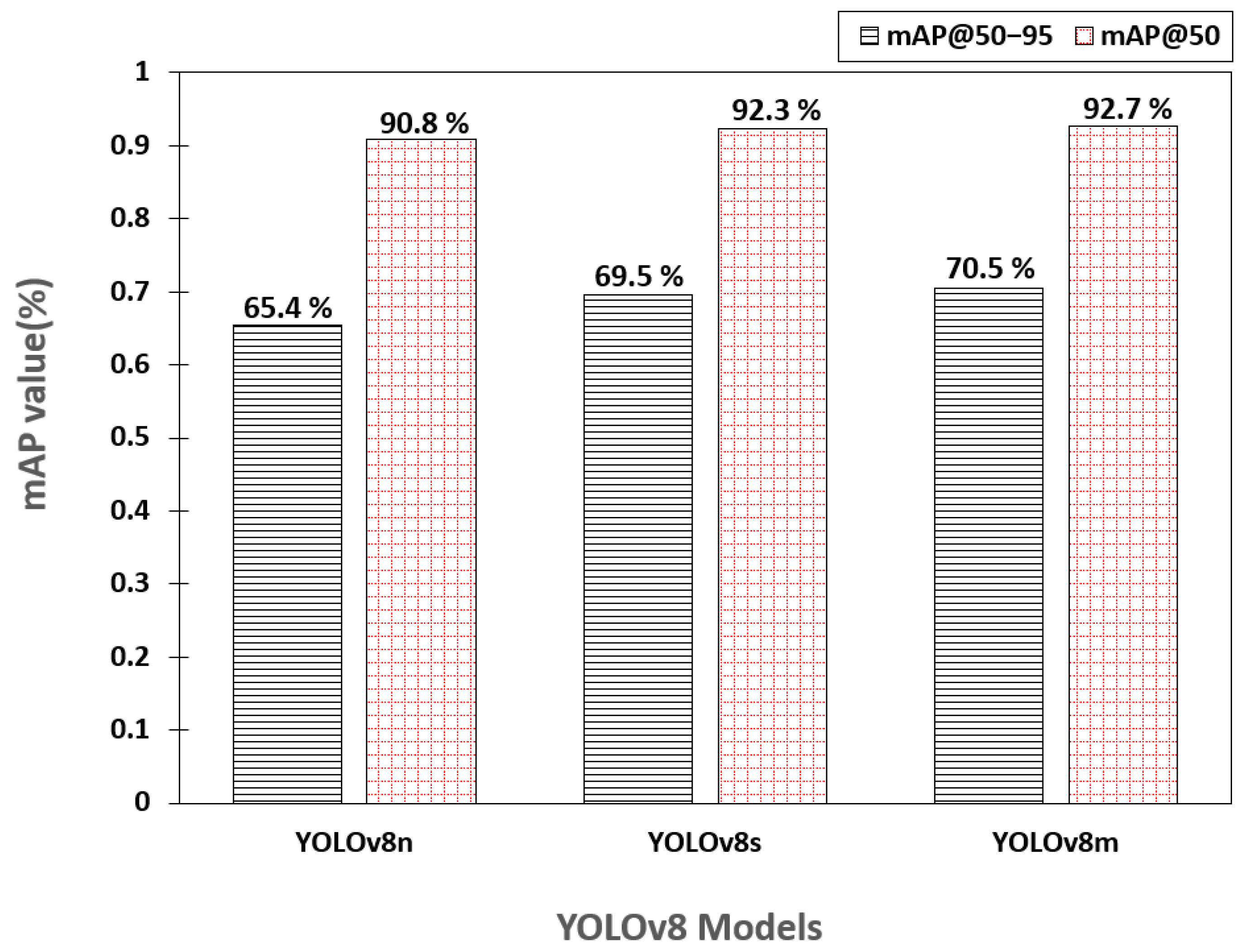

3.1. Training Evaluation of YOLOv8 Variants

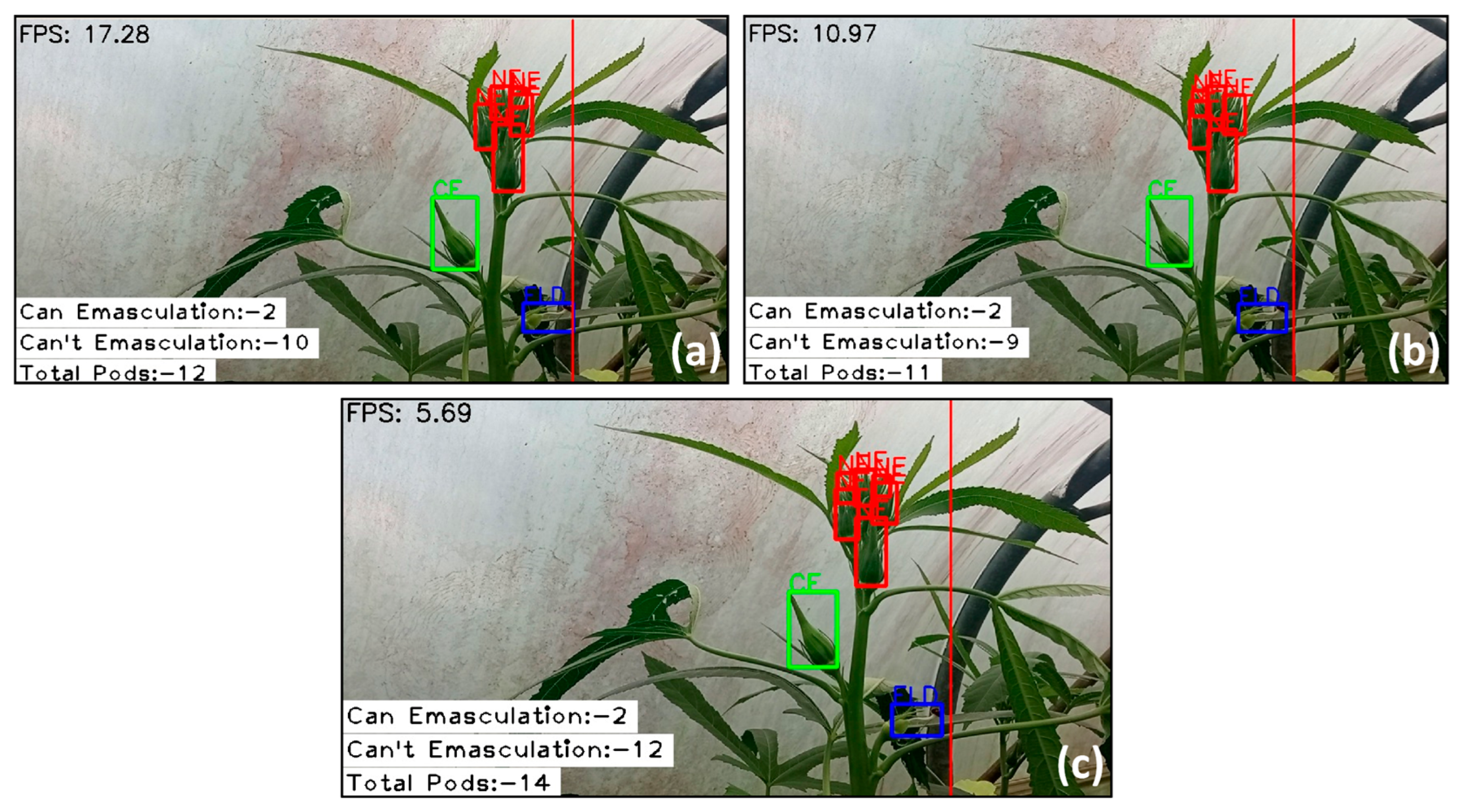

3.2. Frame Rate Comparison Across YOLOv8 Variants

3.3. Comprehensive Evaluation of the YOLOv8n Detection Model: Performance and Insights

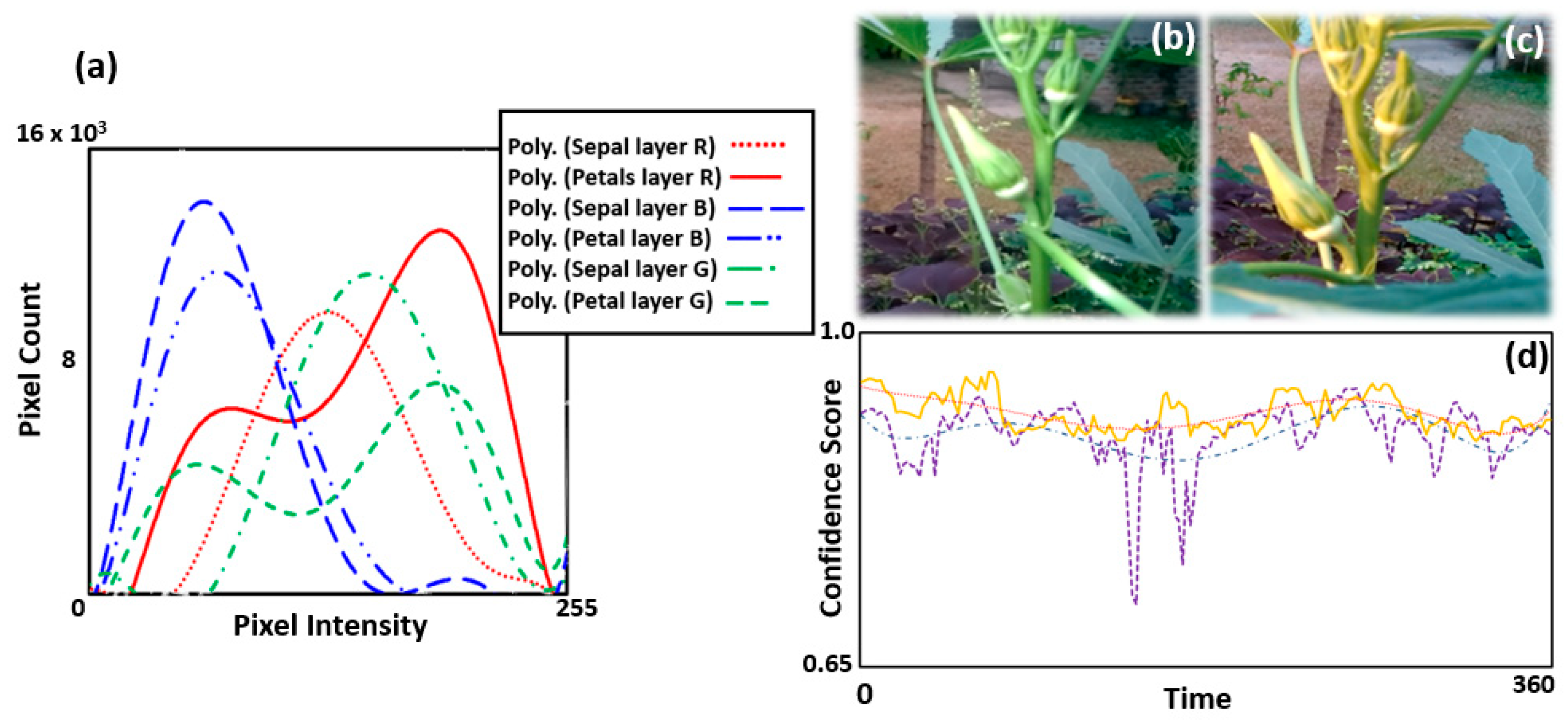

3.4. Depth Analysis of the Okra Bud Layers Using OCT Imaging

3.5. Qualitative Depth Analysis Based on 3D-Enface-OCT Representations

3.6. Correlation with YOLOv8 Prediction and the OCT Depth Analysis

3.7. Gold Standard Analysis of Histogram and Intensity Variations with Varying Light Conditions

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | Two-Dimensional |
| 3D | Three-Dimensional |
| AI | Artificial Intelligence |
| CLS | Circular Leaf Spot |
| DL | Deep Learning |
| Faster R-CNN | Faster Region-based Convolutional Neural Networks |
| FPS | Frames Per Second |
| ML | Machine Learning |
| OCT | Optical Coherence Tomography |
| SNR | Signal-to-Noise Ratio |
| SSD | Single Shot MultiBox Detector |
| SVC | Support Vector Classifier |
| YOLO | You Only Look Once |


| Confidence Scores | Excision Step | Average Depth (mm) | Standard Deviation |
|---|---|---|---|
| 78–88% | 1st excision | 0.53 | 0.15 |
| 78–88% | 2nd excision | 1.79 | 0.23 |
| 78–88% | 3rd excision | 2.16 | 0.22 |
| Total average depth | | 4.48 | |
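As a quick consistency check on the table above, the reported total average depth should equal the sum of the three per-step average depths. The following is a minimal sketch of that arithmetic; the variable names are illustrative assumptions and are not taken from the study.

```python
# Average excision depths (mm) per step, copied from the table above.
# The dictionary and variable names are hypothetical, chosen for illustration.
step_depths_mm = {
    "1st excision": 0.53,
    "2nd excision": 1.79,
    "3rd excision": 2.16,
}

# Summing the per-step averages gives the cumulative depth after all three
# excisions; rounding to two decimals matches the precision of the table.
total_depth_mm = round(sum(step_depths_mm.values()), 2)
print(f"Total average depth: {total_depth_mm:.2f} mm")
```

The result can be compared directly against the "Total average depth" row of the table.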


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tharaka, D.; Withanage, A.; Kahatapitiya, N.S.; Abhayapala, R.; Wijenayake, U.; Wijethunge, A.; Ravichandran, N.K.; Silva, B.N.; Jeon, M.; Kim, J.; et al. Optical Coherence Imaging Hybridized Deep Learning Framework for Automated Plant Bud Classification in Emasculation Processes: A Pilot Study. Photonics 2025, 12, 966. https://doi.org/10.3390/photonics12100966