Abstract

Neural Radiance Fields (NeRF) reconstruction faces significant challenges under non-ideal conditions, such as sparse viewpoints or missing camera pose information. Existing approaches frequently assume accurate camera poses and validate their effectiveness on standard datasets, which restricts their applicability in real-world scenarios. To tackle the challenge of sparse viewpoints and the inability of Structure-from-Motion (SfM) to accurately estimate camera poses, we propose a novel approach. Our method replaces SfM with the MASt3R-SfM algorithm to robustly compute camera poses and generate dense point clouds, which serve as depth–space constraints for NeRF reconstruction, mitigating geometric information loss caused by limited viewpoints. Additionally, we introduce a high-frequency annealing encoding strategy to prevent network overfitting and employ a depth loss function leveraging Pearson correlation coefficients to extract low-frequency information from images. Experimental results demonstrate that our approach achieves high-quality NeRF reconstruction under conditions of sparse viewpoints and missing camera poses while being better suited for real-world applications. Its effectiveness has been validated on the Real Forward-Facing dataset and in real-world scenarios.

1. Introduction

NeRF, a neural network-based technique, has achieved remarkable success in 3D scene reconstruction and novel view synthesis in recent years. By learning from multi-view image data, NeRF [1] can generate high-quality 3D scene representations and demonstrates significant potential in applications such as virtual reality, augmented reality, and film production. However, despite its superior performance under ideal conditions, NeRF faces numerous challenges when applied to real-world scenarios, particularly in 3D reconstruction under non-ideal conditions. Non-ideal conditions generally refer to significant deficiencies in input data, such as poor lighting, sparse viewpoints, inaccurate camera poses, incomplete image data, or noisy inputs. These issues are prevalent in practical applications and pose substantial challenges to the reconstruction accuracy and stability of NeRF [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18].

Our work focuses on two specific conditions: sparse viewpoints and inaccurate camera poses. Sparse viewpoints limit the amount of training information available, making it challenging to capture the complete geometric structure and details of the scene. This limitation often results in blurred or distorted novel view images generated by NeRF. Furthermore, inaccurate camera poses can directly impact the quality of scene reconstruction and rendering, as NeRF training relies on precise camera poses (typically image extrinsics) to correctly estimate spatial relationships within the scene. Inaccurate poses may lead to stretching, distortion, or misalignment of objects, significantly compromising the quality of reconstruction results. In real-world applications, we observe that sparse viewpoints and the absence or inaccuracy of camera poses often occur simultaneously. This phenomenon substantially increases the complexity and difficulty of 3D reconstruction. Moreover, we find that existing NeRF improvement methods fail to address this issue effectively. Current NeRF improvement methods, whether targeting sparse viewpoints or camera pose optimization, are typically based on ideal assumptions, such as dense viewpoint coverage and precise camera poses. It should be noted that these studies primarily validate their methods on standard datasets, where dozens of views are used to compute accurate camera poses with COLMAP [19], which are then stored for direct use during reconstruction. These studies seem to overlook a critical issue: when only 3–5 views are available, camera poses estimated using SfM algorithms often contain significant noise. This is primarily because traditional SfM methods rely on limited matching points under sparse input viewpoints, inevitably leading to unstable camera pose estimation and significant deviations or even failures in NeRF reconstruction.

To address these challenges, this paper proposes a novel NeRF reconstruction method suitable for sparse viewpoints and conditions without camera poses. We introduce the MASt3R-SfM [20] algorithm as a replacement for the traditional SfM pipeline, enabling robust camera pose estimation from sparse inputs and generating dense point clouds to constrain NeRF’s depth–space reconstruction and compensate for geometric information loss due to limited viewpoints. Additionally, we design a high-frequency annealing encoding strategy that progressively enhances the network’s ability to learn high-frequency information, preventing overfitting and local optima during training. Finally, we propose a depth loss function based on Pearson correlation coefficients, combined with a ray-sampling strategy in low-frequency regions to further improve the network’s learning capability under limited viewpoints. The proposed method was experimentally validated on the Real Forward-Facing dataset and real-world scenarios. Results demonstrate that this method achieves high-quality 3D reconstruction under non-ideal conditions, such as sparse viewpoints and absent camera poses, while better aligning with practical application requirements.

The main contributions of this paper are as follows:

- A novel NeRF reconstruction framework for sparse viewpoints and conditions without camera poses, effectively addressing geometric information loss and training instability in real-world scenarios.

- A high-frequency annealing encoding strategy designed to progressively enhance the network’s ability to learn high-frequency information, preventing overfitting.

- The introduction of the MASt3R-SfM algorithm for robust camera pose estimation from sparse inputs and dense point cloud generation, serving as depth–space constraints for NeRF.

- A depth loss function based on Pearson correlation coefficients, combined with a ray-sampling strategy in low-frequency regions to explore low-frequency information in limited viewpoints.

2. Related Work

To tackle the challenges posed by sparse viewpoints and inaccurate or missing camera poses, researchers have proposed various enhancements to NeRF to reduce its reliance on precise input data and camera pose information. Some of these approaches integrate self-supervised camera pose optimization, allowing NeRF to reconstruct scenes without depending on accurate pose data. BARF [21] introduces the traditional Bundle Adjustment (BA) framework into NeRF training, enabling joint optimization of camera poses and scene parameters to iteratively refine initial pose estimates. This novel joint optimization framework effectively resolves the challenges of NeRF training under conditions of unknown or inaccurate camera poses by employing dynamic frequency encoding and a coarse-to-fine optimization approach. However, the performance of this coarse-to-fine strategy is highly contingent on the complexity of the task scene and the specific parameter configurations for frequency scheduling. NeRF-- [22] (NeRF minus minus) incorporates camera poses as learnable parameters during training, addressing the scene reconstruction problem under unknown camera conditions by leveraging scene consistency constraints and optimization. However, while NeRF-- enables camera pose learning, it suffers from slow training convergence, sensitivity to initial pose estimates, performance degradation under extremely sparse inputs, and limited robustness to dynamic scenes. NoPe–NeRF [23] is a pose-free NeRF training method that integrates monocular depth priors with multi-view geometric constraints. Using an innovative joint optimization framework, it effectively addresses the challenges of scene reconstruction and novel view rendering under extensive camera motion, providing novel insights for the application of NeRF in scenarios without pose information. NoPe–NeRF typically relies on 20–30 input views to achieve high-quality novel view rendering and scene reconstruction. 
Although it excels in sparse scenarios, its performance is constrained under extremely sparse input conditions.

Overall, these advancements in NeRF reconstruction without accurate camera poses still depend on multiple input images. However, in real-world applications, if a sufficient number of images is available, traditional SfM algorithms can be employed to achieve relatively accurate camera pose estimations. Consequently, NeRF-based approaches that do not require camera poses lose their practical value under such circumstances. In practical applications, we often have access to only a limited number of images, making it challenging for traditional SfM algorithms to accurately estimate camera poses. This necessitates the development of algorithms capable of accurately estimating camera positions and performing precise 3D reconstruction and novel view synthesis under sparse-view conditions.

Several recent advancements in NeRF-based methods have addressed some of these challenges under sparse input settings. SparseNeRF [24] introduces a new approach that extracts depth ordering and continuity priors from coarse depth maps, effectively mitigating reconstruction degradation under sparse viewpoints. However, this method has limited capacity for handling unobserved occluded regions, and the decline in the quality of coarse depth maps may affect the model’s reconstruction performance. RegNeRF [25] enhances NeRF’s interpolation capability for unobserved regions under sparse viewpoints by introducing several regularization techniques, such as view consistency and spatial consistency, thus reducing the reliance on complete viewpoint coverage. However, this method has limited ability to complete details in unobserved regions, which may result in blurry predictions. FreeNeRF [26] provides a simple baseline that requires no complex modifications, significantly improving NeRF performance under sparse viewpoints with just two lines of code changes: frequency regularization and occlusion regularization. It offers novel insights for future neural rendering research, particularly regarding the crucial role of frequency control in low-data environments. IBRNet [27] introduces a general and efficient multi-view rendering framework that combines dynamic feature extraction of IBR with NeRF’s volumetric rendering to enable novel view generation for complex scenes. This method does not require scene-specific optimization and exhibits strong generalization ability. However, when input views are extremely sparse, rendering quality may degrade, particularly in complex geometric scenes. DS-NeRF [28] introduces a depth supervision loss to enhance NeRF’s geometric reconstruction ability under sparse viewpoints. By utilizing sparse point clouds generated by SfM at no additional cost, it significantly improves both reconstruction quality and training efficiency. 
DDP-NeRF [29] significantly enhances NeRF’s geometric reconstruction and rendering quality under sparse viewpoints by leveraging dense depth priors and uncertainty estimation. However, this method relies on sparse point clouds generated by SfM, and the quality of the input depth may affect the completion performance when the depth is inaccurate. Moreover, depth completion methods may need to be extended for dynamic or non-rigid scenes. DietNeRF [30] significantly enhances viewpoint synthesis performance under sparse viewpoints by incorporating semantic consistency supervision. It utilizes CLIP’s [31] powerful visual encoding capabilities to achieve reasonable completion of unobserved regions while preserving geometric consistency, marking a significant breakthrough for sparse-viewpoint NeRF applications. However, while the semantic consistency loss converges quickly under sparse viewpoints, it struggles to capture high-frequency texture details. Furthermore, the performance of this method depends on the quality of the pre-trained visual encoder, which may limit its effectiveness in small or specific scenes. PixelNeRF [32] achieves high-quality novel view synthesis under sparse input conditions by leveraging image features and learning scene priors, offering significant advantages in reducing training time and enhancing generalization ability. However, this method may face challenges in maintaining geometric and appearance consistency in scenes with complex backgrounds or significant occlusions. MVSNeRF [33] innovatively combines multi-view stereo geometry reasoning and neural radiance field reconstruction, significantly enhancing reconstruction quality and generalization ability under sparse viewpoints, providing a practical solution for efficient novel view synthesis. The aforementioned studies have optimized and improved the NeRF algorithm under sparse input conditions. 
However, it is important to acknowledge a critical reality: in existing research exploring the application of NeRF under conditions of no camera pose and sparse viewpoints, notable limitations still persist. On one hand, no-pose NeRF methods rely on a large number of input views to compensate for the lack of camera pose information, but capturing such a large number of images in real-world scenarios is often impractical. On the other hand, sparse-view NeRF methods achieve good reconstruction results with fewer input views, but they typically assume accurate camera poses, which are often challenging to satisfy in real-world applications, particularly in scenarios with limited viewpoints or low image quality.

To address these challenges, this paper proposes a new method designed to tackle both no-pose and sparse-view conditions simultaneously. Our method enables high-quality novel view synthesis and geometric reconstruction with only a few input views and without the need for accurate camera poses. We validate the method on the Real Forward-Facing dataset and on real-world captured scenes, showcasing its robustness and generalization ability under sparse-view and no-pose conditions.

3. Method

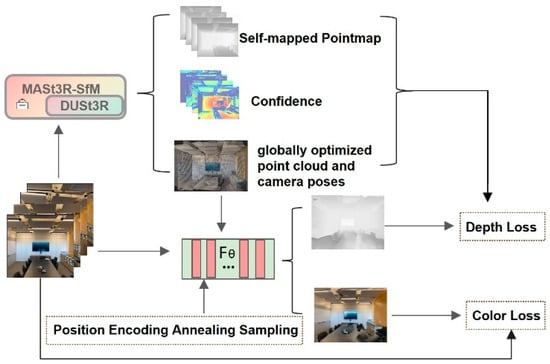

This section provides a detailed explanation of the proposed sparse-view NeRF implementation method. As illustrated in Figure 1, the method consists of three main components.

Figure 1.

Overall Workflow Diagram of SparsePose–NeRF. Our method first utilizes the MASt3R-SfM frontend to robustly estimate camera poses from sparse input views while simultaneously generating an initial self-mapped point map and a final globally optimized point cloud. This geometric prior information is used to construct a two-stage depth loss. Concurrently, the NeRF network (FΘ) is trained using a position encoding annealing sampling strategy. Finally, the network is optimized by combining the depth loss and the traditional color loss to achieve high-quality reconstruction.

- Pose Estimation and Map Generation

The first component involves pose estimation based on the MASt3R-SfM algorithm and the generation of auxiliary maps, including an initial point cloud map, a normalized point map, and a confidence map.

- High-Frequency Annealing Encoding Strategy

The second component introduces a high-frequency annealing strategy for positional encoding in the NeRF network, ensuring that high-frequency overfitting does not occur during the early stages of training.

- Depth-Supervised Training Regularization

The third component focuses on regularizing the NeRF network training process to improve 3D reconstruction and novel view synthesis under sparse input conditions. To achieve this, two depth-related loss functions—denoted as LU and LM—are proposed.

3.1. The Principles and Characteristics of MASt3R-SfM and Its Role Within the Network

The MASt3R-SfM [20] network is essentially an extension of the MASt3R network to a downstream task. It leverages the encoder and decoder of the pre-trained MASt3R [34] model to construct a more robust SfM algorithm. MASt3R is an enhanced version of the DUSt3R [35] network, better suited for high-precision matching and relative pose estimation tasks. DUSt3R focuses primarily on 3D reconstruction, simultaneously predicting point maps and camera calibration parameters. Specifically, it achieves 3D reconstruction by predicting dense 3D point maps, with matching relationships as byproducts. This approach relies on point regression and is susceptible to noise, particularly in low-texture regions or under large baseline variations, leading to reduced matching accuracy. MASt3R instead introduces a matching head that directly learns local feature descriptors rather than relying solely on 3D point map regression. This enhances feature matching robustness, especially in scenarios with significant viewpoint changes or repetitive patterns. Building on this matcher, MASt3R-SfM outperforms existing methods across multiple datasets and metrics, particularly in sparse scenes and unordered image collections.

Traditional SfM methods rely on keypoint matching to construct point tracks across multiple images. However, MASt3R is inherently a pairwise image matcher. If it were applied to every image pair, the complexity would grow quadratically with the number of images N. To address this issue, a minimal yet sufficient subset of image pairs is constructed and input into MASt3R, where the subset structure forms a scene graph G. The graph G = (V,E) consists of vertices I ∈ V (representing images) and edges e = (n,m) ∈ E (representing undirected connections between potentially overlapping images In and Im). This graph ensures all images are connected directly or indirectly. To construct an appropriate connected graph, MASt3R’s encoder is used to predict the co-visibility score s ∈ (0,1) between two images, In and Im. The detailed construction process is described in the MASt3R-SfM paper [20].

During runtime, the algorithm applies the MASt3R inference process to each edge e, generating the corresponding point maps and sparse pixel matches Mnm. In other words, this step is a forward inference pass of MASt3R, where the input is an image pair (In, Im) and the output is the sparse feature matching Mnm between the two images. The pass also yields the point maps Xnn, Xnm, Xmn, and Xmm, where Xnn and Xmm are self-mapped point maps (i.e., 3D point maps generated within the coordinate system of a single image). These self-mapped point maps encode the 3D coordinates of pixels derived solely from intra-image information, independent of inter-image geometric relationships. The next step involves constructing canonical point maps through global fusion of estimated 3D scene points across images. This fusion reduces viewpoint discrepancies and registration errors while ensuring global consistency, ultimately achieving a more accurate scene representation via weighted averaging. The canonical point map formulation is defined as follows:

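$$\tilde{X}^{n}_{i,j} = \frac{\sum_{e \in \mathcal{E}^{n}} C^{n,e}_{i,j}\, X^{n,e}_{i,j}}{\sum_{e \in \mathcal{E}^{n}} C^{n,e}_{i,j}}$$

where

- $\tilde{X}^{n}_{i,j}$ is the canonical 3D point for pixel (i, j) of image In;

- $\mathcal{E}^{n}$ is the set of all edges in the scene graph connected to image In;

- $X^{n,e}_{i,j}$ is the estimated 3D point for pixel (i, j) of image In derived from the image pair associated with edge e;

- $C^{n,e}_{i,j}$ is the corresponding confidence value for that 3D point estimation.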
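The confidence-weighted fusion of per-edge estimates can be illustrated with a short NumPy sketch. The function name `canonical_pointmap`, the array layout, and the `eps` safeguard are assumptions made for illustration and are not taken from the MASt3R-SfM code.

```python
import numpy as np

def canonical_pointmap(pointmaps, confidences, eps=1e-8):
    """Confidence-weighted average of the point maps predicted for one image.

    pointmaps:   (E, H, W, 3) one 3D estimate per edge touching the image
    confidences: (E, H, W)    positive confidence of each estimate
    eps:         hypothetical guard against division by zero
    """
    pointmaps = np.asarray(pointmaps, dtype=np.float64)
    weights = np.asarray(confidences, dtype=np.float64)[..., None]  # (E, H, W, 1)
    weighted_sum = (weights * pointmaps).sum(axis=0)  # numerator, summed over edges
    total_weight = weights.sum(axis=0)                # denominator, summed over edges
    return weighted_sum / np.maximum(total_weight, eps)
```

Estimates with higher confidence pull the fused point towards themselves, so a single unreliable pairwise prediction has limited influence on the result.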

For each pixel (i, j), the estimated values are averaged using confidence-based weighting. Through this formulation, a canonical point map is generated, integrating information from all associated image pairs. Additionally, the constrained point map is constructed based on the canonical point maps. The constrained point map rigorously enforces alignment of estimated 3D points (i.e., points generated from images) with a pinhole projection model. This constraint ensures that the point cloud adheres to the camera’s geometric characteristics, thereby establishing a foundation for subsequent optimization. The constrained point map at pixel (i, j) is defined as follows:

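$$\chi^{n}_{i,j} = \frac{1}{\sigma_{n}}\, P_{n}^{-1} K_{n}^{-1} Z^{n}_{i,j}\, p_{i,j}, \qquad p_{i,j} = [i, j, 1]^{\top}$$

where

- $\chi^{n}_{i,j}$ is the constrained 3D point in the world coordinate system for pixel (i, j);

- $K_{n}$ is the camera intrinsic matrix for image In;

- $P_{n}$ is the camera pose of image In (a world-to-camera transform, so $P_{n}^{-1}$ maps camera coordinates into the world coordinate system);

- $Z^{n}_{i,j}$ is the depth value at pixel (i, j);

- $\sigma_{n}$ is a per-camera scale factor;

- $p_{i,j}$ is the homogeneous coordinate of the pixel.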
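A minimal sketch of pinhole back-projection may help make the constraint concrete. It assumes a depth map measured along the optical axis, pixel coordinates ordered as (column, row, 1), and an optional camera-to-world matrix; the function name `backproject_depth` and its arguments are hypothetical.

```python
import numpy as np

def backproject_depth(depth, K, cam_to_world=None, scale=1.0):
    """Lift a depth map to 3D points with the pinhole model.

    depth:        (H, W) depth along the optical axis
    K:            (3, 3) camera intrinsic matrix
    cam_to_world: optional (4, 4) camera-to-world transform (assumption)
    scale:        per-camera scale factor; points are divided by it
    """
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))  # u = column, v = row
    pixels_h = np.stack([u, v, np.ones_like(u)], axis=-1).astype(np.float64)
    rays = pixels_h @ np.linalg.inv(K).T            # K^{-1} [u, v, 1]^T per pixel
    points = rays * depth[..., None]                # scale each ray by its depth
    if cam_to_world is not None:
        R, t = cam_to_world[:3, :3], cam_to_world[:3, 3]
        points = points @ R.T + t                   # camera frame -> world frame
    return points / scale
```

Because every point is generated from a depth value and the camera parameters, projecting it back through the same camera returns the original pixel by construction.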

This equation ensures that the 3D points in the point cloud strictly conform to the pinhole projection model. The optimization objective is the minimization of the reprojection error. After normalization, the next step involves performing coarse alignment, which primarily aims to globally optimize and align the initial 3D point cloud to a unified world coordinate system. The optimization objective of coarse alignment is to minimize the loss function as follows:

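$$\mathcal{L} = \sum_{(n,m) \in E}\ \sum_{c \in M^{n,m}} q_{c} \left[ \rho\!\left(y^{n}_{c} - \pi_{n}(\chi^{m}_{c})\right) + \rho\!\left(y^{m}_{c} - \pi_{m}(\chi^{n}_{c})\right) \right]$$

where

- $q_{c}$ is the confidence of a given match c;

- $y^{n}_{c}$ and $y^{m}_{c}$ are the 2D pixel coordinates of the match in images In and Im, respectively;

- $\chi^{n}_{c}$ and $\chi^{m}_{c}$ are the corresponding 3D points;

- $\pi_{n}$ is the projection function for camera n;

- ρ is a robust error function.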
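The role of the match confidence, the projection function, and the robust error can be sketched as follows. This is an illustrative reading of a symmetric, confidence-weighted reprojection error; the helper names, the world-to-camera pose convention, and the choice of an unsquared Euclidean norm for ρ are assumptions.

```python
import numpy as np

def make_pinhole_projection(K, world_to_cam):
    """Return a function projecting (N, 3) world points to (N, 2) pixels."""
    R, t = world_to_cam[:3, :3], world_to_cam[:3, 3]

    def project(points_world):
        points_cam = points_world @ R.T + t   # world frame -> camera frame
        uvw = points_cam @ K.T                # apply intrinsics
        return uvw[:, :2] / uvw[:, 2:3]       # perspective divide
    return project

def l2_norm(residuals):
    """Example robust error: unsquared Euclidean norm of each 2D residual."""
    return np.linalg.norm(residuals, axis=-1)

def symmetric_reprojection_loss(y_n, y_m, chi_n, chi_m,
                                project_n, project_m, q, rho=l2_norm):
    """Confidence-weighted reprojection error over the matches of one edge."""
    res_n = y_n - project_n(chi_m)  # 3D points from image m seen by camera n
    res_m = y_m - project_m(chi_n)  # 3D points from image n seen by camera m
    return float(np.sum(q * (rho(res_n) + rho(res_m))))
```

Summing this quantity over all edges of the scene graph gives a global objective in which low-confidence matches contribute proportionally less.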

The above provides a concise summary of the MASt3R-SfM network’s workflow and core optimization steps. Subsequently, our framework utilizes the self-mapped point clouds generated by the network, combined with globally optimized point clouds and camera poses, to achieve sparse-view NeRF reconstruction.

3.2. The Implementation Approach of Our Network and the Regularization Optimization Process

In NeRF, positional encoding is a crucial operation that maps the input 3D coordinates into a high-dimensional space, enabling the network to better capture high-frequency details. Conventional fully connected neural networks inherently prioritize low-frequency signal modeling, whereas NeRF explicitly targets high-frequency detail reconstruction (e.g., edges and textures) in 3D scenes. Through positional encoding, the input coordinates are transformed into multi-frequency signal representations, thereby facilitating MLPs’ learning of high-frequency content. The positional encoding process utilizes a series of sine and cosine functions to project the input signals. For a 3D input point x = (x, y, z), the positional encoding γ(x) is defined as follows:

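$$\gamma(\mathbf{x}) = \left( \sin(2^{0}\pi \mathbf{x}),\ \cos(2^{0}\pi \mathbf{x}),\ \ldots,\ \sin(2^{L-1}\pi \mathbf{x}),\ \cos(2^{L-1}\pi \mathbf{x}) \right)$$

where

- $\gamma(\mathbf{x})$ is the positional encoding function for an input coordinate x;

- $\mathbf{x}$ is a 3D input point (x, y, z);

- L is the number of frequency levels, controlling the amount of high-frequency components.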
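For reference, the sine and cosine projection can be written in a few lines of NumPy. The sketch below follows the standard NeRF encoding with frequencies 2^k π; the function name and the channel ordering (all sines of a level, then all cosines) are choices made here for illustration.

```python
import numpy as np

def positional_encoding(x, num_freqs):
    """Encode (..., 3) coordinates into (..., 6 * num_freqs) features.

    For each frequency level k = 0 .. num_freqs - 1 the output holds
    sin(2^k * pi * x) followed by cos(2^k * pi * x) for all three axes.
    """
    x = np.asarray(x, dtype=np.float64)
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi     # (L,)
    angles = x[..., None, :] * freqs[:, None]         # (..., L, 3)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)  # (..., L, 6)
    return enc.reshape(*x.shape[:-1], -1)
```

With L = 10, a single 3D point is mapped to 60 features, and each additional level doubles the highest spatial frequency the MLP can represent directly.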

Under sparse-view conditions, the network focuses excessively on high-frequency information (e.g., surface textures or local geometric variations), causing the MLP to overfit these details. Due to insufficient low-frequency sampling, the scene’s global geometry may become distorted, compromising the network’s ability to model object shapes and structural coherence. This limitation further manifests as artifacts in lighting and material properties: smooth lighting transitions and material consistency are poorly reconstructed, often resulting in localized brightness spikes or color discontinuities. The root cause lies in inappropriate extrapolation of high-frequency patterns to unobserved regions, which induces spurious textures in empty space. Consequently, reconstructed surfaces exhibit unnatural complexity while lacking low-frequency smoothness. To address these challenges, we introduce a frequency-annealed positional encoding strategy during NeRF training, defined as follows:

where

- Lmax is the maximum frequency level available to the network at the current training stage;

- L is the total number of frequency levels in the encoding;

- t is the current training step;

- T is the total number of training steps per epoch.
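To give a feel for how such an annealing schedule behaves, the sketch below uses a simple linear ramp of the visible frequency levels, similar in spirit to the frequency regularization of FreeNeRF. The linear form, the soft transition for the level currently being unlocked, and both function names are assumptions for illustration and may differ from the schedule used in this work.

```python
import numpy as np

def visible_frequency_levels(step, total_steps, num_freqs):
    """Assumed linear schedule: (fractional) number of unlocked levels."""
    progress = np.clip(step / total_steps, 0.0, 1.0)
    return progress * num_freqs

def frequency_mask(step, total_steps, num_freqs):
    """Per-level weights in [0, 1]; level k is fully active once L_max >= k + 1."""
    l_max = visible_frequency_levels(step, total_steps, num_freqs)
    levels = np.arange(num_freqs)
    return np.clip(l_max - levels, 0.0, 1.0)
```

Each mask entry would multiply all sine and cosine channels of its frequency level, so the MLP initially sees only the low-frequency part of the encoding and gains access to finer levels as training proceeds.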

Frequency encoding plays a crucial role in determining the performance of Neural Radiance Fields during training. In the formula, Lmax denotes the maximum frequency level accessible to the network at training step t, out of L levels in total, and T denotes the total number of training steps per epoch. In most NeRF networks, L is typically set to at most 10. Under this setting, it is generally assumed that when Lmax ≥ 6, the network begins to capture high-frequency information, which is essential for reconstructing fine details in the scene. The annealing schedule gradually activates the network’s ability to sample high-frequency information as training reaches its halfway point. Each step t corresponds to one update of the network’s weights, in which gradients are computed on a batch of data and the parameters are updated accordingly. This approach forces the network to focus on the limited low-frequency information during the early stages of training, compensating for the deficiencies caused by sparse viewpoints.

However, merely limiting the network’s capacity to sample high-frequency information does not address the fact that the available low-frequency information remains limited, which makes it difficult for the network to learn additional low-frequency features. Therefore, beyond sampling and learning low-frequency information in the color domain, we aim to enable the network to acquire low-frequency information about the scene in the depth domain. During the initial stages of network training, we introduce depth supervision by comparing the depth differences between adjacent pixels within predefined patches. This approach measures the depth loss at patch-level granularity, rather than directly comparing depth values at the pixel level. There are two primary reasons for this approach:

- It injects additional low-frequency geometric priors to constrain training and mitigate sparse-view limitations.

- Pixel-level depth comparisons introduce high-frequency noise from estimation errors, destabilizing early training phases.

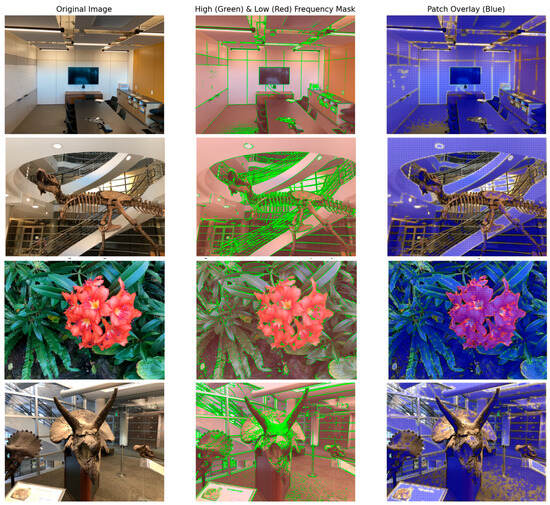

In practice, the Sobel operator computes horizontal (Gx) and vertical (Gy) gradients, which are combined to obtain the gradient magnitude Gmag for each pixel. Figure 2 illustrates how high- and low-frequency information in images is processed. We use distinct colors to differentiate high-frequency and low-frequency regions in the results: regions where the gradient falls below the defined threshold are designated as low-frequency zones, indicated in light red, whereas regions with gradients exceeding the threshold are labeled as high-frequency zones, depicted in dark green. In the early stages of network training, the annealing strategy for NeRF positional encoding limits the network’s ability to learn high-frequency features. As a result, during the initial phase of training, avoiding pixels in high-frequency regions when sampling rays is both resource-efficient and beneficial for faster network convergence. Our approach continuously samples multiple patches from each low-frequency region, with each patch sized at 32 × 32 pixels (1024 pixels per patch). To ensure comprehensive coverage, we maintain a 30% overlap between sampled regions. If a given region contains fewer than 1024 pixels in total, it is skipped entirely. Unlike the Vanilla NeRF strategy, which randomly shuffles all training-set pixels and draws rays from them at random, our method ensures during the early stages of training that all pixels of a sampled patch are contained within the same batch.

Figure 2.

In the early stages of network training, we employed the Sobel operator to compute horizontal and vertical gradients, and the gradients from both directions were synthesized to obtain the gradient magnitude of each pixel. To visually illustrate this processing, we applied color-coded annotations to highlight high-frequency and low-frequency regions in the results. Specifically, regions with gradients below a predefined threshold were marked as low-frequency areas (light red), while regions with gradients exceeding the threshold were identified as high-frequency areas (dark green). Our sampling strategy involved continuously extracting multiple patches from each low-frequency region, with each patch sized 32 × 32 pixels (containing 1024 pixels). Additionally, we ensured 30% overlap between sampled regions. If the total number of pixels in a given region was fewer than 1024, that region was excluded from the sampling process.
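The low-frequency patch selection can be approximated with a short NumPy sketch. It is a simplification: instead of tracing each low-frequency region, it slides a 32 × 32 window with roughly 30% overlap and keeps windows whose pixels all fall below the gradient threshold. The function names, the edge padding, and the `min_low_fraction` parameter are assumptions for illustration.

```python
import numpy as np

def sobel_gradient_magnitude(gray):
    """Gradient magnitude from 3x3 Sobel kernels, using edge padding."""
    g = np.pad(np.asarray(gray, dtype=np.float64), 1, mode="edge")
    # Horizontal gradient Gx: right column minus left column, weights 1-2-1.
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]) \
       - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2])
    # Vertical gradient Gy: bottom row minus top row, weights 1-2-1.
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]) \
       - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:])
    return np.hypot(gx, gy)

def low_frequency_patches(gray, threshold, patch=32, overlap=0.3,
                          min_low_fraction=1.0):
    """Top-left corners of patches lying in low-gradient areas."""
    low = sobel_gradient_magnitude(gray) < threshold
    stride = max(1, int(round(patch * (1.0 - overlap))))
    height, width = low.shape
    corners = []
    for top in range(0, height - patch + 1, stride):
        for left in range(0, width - patch + 1, stride):
            window = low[top:top + patch, left:left + patch]
            if window.mean() >= min_low_fraction:
                corners.append((top, left))
    return corners
```

Images smaller than one patch yield no corners, which mirrors the rule that areas with fewer than 1024 pixels are skipped.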

The purpose of this approach is to compare the depth map rendered by NeRF during training with the initial depth map derived from the self-mapped point cloud of each image and to construct a Pearson correlation coefficient loss. The rationale for utilizing the initial depth map instead of the depth information from the final normalized point cloud lies primarily in the pairwise-image depth estimation properties: these maps reflect matching relationships between image pairs and exhibit feature-aligned consistency. In contrast, the normalized point cloud represents a weighted average of multiple estimations, smoothing out depth details. The initial depth map provides globally coherent priors that facilitate low-frequency acquisition in NeRF-based 3D reconstruction. However, its scale ambiguity precludes similarity evaluation via L2 loss with mean-based comparisons. To address this, we employ the Pearson correlation coefficient to measure patch-wise depth similarity between NeRF-rendered values and MASt3R-SfM self-mapped depths. During early training stages, we extend the Vanilla NeRF framework by augmenting its color–space loss LC with a depth–space Pearson loss LU.

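$$L_{C} = \frac{1}{N} \sum_{i=1}^{N} \left\lVert \hat{C}_{i} - C_{i} \right\rVert_{2}^{2}$$

$$L_{U} = \frac{1}{N} \sum_{i=1}^{N} \left( 1 - \mathrm{PCC}\!\left(d_{N}^{i}, d_{M}^{i}\right) \right)$$

$$\mathrm{PCC}(a, b) = \frac{E(ab) - E(a)E(b)}{\sqrt{E(a^{2}) - E(a)^{2}}\ \sqrt{E(b^{2}) - E(b)^{2}}}$$

where

- $L_{C}$ is the color–space loss;

- $L_{U}$ is the depth–space Pearson loss;

- PCC is the Pearson correlation coefficient function;

- $\hat{C}_{i}$ is the predicted color for sample i and $C_{i}$ is the ground truth color;

- $d_{N}^{i}$ is the NeRF-rendered depth map for a patch;

- $d_{M}^{i}$ is the corresponding depth map from the MASt3R-SfM self-mapped point cloud;

- E(⋅) denotes the expectation (mean) operator.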
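A patch-wise Pearson depth loss of this kind can be sketched as below. The sketch assumes the loss is one minus the correlation, averaged over patches; the function names and the small `eps` stabilizer are illustrative additions.

```python
import numpy as np

def pearson_corr(a, b, eps=1e-8):
    """Pearson correlation of two depth patches, written with mean operators."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    cov = np.mean(a * b) - np.mean(a) * np.mean(b)
    var_a = max(np.mean(a ** 2) - np.mean(a) ** 2, 0.0)
    var_b = max(np.mean(b ** 2) - np.mean(b) ** 2, 0.0)
    return cov / (np.sqrt(var_a * var_b) + eps)  # eps avoids division by zero

def pearson_depth_loss(rendered_patches, prior_patches):
    """Mean of (1 - PCC) over pairs of rendered and prior depth patches."""
    losses = [1.0 - pearson_corr(r, p)
              for r, p in zip(rendered_patches, prior_patches)]
    return float(np.mean(losses))
```

Because the correlation is invariant to a positive scale and an offset, a rendered patch equal to `3 * prior + 5` gives a loss near zero, which is why this loss tolerates the scale ambiguity of the self-mapped depth.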

In this loss function, N represents the total number of samples used for the calculation, and it denotes the Pearson correlation coefficient between dN and dM within the i-th patch. By minimizing this function, the correlation between the predicted depth dN and the target depth dM is pushed closer to 1, ensuring that both exhibit similar variation trends. Since the calculation of the Pearson correlation coefficient (PCC) is inherently normalized, this loss function is insensitive to global scale changes in depth values. By computing PCC within a patch, the function captures local correlations, which helps address issues arising from non-uniform depth variations across the scene. By maximizing local correlation, the method ensures correct depth variation trends within the scene, leveraging trend consistency to optimize the network in low-texture or low-confidence regions. As the number of training steps increases and reaches half of the total training steps, the annealing function gradually releases the network’s capacity for high-frequency sampling. At this stage, the network begins to focus on high-frequency details in the scene. We introduced two significant changes to the network during this phase: First, we modified the ray sampling strategy within each batch. Initially, sampling was patch-based, where patches were randomly selected from each viewpoint. However, this approach contributed limitedly to the model’s understanding of the global geometry of the scene. To address this, we adopted a random view sampling with rays strategy in the second half of the training. This adjustment improved the network’s global geometric comprehension of the scene and prevented over-reliance on data from specific viewpoints. However, consistently using this sampling strategy might slow down the learning progress for details in certain viewpoints. 
To mitigate this, we incorporated importance sampling in regions of interest to increase sampling density in high-frequency areas of the scene, facilitating the capture of textures and edge details. Additionally, we introduced hybrid sampling, where a portion of rays were sampled uniformly across the entire scene, while others were concentrated in high-gradient regions, edges, or other important areas. By dynamically alternating among these three sampling strategies as training progressed, we significantly enhanced the training performance of NeRF while reducing unnecessary computational overhead. The second improvement involves an adjustment to the depth constraint by incorporating global-scale depth information. This constraint enables NeRF to align depth values learned from different viewpoints, effectively reducing errors and enhancing overall reconstruction capability. Previously, depth loss calculations were performed on individual depth maps without standardized scales. While MASt3R-SfM provided an initial global alignment through its self-mapped point cloud outputs in earlier stages, the precision was limited. In later stages, MASt3R-SfM further refined the alignment by conducting global fine optimization on top of coarse alignment, significantly improving the accuracy of camera poses and scene geometry, resulting in normalized point cloud information. To ensure alignment between NeRF’s rendering results and the final normalized point cloud in terms of scale for loss computation, we adopted the following approach: At the start of network training, the sampling distances for near and far were carefully set. This allowed NeRF’s depth information to align with the scale of the point cloud by matching the nearest and farthest points, thereby naturally aligning the scale of NeRF with the optimized point cloud output by MASt3R-SfM.

where

- near and far are the near and far rendering bounds for NeRF;

- the point cloud depth term represents the depth values of the globally optimized point cloud from MASt3R-SfM.

Here, ξ represents a small redundancy value introduced to prevent insufficient depth range caused by errors or occlusions. Subsequently, in the aligned depth–space, the difference between the rendered depth dN,j along each ray and the corresponding point cloud back-projected depth dP,j is computed to formulate the loss function, which is defined by the following equation:
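Read together with the symbol list below and the squared-confidence denominator discussed afterwards, the loss can plausibly be written as follows. The squared residual in the numerator and the symbol $c_j$ for the per-point confidence value are assumptions made for illustration, not notation confirmed by the text.

```latex
L_M = \frac{1}{N} \sum_{j=1}^{N} \frac{\left( d_{N,j} - d_{P,j} \right)^{2}}{c_j^{2}}
```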

where

- LM is the global-scale depth loss function;

- N is the number of sampled rays in a batch;

- dN,j is the depth rendered by NeRF along ray j;

- dP,j is the corresponding depth from the back-projected globally optimized point cloud.

In the formula, the value in the denominator represents the square of the confidence level of the corresponding point cloud. This value reflects the uncertainty in the depth prediction of the pixel. Based on this uncertainty, a normalization operation is applied to the depth difference. This implies that when the predicted depth value exhibits high uncertainty, the influence of the error term is attenuated. Conversely, when the prediction uncertainty is low, the model imposes stricter penalties on large prediction errors. This design allows the model to relax error constraints in regions where predictions are inherently challenging. Finally, the total loss function for the NeRF network is defined as follows:

where

- the left-hand side is the total loss function for the NeRF network;

- its three components are the color loss, the local Pearson depth loss LU, and the global depth loss LM.

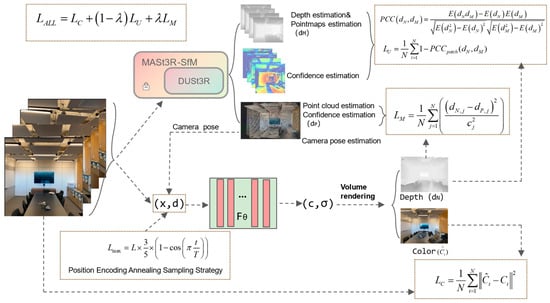

Here, λ is a hyperparameter: for training steps t ≤ T/2, λ = 0, and for t > T/2 it takes a value between 0 and 1. Figure 3 summarizes the detailed execution workflow of SparsePose–NeRF and annotates the loss function used at each step.

Figure 3.

The input unconstrained images are first processed by the MASt3R-SfM network to compute camera poses, self-mapped point maps, confidence maps, and normalized point maps. The estimated camera poses are then passed to the NeRF network for ray sampling, where the high-frequency annealing strategy is applied to the positional encoding to prevent the network from overfitting to high-frequency information. During rendering, the depth maps generated by the NeRF network are compared with the precomputed depth values to construct the depth regularization loss functions LM and LU. Additionally, the RGB values rendered by the NeRF network are compared with the input images to formulate the color–space regularization loss function. The red, green, and blue lines denote the estimated camera poses generated by our model.

4. Experiments and Results

In the experimental section, we conducted three groups of experiments to evaluate the network’s performance under different conditions. Specifically, the first set of experiments assessed the accuracy of pose estimation in complex environmental conditions. The second and third sets of experiments evaluated the network’s ability to reconstruct dataset scenarios and real-world scenes under the dual adverse conditions of unknown camera poses and sparse viewpoints, as well as its capability to synthesize novel views.

All experiments were conducted on a workstation equipped with a 13th Gen Intel(R) Core(TM) i7-13700KF CPU and an NVIDIA GeForce RTX 4090 GPU. Our method follows a per-scene optimization paradigm. For each scene, we train our SparsePose–NeRF model for 200,000 iterations, a number consistent with related state-of-the-art works such as DietNeRF and FreeNeRF to ensure full convergence. The entire pipeline for a typical 3-view scene is highly efficient. The front-end pose and geometry estimation using MASt3R-SfM is completed in approximately 4 s. We also note that MASt3R-SfM reduces the computational complexity from quadratic to quasi-linear by constructing a sparse scene graph, as detailed in its original paper. The subsequent back-end NeRF optimization takes about 4.5 h. Once trained, rendering a new 800 × 800 resolution view takes approximately 5–10 s. This optimization time is competitive and notably faster than standard NeRF baselines, which can require over 10 h to converge on sparse data. The near and far rendering bounds were determined from the point cloud provided by the front end, with the redundancy margin ξ (Equations (9) and (10)) set to 5% of the scene’s depth range. For the hyperparameter λ in our loss function (Equation (12)), which weights the global, confidence-based depth loss LM, it is activated in the second half of training and linearly increases from 0 to its final value of 0.1. We found this setting to be robust across all scenes and datasets, requiring no per-scene tuning.
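The two settings described above, the padded near and far bounds and the λ schedule, can be sketched in Python. This is a minimal illustration rather than the authors' code: the function names are hypothetical, the margin ξ is assumed to be subtracted from the nearest depth and added to the farthest depth, and the linear ramp is assumed to span the whole second half of training.

```python
import numpy as np


def near_far_bounds(point_depths, margin_frac=0.05):
    """Rendering bounds from point cloud depths, padded by a margin xi.

    Assumption: xi = margin_frac * (max depth - min depth), subtracted from
    the nearest depth and added to the farthest depth.
    """
    depths = np.asarray(point_depths, dtype=np.float64)
    d_min, d_max = float(depths.min()), float(depths.max())
    xi = margin_frac * (d_max - d_min)
    return d_min - xi, d_max + xi


def lambda_schedule(step, total_steps, final_value=0.1):
    """Weight of the global depth loss L_M at a given training step.

    Zero for the first half of training, then a linear ramp to final_value.
    Assumption: the ramp spans the whole second half of training.
    """
    half = total_steps / 2
    if step <= half:
        return 0.0
    progress = min((step - half) / (total_steps - half), 1.0)
    return final_value * progress


near, far = near_far_bounds([2.0, 4.0, 12.0])
print(near, far)
print(lambda_schedule(150_000, 200_000))
```

In practice the near bound may also need to be clamped to a small positive value when the margin pushes it below zero; that detail is not specified in the text.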

4.1. Validation of Camera Pose Estimation Accuracy

To validate the accuracy of the network in estimating camera poses, we conducted experiments on the Tanks and Temples [36] dataset, the CO3Dv2 [37] dataset, and the RealEstate10K [38] dataset. The Tanks and Temples dataset features a wide range of complex indoor and outdoor scenes, encompassing various lighting conditions, texture complexities, and scene scales. The design of the scenes in this dataset considers real-world complexities, such as non-uniform lighting, low-texture regions, and intricate geometric structures. These challenging characteristics make it an ideal benchmark for evaluating the robustness of algorithms. To quantitatively assess the accuracy of camera pose estimation, we compared our network with the COLMAP algorithm. It is worth noting that the COLMAP algorithm computes camera poses using all the images in each dataset, and its results are treated as ground truth for comparison purposes.

To calculate the Absolute Trajectory Error (ATE) [39], we align two camera trajectories using the Procrustes alignment method. This involves three key steps: decentering, normalization, and rotational alignment of both trajectories. After alignment, the translational error between the two poses is computed. We also evaluate the Relative Rotation Accuracy (RRA@5) and Relative Translation Accuracy (RTA@5) to assess the precision of relative rotations and translations between camera pairs. RRA@5 is a commonly used metric for high-precision rotation error evaluation. Considering that COLMAP performs remarkably well in high-overlap and high-texture scenes where it achieves highly accurate rotation estimations, we adopted a rigorous evaluation criterion. In addition, we report the registration success rate (Reg), which measures the proportion of cameras with successfully estimated poses. This metric is an essential indicator of an algorithm’s robustness and applicability across various scenarios. In the Tanks and Temples dataset, we selected eight scenes and performed resampling and subdivision of the original dataset into subsets containing 25, 50, and 100 frames. Our algorithm was run under these three conditions, and the corresponding camera pose estimation metrics were reported, as summarized in Table 1.
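The three alignment steps and the resulting error can be sketched with NumPy as follows. This is an illustrative sketch under stated assumptions, not the evaluation code used in the paper: the function names are hypothetical, trajectories are taken to be arrays of camera centers, normalization is assumed to mean scaling each centered trajectory to unit Frobenius norm, and the error is reported as a root mean square distance in those normalized units.

```python
import numpy as np


def procrustes_align(est, ref):
    """Center, scale-normalize, and rotate est (N, 3) onto ref (N, 3)."""
    est = np.asarray(est, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    # Step 1: decentering (remove each trajectory's centroid).
    est_c = est - est.mean(axis=0)
    ref_c = ref - ref.mean(axis=0)
    # Step 2: normalization (unit Frobenius norm removes global scale).
    est_n = est_c / np.linalg.norm(est_c)
    ref_n = ref_c / np.linalg.norm(ref_c)
    # Step 3: rotational alignment via SVD (orthogonal Procrustes / Kabsch).
    u, _, vt = np.linalg.svd(est_n.T @ ref_n)
    d = 1.0 if np.linalg.det(u @ vt) >= 0 else -1.0  # avoid reflections
    rot = u @ np.diag([1.0, 1.0, d]) @ vt
    return est_n @ rot, ref_n


def absolute_trajectory_error(est, ref):
    """RMSE of camera-center distances after Procrustes alignment."""
    est_aligned, ref_n = procrustes_align(est, ref)
    sq_dist = np.sum((est_aligned - ref_n) ** 2, axis=1)
    return float(np.sqrt(np.mean(sq_dist)))
```

Because both trajectories are centered and normalized before rotation, a trajectory that differs from the reference only by a similarity transform yields an error of essentially zero.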

Table 1.

Quantitative experimental results of pose estimation on the Tanks and Temples dataset.

The experimental results validate the accuracy and robustness of our method in multi-view pose estimation across various view numbers (25, 50, and 100 views) and diverse scenes (e.g., Ballroom, Barn, and Church). As the number of views increases, the absolute trajectory error (ATE) decreases significantly, and both the relative rotation accuracy (RRA@5) and the relative translation accuracy (RTA@5) consistently improve. For example, in geometrically rich scenes (e.g., Barn and Ignatius), our method exhibits near-perfect results under all view configurations, with extremely low ATE values and RRA@5 and RTA@5 approaching 100%. Furthermore, even in complex scenes with sparse texture and geometry (e.g., Museum), our method maintains a 100% registration rate (Reg), demonstrating its robustness in handling global keypoints. In scenes with intricate structures and more dynamic changes (e.g., Family and Church), our method demonstrates superior capability in capturing finer details, and as the number of views increases, it significantly enhances accuracy and consistency, further narrowing the gap with baseline methods.

This performance indicates that our method not only excels in traditional static scenes but also adapts well to scenes with complex geometries and dynamic changes. Overall, these experimental results fully confirm the comprehensive advantages of our method in terms of accuracy, efficiency, robustness, and scene adaptability. Whether in geometrically rich, simple scenes or challenging scenarios with sparse texture or dynamic changes, our method consistently exhibits high stability and consistency, providing strong support for improving accuracy and adapting to complex scenes in 3D reconstruction tasks.

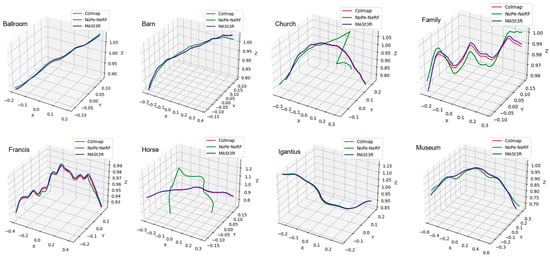

In the qualitative experiments, we utilized COLMAP to generate high-quality 3D reconstructions and camera pose estimations as ground truth. To evaluate the accuracy of our network’s camera pose estimation, we compared the camera trajectories generated by our method against those produced by the state-of-the-art pose-free NeRF algorithm, NoPe–NeRF, across eight selected scenarios. During the experiments, we applied the Procrustes alignment method to align and visualize the camera trajectories, as illustrated in Figure 4. From the visualized results, it can be observed that our proposed method performs well on complex datasets such as Tanks and Temples. For instance, in most scenes (e.g., Ballroom, Barn, Francis, and Museum), the trajectories reconstructed by MASt3R-SfM are highly consistent with those of COLMAP, demonstrating superior accuracy and robustness. This indicates that our method can reliably recover 3D trajectories in these scenarios. In contrast, NoPe–NeRF exhibits significant deviations or instability in certain scenes (e.g., Horse and Church), highlighting its limitations in handling complex or structurally unique environments, possibly due to constraints in its model capacity or algorithmic design. Moreover, in scenes with intricate structures and variations (e.g., Family and Church), MASt3R-SfM outperforms NoPe–NeRF by capturing finer details and maintaining alignment with COLMAP’s trajectories. Overall, these trajectory visualizations clearly illustrate the advantages of our algorithm in terms of camera pose estimation accuracy, stability, and adaptability to diverse scenes. However, the results also reveal that NoPe–NeRF requires further optimization to handle challenging scenarios effectively.

Figure 4.

Trajectory prediction experiments were conducted on eight scenes from the Tanks and Temples dataset. The Procrustes alignment method was applied to align and visualize the camera trajectories, with the trajectories scaled to ensure their full extent could be displayed within the visualization. In the visualized plots, the camera poses estimated by COLMAP served as the ground truth. Comparisons were made between the camera trajectories predicted by the proposed method and those generated by the NoPe–NeRF algorithm.

To evaluate the accuracy of our network’s camera pose estimation under sparse viewpoints, we conducted experiments on the CO3Dv2 and RealEstate10K datasets, both of which provide ground truth camera parameters. On the RealEstate10K dataset, we reported the mAA(30) metric, while on the CO3Dv2 dataset, we included RRA@15, RTA@15, and mAA(30). This choice is justified because RealEstate10K is primarily designed for novel view synthesis (NVS), focusing on the quality of generated views rather than precise pose estimation. The dataset typically involves views captured across large-scale scenes, which may lack highly accurate 3D pose annotations. The mAA(30) metric, being directly based on the matching quality of synthesized views, better reflects overall image-level performance compared to RRA and RTA, which are more reliant on precise camera poses. Furthermore, we set the threshold parameters for RRA and RTA to 15° because a rotational error within this range is generally acceptable in various computer vision tasks such as multi-view geometry, SLAM, and visual localization. For tasks like scene understanding or novel view synthesis, visual consistency is often maintained even with rotational errors within 15°. Additionally, practical applications such as indoor navigation and scene modeling do not demand extremely fine-grained rotational accuracy, making 15° a reasonable threshold to evaluate robustness in common scenarios. During the experiments, we selected 10 scenes from the test set of the RealEstate10K dataset. For each scene, three subsets were created by randomly sampling 3, 5, and 10 consecutive images, respectively. Similarly, from the CO3Dv2 dataset, we selected five categories: hotdog, motorcycle, bicycle, laptop, and toy bus. For each category, two shooting scenes were chosen, and subsets of varying sizes were constructed following the same approach used for the RealEstate10K dataset. 
Finally, the average parameter values were calculated across all scenes, and the results are presented in Table 2.
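For reference, the thresholded rotation metric can be sketched as follows. This is a minimal sketch assuming the common definition of RRA@τ as the fraction of camera pairs whose relative rotation error is below τ degrees; the function names are hypothetical, and RTA@τ is assumed to follow the same pattern with the angle between relative translation directions in place of the rotation angle.

```python
import itertools
import numpy as np


def rotation_angle_deg(rot):
    """Geodesic angle (degrees) of a 3x3 rotation matrix."""
    cos_angle = (np.trace(rot) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def relative_rotation_errors(rots_est, rots_gt):
    """Angular error of the relative rotation for every camera pair."""
    errors = []
    for i, j in itertools.combinations(range(len(rots_gt)), 2):
        rel_est = rots_est[j] @ rots_est[i].T
        rel_gt = rots_gt[j] @ rots_gt[i].T
        errors.append(rotation_angle_deg(rel_est.T @ rel_gt))
    return np.array(errors)


def accuracy_at(errors_deg, threshold_deg):
    """Fraction of pairs whose error is below the threshold (e.g. RRA@15)."""
    return float(np.mean(np.asarray(errors_deg) < threshold_deg))


def rot_z(deg):
    """Rotation about the z axis, used only to build the toy example."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a), np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])


gt = [rot_z(0), rot_z(30), rot_z(60)]
est = [rot_z(0), rot_z(30), rot_z(80)]  # third camera is off by 20 degrees
errors = relative_rotation_errors(est, gt)
print(accuracy_at(errors, 15.0), accuracy_at(errors, 30.0))
```

In the toy example, only the pair that excludes the misestimated camera passes the 15° threshold, while all three pairs pass at 30°. Working with relative rotations makes the metric independent of the global coordinate frame chosen by each method.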

Table 2.

Experiments were conducted on the RealEstate10K and CO3Dv2 datasets to evaluate the accuracy of camera pose estimation.

From the experimental results, it can be observed that our network achieves highly accurate camera pose estimation even under conditions of extreme sparsity in image data. This effectively addresses the challenge faced by NeRF networks in obtaining precise camera poses when operating with sparse viewpoints.

4.2. Comparative Experiments on LLFF Dataset with Sparse-View NeRF Models

We conducted comparative experiments on the LLFF [40] dataset with other sparse-view NeRF models. The experiments focused primarily on scene reconstruction and novel view synthesis using three input views. Both quantitative and qualitative analyses were performed to evaluate the results. Previous improvements in sparse-view NeRFs often evaluated their models by directly sampling a fixed number of images from datasets and utilizing the camera poses provided within those datasets for NeRF reconstruction. However, an overlooked fact is that these camera poses are estimated using the COLMAP algorithm by processing dozens of images of the same scene collectively. Under such conditions, the errors in camera pose estimation are minimal.

Accurate camera poses are crucial for NeRF reconstruction, as NeRF fundamentally relies on optimizing scene density and radiance fields. This optimization requires known camera poses to establish the origin and direction of rays, correlating 3D points in space with corresponding image pixels. In real-world applications, obtaining accurate camera poses under sparse-views is highly challenging. COLMAP typically requires at least five images to generate stable sparse point clouds. Moreover, it assumes sufficient overlap in the field of view and robust feature matching between images. When the number of images is limited or the overlap and viewing angle differences are minimal, the number of matched points decreases significantly. This results in incomplete scene reconstruction and inaccurate camera pose estimation. Through our experimental validation, we observed that existing methods for sparse-view reconstruction perform exceptionally well when provided with more than six input views. Therefore, our experiments focus on scenarios with three input views, where current NeRF-based methods for sparse-view improvements generally underperform. Even when these NeRF models directly utilize accurate camera poses from datasets—thus avoiding the challenges of inaccurate camera poses in real-world scenarios—their reconstruction quality and novel view synthesis remain suboptimal. This limitation is primarily due to insufficient scene information, which prevents the network from capturing adequate low-frequency information. By improving the NeRF network architecture, our proposed model effectively addresses these challenges and achieves superior performance. We compared four different methods for achieving NeRF-based 3D reconstruction under sparse view inputs. Due to experimental constraints, we used only three input views.

Directly using COLMAP to compute camera parameters from these three views often failed or resulted in significant camera pose errors. Furthermore, there were no ground truth images available for the new view synthesis experiments under these conditions. To address these challenges and facilitate experiments with three input views, we adopted a strategy of directly reading standard camera pose parameters from the dataset for the input to the comparison networks. Specifically, we selected three images from each of the four scenes in the Real Forward-Facing dataset as training inputs for NeRF and one additional image from the remaining images as ground truth for evaluating novel view synthesis performance. However, during the experiments with our network, we did not use the camera poses provided by the dataset; we consistently utilized our MASt3R-SfM frontend network to estimate camera poses. While our estimated poses exhibited minor deviations from those computed by COLMAP in the dataset, these differences had negligible effects on quantitative analysis. In qualitative experiments, the slight shifts in viewing angles were insignificant. This demonstrates that our method’s pose estimation is a viable replacement for the traditional SfM algorithm in the NeRF pipeline.

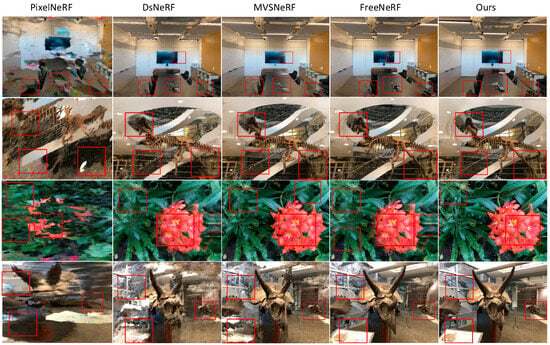

The final results, as shown in Figure 5, highlight the performance differences across methods. For the “room” scene, PixelNeRF exhibited poor performance, with blurred boundaries on walls and ceilings and significant geometric distortions in objects on the desk and floor. DSNeRF improved boundary sharpness, particularly at wall-ceiling transitions. MVSNeRF captured geometric consistency effectively, with shapes closer to reality and improved detail recovery, though textures on small objects remained blurred. FreeNeRF achieved geometric consistency comparable to MVSNeRF but excelled in restoring details on small objects. Our method outperformed all others, delivering clear and realistic details on small objects on the floor and boundary regions of walls, while also achieving consistent depth and lighting in transitions between walls and ceilings. In the quantitative analysis, we calculated PSNR, SSIM [41], and LPIPS [42] metrics.

Figure 5.

Comparative experiments were conducted on the LLFF dataset to evaluate the performance of our network against four other networks. The results demonstrate that our network achieved superior performance in this benchmark, validating its effectiveness. The red boxes highlight regions where the differences among rendering results of different methods are most noticeable.

It is worth noting that the PixelNeRF and MVSNeRF networks used in the experiments were not fine-tuned for the current scenes. This is because our focus is on reconstruction performance under the three-view condition, while typical fine-tuning procedures require two to three additional images per scene, undermining the comparability of results. Therefore, we used non-fine-tuned versions of these networks for the experiments. The detailed comparison results are presented in Table 3.

Table 3.

Quantitative comparison experiments were conducted on the LLFF dataset to evaluate the performance of our network in relation to other methods (↑ indicates higher is better; ↓ indicates lower is better).

4.3. Sparse Input-Based Reconstruction and View Synthesis in Real-World Scenes

In real-world scenarios, accurate camera pose information is typically unavailable, unlike the data provided by synthetic datasets. To achieve precise 3D scene reconstruction and novel view synthesis under these conditions, the pose estimation module of the network must be capable of accurately estimating camera poses even with sparse input. We sampled three real-world scenes using a smartphone and extracted frames at fixed intervals with FFmpeg to create the training and testing images required for our experiments. Our goal is to enable NeRF-based 3D reconstruction and novel view synthesis with only three images in real-world applications.

Experimental results indicate that when other networks employ the traditional SfM algorithm for pose estimation following NeRF’s pipeline, the reconstruction quality of all four baseline networks deteriorates significantly. Consequently, a direct comparison with our method becomes impractical. The primary reason lies in the inherent limitations of traditional SfM algorithms, which require substantial overlap between input images and sufficient texture features within overlapping regions. Additionally, camera positions must have a baseline (viewpoint difference) that allows triangulation constraints. Even under these conditions, SfM-estimated camera poses often contain significant noise, leading to poor reconstruction quality and making such comparative experiments uninformative.

Given these limitations, our final experimental setup uses our network’s frontend, MASt3R-SfM, to estimate camera poses for all three input images. The same estimated poses are then used for NeRF reconstruction by the four baseline networks. In our assumed real-world scenario (only three images available), there is no standardized test set like the Real Forward-Facing dataset. Ground truth images for quantitative evaluation are unavailable.

To mitigate this, we use adjacent frames near the rendered viewpoints as the best available reference. We acknowledge that this introduces a slight viewpoint parallax, which can affect the absolute values of the reported metrics. However, since this evaluation condition is applied consistently across all compared methods, and images are rendered from the same viewpoints for every network, it provides a fair and valid basis for assessing their relative performance.

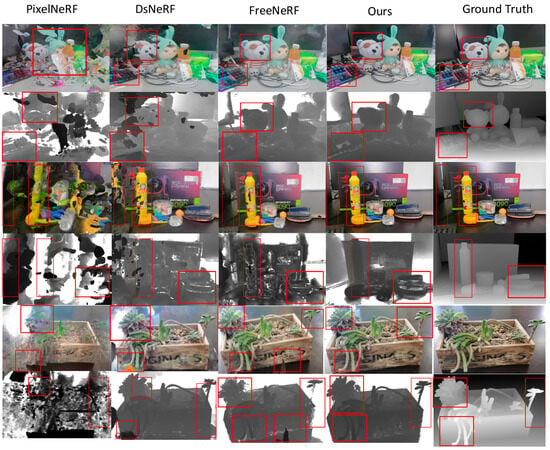

Because the reference frames used in the quantitative experiments differ slightly in viewpoint, we also render depth maps for each network as a complementary check. These depth maps provide direct visualization of geometric consistency and detail recovery. The ground truth depth maps are estimated using the current state-of-the-art monocular depth estimation network, DepthAnythingV2 [43,44]. It is important to note that these depth maps serve strictly as a qualitative visual reference for geometric plausibility and are not used for any quantitative evaluation metrics. Our quantitative analysis is performed exclusively on the rendered RGB images. In the DepthAnythingV2 maps, closer points are shown in white and farther points in black, which is the inverse of NeRF-rendered depth maps, where closer points appear in black and farther points in white. The qualitative and quantitative experimental results are shown in Figure 6 and Table 4, respectively.

Figure 6.

Three-view 3D reconstruction and novel view synthesis from smartphone-captured images. This experiment uses images captured by a smartphone and compares the performance of different sparse-view methods under the same camera poses estimated by our frontend. The figure alternately displays the rendered RGB images and their corresponding depth maps. It is evident that other baseline networks (e.g., PixelNeRF, DSNeRF, and FreeNeRF) exhibit significant defects in both geometric reconstruction (depth maps) and appearance rendering (RGB images), such as structural collapse, blurry edges, and distorted textures. In contrast, our method (Ours) performs best in both aspects, achieving the most complete geometric reconstruction and rendering the clearest, most realistic images. The red boxes highlight regions where rendering differences among different methods are most evident. The non-English texts visible on certain objects (e.g., packaging labels) are part of the original captured scenes.

Table 4.

Quantitative comparison experiments were conducted across three distinct scenarios (↑ indicates higher is better; ↓ indicates lower is better).

From the results of quantitative experiments, it is evident that our model achieves superior reconstruction quality compared to other sparse-view NeRF networks. This advantage primarily stems from the incorporation of the sampling annealing strategy and a series of regularization optimization techniques in our network. Our method demonstrates strong performance across three quantitative metrics. From the RGB images output by different comparison networks, it is apparent that PixelNeRF produces subpar reconstruction quality (MVSNeRF performs at a similar level and is therefore not presented separately), exhibiting significant blurriness and artifacts. Many regions lose texture and color information, likely due to the lack of fine-tuning, which substantially degrades the reconstruction quality of these networks. In the red-boxed areas, there are noticeable color shifts and texture blurriness (e.g., the doll’s face, the leaves of the plant, and the bottom box). Although DSNeRF shows notable improvements compared to PixelNeRF, it still suffers from artifacts and color shifts. The occlusion boundaries in the red-boxed areas (e.g., the edges of the doll’s ears and the plant’s leaves) exhibit blending issues, leading to unnatural texture and color rendering. FreeNeRF achieves better texture restoration in the red-boxed regions (e.g., plant details) but still encounters blurriness in geometrically complex regions (e.g., the edges of plant leaves and the doll’s ears). In contrast, our method accurately reconstructs texture and color in the red-boxed regions (e.g., the doll’s ears, plant leaves, and bottles) without noticeable blurriness or artifacts. The occlusion boundaries and segmentation between the foreground and background are sharp, with high color consistency. Our network also reconstructs fine textures in complex geometric areas (e.g., plant stems and leaves) with minimal artifacts. 
Additionally, comparing the depth maps output by different networks reveals that PixelNeRF produces depth maps with significant blurriness and noise, particularly in detail-rich or geometrically complex regions (e.g., areas marked by red boxes). Although DSNeRF demonstrates significant improvement over PixelNeRF, it still exhibits incomplete geometry in certain regions and insufficient sharpness at depth boundaries. FreeNeRF generally outperforms DSNeRF in sharpness, but depth estimation errors persist in some regions (e.g., gaps between objects or small objects). Our method produces depth maps with the best clarity, detail, and boundary sharpness, closely approximating the ground truth. The red-boxed regions (e.g., edge details, occluded areas, and small objects) demonstrate significantly higher accuracy than other methods. Overall, our approach outperforms other methods significantly in both RGB rendering and depth map reconstruction quality.

4.4. Ablation Study

4.4.1. Efficacy of the High-Frequency Annealing Strategy

To isolate and rigorously assess the contribution of our high-frequency annealing strategy for positional encoding in sparse-view scenarios, we conduct a targeted ablation study. This evaluation is performed on the NeRF-synthetic dataset, as its scenes—characterized by sharp geometric boundaries and uniform color surfaces—present an ideal testbed for evaluating the network’s susceptibility to overfitting on high-frequency information. To eliminate confounding factors from camera pose inaccuracies, we utilize the ground truth camera poses provided with the dataset. This experimental setup ensures that any observed performance differences are solely attributable to the inclusion or exclusion of the proposed annealing strategy.

We compare the following two model variants, each trained using three sparse input views.

Ours (w/Annealing): This is our full model, which incorporates the proposed high-frequency annealing strategy. The strategy progressively unmasks frequency bands by incrementally increasing the maximum level, Lmax, of the positional encoding. This compels the network to first learn the coarse, low-frequency structure of the scene during the initial training stages before gradually enabling the learning of fine, high-frequency details.

Ours (w/o Annealing): This is a baseline variant where the high-frequency annealing strategy is ablated. In this configuration, the network has access to the full spectrum of positional encoding frequencies from the outset of training. This approach, particularly in a sparse-view setting, makes the model prone to overfitting on high-frequency noise rather than capturing the underlying global scene structure.
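The difference between the two variants can be illustrated with a frequency mask applied to the positional encoding. The sketch below assumes a linear schedule in which the number of visible bands grows in proportion to the training step until `anneal_steps` is reached; the schedule shape, the function names, and the default of 10 bands are assumptions for illustration, and the paper's exact annealing function may differ.

```python
import numpy as np


def frequency_mask(step, anneal_steps, num_freqs):
    """Per-band weights in [0, 1]; low-frequency bands are unmasked first."""
    visible = num_freqs * min(step / anneal_steps, 1.0)  # fractional L_max
    return np.clip(visible - np.arange(num_freqs), 0.0, 1.0)


def annealed_positional_encoding(x, step, anneal_steps, num_freqs=10):
    """Sin/cos positional encoding with masked high-frequency bands."""
    x = np.asarray(x, dtype=np.float64)             # (..., D)
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi   # (L,)
    angles = x[..., None] * freqs                   # (..., D, L)
    mask = frequency_mask(step, anneal_steps, num_freqs)
    enc = np.concatenate(
        [np.sin(angles) * mask, np.cos(angles) * mask], axis=-1
    )                                               # (..., D, 2L)
    enc = enc.reshape(*x.shape[:-1], -1)            # (..., 2 * D * L)
    return np.concatenate([x, enc], axis=-1)


print(frequency_mask(50, 100, 4))
```

Setting `step >= anneal_steps` from the first iteration reproduces the variant without annealing, since every band then has weight 1.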

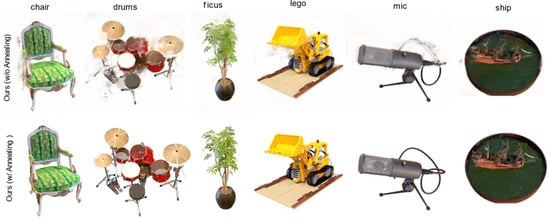

The experimental results clearly demonstrate the necessity of the high-frequency annealing strategy for mitigating overfitting in sparse-view settings. As illustrated in Figure 7, the baseline model (top row), which lacks this strategy, exhibits catastrophic failure. Specifically, its rendered novel views are plagued by erroneous geometric artifacts, often termed “floaters,” that appear in otherwise empty regions of space, resulting in an overall blurry and noisy scene. In sharp contrast, our full model (bottom row) with the annealing strategy generates clean, sharp, and geometrically faithful images that are highly consistent with the ground truth. This stark visual disparity is corroborated by the quantitative metrics in Table 5. Our full model achieves a PSNR of 24.47, nearly double the baseline’s 12.31 (a gain of 12.16 dB). Similarly, it shows a decisive advantage across other metrics, with SSIM improving from 0.513 to 0.832 and LPIPS decreasing from 0.482 to 0.162. These results confirm substantial improvements in both structural similarity and perceptual quality.
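Because PSNR is a logarithmic measure, the gap between the two scores is easier to interpret as a ratio of mean squared errors. The short sketch below uses the standard PSNR definition for images scaled to [0, 1]; the function names are hypothetical, and the only inputs taken from the text are the two reported PSNR values.

```python
import numpy as np


def psnr(image, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images with the given peak value."""
    image = np.asarray(image, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    mse = np.mean((image - reference) ** 2)
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def mse_ratio_from_psnr_gap(psnr_gap_db):
    """How many times smaller the MSE is for a given PSNR gain in dB."""
    return 10.0 ** (psnr_gap_db / 10.0)


gap = 24.47 - 12.31
print(round(mse_ratio_from_psnr_gap(gap), 1))  # 16.4
```

Under this definition, the 12.16 dB gain corresponds to a mean squared error roughly 16 times smaller than that of the baseline.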

Figure 7.

Qualitative ablation study of the high-frequency annealing strategy. On the NeRF-synthetic dataset, removing this strategy (top row) leads to severe overfitting, manifested as significant geometric “floaters” in empty space. Our full model (bottom row) successfully suppresses these artifacts, producing clean and sharp images.

Table 5.

Quantitative results of the ablation study on high-frequency annealing. (↑ indicates higher is better; ↓ indicates lower is better).

This experiment conclusively demonstrates that our high-frequency annealing is a critical component for robust reconstruction from sparse views. By guiding the network to learn in a coarse-to-fine manner, it ensures the model first establishes a robust, low-frequency foundation of the scene before progressively refining high-frequency details. Notably, even under the idealized condition of perfect camera poses, this strategy remains indispensable for achieving high-fidelity renderings.

4.4.2. Impact of Low-Frequency Regularization on Geometric Accuracy

To verify the synergistic contribution of our two-part regularization strategy—which involves sampling contiguous patches from low-frequency regions and an accompanying depth regularization term, LU, based on the Pearson correlation coefficient—we conduct a targeted ablation study on the DTU dataset. This strategy is designed to stabilize the training process and enhance geometric accuracy. The DTU dataset is selected for its primary advantage of providing high-precision, ground truth 3D geometry, which enables a direct quantitative evaluation of our model’s geometric fidelity. To isolate the impact of the regularization strategy, we utilize the provided ground truth camera poses, thereby eliminating potential confounding effects from pose estimation errors.

We compare two variants of our model, both trained on sparse input views:

Ours (Full Model): Our standard model, which employs both the low-frequency patch sampling strategy and the Pearson correlation-based depth loss (LU) during the first half of the training process (t ≤ T/2).

Ours (w/o Low-Freq. Regularization): A baseline variant where both components of the low-frequency regularization are ablated. In this setting, the sampling scheme reverts to standard random pixel sampling across the entire image, and the depth regularization term LU is omitted from the loss function.
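To make the ablated term concrete, the sketch below shows one common way to write a Pearson correlation-based depth loss on a single patch, together with the gating to the first half of training. It is an illustration under assumptions, not the exact definition of LU: the form 1 − ρ, the function names, and the `weight` parameter are hypothetical.

```python
import numpy as np

def pearson_depth_loss(rendered_depth, prior_depth, eps=1e-8):
    """1 - Pearson correlation between a rendered and a prior depth patch.

    Assumption: the loss takes the form 1 - rho. It is 0 for perfectly
    correlated patches and 2 for perfectly anti-correlated ones.
    """
    r = np.asarray(rendered_depth, dtype=np.float64).ravel()
    p = np.asarray(prior_depth, dtype=np.float64).ravel()
    r = r - r.mean()
    p = p - p.mean()
    corr = (r * p).sum() / (np.sqrt((r * r).sum() * (p * p).sum()) + eps)
    return 1.0 - corr

def depth_loss_weight(step, total_steps, weight=1.0):
    """Enable the depth term only while t <= T/2, as in the full model."""
    return weight if step <= total_steps / 2 else 0.0

if __name__ == "__main__":
    patch = np.arange(16, dtype=np.float64).reshape(4, 4)
    # A positive scale and an offset leave the correlation unchanged.
    print(pearson_depth_loss(patch, 3.0 * patch + 5.0))
    print(depth_loss_weight(400, 1000), depth_loss_weight(600, 1000))
```

Because the correlation is invariant to a positive scale and an offset, a loss of this kind compares the relative structure of two depth patches rather than their absolute values.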

Our ablation study reveals that the low-frequency regularization strategy is critical for ensuring geometric stability and the final rendering quality. This is particularly evident in the qualitative results presented in Figure 8. The baseline model (top row), which omits this strategy, suffers from severe visual artifacts. Specifically, its rendered surfaces exhibit unnatural splotches of color and shading, an effect typically indicative of an incorrectly learned underlying 3D geometry. Furthermore, the boundaries between the object and the background are poorly defined, further evidencing defects in its geometric boundary reconstruction.

Figure 8.

Qualitative ablation study of the low-frequency regularization strategy. On the DTU dataset, removing this strategy (top row) results in blurry renders with distorted lighting and structural errors. Our full model (bottom row) reconstructs sharp and geometrically correct views, proving the strategy’s importance for render quality.

These qualitative degradations are quantitatively substantiated by the metrics in Table 6. Our full model achieves a PSNR of 21.63 dB, outperforming the baseline’s 15.23 dB by 6.40 dB. Commensurate advantages are observed in the SSIM and LPIPS metrics, collectively demonstrating a substantial improvement in both image fidelity and perceptual quality. We also note that training of the baseline is markedly unstable, with slower convergence and greater volatility in its loss curve.

Table 6.

Quantitative results of the ablation study on low-frequency regularization. (↑ indicates higher is better; ↓ indicates lower is better).

In summary, both the qualitative and quantitative results provide compelling evidence for our central claim: the strategy of sampling contiguous patches in low-frequency regions and the Pearson-based depth regularization loss are complementary and act in synergy. This composite strategy plays a pivotal role throughout the optimization process by stabilizing training and ensuring geometric integrity. It is therefore indispensable for mitigating geometric artifacts induced by sparse views to ultimately achieve high-fidelity view synthesis.

4.4.3. Ablation on the Front-End Pose and Geometry Estimation

To demonstrate the foundational and indispensable role of our integrated MASt3R-SfM front-end, we conduct a final ablation study. We establish a baseline that emulates a conventional NeRF pipeline by first estimating camera poses from the sparse input views using a classical SfM algorithm (i.e., COLMAP). Replacing our MASt3R-SfM front end, however, deprives the system of the initial self-supervised depth maps and the final normalized point cloud it generates. Consequently, our core depth regularization losses, LU and LM, cannot be applied.

To enable this baseline to function, we leverage a pre-trained DepthAnythingV2 model to generate monocular relative depth priors for each input view. These priors are then used to guide our patch sampling strategy and to offer a basic geometric scaffold. This comparative experiment is performed on the challenging forward-facing scenes of the LLFF dataset.

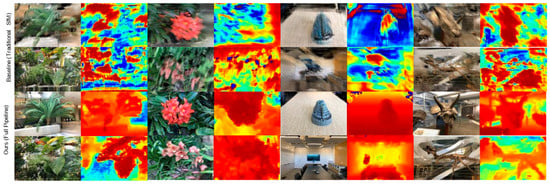

Our final ablation study demonstrates that the integrated MASt3R-SfM front end is the cornerstone of our framework. As shown in Figure 9 and Table 7, replacing it with a baseline composed of traditional SfM and a monocular depth estimator causes reconstruction performance to collapse. The baseline’s renderings are rife with severe geometric distortions and blurry artifacts that leave the scene structure unrecognizable. This visual collapse is corroborated by the quantitative metrics: the baseline achieves a PSNR of only 7.56 dB, a score indicative of complete reconstruction failure. In stark contrast, our full pipeline achieves a PSNR of 21.36 dB, with a similarly large gap in the SSIM and LPIPS metrics.
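To give a sense of scale for these PSNR values, the sketch below inverts the standard definition PSNR = 10 log10(MAX² / MSE) to recover the implied per-pixel error. It assumes pixel intensities in [0, 1] and treats each reported value as if it came from a single image. Since reported scores are averages over test views, the conversion is only indicative, and the function names are hypothetical.

```python
import math

def psnr_to_mse(psnr_db, max_value=1.0):
    """Invert PSNR = 10 * log10(max_value**2 / MSE)."""
    return max_value ** 2 / (10.0 ** (psnr_db / 10.0))

def psnr_to_rmse(psnr_db, max_value=1.0):
    """Root-mean-square pixel error implied by a PSNR value."""
    return math.sqrt(psnr_to_mse(psnr_db, max_value))

if __name__ == "__main__":
    for label, psnr in (("baseline", 7.56), ("full pipeline", 21.36)):
        print(f"{label}: PSNR {psnr} dB, implied RMSE {psnr_to_rmse(psnr):.3f}")
```

Under these assumptions, a PSNR of 7.56 corresponds to an RMSE of roughly 0.42 on a unit intensity range, which is why such a score is read as a failed reconstruction rather than a degraded one.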

Figure 9.

Qualitative ablation study of the front-end pose and geometry estimation module. On the LLFF dataset, replacing our front end with traditional SfM (top row) results in catastrophic failure, with both RGB images and depth maps showing a total collapse of the scene structure. Our full pipeline (bottom row) successfully reconstructs a coherent and high-quality scene.

Table 7.

Quantitative results of the ablation study on the front-end module. (↑ indicates higher is better; ↓ indicates lower is better).

The root cause of this failure is twofold. First, for complex, real-world scenes such as those in the LLFF dataset, traditional SfM algorithms struggle to estimate accurate and robust camera poses from only 3–5 sparse views. The resulting pose inaccuracies fundamentally corrupt the geometric foundation required for NeRF. Second, while the monocular depth maps from DepthAnythingV2 provide relative depth cues, they fall significantly short of the priors generated by our MASt3R-SfM in terms of accuracy, scale, and multi-view consistency.

Ultimately, this experiment demonstrates that MASt3R-SfM is more than a mere pose estimator; it provides a dual-pronged foundation for the system. It delivers not only high-accuracy, robust camera poses but also a set of multi-view consistent geometric priors that are critical for regularization. Under the challenging conditions of extreme view sparsity in real-world scenes, both of these elements are indispensable prerequisites for achieving high-quality reconstruction.

Taken together, these three ablation studies demonstrate the strong synergistic effects between our proposed components. The front-end ablation (Section 4.4.3) shows that the robust pose estimation and multi-view consistent geometry from MASt3R-SfM form an indispensable foundation. Concurrently, the back-end ablations (Section 4.4.1 and Section 4.4.2) prove that even with a strong geometric foundation, our novel regularization strategies are critical for preventing overfitting and achieving high-fidelity results under sparse-view conditions.

5. Discussion

Our research is predicated on a key hypothesis: under real-world sparse-view conditions, front-end camera pose estimation constitutes a more fundamental and fragile bottleneck than back-end NeRF rendering. Our experimental results, particularly the front-end ablation study (Figure 9, Table 7), strongly support this hypothesis. When we replaced our front end with a traditional SfM pipeline, the entire reconstruction system failed catastrophically even though the back-end NeRF architecture remained unchanged. This finding reveals a critical issue often sidestepped in prior work that assumes known or accurate camera poses.

This result positions our work in dialogue with the existing literature. On one hand, sparse-view methods dedicated to improving NeRF regularization (e.g., DS-NeRF and FreeNeRF) are built on the premise of accurate poses, and we argue that this premise often does not hold in practical sparse-view scenarios. SparsePose–NeRF is therefore not merely a regularization scheme but a more holistic solution, spanning from raw images to the final model. By integrating MASt3R-SfM, we first address the “elephant in the room” (pose estimation), thereby allowing the back-end regularization strategies to realize their full potential on a stable and reliable foundation.

On the other hand, in contrast to pose-free methods that attempt to jointly optimize poses and the scene (e.g., BARF, NoPe-NeRF), our framework adopts a distinct and more pragmatic philosophy: decoupling and specialization. While joint optimization is conceptually elegant, its vast search space makes it highly susceptible to local minima under extreme view sparsity. Our results demonstrate that first resolving the geometry with a specialized front end substantially reduces the learning burden on the back-end NeRF. This “divide and conquer” approach exhibits superior robustness and practicality in challenging sparse-view conditions.

Regarding robustness to image noise, our framework has two inherent advantages. First, the learning-based MASt3R-SfM front end, trained on diverse real-world imagery, is less sensitive to common image noise and blur than classical keypoint-based SfM. Second, our back-end regularization, particularly the high-frequency annealing strategy, explicitly prevents the network from overfitting to high-frequency details, which would include sensor noise, thereby promoting a smoother and more plausible scene representation.

In summary, our work highlights the potential of a deep integration between classic multi-view geometry and modern neural rendering. This hybrid paradigm offers a valuable blueprint for tackling other complex vision tasks, such as robotic navigation and scene understanding.

6. Conclusions and Future Work

6.1. Conclusions

In this paper, we introduced SparsePose–NeRF, a highly robust 3D reconstruction framework designed to address the dual challenges of sparse views and unknown camera poses, which are prevalent in real-world applications of Neural Radiance Fields (NeRF). Conventional NeRF pipelines falter when processing such non-ideal data, as their front-end SfM algorithms often fail due to insufficient information. To overcome this hurdle, our core contribution is the introduction of the advanced MASt3R-SfM algorithm as a powerful front end. It not only robustly estimates high-accuracy camera poses from extremely sparse views but also provides the subsequent NeRF reconstruction with invaluable multi-view consistent geometric priors.

Building on this foundation, we further designed novel back-end regularization strategies. By employing high-frequency annealing for positional encoding, we guide the network to learn scenes in a coarse-to-fine manner, thus preventing overfitting to high-frequency noise under data sparsity. Through a two-stage depth loss function, comprising a local, Pearson correlation-based loss (LU) and a global, confidence-based loss (LM), we significantly strengthened the geometric constraints on the reconstructed 3D structure. Extensive comparative experiments and ablation studies demonstrate that our method’s reconstruction quality surpasses that of existing state-of-the-art methods across various datasets, particularly on the most challenging real-world scenes (LLFF). Ultimately, our work represents a solid step towards transitioning NeRF technology from idealized laboratory settings to complex and varied real-world applications.

6.2. Limitations and Future Work

Despite the notable success of our method, several limitations exist, which in turn point to promising avenues for future research, as follows:

Dependence on the Front End: The performance of our framework is highly dependent on the output of the MASt3R-SfM front-end. While its robustness far exceeds that of traditional SfM, it may still encounter challenges in extreme cases, such as completely textureless surfaces or severe lighting variations.

Efficiency of the Two-Stage Pipeline: Our current method employs a two-stage “pose-then-NeRF” process. While this non-end-to-end paradigm is stable, it is potentially less efficient than future joint optimization frameworks.

Static Scene Assumption: Like most NeRF-based methods, our framework is currently applicable only to the reconstruction of static scenes and cannot handle dynamic elements commonly found in the real world, such as pedestrians or vehicles.

Based on this analysis, we propose the following directions for future work:

End-to-End Joint Optimization Framework: A key research direction is to integrate pose estimation and NeRF’s scene representation learning into a unified, end-to-end network. By designing a differentiable rendering and geometry module, a joint optimization of camera poses, scene geometry, and appearance could be achieved, which promises to further enhance both reconstruction accuracy and runtime efficiency.

Modeling and Reconstruction of Dynamic Scenes: Extending our framework to dynamic scenes is a highly valuable research direction. This could involve exploring the use of timestamps as an additional input and learning a deformation field from a canonical static space to the dynamic observation space, thereby enabling 4D capture and rendering of dynamic scenes.