High-Performance 3D Point Cloud Image Distortion Calibration Filter Based on Decision Tree

Abstract

1. Introduction

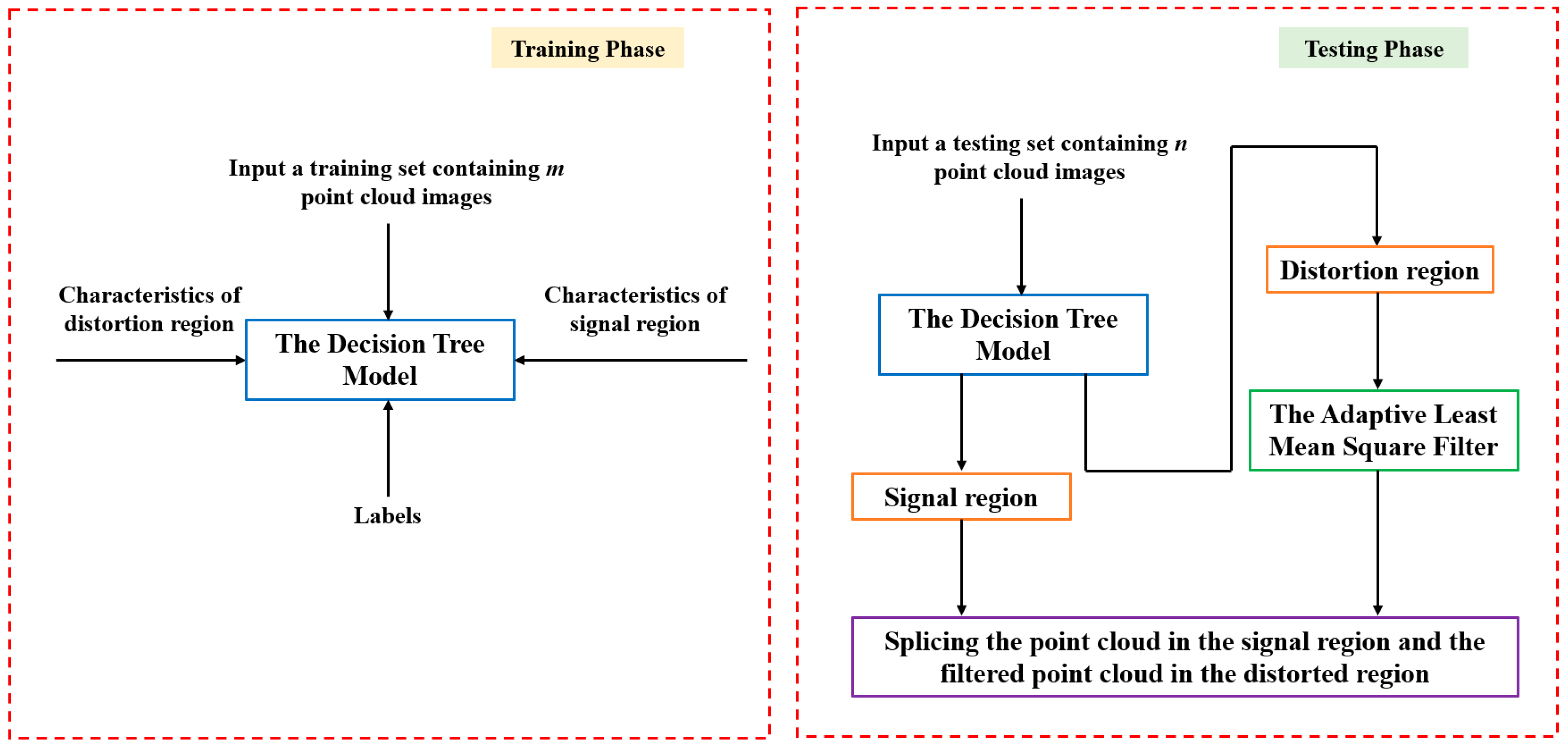

2. High-Performance 3D Point Cloud Least Mean Square Filter Based on Decision Tree

2.1. Training Phase

- (1) Point Density: . It reflects how densely the local region is sampled; distorted areas typically exhibit abnormal density because of sensor errors.

- (2) Normal Vector: . Normal vectors in signal regions tend to be consistent, whereas those in distorted regions tend to be scattered.

- (3) Neighborhood Variance: . The variance in distorted regions is significantly higher than that in signal regions because of noise interference.

- (4) Local Curvature: . The curvature in signal regions tends to be close to zero, whereas edges and distorted regions exhibit higher curvature.

- (5) Intensity Gradient: . Reflection intensity may fluctuate abnormally in distorted regions because of sensor errors.
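The five features are described above only qualitatively, because their formulas did not survive extraction. The following minimal NumPy sketch shows one common way to compute comparable per-point quantities from the k nearest neighbors of each point. Every definition here is an assumption, not the author's:

- the function name `local_features` and the neighborhood size `k`;
- density as k divided by the volume of the sphere that reaches the k-th neighbor;
- normal consistency as the mean |cos| between a point's PCA normal and its neighbors' normals;
- neighborhood variance as the variance of the neighbors' depths (z);
- curvature as the surface variation λ0/(λ0+λ1+λ2);
- the intensity gradient as the mean absolute intensity difference to the neighbors.

```python
import numpy as np

def local_features(points, intensity, k=8):
    """Hypothetical per-point features for the decision tree (assumed definitions).

    points: (N, 3) array of x, y, z coordinates; intensity: (N,) reflection intensity.
    Returns an (N, 5) array with columns
    [density, normal_consistency, neighbor_variance, curvature, intensity_gradient].
    """
    pts = np.asarray(points, dtype=float)
    inten = np.asarray(intensity, dtype=float)
    n = pts.shape[0]
    # Brute-force k-nearest neighbors: O(N^2) memory, adequate only for small clouds.
    dists = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=2)
    nbr = np.argsort(dists, axis=1)[:, 1:k + 1]   # column 0 is the point itself
    normals = np.zeros((n, 3))
    feats = np.zeros((n, 5))
    for i in range(n):
        q = pts[nbr[i]]
        r_k = dists[i, nbr[i, -1]]                  # radius reaching the k-th neighbor
        feats[i, 0] = k / (4.0 / 3.0 * np.pi * r_k ** 3 + 1e-12)   # points per unit volume
        eigval, eigvec = np.linalg.eigh(np.cov(q.T))               # ascending eigenvalues
        normals[i] = eigvec[:, 0]                   # PCA normal: smallest-variance direction
        feats[i, 2] = np.var(q[:, 2])               # variance of neighbor depths (z)
        feats[i, 3] = eigval[0] / (eigval.sum() + 1e-12)           # surface variation
        feats[i, 4] = np.mean(np.abs(inten[nbr[i]] - inten[i]))    # mean intensity step
    for i in range(n):
        # Sign-invariant agreement between a point's normal and its neighbors' normals.
        feats[i, 1] = np.mean(np.abs(normals[nbr[i]] @ normals[i]))
    return feats
```

On a flat, uniformly lit patch, these assumed features behave as the list describes for signal regions:

- curvature, depth variance and intensity gradient are all close to zero;
- normal consistency is close to one.

For full-size clouds, a k-d tree would replace the brute-force distance matrix.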

- (1) Minimum sample size threshold: splitting stops when the number of samples in the current node is less than .

- (2) Information gain threshold: a split is rejected if the information gain it yields is less than .
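Criterion (2) compares a split's information gain against a threshold; that gain is conventionally defined as in ID3 and C4.5. The paper's own symbols were lost, so the following notation is assumed:

- D is the sample set at the node;
- p_c is the fraction of samples in D that belong to class c (signal or distorted);
- D^v is the subset of D sent to the v-th branch of candidate attribute a.

```latex
\mathrm{Ent}(D) = -\sum_{c} p_c \log_2 p_c ,
\qquad
\mathrm{Gain}(D, a) = \mathrm{Ent}(D) - \sum_{v=1}^{V} \frac{|D^v|}{|D|}\,\mathrm{Ent}(D^v)
```

For example, a node with 50 signal and 50 distorted samples has Ent(D) = 1 bit:

- a split that separates the two classes perfectly gives Gain = 1;
- a split whose two children both keep the 50/50 mix gives Gain = 0, and any positive threshold would reject it.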

| Algorithm 1 The Decision Tree Model |
|---|
| Input: Training dataset |
| Attribute set |
| Process: |
| Output: |
| 1: Generate node. |
| 2: if the samples in all belong to the same category then |
| 3: Mark the node as a C-class leaf node. |
| 4: end if |
| 5: if or then |
| 6: Mark the node as a leaf node. |
| 7: Mark its category as the class with the largest number of samples in . |
| 8: end if |
| 9: Select the optimal partition attribute from . |
| 10: for in do |
| 11: Generate a branch for node. |
| 12: Let represent a subset of samples in that take the value . |
| 13: if then |
| 14: Mark the branch node as a leaf node. |
| 15: Mark its category as the class with the largest number of samples in . |
| 16: else |
| 17: Take as a branch node. |
| 18: end if |
| 19: end for |
| 20: Output a decision tree with node as the root node. |
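Algorithm 1 follows the classic recursive scheme for generating a decision tree. The following minimal Python/NumPy sketch implements it under these stated assumptions:

- The features are real-valued, so each node uses a binary threshold split at the midpoint between sorted feature values. This is a C4.5-style substitute for the per-value branching of lines 10-19.
- Labels are integers, for example 0 for signal and 1 for distorted.
- `n_min` and `gain_min` are hypothetical names for the minimum sample size and information gain thresholds.
- `build_tree`, `best_split`, `entropy` and `predict` are illustrative names only.

```python
import numpy as np
from collections import Counter

def entropy(y):
    """Shannon entropy (in bits) of an integer label array."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def best_split(X, y):
    """Best binary split as (gain, feature index, threshold); feature is None if none exists."""
    base = entropy(y)
    best = (0.0, None, None)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])                    # sorted distinct values
        for t in (values[:-1] + values[1:]) / 2.0:     # midpoints between neighbors
            left = X[:, j] <= t
            w = left.mean()
            gain = base - w * entropy(y[left]) - (1.0 - w) * entropy(y[~left])
            if gain > best[0]:
                best = (float(gain), j, float(t))
    return best

def build_tree(X, y, n_min=5, gain_min=1e-3):
    """Grow a tree recursively; a leaf is {'label': c}, an inner node stores a threshold."""
    majority = Counter(y.tolist()).most_common(1)[0][0]
    if len(set(y.tolist())) == 1:          # lines 2-3: all samples in one class
        return {"label": majority}
    if len(y) < n_min:                     # minimum sample size threshold
        return {"label": majority}
    gain, j, t = best_split(X, y)
    if j is None or gain < gain_min:       # information gain threshold
        return {"label": majority}
    left = X[:, j] <= t
    return {"feature": j, "threshold": t,
            "left": build_tree(X[left], y[left], n_min, gain_min),
            "right": build_tree(X[~left], y[~left], n_min, gain_min)}

def predict(tree, x):
    """Descend from the root to a leaf and return its label."""
    while "label" not in tree:
        branch = "left" if x[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree["label"]
```

Each threshold lies strictly between two observed values, so both children are always non-empty. The empty-branch case of lines 13-15 therefore never arises in this variant.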

2.2. Testing Phase

| Algorithm 2 The Adaptive Least Mean Square (ALMS) filter |
|---|
| Input in the distorted region |
| The filter order K |
| Step factor |
| Output |
| . |
| 2: for do |
| 3: |
| 4: |
| 5: |
| 6: |
| 7: |
| 8: end for |
| . |
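Lines 3-7 of Algorithm 2 did not survive extraction, so the paper's exact adaptive update cannot be reproduced. For orientation only, the following sketch implements the textbook LMS recursion as an adaptive linear predictor: filter output, error, then the weight update w ← w + μ·e·u. It rests on these assumptions:

- the depth values of the distorted-region points are ordered as a 1-D sequence, for example along a scan line;
- each value is predicted from its K predecessors;
- the function name `lms_filter` and the zero initial weights are illustrative;
- the input itself serves as the desired signal;
- the step factor μ is fixed, whereas the paper's ALMS may adapt it.

```python
import numpy as np

def lms_filter(signal, order=4, mu=0.01):
    """Textbook LMS adaptive linear predictor (a stand-in, not the paper's ALMS).

    signal: 1-D sequence of depth values from the distorted region (assumed ordering).
    order:  filter order K (number of taps).
    mu:     fixed step factor.
    Returns (filtered output y, prediction error e, final weights w).
    """
    x = np.asarray(signal, dtype=float)
    w = np.zeros(order)                 # assumed initialization: w(0) = 0
    y = x.copy()                        # the first K samples pass through unchanged
    e = np.zeros_like(x)
    for n in range(order, x.size):
        u = x[n - order:n][::-1]        # tap inputs [x(n-1), ..., x(n-K)]
        y[n] = w @ u                    # filter output
        e[n] = x[n] - y[n]              # error w.r.t. desired signal d(n) = x(n)
        w = w + mu * e[n] * u           # LMS weight update
    return y, e, w
```

A common conservative condition for convergence in the mean is 0 < μ < 2/(K·P), where P is the mean power of the input. Larger filter orders K therefore call for a smaller step factor.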

3. Complexity Analysis

4. Performance Analysis

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Algorithm | Training Time | Testing Time |
|---|---|---|
| 3D point cloud least mean square filter based on SVM | 1726.25 s | 72.52 s |
| The proposed D-LMS filtering algorithm | 1.31 s | 0.06 s |
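Simple arithmetic turns the two timing rows above into speed-up factors. The short Python snippet below uses only the values in the table; the variable names are abbreviations introduced here.

```python
# Timings (seconds) copied from the table: (training, testing).
svm_lms = (1726.25, 72.52)   # 3D point cloud LMS filter based on SVM
d_lms = (1.31, 0.06)         # proposed D-LMS filter

def speedup(baseline_s, proposed_s):
    """How many times faster the proposed method is (baseline / proposed)."""
    return baseline_s / proposed_s

train_speedup = speedup(svm_lms[0], d_lms[0])
test_speedup = speedup(svm_lms[1], d_lms[1])
print(f"training speed-up: {train_speedup:.1f}x")  # training speed-up: 1317.7x
print(f"testing speed-up: {test_speedup:.1f}x")    # testing speed-up: 1208.7x
```

The D-LMS testing time is given to only two decimal places. If 0.06 s is a rounded value, the true time lies between 0.055 s and 0.065 s, so the testing speed-up is only known to lie between roughly 1116× and 1319×.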

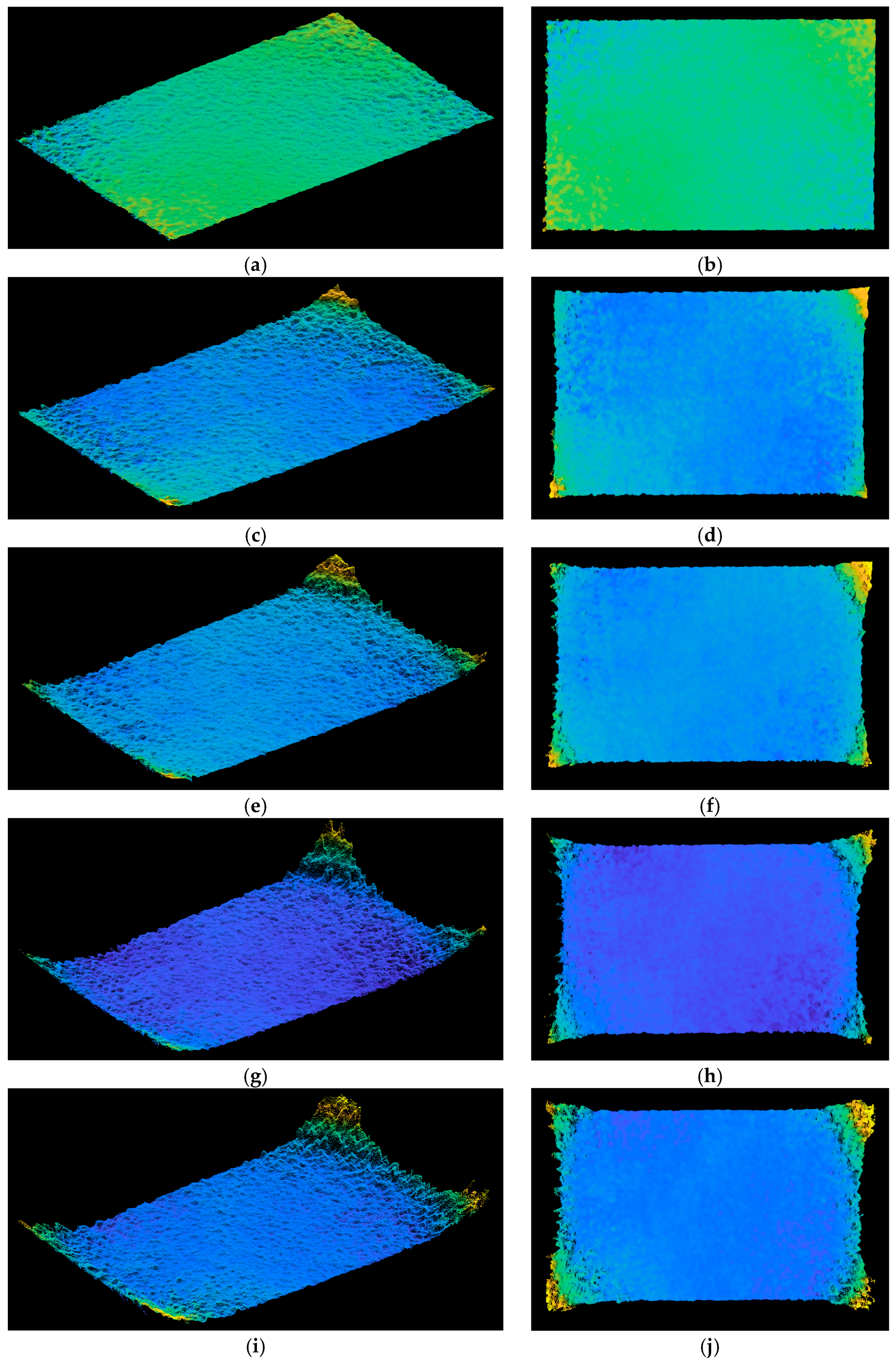

| Testing Dataset | Distance | Number of Points in Signal Region | Number of Points in Distorted Region |
|---|---|---|---|
| Dataset 1 | 0.6 m | 231,651 | 9646 |
| Dataset 2 | 0.9 m | 211,051 | 29,800 |
| Dataset 3 | 1.2 m | 199,536 | 41,316 |
| Dataset 4 | 1.6 m | 175,473 | 64,782 |
| Dataset 5 | 1.9 m | 172,829 | 64,940 |

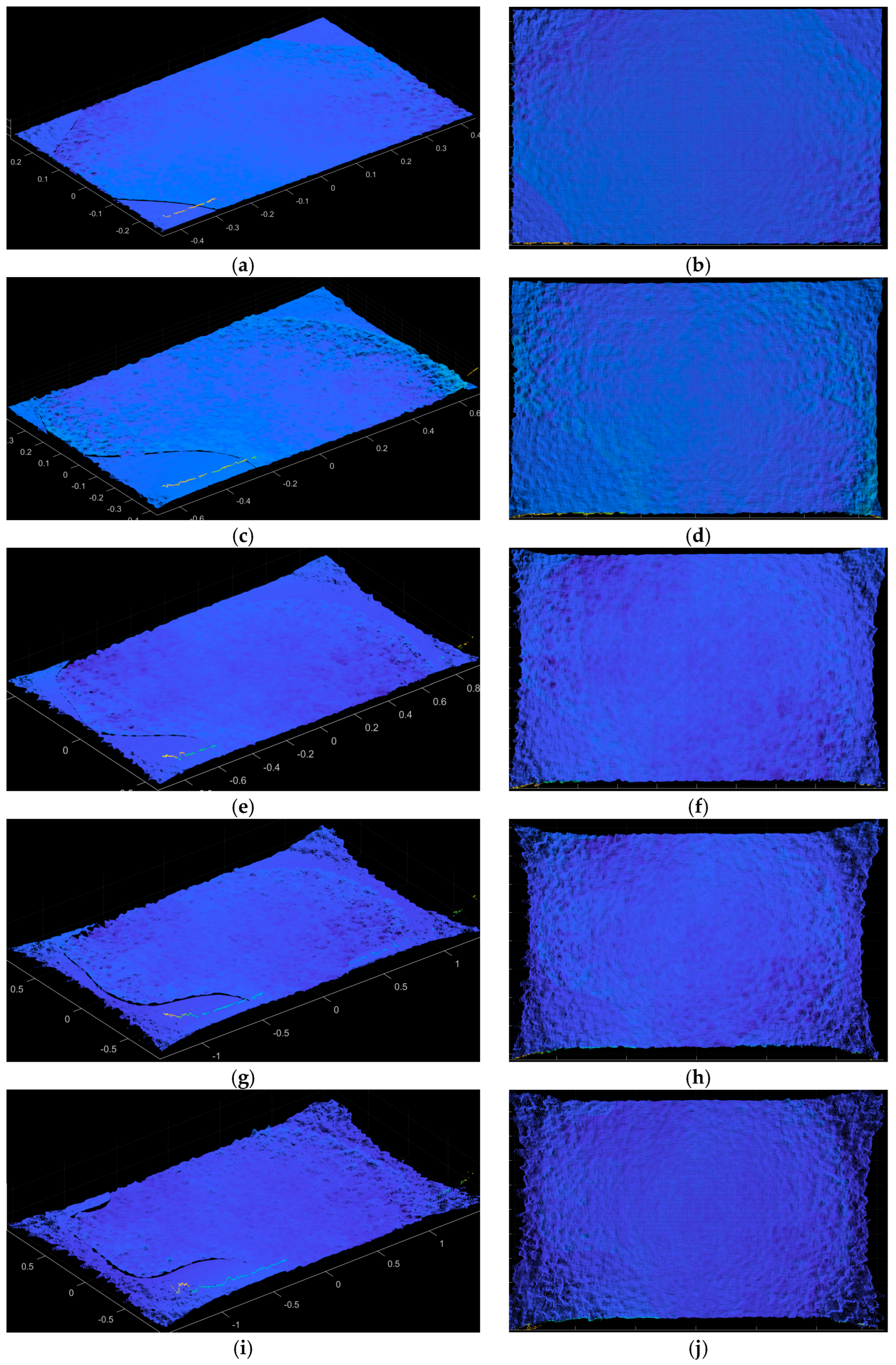

| Algorithm | Dataset 1 | Dataset 2 | Dataset 3 | Dataset 4 | Dataset 5 |
|---|---|---|---|---|---|
| Without algorithm processing | 96.00% | 87.63% | 82.85% | 73.04% | 72.69% |
| 3D point cloud least mean square filter based on SVM | 98.87% | 95.78% | 92.39% | 87.54% | 86.17% |
| The proposed D-LMS filtering algorithm | 99.52% | 97.25% | 95.03% | 93.55% | 92.38% |
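A quick arithmetic check relates the "Without algorithm processing" row to the testing-dataset table. For every dataset, the reported value matches, to two decimals, the share of points that lie in the signal region. This is an observation drawn from the two tables, not a definition stated in the text. It is consistent with accuracy being the fraction of points outside the distorted region, but the exact metric is not recoverable from this section.

```python
# Point counts from the testing-dataset table: (signal region, distorted region).
counts = {
    "Dataset 1": (231_651, 9_646),
    "Dataset 2": (211_051, 29_800),
    "Dataset 3": (199_536, 41_316),
    "Dataset 4": (175_473, 64_782),
    "Dataset 5": (172_829, 64_940),
}
# "Without algorithm processing" row of the results table, in percent.
reported = [96.00, 87.63, 82.85, 73.04, 72.69]

def signal_share(signal, distorted):
    """Percentage of all points that lie in the signal region."""
    return 100.0 * signal / (signal + distorted)

shares = [signal_share(s, d) for s, d in counts.values()]
for name, share, rep in zip(counts, shares, reported):
    print(f"{name}: signal share {share:.2f}% vs reported {rep:.2f}%")
```

If this reading is right, the baseline falls with distance simply because the distorted region grows, from 9646 points at 0.6 m to 64,940 points at 1.9 m.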

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Duan, Y. High-Performance 3D Point Cloud Image Distortion Calibration Filter Based on Decision Tree. Photonics 2025, 12, 960. https://doi.org/10.3390/photonics12100960

Duan Y. High-Performance 3D Point Cloud Image Distortion Calibration Filter Based on Decision Tree. Photonics. 2025; 12(10):960. https://doi.org/10.3390/photonics12100960

Chicago/Turabian Style: Duan, Yao. 2025. "High-Performance 3D Point Cloud Image Distortion Calibration Filter Based on Decision Tree" Photonics 12, no. 10: 960. https://doi.org/10.3390/photonics12100960

APA Style: Duan, Y. (2025). High-Performance 3D Point Cloud Image Distortion Calibration Filter Based on Decision Tree. Photonics, 12(10), 960. https://doi.org/10.3390/photonics12100960