Particle Shape Recognition with Interferometric Particle Imaging Using a Convolutional Neural Network in Polar Coordinates

Abstract

1. Introduction

2. Experimental Setup and Image Conversion to Polar Coordinates

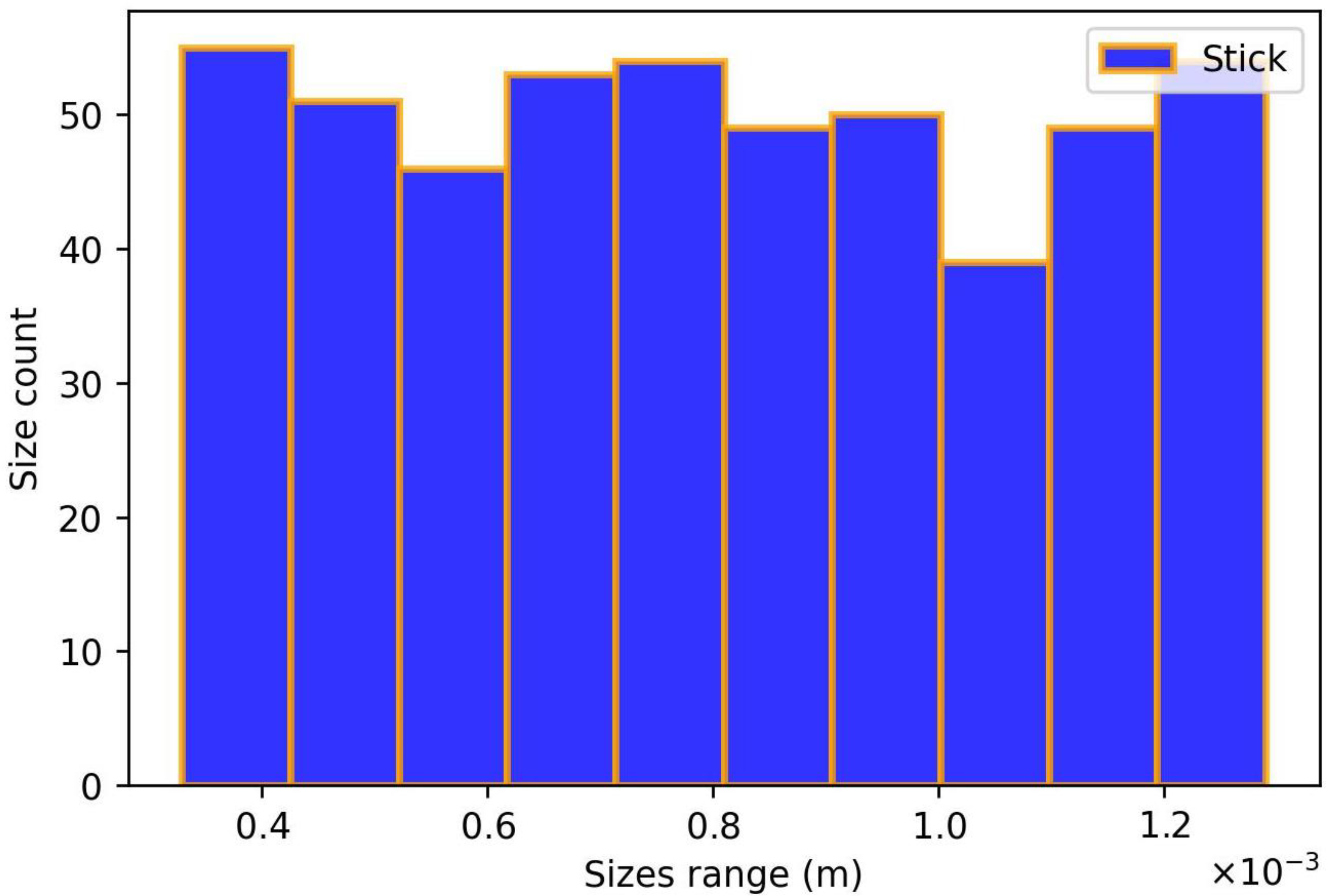

2.1. Programmable Rough Particles

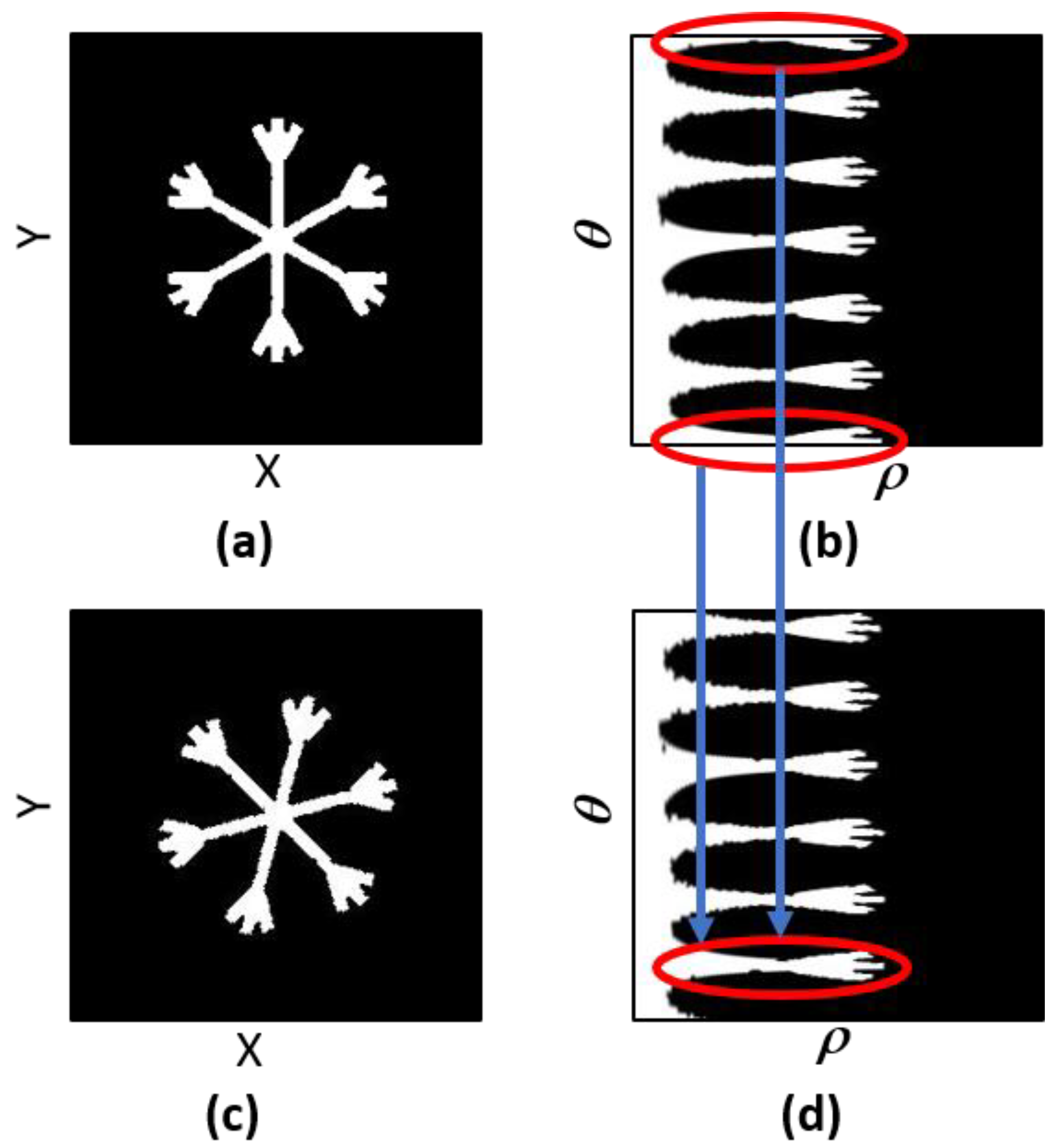

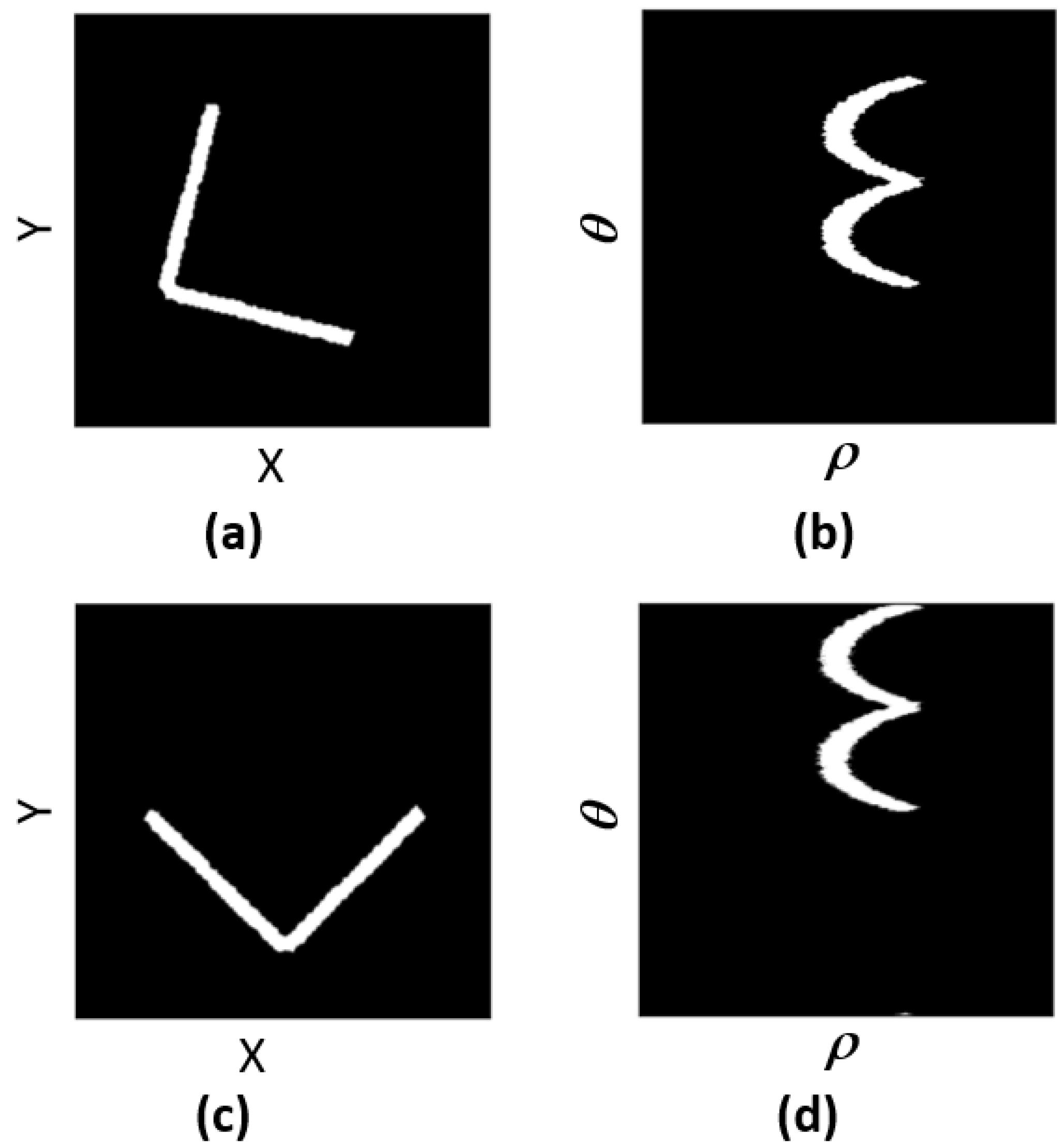

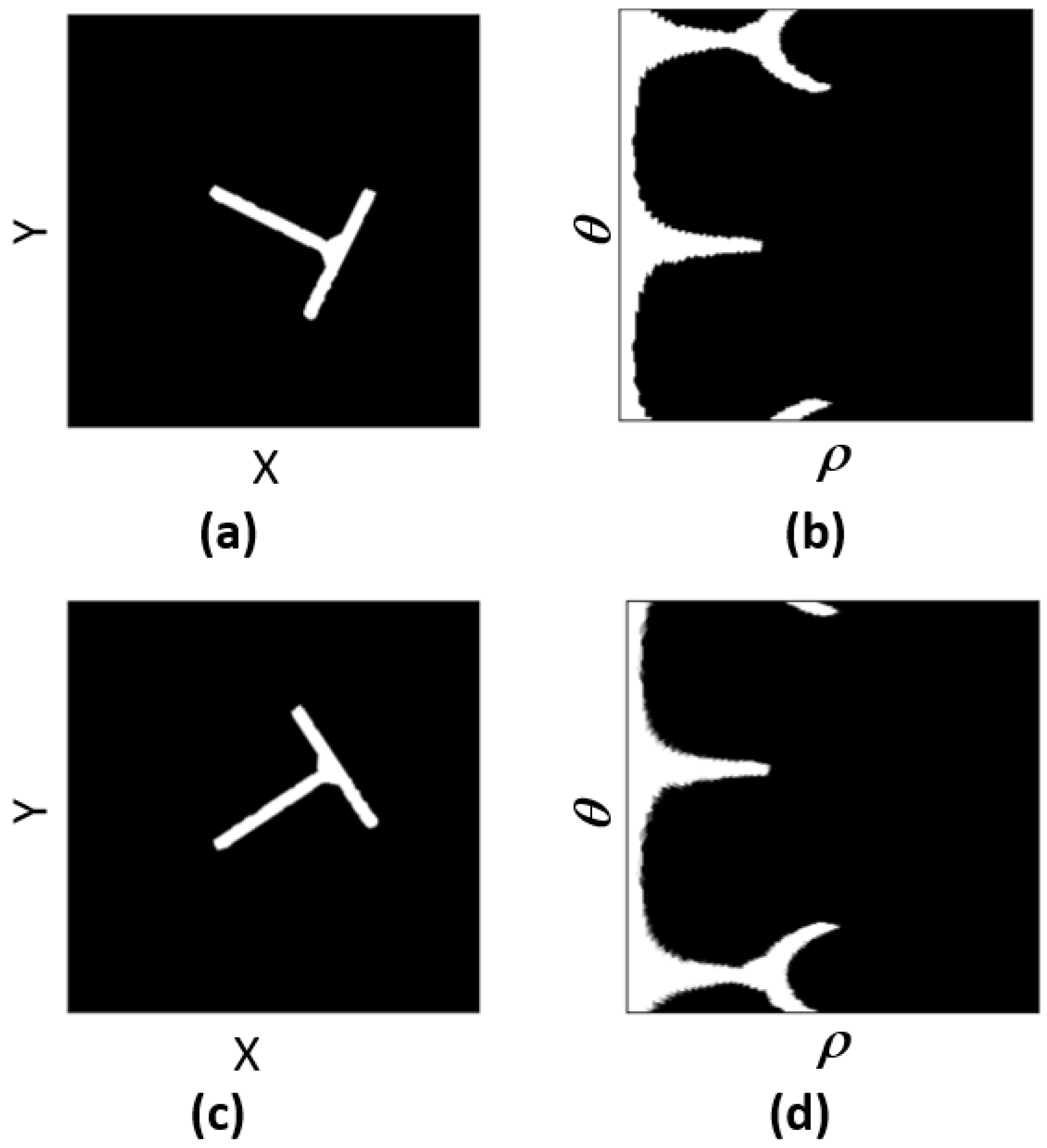

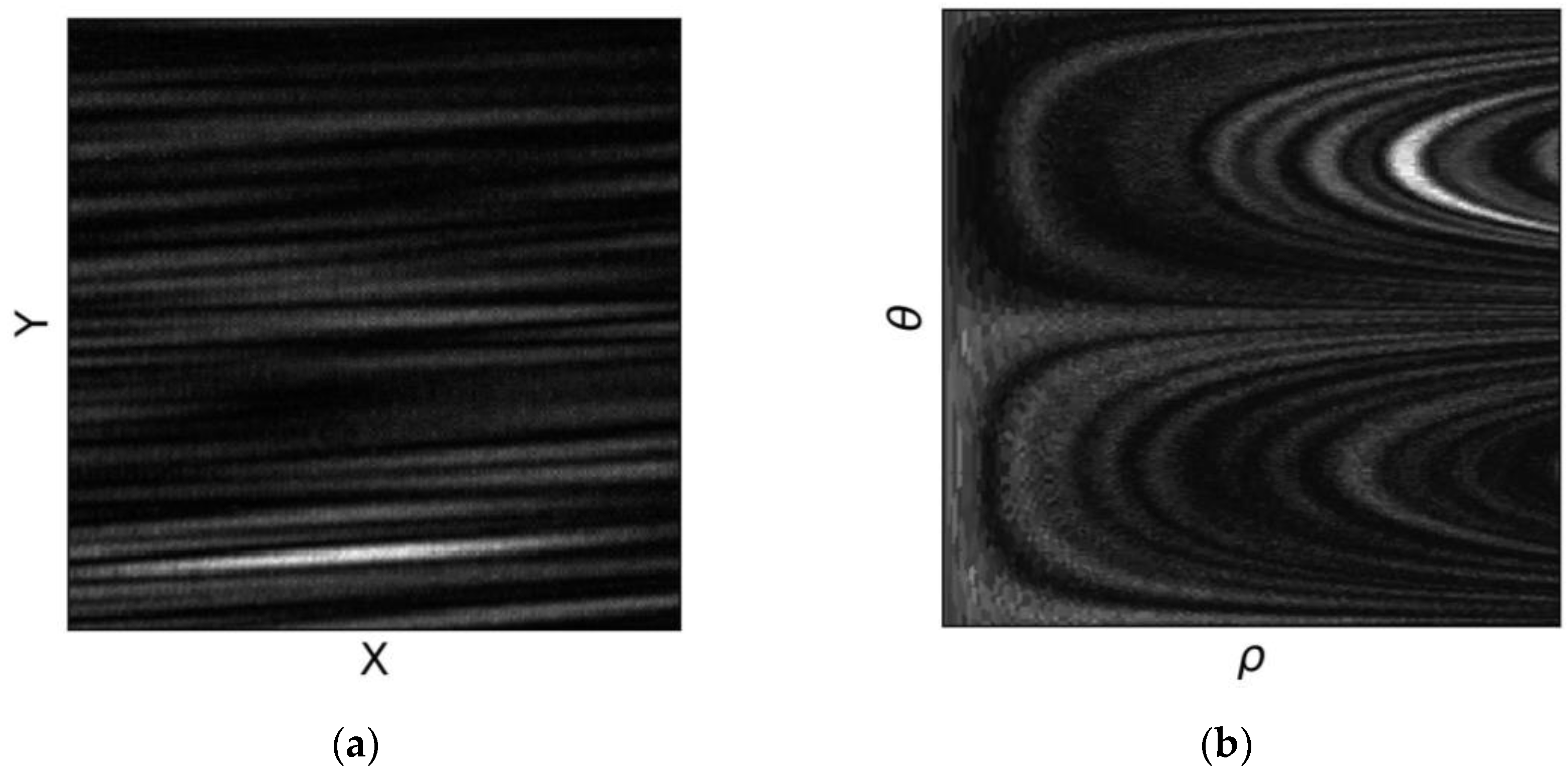

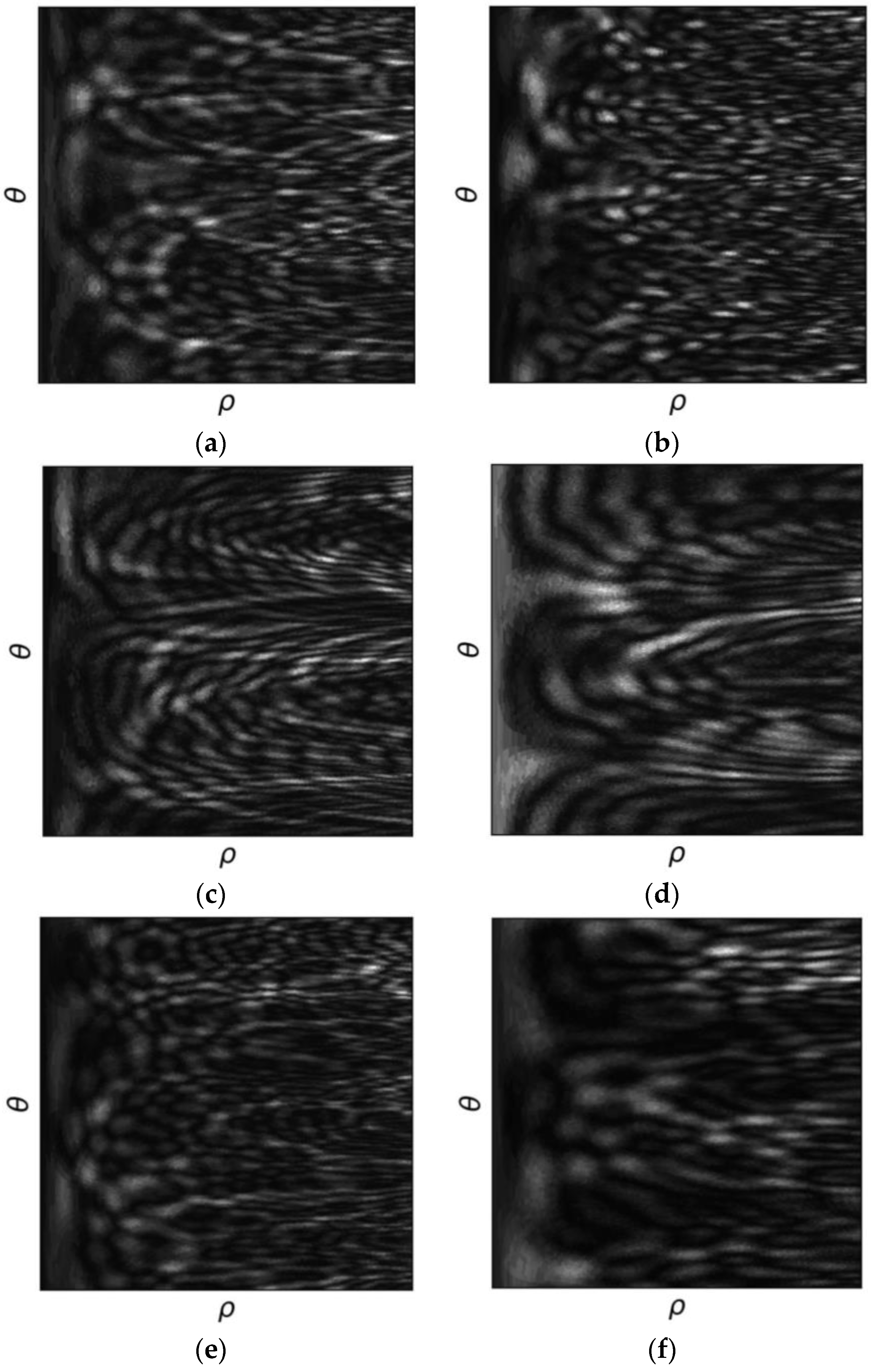

2.2. Conversion of the Interferograms to Polar Coordinates

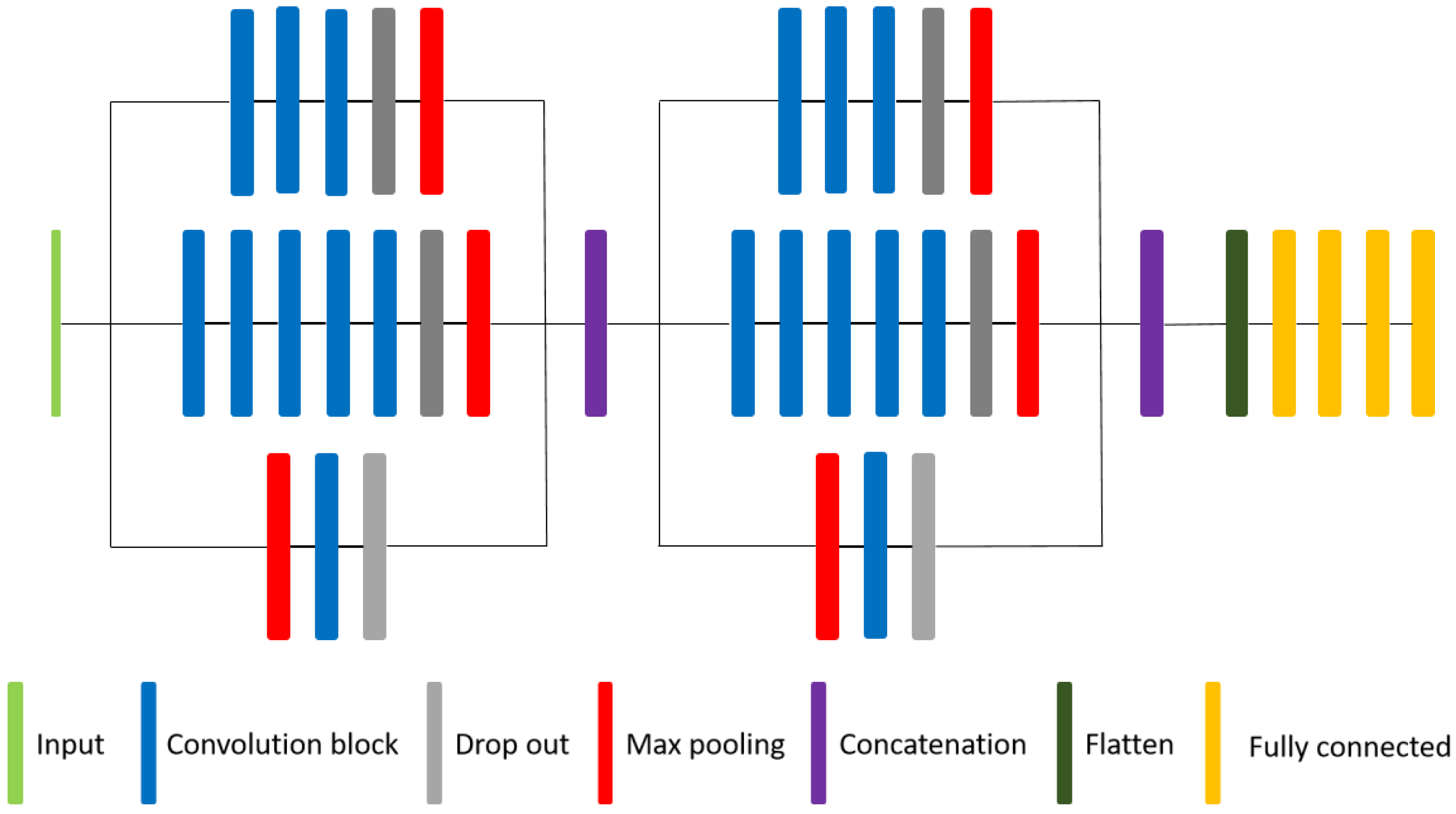
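One common way to convert an interferogram to polar coordinates is sketched below in Python with NumPy. This is an illustration under stated assumptions, not the authors' procedure. It assumes the interferometric pattern is centered on the image center and uses bilinear interpolation. The function name `to_polar` and the grid sizes `n_r` and `n_theta` are hypothetical. In this representation, an in-plane rotation of the image about its center becomes a circular shift along the θ axis. That property is what makes polar inputs attractive for a CNN, whose convolutions are translation-equivariant.

```python
import numpy as np


def to_polar(img, n_r=64, n_theta=128, center=None, r_max=None):
    """Resample a 2D image onto an (r, theta) grid with bilinear interpolation.

    Hypothetical helper: rows of the output index the radius r,
    columns index the angle theta in [0, 2*pi).
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    if center is None:
        # Assumption: the pattern is centered on the image center.
        center = ((h - 1) / 2.0, (w - 1) / 2.0)
    cy, cx = center
    if r_max is None:
        # Largest radius whose circle stays inside the image.
        r_max = min(cy, cx, h - 1 - cy, w - 1 - cx)
    r = np.linspace(0.0, r_max, n_r)
    theta = np.arange(n_theta) * (2.0 * np.pi / n_theta)
    rr, tt = np.meshgrid(r, theta, indexing="ij")  # shape (n_r, n_theta)
    # Cartesian sampling positions (row y, column x) for every (r, theta).
    y = cy + rr * np.sin(tt)
    x = cx + rr * np.cos(tt)
    # Bilinear interpolation between the four surrounding pixels.
    y0 = np.clip(np.floor(y).astype(int), 0, h - 2)
    x0 = np.clip(np.floor(x).astype(int), 0, w - 2)
    dy = y - y0
    dx = x - x0
    top = img[y0, x0] * (1 - dx) + img[y0, x0 + 1] * dx
    bottom = img[y0 + 1, x0] * (1 - dx) + img[y0 + 1, x0 + 1] * dx
    return top * (1 - dy) + bottom * dy


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((65, 65))
    polar = to_polar(img, n_r=32, n_theta=128)
    rotated = to_polar(np.rot90(img), n_r=32, n_theta=128)
    # A 90-degree rotation equals a shift of n_theta / 4 columns along theta.
    print(np.allclose(rotated, np.roll(polar, -32, axis=1)))
```

The check in the `__main__` block resamples `np.rot90(img)` and shifts the polar result of `img` by a quarter of `n_theta` along θ. Both arrays should agree, which is a quick way to validate the conversion.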

2.3. CNN Architecture


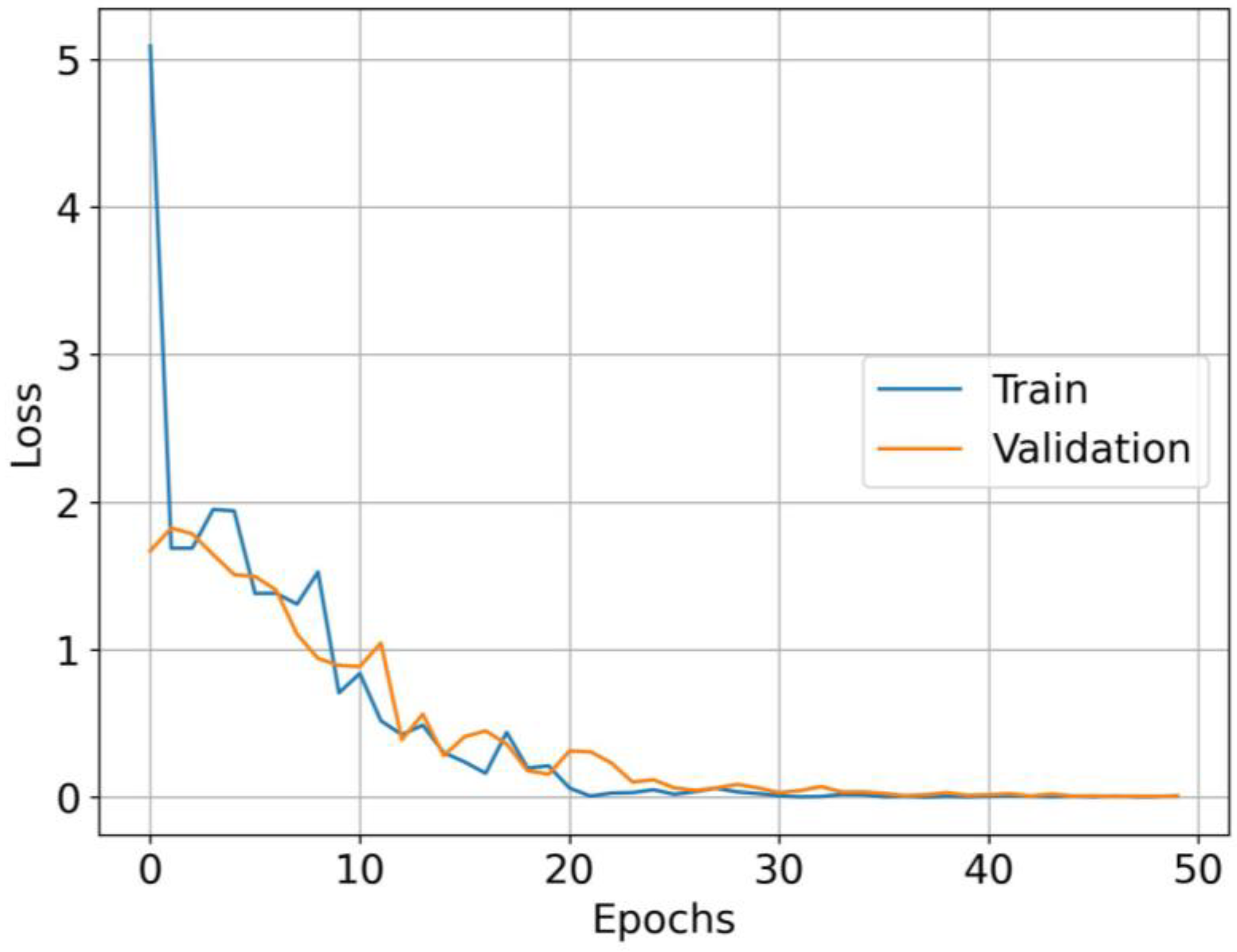

- Regarding computing time and memory, the network was trained on a GPU with 8 GB of memory. This capacity was sufficient to hold the model, the batch of images, and the gradients computed during training.


- In interferometric particle imaging, particle sizing is possible when the image contains a sufficient number of fringes (for droplets) or speckle grains (for rough particles). The number of speckle grains in the selection is directly linked to the size of the particle and to the geometry of the experimental setup (in particular, the defocus parameter). The selected part of the image always contained more than 30 bright spots. Had this condition not been met, the smallest particles could not have been recognized.
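The criterion of more than 30 bright spots in the selected region can be checked automatically. Below is a minimal sketch that assumes a bright spot is an 8-connected group of pixels above an intensity threshold. The functions `count_bright_spots` and `selection_is_usable` and the threshold value are hypothetical and are not taken from the article.

```python
import numpy as np


def count_bright_spots(img, threshold):
    """Count 8-connected groups of pixels brighter than `threshold` (hypothetical helper)."""
    mask = np.asarray(img) > threshold
    visited = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    count = 0
    for i in range(h):
        for j in range(w):
            if mask[i, j] and not visited[i, j]:
                # New spot found: flood-fill it so its pixels are counted once.
                count += 1
                stack = [(i, j)]
                visited[i, j] = True
                while stack:
                    y, x = stack.pop()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < h and 0 <= nx < w
                                    and mask[ny, nx] and not visited[ny, nx]):
                                visited[ny, nx] = True
                                stack.append((ny, nx))
    return count


def selection_is_usable(img, threshold, min_spots=30):
    """True if the selection holds more than `min_spots` bright spots."""
    return count_bright_spots(img, threshold) > min_spots


if __name__ == "__main__":
    # Synthetic selection: isolated bright pixels on a 4-pixel grid (10 x 10 spots).
    img = np.zeros((40, 40))
    img[2::4, 2::4] = 1.0
    print(count_bright_spots(img, 0.5), selection_is_usable(img, 0.5))
```

The count depends on the chosen threshold. Neighboring speckle grains that touch above the threshold merge into a single spot, so the threshold should be tuned on representative interferograms.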

3. Results

3.1. Examples of Tested Data

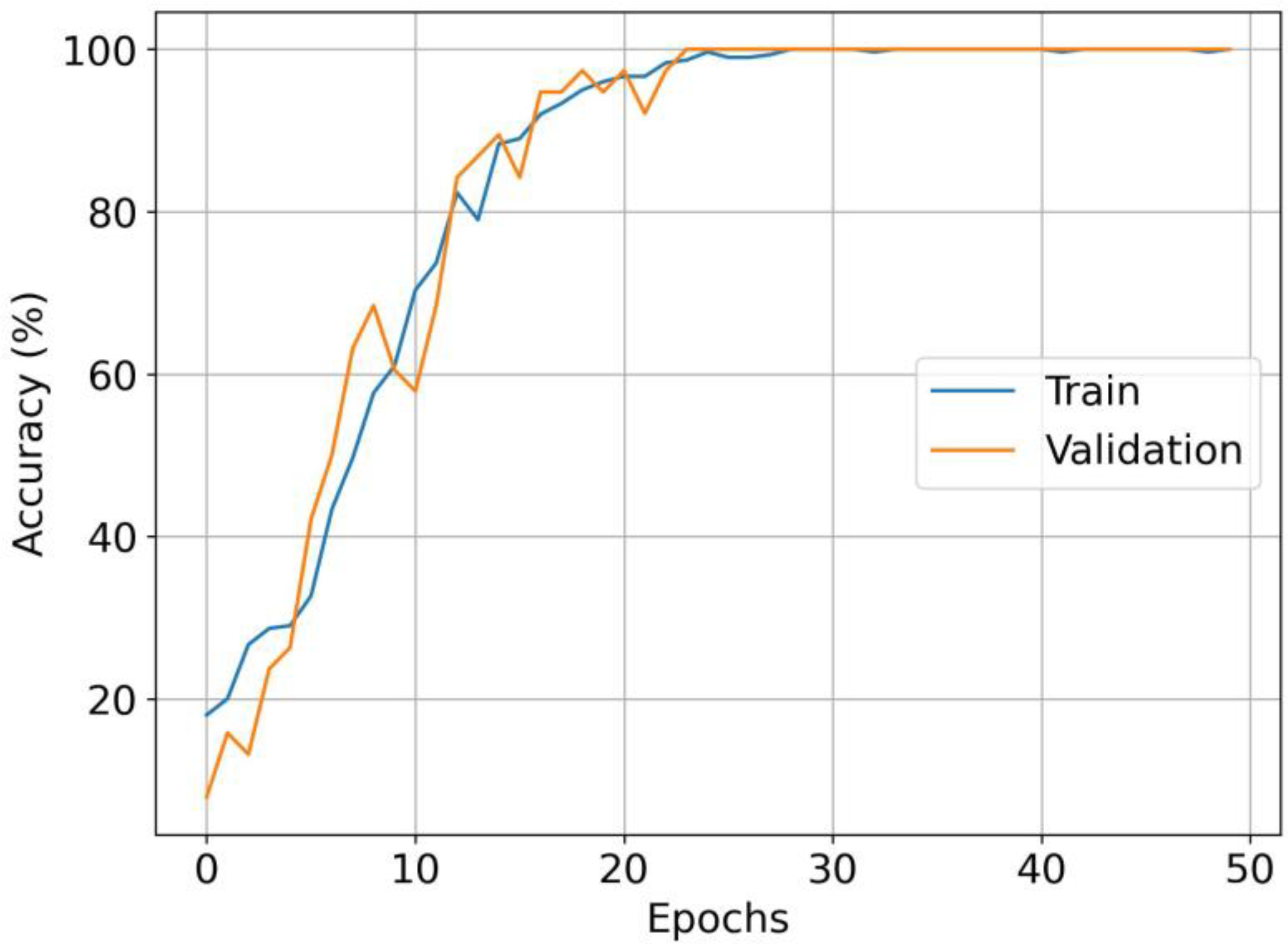

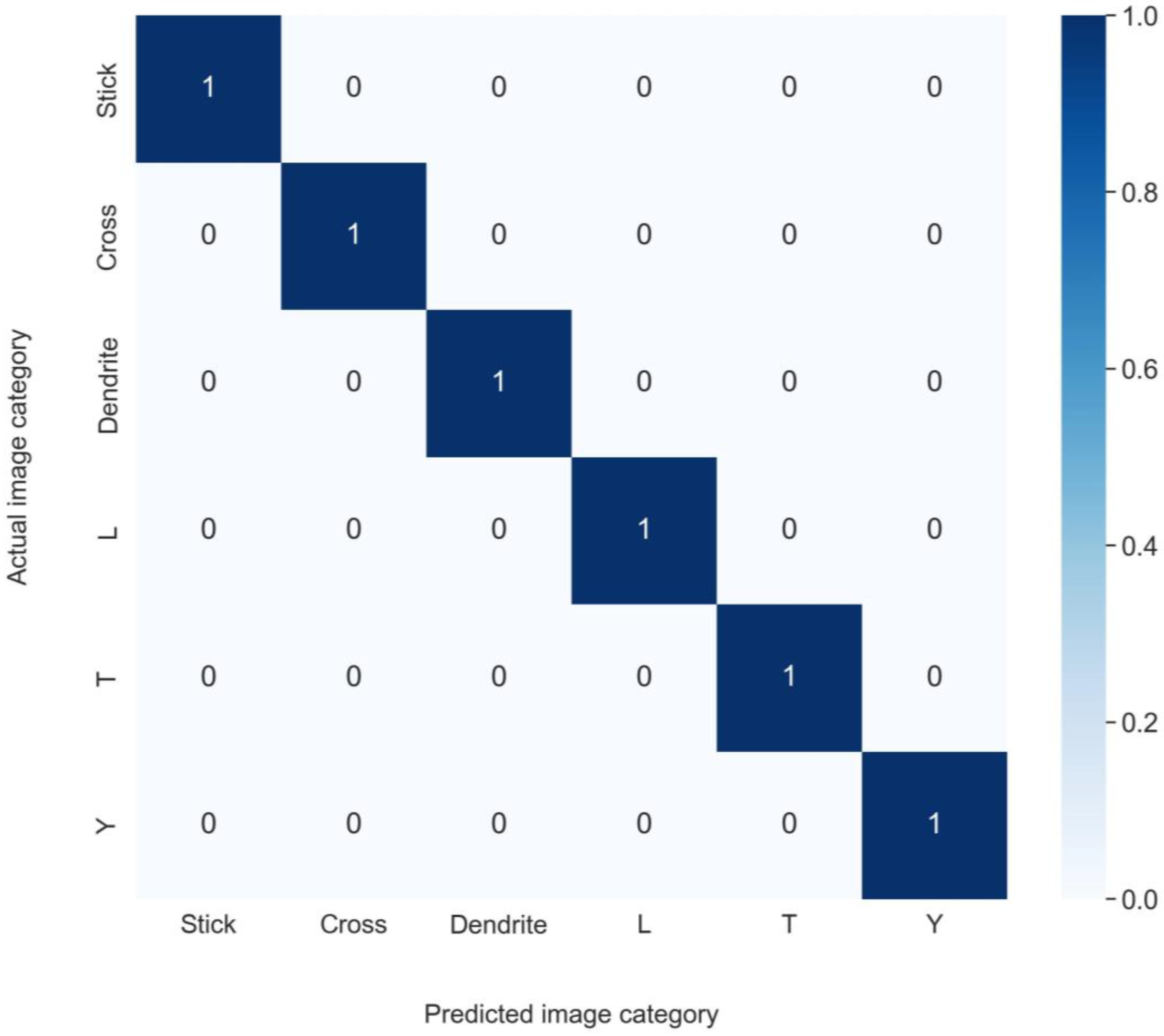

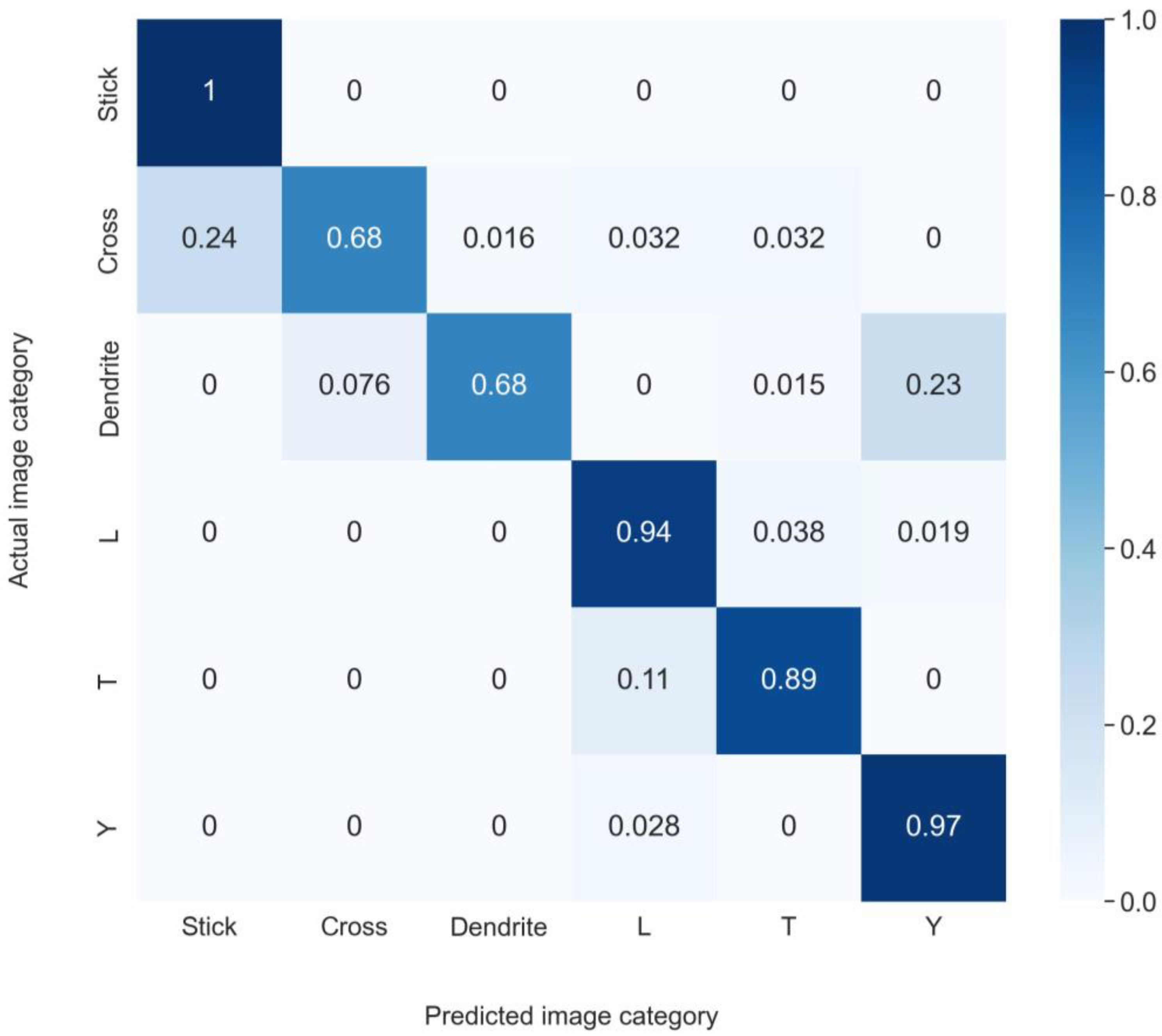

3.2. Obtained Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abad, A.; Fahy, A.; Frodello, Q.; Delestre, B.; Talbi, M.; Brunel, M. Particle Shape Recognition with Interferometric Particle Imaging Using a Convolutional Neural Network in Polar Coordinates. Photonics 2023, 10, 779. https://doi.org/10.3390/photonics10070779
